Abstract

Affective computing has focused on emotion acquisition using objective measurement techniques (sensors, facial recognition, physiological signals) and subjective measurement techniques (self-report). Each technique has advantages and drawbacks, and combining the information generated by each could provide systems with more balanced and accurate information about user emotions. However, self-reporting emotions has several benefits over objective techniques: the collected information may be more precise, and collecting it is less intrusive. This systematic literature review (SLR) analyzes which technologies have been proposed to conduct subjective measurements of emotions through self-report. We aim to understand the state of the art regarding the features of interfaces for emotional self-report, identify the contexts for which they were designed, and describe several other aspects of these technologies. The SLR resulted in 18 selected papers, 13 of which satisfied the inclusion criteria. We found that most existing systems use graphical user interfaces and that very few proposals use tangible user interfaces to self-report emotional information. This may be an opportunity to design novel interfaces, especially for populations with low digital skills, e.g. older adults.




1 Introduction

Emotions are central to many human processes (e.g. perception, understanding), and accounting for them may enhance the effectiveness of some systems [22]. Emotions are composed of behavioral, expressive, physiological, and subjective reactions (feelings) [4], and a given instrument may measure only one of these components [4]. Consequently, many technological instruments have been proposed, e.g. some that seek to recognize emotions through computer vision or physiological sensors, and others that require users to input their feelings.

Ubiquitous computing refers to technology that “disappears”, with the goal of designing computers that fit the human environment [26]. Tangible User Interfaces (TUIs) are one example of this type of technology. TUIs allow users to manipulate digital information by physically interacting with it [11]. TUIs take advantage of users’ knowledge of how the physical world works [13], which may make them especially suitable for users without much knowledge of the digital world.

There are several scenarios in which systems benefit from acquiring information about users’ emotions, but in which users have low digital skills and may therefore have difficulty expressing these emotions. For example, a training center that introduces older and underprivileged adults to computing struggles to gather their opinions and feelings about the course. For such users, TUIs may be less intimidating, since they take advantage of users’ knowledge of the physical world and blend into the environment.

The goal of this work is to study which types of interfaces for dealing with user emotions currently exist or have been proposed. This will allow us to understand whether populations such as the one mentioned above are well served by these interfaces. We aim to understand the characteristics of interfaces dealing with emotions and to contribute an overview of the important elements and considerations when designing an interface for users to report their feelings. To achieve this goal, we conducted a systematic literature review (SLR). An SLR is a means of identifying, evaluating and interpreting all available research relevant to a particular research question, topic area, or phenomenon of interest [15]. This technique is useful for reviewing existing evidence about a technology and identifying gaps in current research.

This paper is organized as follows. In Sect. 2, we define relevant terms for our literature review. Section 3 describes our methodology, including the research questions, search strategy, selection criteria, and data extraction. Section 4 summarizes the results, and finally, Sect. 5 presents the discussion, conclusions, and directions for future research.

2 User Interfaces: A Brief Introduction

This section presents a brief overview of the concepts of interaction style and types of user interfaces.

The concept of interaction may be understood as a metaphor of translation between two languages, while an interaction style is defined as a dialogue between computer and user [5]. An interaction style may also be defined as the way a user can communicate or interact with a computer system [3]. Many different interaction styles have been proposed [3, 5], e.g. natural language (speech or typed human language recognition), form-fills and spreadsheets, WIMP (windows, icons, menus, pointers), point-and-click, and three-dimensional interfaces (virtual reality).

A user interface is the representation of a system with which a user can interact [12]. To the best of our knowledge, there is no agreed-upon taxonomy that defines every possible type of user interface. Command-line interfaces (CLIs) are interfaces in which the user types in commands [14]. Graphical user interfaces (GUIs) represent information through image-based representations on a display [12, 14]. Natural user interfaces (NUIs) allow users to interact by using, e.g., body language, gestures, or facial expressions [14, 27]. Organic user interfaces (OUIs) are interfaces that may change their form, shape or being [8, 16]. Tangible user interfaces (TUIs) are user interfaces in which a person uses a physical object to interact with digital information [10].

3 Literature Review Methodology

In general, an SLR can be divided into three phases [15, 19]. Although space constraints prevent us from reporting each phase in full, our work followed all three:

1. Planning the review: define a protocol that specifies the plan the SLR will follow to identify, assess, and collate evidence.
2. Conducting the review: execute the planned protocol.
3. Reporting the review: write up the results of the review and disseminate them to potentially interested parties.

3.1 Need for a Systematic Literature Review

Recently, several user interfaces and interaction styles have been proposed to report, register and share human emotions. This SLR aims to identify which technologies are being used, who the target users are, and how the technology has been evaluated. We aim to identify trends in this area, under-served populations of users, and avenues for future research.

3.2 Research Questions

The goal of this review is to find out how software technologies support the self-report of emotional information. Because this question is too generic, we subdivided it into several questions, each focusing on a specific aspect of the evaluation.

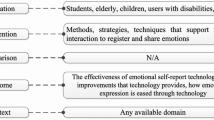

To define our research questions, we followed the Population, Intervention, Comparison, Outcome and Context (PICOC) structure [15] (Table 1). This structure helps capture the attributes that should be considered when defining research questions in an SLR. This review does not aim to compare interventions, so the Comparison attribute is not applicable.

We defined a set of research questions aimed at understanding the types of interfaces, interaction styles, and evaluation methodologies of novel interfaces and technologies for self-reporting emotions. Hence, our SLR aims to answer the following research questions:

3.3 Search Strategy

Based on these questions, we identified the keywords to be used to search for the primary studies. The initial set of keywords was: emotion/s, mood/s, affect/s, share, interaction, self-report. With these keywords, the search string was built using boolean AND and OR operators, resulting in the following search string:

The search for primary studies was done on the following digital libraries: ACM Digital Library, IEEE Xplore Digital Library, ScienceDirect and Springer Link. These libraries were chosen because they are among the most relevant sources of scientific articles in several computer science areas [19]. Table 2 presents the number of papers that the search on each of the digital libraries produced.
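As an illustration of how a keyword list becomes a Boolean query (this is not the string the authors used), the following minimal sketch ORs synonyms within a group and ANDs the groups together. The grouping of the review's keywords into `EMOTION_TERMS` and `ACTIVITY_TERMS`, and the function `build_query`, are hypothetical.

```python
def build_query(groups: list[list[str]]) -> str:
    """OR the synonyms inside each group, then AND the groups together."""
    clauses = []
    for terms in groups:
        quoted = [f'"{term}"' for term in terms]  # quote each term (keeps "self-report" intact)
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Hypothetical grouping of the review's keywords; NOT the authors' actual string.
EMOTION_TERMS = ["emotion", "emotions", "mood", "moods", "affect", "affects"]
ACTIVITY_TERMS = ["share", "interaction", "self-report"]

if __name__ == "__main__":
    print(build_query([EMOTION_TERMS, ACTIVITY_TERMS]))
```

Run as a script, it prints `("emotion" OR "emotions" OR "mood" OR "moods" OR "affect" OR "affects") AND ("share" OR "interaction" OR "self-report")`. In practice each digital library has its own query syntax (field restrictions, wildcards), so a generic string like this would need adapting per library.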

We removed duplicates automatically and then re-checked them manually, excluding 56 duplicate papers. After this step, 271 papers remained in our corpus.
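The paper does not say how duplicates were detected automatically. A minimal sketch, assuming duplicates are matched on a normalized title (lowercased, punctuation stripped), keeps the first record per title and returns the rest as candidates for the manual re-check. The `Record` type, the function names, and the sample records are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    title: str
    library: str  # digital library that returned the record


def normalize_title(title: str) -> str:
    """Lowercase the title, replace punctuation with spaces, collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9]+", " ", title.lower())
    return " ".join(cleaned.split())


def deduplicate(records: list[Record]) -> tuple[list[Record], list[Record]]:
    """Keep the first record per normalized title; return (kept, duplicates)."""
    seen: set[str] = set()
    kept: list[Record] = []
    duplicates: list[Record] = []
    for record in records:
        key = normalize_title(record.title)
        if key in seen:
            duplicates.append(record)  # flagged for the manual re-check
        else:
            seen.add(key)
            kept.append(record)
    return kept, duplicates


if __name__ == "__main__":
    sample = [  # hypothetical search results
        Record("Mood Squeezer: Lightening up the workplace", "ACM"),
        Record("Mood squeezer - lightening up the workplace", "IEEE"),
        Record("EmoSnaps: a mobile application for emotion recall", "Springer"),
    ]
    kept, duplicates = deduplicate(sample)
    print(len(kept), "kept;", len(duplicates), "duplicate(s)")
```

Title matching alone can miss duplicates whose titles differ slightly between libraries, which is one reason a manual re-check remains useful. If the 56 duplicates are individual removed records, the four searches returned 271 + 56 = 327 records before this step.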

3.4 Selection Criteria

Once the potentially relevant primary studies had been selected, we evaluated them to decide whether they should be included in the review. For this, the following inclusion and exclusion criteria were defined:

1. Inclusion criteria:
   (a) The paper is in English.
   (b) The paper is a peer-reviewed article published in a journal, conference, or workshop.
   (c) The paper was published on or before May 2015.
   (d) The paper focuses on technologies for reporting, registering, or communicating human emotions.
   (e) The paper reasonably presents the technology and its validation.
   (f) The paper presents the measurement of subjective emotions as its main purpose.
2. Exclusion criteria:
   (a) The paper is not available online.
   (b) The paper is a survey or an SLR.
   (c) The paper does not include validation of the technology.
   (d) The paper includes human-robot or human-agent interaction.
   (e) The paper does not include an objective measurement of emotions.

Four researchers individually read the titles and abstracts of the 271 papers and applied the criteria to accept or reject each one. Papers that all four researchers accepted were automatically included, and papers that no researcher or only one researcher accepted were automatically removed. A fifth researcher decided on papers with two or three acceptances (25 papers in total). After this step, 18 of the 271 papers in our corpus remained.
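The screening rule described above can be sketched as a small decision function over four independent votes. The names `Decision`, `screen`, and the sample votes are hypothetical; only the thresholds (4 acceptances include, 0 or 1 exclude, 2 or 3 go to a fifth researcher) come from the text.

```python
from collections import Counter
from enum import Enum


class Decision(Enum):
    INCLUDE = "include"
    EXCLUDE = "exclude"
    REFER = "refer to fifth researcher"


def screen(votes: list[bool]) -> Decision:
    """Map four independent accept (True) / reject (False) votes to a decision."""
    if len(votes) != 4:
        raise ValueError("expected exactly four reviewer votes")
    accepts = sum(votes)  # True counts as 1
    if accepts == 4:
        return Decision.INCLUDE  # unanimous acceptance
    if accepts <= 1:
        return Decision.EXCLUDE  # unanimous rejection or a single acceptance
    return Decision.REFER  # 2 or 3 acceptances go to the tie-breaker


if __name__ == "__main__":
    # Hypothetical votes for three papers, for illustration only.
    votes_by_paper = {
        "paper-A": [True, True, True, True],
        "paper-B": [True, False, False, False],
        "paper-C": [True, True, False, True],
    }
    tally = Counter(screen(v) for v in votes_by_paper.values())
    print({d.value: n for d, n in tally.items()})
```

Treating a single acceptance like a unanimous rejection keeps the tie-breaker's workload limited to genuinely split papers, which in this review amounted to 25.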

3.5 Data Extraction

In this step, three researchers read the 18 selected papers in detail, focusing on answering the research questions introduced in Sect. 3.2. The obtained information was compiled into an ad-hoc Excel template. During this detailed reading and analysis, the application of the exclusion criteria was also refined in some cases. As a result, 5 more papers were excluded, leaving 13 papers for the data analysis step. These papers are presented in Table 3.
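The counts reported in Sects. 3.3 to 3.5 form a selection funnel. The following sketch, using only those reported counts (the names `STAGES` and `funnel` are illustrative), computes the share of papers retained at each stage.

```python
# Screening counts as reported in Sects. 3.3-3.5 of this review.
STAGES = [
    ("after duplicate removal", 271),
    ("after title/abstract screening", 18),
    ("after full-text reading", 13),
]


def funnel(stages: list[tuple[str, int]]) -> list[tuple[str, int, float]]:
    """Attach to each stage the percentage retained from the previous stage."""
    rows = []
    previous = None
    for name, count in stages:
        kept = 100.0 if previous is None else round(100 * count / previous, 1)
        rows.append((name, count, kept))
        previous = count
    return rows


if __name__ == "__main__":
    for name, count, kept in funnel(STAGES):
        print(f"{name:<32}{count:>5}{kept:>8.1f} %")
```

About 6.6 % of the deduplicated corpus survived title and abstract screening, and 72.2 % of those survived the full-text reading, so roughly 4.8 % of the 271 papers reached data analysis.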

4 Results

This section presents the results produced by our SLR. Table 4 shows the per-year distribution of selected studies, separated by publication type. 85 % of the reviewed papers were published between 2009 and 2015. The following sections present the results, structured as answers to the research questions.

4.1 Results of Interaction Styles and Types of Interfaces

Of the analyzed papers, 70 % presented a GUI, with WIMP, point-and-click, menu and Q&A interaction styles (see Table 5). Only 30 % presented TUIs. In 70 % of the reviewed studies the target user is generic (there is no specific target population), and only 15 % target users with specific characteristics, such as patients, caregivers, or workers.

4.2 Validation of Registered Emotions

Of the analyzed studies, 80 % used additional mechanisms to validate the self-reported emotions (Table 6). These mechanisms included, e.g., measuring physiological and behavioral signals such as facial expressions, gestures, and heart rate.

4.3 Sharing Emotions

65 % of the reviewed proposals allow users to share emotions, with several types of users (Table 7). Of the interfaces that allow emotion sharing, 60 % are GUIs and 40 % are TUIs.

4.4 Methodologies of Evaluation

We examined which evaluation methods are commonly used for this type of interface (Table 8). 85 % of the studies include an evaluation of the proposed technology, most of them using mixed-methods approaches. Participants were students, users with particular characteristics, or, in some cases, any available user. Only two studies evaluated their proposals with users in a real context, e.g. users with a mental illness such as depression. Of the studies with an evaluation, 55 % reported its duration. The average number of participants was 30 (min = 10, max = 59).

4.5 Benefits of Registering Emotions

We identified the benefits of using technology to register emotions (Table 9). 50 % of the studies report improvements related to their goals, which were supporting self-reflection, encouraging people to reflect on their emotions, or improving participants' identification of emotions. 45 % show evidence that technologies for reporting emotions facilitate tasks such as user experience studies and cultural research. We found no particular evidence of differences in this respect between articles published in conferences and in journals.

4.6 Discussion

The most common interaction style was WIMP, with a GUI. We did not find a well-defined interaction style for TUIs, which may have several explanations: TUIs are newer and less established than GUIs, and fewer research projects study TUIs in multiple real contexts. This opens an interesting field of research that seeks to uncover the interaction styles relevant to new interfaces, considering their particular characteristics.

It is interesting to note that 80 % of the analyzed interfaces implemented a second method to validate the emotions users reported. This shows that researchers recognize that, because every instrument has inherent drawbacks, emotions should ideally be validated through both objective and subjective methods.

Over 60 % of the reviewed studies allowed emotion sharing. This raises another aspect that needs further research, namely privacy: how do users feel about sharing something as deeply personal as an emotion?

Registering emotions was considered to have several benefits, e.g. allowing users to self-reflect on their emotional states. Providing appropriate opportunities for self-reflection may benefit users, especially in systems related to mental health or for users at higher risk of depression.

One challenge that remains open is to evaluate these systems with real users in real contexts of use. As in any research with real users, this is naturally difficult; however, evaluations should begin to include real users in order to truly understand the impact of self-reporting emotions.

5 Conclusions and Future Work

This work presented an SLR of interfaces for emotional self-report. We analyzed several dimensions of these interfaces, e.g. the technology used, target users, evaluation process, and benefits. The main contribution of this research is a rigorous and formal SLR that characterizes research on user interfaces for self-reporting emotions.

In general, researchers have recognized that it is important to share emotions with other users. However, our results show that most self-report interfaces for emotions are GUIs. This suggests that some categories of users (older adults, children who cannot yet read) may be left out of these technologies, which highlights the importance of studying these users in order to propose technologies with new interaction styles tailored to them.

We found a small number of relevant papers, which motivates us to continue expanding our literature review. For example, we could consider other digital libraries (e.g. Scopus, Wiley Online) to widen the scope of our review and take into account a greater number of primary studies. It would be especially interesting to explore clinically focused journals. The small number of papers also signals that this area of research requires more studies (especially involving users in real contexts) and more interfaces (with new interaction styles).

References

1. Bardzell, S., Bardzell, J., Pace, T.: Understanding affective interaction: Emotion, engagement, and internet videos. In: Proceedings of the 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops (ACII 2009), pp. 1–8. IEEE, September 2009

2. Caon, M., Khaled, O.A., Mugellini, E., Lalanne, D., Angelini, L.: Ubiquitous interaction for computer mediated communication of emotions. In: Proceedings of the Humaine Association Conference on Affective Computing and Intelligent Interaction (ACII 2013), pp. 717–718. IEEE Computer Society, September 2013

3. Interaction Design Foundation: Interaction Style (2014). http://www.interaction-design.org. Accessed May 2015

4. Desmet, P.: Measuring emotion: development and application of an instrument to measure emotional responses to products. In: Blythe, M., Overbeeke, K., Monk, A., Wright, P. (eds.) Funology: From Usability to Enjoyment. Human-Computer Interaction Series, vol. 3, pp. 111–123. Springer, Dordrecht (2005)

5. Dix, A., Finlay, J., Abowd, G.D., Beale, R.: Human-Computer Interaction, 3rd edn. Pearson Education Limited, Harlow (2004)

6. Doryab, A., Frost, M., Faurholt-Jepsen, M., Kessing, L.V., Bardram, J.E.: Impact factor analysis: combining prediction with parameter ranking to reveal the impact of behavior on health outcome. Pers. Ubiquit. Comput. 19(2), 355–365 (2015)

7. Gallacher, S., O’Connor, J., Bird, J., Rogers, Y., Capra, L., Harrison, D., Marshall, P.: Mood squeezer: Lightening up the workplace through playful and lightweight interactions. In: Proceedings of the 18th ACM Conference on Computer Supported Cooperative Work & Social Computing (CSCW 2015), pp. 891–902. ACM, March 2015

8. Holman, D., Vertegaal, R.: Organic user interfaces: designing computers in any way, shape, or form. Commun. ACM 51(6), 48–55 (2008)

9. Isbister, K., Höök, K., Laaksolahti, J., Sharp, M.: The sensual evaluation instrument: developing a trans-cultural self-report measure of affect. Int. J. Hum. Comput. Stud. 65(4), 315–328 (2007)

10. Ishii, H.: Tangible bits: beyond pixels. In: Proceedings of the 2nd International Conference on Tangible and Embedded Interaction, pp. xv–xxv. ACM, February 2008

11. Ishii, H.: The tangible user interface and its evolution. Commun. ACM 51(6), 32–36 (2008)

12. Jacko, J.A.: Human Computer Interaction Handbook: Fundamentals, Evolving Technologies, and Emerging Applications. CRC Press, Boca Raton (2012)

13. Jacob, R.J., Girouard, A., Hirshfield, L.M., Horn, M.S., Shaer, O., Solovey, E.T., Zigelbaum, J.: Reality-based interaction: a framework for post-WIMP interfaces. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI 2008), pp. 201–210. ACM, April 2008

14. Jain, J., Lund, A., Wixon, D.: The future of natural user interfaces. In: Extended Abstracts on Human Factors in Computing Systems (CHI EA 2011), pp. 211–214. ACM, May 2011

15. Kitchenham, B., Charters, S.: Guidelines for performing Systematic Literature Reviews in Software Engineering. Technical report EBSE 2007–01, Keele University and Durham University, July 2007

16. Lahey, B., Girouard, A., Burleson, W., Vertegaal, R.: Paperphone: understanding the use of bend gestures in mobile devices with flexible electronic paper displays. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI 2011), pp. 1303–1312. ACM, May 2011

17. Laurans, G., Desmet, P., Hekkert, P.: The emotion slider: A self-report device for the continuous measurement of emotion. In: Proceedings of the 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops (ACII 2009), pp. 1–6, September 2009

18. Lin, C.L., Gau, P.S., Lai, K.J., Chu, Y.K., Chen, C.H.: Emotion Caster: Tangible emotion sharing device and multimedia display platform for intuitive interactions. In: Proceedings of the 13th International Symposium on Consumer Electronics (ISCE 2009), pp. 988–989. IEEE, May 2009

19. Lisboa, L.B., Garcia, V.C., Lucrédio, D., de Almeida, E.S., de Lemos Meira, S.R., de Mattos Fortes, R.P.: A systematic review of domain analysis tools. Inf. Softw. Technol. 52(1), 1–13 (2010)

20. Niforatos, E., Karapanos, E.: EmoSnaps: a mobile application for emotion recall from facial expressions. Pers. Ubiquit. Comput. 19(2), 425–444 (2015)

21. Oliveira, E., Martins, P., Chambel, T.: Accessing movies based on emotional impact. Multimedia Syst. 19(6), 559–576 (2013)

22. Picard, R.W.: Affective computing: challenges. Int. J. Hum. Comput. Stud. 59(1–2), 55–64 (2003)

23. Read, R., Belpaeme, T.: Using the AffectButton to measure affect in child and adult-robot interaction. In: Proceedings of the 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI 2013), pp. 211–212. IEEE Press, March 2013

24. Sánchez, J.A., Kirschning, I., Palacio, J.C., Ostróvskaya, Y.: Towards mood-oriented interfaces for synchronous interaction. In: Proceedings of the 2005 Latin American Conference on Human-Computer Interaction (CLIHC 2005), pp. 1–7. ACM, October 2005

25. Schubert, E., Ferguson, S., Farrar, N., Taylor, D., McPherson, G.E.: The six emotion-face clock as a tool for continuously rating discrete emotional responses to music. In: Aramaki, M., Barthet, M., Kronland-Martinet, R., Ystad, S. (eds.) CMMR 2012. LNCS, vol. 7900, pp. 1–18. Springer, Heidelberg (2013)

26. Weiser, M.: The computer for the 21st century. In: Baecker, R.M., Grudin, J., Buxton, W.A.S., Greenberg, S. (eds.) Human-Computer Interaction, pp. 933–940. Morgan Kaufmann Publishers Inc., San Francisco (1995)

27. Wigdor, D., Wixon, D.: Brave NUI World: Designing Natural User Interfaces for Touch and Gesture. Elsevier, Amsterdam (2011)

28. Yu, S.H., Wang, L.S., Chu, H.H., Chen, S.H., Chen, C.C.H., You, C.W., Huang, P.: A mobile mediation tool for improving interaction between depressed individuals and caregivers. Pers. Ubiquit. Comput. 15(7), 695–706 (2011)

Acknowledgements

This project was partially funded by a CONICYT Chile PhD scholarship, a CONICIT and MICIT Costa Rica PhD scholarship, Universidad de Costa Rica, and Fondecyt (Chile) Project No. 1150365.


Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Fuentes, C., Gerea, C., Herskovic, V., Marques, M., Rodríguez, I., Rossel, P.O. (2015). User Interfaces for Self-reporting Emotions: A Systematic Literature Review. In: García-Chamizo, J., Fortino, G., Ochoa, S. (eds) Ubiquitous Computing and Ambient Intelligence. Sensing, Processing, and Using Environmental Information. UCAmI 2015. Lecture Notes in Computer Science, vol. 9454. Springer, Cham. https://doi.org/10.1007/978-3-319-26401-1_30


DOI: https://doi.org/10.1007/978-3-319-26401-1_30


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-26400-4

Online ISBN: 978-3-319-26401-1

eBook Packages: Computer Science, Computer Science (R0)