Abstract

In 2007, Labeled Faces in the Wild was released in an effort to spur research in face recognition, specifically for the problem of face verification with unconstrained images. Since that time, more than 50 papers have been published that improve upon this benchmark in some respect. A remarkably wide variety of innovative methods have been developed to overcome the challenges presented in this database. As performance on some aspects of the benchmark approaches 100 % accuracy, it seems appropriate to review this progress, derive what general principles we can from these works, and identify key future challenges in face recognition. In this survey, we review the contributions to LFW for which the authors have provided results to the curators (results found on the LFW results web page). We also review the cross-cutting topic of alignment and how it is used in various methods. We end with a brief discussion of recent databases designed to challenge the next generation of face recognition algorithms.

Keywords

- Face Recognition

- Face Image

- Local Binary Pattern

- Convolutional Neural Network

- Linear Support Vector Machine

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Face recognition is a core problem and popular research topic in computer vision for several reasons. First, it is easy and natural to formulate well-posed problems, since individuals come with their own label, their name. Second, despite its well-posed nature, it is a striking example of fine-grained classification—the variation of two images within a class (images of a single person) can often exceed the variation between images of different classes (images of two different people). Yet human observers have a remarkably easy time ignoring nuisance variables such as pose and expression and focusing on the features that matter for identification. Finally, face recognition is of tremendous societal importance. In addition to the basic ability to identify, the ability of people to assess the emotional state, the focus of attention, and the intent of others are critical capabilities for successful social interactions. For all these reasons, face recognition has become an area of intense focus for the vision community.

This chapter reviews research progress on a specific face database, Labeled Faces in the Wild (LFW), that was introduced to stimulate research in face recognition for images taken in common, everyday settings. In the remainder of the introduction, we review some basic face recognition terminology, provide the historical setting in which this database was introduced, and enumerate some of the specific motivations for introducing the database. In Sect. 2, we discuss the papers for which the curators have been provided with results. We group these papers by the protocols for which they have reported results. In Sect. 3, we discuss alignment as a cross-cutting issue that affects almost all of the methods included in this survey. We conclude by discussing future directions of face recognition research, including new databases and new paradigms designed to push face recognition to the next level.

1.1 Verification and Identification

In this chapter, we will refer to two widely used paradigms of face recognition: identification and verification. In identification, information about a specific set of individuals to be recognized (the gallery) is gathered. At test time, a new image or group of images is presented (the probe). The task of the system is to decide which of the gallery identities, if any, is represented by the probe. If the system is guaranteed that the probe is indeed one of the gallery identities, this is known as closed set identification. Otherwise, it is open set identification, and the system is expected to identify when an image does not belong to the gallery.

In contrast, the problem of verification is to analyze two face images and decide whether they represent the same person or two different people. It is usually assumed that neither of the photos shows a person from any previous training set.
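The distinction between the two paradigms can be made concrete with a minimal sketch. It assumes that each face has already been reduced to a numeric feature vector and that similarity is measured with a plain Euclidean distance; the function names, the toy gallery, and the thresholds are all hypothetical and are not taken from any method reviewed here.

```python
import math

def distance(a, b):
    """Euclidean distance between two feature vectors (hypothetical descriptors)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def verify(feat_a, feat_b, threshold):
    """Verification: decide 'same person' (True) or 'different' (False) for one pair."""
    return distance(feat_a, feat_b) <= threshold

def identify(probe, gallery, threshold=None):
    """Identification: return the best-matching gallery identity.

    With threshold=None this is closed set identification (the probe is
    assumed to be in the gallery). With a threshold it is open set
    identification: None is returned when even the best match is too far.
    """
    best_name = min(gallery, key=lambda name: distance(probe, gallery[name]))
    if threshold is not None and distance(probe, gallery[best_name]) > threshold:
        return None
    return best_name

# Toy gallery with one made-up feature vector per identity.
gallery = {"alice": [0.0, 0.0], "bob": [3.0, 4.0]}

print(verify([0.0, 0.0], [0.1, 0.0], threshold=1.0))    # verification of one pair
print(identify([2.9, 4.2], gallery))                     # closed set identification
print(identify([10.0, 10.0], gallery, threshold=1.0))    # open set identification
```

Note that verification needs no gallery at all, which is why it can be evaluated on identities that never appear in training.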

Many of the early face recognition databases and protocols focused on the problem of identification. As discussed below, the difficulty of the identification problem was so great that researchers were motivated to simplify the problem by controlling the number of image parameters that were allowed to vary simultaneously. One of the salient aspects of LFW is that it focused on the problem of verification exclusively, although it was certainly not the first to do so (Footnote 1). While the use of the images in LFW originally grew out of a motivation to study learning from one example and fine-grained recognition, a side effect was to render the problem of face recognition in real-world settings significantly easier: easy enough to attract the attention of a wide range of researchers.

1.2 Background

In the early days of face recognition by computer, the problem was so daunting that it was logical to consider a divide-and-conquer approach. What is the best way to handle recognition in the presence of lighting variation? Pose variation? Occlusions? Expression variation? Databases were built to consider each of these issues using carefully controlled images and experiments (Footnote 2). One of the most comprehensive efforts in this direction is the CMU Multi-PIE database (Footnote 3), which systematically varies multiple parameters over an enormous database of more than 750,000 images [38].

Studying individual sources of variation in images has led to some intriguing insights. For example, in their efforts to characterize the structure of the space of images of an object under different lighting conditions, Belhumeur and Kriegman [15] showed that the space of faces under different lighting conditions (with other factors such as expression and pose held constant) forms a convex cone. They propose doing lighting invariant recognition by examining the distance of an image to the convex cones defined for each individual.

Despite the development of methods that could successfully recognize faces in databases with well-controlled variation, there was still a gap in the early 2000s between the performance of face recognition on these controlled databases and results on real face recognition tasks, for at least two reasons:

- Even with two methods, call them A and B, that can successfully model two types of variation separately, it is not always clear how to combine these methods to produce a method that can address both sources of variation. For example, a method that can handle significant occlusions may rely on the precise registration of two face images for the parts that are not occluded. This might render the method ineffective for faces that exhibit both occlusions and pose changes. As another example, the method cited above to handle lighting variations [15] relies on all of the other parameters of variation being fixed.

- There is a significant difference between handling controlled variations of a parameter and handling random or arbitrary values of a parameter. For example, a method that can address five specific poses may not generalize well to arbitrary poses. Many previously existing databases studied fixed variations of parameters such as pose, lighting, and decorations. While useful, this does not guarantee the handling of more general cases of these parameters. Furthermore, there are too many sources of variation to effectively cover the set of possible observations in a controlled database. Some databases, such as the ones used in the 2005 Face Recognition Grand Challenge [77], used certain “uncontrolled settings” such as an office, a hallway, or outdoor environments. However, the fact that these databases were built manually (rather than mining previously existing photos) naturally limited the number of settings that could be included. Hence, while the settings were uncontrolled in that they were not carefully specified, they were drawn from a small fixed set of empirical settings that were available to the database curators. Algorithms tuned for such evaluations are not required to deal with a large amount of previously unseen variability.

In 2006, while results on some databases were saturating, there was still poor performance on problems with real-world variation.

1.3 Variations on Traditional Supervised Learning and the Relationship to Face Recognition

In parallel to the work in the early 2000s on face identification, there was a growing interest in the machine learning community in variations of the standard supervised learning problem with large training sets. These variations included:

- transfer learning, that is, sharing parameters from certain classes or distributions to other classes or distributions that may have less training data available [76], and

- semi-supervised learning, in which some training examples have no associated labels (e.g. [73]).

Several researchers chose face verification as a domain in which to study these new issues [21, 30, 36]. In particular, since face verification is about deciding whether two face images match (without any previous examples of those identities), it can be viewed as an instance of learning from a single training example. That is, letting the two images presented be I and J, I can be viewed as a single training example for the identity of a particular person. Then the problem can be framed as a binary classification problem in which the goal is to decide whether image J is in the same class as image I or not.

In addition, face verification is an ideal domain for the investigation of transfer learning, since learning the forms of variation for one person is important information that can be transferred to the understanding of how images of another person vary.

One interesting paper in this vein was the work of Chopra et al. from CVPR 2005 [30]. In this paper, a convolutional neural network (CNN) was used to learn a metric between face images. The authors specifically discuss the structure of the face recognition problem as a problem with a large number of classes and small numbers of training examples per class. In this work, the authors reported results on the relatively difficult AR database [66]. This paper was a harbinger of the recent highly successful application of CNNs to face verification.

1.3.1 Faces in the Wild and Labeled Faces in the Wild

Continuing the work on fine-grained recognition and recognition from a small number of examples, Ferencz et al. [36, 57] developed a method in 2005 for deciding whether two images represented the same object. They presented this work on data sets of cars and faces, and hence were also addressing the face verification problem. To make the problem challenging for faces, they used a set of news photos collected as part of the Berkeley “Faces in the Wild” project [18, 19] started by Tamara Berg and David Forsyth. These were news photos taken from typical news articles, representing people in a wide variety of settings, poses, expressions, and lighting. These photos proved to be very popular for research, but they were not suited to be a face recognition benchmark since (a) the images were only noisily labeled (more than 10 % were labeled incorrectly), and (b) there were large numbers of duplicates. Eventually, there was enough demand that the data were relabeled by hand, duplicates were removed, and protocols for use were written. The data were released as “Labeled Faces in the Wild” in conjunction with the original LFW technical report [49].

There were several goals behind the introduction of LFW. These included

- stimulating research on face recognition in unconstrained images;

- providing an easy-to-use database, with low barriers to entry, easy browsing, and multiple parallel versions to lower pre-processing burdens;

- providing consistent and precise protocols for the use of the database to encourage fair and meaningful comparisons;

- curating results to allow easy comparison, and easy replication of results in new research papers.

In the following section, we take a detailed look at many of the papers that have been published using LFW. We do not review all of the papers. Rather, we review papers for which the authors have provided results to the curators, and which are documented on the LFW results web page (Footnote 4). We now turn to describing results published on the LFW benchmark.

2 Algorithms and Methods

In this section, we discuss the progression of results on LFW from the time of its release until the present. LFW comes with specific sets of image pairs that can be used in training. These pairs are labeled as “same” or “different” depending upon whether the images are of the same person. The specification of exactly how these training pairs are used is described by various protocols.

2.1 The LFW Protocols

Originally, there were two distinct protocols described for LFW, the image-restricted and the unrestricted protocols. The unrestricted protocol allows the creation of additional training pairs by combining other pairs in certain ways. (For details, see the original LFW technical report [49].)

As many researchers started using additional training data from outside LFW to improve performance, new protocols were developed to maintain fair comparisons among methods. These protocols were described in a second technical report [47].

The current six protocols are:

1. Unsupervised.

2. Image-restricted with no outside data.

3. Unrestricted with no outside data.

4. Image-restricted with label-free outside data.

5. Unrestricted with label-free outside data.

6. Unrestricted with labeled outside data.

In order to make comparisons more meaningful, we discuss the various protocols in three groups.

In particular, we start with the protocol that allows outside data with identity labels. We then discuss the protocols that allow outside data not related to identity, and finally the two protocols allowing no outside data. We do not address the unsupervised protocol in this review.

2.1.1 Why Study Restricted Data Protocols?

Before starting on this task, it is worth asking the following question: Why might one wish to study methods that do not use outside data when their performance is clearly inferior to those that do use additional data? There are several possible answers to this question.

Utility of methods for other tasks. One reason to consider methods which use limited training data is that they can be used in other settings in which training data are limited. That is, in recognition problems other than face recognition, the hundreds of thousands or millions of images that are available to train face recognizers may simply not exist. Thus, a method that uses less training data is more transportable to other domains.

Statistical efficiency versus asymptotic optimality. It has been known since the mid-seventies [87] that many methods, such as K-nearest neighbors (K-NN), continue to increase in accuracy with increasing training data until their error reaches the lowest achievable value (known as the Bayes error rate). In other words, if one only cares about accuracy with unlimited training data and unlimited computation time, there is no method better than K-NN.

Thus, we know not only that many methods will continue to improve as more training data is added, but that many methods, including some of the simplest methods, will achieve optimal performance. This makes the question of statistical efficiency a primary one. The question is not whether we can achieve optimal accuracy (the Bayes error rate), but rather, how fast (in terms of training set size) we get there. Of course, a closely related question is which method performs best with a fixed training set size.
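The difference between asymptotic optimality and statistical efficiency can be illustrated with a small simulation. The sketch below is not taken from any of the reviewed papers: it assumes a toy one-dimensional problem with two equally likely Gaussian classes, for which the Bayes error rate is known in closed form, and a K-NN classifier whose K grows like the square root of the training set size. All names and constants are illustrative.

```python
import math
import numpy as np

def sample(n, rng):
    """Two equally likely classes: x ~ N(-1, 1) for y = 0 and x ~ N(+1, 1) for y = 1."""
    y = rng.integers(0, 2, size=n)
    x = rng.normal(loc=2.0 * y - 1.0, scale=1.0)
    return x, y

def knn_error(n_train, rng, n_test=1000):
    """Test error of K-NN with K growing like sqrt(n_train)."""
    k = int(math.sqrt(n_train)) | 1            # force K to be odd so votes cannot tie
    x_tr, y_tr = sample(n_train, rng)
    x_te, y_te = sample(n_test, rng)
    dist = np.abs(x_te[:, None] - x_tr[None, :])
    nearest = np.argpartition(dist, k - 1, axis=1)[:, :k]   # indices of the K closest
    votes = y_tr[nearest].mean(axis=1)                       # fraction voting for class 1
    return float(np.mean((votes > 0.5) != y_te))

# For this toy problem the optimal rule thresholds x at 0, so the
# Bayes error rate is P(N(1, 1) < 0) = 0.5 * erfc(1 / sqrt(2)).
bayes_error = 0.5 * math.erfc(1.0 / math.sqrt(2.0))

rng = np.random.default_rng(0)
print("Bayes error rate:", round(bayes_error, 4))
for n in (50, 500, 5000):
    print(n, "training points, K-NN test error:", knn_error(n, rng))
```

The interesting quantity is not the limit, which is the same for any consistent method, but how many training points each method needs to get close to it.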

At the same time, using equivalent data sets for training removes the question that plagues papers trained on huge, proprietary data sets: how much of their performance is due to algorithmic innovation, and how much is simply due to the specifics of the training data?

Despite our interest in fixed training set protocols, the practical issues of how to collect large data sets, and how to find methods that can benefit from them the most, make it interesting to push performance as high as possible with no ceiling on the data set size. The protocols of LFW consider all of these questions.

Human learning and statistical efficiency. Closely related to the previous point is the observation that humans solve many problems with very limited training data. While some argue that there is no particular need to mimic the way that humans solve problems, it is certainly interesting to try to discover the principles which allow them to learn from small numbers of examples. It seems likely that these principles will improve our ability to design efficient learning algorithms.

2.1.2 Order of Discussion

Within each protocol, we primarily discuss algorithms in the order in which we received the results. Note that this order does not always correspond to the official publication order (Footnote 5). We make every effort to remark on the first authors to use a particular technique, and also to refer to prior work in other areas or on other databases that may have used similar techniques previously. We apologize for any oversights in advance. Note that some methods, especially some of the commercial ones, do not give much detail about their implementations. Rather than devoting an entire section to methods for which we have little detail, we summarize them in Sect. 2.5. We now start with protocols incorporating labeled outside data.

2.2 Unrestricted with Labeled Outside Data

This protocol allows the use of same and different training pairs from outside of LFW. The only restriction is that such data sets should not include pictures of people whose identities appear in the test sets. The use of such outside data sets has dramatically improved performance in several cases.

2.2.1 Attribute and Simile Classifiers for Face Verification, 2009 [53]

Kumar et al. [53] present two main ideas in this paper. The first is to explore the use of describable attributes for face verification. For the attribute classifiers, 65 describable visual traits such as gender, age, race, and hair color are used. At least 1000 positive and 1000 negative pairs of each attribute were used for training each attribute classifier. The paper gives the accuracy of each individual attribute classifier. Note that the attribute classifier does not use labeled outside data, and thus, when not used in conjunction with the simile classifier, qualifies for the unlabeled outside data protocols.

The second idea develops what they call simile classifiers, in which various classifiers are trained to rate face parts as “similar” or “not similar” to the face parts of certain reference individuals. To train these “simile” classifiers, multiple images of the same individuals (from outside of the LFW training data) are used, and thus this method uses outside labeled data.

The original paper [53] gives an accuracy of 85.29 ± 1.23 % for the hybrid system, and a follow-up journal paper [54] gives slightly higher results of 85.54 ± 0.35 %. These numbers should be adjusted downward slightly to 84.52 and 84.78 % since there was an error in how their accuracies were computed (Footnote 6).

This paper was also notable in that it gave results for human recognition on LFW (99.2 %). Humans had an unfair advantage on LFW: many of the LFW images show celebrities, so human observers have seen prior images of many test subjects, which is not allowed under any of the protocols. These results have nevertheless been widely cited as a target for research. The authors also noted that humans could do remarkably well using only close crops of the face (97.53 %), and even using only “inverse crops”, including none of the face, but portions of the hair, body, and background of the image (94.27 %).

2.2.2 Face Recognition with Learning-Based Descriptor, 2010 [26]

Cao et al. [26] develop a visual dictionary based on unsupervised clustering. They explore K-means, principal components analysis (PCA) trees [37] and random projection trees [37] to build the dictionary. While this was a relatively early use of learned descriptors, they were not learned discriminatively, i.e. to optimize performance.

One of the other main innovative aspects of this paper was building verification classifiers for various combinations of poses such as frontal-frontal, or rightfacing-leftfacing, to optimize feature weights conditioned on the specific combination of poses. This was done by finding the nearest pose to training and test examples using the Multi-PIE data set [38]. Because the Multi-PIE data set uses multiple images of the same subject, this paper is put in the category with outside labeled data. However, it seems plausible that this method could be used on a subset of Multi-PIE that did not have images of the same person, as long as there was a full range of labeled poses. Such a method, if pursued, would qualify these techniques for the category image-restricted with label-free outside data.

The highest accuracy reported for their method was 84.45 ± 0.46 %.

2.2.3 An Associate-Predict Model for Face Recognition, 2011 [110]

This paper presented one of the first systems to use a large additional amount of outside labeled data, and was, perhaps not coincidentally, the first system to achieve over 90 % on the LFW benchmark.

The main idea in this paper (similar to some older work [13]) was to associate a face with one person in a standard reference set, and use this reference person to predict the appearance of the original face in new poses and lighting conditions.

Building on the previous work by one of the co-authors [26], this paper also uses different strategies depending upon the relative poses of the presented face pair. If the poses of the two faces are deemed sufficiently similar, then the faces are compared directly. Otherwise, the associate-predict method is used to try to map between the poses. The best accuracy of this system under the unrestricted with labeled outside data protocol was 90.57 ± 0.56 %.

2.2.4 Leveraging Billions of Faces to Overcome Performance Barriers in Unconstrained Face Recognition, 2011 [95]

This proprietary method from Face.com uses 3D face frontalization and illumination handling along with a strong recognition pipeline and achieves 91.30 ± 0.30 % accuracy on LFW. They report having amassed a huge database of almost 31 billion faces from over a billion persons.

They further discuss the contribution of effective 3D face alignment (or frontalization) to the task of face verification, as this is able to effectively take care of out-of-plane rotation, which 2D based alignment methods are not able to do. The 3D model is then used to render all images into a frontal view. Some details are given about the recognition engine—it uses non-parametric discriminative models by leveraging their large labeled data set as exemplars.

2.2.5 Tom-vs-Pete Classifiers and Identity-Preserving Alignment for Face Verification, 2012 [16]

This work presented two significant innovations. The first was to do a new type of non-affine warping of faces to improve correspondences while preserving as much information as possible about identity. While previous work had addressed the problem of non-linear pose-normalization (see, for example, the work by Asthana et al. [10, 11]), it had not been successfully used in the context of LFW.

In particular, as the authors note, simply warping two faces to maximize similarity may reduce the ability to perform verification by eliminating discriminative information between the two individuals. Instead, a warping should be done to maximize similarity while maintaining identity information. The authors achieve this identity-preserving warping by adjusting the warping algorithm so that parts with informative deviations in geometry (such as a wide nose) are preserved better (see the paper for additional details). This technique improves accuracy by about 2 percentage points (from 91.20 to 93.10 %) relative to more standard alignment techniques.

This paper was also one of the first evaluated on LFW to use the approximate symmetry of the face to its advantage. Since using the above warping procedure tends to distort the side of the face further from the camera, the authors reflect the face, if necessary, such that the side closer to the camera is always on the right side of the photo. This results in the right side of the picture typically being more faithful to the appearance of the person. As a result, the learning algorithm which is subsequently applied to the flipped faces can learn to rely more on the more faithful side of the face. It should be noted, however, that the algorithm pays a price when the person’s face is asymmetric to begin with, since it may need to match the left side of a person’s face to their own right side. Still this use of facial symmetry improves the final results.

The second major innovation was the introduction of so-called Tom-vs-Pete classifiers as a new type of learned feature. These features were developed by using external labeled training sets (also labeled with part locations) to develop binary classifiers for pairs of identities, such as two individuals named Tom and Pete. For each of the \({n\choose 2}\) pairs of identities in the external training set, k separate classifiers are built, each using SIFT features from a different region of the face. Thus, the total number of Tom-vs-Pete classifiers is \(k \times { n\choose 2}\). A subset of these were chosen by maximizing discriminability.
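The size of the candidate pool follows directly from the count given above. The short sketch below evaluates \(k \times {n\choose 2}\) for made-up values of n and k; these values are chosen only for illustration and are not the numbers used in [16].

```python
from math import comb

def num_tom_vs_pete_classifiers(n_identities, k_regions):
    """Number of candidate classifiers: k per unordered pair of identities."""
    return k_regions * comb(n_identities, 2)

# Hypothetical example: 100 reference identities and 10 face regions.
print(num_tom_vs_pete_classifiers(n_identities=100, k_regions=10))
```

Because the count grows quadratically in the number of identities, selecting a discriminative subset quickly becomes necessary.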

The highest accuracy of their system was 93.10 ± 1.35 %. However, they increased accuracy (and reduced the standard error) a bit further by adding attribute features based upon their previous work, to 93.30 ± 1.28 %.
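Results throughout this section are quoted as a mean accuracy with a ± term. The sketch below shows how such a figure can be computed, assuming that the ± term is the standard error of the mean over cross-validation folds and that ten folds are used. The per-fold accuracies are invented for the example and do not correspond to any published result.

```python
import math

def mean_and_standard_error(fold_accuracies):
    """Mean accuracy and standard error of the mean across folds."""
    n = len(fold_accuracies)
    mean = sum(fold_accuracies) / n
    # Unbiased sample variance (divides by n - 1).
    variance = sum((a - mean) ** 2 for a in fold_accuracies) / (n - 1)
    return mean, math.sqrt(variance / n)

# Hypothetical accuracies for ten folds.
folds = [0.92, 0.94, 0.93, 0.95, 0.91, 0.93, 0.94, 0.92, 0.93, 0.93]
mean, stderr = mean_and_standard_error(folds)
print(f"{100 * mean:.2f} +/- {100 * stderr:.2f} %")
```

With only a handful of folds the standard error is itself a noisy estimate, so small differences in the ± term between two methods should not be over-interpreted.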

2.2.6 Bayesian Face Revisited: A Joint Formulation, 2012 [28]

One of the most important aspects of face recognition in general, viewed as a classification problem, is that all of the classes (represented by individual identities) are highly similar. At the same time, within each class is a significant amount of variability due to pose, expression, and so on. To understand whether two images represent the same person, it can be argued that one should model both the distribution of identities, and also the distribution of variations within each identity.

This basic idea was originally proposed by Moghaddam et al. in their well-known paper “Bayesian face recognition” [69]. In that paper, the authors defined a difference between two images, estimated the distribution of these differences conditioned on whether the images were drawn from the same identity or not, and then evaluated the posterior probability that this difference was due to the two images coming from different identities.

Chen et al. [28] point out a potential shortcoming of the probabilistic method applied to image differences. They note that by forming the image difference, information available to distinguish between two classes (in this case the “same” versus “different” classes of the verification paradigm) may be thrown out. In particular, if \(\mathbf{x}\) and \(\mathbf{y}\) are two image vectors of length N, then the pair of images, considered as a concatenation of the two vectors, contains 2N components. Forming the difference image is a linear operator corresponding to a projection of the image pair back to N dimensions, hence removing some of the information that may be useful in deciding whether the pair is “same” or “different”. This is illustrated in Fig. 1. To address this problem, Chen et al. focus on modeling the joint distribution of image pairs (of dimension 2N) rather than the difference distribution (of dimension N). This is an elegant formulation that has had a significant impact on many of the follow-up papers on LFW.

Fig. 1. When the information from two images is projected to a lower dimension by forming the difference, discriminative information may be lost. The joint Bayesian approach [28] strives to avoid this projection, thus preserving some of the discriminative information.
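The loss of information caused by differencing can be checked numerically. The sketch below writes the difference as the linear map D = [I, −I] acting on the concatenated pair and shows two distinct pairs that become indistinguishable after the projection. The vectors are arbitrary toy values with N = 3.

```python
import numpy as np

N = 3
# D maps a concatenated pair (2N values) to the difference x - y (N values).
D = np.hstack([np.eye(N), -np.eye(N)])

x = np.array([1.0, 2.0, 3.0])
y = np.array([1.0, 2.0, 3.0])

pair = np.concatenate([x, y])
shifted_pair = np.concatenate([x + 5.0, y + 5.0])   # a different pair of images

print("rank of D:", np.linalg.matrix_rank(D))        # N of the 2N dimensions survive
print("difference of first pair: ", D @ pair)
print("difference of second pair:", D @ shifted_pair)
```

Any component shared by both images lies in the null space of D, so a classifier that only sees the difference cannot use it.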

Another appealing aspect of this paper is the analysis that shows the relationship between the joint Bayesian method and the reference-based methods, such as the simile classifier [53], the multiple one-shots method [96], and the associate-predict method [110]. The authors show that their method can be viewed as equivalent to a reference method in the case that there are an infinite number of references, and that the distributions of identities and within class variance are Gaussian.
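Under the Gaussian assumptions just mentioned, a joint model of the pair leads to a log-likelihood ratio that is easy to write down. The sketch below assumes that each feature vector is the sum of an identity component and an independent within-person component, both zero-mean Gaussian with covariances named `s_mu` and `s_eps` here. These names, the toy covariances, and the omission of any procedure for estimating them are assumptions of this example, not details taken from [28].

```python
import numpy as np

def gaussian_logpdf(v, cov):
    """Log density of a zero-mean Gaussian with covariance `cov`, evaluated at `v`."""
    _, logdet = np.linalg.slogdet(cov)
    quad = v @ np.linalg.solve(cov, v)
    return -0.5 * (len(v) * np.log(2.0 * np.pi) + logdet + quad)

def joint_log_likelihood_ratio(x1, x2, s_mu, s_eps):
    """log P(x1, x2 | same) - log P(x1, x2 | different) for the 2N-dimensional pair."""
    total = s_mu + s_eps
    zero = np.zeros_like(s_mu)
    # Same identity: the two images share the identity component, so they covary by s_mu.
    cov_same = np.block([[total, s_mu], [s_mu, total]])
    # Different identities: the two images are independent.
    cov_diff = np.block([[total, zero], [zero, total]])
    pair = np.concatenate([x1, x2])
    return gaussian_logpdf(pair, cov_same) - gaussian_logpdf(pair, cov_diff)

# Toy covariances: identity variation dominates within-person variation.
s_mu = 4.0 * np.eye(2)
s_eps = 1.0 * np.eye(2)
x = np.array([2.0, -1.5])

print(joint_log_likelihood_ratio(x, x, s_mu, s_eps))    # identical features
print(joint_log_likelihood_ratio(x, -x, s_mu, s_eps))   # opposite features
```

A positive ratio favors the “same” hypothesis and a negative ratio favors “different”; thresholding the ratio gives a verification decision.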

The accuracy of this method while using outside data for training (unrestricted with labeled outside data) was 92.42 ± 1.08 %.

2.2.7 Blessing of Dimensionality: High-Dimensional Feature and Its Efficient Compression for Face Verification, 2013 [29]

This paper argues that high-dimensional descriptors are essential for high performance, and also describes a compression method termed rotated sparse regression. They construct the high-dimensional feature using local binary patterns (LBP), histograms of oriented gradients (HOG) and others, extracted at 27 facial landmarks and at five scales on 2D aligned images. They first use principal components analysis (PCA) to reduce this to 400 dimensions and then use a supervised method such as linear discriminant analysis (LDA) or a joint Bayesian model [28] to find a discriminative projection. In a second step, they use L1-regularized regression to learn a sparse projection that directly maps the original high-dimensional feature into the lower-dimensional representation learned in the previous stage.
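The two-stage idea can be sketched in a few lines. The example below is a simplification under stated assumptions: it uses random data in place of face descriptors, takes the PCA output itself as the low-dimensional target (the supervised LDA or joint Bayesian step and the rotation used in [29] are omitted), and solves the L1-regularized regression with plain iterative soft thresholding (ISTA), which is a swapped-in generic solver rather than the authors' procedure. All function names and constants are hypothetical.

```python
import numpy as np

def pca_project(X, d):
    """Project centered data onto its top-d principal components."""
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ vt[:d].T

def objective(X, Y, B, lam):
    """(1 / 2n) * ||Y - X B||_F^2 + lam * ||B||_1."""
    n = X.shape[0]
    return 0.5 / n * np.sum((Y - X @ B) ** 2) + lam * np.sum(np.abs(B))

def sparse_regression(X, Y, lam, n_iter=500):
    """ISTA: gradient step on the squared loss followed by soft thresholding."""
    n = X.shape[0]
    step = n / (np.linalg.norm(X, 2) ** 2)       # 1 / Lipschitz constant of the gradient
    B = np.zeros((X.shape[1], Y.shape[1]))
    for _ in range(n_iter):
        grad = X.T @ (X @ B - Y) / n
        B = B - step * grad
        B = np.sign(B) * np.maximum(np.abs(B) - step * lam, 0.0)
    return B

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 50))                   # stand-in for high-dimensional features
X = X - X.mean(axis=0)
Y = pca_project(X, d=5)                          # stand-in for the learned low-dim target
B = sparse_regression(X, Y, lam=0.05)

print("projection shape:", B.shape)
print("fraction of zero entries:", float(np.mean(B == 0.0)))
```

At test time only the sparse matrix `B` is needed, so a feature can be mapped to the compact representation with far fewer multiplications than a dense projection would require.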

They report accuracies of 93.18 ± 1.07 % under the unrestricted with label-free outside data protocol and 95.17 ± 1.13 % using their WDRef (99,773 images of 2995 subjects) data set for training following the unrestricted with labeled outside data protocol.

2.2.8 A Practical Transfer Learning Algorithm for Face Verification, 2013 [25]

This paper applies transfer learning to extend the high performing joint Bayesian method [28] for face verification. In addition to the data likelihood of the target domain, they add the KL-divergence between the source and target domains as a regularizer to the objective function. The optimization is done via closed-form updates in an expectation-maximization framework. The source domain is the non-public WDRef data set used in their previous versions [28, 29] and the target is set to be LFW. They use the high-dimensional LBP features from [29], reducing its size from over 10,000 dimensions to 2000 by PCA.
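For Gaussian models, a KL-divergence term of this kind has a closed form. The sketch below implements the standard KL divergence between two multivariate Gaussians; which distributions are compared in [25], and how the term is weighted against the target likelihood, are not specified in this survey, so the function is shown only as a generic building block with toy inputs.

```python
import numpy as np

def gaussian_kl(mu0, cov0, mu1, cov1):
    """KL( N(mu0, cov0) || N(mu1, cov1) ) for full-rank covariance matrices."""
    k = len(mu0)
    diff = mu1 - mu0
    trace_term = np.trace(np.linalg.solve(cov1, cov0))
    quad_term = diff @ np.linalg.solve(cov1, diff)
    _, logdet0 = np.linalg.slogdet(cov0)
    _, logdet1 = np.linalg.slogdet(cov1)
    return 0.5 * (trace_term + quad_term - k + logdet1 - logdet0)

# Toy "source" and "target" models in two dimensions.
mu = np.zeros(2)
source_cov = np.eye(2)
target_cov = 2.0 * np.eye(2)

print(gaussian_kl(mu, source_cov, mu, source_cov))   # identical models
print(gaussian_kl(mu, source_cov, mu, target_cov))   # target has larger variance
```

Used as a regularizer, such a term penalizes target-domain parameters that drift far from those learned on the larger source data set.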

They report 96.33 ± 1.08 % accuracy on LFW in the unrestricted with labeled outside data protocol, which improves over the results from using joint Bayesian without the transfer learning on high dimensional LBP features [29].

2.2.9 Hybrid Deep Learning for Face Verification, 2013 [91]

This method [91] uses an elaborate hybrid network of convolutional neural networks (CNNs) and a Classification-RBM (restricted Boltzmann machine), trained directly for verification. A pair of 2D aligned face images is input to the network. At the lower part, there are 12 groups of CNNs, which take in images each covering a particular part of the face, some in colour and some in grayscale. Each group contains five CNNs that are trained using different bootstrap samples of the training data. A single CNN consists of four convolutional layers and a max-pooling layer. Similar to [48], they use local convolutions in the mid- and high-level layers of the CNNs. There are eight possible “input modes”, or combinations of horizontally flipping the input pair of images, and each of these is fed separately to the networks.

The output from all these networks is in layer L0, having 8 × 5 × 12 neurons. The next two layers average the outputs, first among the eight input modes and then among the five networks in a group. The final layer is a classification RBM (which models the joint distribution of class labels, binary input vectors and binary hidden units) with two outputs that indicate same or different class for the pair. It is discriminatively trained by minimizing the negative log probability of the target class given the input, using gradient descent. The CNNs and the RBM are trained separately; then the whole model is jointly fine-tuned using back-propagation. Model averaging is done by training the RBM with five different random sets of training data and averaging the predictions.

They create a new training data set, “CelebFaces”, consisting of 87,628 images of 5436 celebrities collected from the web. They report 91.75 ± 0.48 % accuracy on LFW in the unrestricted with label-free outside data protocol and 92.52 ± 0.38 % following the unrestricted with labeled outside data protocol.
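The bookkeeping of the two averaging layers can be made explicit with array shapes. In the sketch below, random numbers stand in for the CNN outputs, and the array layout (group, network, input mode) is an assumption made for the example; only the counts 12, 5, and 8 come from the description above.

```python
import numpy as np

n_groups, n_networks, n_modes = 12, 5, 8

rng = np.random.default_rng(0)
# Stand-in for layer L0: one output per (group, network, input mode).
layer0 = rng.random((n_groups, n_networks, n_modes))

per_network = layer0.mean(axis=2)       # average over the eight input modes
per_group = per_network.mean(axis=1)    # then over the five networks in each group

print("L0 neurons:", layer0.size)
print("after averaging over input modes:", per_network.shape)
print("after averaging over networks:", per_group.shape)
```

The resulting vector, with one value per group, is what the final classification layer would receive in this simplified picture.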

2.2.10 POOF: Part-Based One-vs-One Features for Fine-Grained Categorization, Face Verification, and Attribute Estimation, 2013 [17]

When annotations of parts are provided, this method learns highly discriminative features between two classes based on the appearance at a particular landmark or part that has been provided. They formulate face verification as a fine-grained classification task, for which this descriptor is designed to be well suited.

Training a single “POOF”, or Part-Based One-vs-One Feature, requires a pair of classes to distinguish and two part locations: one for alignment and the other for feature extraction. All the images of the two classes are aligned with respect to the two locations using similarity transforms, with a horizontal distance of 64 pixels between them. A crop of 64 × 128 at the mid-point of the two locations is taken and grids of 8 × 8 and 16 × 16 are placed on it. Gradient direction histograms and color histograms are used as base features for each cell and concatenated. A linear support vector machine (SVM) is trained on these to separate the two classes. These SVM weights are used to find the most discriminative cell locations and a mask is obtained by thresholding these values. Starting from a given part location as a seed, its connected component is found in the thresholded mask. Base features from cells in this connected component are concatenated and another linear SVM is used to separate the two classes. The score from this SVM is the score of that part-based feature.

They learn a random subset of 10,000 POOFs using the database in [16], getting two 10,000-dimensional vectors for each LFW pair. They use both the absolute difference (|f(A) − f(B)|) and the product (f(A) · f(B)) of these vectors to train a same-versus-different classifier on the LFW training set. They report 93.13 ± 0.40 % accuracy on LFW following the unrestricted with labeled outside data protocol.
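To make the pair representation concrete, the short Python sketch below builds the input to a same-versus-different classifier from two descriptor vectors. We assume here that the product is taken element-wise and that the two parts are concatenated; the function name and the toy three-dimensional vectors are ours and only stand in for the 10,000-dimensional POOF vectors.

```python
def pair_features(f_a, f_b):
    """Concatenate |f(A) - f(B)| and f(A) * f(B), both taken element-wise."""
    if len(f_a) != len(f_b):
        raise ValueError("descriptors must have the same length")
    abs_diff = [abs(a - b) for a, b in zip(f_a, f_b)]
    product = [a * b for a, b in zip(f_a, f_b)]
    return abs_diff + product


# Toy descriptors (hypothetical values).
f_a = [0.5, -1.0, 2.0]
f_b = [1.5, 1.0, 2.0]
features = pair_features(f_a, f_b)
```

Both parts are symmetric in their arguments, so under these assumptions the resulting feature does not depend on the order in which the two faces are presented.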

2.2.11 Learning Discriminant Face Descriptor for Face Recognition, 2014 [58]

This approach learned a “Discriminant Face Descriptor” (DFD) that improves on LBP features (which are essentially differences between the value of a particular pixel and the values of its neighbours). They use the Fisher criterion, maximizing between-class scatter and minimizing within-class scatter, to learn discriminative filters that extract features at the pixel level as well as to find optimal weights for the contribution of neighbouring pixels in computing the descriptor. K-means clustering is used to find the most dominant clusters among these discriminant descriptors (typically of length 20). They reported best performance using K = 1024 or 2048.

They used the LFW-a images and cropped the images to 150 × 130. They further used a spatial grid to encode different parts of the face separately into their DFD representation and also applied PCA whitening. The descriptors themselves were learned using the FERET data set (unrestricted with labeled outside data); however, the authors note that the distribution of images in FERET is quite different from that of LFW, so performance on LFW is an indicator of the generalization power of their descriptor. They report an LFW accuracy of 84.02 ± 0.44 %.

2.2.12 Face++, 2014

We discuss two papers from the Face++/Megvii Inc. group here, both involving supervised deep learning on large labeled data sets. These, along with Facebook’s DeepFace [97] and DeepID [92], exploited massive amounts of labeled outside data to train deep convolutional neural networks (CNNs) and reach very high performance on LFW.

In the first paper from the Face++ group, a new structure, which they term the pyramid CNN [34], is used. It conducts supervised training of a deep neural network one layer at a time, thus greatly reducing computation. A four-level Siamese network trained for verification was used. The network was applied on four face landmarks and the outputs were concatenated. They report an accuracy of 97.3 % on the LFW unrestricted with labeled outside data protocol.

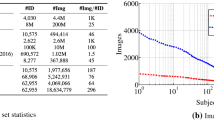

The Megvii Face Recognition System [113] was trained on a data set of five million labeled faces of around 20,000 identities. A ten-layer network was trained for identification on this data set. The second-to-last layer, followed by PCA, was used as the face representation. Face verification was done using the L2 norm score, achieving 99.50 ± 0.36 % accuracy. With the massive training data set size, they argue that the advantages of using more sophisticated architectures and methods become less significant. They investigate the long tail effect of web-collected data (lots of persons with very few image samples) and find that after the first 10,000 most frequent individuals, including more persons with very few images into the training set does not help. They also show in a secondary experiment that high performance on LFW does not translate to equally high performance in a real-world security certification setting.

2.2.13 DeepFace: Closing the Gap to Human-Level Performance in Face Verification, 2014 [97]

This paper from Facebook [97] has two main novelties: a method for 3D face frontalization (footnote 7) and a deep neural net trained for classification. The neural network featured 120 million parameters, and was trained on 4000 identities having four million images (the non-public SFC data set). This paper was one of the first papers to achieve very high accuracies on LFW using CNNs. However, as mentioned above, other papers that used deep networks for face recognition predated this by several years [48, 70]. Figure 2 shows the basic architecture of the DeepFace CNN, which is typical of deep architectures used on other non-face benchmarks such as ImageNet.

Fig. 2 The architecture of the DeepFace convolutional neural network [97]. This type of architecture, which has been widely used in other object recognition problems, has become a dominant presence in the face recognition literature.

3D frontalized RGB faces of size 152 × 152 are taken as input, followed by 32 convolution filters of size 11 × 11 (C1), a max-pooling layer (2 × 2 size with a stride of two pixels, M2) and another convolutional layer with 16 filters of size 9 × 9 (C3). The next three layers (L4–6) are locally connected layers [48], followed by two fully connected layers (F7–8). The 4096-dimensional F7 layer output is used as the face descriptor. ReLU activation units are used as the non-linearity in the network and dropout regularization is applied to the F7 layer. L2-normalization is applied to the descriptor. Training the network for 15 epochs took three days. The weighted χ² distance is used as the verification metric. Three different input image types (3D aligned RGB, grayscale with gradient magnitude and orientation, and 2D aligned RGB) are used, and their scores are combined using a kernel support vector machine (SVM). Using the restricted protocol, this reaches 97.15 % accuracy. Under the unrestricted protocol, they train a Siamese network (initially using their own SFC data set, followed by two epochs on LFW pairs), reaching 97.25 % after combining the Siamese network with the above ensemble. Finally, by adding four randomly-seeded DeepFace networks to the ensemble, a final accuracy of 97.35 ± 0.25 % is reached on LFW following the unrestricted with labeled outside data protocol.
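For readers unfamiliar with the weighted χ² distance, a minimal Python sketch follows. It assumes the common form Σᵢ wᵢ (f₁[i] − f₂[i])² / (f₁[i] + f₂[i]) on non-negative descriptors; the per-dimension weights are taken as given (how they are learned is not covered here), and the function name, the `eps` guard, and the toy vectors are our own.

```python
def weighted_chi2(f1, f2, weights, eps=1e-12):
    """Weighted chi-squared distance between two non-negative descriptors."""
    total = 0.0
    for a, b, w in zip(f1, f2, weights):
        denom = a + b
        if denom > eps:  # skip dimensions where both features are zero
            total += w * (a - b) ** 2 / denom
    return total


# Toy L2-normalized, non-negative descriptors and uniform weights (hypothetical).
f1 = [0.0, 0.6, 0.8]
f2 = [0.0, 0.8, 0.6]
w = [1.0, 1.0, 1.0]
d = weighted_chi2(f1, f2, w)
```

Because the descriptor comes after a ReLU non-linearity, its entries are non-negative, which is what keeps the denominator well behaved in this sketch.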

2.2.14 Recover Canonical-View Faces in the Wild with Deep Neural Networks, 2014 [114]

In this paper, the authors train a convolutional neural network to recover the canonical view of a face by training it on 2D images without any use of 3D information. They develop a formulation using symmetry and matrix-rank terms to automatically select the frontal face image for each person at training time. Then the deep network is used to learn the regression from face images in arbitrary view to the canonical (frontal) view.

After this canonical pose recovery is performed, they detect five landmarks from the aligned face and train a separate network for each patch at each landmark along with one network for the entire face. These small networks (two convolutional and two pooling layers) are connected at the fully connected layer and trained on the CelebFaces data set [91] with the cross-entropy loss to predict identity labels. Following this, a PCA reduction is done, and an SVM is used for the verification task, resulting in an accuracy of 96.45 ± 0.25 % under the unrestricted with labeled outside data protocol.

2.2.15 Deep Learning Face Representation from Predicting 10,000 Classes, 2014 [92]

In this approach, called “DeepID” [92], the authors trained a network to recognize 10,000 face identities from the “CelebFaces” data set [91] (87,628 face images of 5436 celebrities, non-overlapping with LFW identities). The CNNs had four convolutional layers (with 20, 40, 60 and 80 feature maps), followed by max-pooling, a 160-dimensional fully-connected layer (DeepID-layer) and a softmax layer for the identities. The higher convolutional layers had locally shared weights. The fully-connected layer was connected to both the third and fourth convolutional layers in order to see multi-scale features, referred to as a “skipping” layer. Faces were globally aligned based on five landmarks. The input to a network was one out of 60 patches, which were square or rectangular and could be either colour or grayscale. Sixty CNNs were trained on flipped patches, yielding a 160 × 2 × 60 dimensional descriptor of a single face. PCA reduction to 150 dimensions was done before learning the joint Bayesian model, reaching an accuracy of 96.05 %. Expanding the data set (CelebFaces+ [88]) and using the joint Bayesian model for verification gives them a final accuracy of 97.45 ± 0.26 % under the unrestricted with labeled outside data protocol.

2.2.16 Surpassing Human-Level Face Verification Performance on LFW with GaussianFace, 2014 [65]

This method uses multi-task learning and the discriminative Gaussian process latent variable model (DGP-LVM) [55, 100], and is one of the top performers on LFW. The DGP-LVM [100] maps a high-dimensional data representation to a lower-dimensional latent space using a discriminative prior on the latent variables while maximizing the likelihood of the latent variables in the Gaussian process (GP) framework for classification. GPs themselves have been observed to be able to make accurate predictions given small amounts of data [55] and are also robust to situations when the training and test data distributions are not identical. The authors were motivated to use DGP-LVM over the more usual GPs for classification as the former, by virtue of its discriminative prior, is a more powerful predictor.

The DGP-LVM is reformulated using a kernelized linear discriminant analysis to learn the discriminative prior on latent variables, and multiple source domains are used to train for the target domain task of verification on LFW. They detail two uses of their GaussianFace model: as a binary classifier and as a feature extractor. For the feature extraction, they use clustering based on GPs [51] on the joint vectors of two faces. They compute first and second order statistics for input joint feature vectors and their latent representations and concatenate them to form the final feature. These GP-extracted features are used in the GP-classifier in their final model.

Using 200,000 training pairs, the “GaussianFace” model reached 98.52 ± 0.66 % accuracy on LFW under the unrestricted with labeled outside data protocol, surpassing the recorded human performance on close-cropped faces (97.53 %).

2.2.17 Deep Learning Face Representation by Joint Identification-Verification, 2014 [88]

Building on the previous model, DeepID [92], “DeepID2” [88] used both an identification signal (cross-entropy loss) and a verification signal (L2 norm verification loss between DeepID2 pairs) in the objective function for training the network, and expanded the CelebFaces data set to “CelebFaces+”, which has 202,599 face images of 10,177 celebrities from the web. 400 aligned face crops were taken to train a network for each patch and a greedy selection algorithm was used to select the best 25 of these. A final 4000 (25 ∗ 160) dimensional face representation was obtained, followed by PCA reduction to 180-dimensions and joint Bayesian verification, achieving 98.97 % accuracy.

The network had four convolutional layers and max-pooling layers were used after the first three convolutional layers. The third convolutional layer was locally connected, sharing weights in 2 × 2 local regions. As mentioned before, the loss function was a combined loss from identification and verification signals. The rationale behind this was to encourage features that can discriminate identity, and also reduce intra-personal variations by using the verification signal. They show that using either of the losses alone to train the network is sub-optimal and the appropriate loss function is a weighted combination of the two.
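The combined objective can be sketched as follows. This is a minimal illustration under our own assumptions: the identification term is the cross-entropy of the correct identity, the verification term is a contrastive-style L2 loss with a margin, and the weight `lam` and `margin` values are placeholders rather than the settings used in [88].

```python
import math


def identification_loss(class_probs, target):
    """Cross-entropy loss: negative log probability of the correct identity."""
    return -math.log(class_probs[target])


def verification_loss(f_i, f_j, same, margin=1.0):
    """L2-based loss: pull same-identity features together and push
    different-identity features at least `margin` apart."""
    dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(f_i, f_j)))
    if same:
        return 0.5 * dist ** 2
    return 0.5 * max(0.0, margin - dist) ** 2


def joint_loss(probs_i, target_i, probs_j, target_j, f_i, f_j,
               lam=0.05, margin=1.0):
    """Weighted combination of the identification and verification signals."""
    ident = (identification_loss(probs_i, target_i)
             + identification_loss(probs_j, target_j))
    verif = verification_loss(f_i, f_j, target_i == target_j, margin)
    return ident + lam * verif


# Toy pair of the same identity (hypothetical softmax outputs and features).
probs = [0.5, 0.25, 0.25]
loss = joint_loss(probs, 0, probs, 0, [0.0, 0.0], [3.0, 4.0], lam=0.1)
```

Setting `lam` to zero recovers a purely identification-trained network, which is one of the two single-loss baselines the authors report as sub-optimal.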

A total of seven networks are trained using different sets of selected patches for training. The joint Bayesian scores are combined using an SVM, achieving 99.15 ± 0.13 % accuracy under the unrestricted with labeled outside data protocol.

2.2.18 Deeply Learned Face Representations are Sparse, Selective and Robust, 2014 [93]

Following on with the DeepID “family” of models, “DeepID2+” [93] increased the number of feature maps to 128 in the four convolutional layers, increased the DeepID size to 512 dimensions, and expanded the training set to around 290,000 face images from 12,000 identities by merging the CelebFaces+ [88] and WDRef [29] data sets. Another interesting novelty of this method was the use of a loss function at multiple layers of the network, instead of the standard supervisory signal (loss function) at the top layer only. They branched out 512-dimensional fully-connected layers at each of the four convolutional layers (after the max-pooling step) and added the loss function (a joint identification-verification loss) after each fully-connected layer for additional supervision at the early layers. They show that removing the added supervision lowers performance, and they present some interesting analysis of the sparsity of the neural activations. They report that only about half the neurons get activated for an image, and each neuron activates for about half the images. Moreover, they found a difference of less than 1 % when using a binary representation obtained by thresholding, which led them to state that whether a neuron is activated or not is more important than the actual value of that activation.

They report an accuracy of 99.47 ± 0.12 % (unrestricted with labeled outside data) using the joint Bayesian model trained on 2000 people in their training set and combining the features from 25 networks trained on the same patches as DeepID2 [88].

2.2.19 DeepID3: Face Recognition with Very Deep Neural Networks, 2015 [89]

“DeepID3” uses a deeper network (10–15 feature extraction layers) with Inception layers [94] and stacked convolution layers (successive convolutional layers without any pooling layer in between) on a similar overall pipeline to DeepID2+ [93]. Similar to DeepID2+, they include unshared weights in later convolutional layers, max-pooling in early layers and the addition of joint identification-verification loss functions to branched-out fully connected layers from each pooling layer in the network.

They train two networks, one using the stacked convolution and the other using the recently-proposed Inception layer used in the GoogLeNet architecture, which was a top performer in the 2014 ImageNet challenge [94]. The two networks reduce the error rate of DeepID2+ by 0.81 % and 0.26 %, respectively.

The features from both networks on 25 patches are combined into a vector of about 30,000 dimensions. It is PCA reduced to 300 dimensions, followed by learning a joint Bayesian model. It achieved 99.53 ± 0.10 % verification accuracy on LFW (unrestricted with labeled outside data).

2.2.20 FaceNet: A Unified Embedding for Face Recognition and Clustering, 2015 [82]

This model from Google, called FaceNet [82], uses 128-dimensional representations from very deep networks, trained on a 260-million image data set using a triplet loss at the final layer. The loss separates a positive pair from a negative pair by a margin. An online hard negative exemplar mining strategy within each mini-batch is used in training the network. This loss directly optimizes for the verification task and so a simple L2 distance between the face descriptors is sufficient.
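A minimal Python sketch of a triplet loss and of a simple in-batch hard negative selection is given below. It assumes squared L2 distances and a hinge with margin; the margin value, the "pick the closest negative" rule, and all names are illustrative assumptions of ours and simplify the mining strategy actually used in [82].

```python
def squared_l2(u, v):
    """Squared Euclidean distance between two embeddings."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge loss that is zero once the negative is farther from the anchor
    than the positive by at least `margin` (in squared distance)."""
    return max(0.0, squared_l2(anchor, positive)
               - squared_l2(anchor, negative) + margin)


def hardest_negative(anchor, candidates):
    """Pick the candidate negative closest to the anchor within a mini-batch."""
    return min(candidates, key=lambda n: squared_l2(anchor, n))


# Toy 2-D embeddings (hypothetical values).
anchor, positive = [0.0, 0.0], [0.1, 0.0]
negatives = [[1.0, 0.0], [0.2, 0.0]]
hard = hardest_negative(anchor, negatives)
easy_loss = triplet_loss(anchor, positive, negatives[0])
hard_loss = triplet_loss(anchor, positive, hard)
```

In this toy case the distant negative already satisfies the margin and contributes no loss, while the close negative still does, which is the motivation for mining hard examples.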

They use two variants of networks. In NN1, they add 1 × 1 × d convolutional layers between the layers of the standard Zeiler & Fergus CNN [112], resulting in 22 layers. In NN2, they use the recently proposed Inception modules from GoogLeNet [94]; this variant is more efficient and has 20 times fewer parameters. The L2-distance threshold for verification is estimated from the LFW training data. They report results, following the unrestricted with labeled outside data protocol, on central crops of LFW (98.87 ± 0.15 %) and when using a proprietary face detector (99.6 ± 0.09 %) using the NN1 model, which is the highest score on LFW in the unrestricted with labeled outside data protocol. The scores from using the NN2 model were reported to be statistically in the same range.

2.2.21 Tencent-BestImage, 2015 [8]

This commercial system followed the unrestricted with labeled outside data protocol and combined an alignment system, a deep convolutional neural network with 12 convolution layers, and the joint Bayesian method for verification. The whole system was trained on the authors' own data set, “BestImage Celebrities Face” (BCF), which contains about 20,000 individuals and one million face images and is identity-disjoint with respect to LFW. They divided the BCF data into two subsets for training and validation. The network was trained on the BCF training set with 20 face patches. The features from each patch were concatenated, followed by PCA and a joint Bayesian model learned on the BCF validation set. They report an accuracy of 99.65 ± 0.25 % on LFW under the unrestricted with labeled outside data protocol.

2.3 Label-Free Outside Data Protocols

In this section, we discuss two of the LFW protocols together—image-restricted with label-free outside data and unrestricted with label-free outside data. While these results are curated separately for fairness on the LFW page, conceptually they are highly similar, and are not worth discussing separately.

These protocols allow the use of outside data such as additional faces, landmark annotations, part labels, and pose labels, as long as this additional information does not contain any information that would allow making pairs of images labeled “same” or “different”. For example, a set of images of a single person (even if the person were not labeled) or a video of a person would not be allowed under these protocols, since any pair of images from the set or from the video would allow the formation of a “same” pair.

Still, large amounts of information can be used by these methods to understand the general structure of the space of faces, to build supervised alignment methods, to build attribute classifiers, and so on. Thus, these methods would be expected to have a significant advantage over the “no outside data” protocols.

2.3.1 Face Recognition Using Boosted Local Features, 2003 [50]

One of the earliest methods applied to LFW was developed at Mitsubishi Electric Research Labs (MERL) by Michael Jones and Paul Viola [50]. This work built on the authors’ earlier work in boosting for face detection [101], adapting it to learn a similarity measure between face images using a modified AdaBoost algorithm. They use filters that act on a pair of images as features, which are a set of linear functions that are a superset of the “rectangle” filters used in their face detection system. A threshold on the absolute difference of the scalar values returned by a filter applied on a pair of faces can be used to determine valid or invalid variation of a particular property or aspect of a face (the validity being with respect to whether the faces belong to the same identity).
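The thresholded filter difference can be sketched in a few lines of Python. The two-rectangle filter, the list-of-rows image layout, and the ±1 output convention below are illustrative assumptions of ours, not details taken from [50]; in the actual method, filters and thresholds are selected by the modified AdaBoost procedure.

```python
def rectangle_sum(image, top, left, height, width):
    """Sum of pixel values inside a rectangle of a list-of-rows image."""
    return sum(image[r][c]
               for r in range(top, top + height)
               for c in range(left, left + width))


def two_rectangle_filter(image):
    """Hypothetical filter: sum of the left half minus sum of the right half."""
    h, w = len(image), len(image[0])
    half = w // 2
    return (rectangle_sum(image, 0, 0, h, half)
            - rectangle_sum(image, 0, half, h, w - half))


def pair_weak_classifier(filter_fn, face_a, face_b, threshold):
    """Return +1 if the filter responses of the two faces differ by at most
    `threshold` (variation judged consistent with one identity), else -1."""
    diff = abs(filter_fn(face_a) - filter_fn(face_b))
    return 1 if diff <= threshold else -1


# Toy 2 x 2 "faces" (hypothetical pixel values).
face_a = [[1, 2], [3, 4]]
face_b = [[1, 2], [3, 5]]
face_c = [[9, 0], [9, 0]]
similar = pair_weak_classifier(two_rectangle_filter, face_a, face_b, threshold=2)
different = pair_weak_classifier(two_rectangle_filter, face_a, face_c, threshold=2)
```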

The technical report was released before LFW, and so does not describe application to the database, but the group submitted results on LFW after publication, achieving 70.52 ± 0.60 %.

2.3.2 LFW Results Using a Combined Nowak Plus MERL Recognizer, 2008 [46]

This early system combined the method of Nowak and Jurie [74] with an unpublished method [46] from Mitsubishi Electric Research Laboratory (MERL), and thus technically counts as a method whose full details are not published. However, some details are given in a workshop paper [46].

The MERL system initially detects a face using a Viola-Jones frontal face detector, followed by alignment based on nine facial landmarks (also detected using a Viola-Jones detector). After alignment, some simple lighting normalization is done. The score of the MERL face recognition system [50] is then averaged with the score from the best-performing system of that time (2007), by Nowak and Jurie [74].

The accuracy of this system was 76.18 ± 0.58 %.

2.3.3 Is That You? Metric Learning Approaches for Face Identification, 2009 [39]

This paper presents two methods to learn robust distance measures for face verification: logistic discriminant-based metric learning (LDML) and the marginalized K-nearest neighbors (MkNN) classifier. LDML learns a Mahalanobis distance between two images that makes the distances between positive pairs smaller than the distances between negative pairs, and obtains the probability that a pair is positive from a standard linear logistic discriminant model. MkNN scores an image pair by the marginal probability that both images are assigned to the same class by a K-nearest neighbor classifier. In the experiments, they represent the images as stacked multi-scale local descriptors extracted at nine facial landmarks. The facial landmark detector is trained with outside data. Without using the identity labels for the LFW training data, LDML achieves 79.27 ± 0.6 % under the image-restricted with label-free outside data protocol. They obtain this accuracy by fusing the scores from eight local features, including LBP, TPLBP, FPLBP, SIFT and their element-wise square root variants, with a linear combination. This multiple feature fusion method has been shown to be effective in a number of works. Under the unrestricted with label-free outside data protocol, they show that the performance of LDML is significantly improved with more training pairs formed using the identity labels. They obtain their best performance, 87.50 ± 0.4 % accuracy, by linearly combining the 24 scores from the three methods LDML, large margin nearest neighbor (LMNN) [102] and MkNN over the eight local features.
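The link between the Mahalanobis distance and the pair probability in LDML can be illustrated with a short Python sketch. We assume the usual logistic form p = σ(b − d_M(x, y)), where d_M is the squared Mahalanobis distance under a matrix M and b is a bias; the function names and toy values are ours, and the learning of M and b is not shown.

```python
import math


def mahalanobis_sq(x, y, M):
    """Squared Mahalanobis distance (x - y)^T M (x - y) for a square matrix M."""
    diff = [a - b for a, b in zip(x, y)]
    n = len(diff)
    return sum(diff[r] * M[r][c] * diff[c] for r in range(n) for c in range(n))


def ldml_same_probability(x, y, M, bias):
    """Probability that (x, y) is a positive pair: sigmoid(bias - distance)."""
    return 1.0 / (1.0 + math.exp(-(bias - mahalanobis_sq(x, y, M))))


# Toy 2-D descriptors with an identity metric (hypothetical values).
M = [[1.0, 0.0], [0.0, 1.0]]
p_identical = ldml_same_probability([1.0, 1.0], [1.0, 1.0], M, bias=2.0)
p_boundary = ldml_same_probability([0.0, 0.0], [1.0, 1.0], M, bias=2.0)
```

In this form the bias acts as a distance threshold: pairs whose distance equals the bias receive probability 0.5, and closer pairs receive more.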

2.3.4 Multiple One-Shots for Utilizing Class Label Information, 2009 [96]

The authors extend the one-shot similarity (OSS) introduced in [104], which we will describe under the image-restricted, no outside data protocol. In brief, the OSS for an image pair is obtained by training a binary classifier with one image in the pair as the positive sample and a set of pre-defined negative samples, and then using it to classify the other image in the pair. This paper extends the OSS to a multiple one-shots similarity vector by producing OSS scores with different negative sample sets, each set reflecting either a different subject or a different pose. In their face verification system, the faces are first aligned with a commercial face alignment system. The aligned faces are published as the “aligned” LFW data set, or LFW-a. The face descriptors are then constructed by stacking local descriptors extracted densely over the face images. The information theoretic metric learning (ITML) method is adopted to obtain a Mahalanobis matrix to transform the face descriptors, and a linear SVM classifies a pair of faces as matched or not based on the multiple OSS scores. They achieve their best result, 89.50 ± 0.51 % accuracy, by combining 16 multiple OSS scores, comprising eight descriptors (SIFT, LBP, TPLBP, FPLBP and their square root variants) under two settings of the multiple OSS scores (subject-based negative sample sets and pose-based negative sample sets).

2.3.5 Attribute and Simile Classifiers for Face Verification, 2009 [53]

We discussed the attribute and simile classifiers for face verification [53] under the unrestricted with labeled outside data protocol. The authors’ result with the attribute classifier qualifies for the unrestricted with label-free outside data protocol. They reported their result with the attribute classifier on LFW as 85.25 ± 0.60 % accuracy in their follow-up journal paper [54].

2.3.6 Similarity Scores Based on Background Samples, 2010 [105]

In this paper, Wolf et al. [105] extend the one-shot similarity (OSS) introduced in [104] to the two-shot similarity (TSS). The TSS score is obtained by training a classifier to classify the two face images in a pair against a background face set. Although the TSS score by itself is not discriminative for face verification, they show that the performance is improved by combining the TSS scores with OSS scores and other similarity scores. They extend the OSS and TSS framework to use linear discriminant analysis instead of an SVM as the online trained classifier. In addition to OSS and TSS, they propose to represent each image in the pair with its rank vector obtained by retrieving similar images from the background face set. The correlation between the two rank vectors provides another dimension of the similarity measure of the face pair. In their experiments, they use the LFW-a data set to handle alignment. Combining the similarities introduced above with eight variants of local descriptors, they obtain an accuracy of 86.83 ± 0.34 % under the image-restricted with label-free outside data protocol.

2.3.7 Rectified Linear Units Improve Restricted Boltzmann Machines, 2010 [70]

Restricted Boltzmann machines (RBMs) are often formulated as having binary-valued units for the hidden layer and Gaussian units for the real-valued input layer. Nair and Hinton [70] modify the hidden units to be “noisy rectified linear units” (NReLUs), where the value of a hidden unit is given by the rectified output of the activation and some added noise, i.e. max(0, x + N(0, V)), where x is the activation of the hidden unit given an input, and N(0, V) is zero-mean Gaussian noise with variance V. RBMs with 4000 NReLU units in the hidden layer are first pre-trained generatively, then discriminatively trained as a feed-forward fully-connected network using back-propagation (in the latter case the Gaussian noise term is dropped in the rectification).
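The NReLU expression above translates directly into code. In this minimal Python sketch the noise variance is passed in as a plain argument, which is a simplification of ours (how V is chosen is not shown), and the seeded generator is used only to make the example repeatable.

```python
import random


def nrelu(x, variance, rng=random):
    """Noisy rectified linear unit: max(0, x + N(0, variance))."""
    noise = rng.gauss(0.0, variance ** 0.5)  # gauss() expects a standard deviation
    return max(0.0, x + noise)


def relu(x):
    """Noise-free rectification, as used during discriminative fine-tuning."""
    return max(0.0, x)


rng = random.Random(0)  # seeded generator so the sketch is repeatable
samples = [nrelu(0.5, 1.0, rng) for _ in range(1000)]
```

With zero variance the noisy unit reduces to the plain rectification used in the feed-forward stage.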

In order to model face pairs, they use a “Siamese” network architecture, where the same network is applied to both faces and the cosine distance is the symmetric function that combines the two outputs of the network. They show that NReLUs are translation equivariant and scale equivariant (the network outputs change in the same way as the input), and that, combined with the scale invariance of cosine distance, the model is analytically invariant to the rescaling of its inputs. It is not translation invariant. LFW images are center-cropped to 144 × 144, aligned based on the eye location and sub-sampled to 32 × 32 3-channel images. Image intensities are normalized to be zero-mean and unit-variance. They report an accuracy of 80.73 ± 1.34 % (image-restricted with label-free outside data). It should be noted that because the authors use manual correction of alignment errors, this paper does not conform to the LFW protocols, and thus need not be used as a comparison against fully automatic methods.
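The invariance to input rescaling mentioned above can be checked numerically with a small Python sketch. It assumes a single noise-free rectified linear layer without bias terms and positive scale factors; these simplifications, the weight values, and all names are ours and are not taken from [70].

```python
import math


def relu_layer(weights, x):
    """Bias-free rectified linear layer: max(0, w . x) for each row w."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def cosine_distance(u, v):
    """One minus the cosine of the angle between u and v."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def siamese_distance(weights, x1, x2):
    """Apply the same layer to both inputs and compare with cosine distance."""
    return cosine_distance(relu_layer(weights, x1), relu_layer(weights, x2))


# Toy shared weights and inputs (hypothetical values).
W = [[1.0, -1.0], [0.5, 2.0]]
x1, x2 = [1.0, 2.0], [2.0, 1.0]
d_original = siamese_distance(W, x1, x2)
# Rescale each input by a different positive factor.
d_rescaled = siamese_distance(W, [3.0 * v for v in x1], [0.5 * v for v in x2])
```

The two distances agree up to floating-point error because a positive scale factor passes through the bias-free rectification unchanged and is then cancelled by the normalization inside the cosine.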

2.3.8 Face Recognition with Learning-based Descriptor, 2010 [26]

This paper was discussed under the unrestricted with labeled outside data protocol. With the holistic face as the only component, the method qualifies for the image-restricted with label-free outside data protocol, under which the authors obtain an accuracy of 81.22 ± 0.35 %.

2.3.9 Cosine Similarity Metric Learning for Face Verification, 2011 [72]

This paper proposes cosine similarity metric learning (CSML) to learn a transformation matrix to project faces into a subspace in which cosine similarity performs well for verification. They define the objective function to maximize the margin between the cosine similarity scores of positive pairs and cosine similarity scores of negative pairs while regularizing the learned matrix by a predefined transformation matrix. They empirically demonstrate that this straightforward idea works well on LFW and that by combining scores from six different feature descriptors their method achieves an accuracy of 88.00 ± 0.38 % under the image-restricted with label-free outside data protocol. Subsequent communication with the authors revealed an error in the use of the protocol. Had the protocol been followed properly, our experiments suggest that the results would be about three percent lower, i.e., about 85 %. Still, this method has played an important role in subsequent research as a popular choice for the comparison of feature vectors.
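The similarity function at the heart of this approach, cosine similarity measured after a linear projection, can be sketched as below. Only the scoring function is shown; the margin-based objective and the regularization toward a predefined matrix are omitted, and the hand-picked matrix `A` is a hypothetical stand-in for a learned transformation.

```python
import math


def project(A, x):
    """Apply the linear transformation A (a list of rows) to the vector x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def cosine_similarity(u, v):
    """Cosine of the angle between u and v."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def csml_similarity(A, x, y):
    """Cosine similarity of x and y measured in the subspace defined by A."""
    return cosine_similarity(project(A, x), project(A, y))


# Toy example: the third coordinate is a nuisance dimension that A discards.
A = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]
x = [1.0, 0.0, 5.0]
y = [1.0, 0.0, -5.0]
raw = cosine_similarity(x, y)
projected = csml_similarity(A, x, y)
```

In this toy case the raw cosine similarity is negative because the nuisance coordinate dominates, whereas the projected similarity is maximal, which illustrates why a well-chosen subspace can matter for verification.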

2.3.10 Beyond Simple Features: A Large-Scale Feature Search Approach to Unconstrained Face Recognition, 2011 [31]

This method [31] uses the biologically-inspired V1-like features that are designed to approximate the initial stage of the visual cortex of primates. It is essentially a cascade of linear and non-linear functions. These are stacked into two and three layer architectures, HT-L2 and HT-L3 respectively. These models take in 100 × 100 and 200 × 200 grayscale images as inputs. A linear SVM is trained on a variety of vector comparison functions between two face descriptors. Model selection is done on 5915 HT-L2 and 6917 HT-L3 models before the best five were selected. Multiple kernels were used to combine data augmentations (rescaled crops of 250 × 250, 150 × 150 and 125 × 75), blend the top five models within each “HT class”, and also blend models across HT classes. The HT-L3 gives an accuracy of 87.8 % while combining all of the models gives a final accuracy of 88.13 ± 0.58 % following the image-restricted with label-free outside data protocol.

2.3.11 Face Verification Using the LARK Representation, 2011 [84]

This work extends previous work [83] in which two images are represented as two local feature sets and the matrix cosine similarity (MCS) is used to separate faces from backgrounds. All kinds of visual variations are addressed implicitly in the MCS which is the weighted sum of the cosine similarities of the local features. In this work, the authors present the locally adaptive regression kernel (LARK) local descriptor for face verification. LARK is defined as the self-similarity between a center pixel and its surroundings. In particular, the distance between two pixels is the geodesic distance. They consider an image as a 3D space which includes the 2D coordinates and the gray-scale value at each pixel. The geodesic distance is then the shortest path on the image surface. PCA is then adopted to reduce the dimensionality of the local features. They further apply an element-wise logistic function to generate a binary-like representation to remove the dominance of large relative weights to increase the discriminative power of the local features. They conduct experiments on LFW under both the unsupervised protocol and the image restricted protocol.

In the unsupervised setting, they compute LARKs of size 7 × 7 from each face image. They evaluate various combinations of different local descriptors and similarity measures and report that LBP with the Chi-square distance achieves the best accuracy among the baseline methods, 69.54 %. Their method achieves 72.23 % accuracy. Under the image-restricted with label-free outside data protocol, they adopt the OSS with LDA for face verification and achieve an accuracy of 85.10 ± 0.59 % by fusing scores from 14 combinations of local descriptors (SIFT, LBP, TPLBP and pcaLARK) and similarity measures (OSS, OSS with logistic function, MCS and MCS with logistic function).

2.3.12 Probabilistic Models for Inference About Identity, 2012 [62]

This paper presents a probabilistic face recognition method. Instead of representing each face as a feature vector and measuring the distances between faces in the feature space, the authors propose to construct a model in which identity is a hidden variable in a generative description of the image data. Other variations, such as pose and illumination, are described as noise. Face recognition is then framed as a model comparison task.

More concretely, they present a probabilistic linear discriminant analysis (PLDA) model to describe the data generation. In PLDA, the data generation depends on a latent identity variable and an intra-class variation variable. This design helps factorize the identity subspace and the within-individual subspace. The model is learned by expectation-maximization (EM), and face verification is conducted by computing the likelihood ratio of an image pair being generated by a similar-pair model versus a dissimilar-pair model. The PLDA model is further extended to a mixture of PLDA models to describe the potential non-linearity of the face manifold. Extensive experiments are conducted to evaluate PLDA and its variants in face analysis. Their face verification result on LFW is 90.07 ± 0.51 % under the unrestricted with label-free outside data protocol.
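The likelihood-ratio test can be sketched as follows, assuming the common linear-Gaussian form of PLDA in which an image is generated as x = mu + F h + G w + noise, with a standard normal identity variable h shared by all images of a person, a standard normal within-individual variable w, and diagonal Gaussian noise. The function names and the toy parameters are hypothetical; in practice the parameters would be learned with EM as described above.

```python
import numpy as np

def gaussian_logpdf(x, cov):
    """Log-density of a zero-mean Gaussian with covariance `cov`, evaluated at x."""
    d = x.shape[0]
    _, logdet = np.linalg.slogdet(cov)
    quad = x @ np.linalg.solve(cov, x)
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + quad)

def plda_log_likelihood_ratio(x1, x2, mu, F, G, sigma_diag):
    """log p(x1, x2 | same identity) - log p(x1, x2 | different identities)."""
    between = F @ F.T                       # identity (between-individual) covariance
    within = G @ G.T + np.diag(sigma_diag)  # within-individual covariance plus noise
    total = between + within
    pair = np.concatenate([x1 - mu, x2 - mu])
    zeros = np.zeros_like(between)
    # A shared identity variable couples the two images through `between`.
    cov_same = np.block([[total, between], [between, total]])
    # Independent identity variables leave the two images uncorrelated.
    cov_diff = np.block([[total, zeros], [zeros, total]])
    return gaussian_logpdf(pair, cov_same) - gaussian_logpdf(pair, cov_diff)

# Toy parameters (assumed, not learned from data).
rng = np.random.default_rng(0)
dim = 4
mu = rng.normal(size=dim)
F = rng.normal(size=(dim, 2))
G = 0.3 * rng.normal(size=(dim, 1))
sigma_diag = np.full(dim, 0.1)
identity = np.array([1.0, -0.5])
x_a = mu + F @ identity
x_b = mu + F @ identity   # same identity as x_a
x_c = mu - F @ identity   # opposite point in the identity subspace
llr_same = plda_log_likelihood_ratio(x_a, x_b, mu, F, G, sigma_diag)
llr_diff = plda_log_likelihood_ratio(x_a, x_c, mu, F, G, sigma_diag)
```

A pair is declared to show the same person when the log-likelihood ratio exceeds a threshold; in this toy example the matching pair scores higher than the mismatched pair.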

2.3.13 Large Scale Strongly Supervised Ensemble Metric Learning, with Applications to Face Verification and Retrieval, 2012 [43]

High-dimensional overcomplete representations of data are usually informative but can be computationally expensive. This paper proposes a two-step metric learning method that enforces sparsity, avoiding features with little discriminability and improving computational efficiency. The two-step design is motivated by the fact that straightforwardly applying the group lasso with row-wise and column-wise L1 regularization is very expensive in high-dimensional feature spaces. In the first step, they iteratively select μ groups of features. In each iteration, the feature group that gives the largest partial derivative of the loss function is chosen, and the Mahalanobis matrix of a weak metric for the selected feature group is learned and assembled into a sparse block diagonal matrix A†. With an eigenvalue decomposition, they obtain a transformation matrix to reduce the feature dimensionality. In the second step, another Mahalanobis matrix is learned to exploit the correlations between the selected feature groups in the lower-dimensional subspace. They adopt the projected gradient descent method to iteratively learn the Mahalanobis matrix.
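The link between the sparse block diagonal Mahalanobis matrix and the dimensionality-reducing transformation can be sketched as follows. This is a minimal illustration under assumed toy data, not the authors' implementation: the per-group matrices are random positive semidefinite blocks rather than learned weak metrics, and all function names are hypothetical.

```python
import numpy as np

def block_diagonal(blocks):
    """Assemble square blocks into one block diagonal matrix."""
    size = sum(b.shape[0] for b in blocks)
    out = np.zeros((size, size))
    start = 0
    for b in blocks:
        end = start + b.shape[0]
        out[start:end, start:end] = b
        start = end
    return out

def transform_from_metric(A, rank):
    """Return L (rank x d) such that L.T @ L matches A on its top `rank` eigenpairs."""
    eigvals, eigvecs = np.linalg.eigh(A)
    order = np.argsort(eigvals)[::-1][:rank]
    top_vals = np.clip(eigvals[order], 0.0, None)
    return np.sqrt(top_vals)[:, None] * eigvecs[:, order].T

def mahalanobis_sq(x, y, A):
    """Squared Mahalanobis distance (x - y)^T A (x - y)."""
    diff = x - y
    return float(diff @ A @ diff)

# Toy setup: three feature groups of size 3; only the first and last are "selected".
rng = np.random.default_rng(0)
M1 = rng.normal(size=(3, 3))
M3 = rng.normal(size=(3, 3))
A_sparse = block_diagonal([M1 @ M1.T, np.zeros((3, 3)), M3 @ M3.T])
L = transform_from_metric(A_sparse, rank=6)   # 9-D features mapped to 6-D
x = rng.normal(size=9)
y = rng.normal(size=9)
dist_full = mahalanobis_sq(x, y, A_sparse)
dist_reduced = float(np.sum((L @ x - L @ y) ** 2))
```

Because the unselected group contributes a zero block, the 6-dimensional projection reproduces the full distance exactly in this example; a second metric could then be learned cheaply in the reduced space.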

In their experiments, they use the LFW-a data set and center crop the face images to 110 × 150. By concatenating two types of features (covariance matrix descriptors and soft local binary pattern histograms) after the first step, they achieve 92.58 ± 1.36 % accuracy under the image-restricted with label-free outside data protocol.

2.3.14 Distance Metric Learning with Eigenvalue Optimization, 2012 [111]

In this paper, the authors present an eigenvalue optimization framework for learning a Mahalanobis metric. They learn the metric by maximizing the minimal squared distances between dissimilar pairs while maintaining an upper bound for the sum of squared distances between similar pairs. They further show that this is equivalent to an eigenvalue optimization problem. Similarly, the previous metric learning method LMNN can also be formulated as a general eigenvalue decomposition problem.

They further develop an efficient algorithm to solve this optimization problem, which will only involve the computation of the largest eigenvector of a matrix. In the experiments, they show that the proposed method is more efficient than other metric learning methods such as LMNN and ITML. On LFW, they evaluate this method with both the LFW funneled data set and the LFW-a data set. They use SIFT features computed at the fiducial points for faces on the funneled LFW data set and achieve 81.27 ± 2.30 % accuracy. On the “aligned” LFW data set, they evaluate three types of features including concatenated raw intensity values, LBP and TPLBP. Combining the scores from the four different features with a linear SVM, they achieve 85.65 ± 0.56 % accuracy under the image-restricted with label-free outside data protocol.
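To indicate why needing only the largest eigenvector is cheap, the following sketch computes it by power iteration, which requires only repeated matrix-vector products. Power iteration is used here purely as a standard illustration and is not claimed to be the solver used in [111]; the function name and test matrix are hypothetical, and the matrix is assumed to be symmetric positive semidefinite with a distinct largest eigenvalue.

```python
import numpy as np

def largest_eigenpair(S, num_iters=500, seed=0):
    """Power iteration for the leading eigenpair of a symmetric PSD matrix S."""
    rng = np.random.default_rng(seed)
    v = rng.normal(size=S.shape[0])
    v /= np.linalg.norm(v)
    for _ in range(num_iters):
        w = S @ v                  # one matrix-vector product per iteration
        v = w / np.linalg.norm(w)
    eigenvalue = float(v @ S @ v)  # Rayleigh quotient of the final iterate
    return eigenvalue, v

# Toy symmetric PSD matrix with known spectrum 5, 2, 1, 0.5.
rng = np.random.default_rng(1)
Q, _ = np.linalg.qr(rng.normal(size=(4, 4)))
S = Q @ np.diag([5.0, 2.0, 1.0, 0.5]) @ Q.T
top_value, top_vector = largest_eigenpair(S)
```

A full eigendecomposition costs on the order of d³ operations for a d × d matrix, whereas each power-iteration step costs on the order of d², which matters when the metric is learned over high-dimensional face descriptors.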

2.3.15 Learning Hierarchical Representations for Face Verification with Convolutional Deep Belief Networks, 2012 [48]

In this work [48], a local convolutional deep belief network is used to generatively model the distribution of faces. Then, a discriminatively learned metric (ITML) is used for the verification task. The shared weights of the convolutional filters (10 × 10 in size) in the CRBM (convolutional RBM) make it possible to use high-resolution images as input. Probabilistic max-pooling is used in the CRBM to provide local translation invariance while still allowing top-down and bottom-up inference in the model.
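Probabilistic max-pooling can be sketched as a softmax over the hidden units in each pooling block together with one extra "off" state, so that at most one unit per block is active and the pooling unit is on exactly when one of them is. The sketch below assumes this standard formulation from the convolutional DBN literature; the function name, the block size, and the layout of the returned arrays are hypothetical choices made for illustration.

```python
import numpy as np

def probabilistic_max_pool(activations, block=2):
    """Return P(hidden unit on) per block and P(pooling unit on) per block.

    `activations` holds the bottom-up input to each hidden unit, shape (H, W),
    with H and W divisible by `block`.
    """
    H, W = activations.shape
    assert H % block == 0 and W % block == 0
    hb, wb = H // block, W // block
    # Gather the units of each block x block region along the last axis.
    grouped = (activations.reshape(hb, block, wb, block)
               .transpose(0, 2, 1, 3)
               .reshape(hb, wb, block * block))
    # Numerically stable softmax with an additional "off" state of energy 0.
    shift = np.maximum(grouped.max(axis=-1, keepdims=True), 0.0)
    on = np.exp(grouped - shift)
    off = np.exp(-shift)
    denom = on.sum(axis=-1, keepdims=True) + off
    hidden_prob = on / denom                 # shape (hb, wb, block * block)
    pool_prob = 1.0 - (off / denom)[..., 0]  # shape (hb, wb)
    return hidden_prob, pool_prob

# With all-zero input, the four units of a 2 x 2 block and the "off" state are equally likely.
hidden_prob, pool_prob = probabilistic_max_pool(np.zeros((4, 4)), block=2)
```

Because the pooling probability is defined through the same distribution as the hidden units, the layer remains a proper probabilistic model, which is what allows both bottom-up and top-down inference.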

The authors argue that in images such as faces, which exhibit clear spatial structure, sharing the weights of a hidden unit across all locations in the image is not desirable. On the other hand, using a layer with fully-connected weights may not be computationally tractable without either subsampling the input image or first applying several pooling layers. In order to exploit this structure, the image is divided into overlapping regions and the weight-sharing in the CRBM is restricted to be local. Contrastive divergence is used to train the local CRBM. Two layers of these CRBMs are stacked to form a deep belief network (DBN). The local CRBM is used in the second layer of their network. In addition to using raw pixels, the uniform LBP descriptor is also used as input to the DBN. The two features are combined at the score level by using a linear SVM. The LFW-a face images are used as input, with three croppings at sizes 150 × 150, 125 × 75 and 100 × 100, resized to the same size before input to the DBN. The deep learned features give performance (86.88 ± 0.62 %) that is competitive with hand-crafted features (87.18 ± 0.49 %), while combining the two gives the highest accuracy of 87.77 ± 0.62 % (image-restricted with label-free outside data).

2.3.16 Bayesian Face Revisited: A Joint Formulation, 2012 [28]

We discussed this paper under the unrestricted with labeled outside data protocol. The authors also present their result under the unrestricted with label-free outside data protocol. Combining scores of four descriptors (SIFT, LBP, TPLBP and FPLBP), they achieve an accuracy of 90.90 ± 1.48 % on LFW.

2.3.17 Blessing of Dimensionality: High-dimensional Feature and Its Efficient Compression for Face Verification, 2013 [29]

We discussed this paper under the unrestricted with labeled outside data protocol. Without using the WDRef data set for training, they report an accuracy of 93.18 ± 1.07 % under the unrestricted with label-free outside data protocol.

2.3.18 Fisher Vector Faces in the Wild, 2013 [85]

In this paper, Simonyan et al. [85] adopt the Fisher vector (FV) for face verification. The FV encoding had been shown to be effective for general object recognition. This paper demonstrates that this encoding is also effective for face recognition. To address the potential high computational expense due to the high dimensionality of the Fisher vectors, the authors propose a discriminative dimensionality reduction to project the vectors into a low dimensional subspace with a linear projection.

To encode a face image with FV, it is first processed into a set of densely extracted local features. In this paper, the dense local feature of an image patch is the PCA-SIFT descriptor augmented by the normalized location of the patch in the image. They train a Gaussian mixture model (GMM) with diagonal covariance over all the training features. As shown in Fig. 3, the face image is first aligned with respect to the fiducial points before encoding. The Fisher vector is then the stacked average first- and second-order differences of the image features with respect to each GMM component center. To construct a compact and discriminative face representation, the authors propose to adopt a large-margin dimensionality reduction step after the Fisher vector encoding.

Fig. 3 The Fisher vector face encoding work-flow [85]

In their experiments, they report their best result as 93.03 ± 1.05 % accuracy on LFW under the unrestricted with label-free outside data protocol.
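The encoding step described in this subsection can be sketched as follows, assuming the standard Fisher vector formulation for a diagonal-covariance GMM: each local feature is softly assigned to the components, and the weighted first- and second-order deviations from each component are averaged and stacked. The function name and toy data are hypothetical, the local features are assumed to be already extracted and augmented with their normalized locations, and the large-margin dimensionality reduction is not shown.

```python
import numpy as np

def fisher_vector(X, weights, means, sigmas):
    """Fisher vector of local features X (N, d) under a diagonal GMM.

    weights: (K,) mixture weights, means: (K, d), sigmas: (K, d) standard deviations.
    Returns a vector of length 2 * K * d.
    """
    N, d = X.shape
    diff = (X[:, None, :] - means[None, :, :]) / sigmas[None, :, :]   # (N, K, d)
    # Log of weight times Gaussian density, used for the soft assignments.
    log_prob = (-0.5 * np.sum(diff ** 2, axis=2)
                - np.sum(np.log(sigmas), axis=1)[None, :]
                - 0.5 * d * np.log(2.0 * np.pi)
                + np.log(weights)[None, :])
    log_prob -= log_prob.max(axis=1, keepdims=True)
    post = np.exp(log_prob)
    post /= post.sum(axis=1, keepdims=True)                            # (N, K)
    # Averaged first- and second-order differences for each component.
    first = np.einsum('nk,nkd->kd', post, diff) / (N * np.sqrt(weights)[:, None])
    second = (np.einsum('nk,nkd->kd', post, diff ** 2 - 1.0)
              / (N * np.sqrt(2.0 * weights)[:, None]))
    return np.concatenate([first.ravel(), second.ravel()])

# Toy example: 50 local features of dimension 3 and a 2-component GMM (assumed parameters).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
weights = np.array([0.4, 0.6])
means = np.array([[0.5, 0.0, -0.5], [-0.5, 0.5, 0.0]])
sigmas = np.ones((2, 3))
fv = fisher_vector(X, weights, means, sigmas)
```

The output dimensionality grows as twice the number of components times the feature dimension, which is why a subsequent projection to a low-dimensional subspace is needed for efficient verification.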

2.3.19 Fusing Robust Face Region Descriptors via Multiple Metric Learning for Face Recognition in the Wild, 2013 [32]

In this paper, the authors present a region-based face representation. They divide each face image into spatial blocks and sample image patches from a fixed grid of positions. The patches are then represented by nonnegative sparse codes and sum pooled to construct the representation for the block. PCA whitening is then applied to reduce its dimensionality. After processing each image into a sequence of block representations, the distance between two images is the fusion of pairwise block-to-block distances. They further propose a metric learning method to jointly learn the sequence of Mahalanobis matrices for discriminative block-wise distances. Their best result on LFW is 89.35 ± 0.50 % (image-restricted with label-free outside data), obtained by fusing 8 distances from two different scales of face images and four different spatial partitions of blocks.

2.3.20 Towards Pose Robust Face Recognition, 2013 [108]

This paper presents a pose adaptive framework to handle pose variations in face recognition. Given an image with landmarks, they present a fitting algorithm to fit a 3D shape of the given face. The 3D shape is used to project the pre-defined 3D feature points to the 2D image to reliably locate facial feature points. They then extract descriptors around the feature points with Gabor filtering and concatenate local descriptors to represent the face. In their method, an additional technique to address self-occlusion is to use descriptors from the less-occluded half face for matching. In their experiments, they show that this pose adaptive framework can handle pose variations well in unconstrained face recognition. They obtain 87.77 ± 0.51 % accuracy on LFW (image-restricted with label-free outside data).

2.3.21 Similarity Metric Learning for Face Recognition, 2013 [23]

This paper presents a framework to learn a similarity metric for unconstrained face recognition. The learned metric is expected to be robust to the large intra-personal variation and discriminative in order to differentiate similar image pairs from dissimilar image pairs. The robustness is introduced by projecting the face representations into the intra-personal subspace, which is spanned by the top eigenvectors of the intra-personal covariance matrix after the whitening process. After mapping the images to the intra-personal subspace, the discrimination is incorporated in learning the similarity metric. The similarity metric is defined as the difference between a similarity measure and a distance measure on the image pair, each parameterized by its own matrix. The matrices are learned by minimizing the hinge loss while regularizing the two matrices towards identity matrices. In their experiments, they use LBP and TPLBP descriptors on the LFW-a data set and SIFT descriptors on the LFW funneled data set computed at nine facial key points. Under the image-restricted with label-free outside data protocol, combining six scores from the three descriptors and their square-root variants, they achieve 89.73 ± 0.38 % accuracy. Under the unrestricted with label-free outside data protocol, they generate more training pairs with the identity labels and improve the accuracy to 90.75 ± 0.64 %.
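The form of the learned score can be sketched as follows, assuming a bilinear similarity x^T G y and a Mahalanobis distance (x - y)^T M (x - y), combined as their difference. The function names, the `bias` threshold, and the toy vectors are hypothetical; the inputs are assumed to be already projected into the intra-personal subspace, and the optimization itself is not shown.

```python
import numpy as np

def similarity_score(x, y, G, M):
    """Bilinear similarity x^T G y minus Mahalanobis distance (x - y)^T M (x - y)."""
    diff = x - y
    return float(x @ G @ y - diff @ M @ diff)

def hinge_loss(score, is_same, bias=0.0):
    """Hinge loss on one pair; `bias` is a hypothetical decision threshold."""
    label = 1.0 if is_same else -1.0
    return max(0.0, 1.0 - label * (score - bias))

def identity_regularizer(G, M):
    """Penalty pulling both matrices towards the identity matrix."""
    eye = np.eye(G.shape[0])
    return 0.5 * (np.linalg.norm(G - eye) ** 2 + np.linalg.norm(M - eye) ** 2)

# With G = M = I the score reduces to the inner product minus the squared Euclidean distance.
x = np.array([1.0, 0.0, 2.0])
y = np.array([0.5, 1.0, 2.0])
G = np.eye(3)
M = np.eye(3)
score_xy = similarity_score(x, y, G, M)
```

Regularizing towards the identity means that, before any pair has been seen, the score already behaves like a sensible baseline that rewards aligned vectors and penalizes distant ones.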

2.3.22 Fast High Dimensional Vector Multiplication Face Recognition, 2013 [12]

In this method, the authors propose the over-complete LBP (OCLBP) descriptor, which is the concatenation of LBP descriptors extracted with different block and radius sizes. The OCLBP-based face descriptor is then processed by Whiten-PCA and LDA. They further introduce a non-linear dimensionality reduction technique, Diffusion Maps (DM), into the proposed framework. Extensive experiments are conducted with different combinations of local features and dimensionality reduction methods. They report 91.10 ± 0.59 % accuracy under the image-restricted with label-free outside data protocol and 92.05 ± 0.45 % under the unrestricted with label-free outside data protocol.
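The whitening step applied to the high-dimensional descriptor can be sketched as follows. This is a generic whitened PCA computed from the singular value decomposition of the centered data, offered as an illustration rather than the authors' exact pipeline; the function name and the toy data are hypothetical, and the subsequent LDA and Diffusion Maps stages are not shown.

```python
import numpy as np

def whitened_pca(X, num_components):
    """Project rows of X onto the top principal components and rescale to unit variance."""
    mean = X.mean(axis=0)
    centered = X - mean
    # Rows of Vt are the principal directions; singular values give the variances.
    _, singular_values, Vt = np.linalg.svd(centered, full_matrices=False)
    variances = singular_values ** 2 / (X.shape[0] - 1)
    components = Vt[:num_components]
    scale = np.sqrt(variances[:num_components])
    projected = centered @ components.T / scale
    return projected, mean, components, scale

# Toy descriptors: 200 samples of dimension 10 with very different per-dimension scales.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10)) * np.linspace(1.0, 10.0, 10)
Z, mean, components, scale = whitened_pca(X, num_components=4)
```

After whitening, every retained direction contributes equally to Euclidean or cosine comparisons, which prevents a few high-variance dimensions of a concatenated descriptor from dominating the match score.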

2.3.23 Discriminative Deep Metric Learning for Face Verification in the Wild, 2014 [41]