Abstract

In this paper, we propose an efficient higher order total variation regularization scheme for image denoising problem. By relaxing the constraints appearing in the traditional infimal convolution regularization, the proposed higher order total variation can remove the staircasing effects caused by total variation as well as preserve sharp edges and finer details well in the restored image. We characterize the solution of the proposed model using fixed point equations (via the proximity operator) and develop convergent proximity algorithms for solving the model. Our numerical experiments demonstrate the efficiency of the proposed method.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Noise removal is a long standing problem in image processing. Many different methods have been proposed for image denoising problem, among which the well-known Rudin-Osher-Fatemi (ROF) total variation (TV) model is one of the most popular methods [8]. This model restores the image by solving a minimization problem

where f is the observed noisy image, \(\mathrm {TV}(u)\) denotes the total variation of u and \(\lambda \) is the regularization parameter to balance both terms in (1). The distinctive feature of the total variation is that it can preserve sharp edges well. However, natural images usually contain smooth part that can be destroyed by TV, and therefore, the denoised image by total variation regularization often suffers the undesired staircasing effects in the flat region of the image [10]. Addressing this drawback of the total variation, many improved ROF models have been proposed in the recent literature. These approaches can be roughly divided into two categories. In the first category, some local gradient information is added to the total variation to make it differentiate different features of the image [4, 5, 11]. Another category to reduce the staircasing effect in the restored image is to incorporate higher order derivatives into the regularization term. One successful approach in this direction was give in [2] who suggested to use the infimal convolution of functionals with first and second order derivatives as regularizer. The resulting image denoising model has the form of

where \(\mathrm {ICTV}(u)\) is the infimal convolution regularization that will be defined in Sect. 2. Although the staircaing effects caused by total variation can be alleviated to some extent, the infimal convolution regularization often penalizes the smooth regions of the image too much and may blur the sharp edges and finer details of the image.

In this paper, a novel higher order total variation regularization is proposed to remove the staircasing effects while still preserve sharp edges and finer details in the restored image. We first give an equivalent formulation of the traditional infimal convolution regularizer and motivate the proposed higher order total variation by relaxing the constrains appearing in traditional infimal convolution regularizer. This modification will not increase any difficulties for the numerical treatments of the resulting image denoising model. We characterize the solution of the proposed model via fixed points of fixed point equations in which the proximity operators of convex functions are involved. Based on the characterization, the convergent proximity algorithm is developed to solve the model.

The paper is organized as follows. We shall briefly introduce the difference matrices that are used to define total variation and the regularization terms in this paper in Sect. 2. In Sect. 3, we present the higher order total variation regularization term and the proposed image denoising model. We characterize the solution of the model via fixed point equations and develop numerical algorithms for the proposed model in Sect. 4. Numerical experiments showing the performance of our method is given in Sect. 5. Finally, we conclude our paper in Sect. 6.

2 Difference Matrices and Total Variation

In this section, we present the precise definition of the regularization terms appearing in model (1) and (2). For the sake of simplicity, we assume the image is an \(n\times n\) square matrix for some integer n. To describe the problem in matrix algebra language, we treat the image as a vector in \(\mathbb {R}^N\) by sequentially concatenating the columns of the image. Here we assume that \(N=n^2\). To define the total variation of the image u, we need a \(n \times n\) backward difference matrix

then \(-D^\top D\) is the second order central difference matrix. Since we reshape the square image into a vector, we can use

as the first order difference matrices for the image, where \(\otimes \) denotes the Kronecker product of the matrices and \(I_n\) denotes the \(n\times n\) identity matrix. Hence, by setting the gradient matrix

which is formed by stacking two matrices into a single one, and \(\varphi :\mathbb {\mathbb {R}}^{2N}\rightarrow \mathbb {R}\) defined for \(z\in \mathbb {R}^{2N}\) as

the composition \(\varphi (B_1u)\) computes the total variation \(\mathrm {TV}(u)\) appearing in the model (1).

The second order difference matrices for the image can be defined as

using which we can get the second order gradient matrix

The first and second order gradient matrices \(B_1\) and \(B_2\) are related by

where

Based on our notation, the regularizer \(\mathrm {ICTV}(u)\) in (2) which use the infimal convolution of functionals with first and second order derivatives has the form of

where \(\lambda _1\) and \(\lambda _2\) are positive parameters balancing between the first and second order derivatives, and \(\psi :\mathbb {\mathbb {R}}^{4N}\rightarrow \mathbb {R}\) is defined \(z\in \mathbb {R}^{4N}\) as

3 The Proposed Model

In this section, we give the proposed higher order total variation regularization term and the resulting image denoising model. The proposed regularizer can be seen as the improved version of the infimal convolution regularizer in (4). The following lemma presents an equivalent form of the infimal convolution regularizer in (4) which facilitates us to motivate the proposed higher order total variation regularization term.

Lemma 1

For any u, we have that

where \(\mathcal {R}(\cdot )\) denotes the range of a matrix.

Proof

Clearly, we can conclude that

by setting \(d=B_1u_1\) and \(x=B_1u_2\).

Since \(u=u_1+u_2\) implies that \(B_1u=B_1u_1+B_1u_2\), we can get

On the other hand, for any \(u_1,~u_2\) satisfying \(B_1u=B_1u_1+B_1u_2\), there exists \(v\in \mathcal {N}(B_1)\) such that \(u=u_{1}+u_{2}+v\), and we can have

which implies that

This together with (7) complete the proof.

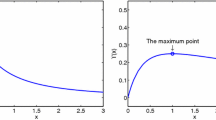

The proposed higher order total variation regularization term can be seen as the improved version of the infimal convolution regularizer and has the form of

As can be seen from Lemma 1, the difference between the original infimal convolution relarization and its modified version (8) consists in the relaxed conditions on the new regularizer. For \(\mathrm {HTV}(u)\) we no longer have the restriction that \(d,x\in \mathcal {R}(B_1)\) and \(B_1u\) can be decomposed into any components d and x. The authors in [9] showed that this modification can generally lead to better numerical results than the original model (4). This modification was given a theoretical fundament in [1] based on tensor algebra and the corresponding new regularizer was called total generalized variation.

Using the higher order total variation in (8) as the regularizer, the proposed image denoising model is

which can be reformulated as an equivalent unconstrained two variables minimization problem

Both above models are equivalent in the sense that if the pair \((\hat{u},\hat{d})\) is the solution of model (10) then \(\hat{u}\) is the solution of model (9). Therefore, we can solve model (10) as an alternative of directly solving model (9).

4 Minimization Algorithm

The main focus of this section is to give numerical proximity algorithm that solve the proposed model (10). Proximity algorithm was proposed in [6] for solving the ROF model and outperformed the benchmarking split Bregman iteration method. The application of the proximity algorithm to other image models can be found in [3, 7]. The main idea of this algorithm is firstly characterize the solution of the model via the fixed point of fixed point equations in which the proximity operators of the convex functions are included, and then develop efficient numerical algorithms via various fixed point iterations. The proximity operator of a real-valued function g is defined for \(x\in \mathbb {R}^d\) and \(\gamma >0\) by

For convenience of developing numerical algorithms, we can rewrite the proposed model into a general form of

by defining the matrix B, the vector w and the function F as

and

respectively. The following proposition characterizes a solution of model (11) in terms of fixed point equations.

Proposition 1

If \(\hat{w}\) is a solution of model (11), then for any \(\sigma ,\gamma >0\), there exist two vectors b, c such that

Conversely, if there exist positive parameters \(\sigma ,\gamma \) and vectors b, c and \(\hat{w}\) satisfying equations in (12), then \(\hat{w}\) is a solution of model (11).

Proof

See Proposition 5 in [3].

According to Proposition 1, the solution of model (10) is characterized by the fixed point equations in (12). Based on these fixed point equations, we can naturally propose an iterative algorithm that generates the sequence \(\{w^k,c^k,b^k\}\) from arbitrary initial vector \((w^0,c^0,b^0)\). This iterative procedure may be stated as follows:

The following theorem establishes a convergence result of the proposed iterative scheme (13), its proof can be found in Theorem 4.1 in [3].

Theorem 1

If the positive numbers \(\gamma \) and \(\sigma \) are chosen to satisfy

then the sequence \(\{(w^k,b^k,c^k)\}\) generated by the iterative scheme (13) converges to the solution of model (10).

Implementing the iterative procedure (13) requires the availability of explicit forms of two proximity operators \(\mathrm {prox}_{\frac{1}{\sigma }F}\) and \(\mathrm {prox}_{\frac{1}{\gamma }\psi }\). We next present explicit forms of these proximity operators. We first give the closed forms of the proximity operator of F. Remembering that \(w=[u;d]\) and \(F(w)=\frac{1}{2}\Vert u-f\Vert _2^2+\lambda _1\varphi (d)\), the corresponding proximity operator of F in (13) reads

The minimization problem in (15) is very easy to compute since it can solved separately with respect to \(u^{k+1}\) and \(d^{k+1}\), that is we have

Both of the proximity operators \(\mathrm {prox}_{\frac{1}{\gamma }\psi }\) and \(\mathrm {prox}_{\frac{\lambda _1}{\sigma }\varphi }\) appearing in (13) and (16) have the closed-form solutions respectively. For \(t>0\) and \(x\in \mathbb {R}^{2N}\) and \(\widetilde{x}\in \mathbb {R}^{4N}\), set \(y:=\mathrm {prox}_{t\varphi }(x)\) and \(z:=\mathrm {prox}_{t\psi }(\widetilde{x})\), writing \(\overline{x}_i:=[x_i;x_{i+N}]\), and \(\overline{\widetilde{x}}_i:=[\widetilde{x}_i;\widetilde{x}_{i+N};\widetilde{x}_{i+2N};\widetilde{x}_{i+3N}]\), for \(i=1,2,\cdots ,N\), we have

and

respectively.

In summary, we have the following convergent algorithm for solving problem (10):

5 Experiments

In this section, we justify the proposed higher order total variation regularization term and image denoising model by its performance in removing Gaussian noise from images. The peak signal-to-noise ration(PSNR) is used to measure the quality of the restored image. We select the standard pepper and lena images and a CT image as our testing data. Both of the lena and pepper are with the size of \(256\times 256\), and the CT image is with the size of \(555\times 555\). All of the images are shown in Fig. 1. The regularization parameters are chosen to produce the highest PSNR values in all of the experiments. The stopping criterion of all of the algorithms is that the relative error between the successive iterates of the restored images should satisfy the following inequality

where \(u^i\) is the denoised image at the i-th iteration. Each PSNR value reported in this section is the averaged result of three runs.

We add additive white Gaussian noise with standard deviation \(\delta =10\) and \(\delta =20\) to the lena, pepper and CT images respectively. We use the ROF model (1), the infimal convolution model (2), and the model (9) which is based on our proposed higher order total variation to restore the images. The PSNR results of all restored images are shown in Table 1. We can see that our proposed model can produce the restored images with the higher PSNR values than those of the ROF model and infimal convolution model. To see the visual effects of the restored images, we show the CT images (the case of \(\delta =10\)) in Fig. 2. Figure 3 is the zoomed version of Fig. 2. It can be clearly seen the staircasing effects caused by the total variation when the image is zoomed in. The infimal convolution regularization can remove the staircasing effects caused by TV, however, the visible blurring artifacts at the edges are introduced. Our proposed higher order total variation regularization can produce more favorable result without visual artifacts while still preserve sharp edges and finer details well.

6 Concluding Remarks

We propose an higher order total variation regularization for image denoising problem. This regularization term can be seen as the improvement of the traditional infimal convolution type regularization and can alleviate the staircasing effects in the restored image caused by total variation. We use the proximity algorithm to solve the resulting model and establish the convergence result of the algorithm. Numerical results presented in this paper demonstrate the efficiency of the proposed method.

References

Bredies, K., Kunisch, K., Pock, T.: Total generalized variation. SIAM J. Imaging Sci. 3, 492–526 (2010)

Chambolle, A., Lions, P.: Image recovery via total variation minimization and related problems. Numer. Math. 76, 167–188 (1997)

Chen, F., Shen, L., Yuesheng, X., Zeng, X.: The moreau envelope approach for the l1/tv image denoising model. Inverse Probl. Imaging 8(1), 53–77 (2014)

Chen, Q., Montesinos, P., Sun, Q., Heng, P., Xia, D.: Adaptive total variation denoising based on difference curvature. Image Vis. Comput. 28(3), 298–306 (2010)

Dong, Y., Hintermuller, M., Rincon-Camacho, M.: Automated regularization parameter selection in mutiscale total variation methods for image restoration. J. Math. Imaging Vis. 40(1), 82–104 (2011)

Micchelli, C.A., Shen, L., Xu, Y.: Proximity algorithms for image models: Denosing. Inverse Probl. 27(4), 045009(30pp) (2011)

Micchelli, C.A., Shen, L., Yuesheng, X., Zeng, X.: Proximity algorithms for the \(l_1/tv\) image denosing models. Adv. Comput. Math. 38(2), 401–426 (2013)

Rudin, L., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D 60, 259–268 (1992)

Setzer, S., Steidl, G., Teuber, T.: Infimal convolution regularizations with discrete l1-type functionals. Commun. Math. Sci. 9(3), 797–872 (2011)

Strong, D., Blomgren, P., Chan, T.: Spatially adaptive local feature-driven total variation minimizing image restoration. In: Proceedings of SPIE, vol. 3167, pp. 222–233 (1997)

Zeng, X., Li, S.: An efficient adaptive total variation regularization for image denoising. In: 2013 Seventh International Conference on Image and Graphics (ICIG), pp. 55–59. IEEE (2013)

Acknowledgment

This work is supported by the Promotive Research Fund for Excellent Young Scientists of Shandong Province (No. BS2014DX003), by the Specialized Research Fund for the Doctoral Program of Higher Education of China (No. 20130132120022), and the Fundamental Research Funds of the Central Universities of Ocean University of China (No. 201313009).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Zeng, W., Zeng, X., Yue, Z. (2015). Image Denoising with Higher Order Total Variation and Fast Algorithms. In: Zhang, YJ. (eds) Image and Graphics. ICIG 2015. Lecture Notes in Computer Science(), vol 9218. Springer, Cham. https://doi.org/10.1007/978-3-319-21963-9_37

Download citation

DOI: https://doi.org/10.1007/978-3-319-21963-9_37

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-21962-2

Online ISBN: 978-3-319-21963-9

eBook Packages: Computer ScienceComputer Science (R0)