Abstract

In recent years the optimization methods are based on physical mimic natural biological processes among others, seek to strike a balance between exploitation and exploration of the search space to achieve good performance technical search and global optimization. In this work a simulated algorithm (SA) is described to analyze their convergence in mathematical functions, setting parameters were performed manually for Algorithm Benchmark 6 math functions, this algorithm apply high temperatures, the temperature in cooling process is reduced gradually.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

1 Introduction

Since the simulated annealing algorithm developed by Scott Kirkpatrick, C. Daniel P. Gelatt and Vecchi Mario was introduced in 1983 [1, 2], has proved efficient to solve various optimization problems.

Simulated Annealing algorithm described in its original form, fit parameters to the existing literature was performed manually based algorithm has been applied to mathematics reference functions and tables showing the results Annealing optimization algorithm is presented I simulated.

The comparative study of the original algorithm with the algorithm and a fuzzy system is carried out in order to show the effectiveness of the simulated annealing algorithm address problems optimization just as effective against the diffuse test algorithm.

This paper is organized as follows: Sect. 2 describes the Simulated Annealing algorithm in Sect. 3 the description section mathematical functions, Sect. 4 the results of the simulations where you can see the modified algorithm SA and SA are described.

2 Simulated Annealing

The simulated annealing algorithm appears to early 80s [3], is the resolution for the optimization of combinatorial problems with local minimum. Your approximation is to randomly generate a near current solution, based on the probability distribution proportional temperature scale solution. SA algorithm generates a new point if it is a better solution than the current point this process continues until an improvement is not generated, the algorithm to avoid being trapped in local minima not necessarily accept the overall allows elevating the objective points observed that local-search algorithms could often obtain better final objective criterion values if they were permitted to periodically accept solutions with worse criterion values [4].

The algorithm SA been used in different areas of research for solving different problems then it makes mention of some: Simulated annealing for the unconstrained quadratic pseudo-Boolean function [3], A medical diagnostic tool based on radial basis function classifiers and evolutionary simulated annealing [5], An approach based on simulated annealing to optimize the performance of extraction of the flower region using mean-shift segmentation [6], A simulated annealing algorithm for sparse recovery by l0 minimization Neurocomputing [7], An efficient simulated annealing algorithm for design optimization of truss structures [8].

Transition probability

Is the probability that the simulated annealing algorithm will move randomly indicate your current position, your changes will be accepted with probability p, [2] is determined by

Accepting a change to a random number r as a threshold is used, is determined by

Temperature

The concept of TC in SA is analogous to the freezing point of a liquid, i.e., the temperature at which the liquid undergoes a phase change to form a solid [9]. The temperature is a parameter of the simulated annealing algorithm, the initial temperature Τ0 defines when you start the cooling process to control general search results in each dimension is used to limit the scope of the search in that dimension, you must select an initial temperature adequate to prevent the algorithm from being trapped in a local minimum must be high enough to allow the system to have a high degree of freedom for changing neighboring solutions.

Step Walk

The random steps are widely used for randomization and local search in different algorithms, it is important to consider an appropriate step size which experienced search.

If the search step is too large, then the new solution x generated will be too far from the previous solution. Then, the measure is unlikely to be accepted. If the search step is too small, the change is too small to be significant, and therefore such a search is not efficient.

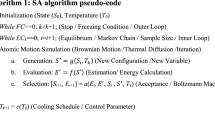

2.1 Pseudo Code for Simulated Annealing Algorithm

-

Objective function \( f\left( x \right);x = (x_{1} , \ldots ,x_{p} )^{T} \)

-

Initialize initial temperature T 0 and initial guess X (0)

-

Set final temperature T f \( \psi \) and max number of iterations N

-

Define cooling schedule \( T \to \alpha T,(0 < \alpha < 1) \)

-

While \( (T > T_{f} \,y\,n < N) \)

-

Move randomly to new locations: \( x_{n + 1} = x_{n} + \epsilon \) (random walk)

-

Calculate \( \varDelta f = f_{n + 1} (x_{n + 1} ) - f_{n} (x_{n} ) \)

-

Accept the new solution if better

-

if not improved

-

Generate a random number r

-

Accept if \( p = \exp [ - \Delta f/T] > r \)

-

end if

-

Update the best \( x_{*} \,y\,f_{*} \)

-

n = n + 1

-

end while

3 Benchmark Mathematical Functions

In this section the functions used to check the effectiveness of the algorithm SA is a series of tests were performed to estimate the processing time taken for the execution of the algorithm.

In the area of optimization Have Been used in different mathematical functions work Which Mentioned below: Optimal design of fuzzy classification systems using PSO With dynamic parameter adaptation-through fuzzy logic [10], A new gravitational search algorithm using fuzzy logic to Parameter adaptation [11], Parallel Particle Swarm Optimization With Parameters Adaptation Using Fuzzy Logic [12, 13].

The mathematical functions are shown below:

-

Ackley

$$ f\left( x \right) = \mathop \sum \limits_{i = 1}^{n} \frac{{x_{i}^{2} }}{4000} - \mathop \prod \limits_{i = 1}^{n} \cos\left( {\frac{{x_{i} }}{\sqrt i }} \right) + 1 $$(3) -

Griewank

$$ \left( x \right) = \mathop \sum \limits_{i = 1}^{n} \frac{{x_{i}^{2} }}{4000} - \mathop \prod \limits_{i = 1}^{n} \cos\left( {\frac{{x_{i} }}{\sqrt i }} \right) + 1 $$(4) -

Rastrigin

$$ f_{2} \left( x \right) = \mathop \sum \limits_{i = 1}^{n - 1} 100\left( {x_{i + 1} - x_{i}^{2} } \right)^{2} + (1 - x_{i} )^{2} $$(5) -

Rosenbrock

$$ \varvec{f}_{2} \left( \varvec{x} \right) = \mathop \sum \limits_{{\varvec{i} = 1}}^{{\varvec{n} - 1}} 100(\varvec{x}_{{\varvec{i} + 1}} - \varvec{x}_{\varvec{i}}^{2} )^{2} + (1 - \varvec{x}_{\varvec{i}} )^{2} $$(6) -

Shubert

$$ f\left( x \right) = \left( {\mathop \sum \limits_{i = 1}^{5} i\,\cos \left( {\left( {i + 1} \right)x_{1} + i} \right)} \right)\left( {\mathop \sum \limits_{i = 1}^{5} i\,\cos \left( {\left( {i + 1} \right)x_{2} + i} \right)} \right) $$(7) -

Sphere

$$ f\left( x \right) = \mathop \sum \limits_{i = 1}^{n} 2_{i}^{2} $$(8)

4 Fuzzy System Design

Design of the input variable can be seen in Fig. 1 showing iteration as input, three granulated triangular membership functions.

The fuzzy system has iteration as input, as shown in Fig. 2.

To the output variable, values between 0 and 0.001 so that the output variables were designed using this range are used. Granulated output three triangular membership functions, design variables can be seen in Fig. 3.

The rules for the fuzzy system are show in Fig. 4.

5 Simulation Results

Comparison algorithm Simulated Annealing with the modification of search step parameter, each algorithm is presented 6 math functions were integrated in this section Benchmark was performed separately in different sizes 2, 4, 8, 16, 32, were handled 30 tests for each function.

The parameters in the simulated annealing algorithm are:

-

Initial temperature = 1,0

-

Finial stopping temperature = 1e-10

-

Min value of the function = 0

-

Maximum number of rejections = 1000

-

Maximum number of runs = 500

-

Maximum number of accept = 400

-

Initial search period = 300

5.1 Simulation Results with Bat Algorithm

In this section the results obtained by the simulated annealing algorithm in separate tables of mathematical functions.

From Table 1 it can be appreciated that after executing the Simulated Annealing Algorithm 30 times, with different dimension, we can see the best, average and worst results for the Ackley function.

From Table 2 it can be appreciated that after executing the Simulated Annealing Algorithm 30 times, with different dimension, we can see the best, average and worst results for the Griewank function.

From Table 3 it can be appreciated that after executing the Simulated Annealing Algorithm 30 times, with different dimension, we can see the best, average and worst results for the Rastrigin function.

From Table 4 it can be appreciated that after executing the Simulated Annealing Algorithm 30 times, with different dimension, we can see the best, average and worst results for the Rosenbrock function.

From Table 5 it can be appreciated that after executing the Simulated Annealing Algorithm 30 times, with different dimension, we can see the best, average and worst results for the Sphere function.

The results obtained with the Shuber function, we can see that after being executed 30 times the simulated annealing algorithm with 2 dimensions, could appreciate the following results: best—186.7308, worst—176.2034 and average—123.5715.

5.2 Simulation Results with Simulated Annealing Algorithm with the Modification of Search Step

In this section we show the results obtained by the genetic algorithm in separate tables of the math functions.

In Table 6 we can see that after running the Simulated Annealing algorithm 30 times, with different dimensions, we can see the best, worst and average results for the Ackley function.

In Table 7 we can see that after running the Simulated Annealing algorithm 30 times, with different dimensions, we can see the best, worst and average results for the Griewank function.

In Table 8 we can see that after running the Simulated Annealing algorithm 30 times, with different dimensions, we can see the best, worst and average results for the Rastrigin function.

In Table 9 we can see that after running the Simulated Annealing algorithm 30 times, with different dimensions, we can see the best, worst and average results for the Rosenbrock function.

In Table 10 we can see that after running the Simulated Annealing algorithm 30 times, with different dimensions, we can see the best, worst and average results for the Sphere function.

The results obtained with the Shuber function, we can see that after being executed 30 times the simulated annealing algorithm with 2 dimensions, could appreciate the following results: best—186.7309, worst—184.6257 and average—123.5767.

6 Conclusions

In the simulation analysis for the comparative study of genetic algorithms and the effectiveness of the bat algorithm, bat algorithm during the modification of parameters by trial and error is shown, the rate of convergence of the genetic algorithm is much faster, the comparison was made with the original versions of the two algorithms with the recommended literature parameters, this conclusion is based on 6 Benchmark math functions results may vary according to mathematical or depending on the values set in the parameters of the algorithm.

The application of various problems in bat algorithm has a very wide field where the revised items its effectiveness is demonstrated in various applications, their use can be mentioned in the processing digital pictures, search for optimal values, neural networks, and many applications.

References

Jeong, S.-J., Kim, K.-S., Lee, Y.-H.: The efficient search method of simulated annealing using fuzzy logic controller. Expert Syst. Appl. 36(3/2), 7099–7103 (2009). ISSN 0957-4174. http://dx.doi.org/10.1016/j.eswa.2008.08.020

Yang, X.: A new metaheuristic bat-inspired algorithm. Department of Engineering, University of Cambridge, Trumpington Street, Cambridge CB2 1PZ, UK (2010)

Alkhamis, T.M., Hasan, M., Ahmed, M.A.: Simulated annealing for the unconstrained quadratic pseudo-Boolean function. Eur. J. Oper. Res. 108(3), 641–652 (1998). ISSN 0377-2217

Brusco, M.J.: A comparison of simulated annealing algorithms for variable selection in principal component analysis and discriminant analysis. Comput. Stat Data Anal. 77, 38–53 (2014). ISSN 0167-9473

Alexandridis, A., Chondrodima, E.: A medical diagnostic tool based on radial basis function classifiers and evolutionary simulated annealing. J. Biomed. Inf. 49, 61–72 (2014). ISSN 1532-0464. http://dx.doi.org/10.1016/j.jbi.2014.03.008

Bahadir, K.: An approach based on simulated annealing to optimize the performance of extraction of the flower region using mean-shift segmentation, Appl. Soft Comput. 13(12), 4763–4785 (2013). ISSN 1568-4946

Du, X., Cheng, L., Chen, D.: A simulated annealing algorithm for sparse recovery by l0 minimization. Neurocomputing 131, 98–104 (2014). ISSN 0925-2312

Lamberti, L.: An efficient simulated annealing algorithm for design optimization of truss structures. Comput. Struct. 86(19–20), 1936–1953 (2008). ISSN 0045-7949. http://dx.doi.org/10.1016/j.compstruc.2008.02.004

Tavares, R.S., Martins, T.C., Tsuzuki, M.S.G.: Simulated annealing with adaptive neighborhood: a case study in off-line robot path planning. Expert Syst. Appl. 38(4), 2951–2965 (2011). ISSN 0957-4174

Melin, P., Olivas, F., Castillo, O., Valdez, F., Soria, J., Garcia, J.: Optimal design of fuzzy classification systems using PSO with dynamic parameter adaptation through fuzzy logic. Expert Syst. Appl. 40(8), 3196–3206 (2013)

Sombra, A., Valdez, F., Melin, P., Castillo, O.: A new gravitational search algorithm using fuzzy logic to parameter adaptation. In: IEEE Congress on Evolutionary Computation, pp. 1068–1074 (2013)

Valdez, F., Melin, P., Castillo, O.: Evolutionary method combining particle swarm optimization and genetic algorithms using fuzzy logic for decision making. In: Proceedings of the IEEE International Conference on Fuzzy Systems, pp. 2114–2119 (2009)

Valdez, F., Melin, P., Castillo, O.: Parallel particle swarm optimization with parameters adaptation using fuzzy logic. MICAI 2 374–385 (2012)

Acknowledgments

We would like to express our gratitude to the CONACYT and Tijuana Institute of Technology for the facilities and resources granted for the development of this research.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Avila, C., Valdez, F. (2015). An Improved Simulated Annealing Algorithm for the Optimization of Mathematical Functions. In: Melin, P., Castillo, O., Kacprzyk, J. (eds) Design of Intelligent Systems Based on Fuzzy Logic, Neural Networks and Nature-Inspired Optimization. Studies in Computational Intelligence, vol 601. Springer, Cham. https://doi.org/10.1007/978-3-319-17747-2_20

Download citation

DOI: https://doi.org/10.1007/978-3-319-17747-2_20

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-17746-5

Online ISBN: 978-3-319-17747-2

eBook Packages: EngineeringEngineering (R0)