Abstract

Trust is a social phenomenon that affects individuals’ situation awareness and, indirectly, their decision-making. However, most existing computational models of situation awareness do not take interpersonal trust into account. In contrast to those models, this study introduces a computational, agent-based situation awareness model incorporating trust to enable building more human-like decision-making tools. To illustrate the proposed model, a simulation case study has been conducted in the airline operation control domain. According to the results of this study, the trustworthiness of information sources had a significant effect on the airline operation controller’s situation awareness.

1 Introduction

Decision makers in complex sociotechnical systems, such as airline operation control and train traffic control centers, often encounter complex and dynamic situations that require optimal decisions to be made rapidly based on the available information. The sustainability of such complex systems is highly dependent on the quality of decisions made in these situations. The concept of situation awareness has been identified as an important contributor to the quality of decision-making in complex, dynamically changing environments [6, 12]. According to one of the most cited definitions, provided by Endsley [5], the concept of situation awareness refers to the level of awareness that an individual has of a situation and an operator’s dynamic understanding of ‘what is going on’.

In complex and dynamic environments, vast amounts of information flow to the decision maker and shape his or her situation awareness. To understand how situation awareness is formed in such environments, it is crucial to understand how decision makers treat information received from diverse sources. Imagine that information gathered from two information sources is conflicting. Which source should be taken into account in decision making? In general, the choice would depend not only on the content of the information, but also on the trustworthiness of its source [11]. As the trustworthiness of information sources affects a decision maker’s assessment of the situation, it is important to incorporate interpersonal trust in computational models of situation awareness.

To the best of our knowledge, interpersonal trust has not been taken into account in modeling the situation awareness (SA) of an intelligent software agent. To address this gap, in this paper we propose a novel, computational agent-based model of SA that integrates SA with trust. Our model is based on the theoretical three-level SA model of Endsley [5]. Level \(1\) involves an individual’s perception of elements in the situation. At Level \(2\), the data perceived at Level \(1\) are interpreted and understood in relation to the individual’s tasks and goals. At Level \(3\), the individual predicts future states of the systems and elements in the environment based on their current state.

In the proposed model, the SA of an agent is represented by a belief network, in which beliefs are activated by communicated or observed information, as well as by the propagation of belief activation through the network. The perceived trustworthiness of an information source and the degree of (un)certainty of the information it provides determine to what extent the communicated or observed information contributes to the activation level of the corresponding belief. The perceived trustworthiness of a source can be evaluated by taking into account direct experience with the source, categorization, and reputation [7]. Furthermore, trust is context dependent. For example, when the context is “repairing an instrument problem of a particular aircraft”, one’s trust in an aircraft maintenance engineer would probably be higher than one’s trust in a pilot. However, one’s trust in a pilot might be higher in another context, such as “landing an aircraft in rainy weather”. Moreover, the similarity between individuals’ opinions has a high impact on their trust relationship [9]. These arguments are also in line with Morita and Burns’ model of trust and situation awareness formation [13]. Following these arguments, we adopt an experience-based trust model from the literature to model the trustworthiness of information sources [8, 18].

A case study in the airline operation control domain has been conducted to validate the applicability of our model, and an agent-based simulation has been developed to investigate the effect of trust on the airline operation controller’s situation awareness in the case study. The results of our study support the claim that the trustworthiness of information sources has a significant influence on a decision maker’s situation awareness.

The rest of this paper is organized as follows. In Sect. 2 related work is discussed. The proposed situation awareness model is explained in Sect. 3. Section 4 describes the case study and experimental results. Section 5 provides conclusions with a review of the contribution of this research and future work.

2 Related Work

In simple terms, situation awareness (SA) refers to knowing what is happening around oneself [6]. The specific definition and operationalization of SA were strongly debated among researchers in the 1990s, and two main streams can be identified: the traditional information-processing approach in psychology versus ecological psychology [21]. These two theoretical views differ in the extent to which the natural environment plays a role in an individual’s cognition, and in how they relate perception and action. Accordingly, the information-processing school regards SA and performance as separable concepts, whereas the ecological school considers them inseparable. Another disagreement concerns whether situation awareness is seen as a product (state) or as a process. Following the debate on the different approaches, Endsley’s three-level model of situation awareness has received the predominant support for defining and operationalizing the situation awareness of individual operators [17]. This approach adopts the information-processing perspective, in which situation awareness is seen as a state and mental models are identified as providing input to the development of the comprehension of elements in the situation (Level 2 SA) and the projection of these elements into the near future (Level 3 SA) [5]. Mental models can be seen as long-term memory structures [5]. More specifically, they can be defined as “mechanisms whereby humans are able to generate descriptions of system purpose and form, explanations of system functioning and observed system states, and predictions of future states” (p. 7) [16].

The role of mental models in developing SA, as defined by this psychological approach, has been the basis for developing computational models for software agents. In particular, in developing a computational agent-based model of SA, Hoogendoorn et al. [10] connected the observation, belief formation about the current situation and related mental models of an agent to the formation of the agent’s beliefs about the future situation. So et al. [19] proposed another agent-based model of SA, in which SA is considered a form of meta-level control over the agents’ deliberation about their intentions, plans and actions. Another computational model, developed by Aydoğan et al. [1], considers the formation of SA as an interplay between possible actions and predicted consequences. To the best of our knowledge, the integration of SA and trust has not been considered in agent-based modeling before. However, experimental studies on the relationship between trust and SA have recently been performed with human subjects in the context of social games [14, 20]. These studies confirmed that trust plays an important role in the formation and maintenance of human SA. In particular, they established that trust affected the subjects’ reasoning about strategy choices in the game. The important role of trust for SA had previously been acknowledged by researchers in the human factors area [15]. In particular, they argued that trust in automation has important consequences for the quality of operators’ mental models and SA, and thus should be taken seriously by system designers.

3 Situation Awareness Model

In the proposed model, the SA of an agent is represented by a belief network: a directed graph in which nodes represent the agent’s beliefs and edges represent dependencies between beliefs. In our model, belief networks resemble neural networks in that beliefs may contribute positively (reinforce) or negatively (inhibit) to the activation of other beliefs, so that belief activation propagates through the network. In line with Endsley’s model, some nodes of the network are activated based on the perceived information (Level \(1\)). This activation spreads further through the network, representing the processes of comprehension (Level \(2\)) and projection (Level \(3\)). We assume that information is perceived via sensors, through which the agent receives observations from the environment and communication from diverse information sources. This information is incorporated into the agent’s belief network to the extent that the agent trusts the source providing it.

Similar to the situation awareness model of Hoogendoorn et al. [10], our model distinguishes two types of beliefs: simple beliefs and complex beliefs. Simple beliefs are leaf nodes in a belief network; they may be generated by the perception of observed or communicated information. Complex beliefs are obtained by aggregating multiple beliefs, e.g., by using mental models and reasoning. Both simple and complex beliefs are updated after relevant observed or communicated information is obtained. To illustrate this, consider the example depicted in Fig. 1. In this example, the airline operation controller observes that a storm is approaching Canberra airport, and a simple belief is created based on this observation. Similarly, another simple belief is generated based on the information communicated by the engineer: “the repair of the aircraft NBO takes longer than four hours”. These two beliefs activate the controller’s complex belief “the repair of the aircraft NBO will be delayed”. According to the domain expert, the second simple belief is a better predictor of the complex belief than the first, which is reflected in the weights of the corresponding edges. Furthermore, both simple beliefs need to be activated to generate a conclusive complex belief.
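
The two belief types and the weighted, possibly inhibitory edges can be captured in a small data structure. The sketch below is a minimal illustration under our own assumptions: the class and method names are hypothetical, and the edge weights 0.3 and 0.7 are placeholders chosen only so that the engineer’s report outweighs the storm observation, as in Fig. 1; they are not the weights used by the authors.

```python
from dataclasses import dataclass, field


@dataclass
class Belief:
    """A node of the belief network; confidence lies in [0, 1]."""
    name: str
    confidence: float = 0.0


@dataclass
class BeliefNetwork:
    beliefs: dict = field(default_factory=dict)  # name -> Belief
    # edges[target][source] = weight; positive weights reinforce the
    # target belief, negative weights inhibit it.
    edges: dict = field(default_factory=dict)

    def add_belief(self, name: str, confidence: float = 0.0) -> None:
        self.beliefs[name] = Belief(name, confidence)

    def add_edge(self, source: str, target: str, weight: float) -> None:
        self.edges.setdefault(target, {})[source] = weight

    def is_simple(self, name: str) -> bool:
        # Simple beliefs are leaves: no other belief contributes to them.
        return not self.edges.get(name)

    def weighted_input(self, name: str) -> float:
        # Weighted sum of the confidences of all beliefs contributing to `name`.
        return sum(weight * self.beliefs[source].confidence
                   for source, weight in self.edges.get(name, {}).items())


# Fig. 1 example; the weights are hypothetical placeholders.
net = BeliefNetwork()
net.add_belief("storm_approaching_CBR")
net.add_belief("NBO_repair_over_4h")
net.add_belief("NBO_repair_delayed")
net.add_edge("storm_approaching_CBR", "NBO_repair_delayed", 0.3)
net.add_edge("NBO_repair_over_4h", "NBO_repair_delayed", 0.7)
```

With these placeholder weights, the weighted input of the complex belief reaches 1 only when both simple beliefs are fully active, which mirrors the requirement that both must be activated for a conclusive complex belief.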

Each belief has a confidence value denoting to what extent the agent is certain of that belief. Over time, the confidence of some beliefs may change (e.g., increase or decrease depending on environmental inputs about the content of the belief). Equation 1 shows how the belief confidence value is updated in general, regardless of the belief type. In this formula, \(C_{i,B}(t+\varDelta t)\) represents Agent i’s updated confidence value for belief \(B\) at time point \(t+\varDelta t\), and \(C_{i,B}(t)\) denotes the confidence of that belief at time \(t\). \(B\) is a belief state property expressed in some state language, e.g., a predicate logic. As both past and current experiences play a role in an individual’s situation assessment, both are taken into account in updating the confidence of a belief in our model. The parameter \(\alpha \) determines to what extent past experiences are taken into account in the updated confidence value of a belief. This parameter may be set dynamically for each particular application by taking environmental factors into consideration. For instance, the faster the environment changes, the lower \(\alpha \) should be; likewise, the more sensitive the belief system should be to changes, the lower the value assigned to this parameter. \(F_{i,B}(t)\) denotes Agent i’s confidence value change at time point \(t\), which is based on the environmental inputs perceived at time \(t\).
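
The formula referenced as Equation 1 is not present in this version of the text. A form consistent with the description (\(\alpha \) weights the previous confidence and the remainder weights the new change) is \(C_{i,B}(t+\varDelta t) = \alpha \, C_{i,B}(t) + (1-\alpha )\, F_{i,B}(t)\). The sketch below assumes this reconstruction; the function name is hypothetical.

```python
def update_confidence(previous: float, change: float, alpha: float) -> float:
    """Assumed form of Eq. 1 (a reconstruction, not the authors' code).

    previous: C_{i,B}(t), the confidence before the update
    change:   F_{i,B}(t), the confidence value change from current inputs
    alpha:    weight of past experience; low for fast-changing environments
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return alpha * previous + (1.0 - alpha) * change


# An inactive belief (confidence 0) receiving a strong change of 0.9
# with a low alpha is almost fully updated in a single step.
updated = update_confidence(0.0, 0.9, alpha=0.05)  # about 0.855
```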

\(F_{i,B}(t)\) is calculated differently for simple and complex beliefs. First let us consider how it is calculated for simple beliefs. Information supporting a simple belief might be received from different sources at the same time. This means that the confidence value change of a belief should be evaluated by aggregating information from all information sources.

Assume that inputs from \(n\) sources regarding belief \(B\) arrive at time \(t\). The confidence value change of this belief is then estimated as specified in Eq. 2, where \(EI_{i,B,j}(t)\) denotes the evaluated information that Agent i gathered from the \(j^{th}\) source regarding belief \(B\).

Note that the type of aggregation of the evaluated information components \(EI\) may vary according to the person’s personality traits. In the Big Five model, the trait “agreeableness” has been related to being tolerant, cooperative and trusting [2]. Thus, if the person is skeptical or uncooperative, the MIN function could be used for aggregation, while the MAX function would be more appropriate for trusting or positively thinking people. Alternatively, the \(EI\) values may be aggregated by means of a weighted sum, where a different weight may be assigned to each information source; in particular, the weights may reflect the extent to which the agent is inclined to trust those sources. When the agent observes information in the environment itself, the corresponding weight may be determined by the agent’s trust in itself, since there could be situations in which an agent does not fully trust its own observations (e.g., when the agent is stressed). For instance, suppose that the airline operation controller observes the weather himself and also receives weather forecast information from a service point. All this information is aggregated to determine the confidence value of the belief “A storm is approaching”. This example is illustrated in Fig. 2, where the information fusion box represents the aggregation function.
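
The aggregation strategies described above can be sketched as interchangeable implementations of Eq. 2. This is an illustration under our assumptions: the function names are hypothetical, and normalizing the weighted sum by the total weight (so that the result stays in [0, 1]) is our choice rather than a detail stated in the paper.

```python
from typing import Sequence


def aggregate_min(evaluated: Sequence[float]) -> float:
    # Skeptical or uncooperative agent: the weakest input dominates.
    return min(evaluated)


def aggregate_max(evaluated: Sequence[float]) -> float:
    # Trusting or positively thinking agent: the strongest input dominates.
    return max(evaluated)


def aggregate_weighted(evaluated: Sequence[float],
                       weights: Sequence[float]) -> float:
    # Weighted mean; each weight reflects how strongly the agent is
    # inclined to trust that source (its own observations included).
    if not evaluated or len(evaluated) != len(weights):
        raise ValueError("need one weight per evaluated input")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(e * w for e, w in zip(evaluated, weights)) / total


# Storm example with hypothetical numbers: EI from the controller's own
# observation and EI from the service point's forecast.
inputs = [0.6, 0.9]
fused = aggregate_weighted(inputs, weights=[1.0, 2.0])  # (0.6 + 1.8) / 3
```

A skeptical controller would settle on 0.6 and a trusting one on 0.9, while the weighted variant lands in between, closer to the more trusted forecast.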

Equation 3 shows how to evaluate information received from the \(j^{th}\) source. If the information is obtained by communication from an information source Agent j (\(j \ne i\)), then \(\omega _{j}\) is equal to 1, and the evaluated information is defined as Agent i’s trust in Agent j w.r.t. domain \(D\) multiplied by Agent j’s confidence in belief \(B\). If the information is observed, then \(\omega _{j}\) is equal to 0, and the evaluated information is defined as Agent i’s trust in itself w.r.t. domain \(D\) multiplied by Agent i’s confidence in its observation (\(O_{B}(t)\)). In this formula, domain \(D\) is a set of state properties \(B\) over which beliefs can be formed. For example, if \(B\) is “the technical problem in the aircraft can be fixed in one hour”, then \(D\) may be the aircraft maintenance domain. State properties are expressed using a standard multi-sorted first-order predicate language. An agent may have high trust in another agent w.r.t. some domain \(D\) (e.g., driving a car) and, at the same time, low trust in the same agent w.r.t. another domain \(D'\) (e.g., flying an aircraft).
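
The equation itself is missing here. One reading of Eq. 3 that matches both cases described above is \(EI_{i,B,j}(t) = \omega _{j}\, T_{i,j,D}(t)\, C_{j,B}(t) + (1-\omega _{j})\, T_{i,i,D}(t)\, O_{B}(t)\), which we assume below. Trust is stored per (provider, domain) pair so that the same agent can be trusted differently in different domains; the dictionary layout, function name and trust values are hypothetical.

```python
from typing import Dict, Optional, Tuple

Trust = Dict[Tuple[str, str], float]  # (provider, domain) -> trust in [0, 1]


def evaluated_information(trust: Trust, self_id: str, domain: str,
                          value: float, source: Optional[str] = None) -> float:
    """Assumed form of Eq. 3.

    source given (omega_j = 1): value is the sender's confidence C_{j,B}(t),
        weighted by T_{i,j,D}(t).
    source None (omega_j = 0): value is the agent's own observation
        confidence O_B(t), weighted by its trust in itself T_{i,i,D}(t).
    """
    omega = 1 if source is not None else 0
    provider = source if omega == 1 else self_id
    return trust[(provider, domain)] * value


# Hypothetical trust values illustrating domain dependence.
trust = {
    ("ME", "Repair"): 0.9,
    ("pilot", "Repair"): 0.4,
    ("AOC", "Weather"): 0.8,
}
from_engineer = evaluated_information(trust, "AOC", "Repair", 0.85, source="ME")
from_pilot = evaluated_information(trust, "AOC", "Repair", 0.85, source="pilot")
own_observation = evaluated_information(trust, "AOC", "Weather", 0.7)
```

The same repair report (confidence 0.85) thus contributes 0.765 when it comes from the maintenance engineer but only 0.34 when it comes from the pilot, reflecting the domain-dependent trust discussed above.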

An important issue here is how to model the trustworthiness of an information source, \(T_{i,j,D}(t)\), in the specific sense that it determines to what extent the information provided by that source is incorporated into the agent’s belief network.

A variety of trust models have been proposed in the trust literature. Many of these models identify experience as an essential basis of trust, e.g., [8, 11, 18]. The models differ in how they represent experience and how they aggregate different experiences over time. In this study, we adopt the experience-based trust model proposed by Şensoy et al. [18], shown in Eq. 4, where \(r(w)\) and \(s(w)\) denote the functions that map the opinion \(w\) to positive and negative evidence, respectively. Trust thus corresponds to the ratio of positive evidence to all evidence, taking the prior trust value \(a\) into account.
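
Equation 4 is not reproduced in this text. The term “opinion” suggests a subjective-logic formulation, in which the expected value of an opinion with positive evidence \(r\), negative evidence \(s\) and base rate \(a\) is commonly written \((r + 2a)/(r + s + 2)\), the constant 2 being the weight of a non-informative prior. The sketch below assumes this form; the exact constant used in [18] should be checked against the original, and the function name is hypothetical.

```python
def expected_trust(positive: float, negative: float, prior: float,
                   prior_weight: float = 2.0) -> float:
    """Assumed form of Eq. 4 (subjective-logic expectation).

    With no evidence the result equals `prior` (the base rate a); as
    evidence accumulates it approaches positive / (positive + negative).
    """
    if positive < 0 or negative < 0:
        raise ValueError("evidence amounts must be non-negative")
    if not 0.0 <= prior <= 1.0:
        raise ValueError("prior must lie in [0, 1]")
    return (positive + prior_weight * prior) / (positive + negative + prior_weight)


no_evidence = expected_trust(0, 0, prior=0.5)     # falls back to the prior
mostly_good = expected_trust(8, 0, prior=0.5)     # (8 + 1) / (8 + 0 + 2)
mostly_bad = expected_trust(0, 8, prior=0.5)      # (0 + 1) / (0 + 8 + 2)
```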

To define the mapping functions, we adopt the approach introduced in [8], where the authors estimated trust based on the difference between the agent’s own belief and the information source’s belief. This is supported by the social science principle that the closer the opinions of interacting agents, the greater their mutual influence and, thus, their tendency to accept each other’s information [4]. It has also been proposed that a higher similarity between individuals is related to a higher degree of trust [22].

In our model, belief differences are calculated according to Eq. 5. Here, Agent i believes that Agent j believes \(B\) with confidence \(C_{i,belief(j,B)}(t)\). One option is for Agent i to infer this estimate of Agent j’s confidence through observation; alternatively, Agent i can directly ask Agent j for its confidence value through communication.

To decide whether \(L_{i,j,B}(t)\) represents positive or negative evidence for use in Eq. 4, a threshold value \(\mu \) is introduced. If \((1-L_{i,j,B}(t))\) is greater than \(\mu \), it is taken as positive evidence (see Eq. 7); otherwise, it is taken as negative evidence (see Eq. 8). Trust \(T_{i,j,D}(t)\) is then estimated according to Eq. 6.
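
Equations 5 to 8 did not survive extraction either. The sketch below assumes the most direct reading of the text: \(L_{i,j,B}(t) = |C_{i,B}(t) - C_{i,belief(j,B)}(t)|\); each belief \(B\) in domain \(D\) yields one unit of positive evidence if \(1 - L_{i,j,B}(t) > \mu \) and one unit of negative evidence otherwise; and the counts are combined for Eq. 6 with the subjective-logic expectation \((r + 2a)/(r + s + 2)\). Counting one unit per belief is our assumption, as are all names and numbers.

```python
def belief_difference(own: float, believed_other: float) -> float:
    # Assumed Eq. 5: distance between Agent i's confidence in B and the
    # confidence that Agent i attributes to Agent j for B.
    return abs(own - believed_other)


def trust_from_agreement(own: dict, other: dict, mu: float,
                         prior: float) -> float:
    """Assumed Eqs. 6-8 over the beliefs B of one domain D.

    own:   belief -> C_{i,B}(t)
    other: belief -> C_{i,belief(j,B)}(t)
    """
    r = s = 0
    for b in own.keys() & other.keys():  # beliefs covered by both estimates
        if 1.0 - belief_difference(own[b], other[b]) > mu:
            r += 1  # close opinions: positive evidence (Eq. 7)
        else:
            s += 1  # distant opinions: negative evidence (Eq. 8)
    return (r + 2.0 * prior) / (r + s + 2.0)


# Hypothetical values: the source agrees on two beliefs, disagrees on one.
own = {"AOC-B8": 0.9, "AOC-B9": 0.8, "AOC-B10": 0.7}
other = {"AOC-B8": 0.85, "AOC-B9": 0.75, "AOC-B10": 0.1}
t_source = trust_from_agreement(own, other, mu=0.7, prior=0.5)  # (2 + 1) / (3 + 2)
```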

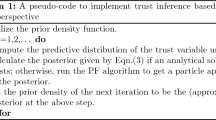

For complex beliefs, the confidence value change \(F_{i,B}(t)\) is calculated based on two types of updates. When an agent receives information regarding a complex belief \(B\), \(F_{i,B}(t)\) is calculated in the same way as for simple beliefs, according to Eq. 2. When \(B\) is activated by propagation in the belief network, \(F_{i,B}(t)\) is estimated by Eq. 9, proposed by Farrahi et al. [8]. In this formula, \(\theta \) denotes the set of beliefs that contribute positively or negatively to complex belief \(B\), \(w_{r, B}\) is the weight of the edge between contributing belief \(r\) and belief \(B\) in the belief network, and \(\beta \) and \(\gamma \) are respectively the steepness and threshold parameters of the logistic function. Edge weights may be negative; in that case, they represent inhibition edges in the belief network. Note that trust is implicitly included in Eq. 9 through the aggregated confidence values of the simple beliefs.
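
Since Eq. 9 is not reproduced here, we note that one logistic form reproducing all the numeric examples reported in Sect. 4.1 is \(F_{i,B}(t) = 1/\bigl(1 + e^{-(\gamma S - \beta )}\bigr)\) with \(S = \sum _{r \in \theta } w_{r,B}\, C_{i,r}(t)\). In this form \(\gamma \) scales the weighted input and \(\beta \) shifts it, which may not match the exact parametrization in [8]; treat the sketch below as an assumption-based illustration with a hypothetical function name.

```python
import math
from typing import Dict, Tuple


def propagated_change(contributions: Dict[str, Tuple[float, float]],
                      beta: float, gamma: float) -> float:
    """Assumed logistic form of Eq. 9 for a complex belief B.

    contributions maps each belief r in theta to (w_{r,B}, C_{i,r}(t));
    negative weights act as inhibitory edges and lower the weighted sum.
    """
    weighted_sum = sum(w * c for w, c in contributions.values())
    return 1.0 / (1.0 + math.exp(-(gamma * weighted_sum - beta)))


unbiased = propagated_change({"r": (1.0, 0.3)}, beta=2.5, gamma=5.0)
biased = propagated_change({"r": (1.0, 0.3)}, beta=5.0, gamma=10.0)
```

Adding an inhibitory contribution (a negative weight) lowers the result, which is how conflicting beliefs such as AOC-B5 and AOC-B9 can suppress each other.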

4 Case Study

To analyze the proposed situation awareness model, a case study has been constructed by drawing on airline operations control scenarios that Bruce designed and conducted [3]. These scenarios aimed at understanding the decision making process of airline operation controllers who guide domestic and international flights in airline Operations Control Centers (OCC). In the case study, our focus is to model the airline operation controller’s situation awareness with the proposed approach. Figure 3 illustrates the belief network of the airline operation controller (AOC) according to the scenario explained below.

1. The aircraft NBO with flight number \(876\) took off from Melbourne for Canberra. At this moment, the airline operation controller believes that flight \(876\) will land in Canberra on time (AOC-B1) and that the passengers will be able to catch their transit flight in Canberra (AOC-B2).

2. During the flight, the pilot encounters an instrument problem and recalls that the aircraft had the same problem before take-off. He therefore informs the airline operation controller about the problem. When the controller learns about it, the following beliefs are activated:
   - AOC-B3: Aircraft NBO has the instrument problem during the flight from Melbourne (MEL) to Canberra (CBR).
   - AOC-B4: A problem of this type should be fixed before the next flight, according to the Minimum Equipment List, which describes possible problems and how and when they should be fixed.

   The controller does not know whether this problem can be fixed in Canberra; therefore, under high uncertainty, he holds the following two conflicting beliefs, related to two possible decision options:
   - AOC-B5: The problem can be fixed in Canberra (CBR).
   - AOC-B9: The problem should be fixed in Melbourne (MEL).

   Therefore, his confidence in AOC-B6 (the flight can continue to CBR) decreases, which also decreases his confidence in AOC-B1 and AOC-B2.

3. The airline operation controller then contacts a maintenance engineer in Melbourne to inquire about the instrument problem. The engineer is aware that NBO had the same problem before take-off and believes that repairing the recurring instrument problem would take about four hours. He believes that the problem is highly complex and requires an expert to fix it, and that the engineer in Canberra has not done this type of repair before; therefore, the problem should be fixed in Melbourne. This means that flight \(876\) should return to Melbourne for a proper fix. After obtaining this information, the airline operation controller modifies his belief network: the communicated information reduces the confidence of AOC-B6 and increases the confidence of the following beliefs:
   - AOC-B8: There is no expert to fix the problem in CBR.
   - AOC-B9: The problem should be fixed in MEL.
   - AOC-B10: The aircraft NBO needs to turn back to Melbourne.

   This, in turn, decreases the confidence of AOC-B1 and AOC-B2.

4. After taking into consideration the information from the maintenance engineer in Melbourne, the airline operation controller decides to contact the OCC maintenance engineer for a second opinion in order to make the right decision. It is worth noting that returning the aircraft to Melbourne is a very costly option, since the passengers will not arrive on time and some of them will miss their transit flight. The OCC maintenance engineer confirms the estimated repair time, but he also thinks that it is possible to send a more experienced maintenance engineer from Sydney to Canberra to fix the problem. In this way, the instrument problem can be fixed in Canberra. Furthermore, he believes that the problem is not severe enough to prevent the flight from continuing to its destination; thus, the flight can continue to CBR. After this conversation, the airline operation controller updates his belief network as follows:
   - The following two beliefs are added:
     - AOC-B11: An experienced engineer from Sydney can be sent to CBR to fix the problem.
     - AOC-B7: An expert can fix the problem in Canberra.
   - These beliefs trigger the following beliefs:
     - AOC-B5: The problem can be fixed in CBR.
     - AOC-B6: The flight can continue to CBR.
     - AOC-B1: Flight 876 will land in CBR on time.
     - AOC-B2: Passengers will catch their transit flight in CBR.
   - At the same time, the controller decreases the confidence of AOC-B8, AOC-B9, and AOC-B10.

5. Lastly, the airline operation controller receives weather forecast information from the flight planning controller about a potential fog problem in Sydney. Based on the communicated information, the airline operation controller updates his belief network as follows:
   - AOC-B12: There is fog in Sydney.
   - AOC-B13: An experienced maintenance engineer from Sydney cannot be sent to CBR to fix the problem.

   This information decreases the confidence of AOC-B11, AOC-B7, AOC-B5, AOC-B6, AOC-B1, and AOC-B2, while it increases the confidence of AOC-B8, AOC-B9, and AOC-B10.

To analyze this case study in detail, we developed an agent-based simulation in Java. The belief network depicted in Fig. 3 was used to model the airline operation controller’s situation awareness. The initial belief network was built from the empirical information in Bruce [3] and from interviews with domain experts that captured operators’ reasoning about the problem. At runtime, the information required to build the belief network is retrieved from the mental model, where it is stored, into working memory [10]. The initial confidence values of AOC-B1, AOC-B2 and AOC-B6 are set to \(1\), while \(0\) is assigned to the rest (see the first row of Table 1). Since the environment in which the airline controller operates changes rapidly, a low value (0.05) is assigned to the parameter \(\alpha \), which determines to what extent past experiences are taken into account in the belief update. The parameters for updating complex beliefs, \(\gamma \) and \(\beta \), are set to \(5\) and \(2.5\), respectively. These values correspond to an unbiased agent (i.e., an agent without strong under- or overestimation of belief confidence values). In the first test setting, the airline operation controller is assumed to trust all information sources fully and equally. To focus on the gradual formation of situation awareness from different sources, constant trust values were used in this case study; in a more complex scenario with multiple interactions between the same agents, the trust values could be updated after each interaction as explained in Sect. 3. At each simulation time step, the information described in the scenario above is communicated to the airline operation controller, who updates his belief network accordingly. The details of this communication are listed below:

- At \(t = 1\), the pilot informs the airline operation controller about AOC-B3. The controller’s trust in the pilot is taken as \(T_{AOC, pilot, Aircraft}(1)= 1.0\), and the pilot’s confidence in AOC-B3 is taken as \(C_{pilot, AOC-B3}(1)=0.90\).
- At \(t = 2\), the maintenance engineer in Melbourne informs the controller about AOC-B8. The controller’s trust in the maintenance engineer is taken as \(T_{AOC, ME, Repair}(2)= 1.0\), and the maintenance engineer’s confidence is taken as \(C_{ME, AOC-B8}(2)=0.85\).
- At \(t = 3\), the OCC maintenance engineer informs the controller about AOC-B11. The controller’s trust in the OCC maintenance engineer is taken as \(T_{AOC,OCC, Repair}(3)=1.0\), and the OCC engineer’s confidence is taken as \(C_{OCC, AOC-B11}(3)=0.90\).
- At \(t = 4\), the flight planning controller informs the airline operation controller about AOC-B12. The controller’s trust in the planning controller is taken as \(T_{AOC, PC, Weather}(4)=1.0\), and the planning controller’s confidence is taken as \(C_{PC, AOC-B12}(4)=0.95\).
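
The four inputs above can be written down as a small schedule. Assuming that a communicated input contributes the product of the controller’s trust in the sender and the sender’s confidence (the communication case of Eq. 3), each message’s evaluated information is \(T \cdot C\); with full trust it simply equals the sender’s confidence. The tuple layout and function name are hypothetical and are not taken from the authors’ Java simulation.

```python
# (time, sender, domain, belief, trust T_{AOC,sender,D}(t), sender confidence)
SCHEDULE = [
    (1, "pilot", "Aircraft", "AOC-B3", 1.0, 0.90),
    (2, "ME", "Repair", "AOC-B8", 1.0, 0.85),
    (3, "OCC", "Repair", "AOC-B11", 1.0, 0.90),
    (4, "PC", "Weather", "AOC-B12", 1.0, 0.95),
]


def evaluated_inputs(schedule):
    """Map each time step to (belief, evaluated information), assuming EI = T * C."""
    return {time: (belief, trust * confidence)
            for time, _sender, _domain, belief, trust, confidence in schedule}


inputs = evaluated_inputs(SCHEDULE)
# Lowering trust in the planning controller to a hypothetical 0.3 (the kind
# of variation studied in Sect. 4.3) shrinks the fog input accordingly.
low_trust = evaluated_inputs([(4, "PC", "Weather", "AOC-B12", 0.3, 0.95)])
```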

Table 1 shows the estimated belief confidence values of the controller agent at each time step; a message received at time \(t\) is reflected in the confidence values at the next time step. As seen from Table 1, the generated belief confidence values are consistent with the given scenario. After the pilot informed the airline operation controller about the instrument problem (\(t = 2\)), the controller’s confidence in AOC-B1 and AOC-B2 decreased drastically (to 0.14 and 0.18, respectively). The confidence values of AOC-B5 and AOC-B9 are equal, since at that moment the controller does not know where exactly the problem can be fixed. When the maintenance engineer in Melbourne informed the controller that there was no expert to fix this problem in Canberra (\(t = 3\)), the controller’s confidence in AOC-B8, AOC-B9 and AOC-B10 increased, meaning that he believes that the aircraft NBO needs to turn back to Melbourne. After the OCC maintenance engineer’s advice (\(t = 4\)), the airline operation controller started to believe, with a confidence of \(0.72\), that the problem can be fixed in Canberra and the flight can continue to Canberra. Finally, the flight planning controller reported fog in Sydney, which means that the experienced engineer could not be sent from Sydney to Canberra. This information reduced the airline operation controller’s confidence in AOC-B5 and AOC-B6 while increasing his confidence in AOC-B9 and AOC-B10. As a result, the controller was more inclined to decide to return the aircraft to Melbourne.

4.1 Studying the Effect of \(\beta \) and \(\gamma \) Parameters

In this section, we study the effect of the parameters \(\beta \) and \(\gamma \). Recall that \(\beta \) and \(\gamma \) adjust the shape of the logistic function used in Eq. 9 to calculate the agent’s confidence value change for a given complex belief. Setting \(\beta \) and \(\gamma \) to \(2.5\) and \(5.0\), respectively, corresponds to an unbiased agent, i.e., an agent without strong under- or overestimation of belief confidence values. Setting them to \(5\) and \(10\), respectively, corresponds to a biased agent that tends to underestimate beliefs with a low confidence value and to overestimate beliefs with a high confidence value. For instance, when the weighted sum of the confidence values supporting a complex belief is \(0.3\), the biased agent computes a confidence value change of \(0.12\) (underestimation), while the unbiased agent computes \(0.27\). Conversely, when the weighted sum is high, for example \(0.85\), the biased agent computes \(0.97\) (overestimation). Figure 4 depicts the shape of this function for different values of these parameters.
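
The numbers quoted above can be checked by hand. Assuming the logistic form \(F(S) = 1/(1 + e^{-(\gamma S - \beta )})\), where \(S\) is the weighted sum of supporting confidence values (our reconstruction, chosen because it reproduces all three quoted values), the computation is:

```latex
\begin{aligned}
\text{unbiased } (\beta = 2.5,\ \gamma = 5):\quad
  & F(0.3)  = \frac{1}{1 + e^{-(1.5 - 2.5)}} = \frac{1}{1 + e^{1}} \approx 0.27 \\
\text{biased } (\beta = 5,\ \gamma = 10):\quad
  & F(0.3)  = \frac{1}{1 + e^{-(3 - 5)}} = \frac{1}{1 + e^{2}} \approx 0.12 \\
  & F(0.85) = \frac{1}{1 + e^{-(8.5 - 5)}} = \frac{1}{1 + e^{-3.5}} \approx 0.97 \\
\text{unbiased}:\quad
  & F(0.85) = \frac{1}{1 + e^{-(4.25 - 2.5)}} = \frac{1}{1 + e^{-1.75}} \approx 0.85
\end{aligned}
```

Under this form, the unbiased parameters keep the output close to the input (0.3 maps to about 0.27 and 0.85 to about 0.85), whereas the biased parameters push values away from the midpoint 0.5.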

To analyze how the agent’s biases affect the simulation results, we ran the same scenario with only \(\beta \) and \(\gamma \) changed to \(5\) and \(10\), respectively. Table 1 shows the belief confidence values for the unbiased agent, while Table 2 shows the corresponding results for the biased agent. Since our case study is sensitive to incoming information, \(\alpha \) is set to \(0.05\) in both settings. Comparing the two agents, the biased agent assigns higher confidence values than the unbiased agent to complex beliefs whose confidence is high (e.g., \(0.94\) versus \(0.72\) for AOC-B10 at \(t=5\), and \(0.92\) versus \(0.72\) for AOC-B5 at \(t=4\)). For beliefs with low confidence values, the opposite holds, since the biased agent underestimates them (e.g., \(0.06\) versus \(0.14\) for AOC-B1 at \(t=2\), and \(0.08\) versus \(0.28\) for AOC-B9 at \(t=4\)).

4.2 Investigating the Effect of \(\alpha \) Parameter

In this section, we analyze how the value of the parameter \(\alpha \) influences the belief update and, by implication, the situation awareness of the controller. This parameter determines to what extent the information perceived by the controller agent changes its beliefs. Low values should be chosen when the belief maintenance system should be sensitive to incoming information, while high values may be preferred when the agent should be more conservative with respect to belief updates. This parameter may also reflect a personality characteristic such as openness to experience from the Five Factor Model [2]. Choosing the right value for a given scenario is therefore crucial.
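
The role of \(\alpha \) as an inertia parameter can be made explicit. Assume Eq. 1 has the form \(C(t+\varDelta t) = \alpha C(t) + (1-\alpha )F(t)\) (a reconstruction; the equation itself is not reproduced in this text) and that the same change \(F\) is received at every step. Writing \(C_n\) for the confidence after \(n\) updates:

```latex
\begin{aligned}
C_{n+1} - F &= \alpha C_n + (1 - \alpha) F - F = \alpha \,(C_n - F) \\
\Rightarrow\quad C_n &= F + \alpha^{n} (C_0 - F)
\end{aligned}
```

For a belief with \(C_0 = 1\) receiving \(F = 0.1\) (illustrative values), one update gives \(0.1 + 0.05 \cdot 0.9 = 0.145\) for \(\alpha = 0.05\) but \(0.1 + 0.5 \cdot 0.9 = 0.55\) for \(\alpha = 0.5\): the conservative agent retains half of the outdated confidence after one step, and the gap to \(F\) shrinks only geometrically with rate \(\alpha \).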

Table 3 shows the belief confidence values of the airline operation controller at the last time point of the simulation for different values of \(\alpha \). Here, we adopt the unbiased agent type, where \(\beta \) and \(\gamma \) are \(2.5\) and \(5.0\), respectively. According to the scenario, the confidence values of AOC-B8, AOC-B9 and AOC-B10 are expected to be high at this point. As seen from the results, the most appropriate values are obtained when \(\alpha \) equals \(0.05\) or \(0.1\) (low values), because the airline operation controller in the examined scenario is very sensitive to new information. When \(\alpha \) is \(0.5\) (a high value, i.e., a conservative agent), the results are not well aligned with the case study.

4.3 Analyzing the Effect of Trustworthiness of Information Sources

To study how the controller agent’s trust in information sources affects its situation awareness, we ran the same scenario with varying trust values and \(\alpha = 0.1\). Table 4 describes the investigated test cases, and Table 5 shows the belief confidence values for each test case at \(t=5\).

It can be seen that in the first three test cases the confidence values of beliefs AOC-B9 and AOC-B10 are much higher than those of AOC-B5 and AOC-B6. In these cases the controller’s trust in the flight planning controller is equal to one (\(T_{AOC, PC, Weather}(4)=1.0\)). Since the airline operation controller fully trusts the planning controller, the information about the weather conditions in Sydney has a negative influence on the confidence of AOC-B5 and AOC-B6 while activating AOC-B9 and AOC-B10. In the fourth and fifth test cases, however, the confidence values of AOC-B5 and AOC-B6 are slightly higher than those of AOC-B9 and AOC-B10; that is, the airline operation controller is more inclined to allow the flight to continue to Canberra. In these cases the airline operation controller’s trust in the flight planning controller is low, so the planning controller’s information does not contribute positively to the activation of AOC-B9 and AOC-B10. This effect is not observed in the last test case, because there the airline operation controller’s trust in OCC maintenance is low. This low trust value decreases the confidence values of AOC-B5 and AOC-B6 while increasing those of AOC-B9 and AOC-B10. These results support our hypothesis that an agent’s trust in its information sources plays a crucial role in the development of its situation awareness.
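The role of trust in these results can be illustrated by scaling each report’s contribution to a belief by the agent’s trust in its source. The sketch below is hypothetical throughout: the two beliefs, the source names, the influence weights, the trust values and the logistic combination function are assumptions, and the trust values are not those of Table 4. The belief "continue" plays the role of AOC-B5/AOC-B6 and is supported by a maintenance report; the belief "alternative" plays the role of AOC-B9/AOC-B10 and is supported by the planning controller’s weather report, which also inhibits "continue".

```python
import math

REPORTED = 1.0  # confidence each hypothetical source attaches to its own report

# Hypothetical influence links: belief -> [(source, weight)].
# A positive weight supports the belief; a negative weight inhibits it.
LINKS = {
    "continue": [("maintenance", 1.0), ("planning", -0.5)],  # role of AOC-B5/AOC-B6
    "alternative": [("planning", 1.0)],                      # role of AOC-B9/AOC-B10
}


def combine(x: float, beta: float = 2.5, gamma: float = 5.0) -> float:
    """Assumed logistic combination function with threshold beta / gamma = 0.5."""
    return 1.0 / (1.0 + math.exp(beta - gamma * x))


def run(trust: dict[str, float], steps: int = 5, alpha: float = 0.1) -> dict[str, float]:
    """Update both beliefs for a few steps; each report enters a belief's
    input scaled by the agent's trust in the report's source."""
    conf = {belief: 0.5 for belief in LINKS}
    for _ in range(steps):
        for belief, links in LINKS.items():
            x = sum(trust[src] * w * REPORTED for src, w in links)
            x = min(1.0, max(0.0, x))  # keep the aggregated input in [0, 1]
            conf[belief] = alpha * conf[belief] + (1 - alpha) * combine(x)
    return conf


cases = [
    ("planning fully trusted", {"planning": 1.0, "maintenance": 0.6}),
    ("planning weakly trusted", {"planning": 0.3, "maintenance": 0.6}),
    ("maintenance weakly trusted", {"planning": 0.3, "maintenance": 0.2}),
]
for label, trust in cases:
    result = run(trust)
    print(label, {belief: round(c, 2) for belief, c in result.items()})
```

With these assumed values, the alternative dominates when the planning controller is fully trusted, continuing dominates when that trust is low, and the alternative dominates again when trust in maintenance is low as well, which is qualitatively similar to the pattern described for Table 5.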

5 Conclusion

In this paper, a novel computational model of situation awareness for an intelligent agent has been proposed. The model is based on Endsley’s theoretical three-level SA model [5] and the computational SA model of Hoogendoorn et al. [10]. The contribution of this work is threefold. First, a computational model of situation awareness that incorporates interpersonal trust is introduced. Second, a case study has been conducted to validate the applicability of the model in real-life domains. Lastly, it has been shown for the given scenario that the trustworthiness of information sources has a significant impact on the agent’s situation awareness and, by implication, on its decision-making.

The proposed model can be used to develop a decision support tool that helps human operators in complex and dynamic sociotechnical systems increase the quality of their decisions. To develop such a tool, the following aspects need to be addressed: (1) representing extensive domain knowledge to capture the diverse situations over which SA may be formed, (2) perceiving inputs from the environment, (3) reasoning about incoming information, and (4) foreseeing the consequences of the actions to be taken. The focus of this paper was on individual SA. As future work, we will study SA in teams, since team SA has a significant impact on team performance. Another challenge for our future research is measuring individual and team SA in real-life settings.

References

Aydoğan, R., Lo, J.C., Meijer, S.A., Jonker, C.M.: Modeling network controller decisions based upon situation awareness through agent-based negotiation. In: Meijer, S.A., Smeds, R. (eds.) ISAGA 2013. LNCS, vol. 8264, pp. 191–200. Springer, Heidelberg (2014)

Barrick, M.R., Mount, M.K.: The big five personality dimensions and job performance: a meta-analysis. Pers. Psychol. 44(1), 1–26 (1991)

Bruce, P.J.: Understanding Decision-Making Processes in Airline Operations Control. Ashgate, Burlington (2011)

Byrne, D., Clore, G., Smeaton, G.: The attraction hypothesis: do similar attitudes affect anything? J. Pers. Soc. Psychol. 51(6), 1167–1170 (1986)

Endsley, M.R.: Towards a theory of situation awareness in dynamic systems. Hum. Factors 37(1), 32–64 (1995)

Endsley, M.R.: Theoretical underpinnings of situation awareness: a critical review. In: Endsley, M.R., Garland, D.J. (eds.) Situation Awareness Analysis and Measurement. Lawrence Erlbaum Associates, Mahwah (2000)

Falcone, R., Castelfranchi, C.: Generalizing trust: inferencing trustworthiness from categories. In: Falcone, R., Barber, S.K., Sabater-Mir, J., Singh, M.P. (eds.) TRUST 2008. LNCS (LNAI), vol. 5396, pp. 65–80. Springer, Heidelberg (2008)

Farrahi, K., Zia, K., Sharpanskykh, A., Ferscha, A., Muchnik, L.: Agent perception modeling for movement in crowds. In: Demazeau, Y., Ishida, T., Corchado, J.M., Bajo, J. (eds.) PAAMS 2013. LNCS (LNAI), vol. 7879, pp. 73–84. Springer, Heidelberg (2013)

Golbeck, J.A.: Computing and applying trust in web-based social networks. Ph.D. thesis, College Park, MD, USA (2005)

Hoogendoorn, M., Van Lambalgen, R.M., Treur, J.: Modeling situation awareness in human-like agents using mental models. In: Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence, IJCAI 2011, vol. 2, pp. 1697–1704. AAAI Press (2011)

Josang, A., Ismail, R., Boyd, C.: A survey of trust and reputation systems for online service provision. Decis. Support Syst. 43(2), 618–644 (2007). Emerging Issues in Collaborative Commerce

Klein, G.: Sources of Power: How People Make Decisions. MIT Press, Cambridge (1998)

Morita, P.P., Burns, C.M.: Understanding interpersonal trust from a human factors perspective: insights from situation awareness and the lens model. Theor. Issues Ergon. Sci. 15(1), 88–110 (2014)

O’Donovan, J., Jones, R.E., Marusich, L.R., Teng, Y., Gonzalez, C., Höllerer, T.: A model-based evaluation of trust and situation awareness in the diner’s dilemma game. In: Proceedings of the 22nd Behavior Representation in Modeling & Simulation (BRIMS) Conference, pp. 11–14 (2013)

Parasuraman, R., Sheridan, T.B., Wickens, C.D.: Situation awareness, mental workload, and trust in automation: viable, empirically supported cognitive engineering constructs. J. Cogn. Eng. Decis. Making 2(2), 140–160 (2008)

Rouse, W., Morris, N.: On looking into the black box: prospects and limits in the search for mental models. Technical report 85–2, Center for Man-Machine Systems Research (1985)

Salmon, P.M., Stanton, N.A., Walker, G.H., Jenkins, D.P.: What really is going on? Review of situation awareness models for individuals and teams. Theor. Issues Ergon. Sci. 9(4), 297–323 (2008)

Sensoy, M., Yilmaz, B., Norman, T.J.: STAGE: stereotypical trust assessment through graph extraction. Comput. Intell. (2014)

So, R., Sonenberg, L.: Situation awareness in intelligent agents: foundations for a theory of proactive agent behavior. In: IEEE/WIC/ACM International Conference on Intelligent Agent Technology (IAT 2004), pp. 86–92 (2004)

Teng, Y., Jones, R., Marusich, L., O’Donovan, J., Gonzalez, C., Höllerer, T.: Trust and situation awareness in a 3-player diner’s dilemma game. In: 2013 IEEE International Multi-Disciplinary Conference on Cognitive Methods in Situation Awareness and Decision Support (CogSIMA), pp. 9–15, February 2013

Tenney, Y.J., Pew, R.W.: Situation awareness catches on: what? so what? now what? Rev. Hum. Factors Ergon. 2, 1–34 (2006)

Winter, F., Kataria, M.: You are who your friends are: an experiment on trust and homophily in friendship networks. Jena Economic Research Papers 2013–044, Friedrich-Schiller-University Jena, Max-Planck-Institute of Economics, October 2013

Acknowledgments

We thank Soufiane Bouarfa for his involvement and contribution in the development of the paper and especially the case study.

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Aydoğan, R., Sharpanskykh, A., Lo, J. (2015). A Trust-Based Situation Awareness Model. In: Bulling, N. (ed.) Multi-Agent Systems. EUMAS 2014. Lecture Notes in Computer Science, vol 8953. Springer, Cham. https://doi.org/10.1007/978-3-319-17130-2_2

DOI: https://doi.org/10.1007/978-3-319-17130-2_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-17129-6

Online ISBN: 978-3-319-17130-2

eBook Packages: Computer Science, Computer Science (R0)