Abstract

The contribution deals with sequential distributed estimation of global parameters of normal mixture models, namely mixing probabilities and component means and covariances. The network of cooperating agents is represented by a directed or undirected graph, consisting of vertices taking observations, incorporating them into own statistical knowledge about the inferred parameters and sharing the observations and the posterior knowledge with other vertices. The aim to propose a computationally cheap online estimation algorithm naturally disqualifies the popular (sequential) Monte Carlo methods for the associated high computational burden, as well as the expectation-maximization (EM) algorithms for their difficulties with online settings requiring data batching or stochastic approximations. Instead, we proceed with the quasi-Bayesian approach, allowing sequential analytical incorporation of the (shared) observations into the normal inverse-Wishart conjugate priors. The posterior distributions are subsequently merged using the Kullback–Leibler optimal procedure.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Key words

1 Introduction

The rapid development of ad-hoc networks and the emergence of the so-called big data phenomenon have brought new challenges for distributed statistical data processing. For instance, the processing often needs to be decentralized, i.e. without any dedicated unit in the network. Instead, all agents are responsible for (i) taking measurements, (ii) processing them, and (iii) sharing the statistical knowledge about the (usually global) inferred parameters. In addition, the estimation should run online in many cases. This means to take observations of a dynamic process and incorporate them sequentially into the shared knowledge. This often disqualifies the popular sequential Monte Carlo (MC) approach due to the associated high computational burden. Excellent surveys on distributed estimation are the recent papers by Sayed [10] (non-MC) and Hlinka et al. [5] (MC-based).

Despite the great potential of the Bayesian paradigm in this field, its adoption is still rather the exception than the rule. From the probabilistic viewpoint, the resulting “classical” (that is, non-Bayesian) algorithms often suffer from statistical inconsistencies. For instance, point estimators are often combined without reflecting the associated uncertainty, which may lead to erroneous estimates. The first author’s work [1] aims at partially filling this gap. It proposes a fully Bayesian approach to decentralized distributed estimation with fusion, based on minimizing the Kullback–Leibler divergence. The present contribution extends the results to the case of mixture models, covered for the static cases, e.g., in [3, 8, 13].

The novelty of the proposed framework lies in a fully analytical Bayesian processing of observations and shared knowledge about the estimated parameters. To this end, the underlying theory relies on the quasi-Bayesian approach, proposed by Smith, Makov, and Titterington [11, 12] and followed by Kárný et al. [6], whose approach is adopted here. It provides analytical tractability of mixture inference by relying on point estimators where necessary. Though we focus on normal mixtures, the results are applicable to homogeneous mixtures of exponential family distributions.

2 Quasi-Bayesian Estimation of Mixture Models

Consider an observable time series {Y t , t ∈ ℕ} with Y t ∈ ℝ n following a normal mixture distribution

where N(μ k , Σ k ) denotes the kth component density, namely a normal distribution with mean vector μ k ∈ ℝ n and covariance matrix Σ k ∈ ℝ n×n, in the latter notation summarized by θ k = {μ k , Σ k }. The nonnegative variables ϕ k taking values in the unit K-simplex are the component probabilities. The number of components K is assumed to be known a priori. Furthermore, the notation θ = {θ 1, …, θ K }, ϕ = {ϕ 1, …, ϕ K } is used.

Let p k (y k | θ k ) be the probability density function of the kth component, yielding the mixture density of the form

At each time instant t the observation y t is generated by the k t th component p k (y t | θ k ), selected with probability ϕ k ,

where S k, t is the indicator function of the active component

In other words, S t = (S 1, t , …, S K, t ) can be viewed as a vector with 1 on the k t th position and zeros elsewhere, and hence follows the multinomial distribution Multi(1, ϕ).

From the Bayesian viewpoint the topological property of ϕ is crucial, as it allows its modelling with the Dirichlet distribution with parameters κ 1, …, κ K ,

conjugate to the multinomial distribution of S t . Sequential estimation of each single component mean and covariance can then proceed with the conjugate normal inverse-Wishart distribution (or normal inverse-gamma in the univariate case),

Exact knowledge of S t would make the Bayesian inference of both the component parameters μ k , Σ k and mixing probabilities ϕ easily tractable, since the product (3.3) simplifies to a single density and a single component probability. Likewise, the Bayesian inference of mixing probabilities ϕ is easy under known components, as the detection of the active one is a relatively simple hypotheses testing problem, see, e.g., [4]. However, our attention is shifted towards estimating both component parameters μ, Σ and mixing probabilities ϕ. For this sake, we need to derive the Bayesian update

where the joint prior distribution is assumed to be

The independence of ϕ and θ allows tractable computation of the posterior distribution. Indeed, this assumption is not quasi-Bayes specific.

In this case, Kárný et al. [6] propose to rely on the approach of Smith, Makov, and Titterington [11, 12] and replace the latent indicators S k, t defined in Eq. (3.4) by their respective point estimates with respect to ϕ k and θ k of the form

where

is the predictive distribution (under normal inverse-Wishart prior it is a Student’s t distribution). To summarize, the estimation of the indicator S k, t of the active component k is based on (i) testing the component membership based on the predictive likelihood (3.6), and (ii) the estimated probability of the particular component \(\mathbb{E}[\phi _{k}\vert \cdot ]\) in (3.5).

The quasi-Bayesian update then takes the weighted form of the regular update under known S t ,

If the component density is rewritten as the exponential family and the prior density is conjugate, then, as shown in the Appendix, the update of the relevant hyperparameters is particularly easy.

3 Distributed Estimation

Assume that the distributed estimation runs in a network represented by a directed or undirected connected graph G(V, E) consisting of a set of vertices V = { 1, …, N} (also called nodes or agents) and a set E of edges, defining the graph topology. The vertices n ∈ V are allowed to communicate with adjacent vertices. For a fixed vertex n, these neighbors form a complete bipartite subgraph (every neighboring vertex is connected with n) with radius 1, diameter at most 2 and of type star (unless the vertex n is of degree 1), where n is the central vertex and all other vertices peripheral. The set of vertices of this subgraph is denoted by V n .

The vertices independently observe the process {Y t , t ∈ ℕ}, taking observations y t (n), n ∈ V. These are shared within V n in the sense that each vertex n has access to y t (j) of vertices j ∈ V n and incorporates them according to the quasi-Bayesian estimation theory outlined in the previous section. That is, each node n ends with the joint posterior density

resulting from the number of \(\mathop{card}\nolimits (V _{n})\) updates of the form (3.7) and (3.8). Here, tilde denotes the statistical knowledge comprising the V n ’s information relevant to the particular variable. This step is called adaptation, e.g., [10].Footnote 1

3.1 Combination of Estimates

In the combination step [10], the vertices n ∈ V access V n ’s posterior distributions (3.9) resulting from the adaptation,

Now the goal is to represent (i.e., approximate) them by a single joint posterior \(\widetilde{\pi }_{\phi,\theta }^{(n)}\) parameterizing the mixture (3.1) in consideration. To this end, we adopt the Kullback–Leibler divergence [7] defined in the Appendix, and seek for \(\widetilde{\pi }_{\phi,\theta }^{(n)}\) satisfying

where \(\alpha _{nj} = 1/(\mathop{card}\nolimits (V _{n}))\) are nonnegative uniform weights assigned to nodes j ∈ V n summing to unity. Other weight choices, e.g. reflecting properties of the neighboring vertices are possible as well.

Let us impose an additional assumption simplifying the theory: identical order of component parameters and significantly overlapping densities π ϕ, θ (j) of all j ∈ V n . This means that the order of the components and their parameterization agrees at all vertices in V n (and hence V ). This assumption can be easily removed by incorporating detection of similar posterior distributions or enforced by starting from identical initial priors.

We exploit the following general proposition proved, e.g., in [1]. Although we consider exponential family distributions (where it provides analytically tractable results), the proposition is not limited to them.

Proposition 1.

Let π ϕ,θ (j) be the posterior probability density functions of vertices j ∈ V n and α nj their weights from the unit card(V n )-simplex. Their approximation by a single density \(\widetilde{\pi }_{\phi,\theta }^{(n)}\) optimal in the Kullback–Leibler sense (3.10) has the form

The resulting approximate posterior density hence virtually parameterizes a much richer mixture, however, the individual densities overlap by the given assumption. Then Proposition 1 gives a method for reduction to the parametrization of K components,

which, due to the structure of the conjugate priors (see Appendix) and component ordering yields

for the hyperparameters ξ, ν and κ of the prior distributions for θ and ϕ, respectively. The resulting KL-optimal posterior is then again conjugate to the model and can be used for the subsequent adaptation step.

4 Simulation Example

The simulation example deals with estimating a three-component normal mixture model, for simplicity univariate of the form

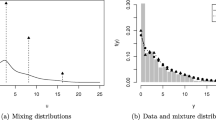

with unknown means and variances. The graph G(V, E), whose scheme is depicted together with the components and samples in Fig. 3.1, consists of a set of vertices V = { 1, …, 8}∖{6}. The sixth vertex is disconnected and serves for comparison. The vertices n ∈ V ∪{ 6} take observations y t (n) with t = 1, …, 300. Clearly, one would expect relatively easy identification of the leftmost component, while the other two may be problematic due to their closeness.

The quasi-Bayesian estimation of components k ∈ { 1, 2, 3} exploits the conjugate normal inverse-gamma prior NIG(μ k , σ k ; m k , s k , a k , b k ) = N(μ k | σ k 2; m k , σ 2 s k ) × IG(σ k 2; a k , b k ) with initial hyperparameters m k set to 0, 3, and 6, respectively; the other hyperparameters are \(s_{k} = 1,a_{k} = 2,b_{k} = 2\) for all k. The prior for the component probabilities is \(\phi \sim \mathrm{ Dir}(\frac{1} {3}, \frac{1} {3}, \frac{1} {3})\). This initialization is identical across the graph.

The progress of the point estimates of μ k and σ k is depicted in Fig. 3.2 for the isolated vertex 6 (left) and the randomly chosen vertex 4 (right). The point estimates of μ k converge relatively well in both cases, however, the variance estimates converge well only in the case of the distributed estimation (with the exception of σ 1 2). This is due to the much richer data available for the interconnected vertices. The mean squared errors (MSE) of the final estimates are given in Table 3.1.

5 Conclusion

The quasi-Bayesian method for analytically tractable sequential inference of parameters of probabilistic mixtures has been extended to the case of distributed estimation of normal mixture model with unknown mixing probabilities and component parameters. Here, distributed means that there is a graph (network) of cooperating vertices (nodes, agents) sharing their statistical knowledge (observations and estimates) with a limited subset of other vertices. This knowledge is combined at each vertex: the observations are incorporated by means of the Bayes’ theorem, the estimates are combined via the Kullback–Leibler optimal rule.

The main advantage of the method is its simplicity and scalability. Unlike Monte Carlo approaches, it is computationally very cheap. The authors have recently shown in [2] that this method is suitable for the whole class of mixture models consisting of exponential family distributions and their conjugate prior distributions.

One difficulty associated with the method is common for most mixture estimation methods, namely the initialization. In addition, merging and splitting of components after the combination of estimates would significantly enhance the suitability of the approach for dynamic cases. These topics remain for further research.

Notes

- 1.

The terms “adaptation” and “combination” were introduced by [10]. We adopt them for our Bayesian counterparts.

References

Dedecius, K., Sečkárová, V.: Dynamic diffusion estimation in exponential family models. IEEE Signal Process. Lett. 20(11), 1114–1117 (2013)

Dedecius, K., Reichl, J., Djurić, P.M.: Sequential estimation of mixtures in diffusion networks. IEEE Signal Process. Lett. 22(2), 197–201 (2015)

Dongbing, Gu.: Distributed EM algorithm for Gaussian mixtures in sensor networks. IEEE Trans. Neural Netw. 19(7), 1154–1166 (2008)

Frühwirth-Schnatter, S.: Finite Mixture and Markov Switching Models. Springer, London (2006)

Hlinka, O., Hlawatsch, F., Djurić, P.M.: Distributed particle filtering in agent networks: a survey, classification, and comparison. IEEE Signal Process. Mag. 30(1), 61–81 (2013)

Kárný, M., Böhm, J., Guy, T.V., Jirsa, L., Nagy, I., Nedoma, P., Tesař, L.: Optimized Bayesian Dynamic Advising: Theory and Algorithms. Springer, London (2006)

Kullback, S., Leibler, R.A.: On information and sufficiency. Ann. Math. Stat. 22(1), 79–86 (1951)

Pereira, S.S., Lopez-Valcarce, R., Pages-Zamora, A.: A diffusion-based EM algorithm for distributed estimation in unreliable sensor networks. IEEE Signal Process. Lett. 20(6), 595–598 (2013)

Raiffa, H., Schlaifer, R.: Applied Statistical Decision Theory (Harvard Business School Publications). Harvard University Press, Cambridge (1961)

Sayed, A.H.: Adaptive networks. Proc. IEEE 102(4), 460–497 (2014)

Smith, A.F.M., Makov, U.E.: A Quasi-Bayes sequential procedure for mixtures. J. R. Stat. Soc. Ser. B (Methodol.) 40(1), 106–112 (1978)

Titterington, D.M., Smith, A.F.M., Makov, U.E.: Statistical Analysis of Finite Mixture Distributions. Wiley, New York (1985)

Weng, Y., Xiao, W., Xie, L.: Diffusion-based EM algorithm for distributed estimation of Gaussian mixtures in wireless sensor networks. Sensors 11(6), 6297–316 (2011)

Acknowledgements

This work was supported by the Czech Science Foundation, postdoctoral grant no. 14–06678P. The authors thank the referees for their valuable comments.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix

Appendix

Below we give several useful definitions and lemmas regarding the Bayesian estimation of exponential family distributions with conjugate priors [9]. The proofs are trivial. Their application to the normal model and normal inverse-gamma prior used in Sect. 3.4 follows.

Definition 1 (Exponential family distributions and conjugate priors).

Any distribution of a random variable y parameterized by θ with the probability density function of the form

where f, g, η, and T are known functions, is called an exponential family distribution. η ≡ η(θ) is its natural parameter, T(y) is the (dimension preserving) sufficient statistic. The form is not unique.

Any prior distribution for θ is said to be conjugate to p(y | θ), if it can be written in the form

where q is a known function and the hyperparameters ν ∈ ℝ + and ξ is of the same shape as T(y).

Lemma 1 (Bayesian update with conjugate priors).

Bayes’ theorem

yields the posterior hyperparameters as follows:

Lemma 2.

The normal model

where μ,σ 2 are unknown can be written in the exponential family form with

Lemma 3.

The normal inverse-gamma prior distribution for μ,σ 2 with the (nonnatural) real scalar hyperparameters m, and positive s,a,b, having the density

can be written in the prior-conjugate form with

Lemma 4.

The Bayesian update of the normal inverse-gamma prior following the previous lemma coincides with the ‘ordinary’ well-known update of the original hyperparameters,

Definition 2 (Kullback–Leibler divergence).

Let f(x), g(x) be two probability density functions of a random variable x, f absolutely continuous with respect to g. The Kullback–Leibler divergence is the nonnegative functional

where the integration domain is the support of f. The Kullback–Leibler divergence is a premetric; it is zero if f = g almost everywhere, it does not satisfy the triangle inequality nor is it symmetric.

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Dedecius, K., Reichl, J. (2015). Distributed Estimation of Mixture Models. In: Frühwirth-Schnatter, S., Bitto, A., Kastner, G., Posekany, A. (eds) Bayesian Statistics from Methods to Models and Applications. Springer Proceedings in Mathematics & Statistics, vol 126. Springer, Cham. https://doi.org/10.1007/978-3-319-16238-6_3

Download citation

DOI: https://doi.org/10.1007/978-3-319-16238-6_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-16237-9

Online ISBN: 978-3-319-16238-6

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)