Abstract

Complexity science provides a general mathematical basis for evolutionary thinking. It makes us face the inherent, irreducible nature of uncertainty and the limits to knowledge and prediction. Complex, evolutionary systems work on the basis of on-going, continuous internal processes of exploration, experimentation and innovation at their underlying levels. This is acted upon by the level above, leading to a selection process on the lower levels and a probing of the stability of the level above. This could either be an organizational level above, or the potential market place. Models aimed at predicting system behaviour therefore consist of assumptions of constraints on the micro-level – and because of inertia or conformity may be approximately true for some unspecified time. However, systems without strong mechanisms of repression and conformity will evolve, innovate and change, creating new emergent structures, capabilities and characteristics. Systems with no individual freedom at their lower levels will have predictable behaviour in the short term – but will not survive in the long term. Creative, innovative, evolving systems, on the other hand, will more probably survive over longer times, but will not have predictable characteristics or behaviour. These minimal mechanisms are all that are required to explain (though not predict) the co-evolutionary processes occurring in markets, organizations, and indeed in emergent, evolutionary communities of practice. Some examples will be presented briefly.

1 Introduction

This paper will attempt to show how the ideas of complexity, evolutionary processes, uncertainty and innovations are all inextricably bound up together. If a system can be reduced to a set of fixed mechanical interactions, providing a predictable, stable and non-innovative future, then it is not complex, not evolutionary and not uncertain. It is also not interesting, and in addition not what we encounter and must deal with in real life. Ecologies, families, groups, neighbourhoods, firms, organizations, institutions, markets and technologies are all complex in themselves and also sit within and among other complex systems. Instead of ‘equilibrium’ we find a multi-level, co-evolutionary mass of interconnected learning entities, within which pending and actual innovation is a permanent feature (Allen 1990).

As a physicist I was let loose believing that things could be modelled – and that the way to do this was to:

1. Establish the components of the situation under study.
2. Try to take into account the interactions between these components.

The result would be a mechanical representation that would predict the behaviour of the system from any particular initial condition. Clearly, this kind of mechanical model is based on the renowned views of Newton, whose model of the planetary system revolutionised science and society. These ideas have permeated and driven science since then. The extraordinary success of technology then suggested that ‘scientific ideas’ could and should be used in biology, ecology and human systems, and that undoubtedly great things would be achieved. As a physicist, I also had been given a strong faith in the idea that: Components + Interactions = Predictive System.

Modelling, in my view, therefore appeared to provide the proper basis for decision making and policy support. Represent the situation as a system, calibrate it on reality, and then use it to predict the different possible impacts of particular interventions. This is probably the basic thought behind all scientific advice that is sought by decision and policy makers.

But people can think. They are not cogs in a machine, but people with differing roles and power, each of whom is attempting to decide what would be a good idea and how best to pursue it. And a social system is full of potential consumers and suppliers of products, services, policies and interventions. Each person is exploring and reflecting on their experiences and attempting to learn from them. This gives the system an underlying flexibility and inventiveness which means that a mechanical representation of average behaviours and types present at a given moment will inevitably fail over time. At any moment there will be average behaviours for particular sectors of activity, but over time competition and synergies within the system will lead to an evolution of the behaviours of the different players present. New technologies, new goals, new practices and new desires will grow in the system as some old ones disappear. Evolution will occur. Over time the differences between reality and any past, fixed, representation of behaviours will become large, and our actions erroneous. So, how do we construct an evolutionary model – a model capable of changing its own variables, interaction mechanisms and values of its underlying elements? In order to see this we need to study the assumptions that must be made in moving from ‘evolutionary reality’ to a ‘mechanical model’ of the behaviour of the current system.

2 Models: successive assumptions and uncertainties

What are the assumptions that we make in ‘modelling’ in order to ‘understand’ and predict the behaviour of the system under study? At a given moment we may identify the different types of element that are interacting, and make them ‘the’ variables of our model. The model dynamics change the numbers of these different types as a result of the interactions between, for example, the consumers, producers, products, services, prices and costs that are present initially. If the model is mechanical then the choice of variables, the interactions and parameters will remain fixed, while reality will evolve. Changing patterns of desirability, of cost and profit, and of production and sales will lead to changing patterns of consumption, and to the failure of some firms and the growth of others. The real system will reflect the differential success or failure of innovations, firms and activities. So we need to take into account the fact that each population or economic sector is itself made up of diverse elements and that this micro-diversity changes over time as a result of the successes and failures that actually occur. These outcomes reflect both the detailed characteristics of the individuals or firms and the luck of events. If some characteristics make an individual or firm more vulnerable than others, for example, then the more vulnerable will be the first to go. Therefore, over time the numbers of vulnerable individuals decrease with respect to the fitter ones, thus changing the nature of the ‘average’ individual of that type. This means that within each type of variable identified in a model, the mechanisms of experimental micro-diversity within it will automatically provide exploratory, innovating capacity that will allow adaptation of existing types and also produce entirely new variables from successful, innovative types.

Clearly, in order to predict the exact behaviour of a complex system we might think we need to know the different types of element present, the particular micro-diversity within each of these, their precise locations and interaction characteristics and their internal moods and workings, that is, an infinite depth of knowledge. But of course, not only is this not possible, but it would also fail to predict behaviour! This is because of ‘emergence’. In reality, elements join together to form collective entities and things around us are characterized by structure and organization at various scales. Over time, the performance of the system results not just from the elemental behaviours but also from the structures of which they are part. The phenotype is not the genotype. Structures with various forms and symmetries possess emergent capabilities which accord differential successes over others. Micro-diversity includes these different collective entities and organizations and so innovations occur at different levels of structure, breaking previous symmetries with consequences that are completely unknowable! Life itself is the result of the emergent properties of folded macro-molecules such as proteins, and their emergent capacity to reproduce imperfectly.

Molecules, elements and systems can adopt new morphologies that break some previous symmetry, leading to emergent features, capabilities, and functionalities at the level above. New variables (new types) can occur and occupy new dimensions of ‘character space’. Any particular mathematical model or representation of a system, developed at a given moment, will be in terms of the existing current taxonomy and capabilities. But in reality, both the capabilities and the taxonomy will themselves be evolving and changing over time. This is why ‘reality and the model’ will diverge over time! As the wonderful example of origami demonstrates, not only can changes occur that modify the average interaction parameters between individuals, but ‘folding’ (new forms, technologies, techniques and practices) can also lead to entirely new emergent properties and dimensions of performance (Allen 1982). This means that the assumption of structural stability cannot be taken for granted.

And selection may depend on the emergent properties at the ‘upper level’ and not be able to select upon exploratory changes occurring at the lower level.

In the case of economic situations, interacting suppliers, distributors, retailers and potential consumers will over time drive the evolution of products, their costs, qualities and capabilities. Human agents will be reflecting and experimenting with their various approaches, technologies and ideas in their different roles as producers, suppliers and consumers. Clearly, the strength and success of these innovative flows will depend on the ‘regimes’ operating in the organizations concerned. So, there are important local conditions that affect this. Firstly, there is the possibility of individuals being able to think new thoughts, and here the richness of the local cultural and technological environment will feed new ideas. Secondly, there has to be a freedom, and mechanisms, by which new ideas, techniques and technologies can be tried out and tested. These are underlying conditions of endogenous externalities that really will be important in allowing evolutionary change to occur. Again, any model that has fixed products, costs, prices and consumer preferences will rapidly be overtaken by the changing reality created by the evolving firms. Clearly the simplistic ideas of ‘homogeneous goods’ and of known pay-offs from different possible, as yet untried, strategies are quite ludicrous.

Schumpeter had the genius to see that markets were not mechanical systems of robotic producers and consumers giving rise to equilibrium markets of homogeneous goods with maximal profits for producers and maximum utility for consumers. He saw that what mattered was what came into the system and what went out. His phrase for this was “Creative Destruction”, and this is the same view as that coming out of complexity science. Both Darwin and Schumpeter saw and spoke a truth which has taken a long time for Science to come to terms with (Figs. 1, 2 and 3).

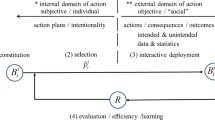

Today, we can examine carefully the successive assumptions that are made by people wishing to ‘understand’ and ‘model’ system behaviour, moving from totally undefined description through evolving structurally unstable systems, to deterministic mechanical representations to stationary states and attractors. In Fig. 4 as we move to the right from Reality (on the left) we come to successively simpler, more understandable and less detailed representations of that reality. And although Reality, on the left, may evolve qualitatively over time, adding new variables and structures, the models on the right of Fig. 4 cannot. They can only ‘run’ but not ‘evolve’. So if we monitor ‘reality’ against our models, then we shall be forced to create successive modified dynamical systems – which we shall only be able to do post-hoc. In other words, in an evolving world, our representations will always be pictures of the past!

In science, understanding and prediction are achieved in practice by making successive assumptions concerning the situation under study. This means that we exchange uncertainty about the system for uncertainty about the truth of our assumptions. If no assumptions are made then we are in the realm of narrative, where we are limited in our ability to learn or generalise or predict. When we make lots of assumptions we have simple clear predictive models but are uncertain whether our assumptions are still true. Uncertainty is an irreducible fact.

2.1 Assumption 1 – the boundary

The first assumption is that the problem we are interested in has a boundary distinguishing what is inside the system from what is in the environment. It may not be clear exactly where this lies, and so we really proceed by choosing an ‘experimental’ boundary and seeing whether the model that results is useful. This first assumption is actually tied up with the second, because the boundary can also be defined as the decision to include a particular element or variable in the ‘model’ or to leave it in the ‘environment’. One important result that we find for complex systems is that they can possess emergent behaviours that connect them to new variables and entities that previously were not in the system. In this way, one of the important properties of a complex system is that it can itself change the boundary of the system. This means that the modeller must be sufficiently humble to admit that the initial choice of a boundary might need revision at some later time. The point is that ‘modelling’ is an experiment that seeks a representation that is useful.

2.2 Assumption 2 – evolutionary complex models – qualitative change

The second assumption concerns ‘classification’, in which we decide to label the different types of thing that populate our system. These might be firms or organizations, or people classified according to their jobs, their skills or professional activities. In this way we specify the variables of the system, and we hope that the changing values of these variables will allow an answer to our questions about the system. We must face the fact that market systems, for example, have changed qualitatively over time and that the taxonomy of the system will also change in the future. Different elements that were present have disappeared and new ones will appear in the future.

Social and economic systems such as markets have all evolved and changed over time as innovations, new technologies, new practices and markets have emerged. New types and activities emerge and others leave. Over time qualitative evolution occurs and the system is not structurally stable, in that the variables, and therefore the equations describing the mechanisms and processes at work within it, can change. The key point here is whether or not the micro-diversity makes the system structurally unstable.

The point here is that instead of discussing ‘a’ mathematical model of a complex system, evolutionary change and structural instability lead us successively to a series of qualitatively different models. Even if a fixed set of dynamic equations describes a particular system for a while, over time structural instabilities occur and a new set of equations will be required to describe the new system. So, these first two assumptions do not lead to any single mathematical model of the system, but instead to an open, diverging series of possible mathematical models that correspond to an evolving system with changing taxonomy. If we think of our assumptions as corresponding to ‘constraints’ on what the elements in the system can do, then we see that with only these two first assumptions the elements, and the organizations they are part of, are still free to change. Thinking again of the origami forms, the differential success of the elements may well result from their emergent ‘upper level’ forms and features, and ‘selection’ therefore can no longer act directly on the lower level details of the paper from which the different origami forms are made. But this in turn means that explorations, experiments and errors at the lower level of the paper are not directly visible to selective forces coming from outside and therefore cannot be stopped from happening. Only if a lower level change affects the emergent, upper level features will external selection operate and lead to the amplification or rejection of a particular change. The internal freedom and the endogenous externalities lead to the emergence of new types of element, and to successive new systems. Structural stability is not guaranteed when that much freedom is present in the system.

This multi-level reality means that evolution cannot be stopped. This is because ‘selection’ cannot get at the internal levels directly, but only indirectly through the relative performance at the level above. So, providing there is diversity and freedom at the level of individuals, and people are not forced to keep silent or to conform to existing ideas, then new ideas will occur and some will be tried out. This may also be enhanced by the presence of rewards for successful new ideas and an ambience of encouragement for their conception. Since this will occur somewhere then any stable period of interacting forms will always eventually undergo an instability, after perhaps a long period of protected ‘exploration’ at the genotypic level below. We see that phenotypes carry the emergent properties of genotypes, and that selection only operates clearly on phenotypes. The phenotype ‘shields’ the genotype’s internal details from immediate view and in this way ensures that experimentation and drift will definitely occur at the lower level. This is an absolutely vital point. Novelties, potential innovations and new ideas need to be nurtured and protected within an organization until they are ready to face the outside world.

We see that internal ‘micro-diversity’ will be increased constantly at the lower level by probabilistic events, the encounters between different types of individual and local freedom. But it will be decreased through the differential success of the diverse individuals. And this is the mechanism by which the overall ‘average’ moves in the multidimensional space of the phenotypes, responding to changing environment and also co-evolving with the other types. The ‘dynamical system’ is changing qualitatively as well as quantitatively, as new variables appear and others disappear. The system is structurally unstable in that its very constitution can and does change over time.
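The interplay described above, in which imperfect copying creates micro-diversity and differential success removes it, can be illustrated with a deliberately minimal sketch. Everything in it is an assumption made for illustration and not part of the Evolutionary Drive models cited in this paper: a single numerical trait, a fixed hypothetical `optimum` standing in for the environment, Gaussian copying errors, and survival of the half of the offspring closest to that optimum.

```python
import random
import statistics

def evolve(generations=200, pop_size=200, mutation_sd=0.05, optimum=1.0, seed=0):
    """Track the mean and spread of one trait under imperfect copying and selection."""
    rng = random.Random(seed)
    # Everyone starts identical: no micro-diversity at all.
    population = [0.0] * pop_size
    history = []
    for _generation in range(generations):
        # Exploration: each individual leaves two imperfect copies of itself.
        offspring = [parent + rng.gauss(0.0, mutation_sd)
                     for parent in population for _copy in range(2)]
        # Differential success: the half closest to the assumed optimum survives.
        offspring.sort(key=lambda trait: abs(trait - optimum))
        population = offspring[:pop_size]
        history.append((statistics.mean(population), statistics.pstdev(population)))
    return history

history = evolve()
first_mean, first_sd = history[0]
last_mean, last_sd = history[-1]
print(f"generation 1:   mean={first_mean:.3f} spread={first_sd:.3f}")
print(f"generation 200: mean={last_mean:.3f} spread={last_sd:.3f}")
```

In this toy setting the ‘average’ type drifts towards the optimum even though no individual knows where it lies, and some spread persists because copying errors keep replenishing it. A fixed optimum is of course precisely the kind of simplification that the text warns will not hold in a co-evolving system.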

This is the reality of ‘creative destruction’ that Schumpeter discussed. Rather than markets being characterized by the optimized behaviour of producers and consumers that has already led to equilibrium, what really mattered over time was the inflow of innovations (new types) and the disappearance of old ones. So Schumpeter divined correctly that classical and neo-classical market views were really pitched at the wrong time-scale, and looked at the wrong things. What he understood was that what really mattered was the evolutionary process in which innovations, new types of economic activities and technologies invaded the system, usually replacing old ones.

2.3 Assumption 3 – probabilistic dynamics

But if an economist or academic wishes to make money from their models then they must come up with a predictive model. In moving to the right of our fundamental diagram of Fig. 4, the next assumption therefore is that our system is structurally stable: the variables, taxonomy and types of individual or agent do not change.

Our model will now describe the changes in numbers of a given set of types of individual or agent. It will be assumed that no new variables can emerge. The changing values of these variables result from the rates of production, sales, growth, declines and movements in and out of the system. But the underlying rates of the different microscopic, local events can only be represented by probabilities of such events occurring. These stochastic events lead to probabilistic equations—the Master or the Chapman-Kolmogorov equations. These govern the changing probability distribution of the different variables. They allow for the occurrence of all possible sequences of events, taking into account their relative probability, rather than simply assuming that only the most probable events occur (Fig. 5).
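For readers who have not met it, the Master equation referred to above has the following generic form for a system with discrete states. The notation is an assumption chosen for illustration and does not come from the models cited here: $\mathbf{x}$ is the vector of numbers of each type, $P(\mathbf{x}, t)$ is the probability of finding the system in state $\mathbf{x}$ at time $t$, and $W(\mathbf{x} \mid \mathbf{x}')$ is the transition rate from state $\mathbf{x}'$ to state $\mathbf{x}$.

```latex
\frac{\partial P(\mathbf{x}, t)}{\partial t}
  = \sum_{\mathbf{x}' \neq \mathbf{x}}
    \Bigl[ W(\mathbf{x} \mid \mathbf{x}')\, P(\mathbf{x}', t)
         - W(\mathbf{x}' \mid \mathbf{x})\, P(\mathbf{x}, t) \Bigr]
```

The first term collects the probability flowing into state $\mathbf{x}$ from every other state, and the second the probability flowing out of it, so that all possible sequences of events are weighted by their rates.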

The collection of all possible dynamical paths is taken into account in a probabilistic way. But for any single system this allows into our scientific understanding the vital notions of ‘freedom’, ‘luck’ and ‘uncertainty’ in its behaviour. Although a system that is initially not at the peak of probability will more probably move towards the peak, it can perfectly well move the other way; it just happens to be less probable that it will. A large burst of good or bad luck can therefore take any one system far from the most probable average, and it is precisely this constant movement that probes the stability of the most probable state. Such probabilistic systems can ‘tip’ spontaneously from one type of solution/attractor to another. It also points us towards the very important idea that the ‘average’ for a system should be calculated from the distribution of its actual possible behaviours, and not that the distribution of its behaviour should be calculated by simply adding a Gaussian distribution around the average. The Gaussian is a distribution much loved by economists which expresses the spread of random shots around a target. In reality though, the actual distribution is given by the full probabilistic dynamics and can be calculated precisely. It will in general have a complicated mathematical form, and will be changing over time according to the probabilistic dynamics.

2.4 Assumption 4: either a) or b)

a) Assume the Probability is Stationary:

The assumption that the probability distribution has reached a stationary state leads to the idea of non-linear dynamics producing ‘self-organized criticality’, and to the power-law structure that often characterizes such systems.

b) Probability remains sharply peaked: System Dynamics

Instead of a) we can look at the ‘average’ dynamics of the system and see where this leads. The full probabilistic dynamics is a rather daunting mathematical problem of the changing probability distributions over time. However, much of the uncertainty can be taken away as if by magic by changing slightly the question that is being asked of the model. The change is so slight that many people simply do not realize that the problem has been vastly simplified by the artifice of making this particular assumption.

Instead of asking ‘what can actually happen to this system?’ (requiring us to deal with all possible system trajectories according to their probability), we can ask ‘what will most probably happen?’, and then we have a much simpler problem. We do this by assuming that only the most probable events occur; that things actually happen at their average rates. Most people do not realize the magnitude of the difference between these two questions, but it is the difference between a heavy set of probabilistic equations describing the spreading and mixing of possible system trajectories into the future, and a representation in which the system moves cleanly into the future along a single, narrow trajectory. This simple deterministic trajectory appears to provide the perfect ability to make predictions and do ‘what if’ experiments in support of decision or policy making. It appears to tell us exactly what will happen in the future with or without whatever action we are considering taking. These sorts of models are called ‘system dynamics’ and are immensely appealing to decision makers since they seem to provide predictions and certainty.
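The size of the gap between these two questions can be made concrete with a small sketch. It is not one of the models discussed in this paper: it assumes a hypothetical population in which each individual has a fixed chance of giving birth and of dying in every time step, with illustrative rates (`BIRTH`, `DEATH`) and a small starting number (`START`). The deterministic function applies the average rates exactly, while the stochastic one treats every birth and death as a chance event.

```python
import random

BIRTH, DEATH, STEPS, START = 0.12, 0.10, 50, 5  # illustrative values only

def deterministic(n=START):
    """'System dynamics' view: every event happens at exactly its average rate."""
    for _step in range(STEPS):
        n = n * (1 + BIRTH - DEATH)
    return n

def stochastic(rng, n=START):
    """One possible history: each individual's birth and death is a chance event."""
    for _step in range(STEPS):
        births = sum(rng.random() < BIRTH for _individual in range(n))
        deaths = sum(rng.random() < DEATH for _individual in range(n))
        n = n + births - deaths  # deaths can never exceed n, so n stays >= 0
    return n

rng = random.Random(1)
outcomes = [stochastic(rng) for _run in range(2000)]
extinct = sum(1 for n in outcomes if n == 0)
mean_outcome = sum(outcomes) / len(outcomes)

print(f"deterministic final size: {deterministic():.1f}")
print(f"stochastic mean size:     {mean_outcome:.1f}")
print(f"stochastic range:         {min(outcomes)} to {max(outcomes)}")
print(f"runs that went extinct:   {extinct} of {len(outcomes)}")
```

Under these assumptions the average over many runs should stay close to the single deterministic value, yet individual histories scatter widely around it and some end in extinction, an outcome the deterministic trajectory cannot show at all.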

They appear to predict the future trajectory of the system and therefore seem attractive in calculating the expected outcomes of different possible actions or policies. They can show the effects of different interventions and allow ‘cost/benefit’ calculations. They can also show the factors to which the real situation is potentially very sensitive or insensitive, and this can provide useful information. But system dynamics models are deterministic; they still only allow for one solution or path from a particular starting point.

Despite the limitations of such models, their ‘predictions’ allow comparisons with reality and can reveal when the model is failing. Without this, we might not know that the system had changed! And so this provides a basis for a learning experience in which each model is constantly monitored against reality to see when something has changed.

2.5 Assumption 5 – solutions of the dynamical system

The final assumption that one can make to simplify a problem even further is to consider not the System Dynamics itself as it changes over time, but the possible long term solutions of the dynamical equations—the ‘attractors’ of the dynamics. This means that instead of studying how the system will run, one looks simply at where it might ‘run to’. Of course, non-linear interactions can lead to different possible ‘attractors’—equilibrium points, permanent cycles, or chaotic attractors. This could be useful information – at least for some time.
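As a minimal illustration of looking at where a system ‘runs to’ and not at how it runs, consider the one-variable equation dx/dt = x - x³. This equation is a textbook toy chosen for this sketch and is not taken from any model in this paper. It has two point attractors, at x = -1 and x = +1, separated by an unstable equilibrium at x = 0. The function name `settle`, the step size and the number of steps are likewise illustrative assumptions.

```python
def settle(x, dt=0.01, steps=5000):
    """Follow dx/dt = x - x**3 with simple Euler steps and return the end point."""
    for _step in range(steps):
        x += dt * (x - x**3)
    return x

# Different starting points end on different attractors.
for start in (-2.0, -0.1, 0.1, 2.0):
    print(f"start {start:+.1f} -> settles near {settle(start):+.3f}")
```

Starting points on either side of zero end near -1 or +1 respectively, so the long-term answer depends only on which basin of attraction the initial condition lies in. All information about the route taken, and about how long it took, has been discarded.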

But, of course, over longer times, the system will evolve and any set of equations will become untrue, and so the possible attractors will change too. In reality there is a trade-off between the utility and simplicity of predictions, and the strength of the assumptions that are required in order to make them. Of course, it is much easier to ‘sell’ a model that appears to make solid predictions. Because of this, scientists often have had to underplay the real level of uncertainty and doubt about the possible consequences of interventions, actions, technologies and practices, allowing the seemingly solid business plans and policy consequences to be presented as persuasively as possible. In any case, people usually wish to hear clear statements that imply knowledge and certainty and find the actual uncertainty and risk much more disturbing. People will often prefer a lie that comforts to the uncomfortable truth.

In giving advice it is of critical importance to know how long the assumptions made in the calculations may hold. This is how long the actual complexity and uncertainty of ‘reality’ can be expected to remain hidden from view. Of course, believers in ‘free markets’ can get around this problem by simply stating that whatever occurs is by definition the best possible outcome. But if we wish to give advice to particular players within the system then we will need to develop models that can explore possible futures as thoroughly as we can.

This section has focused on the importance of micro-diversity in a system, which provides an automatic range of possible innovations and responses to threats. At any given time, of course, we would not be able to ‘value’ different types of micro-diversity, since we would not know which would in fact be important for some future problem. A theory based on the evolutionary emergence of micro-diversity, and the way that evolution itself adjusts its range (The Theory of Evolutionary Drive) was developed some time ago (Allen and McGlade 1987; Allen 1988) but has not been much commented upon, even by evolutionary economists. Instead of a complex system being successfully described by any fixed set of components and mechanisms we see that the system of components and mechanisms is not fixed, but is itself changing with the events that occur. As the system runs, so it is changed by its running.

3 Modelling human systems

Behaviours, practices, routines and technologies are invented, learned and transmitted over time between successive actors and firms, and we shall discuss how the principles of Evolutionary Drive can be used to understand them.

3.1 Emergent market structure

Since the “invisible hand” of Adam Smith, the idea of self-organization has been present in economic thought (e.g. Veblen (1898)). However, towards the end of the 19th century mainstream economics adopted ideas from equilibrium physics as the basis for understanding. This led us to neo-classical economics that was strong on very general and rigorous theorems concerning artificial systems, but rather weak on dealing with reality in practice. Today, with the arrival of computers able to “run” systems instead of us having to solve them analytically, interest is burgeoning in complex systems simulations and modelling. Complex systems thinking offers us an integrative paradigm, in which we retain the fact of multiple subjectivities, differing perceptions and views, and indeed see this as part of the complexity, as a source of creative interaction and of innovation and change. Building the model is in itself extremely informative—since it shows us mechanisms and ideas that were not apparent.

For example, in building the model we quickly find that a decision rule that expands production when there are profits and decreases it when there are losses will not allow firms to launch a new product, since every new product must start with an investment - a loss. However, in the real world, firms are created and new products and services are developed. Therefore the “equation” governing the increase or decrease of production volume cannot be based on the actual profits made instantaneously. This point is discussed in (Allen and Strathern 2004).
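The difficulty with a purely profit-following rule can be seen in a few lines. The sketch below is not the equation used in the cited model. It assumes a hypothetical firm that starts from zero output, sells everything it produces at a fixed price, and carries a fixed cost every period. All names and numbers are illustrative.

```python
def run(rule, periods=20, fixed_cost=100.0, unit_cost=2.0, price=5.0, step=10.0):
    """Apply a production-adjustment rule to a firm that starts from zero output."""
    output = 0.0
    for _period in range(periods):
        # Assumed: everything produced is sold at the fixed price.
        profit = output * (price - unit_cost) - fixed_cost
        output = max(output + rule(profit) * step, 0.0)
    return output

def follow_profit(profit):
    """The rule from the text: expand on a profit, contract on a loss."""
    return 1.0 if profit > 0 else -1.0

def invest_anyway(profit):
    """Contrast case (an assumption): keep expanding whatever the current result."""
    return 1.0

print("follow_profit final output:", run(follow_profit))
print("invest_anyway final output:", run(invest_anyway))
```

With the profit-following rule the firm makes a loss in its first period, because the fixed cost is incurred before anything is sold, and so it never leaves zero output. The contrast rule accepts early losses and grows past the break-even volume, which is why some form of investment ahead of profits has to be allowed for in the model.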

The next idea we could use in the model could be that an agent would use “expected” profits to adjust their production volume. So, firms moving into a new market area must be doing so because they think that, on balance, their investment cost will be more than offset by future profits. However, if we try to put this in our model we find that it is actually impossible for an agent to calculate expected profits for different pricing strategies because it does not know the strategies of other firms. Profits in each firm will depend on the products and prices of other firms and none of them knows what the others will do.

Of course we could use the sort of neo-classical economics idea which would say that if firms are present then they must be operating with a strategy that maximises profits. And if no firms ever went bankrupt then we might have to accept such an idea—but in reality we know that many firms do go bankrupt and therefore cannot have been operating at an optimal strategy. An examination of the statistics concerning firm failures (Foster and Kaplan 2001; Ormerod 2005) shows us that whatever it is that entrepreneurs or firms believe, they are quite often completely wrong. The bankruptcies, failure rates and life expectancies of firms all attest to the fact that the beliefs of the founders, managers or investors are often not correct. In trying to build our model we are faced with the fact that firms cannot know what strategy will maximize profits. The market is not the theatre of perfect knowledge but instead is the theatre of possible learning.

By participating, players may find strategies, products, and mark-ups that work. Schumpeter (1962) was correct. The actual market is a temporary system of interacting firms that have entered the market and have not yet gone bankrupt. Some firms are growing and others shrinking. But with the entry into the market of new firms and products will come innovations and innovative organizations, and so over time the ‘bar’ will be raised by successive ‘generations’ of firms. Instead of supposing ‘magical entrepreneurs and consumers’ with perfect information and knowledge, our model shows us how real agents may behave with knowledge limited to what is realistically possible. They cannot calculate strategies and behaviours that fulfil (magically) the assumptions of (touchingly naïve) neo-classical economists. Our model shows us the many possible market trajectories into the future. None of these corresponds to a ‘global’ optimum (maximum profits and utility) and indeed there is no global agent to oversee the process. Each different trajectory is a possible future history of the system and will bring corresponding winners and losers, and particular patterns of strategies, imitations and routines.

The complex, evolutionary market model has been presented before (Allen et al. 2007a; Allen 2001).

Figure 6 shows us the model’s structure. There can be any number of interacting firms, but in the examples we employ there will be up to 18 present at any given moment. The internal structure of each firm is represented in the illustration labelled “firm 1”. Production has fixed and variable costs, that depend on the quality of the product. It also needs sales staff to sell the stock to potential customers. On the right of the figure, there are three different types of potential customer, and we have chosen here to distinguish between three groups differing in their price sensitivity. The point is that potential customers are sensitive to the price/quality of the different products on offer, and so will be attracted differentially to the different firms competing in the market place, thus creating the ‘selection mechanism’. Profits from sales allow increased production and pay off any debts. In this way, our model provides an evolutionary theatre within which competing and complementary strategies are generated, tested and retained or rejected.
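The selection mechanism can be illustrated with a minimal sketch. It is not the demand equation of the published model: the attractiveness form `quality / price**sensitivity`, the sensitivity values and all names are assumptions chosen only to show how groups with different price sensitivity are attracted differentially to the same set of firms.

```python
def market_shares(firms, price_sensitivity):
    """Split one customer group between firms in proportion to attractiveness.

    firms: list of (quality, price) tuples.
    price_sensitivity: exponent > 0; larger values penalise high prices more.
    The form quality / price**sensitivity is an illustrative assumption.
    """
    attractiveness = [q / (p ** price_sensitivity) for q, p in firms]
    total = sum(attractiveness)
    return [a / total for a in attractiveness]


# Two hypothetical firms: a cheap basic product and a dearer high-quality one.
firms = [(1.0, 1.0), (3.0, 2.0)]

# Three hypothetical customer groups differing only in price sensitivity.
groups = {"low": 0.5, "medium": 1.5, "high": 3.0}
shares = {name: market_shares(firms, s) for name, s in groups.items()}
```

Under these assumed values the high-quality firm takes most of the least price-sensitive group and a minority of the most price-sensitive one, so the same pair of strategies is ‘selected’ differently by each group.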

The firm tries to finance its growth and avoid going near its credit limit. If it exceeds its credit limit then it is declared bankrupt and closed down. The evolutionary model then replaces the failed firm with a new one, with new credit and a new strategy of price and quality. Again this firm either survives or fails. The model assumes that managers want to expand to capture their potential markets, but are forced to cut production if sales fall. So, they can make a loss for some time, providing that it is within their credit limit, but they much prefer to make a profit, and so attempt to increase sales, and match production to this.
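The bankruptcy and replacement rule can be sketched as follows. The field names, the default credit limit and the ranges from which a new strategy is drawn are hypothetical; only the logic (a firm may run a loss within its credit limit, and a firm that exceeds it is closed and replaced by a new one with new credit and a new strategy) follows the description above.

```python
import random
from dataclasses import dataclass


@dataclass
class Firm:
    quality: float
    markup: float
    balance: float = 0.0         # negative values are debt
    credit_limit: float = 100.0  # assumed value, for illustration only


def new_firm(rng):
    """Launch a firm with fresh credit and a randomly drawn strategy."""
    return Firm(quality=rng.uniform(0.5, 3.0), markup=rng.uniform(0.1, 1.0))


def step(firms, profits, rng):
    """Apply one period's profits, then replace any firm beyond its credit limit."""
    next_firms = []
    for firm, profit in zip(firms, profits):
        firm.balance += profit
        if firm.balance < -firm.credit_limit:
            next_firms.append(new_firm(rng))  # bankrupt: closed and replaced
        else:
            next_firms.append(firm)           # may run a loss within its credit
    return next_firms
```

The number of firms in the market therefore stays constant, while the population of strategies changes whenever a failure occurs.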

Our model is somewhat different from those of others (March 1991, 2006; Rivkin 2000; Rivkin and Siggelkow 2003, 2006, 2007; Siggelkow et al. 2005) and indeed from those inspired by Nelson and Winter (1982). In such models, instead of modelling both the supply and the demand sides as we have, the demand side performance of a product is inferred in an abstract way, and is generally given some randomness. Here, we model explicitly both supply and demand, though in a relatively simple manner, and the ‘uncertainty’ resides in the impossibility of the firm agents knowing beforehand what the real pay-off will be for a given price/quality strategy. Silverberg and Verspagen (1994) have also developed a model that is closer to ours.

3.2 Exploring the three meta-strategies

Running the model tells us that it is not the exact fixed strategy of a firm that matters. It is how successfully it can be changed if it isn’t working! Learning what works for you is what matters, though the ‘success’ of any particular behaviour will always be temporary.

The question that we want to investigate is how firms change their strategies (quality, mark up, publicity, research etc.) over time and in the light of experience. We now consider the results of running a model with 18 competing firms with three different ways (meta-strategies) to CHANGE what they are doing. They can be:

- Learners - test the profits that would arise from small changes in quality or price, and move their production in the direction of increasing profits.
- Imitators - move their product strategy towards that of whichever firm is currently making most profits.
- Darwinists - adopt a strategy ‘intuitively’ and then stick with it.

In the simulations here we study the interaction and outcomes of 6 learning firms, 6 imitating firms and 6 Darwinists. Any firm that goes bankrupt is replaced by another with the same meta-strategy but starting from a different initial position. We can then run our model repeatedly and see how well the three meta-strategies (Learning, Imitation, Darwinist) perform.
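The three meta-strategies can be written as three update rules acting on a (quality, price) pair. This is a hedged sketch rather than the model's code: `profit_fn` stands in for whatever profit the market would actually return for a trial strategy, and the step size and imitation rate are assumed values.

```python
def learner_update(strategy, profit_fn, step=0.05):
    """Probe small changes in quality and price; move towards higher profit."""
    quality, price = strategy
    best, best_profit = strategy, profit_fn(quality, price)
    for dq, dp in [(step, 0.0), (-step, 0.0), (0.0, step), (0.0, -step)]:
        candidate = (quality + dq, price + dp)
        profit = profit_fn(*candidate)
        if profit > best_profit:
            best, best_profit = candidate, profit
    return best


def imitator_update(strategy, leader_strategy, rate=0.2):
    """Move part of the way towards the currently most profitable firm."""
    return tuple(own + rate * (target - own)
                 for own, target in zip(strategy, leader_strategy))


def darwinist_update(strategy):
    """Keep the initial 'intuitive' strategy unchanged."""
    return strategy
```

Only the learner needs information about its own pay-offs near its current position; the imitator needs to observe who is currently most profitable, and the Darwinist needs nothing at all.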

If we repeat the simulations for different random sequences (seeds 1 to 10) then we find the overall results of Fig. 7. This is the average outcome arising from ten simulations, each with different initial and re-launch choices. The message from Fig. 7 seems clear. Learning by experiment is the best meta-strategy. Using intuition and individual belief (Darwinist) is good, and imitating winners is the least successful meta-strategy. Imitators seem to arrive late to a strategy, and then suffer the competition of both the original user and that of other imitators. We can calculate the spread of results obtained by the different meta-strategies. There is some overlap of outcomes and so in a particular case we can probably never say with absolute certainty that the ‘learning’ strategy will ‘definitely’ be better than the others, only that it will ‘most probably’ be better than the others. If a manager owned the simulation model, it would still not guarantee a win, but would only increase the probability of winning.
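The averaging over seeds and the ‘most probably better’ statement can be made concrete with two small helpers. They assume that each run has already produced one outcome per meta-strategy (for example, total profit); the function names and the choice of the sample standard deviation as the measure of spread are ours.

```python
from statistics import mean, stdev


def summarise(outcomes):
    """Return {meta_strategy: (mean, sample standard deviation)} across runs."""
    return {name: (mean(vals), stdev(vals)) for name, vals in outcomes.items()}


def win_fraction(outcomes, a, b):
    """Fraction of runs (paired by seed) in which meta-strategy a beat b."""
    pairs = list(zip(outcomes[a], outcomes[b]))
    return sum(x > y for x, y in pairs) / len(pairs)
```

Here `outcomes` would map each meta-strategy to its list of per-seed results. A win fraction below one for the learners, despite a higher mean, is exactly the overlap of outcomes referred to above.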

This result shows clearly the ‘limits to knowledge’ (Allen et al. 2007a), that future trajectories and strategies of other firms cannot be known, and therefore that there cannot be a corresponding ‘perfect’ strategy. This puts a real limit on any predictive ‘horizon’ which may be until the next firm changes its strategy, or if it is pursuing a meta-strategy (of learning or imitating for example) how this will change over time. This example is limited to a discussion of innovations concerning the quality and price of a product or service, but the model can equally well look into more ambitious innovations of technology or research. Our model shows us that the basic process of “micro-variation” and differential amplification of the emergent behaviours is the most successful process in generating a successful market structure, and is good both for the individual players and for the whole market, as well as its customers (Metcalfe 1998).

3.3 Organizational evolution

In discussing how firms change their performances through product and process innovation, we can refer briefly to an example that has been published before (Allen et al. 2007a, b). Changing patterns of practices and routines are studied using the ideas of Evolutionary Drive. For a particular industrial/business sector we find a “cladistic diagram” (a diagram showing evolutionary history) showing the succession of new practices and innovative ideas within a particular economic activity. This idea looks at organizational change in terms of the emergence of particular ‘bundles’ of practices and techniques with performances that allow survival in the market. The ideas come from McKelvey (1982, 1994), McCarthy (1995), McCarthy et al. (1997).

For the automobile sector the observed bundle of possible ‘practices’, our “dictionary”, allows us to identify 16 distinct organisational forms – 16 bundles of practice that actually exist:

- Ancient craft system; Standardised craft system; Modern craft system
- Neocraft system; Flexible manufacturing; Toyota production
- Lean producers; Agile producers; Just in time
- Intensive mass producers; European mass producers
- Modern mass producers; Pseudo lean producers; Fordist mass producers
- Large scale producers; Skilled large scale producers

Cladistic theory calculates backwards the most probable evolutionary sequence of events. The key idea in the work presented here is to use a survey of manufacturers that explores their estimates of the pair-wise interactions between the practices. In this way we can ‘predict’ the synergetic ‘bundles’ of working practices and understand and make retrospective sense of the evolution of the automobile industry.

The evolutionary simulation model examines how the random introduction of new practices and innovations is affected by the changing ‘receptivity’ reflecting the overall effects of the positive or negative pairwise interactions. As a result of the particular sequence of attempted additions, particular bundles of practices and techniques emerge that correspond to different organizational forms.
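A minimal sketch of this mechanism is given below. It assumes a square matrix `interaction[i][j]` holding the effect of practice i on practice j, and an acceptance rule in which a new practice is retained only if its summed interactions with the existing bundle are not negative. Both the rule and the names are simplifying assumptions, not the published model.

```python
import random


def receptivity(candidate, bundle, interaction):
    """Net pairwise effect of adding `candidate` to the current bundle."""
    return sum(interaction[candidate][p] + interaction[p][candidate]
               for p in bundle)


def evolve_bundle(interaction, attempts, rng):
    """Randomly attempt practices; keep those the current bundle is receptive to."""
    n = len(interaction)
    bundle = []
    for _ in range(attempts):
        candidate = rng.randrange(n)
        if candidate in bundle:
            continue
        # An empty bundle accepts anything; afterwards net synergy must not be negative.
        if not bundle or receptivity(candidate, bundle, interaction) >= 0:
            bundle.append(candidate)
    return bundle
```

Because acceptance depends on what is already present, the order of the random attempts matters: a practice that conflicts with the others can lock a firm into a different bundle if it happens to be tried first.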

The model can generate the history of a particular ‘firm’ which launches new practices randomly, and grows where there is synergy between the practices. Figure 8 shows us one possible history of a firm. The particular choices of practices introduced and their timing allow us to assess how its performance evolved over time, and also whether it would have been eliminated by other firms.

Overall performance of each firm is a function of the synergy of the practices that are tried successfully in the context of the other evolving firms. The particular emergent attributes and capabilities of the organisation result from the particular combination of practices that constitute it. Different simulations lead to different organizational structures. The actual emergent capabilities and qualities that would be desirable for any particular firm cannot be predicted in advance, since the performance of any particular organization will depend on that of the others with which it is co-evolving. So, we cannot pre-define a desired structure through some pre-calculated rationality. Firms, markets and life are about an on-going, imperfect learning process that both creates and requires uncertainty.

3.4 Emergent supply chain performance

These ideas were applied to the study of the aerospace supply chain (Rose-Anderssen et al. 2008a, b). The aerospace supply chain actually needs a series of different capabilities if it is to succeed. They are:

1. Quality
2. Cost Efficiency
3. Reliable Delivery
4. Innovation and Technology
5. Vision

The stage in the life cycle of the product, or the market situation, determines what mix of these is required as the platform or product moves from design and conception, through initial prototyping and production to an eventual lean production phase. In addition 27 key characteristics or practices were identified that could characterize supply chain relationships. A questionnaire was formulated to enquire into the opinion of important individuals within these key aerospace supply chains in order to understand better the underlying beliefs that affect the decisions concerning the structure of supply chains. The questionnaire considered the intrinsic improvement of a given practice and the possible interaction between pairs of practices. The details have been given elsewhere but we can summarize in Fig. 9.

The important point about the evolution of systems is that they concern both the elements inside a system, that constitute its identity, and also the external environment in which they are attempting to perform and the requirements that are perceived for successful performance.

Our model then explores the random launching of practices under different performance selection criteria corresponding to our five basic performance qualities. The practices retained are on the whole synergetic. We can look at the patterns of synergy that have been selected, Fig. 10.

Knowledge of the effects of interaction can therefore be of considerable advantage in creating a successful supply chain. Even if the pair interaction terms are considered to be 50 times smaller than the direct effects of a practice there are still synergy effects of up to 75 %. This shows the importance of considering the systemic, collective effects of any organization or supply chain. It is another example of the ‘ontology of connection’ and not that of ‘isolation’.

3.5 Simple self-organizing model of UK electricity supply and demand

Another important area of application concerns the future of the UK electricity supply. The model is based on an earlier ‘self-organizing’ logistics model (Allen and Strathern 2004) in which the structure of distribution systems emerged from a dynamic, spatial model.

We shall briefly summarize the main points. One of the main ideas adopted in order to reduce our carbon emissions is to ‘electrify’ transport and heating as well as all the current uses. This means that, unless we accept a radical change in lifestyle (e.g. almost no travel, or heating!), over the next 40 years electricity supply must approximately triple! And at the same time we must decrease our carbon emissions by 80 % of their 1990 value. This means that in large part we will have to add new, carbon light capacity across the country. The problem we are examining here therefore is that of ‘when to put what, where’ in the intervening years between now and 2050.
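The scale of the task can be seen from a rough calculation. Suppose, purely for illustration, that the 80 % cut is applied to electricity generation itself and that both the tripling of output and the cut in emissions are measured against the same baseline (the text uses different reference years, so this is an assumption). Writing $G$ for electricity generated, $E$ for the associated emissions and $c = E/G$ for the carbon intensity per kWh:

```latex
\frac{c_{2050}}{c_{0}}
  = \frac{E_{2050}/G_{2050}}{E_{0}/G_{0}}
  = \frac{0.2\,E_{0}}{3\,G_{0}} \cdot \frac{G_{0}}{E_{0}}
  = \frac{0.2}{3} \approx 0.067
```

Under these assumptions the average emissions per kWh would have to fall to roughly one fifteenth of the baseline value, which is why most of the added capacity must be carbon-light.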

The model therefore first makes an annual calculation of the relative attractivity of the possible list of energy investments—their type, size and location—for a particular set of evaluation criteria. The aim is to both generate the power required and to reduce UK emissions by 80 %. Our model can therefore explore different pathways, perhaps favoured by different types of agent, of different energy supply investments. In this way such a model can be the focus of discussion among the numerous stakeholders as to the relative attraction of the different pathways. Our choice model will therefore include a multi-dimensional value system that will reflect financial costs, CO2 reduction and other possible considerations.

The most basic core of the attractivity of a particular technology E is given by:

CO2(E) is the table of values for the carbon emissions per kWh from the different energy sources and LCE(E) is the table of costs for different types of generation per kWh. Va1 and Va2 reflect the relative importance that we attach to carbon reduction and to financial cost.

In addition though, the attractivity is higher if the location i has a high stress (demand/supply) and also if the location has a particular advantage or disadvantage for the technology in question. For example, if the source is wind power, then a windy location offers far greater returns. For nuclear power there will be a need for cooling water, and a location that is not too close to large populations and that has already had nuclear power on it will also be highly advantageous. Similarly, for coastal power (tidal or wave) we need to be on the coast, but some stakeholders may also view the ecological impact of a tidal barrage (such as the Severn Barrage for example) as outweighing the value of the electricity generated. We can also allow for the ‘saturation’ of a zone as a function of capacity that is already installed. So, there is a limit to how many wind farms one can put in a zone, and waste and biomass incineration require populations or land to provide the raw materials. Each location also has its own ‘predispositions’ that affect its attractivity. The environmental impacts of the energy production can be the corresponding carbon emissions (kg of CO2 per kWh of electricity) but could also represent radiation risks, noise, or impact on wildlife.
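The ingredients listed above can be combined in a short sketch. The functional form is an assumption made for illustration and is not the model's equation: the core term is taken as the inverse of the weighted sum of emissions and cost, and stress, local advantage and saturation enter as simple multipliers. Only CO2(E), LCE(E), Va1 and Va2 are names from the text; everything else is hypothetical.

```python
def core_attractivity(co2_per_kwh, cost_per_kwh, va1, va2):
    """Higher when weighted emissions and cost are lower (assumed inverse form)."""
    return 1.0 / (va1 * co2_per_kwh + va2 * cost_per_kwh)


def site_attractivity(core, demand, supply, local_advantage, installed, zone_limit):
    """Scale the core value by local stress, site suitability and saturation."""
    stress = demand / supply  # demand/supply ratio at location i (supply > 0)
    saturation = max(0.0, 1.0 - installed / zone_limit)
    return core * stress * local_advantage * saturation
```

With such a form, raising Va1 relative to Va2 shifts investment towards low-emission technologies, while a zone that has reached its assumed capacity limit drops out of consideration however attractive the technology is elsewhere.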

Generally speaking however, the most common factor taken into account by actual decision makers will be financial costs. At the micro-level, however, local decisions concerning solar, wind and CHP schemes would continue to reduce, to some extent, the supply stresses present.

In this preliminary version of the model, the geographical space for the simulations was 100 points representing the UK. The model starts from the actual situation in 2010 and then looks at the annual changes brought about by: end-of-service closures; closures of coal capacity; new capacity, whether gas, nuclear, wind (on- or off-shore), marine, biomass or solar; and the continual growth of demand and of local schemes for solar, wind and CHP. Each year the model makes the changes/investments suggested by the relative attractivity, in a particular type of generation technology, at a given place. It then recalculates the pattern of supply and demand. However it also calculates the CO2 emissions and if the supply is deviating too much from the trajectory towards an 80 % reduction by 2050, then the attraction of low carbon generation increases compared to higher emission technologies. In this way the system is guided towards achieving the policy aims. The costs involved take into account the transmission losses corresponding to the spatial pattern of generation and of demand. Our model can therefore help to create a more ‘compact’ pattern of generation.
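The guiding feedback can be sketched as a target path plus a rule that strengthens the weight on carbon when emissions run above it. The straight-line path, the starting level of 1.0, the tolerance and the size of the adjustment are all assumed for illustration; the text does not specify them.

```python
def target_emissions(year, start_year=2010, end_year=2050,
                     start_level=1.0, end_level=0.2):
    """Linear path from the starting level to 20 % of the reference level.

    Levels are fractions of the reference emissions; the straight line and
    the starting level of 1.0 are illustrative assumptions.
    """
    fraction = (year - start_year) / (end_year - start_year)
    return start_level + fraction * (end_level - start_level)


def adjust_carbon_weight(va1, emissions, year, tolerance=0.05, boost=1.1):
    """Raise the weight on carbon when emissions run above the target path."""
    if emissions > target_emissions(year) * (1.0 + tolerance):
        return va1 * boost
    return va1
```

Applied once per simulated year, a rule of this kind makes low carbon generation progressively more attractive for as long as the system stays above the path, and leaves the weights unchanged once it is back on track.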

To cut emissions by 80 % while increasing electricity production three-fold means that we must add a great deal of low carbon generation capacity (Wind, Hydro, Nuclear). Running the model generates the changing spatial distribution of generating capacity of different kinds. In Fig. 11 we show a result for wind generation under one particular scenario.

In this run the model shows us that off-shore wind rises steadily to 46 GW and on-shore wind spreads widely across the landscape, rising to 41 GW by 2050. So much wind power raises the problem of intermittency and of the need for standby capacity or storage to ensure continuity of supply. Clearly, the larger the geographical spread of wind generation the lower the coherence of intermittency.
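The last point can be illustrated with a standard result for the average of correlated quantities. The sketch assumes n equally sized sites, each with the same variability, and a single common pairwise correlation between their outputs; a wider geographical spread is represented simply by a lower correlation. This is a textbook simplification and not part of the model.

```python
import math


def aggregate_std(n_sites, site_std, correlation):
    """Std of the average output of n equal sites with a common pairwise correlation."""
    variance = site_std ** 2 * (1.0 + (n_sites - 1) * correlation) / n_sites
    return math.sqrt(variance)
```

With fully coherent sites (correlation 1) adding more sites brings no smoothing at all, whereas with independent sites the variability of the average falls as one over the square root of their number.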

The model can be used to explore different possible ways of achieving the required 2050 situation (Fig. 12) and can also be used to rapidly explore the effects of new technologies or changing costs of different types of generation. Simple models of complex systems can be useful for rapid, strategic explorations.

4 Conclusions: living and learning

In trying to deal with the world we develop an ‘interpretive framework’ with which we attempt to navigate reality and to understand opportunities and dangers. This is really a set of beliefs about the entities that make up reality and the connections that exist between them. We see that this is really a qualitative ‘model’ and perhaps, sometimes, this can even be transformed into a quantitative mathematical model. But over time, our interpretive frameworks are constantly tested by our observations and experiences. Our beliefs provide us with expectations concerning the probable consequences of events or of our actions and when these are confirmed then we tend to reinforce our beliefs. When our expectations are denied however, we must face the fact that our current interpretive framework – set of beliefs – is inadequate. But there is no scientific method to tell us how to modify our views. Why is it not working? Are there new types or behaviours present? Or are their interconnections incorrect? Importantly however, there is no scientific, unique way to change our beliefs. In reality, we simply have to experiment with modified views and try to see whether the new system seems to work ‘better’ than the old.

In addition, when our expectations are thwarted, we can only draw on our beliefs and experiences to decide ‘how to change our ideas’. This may suggest to us which of our beliefs are most likely mistaken, whose ideas or comments we should trust and listen to, and whose we should discard. Of course, some people may be happy to take on new ideas every day, while others may choose never to modify their beliefs, feeling that the increasing evidence of inadequacy is merely a test of their faith. Figure 13 was originally drawn so as to represent ‘the honest scientist’ seeking the truth. But it was pointed out to me that in reality people are much more complex than that. Although some people may learn, others will simply find reasons to ignore or reject any evidence that is contrary to their current beliefs or may detract from their own status or prestige. So micro-diversity encompasses not only the different interpretive frameworks people may have, but also how willing and equipped people are to change their beliefs and understanding and to adapt to what is happening. Complexity therefore suggests a ‘messy cognitive evolution’ in which some people change their beliefs and models, generating different behaviours and responses, which lead to differential success. This allows some beliefs and interpretive frameworks to evolve with the real world, as they are tested and either retained or dropped according to their apparent success. It is therefore clearly very important for an organization that wants to ‘learn’, to have employees that are willing to participate honestly in the learning process. This implies firstly that individuals are diverse and that the local organizational ambiance encourages open exchanges and discussions. It probably requires continual disagreement and rivalry among staff, but within a recognition of the overall good of the organization.
This all points to the idea that we should really be looking at actions and events as “experiments” that test our understanding of how things work. Clearly, given the lack of any clear scientific method on how to change one’s own beliefs, many may simply adopt the views of their preferred group, and simply mimic their responses without, necessarily, understanding the basis of these. This may explain the importance of ‘social networks’, and the ‘wisdom’ or ‘idiocy’ of crowds.

From these discussions we can derive some key points about evolution in human systems.

- Evolution is driven by the noise/local freedom and micro-diversity to which it leads, meaning that not only are current average ‘types’ explained and shaped by past evolution but so also are the micro-diversity and exploratory mechanisms around these.
- There is a selective advantage to the power of adaptation and hence to the retention of noise and micro-diversity generating mechanisms.
- This means that aggregate descriptions will always only be short term descriptions of reality, though useful perhaps for operational improvements.
- Successful management must ‘mimic’ evolution and make sure that mechanisms of exploration and experiment are present in the organization. These are endogenous externalities. Though they are not profitable in the short term they are the only guarantee of survival into the longer term.
- History will be marked by successive models of complex, synergetic dynamical systems: for products it is bundled technologies; for markets it is certain bundles of co-evolving firms; for organizations it is bundles of co-evolving practices and techniques; for knowledge more generally it is bundles of connected words, concepts and variables that emerge for a time.
- Living systems create a world of connected, co-evolving, multi-level structures, at times temporally self-consistent and at other times inconsistent.

The world viewed through ‘complexity’ spectacles is unendingly creative and surprising. Some surprises are serendipitous, others are unpleasant. We need to explore possible futures permanently in order to see when problems may occur or when something unexpected is happening. And we need to do so openly, allowing our assumptions, mechanisms and models to be studied, criticized and improved, so that we can react quickly. So, we are part of the system that we study, and the world and its complexity will continue evolving with us as part of itself. We cannot be objective and there will be multiple truths. Even the past, where we might imagine certainty might exist, can be interpreted in a multiplicity of ways.

This new understanding of the world might appear to say that the complexity of the world is such that we cannot find a firm base for any actions or policies, and so we should perhaps just pursue our own self-interest and let the world look after itself. In many ways this would resemble behaviour resulting from belief in the ‘invisible hand’ of neo-classical economics. But this would be a false interpretation of what we now know of complexity. We know that we must operate in a multi-level, ethically and politically heterogeneous world where both wonderful and terrible things can happen. The models discussed here provide us with a better view than before of some possible futures, and allow us to get some idea of the likely consequences of, and responses to, our actions and choices. We could even imagine ‘Machiavellian’ versions of complexity models that contained several layers of expected responses and countermoves on the part of the multiple agents interacting. Complexity tells us that our understanding of the system may be good for the short term, reasonable for the medium but will inevitably be inadequate for the long. It also tells us that sometimes there can be very sudden major changes, such as the ‘financial crisis’ of 2007/8. This means that policies should always consider resilience as well as efficiency or cost, as the one thing we do now know is that systems that are highly optimized for a single criterion such as profits or costs will crash at some point. Creative destruction data tells us that most firms fail quickly, some enjoy a period of growth, but all eventually crash (Foster and Kaplan 2001).

The other reason to develop and use complex systems models to reflect upon and formulate possible policies and interventions is that when a plan is chosen and put into action, the model can be used to compare with reality. Then unexpected deviations and new phenomena can be spotted as soon as possible and plans and models revised to explore a new range of possible futures.
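One simple way to spot such deviations is to compare each observation with the range spanned by a set of model runs. The sketch below assumes that observations and model trajectories are stored as year-to-value dictionaries, and it treats any observation outside the ensemble range as ‘unexpected’; both choices are ours and are only one of many possible tests.

```python
def first_unexpected_year(observed, ensemble):
    """Return the first year whose observation lies outside the range of all runs.

    observed: {year: value}; ensemble: list of {year: value} model trajectories.
    Returns None if every observation falls within the ensemble range.
    """
    for year in sorted(observed):
        values = [run[year] for run in ensemble if year in run]
        if values and not (min(values) <= observed[year] <= max(values)):
            return year
    return None
```

A returned year is a prompt to revisit the assumptions of the model and to explore a new range of possible futures, rather than evidence that reality is ‘wrong’.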

In this complex systems’ view then, history is still running, and our interpretive frameworks and understanding are partial, limited and will change over time. The most that can be said of the behaviour of any particular individual, group or organization in an ecology, a socio-cultural system or a market, is that its continued existence proves only that it is not dead yet—but not that it is optimal. Optimality is a fantasy that supposes the simplistic idea of a single ‘measure’ that would characterize adequately evolved and evolving situations. In reality there would always need to be ‘sub-optimal’ redundancies, seemingly pointless micro-diversity and freedom if long term survival is to occur. In reality there are multiple understandings, values, goals and behaviours that co-habit a complex system at any moment, and these change with the nature of the elements in interaction as well as with their changing interpretive frameworks of what is going on. There is no end to history, no equilibrium and no simple recipes for success. But, how could there be?

References

Allen PM (1982) Evolution, modelling and design in a complex world. Environ Plan B 9:95–111

Allen PM (1988) Dynamic models of evolving systems. Syst Dyn Rev 4(1–2):109–130

Allen PM (1990) Why the future is not what it was. Futures 22:554–570

Allen PM (1994) Evolutionary complex systems: models of technology change. In: Leydesdorff L, van den Besselaar P (eds) Chaos and economic theory. Pinter, London. ISBN 1 85567 198 0 (hb) 1 85567 202 2 (pb)

Allen PM (2001) A complex systems approach to learning, adaptive networks. Int J Innov Manag 5(2):149–180. ISSN 1363–9196

Allen PM, McGlade JM (1987) Evolutionary drive: the effect of microscopic diversity, error making & noise. Found Phys 17(7):723–728

Allen P, Strathern M (2004) Evolution, emergence and learning in complex systems. Emergence 5(4):8–33

Allen PM, Strathern M, Baldwin JS (2007a) Complexity and the limits of learning. J Evol Econ 17:401–431

Allen PM, Strathern M, Baldwin JS (2007b) Evolutionary drive: new understanding of change in socio-economic systems. Emergence, Complexity and Organization 8(2):2–19

Foster R, Kaplan S (2001) Creative destruction. Doubleday, New York

March JG (1991) Exploration and exploitation in organizational learning. Organ Sci 2(1):71–87

March JG (2006) Rationality, foolishness and adaptive intelligence. Strat Manag J 27:201–214

McCarthy I (1995) Manufacturing classifications: lessons from organisational systematics and biological taxonomy. J Manuf Technol Manag- Integr Manuf Syst 6(6):37–49. ISSN: 1741-038X

McCarthy I, Leseure M, Ridgeway K, Fieller N (1997) Building a manufacturing Cladogram. Int J Technol Manag 13(3):2269–2296. ISSN (Online): 1741-5276 - ISSN (Print): 0267-5730

McKelvey B (1982) Organizational Systematics – Taxonomy, Evolution, Classification. University of California Press, Berkeley, Los Angeles, London. ISBN 0520042255

McKelvey B (1994) Evolution and organizational science. In: Baum J, Singh J (eds) Evolutionary dynamics of organizations. Oxford University Press, pp 314–326. ISBN13: 9780195085846, ISBN10: 0195085841

Metcalfe JS (1998) Evolutionary economics and creative destruction. Routledge, London. ISBN: 0415158680

Nelson R, Winter S (1982) An evolutionary theory of economic change. Harvard University Press, Cambridge

Ormerod P (2005) Why most things fail. Faber and Faber, London

Rivkin J (2000) Imitation of complex strategies. Manag Sci 46(6):824–844

Rivkin J, Siggelkow N (2003) Balancing search and stability: interdependencies among elements of organizational design. Manag Sci 49(3):290–311

Rivkin J, Siggelkow N (2006) Organizing to strategize in the face of interactions: preventing premature lock-in. Long Range Planning 39:591–614

Rivkin J, Siggelkow N (2007) Patterned interactions in complex systems: implications for exploration. Manag Sci 53(7):1068–1085

Rose-Anderssen C, Ridgway K, Baldwin J, Allen P, Varga L, Strathern M (2008a) The evolution of commercial aerospace supply chains and the facilitation of innovation. Int J Electron Cust Relationship Manag 2(1):307–327

Rose-Anderssen C, Ridgway K, Baldwin J, Allen P, Varga L, Strathern M (2008b) Creativity and innovation management 17(4):304–318

Schumpeter J (1962) Capitalism, socialism and democracy, 3rd edn. Harper Torchbooks, New York

Siggelkow N, Rivkin JW (2005) Speed and search: designing organizations for turbulence and complexity. Organ Sci 16:101–122

Silverberg G, Verspagen B (1994) Collective learning, innovation and growth in a boundedly rational, evolutionary world. J Evol Econ 4(3):207–226

Veblen T (1898) Why is economics not an evolutionary science. Q J Econ:12

Acknowledgments

Some of the work described in this paper was supported by the EPSRC Research Grant EP/G059969/1(Complex Adaptive Systems, Cognitive Agents and Distributed Energy (CASCADE))

© 2015 Springer International Publishing Switzerland

Allen, P.M. (2015). Evolution: Complexity, Uncertainty and Innovation. In: Pyka, A., Foster, J. (eds) The Evolution of Economic and Innovation Systems. Economic Complexity and Evolution. Springer, Cham. https://doi.org/10.1007/978-3-319-13299-0_7

DOI: https://doi.org/10.1007/978-3-319-13299-0_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-13298-3

Online ISBN: 978-3-319-13299-0

eBook Packages: Business and EconomicsEconomics and Finance (R0)