Abstract

Methods for uncertainty quantification (UQ) lie at the heart of the robust design process. Good robust design practice seeks to understand how a product or process behaves under uncertain conditions in order to design out unwanted effects such as inconsistent or below-par performance or reduced life (and hence increased service or total life cycle costs). Understanding these effects can be very costly, requiring a deep understanding of the system(s) under investigation and of the uncertainties to be guarded against. This chapter explores applications of UQ methods in an engineering design environment, including discussions of risk and decision, systems engineering, and validation and verification. The need for a well-aligned hierarchy of high-quality models is also discussed. These topics are brought together in an Uncertainty Management Framework that provides an overall context for embedding UQ methods in the engineering design process. Lastly, some significant challenges to the approach are highlighted.

Keywords

- Systems Engineering

- Verification & Validation

- Risk

- Decision Making

- Simulation

- Uncertainty Propagation

- Robust optimization

1 Introduction

Applying UQ methods in design is a core robust design activity, and it can be difficult. Here we discuss the issues around the use of UQ in the design process and the challenges faced by the practitioner. Engineering design is the process of applying science to create a system or process that converts resources to meet desired needs. Typical engineering applications are inherently complex, with many dynamic, nonlinear interactions and with multiple objectives and constraints derived from the requirements that express those needs. The design and analysis of engineering systems must therefore deal with multifaceted attributes. Studies often involve multiple:

- Objectives and constraints,

- Behaviors and failure modes,

- Physical disciplines,

- Scales or levels of fidelity.

Product design involves teams of specialists developing subsystems in parallel, with an inherent need to communicate ideas, models, and results between them. Too much focus on individual aspects of a design (single discipline, single objective) will ultimately lead to suboptimal overall performance.

Addressing these issues requires a holistic, systems engineering view. Experience has shown that without a structured approach to product design and development, the inherent complexity of engineering systems can result in undesired emergent behavior, with adverse consequences ranging from significant project cost overruns to far worse; the classic examples are the collapse of the Tacoma Narrows Bridge and the NASA Challenger disaster. Conversely, adopting a systems approach can simplify complex situations, identify key issues, and reduce risk and cost. Examples can be seen in the adoption of “lean” methods in manufacturing, construction, and other sectors, where a focus on aligning requirements and objectives helps to drive out waste. A systems approach can also foster innovation by encouraging both revolutionary change and incremental improvement, or the “aggregation of marginal gains”, which is particularly valuable in mature fields where many of the major advances in design and technology have already been made. Importantly for robust design, this approach provides a natural framework for dealing with uncertainty in the system itself, in the network of models that represent the system, in model form, and in model inputs and outputs, uncertainty in inputs and outputs being the main focus of uncertainty quantification methods in engineering.

The rest of this chapter describes in more detail the application of UQ in the engineering design environment, followed by a set of challenges that must be overcome before UQ can be applied to complex engineering systems. The topics to be discussed are:

- Risk and decision

- Systems engineering and validation and verification

- Uncertainty management framework

- Challenges

2 Risk and Decision

The relationship between quality, risk, and uncertainty is worth exploring. Robust design has its roots in the quality revolution in postwar Japan (Ishikawa/Deming), where the connection was made between statistical methods applied to industry and management, leading to the famous “14 key principles for management” later embraced by the total quality management revolution. The point here is that we undertake UQ studies to improve our understanding of the effect of uncertainty on the system (or subsystem, or component) in order to make a decision as part of the product development process (PDP). It is all about those decisions. There is a strong hierarchical link between probabilistic analysis, UQ, uncertainty management, and risk (Fig. 42.1).

Taken in isolation, UQ studies on complex engineering systems are costly and time-consuming to conduct. High cost can make it difficult to justify their use, particularly in low-volume industries. Long-running studies may take weeks or even months to complete, which may be too late to influence the design. However, the design process itself is not a linear process, and in general, neither is innovation. Instead ideas are generated, tested, and broken in an iterative process, and design studies need to keep pace with this. Mapping this process to hierarchical models of the system (integrated, multi-scale, multi-fidelity) may facilitate detailed UQ studies early in the PDP, reducing the risk of costly design modifications later. This will be discussed further in later sections.

When considering uncertainty, there are three main categories:

1. Randomness: aleatory uncertainty, or intrinsic randomness,

2. Incompleteness: epistemic uncertainty, or lack of knowledge,

3. Ineptitude, incomprehension, or incompetence.

The field of UQ is most often concerned with (1) and (2). Probabilistic studies can provide a natural framework for dealing with aleatory uncertainty. Probability distributions can be used to model uncertainty as variation, and the impact of variation can be assessed using models of systems. The issue then becomes one of distinguishing between error in model inputs and error in the models themselves (model discrepancy with the physical system).

Where there are deeper uncertainties, it may be difficult to frame that uncertainty in a probabilistic sense. There may be a lack of data, there may not be a sufficiently credible model of the system, or there may not be sufficient knowledge of cause and effect to produce any kind of model. In these cases, knowledge management and systems engineering can help blend qualitative and quantitative information to express these uncertainties in a way that aids decision-making (see Fig. 42.2).

Where there is complexity, or even chaos, it is likely that there is no credible causal model of the system. There may be deep uncertainty about the system and its inputs, with no model, and no well-defined expressions of uncertainty. One way of trying to address this type of problem is to develop parallel plausible scenarios that illustrate these uncertainties and then to analyze their effects. This can be seen, for example, in weather forecasting where several alternative (but equally likely) storm paths may be shown to communicate the uncertainty in the forecast (see, e.g., [5]).
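The scenario-based idea above can be sketched in a few lines: the same model is evaluated once per plausible scenario, and the spread of outcomes (rather than a probability distribution) is what gets reported. The model, the scenario names, and all numbers are hypothetical placeholders, not taken from any real system.

```python
def peak_temperature(ambient_c: float, duty_cycle: float) -> float:
    """Hypothetical response model (illustrative only, not a real engine model)."""
    return ambient_c + 400.0 * duty_cycle


# Plausible scenarios: no probabilities are attached, each is simply "possible".
SCENARIOS = {
    "temperate, short-haul": {"ambient_c": 15.0, "duty_cycle": 0.6},
    "hot day, short-haul": {"ambient_c": 45.0, "duty_cycle": 0.6},
    "hot day, long-haul": {"ambient_c": 45.0, "duty_cycle": 0.9},
}


def evaluate_scenarios(model, scenarios):
    """Run the model once per scenario and return the outcomes keyed by name."""
    return {name: model(**inputs) for name, inputs in scenarios.items()}


results = evaluate_scenarios(peak_temperature, SCENARIOS)
for name, value in results.items():
    print(f"{name:<22} {value:6.1f}")
print(f"range of outcomes: {min(results.values()):.1f} to {max(results.values()):.1f}")
```

Because no likelihoods are attached, the result reads as “all of these outcomes are plausible”, not as a distribution; adding or removing a scenario can change the reported range.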

The Cynefin model [1] provides a framework for integrating and expanding these levels of uncertainty to encompass the wider system view and related decision-making processes and appropriate modes of behavior for dealing with uncertainty. Figure 42.3 shows an attempt at mapping the levels of uncertainty defined in Fig. 42.2 into this framework. Where there is deep uncertainty, one is firmly on the left-hand side of the Cynefin model.

In addition to facilitating UQ studies by helping with the quantification problem, systems engineering can also help to address the issue of ineptitude or incompetence, for example, by providing a framework, or checklist, to facilitate the implementation of best practice or simply by having a structured approach that minimizes data transfer, data processing, model sharing, and model integration. The issue of incomprehension is also worth considering at this point. A proposed solution to a problem may not be adequate if the initial specifications are misunderstood or if the specified system is not correctly modeled. A systems engineering approach can help guard against these problems by checking for inconsistencies and unexpected behavior, which will be explored in the next section. Ultimately, managing uncertainty helps to speed up implementation, reduce error, and reduce cost.

3 Systems Engineering and Verification & Validation

Systems engineering is an approach used to develop complex engineering products. It emphasizes a top-down approach, embracing the whole product life cycle, and is naturally multidisciplinary. Importantly it also places a heavy emphasis on defining requirements (both customer and system) before developing solutions. Why is this important? Because as systems become more complex, it becomes more difficult to design solutions without encountering undesirable emergent behavior.

Systems engineering promotes a hierarchical approach, embodied in the “V-diagram” shown in Fig. 42.4.

The basic “V” can be applied hierarchically and can operate at many scales in a fractal sense. A typical generic decomposition would be system, subsystem, component, and material. The overall systems engineering framework provides a hierarchical structure to frame UQ studies in the context of risk and decision, as discussed in the previous section. The final step in the system V-diagram is verification, where the system is tested against the original requirements to confirm whether it fulfils them: “did we build the thing right?” This is in contrast to system validation, which confirms that the requirements are correct and complete: “did we build the right thing?”
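The hierarchical structure of the V described above can be sketched as a tree in which each level carries its own verification check, and a level passes only once everything beneath it has passed. The class, the node names, and the numerical checks are all hypothetical; validation (“did we build the right thing?”) asks whether these requirements are themselves correct, which no such check can establish.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    """One level of a hypothetical V-diagram decomposition."""
    name: str
    requirement: Callable[[], bool]  # verification check for this level
    children: List["Node"] = field(default_factory=list)

    def verify(self) -> bool:
        """'Did we build the thing right?' for this level and everything below it.

        Lower levels are verified first (the bottom of the V); this level's own
        requirement is then checked on the way back up the right-hand side.
        """
        # Verify every child (no short-circuit), so all failures are reported.
        child_results = [child.verify() for child in self.children]
        own_ok = self.requirement()
        print(f"{self.name}: {'pass' if own_ok else 'FAIL'}")
        return all(child_results) and own_ok


# Hypothetical values for illustration only.
material_yield_mpa = 1000.0
disc_stress_mpa, disc_limit_mpa = 820.0, 900.0
blade_freq_hz, excitation_hz = 1450.0, 1200.0

material = Node("material", lambda: material_yield_mpa > disc_limit_mpa)
disc = Node("disc", lambda: disc_stress_mpa < disc_limit_mpa, [material])
blade = Node("blade", lambda: abs(blade_freq_hz - excitation_hz) > 0.1 * excitation_hz)
compressor = Node("compressor", lambda: True, [disc, blade])  # placeholder check
engine = Node("engine", lambda: True, [compressor])  # placeholder check
print("engine verified:", engine.verify())
```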

System verification and validation (V&V) combines experience, judgment, physical test, and mathematical modeling (simulation). If simulation models are to be used in a V&V context, then they must themselves be subject to a simulation V&V process (e.g., [4]) that is integrated with the structured systems view.

3.1 Simulation Verification & Validation

In a general sense, a model can be described as a set of assumptions about a given system [2]. Models are used to describe behavior, both static and dynamic, for the purpose of understanding and controlling (designing) the system. Simulation, then, is the evaluation of those models to mimic the relevant features of a real process. Once a simulation model is built, it needs to be verified and validated.

In line with the fractal nature of the systems engineering V-diagram, simulation V&V can be viewed as a subsystem of systems V&V (Fig. 42.5), where the goal is to verify the models against experience, judgment, and test data and to validate those models against requirements.

Fig. 42.5 Simulation V&V (a simplified version of the Sargent cycle [4])

Simulation therefore has multiple roles as it is used throughout the product development process, from design to system validation. Validation can involve testing the simulation model against physical test data for the right physics, the right geometry, the right mesh, the right boundary conditions, etc. This can be achieved with an integrated test and simulation strategy. In the end, the simulation model must be shown to be fit for purpose, and this of course means that the validation process very much depends on the purpose. At this stage one must be mindful of the bounds within which the model is shown to be valid. A model representing a particular design configuration may be calibrated against test data at a particular operating point, but that does not necessarily mean it is a valid model at other design configurations or operating points. If the model is to be used for a design trade study, or for optimization, then the validity of the model over the whole of the defined design space needs to be considered. The discrepancy between model and observed behavior can be modeled, either explicitly or by considering the plausibility of the model as it is evaluated at different locations in the design space.
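The point about calibration at a single operating point can be illustrated with a toy example, assuming a hypothetical quadratic “true” response and a one-parameter linear model. The calibrated model matches the test exactly at the calibration point, yet its discrepancy grows away from it; a simple guard, sketched here, is to flag predictions that fall outside the range covered by validation evidence.

```python
def true_response(x: float) -> float:
    """Stand-in for the physical system (unknown in practice)."""
    return 1.0 + 0.5 * x + 0.2 * x**2


def model(x: float, k: float) -> float:
    """Hypothetical simulation model with one tunable parameter k."""
    return 1.0 + k * x


# Calibrate k so that the model matches a single test at operating point x_cal.
x_cal = 2.0
k = (true_response(x_cal) - 1.0) / x_cal
validated_range = (1.5, 2.5)  # hypothetical span of the available test evidence

discrepancy = {}
for x in (1.0, 2.0, 3.0, 5.0):
    discrepancy[x] = true_response(x) - model(x, k)
    inside = validated_range[0] <= x <= validated_range[1]
    print(f"x={x}: discrepancy={discrepancy[x]:+.3f}, inside validated range: {inside}")
```

Here the discrepancy is zero at the calibration point but reaches 3.0 at x = 5, well outside the validated range.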

In the early stages of design, simulation models often need to be evaluated many times, with many different input configurations (e.g., changes in geometry). Where less accuracy is required, for example, to detect design trends, it may be possible to use simplified models that are faster to run. Where detailed optimization studies are needed, a more accurate but slower-running model may be necessary to capture behavior accurately. Thus a multi-fidelity approach to modeling may be required, in which there are several models of the same system, with different blends of speed and accuracy, used as the situation dictates. Such models need to be aligned with each other so that they can be interchanged at will. For finite element (FE) models, this could be achieved by varying the size of the FE mesh, with coarse meshing for faster models and finer meshing for more detailed analysis, subject to model validation. Alternatively, linearized or reduced-order models (ROMs) may be used.
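One way to align a cheap model with an expensive one, sketched here under stated assumptions, is to correct the low-fidelity model using a few high-fidelity runs: y_hi(x) ≈ ρ·y_lo(x) + a + b·x, with ρ, a, and b fitted by least squares. Both models below are hypothetical toy functions chosen so that this correction form is exact; in practice the discrepancy term is usually more flexible (e.g., an emulator) and the fit is only approximate.

```python
import math


def hi_fi(x: float) -> float:
    """Hypothetical expensive model (think: fine mesh)."""
    return math.sin(x) + 0.1 * x


def lo_fi(x: float) -> float:
    """Hypothetical cheap model (think: coarse mesh): right trend, but biased."""
    return 0.8 * math.sin(x)


def det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 linear system a @ z = b by Cramer's rule."""
    d = det3(a)
    z = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        z.append(det3(m) / d)
    return z


def fit_correction(xs):
    """Fit y_hi(x) ~ rho * y_lo(x) + a + b * x by least squares (normal equations)."""
    rows = [[lo_fi(x), 1.0, x] for x in xs]
    ys = [hi_fi(x) for x in xs]  # the only expensive evaluations
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    aty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(3)]
    return solve3(ata, aty)


rho, a, b = fit_correction([0.0, 1.0, 2.0, 3.0])


def corrected(x: float) -> float:
    """Low-fidelity model plus fitted correction: cheap to evaluate anywhere."""
    return rho * lo_fi(x) + a + b * x


x_new = 2.5
print(f"lo-fi error:     {abs(lo_fi(x_new) - hi_fi(x_new)):.4f}")
print(f"corrected error: {abs(corrected(x_new) - hi_fi(x_new)):.2e}")
```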

4 Uncertainty Management Framework

As previously mentioned, the engineering system can be represented by a network of models. In order to propagate variation through this network, a common parametric model framework is required. The models must be able to represent variation as it is experienced in manufacturing and use. This requires that uncertainty in manufacture and use be defined, measured, and expressed as variation and then propagated as efficiently as possible. The general framework for uncertainty management in analysis is shown in Fig. 42.6.

There are four key pillars to this framework.

1. Quantify input uncertainty (identify sources, elicit, measure)

2. Model the system (validated models, including representation of uncertainty)

3. Propagate uncertainty using a suitable method (see below)

4. Analyze/optimize (sensitivity, objectives, constraints, algorithms)

This simulation framework can be used to solve both the forward problem (what is the variation in outputs, given variation in inputs?) and the inverse problem (what input variation caused the observed output variation?). There are significant challenges associated with each pillar of the framework, and these are discussed in turn below.
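A minimal end-to-end sketch of the four pillars for the forward problem is shown below. It assumes a single normally distributed input, a hypothetical stress model, and hypothetical measurement data: (1) fit the input distribution to the data, (2) define the model, (3) propagate by plain Monte Carlo, (4) analyze the output against a limit.

```python
import random
import statistics

# 1. Quantify input uncertainty: fit a normal distribution to (hypothetical) measurements.
measured_thickness_mm = [2.02, 1.97, 2.05, 1.99, 2.01, 1.96, 2.03, 2.00]
mu = statistics.mean(measured_thickness_mm)
sigma = statistics.stdev(measured_thickness_mm)


# 2. Model the system: hypothetical stress response, inversely proportional to thickness.
def stress_mpa(thickness_mm: float) -> float:
    return 1600.0 / thickness_mm


# 3. Propagate: plain Monte Carlo sampling of the fitted input distribution.
rng = random.Random(42)
samples = [stress_mpa(rng.gauss(mu, sigma)) for _ in range(20_000)]

# 4. Analyze: output statistics and probability of exceeding a (hypothetical) limit.
limit_mpa = 820.0
p_exceed = sum(s > limit_mpa for s in samples) / len(samples)
print(f"input:  mean={mu:.3f} mm, sd={sigma:.3f} mm")
print(f"output: mean={statistics.mean(samples):.1f} MPa, sd={statistics.stdev(samples):.1f} MPa")
print(f"P(stress > {limit_mpa} MPa) ~ {p_exceed:.3f}")
```

The inverse problem runs step 3 the other way: given observed output variation, it asks which input distribution could have produced it.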

4.1 Quantification of Input Uncertainty

Standard robust design and systems engineering tools can be used to identify sources of input uncertainty; once identified, these need to be quantified. This usually involves gathering data. Interval-based methods do exist, but they are not usually associated with UQ studies, which concentrate on probabilistic analysis. Where deeper uncertainty exists (i.e., uncertainty that cannot be quantified), one can take a scenario-based approach, as previously mentioned. Different types of input uncertainty related to engineering analysis include:

1. Physics. This defines the nature of the problem, e.g., find displacements given forces on objects.

2. Geometry. This defines the domain over which the problem will be solved. This need not be the fully featured part geometry; it could be a simplification.

3. Meshing. The geometry domain needs to be discretized with a mesh as part of the finite element (FE) formulation of the problem.

4. Boundary conditions. These define the conditions applied at the boundaries of the domain (and, for time-dependent problems, the initial conditions) for the model.

5. Solver. In general, the problem needs to be solved numerically, and hence any solution is approximate.

6. Computer hardware. A large problem must be decomposed into subproblems that can be solved with the available computing resources (memory, CPU power, bus bandwidth).
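Of the sources listed above, meshing and solver error can often be estimated numerically rather than from test data. A standard technique is Richardson extrapolation over three systematically refined meshes, sketched below with trapezoidal integration standing in for an FE solve (an assumption made to keep the example self-contained; the exact answer, 2, is known).

```python
import math


def solve_on_mesh(n: int) -> float:
    """Stand-in for a simulation on a mesh of n elements: trapezoidal
    integration of sin(x) over [0, pi] (exact answer: 2)."""
    h = math.pi / n
    interior = sum(math.sin(k * h) for k in range(1, n))
    return h * (0.5 * math.sin(0.0) + interior + 0.5 * math.sin(math.pi))


def richardson(coarse: float, medium: float, fine: float, r: float):
    """Observed order of convergence and extrapolated value from three
    solutions on meshes refined by a constant ratio r."""
    p = math.log((coarse - medium) / (medium - fine)) / math.log(r)
    extrapolated = fine + (fine - medium) / (r**p - 1.0)
    return p, extrapolated


f3, f2, f1 = (solve_on_mesh(n) for n in (10, 20, 40))  # coarse, medium, fine
p, f_ext = richardson(f3, f2, f1, r=2.0)
print(f"observed order p ~ {p:.3f}")
print(f"fine-mesh value {f1:.6f}, discretization error estimate {abs(f_ext - f1):.2e}")
```

The observed order p ≈ 2 matches the second-order trapezoidal rule; an observed order far from the expected one suggests the meshes are not yet in the asymptotic range, and the error estimate should not be trusted.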

4.2 Modeling and Simulation Framework

A system model or network of models that represents the system needs to be:

1. Representative of the relevant system responses,

2. Flexible enough to represent the design space and input uncertainties,

3. Able to run automatically (in “batch mode”), to facilitate design studies and computer experiments.

These modeling and simulation requirements demand flexible, parametric models that can be integrated into workflows containing several simulation codes with data being shared automatically between them. In the case of finite element models, this means automatic generation of geometry, automatic meshing of that geometry, and automatic application of forces and boundary conditions, which are all nontrivial tasks.

Automation is needed for the propagation of variation, for example, by using computer experiments [3], in which design of experiments (DoE) methods are used to fit empirical models (variously known as emulators, meta-models, surrogates, or response surface models) that approximate the behavior of the simulation models but run much faster, and can therefore be used more effectively for design space exploration and optimization. An additional benefit of workflow automation that includes empirical model fitting is that block DoEs can be run in parallel; in addition, sequential modeling strategies can minimize the number of costly simulation runs needed to fit a good-quality model.
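The computer-experiment loop described above can be sketched as follows: a Latin hypercube design, a batch of independent “simulation” runs, and a least-squares quadratic response surface used as the fast surrogate. The simulation function is a hypothetical stand-in; a quadratic surface is used only for brevity, and Gaussian-process (kriging) emulators of the kind developed in [3] are a common alternative.

```python
import math
import random


def simulation(x1: float, x2: float) -> float:
    """Hypothetical stand-in for an expensive simulation run (inputs scaled to [0, 1])."""
    return 3.0 + x1 - 2.0 * x2 + 0.5 * x1 * x2 + 0.3 * x1**2 + 0.02 * math.sin(5.0 * x1)


def latin_hypercube(n: int, dims: int, rng: random.Random):
    """n points in [0, 1]^dims with exactly one point in each of n strata per dimension."""
    columns = []
    for _ in range(dims):
        strata = list(range(n))
        rng.shuffle(strata)
        columns.append([(s + rng.random()) / n for s in strata])
    return [tuple(col[i] for col in columns) for i in range(n)]


def basis(x1: float, x2: float):
    """Full quadratic response-surface terms."""
    return [1.0, x1, x2, x1 * x1, x2 * x2, x1 * x2]


def solve(a, b):
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    z = [0.0] * n
    for r in reversed(range(n)):
        z[r] = (m[r][n] - sum(m[r][c] * z[c] for c in range(r + 1, n))) / m[r][r]
    return z


def fit_surrogate(points, ys):
    """Least-squares quadratic response surface via the normal equations."""
    rows = [basis(*p) for p in points]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    aty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(k)]
    coef = solve(ata, aty)
    return lambda x1, x2: sum(c * t for c, t in zip(coef, basis(x1, x2)))


rng = random.Random(0)
design = latin_hypercube(20, 2, rng)
# Each run is independent, so in a real workflow this block could run in parallel.
responses = [simulation(*p) for p in design]
surrogate = fit_surrogate(design, responses)

fresh = [(rng.random(), rng.random()) for _ in range(200)]
max_err = max(abs(surrogate(*p) - simulation(*p)) for p in fresh)
print(f"max surrogate error on 200 fresh points: {max_err:.4f}")
```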

The model network should also be aligned with the broader “systems” view, so that changes to the model can be evaluated by other subsystems (e.g., cost of manufacture) and also so that any resulting design changes can be implemented. Alignment of model networks also facilitates multi-fidelity analysis, where there are multiple models of the same system. Where computational costs are high, a multi-fidelity model framework can be used to achieve accuracy in design search and optimization while reducing the overall computational burden.

4.3 Representation of Uncertainty in Simulation Models

Promoting the parameters of a simulation model from deterministic to stochastic can be very costly from a numerical simulation perspective. Even the most sophisticated propagation methods currently struggle to deal with more than around 20 random variables, when applied to nonlinear systems. Where data exists, variation in individual parameter values can be characterized by standard univariate distributions. Accounting for correlation can be more problematic, but is no less important. Where there is a spatial aspect to the parameters (e.g., boundary conditions along an edge, or a face, material properties, or even geometry), then random fields may be used.
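Correlation and spatial variation can both be handled by sampling from a multivariate normal distribution through a Cholesky factor of its covariance matrix; two correlated scalar parameters are simply the 2 × 2 case. The sketch below assumes a hypothetical boundary heat-transfer coefficient along an edge, modeled as a Gaussian random field with an exponential covariance function; the mean, standard deviation, and correlation length are illustrative values.

```python
import math
import random


def exponential_covariance(positions, sigma, length):
    """Covariance matrix C[i][j] = sigma^2 * exp(-|s_i - s_j| / length)."""
    return [[sigma**2 * math.exp(-abs(si - sj) / length) for sj in positions]
            for si in positions]


def cholesky(c):
    """Lower-triangular L with L @ L^T = C (C symmetric positive definite)."""
    n = len(c)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = c[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low


def sample_field(mean, low, rng):
    """One realization: mean + L @ xi with xi ~ independent N(0, 1)."""
    xi = [rng.gauss(0.0, 1.0) for _ in low]
    return [mean + sum(lij * x for lij, x in zip(row, xi)) for row in low]


# Hypothetical example: 11 points along an edge of unit length.
edge = [i / 10 for i in range(11)]
cov = exponential_covariance(edge, sigma=50.0, length=0.3)
low = cholesky(cov)
field_sample = sample_field(mean=1000.0, low=low, rng=random.Random(1))
print([round(v) for v in field_sample])
```

Each call to `sample_field` gives one spatially correlated realization that can be applied as a boundary condition in a single model run.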

4.4 Propagation of Uncertainty

Methods for the propagation of uncertainty through the model framework include:

- Monte Carlo methods

- Sparse quadrature

- Sigma point methods

- Generalized polynomial chaos (gPC)

- Stochastic reduced basis methods (for stochastic PDEs)

- Adjoint solvers (for efficient computation of derivatives)

These methods are discussed in detail in other chapters. It is interesting to note that many of these methods are intimately bound to the statistical models that represent the uncertainty, which can make it difficult to develop and deploy generic solutions to the engineering design community.
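To show why the choice of propagation method matters, the sketch below estimates the mean of a hypothetical nonlinear response of one standard normal input in two ways: plain Monte Carlo with 10,000 model runs, and a three-point Gauss-Hermite rule, which in one dimension coincides with the sigma points of the unscented transform (κ = 2). The exact answer is known here for comparison.

```python
import math
import random


def model(x: float) -> float:
    """Hypothetical nonlinear response of a standard normal input."""
    return math.exp(0.3 * x)


def monte_carlo_mean(f, n, rng):
    """Plain Monte Carlo estimate of E[f(X)], X ~ N(0, 1)."""
    return sum(f(rng.gauss(0.0, 1.0)) for _ in range(n)) / n


# Three-point Gauss-Hermite rule for a standard normal input (exact for
# polynomials up to degree 5). In one dimension these are also the sigma
# points of the unscented transform with kappa = 2.
NODES = (-math.sqrt(3.0), 0.0, math.sqrt(3.0))
WEIGHTS = (1.0 / 6.0, 2.0 / 3.0, 1.0 / 6.0)


def quadrature_mean(f):
    return sum(w * f(x) for x, w in zip(NODES, WEIGHTS))


exact = math.exp(0.3**2 / 2)  # E[exp(aX)] = exp(a^2 / 2) for X ~ N(0, 1)
mc = monte_carlo_mean(model, 10_000, random.Random(7))
quad = quadrature_mean(model)
print(f"exact {exact:.6f}  MC (10000 runs) {mc:.6f}  quadrature (3 runs) {quad:.6f}")
```

The three-point rule needs only three model runs here, but a full tensor grid grows as 3^d in d dimensions, which is one motivation for sparse quadrature; the Monte Carlo convergence rate does not depend on d, but it is slow.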

4.5 Decision Analysis

Evaluation of the model framework provides insight into system performance and design. Specific applications include sensitivity studies, general search in the design space, and numerical optimization. Design information gathered using these techniques can be used to inform decisions during the product development process, to confirm (validate) system behavior as part of a systems V&V process, and for product certification by external authorities. Where information exists on variation in system response (e.g., in-service or operational data), an inverse problem formulation of the model network can attempt to identify the likely variation in system inputs that led to the variation in outputs.
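For the special case of a single input and a monotone model, the inverse problem can be sketched by inverting each observed output numerically (here by bisection) to recover a sample of the inputs that produced it. The model and observations are hypothetical, and the sketch ignores measurement noise and model discrepancy; general multi-input inverse problems are usually ill-posed and are typically handled with Bayesian calibration instead.

```python
import statistics


def model(x: float) -> float:
    """Hypothetical monotone increasing response of a single input."""
    return 2.0 * x + 0.5 * x**3


def invert(f, y, lo, hi, tol=1e-10):
    """Find x in [lo, hi] with f(x) = y by bisection (f increasing on [lo, hi])."""
    if not f(lo) <= y <= f(hi):
        raise ValueError("observed value outside the model's range on [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) < y:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical field observations of the output.
observed = [2.61, 2.48, 2.75, 2.55, 2.69, 2.52, 2.58, 2.66]
inferred = [invert(model, y, 0.0, 5.0) for y in observed]
print(f"inferred input: mean={statistics.mean(inferred):.4f}, sd={statistics.stdev(inferred):.4f}")
```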

4.5.1 Sensitivity Studies

If one considers the model network as a transfer function, mapping inputs (design parameters) to outputs (system responses), then at its simplest, a sensitivity study can be used to rank the inputs according to their effect on the outputs. The model network evaluates the change in output for a fixed (e.g., unit, or 1 %) change in each input. This does not take into account the different levels of uncertainty in each input, which in turn change the amount by which the outputs will vary. To be useful, therefore, a sensitivity study must use the likely variation of the input values. Where these are not known, sensitivity studies are of limited use.
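The difference between ranking by a unit change and ranking by likely variation can be shown directly: below, local derivatives are computed by central finite differences and then scaled by each input's standard deviation, giving a linearized estimate of the output spread each input contributes. The model, the nominal values, and the standard deviations are hypothetical.

```python
def model(x):
    """Hypothetical response of three inputs."""
    return 10.0 * x["a"] + 2.0 * x["b"] + 0.5 * x["c"] ** 2


nominal = {"a": 1.0, "b": 3.0, "c": 4.0}
input_sd = {"a": 0.01, "b": 0.5, "c": 0.2}  # hypothetical likely variation


def derivative(f, x, name, rel_step=1e-6):
    """Central finite-difference derivative of f with respect to one input."""
    h = rel_step * max(abs(x[name]), 1.0)
    up, down = dict(x), dict(x)
    up[name] += h
    down[name] -= h
    return (f(up) - f(down)) / (2.0 * h)


grads = {k: derivative(model, nominal, k) for k in nominal}
# Unit-change ranking ignores how much each input actually varies.
raw_rank = sorted(grads, key=lambda k: abs(grads[k]), reverse=True)
# Scaling by the input standard deviation gives the linearized output spread per input.
scaled = {k: abs(grads[k]) * input_sd[k] for k in grads}
scaled_rank = sorted(scaled, key=scaled.get, reverse=True)
print("by unit change:      ", raw_rank)
print("by likely variation: ", scaled_rank)
```

Input `a` dominates the unit-change ranking but drops to last once its small variation is taken into account.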

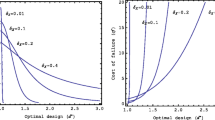

4.5.2 Robust Optimization

Numerical optimization algorithms can be applied to the model network to optimize performance. The lower and upper bounds of the model (input) parameters define a design space which can be explored. If variation in the input parameters is ignored, there is the danger that optimal designs are identified that are at constraint boundaries or that are highly sensitive to small changes in input parameter values (cliff-edge designs). Therefore the robustness of the design to variation in inputs needs to be evaluated, which of course is a prime motivator for UQ studies.

Robust optimization imposes a significant computational burden because a UQ study is potentially needed at every iteration of the optimizer, creating the requirement for fast, efficient UQ methods. Robust optimization also involves more objectives, as there may well be multiple attributes (e.g., mean and variance) for each response.
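The cliff-edge effect and the cost of an inner UQ loop can both be seen in a one-variable sketch: a deterministic optimizer lands next to a failure boundary, while minimizing mean + k × standard deviation (estimated by Monte Carlo at every candidate) moves the design away from it. The loss function, scatter, cliff location, and k = 2 are hypothetical; a simple grid search stands in for a real optimizer, and collapsing mean and spread into one weighted objective is only one option (treating them as separate objectives is another).

```python
import random

SIGMA = 0.15  # hypothetical manufacturing scatter on the design variable
CLIFF = 3.2   # hypothetical constraint boundary: beyond it the design fails


def loss(d: float) -> float:
    """Hypothetical performance loss: best at d = 3, with a cliff edge just beyond."""
    return (d - 3.0) ** 2 + (10.0 if d > CLIFF else 0.0)


rng = random.Random(3)
# Common random numbers: the same perturbations are reused for every candidate,
# so differences between candidates are not masked by sampling noise.
eps = [rng.gauss(0.0, SIGMA) for _ in range(2000)]


def robust_objective(d: float, k: float = 2.0) -> float:
    """A Monte Carlo UQ study at one candidate: mean + k * std of the loss."""
    values = [loss(d + e) for e in eps]
    n = len(values)
    mean = sum(values) / n
    sd = (sum((v - mean) ** 2 for v in values) / n) ** 0.5
    return mean + k * sd


candidates = [2.0 + 0.01 * i for i in range(121)]  # grid from 2.00 to 3.20
deterministic_best = min(candidates, key=loss)
robust_best = min(candidates, key=robust_objective)
print(f"deterministic optimum d = {deterministic_best:.2f}")
print(f"robust optimum        d = {robust_best:.2f}")
```

Even this toy problem needs 2,000 model evaluations per candidate, which illustrates why fast UQ methods (or surrogates) are needed inside an optimizer.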

5 An Example Application: Jet Engine Disc and Blade Design

The design and development of a new engine is supported by analysis and test operations that rely on models at system, subsystem, and component level. The impact of variation at component level needs to be assessed not only at that level but also at higher levels. This can create a significant challenge for the practical application of UQ methods.

A generic example problem, which is nonetheless based on a real engineering design problem, is presented to illustrate some of the issues that have been discussed. The problem is to design a blade and disc assembly for a jet engine. The idea behind the example is to show the hierarchy of design systems and the multifaceted nature of the design problem.

The disc and blade design problem is addressed at component level, but also needs to be considered at subsystem level (compressor or turbine) and at system (whole engine) level. Figures 42.7, 42.8, and 42.9 show these three levels of design geometry. These geometry models form the basis of the set of analysis models used to inform both design and the verification and validation of the system.

Figure 42.7 shows a schematic of a Rolls-Royce Plc Trent engine. At the core of the engine lies the compressor-combustor-turbine arrangement, where air is compressed, heated, and expanded to produce work. The detailed designs of the blade and disc arrangements of the compressor and turbine are critical in determining overall engine performance and are subject to multiple design criteria. Performance is evaluated over a full flight profile, with hundreds of complex thermomechanical loading conditions. The blade and disc components need to be evaluated for strength (stress, strain), fatigue, and vibration, requiring the model to be able to represent nonlinear physical phenomena such as creep, plasticity, fatigue, and contact and friction, all in a coupled thermomechanical model.

Not all of these conditions and physics-based models need to be evaluated at the whole engine level. Whole engine analysis is extremely costly – more can be done with available resources if the physical phenomena that drive individual design criteria are modeled at appropriate scale and fidelity. This however creates an overhead in managing the hierarchy of analysis models. The models need to be kept in alignment via common geometry definitions and consistent boundary conditions, all while allowing them to flex to take uncertainty into account and to allow for larger design changes in the search for a good design solution.

The disc and blade arrangement of the compressor subsystem is shown in Fig. 42.8. This subsystem can be modeled and analyzed to determine key performance characteristics, which may involve more detailed modeling at the individual blade and disc level, as depicted in Fig. 42.9.

At this detailed level, effects such as contact and friction between blade and disc can be modeled. Thus there is a well-defined modeling pathway from engine to subsystem to component.

6 Challenges for the Application of UQ

In summary, the application of UQ methods to the design of engineering systems poses the following challenges:

1. Simulation

   a. Multidisciplinary: common parametric models to enable flow of data

   b. Aligned models: systems of multi-fidelity models

   c. Fully stochastic simulation models

2. Uncertainty quantification

   a. Data-led quantification of sources of uncertainty

   b. Methods for UQ where data are sparse (expensive) and multi-scale

   c. Propagation methods integrated with simulation models

3. Optimization

   a. Improved automated geometry creation and meshing (morph geometry or mesh?)

   b. Increase in the number of objectives considered during optimization

4. Robust design

   a. Tools and methods able to cope with large nonlinear problems

   b. Hierarchy of variable fidelity models from component to subsystem to system

   c. Full “System view” of uncertainty quantification and management: decision-making in the face of uncertainty

   d. Validation: calibration at a test point, extrapolation to a new design vs interpolation

   e. “Close the loop”: how well did we predict the variation that we see in the field?

7 Conclusion

Several aspects of managing uncertainty in engineering design have been discussed. Uncertainty, risk, and decision-making are closely related to each other and also to the process of design and development of new products. By classifying uncertainty into different types (randomness, incompleteness, ineptitude), one can begin to define effective strategies for managing uncertainty. These strategies should be integrated with a systems engineering approach and should ultimately define a hierarchy of models that includes functional models, static and dynamic simulation models, and data, all of which are verified to accurately represent the (sub)systems in question and validated against the original design requirements. This network of models forms the foundation of a framework for managing uncertainty that can be exploited to develop an understanding of how the many forms of variation (randomness) impact design performance.

There are many challenges to making this vision a reality. UQ studies can be costly, both in terms of generating models that are fit for purpose and in evaluating those models to understand how randomness is propagated through them. It can be difficult to develop well-aligned hierarchies of models, and it can also be difficult to understand how the results of a probabilistic simulation study can be used upstream to aid the design decision-making process.

Despite these challenges, the main argument remains that managing risk and uncertainty in engineering design can be achieved using a well-organized, well-structured approach. This can help to minimize risk and create an understanding of uncertainty which can then be used to inform designers on how to make products more robust to variation and more resilient to change.

References

[1] Snowden, D.J., Boone, M.E.: A leader’s framework for decision making. Harv. Bus. Rev. 85(11), 68–76 (2007)

[2] Hartmann, S.: The world as a process: simulations in the natural and social sciences. In: Hegselmann, R., et al. (eds.) Modelling and Simulation in the Social Sciences from the Philosophy of Science Point of View, Theory and Decision Library, pp. 77–100. Kluwer, Dordrecht (1996)

[3] Sacks, J., Welch, W.J., Mitchell, T.J., Wynn, H.P.: Design and analysis of computer experiments. Stat. Sci. 4(4), 409–423 (1989)

[4] Sargent, R.G.: Validation of simulation models. In: Highland, H.J., Spiegel, M.F., Shannon, R.E. (eds.) Proceedings of the 1979 Winter Simulation Conference, pp. 497–503. IEEE, Piscataway (1979)

[5] Stephens, E.M., Edwards, T.L., Demeritt, D.: Communicating probabilistic information from climate model ensembles—lessons from numerical weather prediction. WIREs Clim. Change 3, 409–426 (2012). doi:10.1002/wcc.187

Copyright information

© 2017 Springer International Publishing Switzerland

About this entry

Cite this entry

Bates, R. (2017). Robust Design and Uncertainty Quantification for Managing Risks in Engineering. In: Ghanem, R., Higdon, D., Owhadi, H. (eds) Handbook of Uncertainty Quantification. Springer, Cham. https://doi.org/10.1007/978-3-319-12385-1_45

DOI: https://doi.org/10.1007/978-3-319-12385-1_45

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-12384-4

Online ISBN: 978-3-319-12385-1

eBook Packages: Mathematics and Statistics, Reference Module Computer Science and Engineering