Abstract

The aim of this paper is to review methods of designing screening experiments, ranging from designs originally developed for physical experiments to those especially tailored to experiments on numerical models. The strengths and weaknesses of the various designs for screening variables in numerical models are discussed. First, classes of factorial designs for experiments to estimate main effects and interactions through a linear statistical model are described, specifically regular and nonregular fractional factorial designs, supersaturated designs, and systematic fractional replicate designs. Generic issues of aliasing, bias, and cancellation of factorial effects are discussed. Second, group screening experiments are considered including factorial group screening and sequential bifurcation. Third, random sampling plans are addressed including Latin hypercube sampling and sampling plans to estimate elementary effects. Fourth, a variety of modeling methods commonly employed with screening designs are briefly described. Finally, a novel study demonstrates six screening methods on two frequently used exemplars, and their performances are compared.

Keywords

- Computer experiments

- fractional factorial designs

- Gaussian process models

- group screening

- space-filling designs

- supersaturated designs

- variable selection

1 Introduction

Screening [32] is the process of discovering, through statistical design of experiments and modeling, those controllable factors or input variables that have a substantive impact on the response or output which is either calculated from a numerical model or observed from a physical process.

Knowledge of these active input variables is key to optimization and control of the numerical model or process. In many areas of science and industry, there are often a large number of potentially important variables. Effective screening experiments are then needed to identify the active variables as economically as possible. This may be achieved through careful choice of experiment size and the set of combinations of input variable values (the design) to be run in the experiment. Each run determines an evaluation of the numerical model or an observation to be made on the physical process. The variables found to be active from the experiment are further investigated in one or more follow-up experiments that enable estimation of a detailed predictive statistical model of the output variable.

The need to screen a large number of input variables in a relatively small experiment presents challenges for both design and modeling. Crucial to success is the principle of factor sparsity [16] which states that only a small proportion of the input variables have a substantive influence on the output. If this widely observed principle does not hold, then a small screening experiment may fail to reliably detect the active variables, and a much larger investigation will be required.

While most literature has focused on designs for physical experiments, screening is also important in the study of numerical models via computer experiments [97]. Such models often describe complex input-output relationships and have numerous input variables. A primary reason for building a numerical model is to gain better understanding of the nature of these relationships, especially the identification of the active input variables. If a small set of active variables can be identified, then the computational costs of subsequent exploration and exploitation of the numerical model are reduced. Construction of a surrogate model from the active variables requires less experimentation, and smaller Monte Carlo samples may suffice for uncertainty analysis and uncertainty propagation.

The effectiveness of screening can be evaluated in a variety of ways. Suppose there are d input variables held in vector \(\mathbf{x} = (x_{1},\ldots,x_{d})^{\mathrm{T}}\) and that \(\mathcal{X} \subset \mathbb{R}^{d}\) contains all possible values of x, i.e., all possible combinations of input variable values. Let \(A_{T} \subseteq \{1,\ldots,d\}\) be the set of indices of the truly active variables and \(A_{S} \subseteq \{1,\ldots,d\}\) consist of the indices of those variables selected as active through screening. Then, the following measures may be defined: (i) sensitivity, \(\phi_{s} = \vert A_{S} \cap A_{T}\vert/\vert A_{T}\vert\), the proportion of active variables that are successfully detected, where \(\phi_{s}\) is defined as 1 when \(A_{T} = \emptyset\); (ii) false discovery rate [8], \(\phi _{\mathrm{fdr}} = \vert A_{S} \cap \bar{ A}_{T}\vert /\vert A_{S}\vert\), where \(\bar{A}_{T}\) is the complement of \(A_{T}\), the proportion of variables selected as active that are actually inactive, and \(\phi_{\mathrm{fdr}}\) is defined as 0 when \(A_{S} = \emptyset\); and (iii) type I error rate, \(\phi _{\mathrm{I}} = \vert A_{S} \cap \bar{ A}_{T}\vert /\vert \bar{A}_{T}\vert\), the proportion of inactive variables that are selected as active. In practice, high sensitivity is often considered more important than a low type I error rate or false discovery rate [34] because failure to detect an active input variable results in no further investigation of the variable and no exploitation of its effect on the output for purposes of optimization and control.
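The three measures can be computed directly from the index sets. The sketch below is a minimal illustration with hypothetical index sets; the value returned for \(\phi_{\mathrm{I}}\) when every variable is truly active is an assumption, since the text does not define that case.

```python
def screening_metrics(selected, truly_active, d):
    """Sensitivity, false discovery rate and type I error rate for one screening outcome.

    selected (A_S) and truly_active (A_T) are collections of indices in {1, ..., d}.
    """
    A_S, A_T = set(selected), set(truly_active)
    inactive = set(range(1, d + 1)) - A_T                     # complement of A_T
    phi_s = len(A_S & A_T) / len(A_T) if A_T else 1.0         # defined as 1 when A_T is empty
    phi_fdr = len(A_S & inactive) / len(A_S) if A_S else 0.0  # defined as 0 when A_S is empty
    # The text leaves phi_I undefined when every variable is active; 0.0 is an assumption.
    phi_I = len(A_S & inactive) / len(inactive) if inactive else 0.0
    return phi_s, phi_fdr, phi_I


# Hypothetical outcome: d = 10, truly active {1, 2, 3}, screening selects {1, 2, 7}.
print(screening_metrics({1, 2, 7}, {1, 2, 3}, d=10))  # approximately (0.667, 0.333, 0.143)
```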

The majority of designs for screening experiments are tailored to the identification and estimation of a surrogate model that approximates an output variable Y(x). A class of surrogate models which has been successfully applied in a variety of fields [80] has the form

\[ Y(\mathbf{x}) = \mathbf{h}^{\mathrm{T}}(\mathbf{x})\boldsymbol{\beta} + \varepsilon(\mathbf{x}), \qquad (33.1) \]

where h is a p × 1 vector of known functions of x, \(\boldsymbol{\beta }= (\beta _{0},\ldots,\beta _{p-1})^{\mathrm{T}}\) are unknown parameters, and ɛ(x) is a random variable with a \(N(0,\sigma^{2})\) distribution for constant \(\sigma^{2}\). Note that if multiple responses are obtained from each run of the experiment, then the simplest and most common approach is separate screening of the variables for each response using individual models of the form (33.1).

An important decision in planning a screening experiment is the level of fidelity, or accuracy, required of a surrogate model for effective screening, including the choice of the elements of h in (33.1). Two forms of (33.1) are commonly used for screening variables in numerical models: linear regression models and Gaussian process models.

1.1 Linear Regression Models

Linear regression models assume that \(\varepsilon(\mathbf{x}_{0})\) and \(\varepsilon(\mathbf{x}_{0}^{\prime})\), \(\mathbf{x}_{0}\neq \mathbf{x}_{0}^{{\prime}} \in \mathcal{X}\), are independent random variables. Estimation of detailed mean functions \(\mathbf{h}^{\mathrm{T}}(\mathbf{x})\boldsymbol{\beta }\) with a large number of terms requires large experiments which can be prohibitively expensive. Hence, many popular screening strategies investigate each input variable \(x_{i}\) at two levels, often coded +1 and −1 and referred to as “high” and “low,” respectively [15, chs. 6 and 7]. Interest is then in identifying those variables that have a large main effect, defined for variable \(x_{i}\) as the difference between the average expected response for the \(2^{d-1}\) combinations of variable values with \(x_{i} = +1\) and the average for the \(2^{d-1}\) combinations with \(x_{i} = -1\). Main effects may be estimated via a first-order surrogate model

\[ Y(\mathbf{x}) = \beta_{0} + \sum_{i=1}^{d}\beta_{i}x_{i} + \varepsilon(\mathbf{x}), \qquad (33.2) \]

where p = d + 1. Such a “main effects screening” strategy relies on a firm belief in strong effect heredity [46], that is, important interactions or other nonlinearities involve only those input variables that have large main effects. Without this property, active variables may be overlooked.

There is evidence, particularly from industrial experiments [18, 99], that strong effect heredity may fail to hold in practice. This has led to the recent development and assessment of design and data analysis methodology that also allows screening of interactions between pairs of variables [34, 57]. For two-level variables, the interaction between \(x_{i}\) and \(x_{j}\) (i, j = 1, …, d; i ≠ j) is defined as one-half of the difference between the conditional main effect of \(x_{i}\) given \(x_{j} = +1\) and the conditional main effect of \(x_{i}\) given \(x_{j} = -1\). Main effects and two-variable interactions can be estimated via a first-order surrogate model supplemented by two-variable product terms

\[ Y(\mathbf{x}) = \beta_{0} + \sum_{i=1}^{d}\beta_{i}x_{i} + \sum_{i=1}^{d-1}\sum_{j=i+1}^{d}\beta_{ij}x_{i}x_{j} + \varepsilon(\mathbf{x}), \qquad (33.3) \]

where \(p = 1 + d(d+1)/2\) and \(\beta_{d+1},\ldots,\beta_{p-1}\) in (33.1) are relabeled \(\beta_{12},\ldots,\beta_{(d-1)d}\) for notational clarity.

The main effects and interactions are collectively known as the factorial effects and can be shown to equal the corresponding elements of \(2\boldsymbol{\beta }\) (all elements except \(2\beta_{0}\)). The screening problem may be cast as variable or model selection, that is, choosing a statistical model composed of a subset of the terms in (33.3).

The parameters in \(\boldsymbol{\beta }\) can be estimated by least squares. Let \(x_{i}^{(j)}\) be the value taken by the ith variable in the jth run (i = 1, …, d; j = 1, …, n). Then, the rows of the n × d design matrix \(\mathbf{X}^{n} = \left (x_{1}^{(j)},\ldots,x_{d}^{(j)}\right )_{j=1,\ldots,n}\) each hold one run of the design. Let \(\mathbf{Y}^{n} = (Y^{(1)},\ldots,Y^{(n)})^{\mathrm{T}}\) be the output vector. Then, the least squares estimator of \(\boldsymbol{\beta }\) is

\[ \hat{\boldsymbol{\beta}} = \left(\mathbf{H}^{\mathrm{T}}\mathbf{H}\right)^{-1}\mathbf{H}^{\mathrm{T}}\mathbf{Y}^{n}, \qquad (33.4) \]

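where \(\mathbf{H} = (\mathbf{h}(\mathbf{x}_{1}^{n}),\ldots,\mathbf{h}(\mathbf{x}_{n}^{n}))^{\mathrm{T}}\) is the n × p model matrix and \((\mathbf{x}_{j}^{n})^{\mathrm{T}}\) is the jth row of \(\mathbf{X}^{n}\). For the least squares estimators to be uniquely defined, H must be of full column rank.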
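The definitions of main effects and interactions, and their relation to \(2\boldsymbol{\beta}\), can be checked numerically. The following is a minimal sketch assuming NumPy and a hypothetical noise-free output function: it builds H for model (33.3) from a full \(2^{3}\) factorial, checks full column rank, computes (33.4), and compares twice the fitted coefficients with the main effect and interaction computed directly from their definitions.

```python
import itertools
import numpy as np


def model_matrix(X):
    """Model matrix H for model (33.3): intercept, main effects, two-variable products."""
    d = X.shape[1]
    cols = [np.ones(len(X))] + [X[:, i] for i in range(d)]
    cols += [X[:, i] * X[:, j] for i, j in itertools.combinations(range(d), 2)]
    return np.column_stack(cols)


def main_effect(y, x):
    """Mean response at x = +1 minus mean response at x = -1."""
    return y[x == 1].mean() - y[x == -1].mean()


# Full 2^3 factorial in coded units (one run per row) and a hypothetical noise-free output.
X = np.array(list(itertools.product([-1, 1], repeat=3)))
y = 5 + 3 * X[:, 0] - 2 * X[:, 1] + 1.5 * X[:, 0] * X[:, 2]

H = model_matrix(X)                             # 8 x 7; 0-based columns 4, 5, 6 are x1x2, x1x3, x2x3
assert np.linalg.matrix_rank(H) == H.shape[1]   # full column rank, so (33.4) is unique
beta_hat = np.linalg.solve(H.T @ H, H.T @ y)    # least squares estimator (33.4)

me1 = main_effect(y, X[:, 0])
# Interaction x1 x3: half the difference of the conditional main effects of x1 at x3 = +1 and -1.
hi, lo = X[:, 2] == 1, X[:, 2] == -1
int13 = 0.5 * (main_effect(y[hi], X[hi, 0]) - main_effect(y[lo], X[lo, 0]))
print(me1, 2 * beta_hat[1])    # both equal 6
print(int13, 2 * beta_hat[5])  # both equal 3
```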

In physical screening experiments, often no attempt is made to estimate nonlinear effects other than two-variable interactions. This practice is underpinned by the principle of effect hierarchy [112] which states that low-order factorial effects, such as main effects and two-variable interactions, are more likely to be important than higher-order effects. This principle is supported by substantial empirical evidence from physical experiments.

However, the exclusion of higher-order terms from surrogate model (33.1) can result in biased estimators (33.4). Understanding, and minimizing, this bias is key to effective linear model screening. Suppose that a more appropriate surrogate model is

\[ Y(\mathbf{x}) = \mathbf{h}^{\mathrm{T}}(\mathbf{x})\boldsymbol{\beta} + \tilde{\mathbf{h}}^{\mathrm{T}}(\mathbf{x})\tilde{\boldsymbol{\beta}} + \varepsilon(\mathbf{x}), \]

where \(\tilde{\mathbf{h}}(\mathbf{x})\) is a \(\tilde{p}\)-vector of model terms, additional to those held in h(x), and \(\tilde{\boldsymbol{\beta }}\) is a \(\tilde{p}\)-vector of constants. Then, the expected value of \(\hat{\boldsymbol{\beta }}\) is given by

\[ \mathrm{E}(\hat{\boldsymbol{\beta}}) = \boldsymbol{\beta} + \mathbf{A}\tilde{\boldsymbol{\beta}}, \]

where

\[ \mathbf{A} = \left(\mathbf{H}^{\mathrm{T}}\mathbf{H}\right)^{-1}\mathbf{H}^{\mathrm{T}}\tilde{\mathbf{H}} \]

and \(\tilde{\mathbf{H}} = (\tilde{\mathbf{h}}(\mathbf{x}_{1}^{n}),\ldots,\tilde{\mathbf{h}}(\mathbf{x}_{n}^{n}))^{\mathrm{T}}\). The alias matrix A determines the pattern of bias in \(\hat{\boldsymbol{\beta }}\) due to omitting the terms \(\tilde{\mathbf{h}}^{\mathrm{T}}(\mathbf{x})\tilde{\boldsymbol{\beta }}\) from the surrogate model and can be controlled through the choice of design. The size of the bias is determined by \(\tilde{\boldsymbol{\beta }}\), which is outside the experimenter’s control.
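To see the alias matrix at work, the sketch below (NumPy assumed; the design and coefficient values are hypothetical) fits a first-order model to the half fraction of a \(2^{3}\) design with \(x_3 = x_1x_2\), computes \(\mathbf{A} = (\mathbf{H}^{\mathrm{T}}\mathbf{H})^{-1}\mathbf{H}^{\mathrm{T}}\tilde{\mathbf{H}}\) for the omitted two-variable interactions, and confirms that, with a noise-free response, \(\hat{\boldsymbol{\beta}} = \boldsymbol{\beta} + \mathbf{A}\tilde{\boldsymbol{\beta}}\).

```python
import itertools
import numpy as np

# Half fraction of the 2^3 design with x3 set equal to x1 x2 (so x1 x2 x3 = +1 in every run).
base = np.array(list(itertools.product([-1, 1], repeat=2)))
X = np.column_stack([base, base[:, 0] * base[:, 1]])

H = np.column_stack([np.ones(4), X])                          # fitted: first-order model
Ht = np.column_stack([X[:, 0] * X[:, 1], X[:, 0] * X[:, 2],   # omitted: x1x2, x1x3, x2x3
                      X[:, 1] * X[:, 2]])


def alias_matrix(H, Ht):
    """A = (H^T H)^{-1} H^T H~ ."""
    return np.linalg.solve(H.T @ H, H.T @ Ht)


A = alias_matrix(H, Ht)
print(A)   # rows: beta_0, beta_1, beta_2, beta_3; columns: beta_12, beta_13, beta_23

# Hypothetical coefficients, including an active x1 x2 interaction that the model omits.
beta = np.array([1.0, 2.0, 0.0, 0.5])
beta_t = np.array([0.8, 0.0, 0.0])
y = H @ beta + Ht @ beta_t                     # noise-free response
beta_hat = np.linalg.solve(H.T @ H, H.T @ y)
print(beta_hat)   # beta_3 is inflated from 0.5 to 1.3 by the aliased x1 x2 interaction
```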

1.2 Gaussian Process Models

Gaussian process (GP) models are used when it is anticipated that understanding more complex relationships between the input and output variables is necessary for screening. Under a GP model, it is assumed that \(\varepsilon(\mathbf{x}_{0})\) and \(\varepsilon(\mathbf{x}_{0}^{\prime})\) follow a bivariate normal distribution with correlation dependent on a distance metric applied to \(\mathbf{x}_{0}\) and \(\mathbf{x}_{0}^{\prime}\); see [93] and the chapter “Metamodel-based sensitivity analysis: polynomial chaos and Gaussian process.”

Screening with a GP model requires interrogation of the parameters that control this correlation. A common correlation function employed for GP screening has the form

Conditional on \(\theta_{1},\ldots,\theta_{d}\), closed-form maximum likelihood or generalized least squares estimators for \(\boldsymbol{\beta }\) and \(\sigma^{2}\) are available. However, the \(\theta_{i}\) require numerical estimation. Reliable estimation of these more sophisticated and flexible surrogate models for a large number of variables requires larger experiments and may incur an impractically large number of evaluations of the numerical model.
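The conditional closed-form step can be sketched as follows. This is an illustration under stated assumptions, not the chapter's method: it assumes a product power-exponential correlation with exponent 2 (the Gaussian form), a constant mean \(h(\mathbf{x}) = 1\), and a small nugget added for numerical stability. Given \(\theta\), it returns the generalized least squares estimate of \(\beta_0\), the maximum likelihood estimate of \(\sigma^{2}\), and the profile negative log-likelihood (up to a constant) that would be minimized numerically over \(\theta\).

```python
import numpy as np


def corr_matrix(X, theta, alpha=2.0):
    """R[j, k] = prod_i exp(-theta_i |x_ji - x_ki|**alpha); alpha = 2 (Gaussian) is assumed."""
    dist = np.abs(X[:, None, :] - X[None, :, :]) ** alpha    # shape (n, n, d)
    return np.exp(-(dist * theta).sum(axis=2))


def profile_fit(X, y, theta, nugget=1e-8):
    """Given theta: closed-form GLS estimate of a constant mean, ML estimate of sigma^2,
    and the profile negative log-likelihood (constant terms dropped)."""
    n = len(y)
    R = corr_matrix(X, theta) + nugget * np.eye(n)    # nugget is an assumption, for stability
    ones = np.ones(n)                                 # constant mean h(x) = 1 (assumption)
    Ri_1, Ri_y = np.linalg.solve(R, ones), np.linalg.solve(R, y)
    beta0 = (ones @ Ri_y) / (ones @ Ri_1)
    resid = y - beta0
    sigma2 = resid @ np.linalg.solve(R, resid) / n
    _, logdet = np.linalg.slogdet(R)
    return beta0, sigma2, 0.5 * (n * np.log(sigma2) + logdet)


# Hypothetical output in which x3 is inert; compare the criterion at two values of theta.
rng = np.random.default_rng(0)
X = rng.uniform(size=(20, 3))
y = np.sin(2 * np.pi * X[:, 0]) + X[:, 1]
for theta in ([5.0, 1.0, 0.0], [5.0, 1.0, 5.0]):
    print(theta, profile_fit(X, y, np.array(theta))[2])
```

In practice \(\theta\) would be estimated by minimizing the returned criterion with a general-purpose optimizer (for example `scipy.optimize.minimize` over \(\log\theta\)). Setting \(\theta_i = 0\) makes the correlation independent of \(x_i\), which is why small estimated \(\theta_i\) are commonly read as a sign of an inactive variable.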

1.3 Screening without a Surrogate Model

The selection of active variables using a surrogate model relies on the model assumptions and their validation. An alternative model-free approach is the estimation of elementary effects [74]. The elementary effect for the ith input variable for a combination of input values \(\mathbf{x}_{0} \in \mathcal{X}\) is an approximation to the derivative of \(Y(\mathbf{x}_{0})\) in the direction of the ith variable. More formally,

\[ \mathrm{EE}_{i}(\mathbf{x}_{0}) = \frac{Y(\mathbf{x}_{0} + \varDelta\mathbf{e}_{id}) - Y(\mathbf{x}_{0})}{\varDelta}, \]

where \(\mathbf{e}_{id}\) is the ith unit vector of length d (the ith column of the d × d identity matrix) and \(\varDelta > 0\) is a given constant such that \(\mathbf{x}_{0} +\varDelta \mathbf{e}_{id} \in \mathcal{X}\). Repeated random draws of \(\mathbf{x}_{0}\) from \(\mathcal{X}\) according to a chosen distribution enable an empirical, model-free distribution for the elementary effect of the ith variable to be estimated. The moments (e.g., mean and variance) of this distribution may be used to identify active effects, as discussed later.
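A minimal sketch of this estimate follows, assuming \(\mathcal{X} = [0,1]^{d}\), base points drawn uniformly on \([0, 1-\varDelta]^{d}\) so that the step stays inside \(\mathcal{X}\), and a hypothetical test function. This is simple random one-at-a-time sampling, not the trajectory-based plan of [74]; the mean of the absolute elementary effects is shown alongside the mean and standard deviation as one commonly used summary.

```python
import numpy as np


def elementary_effects(f, d, r, delta, rng):
    """Return an (r, d) array of elementary effects at r random base points x0."""
    ee = np.empty((r, d))
    for j in range(r):
        x0 = rng.uniform(0.0, 1.0 - delta, size=d)   # keeps x0 + delta * e_i inside [0, 1]^d
        y0 = f(x0)
        for i in range(d):
            x_step = x0.copy()
            x_step[i] += delta                       # x0 + delta * e_i
            ee[j, i] = (f(x_step) - y0) / delta
    return ee


def f(x):
    """Hypothetical test function: linear in x1, quadratic in x2, x3 inert."""
    return 2.0 * x[0] + x[1] ** 2


ee = elementary_effects(f, d=3, r=50, delta=0.1, rng=np.random.default_rng(1))
mu, mu_abs, sd = ee.mean(axis=0), np.abs(ee).mean(axis=0), ee.std(axis=0, ddof=1)
print(mu_abs)  # 2 for x1, about 1 for x2, exactly 0 for x3
print(sd)      # 0 for x1 (linear), positive for x2 (nonlinear), 0 for x3
```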

In the remainder of the paper, a variety of screening methods are reviewed and discussed, starting with (regular and nonregular) factorial and fractional factorial designs in the next section. Later sections cover methods of screening groups of variables, such as factorial group screening and sequential bifurcation; random sampling plans and space-filling designs, including sampling plans for estimating elementary effects; and model selection methods. The paper finishes by comparing and contrasting the performance of six screening methods on two examples from the literature.

2 Factorial Screening Designs

In a full factorial design, each of the d input variables is assigned a fixed number of values or levels, and the design consists of one run of each of the distinct combinations of these values. Designs in which each variable has two values are mainly considered here, giving \(n = 2^{d}\) runs in the full factorial design. For even moderate values of d, experiments using such designs may be infeasibly large due to the costs or computing resources required. Further, such designs can be wasteful as they allow estimation of all interactions among the d variables, whereas effect hierarchy suggests that low-order factorial effects (main effects and two-variable interactions) will be the most important. These problems may be overcome by using a carefully chosen subset, or fraction, of the combinations of variable values in the full factorial design. Such fractional factorial designs have a long history of use in physical experiments [39] and, more recently, have also been used in the study of numerical models [36]. However, they bring the complication that the individual main effects and interactions cannot be estimated independently. Two classes of designs are discussed here.

2.1 Regular Fractional Factorial Designs

The most widely used two-level fractional factorial designs are \(1/2^{q}\) fractions of the \(2^{d}\) full factorial design, known as \(2^{d-q}\) designs [112, ch. 5] (1 ≤ q < d is integer). As the outputs from all the combinations of variable values are not available from the experiment, the individual main effects and interactions cannot be estimated. However, in a regular fractional factorial design, \(2^{d-q}\) linear combinations of the factorial effects can be estimated. Two factorial effects that occur in the same linear combination cannot be independently estimated and are said to be aliased. The designs are constructed by choosing which factorial effects should be aliased together.

The following example illustrates a full factorial design, the construction of a regular fractional factorial design, and the resulting aliasing among the factorial effects. Consider first a \(2^{3}\) factorial design in variables \(x_{1}, x_{2}, x_{3}\). Each run of this design is shown as a row across the columns 3–5 in Table 33.1. Thus, these three columns form the design matrix. The entries in these columns are the coefficients of the expected responses in the linear combinations that constitute the main effects, ignoring constants. Where interactions are involved, as in model (33.3), their corresponding coefficients are obtained as elementwise products of columns 3–5. Thus, columns 2–8 of Table 33.1 give the model matrix for model (33.3).

A \(2^{4-1}\) regular fractional factorial design in n = 8 runs may be constructed from the \(2^{3}\) design by assigning the fourth variable, \(x_{4}\), to one of the interaction columns. In Table 33.1, \(x_{4}\) is assigned to the column corresponding to the highest-order interaction, \(x_{1}x_{2}x_{3}\). Each of the eight runs now has the property that \(x_{1}x_{2}x_{3}x_{4} = +1\), and hence, as each variable can only take values ±1, it follows that \(x_{1} = x_{2}x_{3}x_{4}\), \(x_{2} = x_{1}x_{3}x_{4}\), and \(x_{3} = x_{1}x_{2}x_{4}\). Similarly, \(x_{1}x_{2} = x_{3}x_{4}\), \(x_{1}x_{3} = x_{2}x_{4}\), and \(x_{1}x_{4} = x_{2}x_{3}\). Two consequences are: (i) each main effect is aliased with a three-variable interaction and (ii) each two-variable interaction is aliased with another two-variable interaction. However, for each variable, the sum of the main effect and the three-variable interaction not involving that variable can be estimated. These two effects are said to be aliased. The other pairs of aliased effects are shown in Table 33.1. The four-variable interaction cannot be estimated and is said to be aliased with the mean, denoted by \(I = x_{1}x_{2}x_{3}x_{4}\) (column 2 of Table 33.1).
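The construction and the resulting aliasing can be verified in a few lines. This sketch assumes NumPy and takes the fitted terms in the order I, \(x_1\), \(x_2\), \(x_3\), \(x_1x_2\), \(x_1x_3\), \(x_2x_3\), \(x_4\) (an assumed reading of Table 33.1). The omitted terms are ordered so that the jth omitted term is the alias partner of the jth fitted term, which makes the alias matrix the identity; any other ordering, such as one giving an anti-diagonal matrix, only permutes its columns.

```python
import itertools
import numpy as np

# 2^3 factorial in x1, x2, x3, with x4 assigned to the x1 x2 x3 column (I = x1 x2 x3 x4).
base = np.array(list(itertools.product([-1, 1], repeat=3)))
x1, x2, x3 = base.T
x4 = x1 * x2 * x3

assert np.all(x1 * x2 * x3 * x4 == 1)        # the defining word is +1 in every run
assert np.array_equal(x1, x2 * x3 * x4)      # main effect aliased with a 3-variable interaction
assert np.array_equal(x1 * x2, x3 * x4)      # two-variable interactions aliased in pairs

# Fitted terms and their alias partners (the omitted terms), in matching order.
H = np.column_stack([np.ones(8), x1, x2, x3, x1 * x2, x1 * x3, x2 * x3, x4])
Ht = np.column_stack([x1 * x2 * x3 * x4, x2 * x3 * x4, x1 * x3 * x4, x1 * x2 * x4,
                      x3 * x4, x2 * x4, x1 * x4, x1 * x2 * x3])

print(np.array_equal(H.T @ H, 8 * np.eye(8)))   # True: mutually orthogonal columns
A = H.T @ Ht / 8                                # alias matrix for an orthogonal design
print(np.array_equal(A, np.eye(8)))             # True: each coefficient picks up one partner
```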

An estimable model for this \(2^{4-1}\) design is

with model matrix H given by columns 2–9 of Table 33.1. The columns of H are mutually orthogonal, that is, \(\mathbf{H}^{\mathrm{T}}\mathbf{H} = 8\mathbf{I}_{8}\). The aliasing in the design will result in a biased estimator of \(\boldsymbol{\beta }\). This can be seen by setting

which leads to the alias matrix \(\mathbf{A} = \sum_{j=1}^{8}\mathbf{e}_{j8}\mathbf{e}_{(8-j+1)8}^{\mathrm{T}}\), which is an anti-diagonal identity matrix, and

More generally, to construct a \(2^{d-q}\) fractional factorial design, a set \(\{v_{1},\ldots,v_{q}\}\) of defining words, such as \(x_{1}x_{2}x_{3}x_{4}\), must be chosen and the corresponding factorial effects aliased with the mean. That is, the product of variable values defined by each of these words is constant in the design (and equal to either −1 or +1). As the product of any two constant columns in the design must also be constant, there is a total of \(2^{q}-1\) effects aliased with the mean. The list of all effects aliased with the mean is called the defining relation and is written as \(I = v_{1} = \cdots = v_{q} = v_{1}v_{2} = \cdots = v_{1}\cdots v_{q}\). Products of the defining words are straightforward to calculate as \(x_{i}^{2} = 1\), so that \(v_{j}^{2} = 1\) (i = 1, …, d; j = 1, …, \(2^{d}\)).

The aliasing scheme for a design is easily obtained from the defining relation. A factorial effect with corresponding word \(v_{j}\) is aliased with each factorial effect corresponding to the words \(v_{j}v_{1}, v_{j}v_{2}, \ldots, v_{j}v_{1}\cdots v_{q}\) formed by the product of \(v_{j}\) with every word in the defining relation. Hence, the defining relation \(I = x_{1}x_{2}x_{3}x_{4}\) results in \(x_{1} = x_{2}x_{3}x_{4}\), \(x_{2} = x_{1}x_{3}x_{4}\), and so on; see Table 33.1.
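Because \(x_i^{2} = 1\), a product of words is simply the symmetric difference of their sets of variable indices, which makes the defining relation and alias chains easy to generate. A minimal sketch follows; signs of the words are ignored, and the \(2^{6-2}\) generators are a hypothetical example.

```python
from itertools import combinations


def defining_relation(generators):
    """All 2^q - 1 words generated by q independent defining words (signs ignored).
    Words are frozensets of variable indices; since x_i^2 = 1, a product of words
    is the symmetric difference of their index sets."""
    words = set()
    for k in range(1, len(generators) + 1):
        for combo in combinations(generators, k):
            word = frozenset()
            for g in combo:
                word = word ^ frozenset(g)
            words.add(word)
    return words


def aliases(effect, relation):
    """Effects aliased with `effect`: its product with every word in the defining relation."""
    return {frozenset(effect) ^ w for w in relation}


def name(word):
    return "".join(f"x{i}" for i in sorted(word)) or "I"


# Hypothetical 2^(6-2) design with I = x1x2x3x5 = x2x3x4x6.
rel = defining_relation([{1, 2, 3, 5}, {2, 3, 4, 6}])
print(sorted(name(w) for w in rel))                # ['x1x2x3x5', 'x1x4x5x6', 'x2x3x4x6']
print(sorted(name(w) for w in aliases({1}, rel)))  # ['x1x2x3x4x6', 'x2x3x5', 'x4x5x6']
```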

As demonstrated above, the impact of aliasing is bias in the estimators of the regression coefficients in (33.1) which can be formulated through the alias matrix. For a regular fractional factorial design, the columns of H are mutually orthogonal and hence \(\mathbf{A} = \frac{1} {n}\mathbf{H}^{\mathrm{T}}\tilde{\mathbf{H}}\). If the functions in \(\tilde{\mathbf{h}}\) correspond to those high-order interactions not included in h, then the elements of A are all either 0 or ± 1. This is because the aliasing of factorial effects ensures that each column of \(\tilde{\mathbf{H}}\) is either orthogonal to all columns in H or identical to a column of H up to a change of sign. Thus, A identifies the aliasing among the factorial effects.

Crucial to fractional factorial design is the choice of a defining relation to ensure that effects of interest are not aliased together. Typically, this involves choosing defining words to ensure that only words corresponding to higher-order factorial effects are included in the defining relation.

Regular fractional factorial designs are classed according to their resolution. A resolution III design has no main effects aliased with each other but at least one main effect aliased with a two-variable interaction. A resolution IV design has no main effects aliased with other main effects or two-variable interactions but at least one pair of two-variable interactions aliased together. A resolution V design has no main effects or two-variable interactions aliased with any other main effects or two-variable interactions. A more detailed and informative classification of regular fractional factorial designs is obtained via the aberration criterion [25].
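Equivalently, the resolution of a regular design is the length of the shortest word in its defining relation. The sketch below computes it by representing words as index sets (products as symmetric differences); the three generator sets are illustrative examples.

```python
from functools import reduce
from itertools import combinations


def resolution(generators):
    """Length of the shortest word in the defining relation generated by `generators`.
    Words are sets of variable indices; a product of words is their symmetric difference."""
    words = [reduce(lambda a, b: a ^ b, combo)
             for k in range(1, len(generators) + 1)
             for combo in combinations(generators, k)]
    return min(len(w) for w in words)


print(resolution([{1, 2, 3, 4}]))          # 4: the 2^(4-1) design of Table 33.1 (IV)
print(resolution([{1, 2, 4}, {1, 3, 5}]))  # 3: 2^(5-2) with x4 = x1 x2, x5 = x1 x3 (III)
print(resolution([{1, 2, 3, 4, 5}]))       # 5: 2^(5-1) with I = x1 x2 x3 x4 x5 (V)
```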

Although resolution V designs allow for the estimation of higher-fidelity surrogate models, they typically require too many runs for screening studies. The most common regular fractional factorial designs used in screening are resolution III designs as part of a main-effects screening strategy. The design in Table 33.1 has resolution IV.

2.2 Nonregular Fractional Factorial Designs

The regular designs discussed above require n to be a power of two, which limits their application to some experiments. Further, even resolution III regular fractional factorials may require too many runs to be feasible for large numbers of variables. For example, with 11 variables, a resolution III regular fractional design requires n = 16 runs. Smaller experiments with n not equal to a power of two can often be performed by using the wider class of nonregular fractional factorial designs [115] that cannot be constructed via a set of defining words. For 11 variables, a design with n = 12 runs can be constructed that can estimate all 11 main effects independently of each other. While these designs are more flexible in their run size, the cost is a more complex aliasing scheme that makes interpretation of experimental results more challenging and requires the use of more sophisticated modeling methods.

Many nonregular designs are constructed via orthogonal arrays [92]. A symmetric orthogonal array of strength t, denoted by \(\mathrm{OA}(n, s^{d}, t)\), is an n × d matrix of s different symbols such that all ordered t-tuples of the symbols occur equally often as rows of any n × t submatrix of the array. Each such array defines an n-run factorial design in d variables, each having s levels. Here, only arrays with s = 2 symbols, ±1, will be discussed. The strength of the array is closely related to the resolution of the design. An array of strength t = 2 allows estimation of all main effects independently of each other but not of the two-variable interactions (cf. resolution III); a strength 3 array allows estimation of main effects independently of two-variable interactions (cf. resolution IV). Clearly, the two-level regular fractional factorial designs are all orthogonal arrays. However, the class of orthogonal arrays is wider and includes many other designs that cannot be obtained via a defining relation.
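The definition of strength translates directly into a check over all n × t submatrices. A minimal sketch, assuming NumPy and two levels ±1, applied to the \(2^{4-1}\) design with \(I = x_1x_2x_3x_4\), which is an \(\mathrm{OA}(8, 2^{4}, 3)\):

```python
import itertools
import numpy as np


def has_strength(D, t, levels=(-1, 1)):
    """True if every n x t submatrix of D contains each ordered t-tuple of levels equally often."""
    n, d = D.shape
    n_tuples = len(levels) ** t
    if n % n_tuples:
        return False
    for cols in itertools.combinations(range(d), t):
        rows = [tuple(int(v) for v in row) for row in D[:, list(cols)]]
        if any(rows.count(tup) != n // n_tuples for tup in itertools.product(levels, repeat=t)):
            return False
    return True


# The 2^(4-1) design with x4 = x1 x2 x3 has strength 3 but not 4.
base = np.array(list(itertools.product([-1, 1], repeat=3)))
D = np.column_stack([base, base.prod(axis=1)])
print([has_strength(D, t) for t in (1, 2, 3, 4)])   # [True, True, True, False]
```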

An important class of orthogonal arrays are constructed from Hadamard matrices [44]. A Hadamard matrix C of order n is an n × n matrix with entries ±1 such that \(\mathbf{C}^{\mathrm{T}}\mathbf{C} = n\mathbf{I}_{n}\), where \(\mathbf{I}_{n}\) is the n × n identity matrix. An \(\mathrm{OA}(n, 2^{n-1}, 2)\) is obtained by multiplying rows of C by −1 as necessary to make all entries in the first column equal to +1 and then removing the first column. Such a design can estimate the main effects of all d = n − 1 variables independently, assuming negligible interactions. This class of designs includes the regular fractional factorials (e.g., for n = 4, 8, 16, …) but also other designs with n a multiple of four but not a power of two (n = 12, 20, 24, …). These designs were first proposed by Plackett and Burman [84]. Table 33.2 gives the n = 12-run Plackett-Burman (PB) design, one of the most frequently used for screening.
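The Hadamard-to-array step can be sketched as below (NumPy assumed). Sylvester's doubling construction gives Hadamard matrices of order \(2^{k}\), which yield regular designs. For n = 12, the sketch uses the cyclic construction usually attributed to Plackett and Burman: 11 cyclic shifts of the generator row + + − + + + − − − + −, plus a row of −1s. Its row order may differ from Table 33.2, and the check confirms that adding back a column of +1s gives a Hadamard matrix.

```python
import numpy as np


def sylvester_hadamard(k):
    """Hadamard matrix of order 2**k by Sylvester's doubling construction."""
    C = np.array([[1]])
    for _ in range(k):
        C = np.block([[C, C], [C, -C]])
    return C


def oa_from_hadamard(C):
    """Multiply rows by -1 where needed so the first column is all +1, then drop it."""
    C = C * C[:, [0]]   # entries are +/-1, so each row is multiplied by its first entry
    return C[:, 1:]     # an OA(n, 2^(n-1), 2)


def plackett_burman_12():
    """12-run PB design: 11 cyclic shifts of the generator row, plus a row of -1s."""
    gen = np.array([1, 1, -1, 1, 1, 1, -1, -1, -1, 1, -1])
    return np.vstack([np.roll(gen, r) for r in range(11)] + [-np.ones(11, dtype=int)])


D8 = oa_from_hadamard(sylvester_hadamard(3))           # 8 runs, 7 variables (regular 2^(7-4))
D12 = plackett_burman_12()                             # 12 runs, 11 variables
C12 = np.column_stack([np.ones(12, dtype=int), D12])   # add back the first column
print(np.array_equal(C12.T @ C12, 12 * np.eye(12)))    # True: a Hadamard matrix of order 12
print(np.array_equal(oa_from_hadamard(C12), D12))      # True
```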

The price paid for the greater economy of run size offered by nonregular designs is more complex aliasing. Although designs formed from orthogonal arrays, including PB designs, allow estimation of each main effect independently of all other main effects, these estimators will usually be partially aliased with many two-variable interactions. That is, the alias matrix A will contain many entries with 0 < | a ij | < 1. For example, consider the aliasing between main effects and two-variable interactions for the 12-run PB design in Table 33.2, as summarized in the 11 × 55 alias matrix. The main effect of each variable is partially aliased with all 45 interactions that do not include that variable. That is, for the ith variable,

where  is the indicator function for the set A and \(b_{ijk} = 0\) or 1 (i, j, k = 1, …, 11). For this design, each interaction is partially aliased with nine main effects. The competing 16-run resolution III \(2^{11-7}\) regular fraction has each main effect aliased with at most four two-variable interactions, and each interaction aliased only with at most one main effect. Hence, while an active interaction would bias only one main effect for the regular design, it would bias nine main effects for the PB design, albeit to a lesser extent.
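The partial aliasing of the 12-run PB design can be reproduced by computing the main-effect rows of the alias matrix against all 55 two-variable interactions. This sketch assumes NumPy and the cyclic PB construction (row order possibly different from Table 33.2). It checks that every nonzero entry is ±1/3, that each main effect has 45 nonzero entries, and that each interaction has 9.

```python
import itertools
import numpy as np

# 12-run PB design from its cyclic generator (row order may differ from Table 33.2).
gen = np.array([1, 1, -1, 1, 1, 1, -1, -1, -1, 1, -1])
X = np.vstack([np.roll(gen, r) for r in range(11)] + [-np.ones(11, dtype=int)])
n, d = X.shape

pairs = list(itertools.combinations(range(d), 2))               # the 55 two-variable interactions
H = np.column_stack([np.ones(n), X])                            # main-effects model (33.2)
Ht = np.column_stack([X[:, j] * X[:, k] for j, k in pairs])     # omitted interaction columns
A = np.linalg.solve(H.T @ H, H.T @ Ht)[1:, :]                   # 11 x 55 main-effect rows

nonzero = ~np.isclose(A, 0.0)
print(np.allclose(np.abs(A[nonzero]), 1 / 3))   # True: every nonzero entry is +/- 1/3
print(nonzero.sum(axis=1))                      # 45 interactions per main effect
print(nonzero.sum(axis=0))                      # 9 main effects per interaction
```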


However, an important advantage of partial aliasing is that it allows interactions to be considered through the use of variable selection methods (discussed later) without requiring a large increase in the number of runs. For example, the 12-run PB design has been used to identify important interactions [26, 46].

A wide range of nonregular designs can be constructed. An algorithm has been developed for constructing designs that allow orthogonal estimation of all main effects, together with catalogues of designs for n = 12, 16, 20 [101]. Other authors have used computer search and criteria based on model selection properties to find nonregular designs [58]. A common approach is to use criteria derived from D-optimality [4, ch. 11] to find fractional factorial designs for differing numbers of variables and runs [35]. Depending on the models under investigation and the number of runs available, designs from these methods may or may not allow independent estimation of the variable main effects.

Most screening experiments use designs at two levels, possibly with the addition of one or more center points to provide a portmanteau test for curvature. Recently, an economical class of three-level screening designs has been proposed, called “definitive screening designs” (DSDs) [53], to investigate d variables, generally in as few as n = 2d + 1 runs. The structure of the designs is illustrated in Table 33.3 for d = 6. The design has a single center point and 2d runs formed from d mirrored pairs. The jth pair has the jth variable set to zero and the other d − 1 variables set to ±1. The second run in the pair is formed by multiplying all the elements in the first run by −1. That is, the 2d runs form a foldover design [17]. This foldover property ensures that main effects and two-variable interactions are orthogonal and hence main effects are estimated independently from these interactions, unlike for resolution III or PB designs. Further, all quadratic effects are estimated independently of the main effects but not independently of the two-variable interactions. Finally, the two-variable interactions will be partially aliased with each other. These designs are growing in popularity, with a sizeable literature available on their construction [79, 82, 113].
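One known way to obtain the mirrored-pair structure is from a conference matrix C (zero diagonal, ±1 elsewhere, \(\mathbf{C}^{\mathrm{T}}\mathbf{C} = (d-1)\mathbf{I}_{d}\)): stack C, −C and a center run. The sketch below assumes this construction and uses Paley's conference matrix of order 6 (built from the prime q = 5), so it need not reproduce Table 33.3 exactly. It checks orthogonal main effects and that every sum of products of three columns vanishes. That property is what makes main effects orthogonal to both two-variable interactions and quadratic effects.

```python
import itertools
import numpy as np


def paley_conference_matrix(q):
    """Symmetric conference matrix of order q + 1 (Paley construction; q prime, q % 4 == 1)."""
    squares = {(k * k) % q for k in range(1, q)}

    def chi(a):                     # quadratic character of a modulo q
        a %= q
        return 0 if a == 0 else (1 if a in squares else -1)

    C = np.zeros((q + 1, q + 1), dtype=int)
    C[0, 1:] = 1
    C[1:, 0] = 1
    C[1:, 1:] = [[chi(j - i) for j in range(q)] for i in range(q)]
    return C


def definitive_screening_design(C):
    """Foldover pairs (C, -C) plus a single center run: 2d + 1 runs."""
    return np.vstack([C, -C, np.zeros((1, C.shape[0]), dtype=int)])


C = paley_conference_matrix(5)        # order 6, zero diagonal
D = definitive_screening_design(C)    # 13 runs, 6 three-level variables
print(np.array_equal(D.T @ D, 10 * np.eye(6)))   # True: main effects mutually orthogonal
print(all((D[:, i] * D[:, j] * D[:, k]).sum() == 0
          for i, j, k in itertools.combinations_with_replacement(range(6), 3)))   # True
```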

2.3 Supersaturated Designs for Main Effects Screening

For experiments with a large number of variables or runs that are very expensive or time consuming, supersaturated designs have been proposed as a low-resource (small n) solution to the screening problem [42]. Originally, supersaturated designs were defined as having too few runs to estimate the intercept and the d main effects in model (33.2), that is, n < d + 1. The resulting partial aliasing is more complicated than for the designs discussed so far, in that at least one main effect estimator is biased by one or more other main effects. Consequently, there has been some controversy about the use of these designs [1]. Recently, evidence has been provided for the effectiveness of the designs when factor sparsity holds and the active main effects are large [34, 67]. Applications of supersaturated designs include screening variables in numerical models for circuit design [65], extraterrestrial atmospheric science [27], and simulation models for maritime terrorism [114].

Supersaturated designs were first proposed in the discussion [14] of random balance designs [98]. The first systematic construction method [11] found designs via computer search that have pairs of columns of the design matrix X n as nearly orthogonal as possible through use of the \(\mathbb{E}(s^{2})\) design selection criterion (defined below). There was no further research in the area for more than 30 years until Lin [61] and Wu [111] independently revived interest in the construction of these designs. Both their methods are based on Hadamard matrices and can be understood, respectively, as (i) selecting a half-fraction from a Hadamard matrix (Lin) and (ii) appending one or more interaction columns to a Hadamard matrix and assigning a new variable to each of these columns (Wu).

Both methods can be illustrated using the n = 12-run PB design in Table 33.2. To construct a supersaturated design for d = 10 variables in n = 6 runs by method (i), all six runs of the PB design with \(x_{11} = -1\) are removed, followed by deletion of the \(x_{11}\) column. The resulting design is shown in Table 33.4. To obtain a design by method (ii) for d = 21 variables in n = 12 runs, ten columns are appended that correspond to the interactions of \(x_{1}\) with variables \(x_{2}\) to \(x_{11}\), and variables \(x_{12}\) to \(x_{21}\) are assigned to these columns; see Table 33.5.
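Both constructions take only a few array operations. The sketch assumes NumPy and the cyclic 12-run PB construction (its row order may differ from Table 33.2, so the resulting designs may differ from Tables 33.4 and 33.5 by row order). The branching column for method (i) is taken to be the last column, \(x_{11}\), and method (ii) uses \(x_1\), as in the text.

```python
import numpy as np


def plackett_burman_12():
    """12-run PB design: 11 cyclic shifts of a generator row plus a row of -1s."""
    gen = np.array([1, 1, -1, 1, 1, 1, -1, -1, -1, 1, -1])
    return np.vstack([np.roll(gen, r) for r in range(11)] + [-np.ones(11, dtype=int)])


def lin_half_fraction(D, branch=-1):
    """Method (i): keep the runs with the branching column equal to +1, then delete it."""
    return np.delete(D[D[:, branch] == 1], branch, axis=1)


def wu_append_interactions(D, base=0):
    """Method (ii): append the interactions of column `base` with every other column."""
    others = [j for j in range(D.shape[1]) if j != base]
    return np.column_stack([D] + [D[:, base] * D[:, j] for j in others])


PB = plackett_burman_12()
S6 = lin_half_fraction(PB)         # 6 runs, 10 variables (cf. Table 33.4)
S21 = wu_append_interactions(PB)   # 12 runs, 21 variables (cf. Table 33.5)
print(S6.shape, S21.shape)         # (6, 10) (12, 21)
```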

Since 1993, there has been a substantial research effort on construction methods for supersaturated designs; see, for example, [62, 77, 78]. The most commonly used criterion for design selection in the literature is \(\mathbb{E}(s^{2})\)-optimality [11]. More recently, the Bayesian D-optimality criterion [35, 51] has become popular.

2.3.1 \(\mathbb{E}(s^{2})\)-Optimality

This criterion selects a balanced design, that is, a design with n = 2m for some integer m > 0 where each column of \(\mathbf{X}^{n}\) contains m entries equal to −1 and m entries equal to +1. The \(\mathbb{E}(s^{2})\)-optimal design minimizes the average of the squared inner products between columns i and j of \(\mathbf{X}^{n}\) (i, j = 1, …, d; i ≠ j),

\[ \mathbb{E}(s^{2}) = \frac{2}{d(d-1)}\sum_{i=1}^{d-1}\sum_{j=i+1}^{d} s_{ij}^{2}, \]

where \(s_{ij}\) is the ijth element of \(\left (\mathbf{X}^{n}\right )^{\mathrm{T}}\mathbf{X}^{n}\) (i, j = 1, …, d). A lower bound on \(\mathbb{E}(s^{2})\) is available [19, 94]. The designs in Tables 33.4 and 33.5 achieve the lower bound and hence are \(\mathbb{E}(s^{2})\)-optimal. For the design in Table 33.4, each \(s_{ij}^{2} = 4\). For the design in Table 33.5, \(\mathbb{E}(s^{2}) = 6.857\) (to 3 dp), with 120 pairs of columns being orthogonal (\(s_{ij}^{2} = 0\)) and the remaining 90 pairs of columns having \(s_{ij}^{2} = 16\). Recently, the definition of \(\mathbb{E}(s^{2})\) has been extended to unbalanced designs [52, 67] by including the inner product between each column of \(\mathbf{X}^{n}\) and the vector \(\mathbf{1}_{n}\), the n × 1 vector with every entry 1, which corresponds to the intercept term in model (33.1). This extension widens the class of available designs.
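The quoted values can be recomputed from the two constructions. This sketch assumes NumPy and the cyclic 12-run PB design (row order possibly different from Table 33.2); \(\mathbb{E}(s^{2})\) is the mean of \(s_{ij}^{2}\) over the \(d(d-1)/2\) column pairs.

```python
import numpy as np


def e_s2(X):
    """E(s^2): mean of s_ij^2 over column pairs i < j, where s_ij is element ij of X^T X."""
    S = X.T @ X
    return float(np.mean(S[np.triu_indices(X.shape[1], k=1)] ** 2))


# 12-run PB design from its cyclic generator (row order may differ from Table 33.2).
gen = np.array([1, 1, -1, 1, 1, 1, -1, -1, -1, 1, -1])
PB = np.vstack([np.roll(gen, r) for r in range(11)] + [-np.ones(11, dtype=int)])

lin = PB[PB[:, -1] == 1, :-1]                                           # method (i): 6 x 10
wu = np.column_stack([PB] + [PB[:, 0] * PB[:, j] for j in range(1, 11)])  # method (ii): 12 x 21
print(e_s2(lin), round(e_s2(wu), 3))   # 4.0 6.857
```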

2.3.2 Bayesian D-Optimality

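This criterion selects a design that minimizes the determinant of the posterior variance-covariance matrix for \((\beta _{0},\boldsymbol{\beta }^{\mathrm{T}})^{\mathrm{T}}\) or, equivalently, maximizes

\[ \left\vert (\mathbf{H}^{\star})^{\mathrm{T}}\mathbf{H}^{\star} + \mathbf{K}/\tau^{2} \right\vert, \qquad (33.10) \]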

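where \(\mathbf{H}^{\star} = [\mathbf{1}_{n} \mid \mathbf{X}^{n}]\), \(\mathbf{K} = \mathbf{I}_{d+1} - \mathbf{e}_{1(d+1)}\mathbf{e}_{1(d+1)}^{\mathrm{T}}\), and \(\tau^{2} > 0\). The matrix \(\mathbf{K}/\tau^{2}\) acts as the prior precision for \((\beta_{0},\boldsymbol{\beta}^{\mathrm{T}})^{\mathrm{T}}\): up to the factor \(\sigma^{2}\), \(\tau^{2}\mathbf{I}_{d}\) is the prior variance-covariance matrix for \(\boldsymbol{\beta }\), while the zero first diagonal entry of the singular matrix K reflects the absence of prior information on \(\beta_{0}\). Equation (33.10) results from assuming an informative prior distribution for each \(\beta_{i}\) (i = 1, …, d) with mean zero and small prior variance, to reflect factor sparsity, and a non-informative prior distribution for \(\beta_{0}\). The prior information can be regarded as equivalent to having sufficient additional runs to allow estimation of all parameters \(\beta_{0},\ldots,\beta_{d}\), with the value of \(\tau^{2}\) reflecting the quantity of available prior information. However, the optimal designs obtained tend to be insensitive to the choice of \(\tau^{2}\) [67].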

Both \(\mathbb{E}(s^{2})\)- and Bayesian D-optimal designs may be found numerically, using algorithms such as columnwise-pairwise [59] or coordinate exchange [71]. Simulation studies have shown that there is little difference in the performance of \(\mathbb{E}(s^{2})\)- and Bayesian D-optimal designs, as assessed by, for example, sensitivity and type I error rate [67].
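To illustrate numerical search, the sketch below runs a simple random local search over balanced designs. Each move swaps a \(+1\) and a \(-1\) within one column, which keeps the column balanced, and the move is kept unless \(\mathbb{E}(s^{2})\) increases. This is a hypothetical simplification in the spirit of exchange algorithms, not the published columnwise-pairwise or coordinate-exchange algorithms, and it carries no guarantee of reaching the lower bound.

```python
import numpy as np


def e_s2(X):
    """Average of s_ij^2 over all column pairs i < j."""
    S = X.T @ X
    iu = np.triu_indices(X.shape[1], k=1)
    return float(np.mean(S[iu] ** 2))


def swap_search(n, d, n_iter=5000, seed=0):
    """Hypothetical local search over balanced n x d designs minimizing E(s^2)."""
    rng = np.random.default_rng(seed)
    half = np.repeat([1, -1], n // 2)
    X = np.column_stack([rng.permutation(half) for _ in range(d)])  # random balanced start
    start = best = e_s2(X)
    for _ in range(n_iter):
        j = rng.integers(d)
        a = rng.choice(np.flatnonzero(X[:, j] == 1))
        b = rng.choice(np.flatnonzero(X[:, j] == -1))
        X[[a, b], j] = X[[b, a], j]          # the swap preserves column balance
        value = e_s2(X)
        if value <= best:
            best = value                     # accept improving or sideways moves
        else:
            X[[a, b], j] = X[[b, a], j]      # undo the swap
    return X, start, best


X, start, best = swap_search(6, 10)
print(start, best)
```

Accepting sideways moves lets the search drift across plateaus, which are common because \(\mathbb{E}(s^{2})\) takes few distinct values for small \(n\).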

Supersaturated designs have also been constructed that allow the detection of two-variable interactions [64]. Here, the definition of supersaturated has been widened to include designs that have fewer runs than the total number of factorial effects to be investigated. In particular, Bayesian D-optimal designs have been shown to be effective in identifying active interactions [34]. Note that under this expanded definition of supersaturated designs, all fractional factorial designs are supersaturated under model (33.1) when n < p.

2.4 Common Issues with Factorial Screening Designs

The analysis of unreplicated factorial designs commonly used for screening experiments has been a topic of much research [45, 56, 105]. In a physical experiment, the lack of replication to provide a model-free estimate of σ 2 can make it difficult to assess the importance of individual factorial effects. The most commonly applied method for orthogonal designs treats this problem as analogous to the identification of outliers and makes use of (half-) normal plots of the factorial effects. For many nonregular and supersaturated designs, more advanced analysis methods are necessary; see later. For studies on numerical models, provided all the input variables are controlled, the problem of assessing statistical significance does not occur as no unusually large observations can have arisen due to “chance.” Here, factorial effects may be ranked by size and those variables whose effects lead to a substantive change in the response declared active.

Biased estimators of factorial effects, however, are an issue for experiments on both numerical models and physical processes. Complex (partial) aliasing can produce two types of bias in the estimated parameters in model (33.1): upward bias so that a type I error may occur (amalgamation) or downward bias leading to missing active variables (cancellation). Simulation studies have been used to assess these risks [31, 34, 67].

Bias may also, of course, be induced by assuming a form of the surrogate model that is too simple, for example, through the surrogate having too few turning points (e.g., being a polynomial of too low order) or lacking the detail to explain the local behavior of the numerical model. This kind of bias is potentially the primary source of mistakes in screening variables in numerical models. When prior scientific knowledge suggests that the numerical model is highly nonlinear, screening methods should be employed that have fewer restrictions on the surrogate model or are model-free. Such methods, including designs for the estimation of elementary effects (33.8), are described later in this paper. Typically, they require larger experiments than the designs in the present section.

2.5 Systematic Fractional Replicate Designs

Systematic fractional replicate designs [28] enable expressions to be estimated that indicate the influence of each variable on the output, through main effects and interactions, without assumptions in model (33.1) on the order of interactions that may be important. These designs have had considerable use for screening inputs to numerical models, especially in the medical and biological sciences [100, 116]. In these designs, each variable takes two levels and there are n = 2d + 2 runs.

The designs are simple to construct as (i) one run with all variables set to \(-1\), (ii) \(d\) runs with each variable in turn set to \(+1\) and the other variables set to \(-1\), (iii) \(d\) runs with each variable in turn set to \(-1\) and the other variables set to \(+1\), and (iv) one run with all variables set to \(+1\). Let the elements of vector \(\mathbf{Y}^{n}\) be such that \(Y^{(1)}\) is the output from the run in (i), \(Y^{(2)}, \ldots, Y^{(d+1)}\) are the outputs from the runs in (ii), \(Y^{(d+2)}, \ldots, Y^{(2d+1)}\) are from the runs in (iii), and \(Y^{(2d+2)}\) is from the run in (iv). In such a design, each main effect can be estimated independently of all two-variable interactions. This can easily be seen from the alternative construction as a foldover of a one-factor-at-a-time (OFAAT) design with \(n = d + 1\), that is, a design having one run with every variable set to \(-1\) and \(d\) runs with each variable in turn set to \(+1\) and all other variables set to \(-1\).

For each variable \(x_i\) (\(i = 1, \ldots, d\)), two linear combinations, \(S_o(i)\) and \(S_e(i)\), of “odd order” and “even order” model parameters, respectively, can be estimated:

and

with respective unbiased estimators

and

Under effect hierarchy, it may be anticipated that a large absolute value of \(C_o(i)\) is due to a large main effect for the \(i\)th variable, and a large absolute value of \(C_e(i)\) is due to large two-variable interactions. A design that also enables estimation of two-variable interactions independently of each other is obtained by appending \((d - 1)(d - 2)/2\) runs, each having two variables set to \(+1\) and \(d - 2\) variables set to \(-1\) [91].

For numerical models, where observations are not subject to random error, active variables are selected by ranking the sensitivity indices defined by

where \(M(i) = |C_o(i)| + |C_e(i)|\). This methodology is potentially sensitive to the cancellation or amalgamation of factorial effects, discussed in the previous section.

From (33.8), it can also be seen that use of a systematic fractional replicate design is equivalent to calculating two elementary effects (with Δ = 2) for each variable at the extremes of the design region. Let \(\mathrm{EE}_{1i} = \left(Y^{(2d+2)} - Y^{(d+i+1)}\right)/2\) and \(\mathrm{EE}_{2i} = \left(Y^{(i+1)} - Y^{(1)}\right)/2\) be these elementary effects for the \(i\)th variable. Then, it follows directly that \(S(i) \propto \max\left(|\mathrm{EE}_{1i}|, |\mathrm{EE}_{2i}|\right)\), and the above method selects as active those variables with elementary effects that are large in absolute value.
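The sketch below builds the \(2d + 2\)-run design in the order (i) to (iv) and computes the two elementary effects \(\mathrm{EE}_{1i}\) and \(\mathrm{EE}_{2i}\) defined above. The forms \(C_o(i) = (\mathrm{EE}_{1i} + \mathrm{EE}_{2i})/2\), \(C_e(i) = (\mathrm{EE}_{1i} - \mathrm{EE}_{2i})/2\) and \(S(i) = M(i)/\sum_{j} M(j)\) are assumptions made for illustration. With them, \(M(i) = \max(|\mathrm{EE}_{1i}|, |\mathrm{EE}_{2i}|)\), because \(|a + b| + |a - b| = 2\max(|a|, |b|)\), which matches the proportionality stated above. The test function \(3x_1 + x_2x_3\) is hypothetical: its main effect shows up in \(C_o(1)\) and its interaction in \(C_e(2)\) and \(C_e(3)\).

```python
import numpy as np


def sfr_design(d):
    """Systematic fractional replicate design with n = 2d + 2 runs, in the order (i)-(iv)."""
    low = -np.ones((1, d))              # (i) all variables at -1
    one_high = 2 * np.eye(d) - 1        # (ii) variable i at +1, the rest at -1
    one_low = 1 - 2 * np.eye(d)         # (iii) variable i at -1, the rest at +1
    return np.vstack([low, one_high, one_low, -low])   # (iv) all variables at +1


def sfr_indices(y, d):
    """EE_1i and EE_2i as in the text; C_o, C_e and S(i) use assumed scalings."""
    y = np.asarray(y, dtype=float)
    i = np.arange(d)                          # 0-based variable index
    ee1 = (y[2 * d + 1] - y[d + 1 + i]) / 2   # (Y^(2d+2) - Y^(d+i+1)) / 2
    ee2 = (y[1 + i] - y[0]) / 2               # (Y^(i+1) - Y^(1)) / 2
    c_odd = (ee1 + ee2) / 2                   # assumed form of C_o(i)
    c_even = (ee1 - ee2) / 2                  # assumed form of C_e(i)
    m = np.abs(c_odd) + np.abs(c_even)        # M(i) = max(|EE_1i|, |EE_2i|)
    return c_odd, c_even, m / m.sum()         # S(i) assumed to be M(i) / sum_j M(j)


X = sfr_design(4)
y = [3 * x[0] + x[1] * x[2] for x in X]       # hypothetical numerical model
c_odd, c_even, S = sfr_indices(y, 4)
print(S)   # approximately [0.6, 0.2, 0.2, 0.0]
```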

3 Screening Groups of Variables

Early work on group screening used pooled blood samples to detect individuals with a disease as economically as possible [33]. The technique was extended, almost 20 years later, to screening large numbers of two-level variables in factorial experiments where a main effects only model is assumed for the output [108]. For an overview of this work and several other strategies, see [75].

In group screening, the set of variables is partitioned into groups, and the values of the variables within each group are varied together. Smaller designs can then be used to experiment on these groups. This strategy deliberately aliases the main effects of the individual variables. Hence, follow-up experimentation is needed on those variables in the groups found to be important in order to detect the individual active variables. The main screening techniques that employ grouping of variables are described below.

3.1 Factorial Group Screening

The majority of factorial group screening methods apply to variables with two levels and use two stages of experimentation. At the first stage, the \(d\) variables are partitioned into \(g\) groups, where the \(j\)th group contains \(g_j \geq 1\) variables (\(j = 1, \ldots, g\)). High and low levels for each of the \(g\) grouped variables are defined by setting all the individual variables in a group to either their high level or their low level simultaneously. The first experiment with \(n_1\) runs is performed on the relatively small number of grouped variables. Classical group screening then estimates the main effects for each of the grouped variables and takes those variables involved in groups that have large estimated main effects through to a second-stage experiment. Individual variables are investigated at this stage, and their main effects, and possibly interactions, are estimated.

For sufficiently large groups of variables, highly resource-efficient designs can be employed at stage 1 of classical group screening for even very large numbers of factors. Under the assumption of negligible interactions, orthogonal nonregular designs, such as PB designs, may be used. For screening variables from a deterministic numerical model, designs in which the columns corresponding to the grouped main effects are not orthogonal can be effective [9] provided \(n_1 > g + 1\), as the precision of factorial effect estimators is not a concern.
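The two stages can be sketched for a deterministic numerical model as follows. Several choices are assumptions made only for illustration: a full \(2^{g}\) factorial at stage 1 (a PB design would be cheaper), an OFAAT design from the all-low point at stage 2, a first-order model with positive coefficients so that no cancellation occurs, and a fixed threshold on the absolute estimated effect. The function name and test model are hypothetical.

```python
import itertools

import numpy as np


def group_screen(model, groups, d, threshold):
    """Two-stage classical group screening for a deterministic model (illustrative sketch)."""
    g = len(groups)
    # Stage 1: full 2^g factorial in the grouped variables (assumed design choice).
    Z = np.array(list(itertools.product([-1.0, 1.0], repeat=g)))
    X1 = np.zeros((len(Z), d))
    for j, members in enumerate(groups):
        X1[:, members] = Z[:, [j]]                 # all variables in group j move together
    y1 = np.array([model(x) for x in X1])
    coef, *_ = np.linalg.lstsq(np.column_stack([np.ones(len(Z)), Z]), y1, rcond=None)
    active_groups = [j for j in range(g) if abs(coef[j + 1]) > threshold]

    # Stage 2: OFAAT design on the individual variables of the active groups.
    candidates = [i for j in active_groups for i in groups[j]]
    base = -np.ones(d)
    y_base = model(base)
    active = []
    for i in candidates:
        x = base.copy()
        x[i] = 1.0
        if abs(model(x) - y_base) / 2 > threshold:   # estimates beta_i under a first-order model
            active.append(i)
    n_runs = len(Z) + (1 + len(candidates) if candidates else 0)
    return sorted(active), n_runs


# Hypothetical first-order model: coefficients 5, 4 and 0.1 on variables 0, 7 and 10 (0-based).
beta = np.zeros(12)
beta[[0, 7, 10]] = [5.0, 4.0, 0.1]
groups = [[0, 1, 2], [3, 4, 5], [6, 7, 8], [9, 10, 11]]
active, n_runs = group_screen(lambda x: 1.0 + beta @ x, groups, d=12, threshold=1.0)
print(active, n_runs)   # [0, 7] 23
```

Only 6 of the 12 variables reach stage 2 here, because the groups containing variables 3 to 5 and 9 to 11 have grouped effects of 0 and 0.1.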

Effective classical group screening depends on strong effect heredity, namely, that important two-variable interactions occur only between variables both having important main effects. More recently, strategies for group screening that also investigate interactions at stage 1 have been developed [57]. In interaction group screening, both main effects and two-variable interactions between the grouped variables are estimated at stage 1. The interaction between two grouped variables is the summation of the interactions between all pairs of variables where one variable comes from each group; interactions between two variables in the same group are aliased with the mean. Variables in groups found to have large estimated main effects or to be involved in large interactions are carried forward to the second stage. From the second-stage experiment, main effects and interactions are examined between the individual variables within each group declared active. Where the first stage has identified a large interaction between two grouped variables, the interactions between pairs of individual variables, one from each group, are also investigated. For this strategy, larger resolution V designs, capable of independently estimating all grouped main effects and two-variable interactions, have so far been used at stage 1, when decisions to drop groups of variables are made.

Group screening experiments can be viewed as supersaturated experiments in the individual variables. However, when orthogonal designs are used for the stage 1 experiment, decisions on which groups of variables to take forward can be made using t-tests on the grouped main effects and interactions. When smaller designs are used, particularly if \(n_1\) is less than the number of grouped effects of interest, more advanced modeling methods are required, in common with other supersaturated designs (see later). Incorrectly discarding active variables at stage 1 may result in missed opportunities to improve process control or product quality. Hence, it is common to be conservative in the choice of design at stage 1, for example, in the number of runs, and also to allow a higher type I error rate.

In the two-stage process, the design for the second experiment cannot be decided until the stage 1 data have been collected and the groups of factors deemed active have been identified. In fact, the size, \(N_2\), of the second-stage experiment required by the group screening strategy is a random variable. The distribution of \(N_2\) is determined by features under the experimenter’s control, such as \(d\), \(g\), \(g_1, \ldots, g_g\), \(n_1\), the first-stage design, and decision rules for declaring a grouped variable active at stage 1. It also depends on features outside the experimenter’s control, such as the number of active individual variables and the size and nature of their effects, and the signal-to-noise ratio if the process is noisy. Given prior knowledge of these uncontrollable features, the grouping strategy, designs, and analysis methods can be tailored, for example, to produce a smaller expected experiment size, \(n_{1} + \mathbb{E}(N_{2})\), or to minimize the probability of missing active variables [57, 104]. Of course, these two goals are usually in conflict and hence a trade-off has to be made. In practice, the design used at stage 2 depends on the number of variables brought forward and the particular effects requiring estimation; options include regular or nonregular fractional factorial designs and D-optimal designs.
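The trade-off can be seen in a deliberately simple setting, where every ingredient is an assumption rather than the cited methodology: \(d\) variables split into \(g = d/k\) groups of size \(k\), each variable active independently with probability \(p\), error-free classification of groups at stage 1, \(n_1 = g + 1\), and \(N_2\) taken as the number of individual variables carried forward. Then \(\mathbb{E}(N_2) = d\{1 - (1 - p)^{k}\}\). The sketch checks this formula by simulation and tabulates \(n_1 + \mathbb{E}(N_2)\) over \(k\); in this setting, intermediate group sizes give the smallest expected total, since small groups inflate \(n_1\) and large groups inflate \(\mathbb{E}(N_2)\).

```python
import numpy as np


def expected_stage2(d, k, p):
    """E(N_2) = d {1 - (1 - p)^k}: the expected number of variables carried forward."""
    return d * (1 - (1 - p) ** k)


def simulate_stage2(d, k, p, reps=20000, seed=1):
    """Monte Carlo check of E(N_2) under the same assumptions."""
    rng = np.random.default_rng(seed)
    active = rng.random((reps, d // k, k)) < p      # active[rep, group, member]
    carried = active.any(axis=2).sum(axis=1) * k    # every member of an active group goes forward
    return float(carried.mean())


d, p = 60, 0.05
for k in (2, 3, 4, 5, 6, 10):
    n1 = d // k + 1                                  # assumed saturated stage 1 design
    print(k, n1, round(n1 + expected_stage2(d, k, p), 1))
```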

Original descriptions of classical group screening made the assumption that all the active variable main effects have the same sign to avoid the possibility of cancellation of the main effects of two or more active variables in the same group. As discussed previously, cancellation can affect any fractional factorial experiment. Group screening is often viewed as particularly susceptible due to the complete aliasing of main effects of individual variables and the screening out of whole groups of variables at stage 1. Often, particularly for numerical models, prior knowledge makes the assumption of active main effects having the same sign reasonable. Otherwise, the risk of missing active variables should be assessed by simulation [69]; in fact, this risk can be modest under factor sparsity [34].

3.2 Sequential Bifurcation

Screening groups of variables is also used in sequential bifurcation, proposed originally for deterministic simulation experiments [10]. The technique can investigate a very large number of variables, each having two levels, when a sufficiently accurate surrogate for the output is a first-order model (33.2). It is assumed that each parameter \(\beta_i\) (\(i = 1, \ldots, d\)) is positive (or can be made positive by interchanging the variable levels) to avoid cancellation of effects.

The procedure starts with a single group composed of all the variables, which is split into two new groups (bifurcation). For a deterministic numerical model, the initial experiment has just two runs: all variables set to their low levels (\(\mathbf{x}^{(1)}\)) and all variables set to their high levels (\(\mathbf{x}^{(2)}\)). If the output \(Y^{(2)} > Y^{(1)}\), then the group is split, with variables \(x_{1},\ldots,x_{d_{1}}\) placed in group 1 and \(x_{d_{1}+1},\ldots,x_{d}\) placed in group 2. At the next stage, a single further run \(\mathbf{x}^{(3)}\) is made, which has all group 1 variables set to their high levels and all group 2 variables set low. Under the first-order model, \(Y^{(3)} - Y^{(1)} = 2\sum_{i=1}^{d_1}\beta_i\) and \(Y^{(2)} - Y^{(3)} = 2\sum_{i=d_1+1}^{d}\beta_i\), so with positive parameters each difference is positive only if its group contains an active variable. Hence, if \(Y^{(3)} > Y^{(1)}\), then group 1 is split further, and group 2 is split if \(Y^{(2)} > Y^{(3)}\). These comparisons can be replaced by \(Y^{(3)} - Y^{(1)} > \delta\) and \(Y^{(2)} - Y^{(3)} > \delta\), where \(\delta\) is an elicited threshold. This procedure of performing one new run and assessing the split of each subsequent group continues until singleton groups, containing variables deemed to be active, have been identified. Finally, these individual variables are investigated. If the output variable is stochastic, replications of each run are made, and a two-sample t-test can be used to decide whether or not to split a group.

Typically, if \(d = 2^{k}\) for some integer \(k > 0\), then at each split, half the variables are assigned to one of the groups and the other half to the second group. Otherwise, the use of unequal group sizes can increase the efficiency (in terms of overall experiment size) of sequential bifurcation when there is prior knowledge of effect sizes. Then, at each split, the first new group should have size equal to the largest possible power of 2. For example, if the group to be split contains \(m\) variables, then the first new group should contain \(2^{l}\) variables, where \(l\) is the largest integer such that \(2^{l} < m\). The remaining \(m - 2^{l}\) variables are assigned to the second group. If variables have been ordered by an a priori assessment of increasing importance, the most important variables will be in the second, smaller group, and hence more variables can be ruled out as unimportant more quickly.
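A compact sketch of sequential bifurcation for a deterministic numerical model, under the assumptions stated for the method (first-order model, nonnegative \(\beta_i\)). Here \(y(k)\) is a hypothetical helper giving the output with the first \(k\) variables high and the rest low, so the runs \(\mathbf{x}^{(1)}\), \(\mathbf{x}^{(2)}\) and \(\mathbf{x}^{(3)}\) are \(y(0)\), \(y(d)\) and \(y(d_1)\), and \(\{y(b) - y(a)\}/2\) is the grouped effect of the variables with 0-based indices \(a, \ldots, b - 1\). Each group of size \(m\) is split with the first part of size equal to the largest power of 2 below \(m\), and outputs are cached so that each distinct run is counted once. The test model is hypothetical.

```python
import numpy as np


def sequential_bifurcation(model, d, delta=0.0):
    """Sequential bifurcation for a deterministic first-order model with beta_i >= 0."""
    cache = {}

    def y(k):
        # Output with variables 0..k-1 high (+1) and k..d-1 low (-1); one model run per new k.
        if k not in cache:
            x = np.where(np.arange(d) < k, 1.0, -1.0)
            cache[k] = model(x)
        return cache[k]

    active = []
    stack = [(0, d)]                              # groups are index ranges [a, b)
    while stack:
        a, b = stack.pop()
        if y(b) - y(a) <= delta:
            continue                              # group judged inactive
        m = b - a
        if m == 1:
            active.append(a)                      # singleton group: active variable
            continue
        split = 1 << ((m - 1).bit_length() - 1)   # largest power of 2 below m
        stack.append((a + split, b))
        stack.append((a, a + split))
    return sorted(active), len(cache)


beta = np.zeros(16)
beta[[3, 12]] = [5.0, 2.0]                        # hypothetical active variables (0-based)
active, runs = sequential_bifurcation(lambda x: 10.0 + beta @ x, d=16, delta=0.5)
print(active, runs)                               # [3, 12] 9
```

With 2 active variables among 16, nine runs suffice, compared with 17 for an OFAAT design on all variables.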

The importance of two-variable interactions may be investigated by using the outputs from the following two runs to assess each split. The first is the run \(\mathbf{x}\) used in the standard sequential bifurcation method; the second is the mirror image of \(\mathbf{x}\), in which every variable set high in \(\mathbf{x}\) is set low, and vice versa. This foldover structure ensures that two-variable interactions do not bias the estimators of grouped main effects at each stage. This alternative design also permits standard sequential bifurcation to be performed, and if the variables deemed active differ from those found via the foldover, then the presence of active interactions is indicated. Again, successful identification of the active variables relies on the principle of strong effect heredity.
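The effect of the foldover can be checked on a hypothetical toy function with no main effect for \(x_2\) but a large \(x_2x_3\) interaction. The standard estimate of the grouped effect of \(\{x_2\}\) picks up the interaction, whereas combining each run with its mirror image removes it, because \(Y(\mathbf{x}) - Y(-\mathbf{x})\) contains only odd-order terms. The helper `run` is an illustrative name.

```python
import numpy as np


def model(x):
    # Hypothetical test function: beta_1 = 1, beta_2 = 0, and an interaction 3 * x_2 * x_3.
    return x[0] + 3 * x[1] * x[2]


d = 3


def run(k, mirror=False):
    """Output at the run with variables 0..k-1 high and the rest low, or at its mirror image."""
    x = np.where(np.arange(d) < k, 1.0, -1.0)
    return model(-x if mirror else x)


# Estimate the main effect of the group {x_2} (0-based index range [1, 2)).
standard = (run(2) - run(1)) / 2
foldover = ((run(2) - run(1)) - (run(2, True) - run(1, True))) / 4
print(standard, foldover)   # -3.0 0.0
```

Under the standard design the interaction even reverses the sign of the estimate, which would violate the positivity assumption that sequential bifurcation relies on.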

A variety of adaptations of sequential bifurcation have been proposed, including methods of controlling type I and type II error rates [106, 107] and a procedure to identify dispersion effects in robust parameter design [3]. For further details, see [55, ch. 4].

3.3 Iterated Fractional Factorial Designs

These designs [2] also group variables in a sequence of applications of the same fractional factorial design. Unlike factorial group screening and sequential bifurcation, the variables are assigned at random to the groups at each stage. Individual variables are identified as active if they are in the intersection of those groups having important main effects at each stage.

Suppose there are \(g = 2^{l}\) groups, for integer \(l > 0\). The initial design has \(2g\) runs obtained as a foldover of a \(g \times g\) Hadamard matrix; for details, see [23]. This construction gives a design in which main effects are not aliased with two-variable interactions. The \(d \geq g\) variables are assigned at random to the groups, and each grouped variable is then assigned at random to a column of the design. The experiment is performed and analyzed as a stage 1 group screening design. Subsequent stages repeat this procedure, using the same design but with different, independent assignments of variables to groups and groups to columns. Individual variables which are common to groups of variables found to be active across several stages of experimentation are deemed to be active. Estimates of the main effects using data from all the stages can also be constructed.

There are two further differences from the other grouping methods discussed in this section. First, for a proportion of the stages, the variables are set to a mid-level value (0), rather than high (\(+1\)) or low (\(-1\)). These runs allow an estimate of curvature to be made and some screening of quadratic effects to be undertaken. Second, to mitigate cancellation of main effects, the coding of the high and low levels may be swapped at random, that is, the sign of the main effect reversed.
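A minimal sketch of iterated fractional factorial screening under simplifying assumptions: a deterministic first-order model, \(g\) a power of 2 so that a Sylvester-type Hadamard matrix can be used, equal-sized random groups, random swapping of level codings, and a fixed threshold on the absolute grouped effect. The mid-level (0) runs are omitted, and a variable is kept only if its group is active at every stage, which is a stricter rule than requiring activity across several stages. Function names and the test model are hypothetical.

```python
import numpy as np


def sylvester_hadamard(g):
    """g x g Hadamard matrix by Sylvester's doubling construction (g a power of 2)."""
    H = np.array([[1]])
    while H.shape[0] < g:
        H = np.block([[H, H], [H, -H]])
    return H


def iffd(model, d, g, n_stages, threshold, seed=0):
    """Iterated fractional factorial screening of a deterministic model (simplified sketch)."""
    rng = np.random.default_rng(seed)
    H = sylvester_hadamard(g)
    D = np.vstack([H, -H])                           # 2g-run foldover of the Hadamard matrix
    selected = np.ones(d, dtype=bool)
    for _ in range(n_stages):
        group = rng.permutation(np.arange(d) % g)    # random, equal-sized groups = design columns
        sign = rng.choice([-1, 1], size=d)           # random swap of high and low levels
        X = D[:, group] * sign                       # level of each variable in each run
        y = np.array([model(x) for x in X])
        effects = D.T @ y / (2 * g)                  # grouped main effects (orthogonal columns)
        selected &= np.abs(effects[group]) > threshold   # keep variables in active groups
    return np.flatnonzero(selected)


beta = np.zeros(64)
beta[[5, 40]] = [10.0, 8.0]                          # hypothetical active variables (0-based)
sel = iffd(lambda x: 2.0 + beta @ x, d=64, g=8, n_stages=4, threshold=1.0)
print(sel)
```

The random sign swaps mean that two active variables in the same group contribute \(\pm 10 \pm 8\), so with these coefficients the grouped effect never falls below 2 in absolute value and the threshold of 1 cannot miss them; inert variables survive only if they share an active group at every stage.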

The use of iterated fractional factorial designs requires a larger total number of runs than other group screening methods, as a sequence of factorial screening designs is implemented. However, the method has been suggested for use when there are many variables (thousands) arranged in a few large groups. Simulation studies [95, 96] have indicated that it can be effective here, provided there are very few active variables.

3.4 Two-Stage Group Screening for Gaussian Process Models

More recently, methodology for group screening in two stages of experimentation using Gaussian process modeling to identify the active variables has been developed for numerical models [73]. At the first stage, an initial experiment that employs an orthogonal space-filling design (see the next section) is used to identify variables to be grouped together. Examples are variables that are inert or those having a similar effect on the output, such as having a common sign and a similarly-sized linear or quadratic effect. A sensitivity analysis on the grouped variables is then performed using a Gaussian process model, built from the first-stage data. Groups of variables identified as active in this analysis are investigated in a second-stage experiment in which the variables found to be unimportant are kept constant. The second-stage data are then combined with the first-stage data and a further sensitivity analysis performed to make a final selection of the active variables. An important advantage of this method is the reduced computational cost of performing a sensitivity study on the grouped variables at the first stage.

4 Random Sampling Plans and Space Filling

4.1 Latin Hypercube Sampling

The most common experimental design used to study deterministic numerical models is the Latin hypercube sample (LHS) [70]. These designs address the difficult problem of space filling in high dimensions, that is, when there are many controllable variables. Even when adequate space filling in d dimensions with n points may be impossible, an LHS design offers n points that have good one-dimensional space-filling properties for a chosen distribution, usually a uniform distribution. Thus, use of an LHS at least implicitly invokes the principle of factor sparsity and hence is potentially suited for use in screening experiments.

Construction of a standard \(d\)-dimensional LHS is straightforward: generate \(d\) random permutations of the integers \(1, \ldots, n\) and arrange them as an \(n \times d\) matrix D (one permutation forming each column); transform each element of D to obtain a sample from a given distribution \(F(\cdot)\), that is, define the coordinates of the design points as \(x_{j}^{(i)} = F^{-1}\left\{(d_{j}^{(i)} - 1)/(n - 1)\right\}\), where \(d_{j}^{(i)}\) is the \(ij\)th element of D (\(i = 1, \ldots, n\); \(j = 1, \ldots, d\)). Typically, a (small) random perturbation is added to each \(d_{j}^{(i)}\), or some equivalent operation performed, prior to transformation to \(x_{j}^{(i)}\). An LHS design generated by this method is shown in Fig. 33.1a.
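The construction translates directly into code. The sketch below (NumPy; the function name `lhs` is hypothetical) takes \(F\) uniform on \([0, 1]\), so \(F^{-1}\) is the identity. With `jitter=False` it returns \((d_{j}^{(i)} - 1)/(n - 1)\) exactly as described; with `jitter=True` it uses one common form of the random perturbation, \((d_{j}^{(i)} - U)/n\) with \(U\) uniform on \([0, 1)\), which places one point at random within each of the \(n\) equal-width cells of every column. For a non-uniform \(F\), its quantile function would be applied to the jittered values.

```python
import numpy as np


def lhs(n, d, jitter=True, seed=None):
    """Latin hypercube sample of n points in [0, 1]^d (uniform F)."""
    rng = np.random.default_rng(seed)
    # D: each column is an independent random permutation of 1, ..., n
    D = np.column_stack([rng.permutation(n) + 1 for _ in range(d)])
    if jitter:
        return (D - rng.random((n, d))) / n   # one point inside each cell ((k-1)/n, k/n]
    return (D - 1) / (n - 1)                  # grid version, as in the text


X = lhs(10, 3, seed=0)
```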

There may be great variation in the overall space-filling properties of LHS designs. For example, the LHS design in Fig. 33.1a clearly has poor two-dimensional space-filling properties. Hence, a variety of extensions to Latin hypercube sampling have been proposed. Most prevalent are orthogonal array-based and maximin Latin hypercube sampling.

To generate an orthogonal array-based LHS [81, 102], the matrix D is formed from an orthogonal array. Hence, the columns of D are no longer independent permutations of \(1, \ldots, n\). For simplicity, assume O is a symmetric OA\((n, s^{d}, t)\) with symbols \(1, \ldots, s\) and \(t \geq 2\). The \(j\)th column of D is formed by mapping the \(n/s\) occurrences of each symbol in the \(j\)th column of O to a random permutation, \(\alpha\), of \(n/s\) new symbols, i.e., \(1 \rightarrow \alpha(1, \ldots, n/s)\), \(2 \rightarrow \alpha(n/s + 1, \ldots, 2n/s)\), …, \(s \rightarrow \alpha((s - 1)n/s + 1, \ldots, n)\), where \(\alpha(1, \ldots, a)\) is a permutation of the integers \(1, \ldots, a\). Figure 33.1b shows an orthogonal array-based LHS, constructed from an OA\((9, 3^{2}, 2)\). Notice the improved two-dimensional space filling compared with the randomly generated LHS. The two-dimensional projection properties of more general space-filling designs have also been considered by other authors [29], especially for uniform designs minimizing specific \(L_{2}\)-discrepancies [38].
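A sketch of the mapping (NumPy; names hypothetical), using the \(3^{2}\) full factorial as the OA\((9, 3^{2}, 2)\). Within each column, the \(n/s\) rows carrying symbol \(k\) receive a random permutation of the integers \((k - 1)n/s + 1, \ldots, kn/s\), so each column of D is a permutation of \(1, \ldots, n\) and collapsing D back to \(s\) levels recovers O. That recovery is why the two-dimensional projections inherit the balance of the array.

```python
import itertools

import numpy as np


def oa_lhs(O, s, seed=None):
    """Orthogonal array-based LHS matrix D from an n x d OA with symbols 1..s (sketch)."""
    rng = np.random.default_rng(seed)
    n, d = O.shape
    m = n // s                                      # occurrences of each symbol per column
    D = np.empty_like(O)
    for j in range(d):
        for k in range(1, s + 1):
            rows = np.flatnonzero(O[:, j] == k)
            # symbol k -> random permutation of (k-1)m+1, ..., km
            D[rows, j] = rng.permutation(np.arange((k - 1) * m + 1, k * m + 1))
    return D


# OA(9, 3^2, 2): the 3^2 full factorial with symbols 1, 2, 3
O = np.array(list(itertools.product([1, 2, 3], repeat=2)))
D = oa_lhs(O, s=3, seed=0)
X = (D - 1) / (len(D) - 1)    # uniform F, as in the basic LHS construction
```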

In addition to the orthogonal array-based LHS, there has been a variety of work on generating space-filling designs that directly minimize the correlation between columns of \(\mathbf{X}^{n}\) [47], including algorithmic [103] and analytic [117] construction methods. Such designs have good two-dimensional space-filling properties and also provide near-independent estimators of the \(\beta_i\) (\(i = 1, \ldots, d\)) in Eq. (33.2), a desirable property for screening.

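A maximin LHS [76] achieves a wide spread of design points across the design region by maximizing the minimum distance between pairs of design points within the class of LHS designs with \(n\) points and \(d\) variables. The Euclidean distance between two points \(\mathbf{x} = (x_1, \ldots, x_d)^{\mathrm{T}}\) and \(\mathbf{x}' = (x_1', \ldots, x_d')^{\mathrm{T}}\) is given by

$$\rho(\mathbf{x}, \mathbf{x}') = \left\{\sum_{j=1}^{d}\left(x_j - x_j'\right)^{2}\right\}^{1/2}. \qquad (33.14)$$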

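Rather than directly maximizing the minimum of (33.14), most authors [6, 76] find designs by minimization of

$$\phi_q(\mathbf{X}^{n}) = \left\{\sum_{i=1}^{n-1}\sum_{k=i+1}^{n}\rho\left(\mathbf{x}^{(i)}, \mathbf{x}^{(k)}\right)^{-q}\right\}^{1/q}, \qquad (33.15)$$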

where, for q → ∞, minimization of (33.15) is equivalent to maximizing the minimum of (33.14) across all pairs of design points; see also [85]. Figure 33.1c shows a maximin LHS, found by this method with q = 15. This design displays better two-dimensional space filling than the random LHS and a more even distribution of the design points than the orthogonal array-based LHS.
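A sketch of the \(\phi_q\) criterion and a very simple search over LHS designs (NumPy; names hypothetical). The search swaps two entries within a randomly chosen column, so the design remains an LHS, and keeps the swap if \(\phi_q\) does not increase. It is an illustrative stand-in, not the algorithm implemented in DiceDesign or SLHD. Since every term in the sum is positive, \(\phi_q \geq 1/\min \rho\) for any \(q\), with near-equality for large \(q\).

```python
import numpy as np


def phi_q(X, q=15):
    """Morris-Mitchell criterion: (sum over pairs of rho^-q)^(1/q), rho the Euclidean distance."""
    diff = X[:, None, :] - X[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    iu = np.triu_indices(len(X), k=1)                 # each pair of design points once
    return float((dist[iu] ** (-q)).sum() ** (1 / q))


def maximin_lhs(n, d, q=15, n_iter=2000, seed=0):
    """Hypothetical swap search: keep within-column swaps that do not increase phi_q."""
    rng = np.random.default_rng(seed)
    X = np.column_stack([rng.permutation(n) for _ in range(d)]) / (n - 1)
    start = best = phi_q(X, q)
    for _ in range(n_iter):
        j = rng.integers(d)
        a, b = rng.choice(n, size=2, replace=False)
        X[[a, b], j] = X[[b, a], j]                   # the design stays a Latin hypercube
        value = phi_q(X, q)
        if value <= best:
            best = value
        else:
            X[[a, b], j] = X[[b, a], j]               # undo the swap
    return X, start, best


X, start, best = maximin_lhs(10, 2)
print(start, best)
```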

Maximin LHS can be found using the R packages DiceDesign [40] and SLHD [5]. More general classes of distance-based space-filling designs, without the projection properties of the Latin hypercubes, can also be found [see 13, 50]. Studies of the numerical efficiencies of optimization algorithms for LHS designs are available [29, 49].

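Construction of LHS designs is an active area of research, and many further extensions to the basic methods have been suggested. Recently, maximum projection space-filling designs have been found [54] that minimize

$$\psi(\mathbf{X}^{n}) = \left\{\binom{n}{2}^{-1}\sum_{i=1}^{n-1}\sum_{k=i+1}^{n}\frac{1}{\prod_{j=1}^{d}\left(x_j^{(i)} - x_j^{(k)}\right)^{2}}\right\}^{1/d}. \qquad (33.16)$$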

Such a design promotes good space-filling properties, as measured by (33.15), in all projections of the design into subspaces of variables. Objective function (33.16) arises from the use of a weighted Euclidean distance; see also [13].

Another important recent development is sliced LHS designs [6, 88, 89], where the design can be partitioned into sets of runs or slices, each of which is an orthogonal or maximin LHS. The overall design, composed of the runs from all the slices, is also an LHS. Such designs may be used to study multiple numerical models having the same inputs where one slice is used to investigate each model, for example, to compare results from different model implementations. They are also useful for experiments on quantitative and qualitative variables when each slice is combined with one combination of levels of the qualitative variables. This latter application is the most important for screening, where the resulting data may be used to estimate a surrogate linear model with dummy variables or a GP model with an appropriate correlation structure [90]. Supersaturated LHS designs, for \(d \geq n\), have also been developed [21]. See “Maximin sliced Latin hypercube designs, with application to cross validating prediction error” for more on space-filling and Latin hypercube designs.

4.2 Sampling Plans for Estimating Elementary Effects (Morris’ Method)

As an alternative to estimation of a surrogate model, Morris [74] suggested a model-free approach that uses the elementary effect (33.8) to measure the sensitivity of the response \(Y(\mathbf{x})\) to a change in the \(i\)th variable at point \(\mathbf{x}\). Each \(\mathrm{EE}_i(\mathbf{x})\) may be exactly or approximately (a) zero for all \(\mathbf{x}\), implying a negligible influence of the \(i\)th variable on \(Y(\mathbf{x})\); (b) a nonzero constant for all \(\mathbf{x}\), implying a linear, additive effect; (c) a nonconstant function of \(x_i\), implying nonlinearity; or (d) a nonconstant function of \(x_j\) for \(j \neq i\), implying the presence of at least one interaction involving \(x_i\).

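In practice, active variables are usually selected using data from a relatively small experiment, and therefore it is not possible to reconstruct \(\mathrm{EE}_i(\mathbf{x})\) as a continuous function of \(\mathbf{x}\). The use of \(r\) “trajectory vectors”, \(\mathbf{x}_1, \ldots, \mathbf{x}_r\), enables the following sensitivity indices to be defined for \(i = 1, \ldots, d\):

$$\mu_i = \frac{1}{r}\sum_{j=1}^{r}\mathrm{EE}_i(\mathbf{x}_j) \qquad (33.17)$$

and

$$\sigma_i^{2} = \frac{1}{r - 1}\sum_{j=1}^{r}\left\{\mathrm{EE}_i(\mathbf{x}_j) - \mu_i\right\}^{2}. \qquad (33.18)$$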

A large value of the mean \(\mu_i\) suggests the \(i\)th input variable is active. Nonlinear and interaction effects are indicated by large values of \(\sigma_i\). Plots of the sensitivity indices may be used to decide which variables are active and, among these variables, which have complex (nonadditive and nonlinear) effects.

In addition to (33.17) and (33.18), a further measure [22] of the individual effect of the \(i\)th variable has been proposed that overcomes the possible cancellation of elementary effects in (33.17) due to non-monotonic variable effects, namely,

$$\mu_i^{\star} = \frac{1}{r}\sum_{j=1}^{r}\left|\mathrm{EE}_i(\mathbf{x}_j)\right|, \qquad (33.19)$$

where \(|\cdot|\) denotes the absolute value. Large values of both \(\mu_i\) and \(\mu_i^{\star}\) suggest that the \(i\)th variable is active and has a linear effect on \(Y(\mathbf{x})\); large values of \(\mu_i^{\star}\) and small values of \(\mu_i\) indicate cancellation in (33.17) and a nonlinear effect for the \(i\)th variable.

Figure 33.2 shows four examples of the types of effects that (33.17), (33.18), and (33.19) are intended to identify: linear, nonlinear, and interaction effects, corresponding to nonzero values of (33.17) and (33.19) and to both zero and nonzero values of (33.18). These statistics cannot distinguish between nonlinear and interaction effects; see Fig. 33.2b, c.

Fig. 33.2 Illustrative examples of effects for an active variable \(x_i\) with values of \(\mu_i\), \(\mu_i^{\star}\), and \(\sigma_i\). In plots (c) and (d), the plotting symbols correspond to the ten levels of a second variable. (a) Linear effect: \(\mu_i > 0\), \(\mu_i^{\star} > 0\); \(\sigma_i = 0\). (b) Nonlinear effect: \(\mu_i^{\star} > 0\); \(\sigma_i > 0\). (c) Interaction: \(\sigma_i > 0\). (d) Nonlinear effect and interaction: \(\mu_i^{\star} > 0\), \(\sigma_i > 0\).

The future development of a surrogate model can be simplified by separation of the active variables into two vectors, \(\mathbf{x}_{S_{1}}\) and \(\mathbf{x}_{S_{2}}\), where the variables in \(\mathbf{x}_{S_{1}}\) have linear effects and the variables in \(\mathbf{x}_{S_{2}}\) have nonlinear effects or are involved in interactions [12]. For example, model (33.1) might be fitted in which \(\mathbf{h}(\mathbf{x}_{S_{1}})\) consists of linear functions and \(\varepsilon (\mathbf{x}_{S_{2}})\) is modeled via a Gaussian process with correlation structure dependent only on variables in \(\mathbf{x}_{S_{2}}\).

In the elementary effect literature, the design region is assumed to be \(\mathcal{X} = [0,1]^{d}\), after any necessary scaling, and is usually approximated by a \(d\)-dimensional grid, \(\mathcal{X}_{G}\), having \(f\) equally spaced values, \(0, 1/(f - 1), \ldots, 1\), for each input variable. The design of a screening experiment to allow the computation of the sample moments (33.17), (33.18), and (33.19) has three components: the trajectory vectors \(\mathbf{x}_1, \ldots, \mathbf{x}_r\); the \(m\)-run sub-design used to calculate \(\mathrm{EE}_i(\mathbf{x}_j)\) (\(i = 1, \ldots, d\)) for each \(\mathbf{x}_j\) (\(j = 1, \ldots, r\)); and the stepsize \(\varDelta\). Choices for these components are now discussed.

1. Morris [74] chose \(\mathbf{x}_1, \ldots, \mathbf{x}_r\) at random from \(\mathcal{X}_{G}\), subject to the constraint that \(\mathbf{x}_j + \varDelta\mathbf{e}_{id} \in \mathcal{X}_{G}\) for \(i = 1, \ldots, d\); \(j = 1, \ldots, r\). Alternative suggestions include choosing the trajectory vectors as the \(r\) points of a space-filling design [22] found, for example, by minimizing (33.15). Larger values of \(r\) make (33.17), (33.18), and (33.19) more precise estimators of the corresponding moments of the elementary effect distribution. Morris used \(r = 3\) or 4; more recently, other authors [22] have discussed the use of larger values (\(r = 10\)–50).

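2. An OFAAT design with \(m = d + 1\) runs may be used to calculate \(\mathrm{EE}_1(\mathbf{x}_j), \ldots, \mathrm{EE}_d(\mathbf{x}_j)\) for \(j = 1, \ldots, r\). The design matrix is \(\mathbf{X}_j = \mathbf{1}_{d+1}\mathbf{x}_j^{\mathrm{T}} + \varDelta\mathbf{B}\), where \(\mathbf{1}_m\) is a column \(m\)-vector with all entries equal to 1 and \(\mathbf{B} = \sum_{l=2}^{d+1}\sum_{k=1}^{l-1}\mathbf{e}_{l(d+1)}\mathbf{e}_{kd}^{\mathrm{T}}\). That is, \(\mathbf{B}\) is the \((d + 1) \times d\) matrix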

$$\displaystyle{ \mathbf{B} = \left (\begin{array}{ccccc} 0&0&0&\cdots &0\\ 1 &0 &0 &\cdots &0 \\ 1&1&0&\cdots &0\\ \vdots & \vdots & & & \vdots\\ 1 &1 &1 &\cdots &1\\ \end{array} \right )\,. }$$

Designs are generated by interchanging, at random, the 0’s and 1’s in each column of \(\mathbf{B}\) (each column being complemented with probability 1/2) and randomizing the column order. The overall design \(\mathbf{X}^{n} = (\mathbf{X}_1^{\mathrm{T}}, \ldots, \mathbf{X}_r^{\mathrm{T}})^{\mathrm{T}}\) then has \(n = (d + 1)r\) runs. It can be shown that this choice of \(\mathbf{B}\), combined with the randomization scheme, minimizes the variability in the number of times in \(\mathbf{X}^{n}\) that each variable takes each of the \(f\) possible values [74].

Such OFAAT designs have the disadvantage of poor projectivity onto subsets of (active) variables, compared with, for example, LHS designs. Projection of a d-dimensional OFAAT design into d − 1 dimensions reduces the number of distinct points from d + 1 to d. This is a particular issue when the projection of the screening design onto the active variables is used to estimate a detailed surrogate model. Better projection properties may be obtained by replacing an OFAAT design by a rotated simplex [86] at the cost of less precision in the estimators of the elementary effects.

3. The choice of the “stepsize” \(\varDelta\) in (33.8) is determined by the choice of design region \(\mathcal{X}_{G}\). Recommended values are \(f = 2g\) for some integer \(g > 0\) (that is, an even number of levels) and \(\varDelta = f/\{2(f - 1)\}\). This choice ensures that all \(f^{d}/2\) elementary effects are equally likely to be selected for each variable when the trajectory vectors are selected at random from \(\mathcal{X}_{G}\).
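The three components above can be combined in a short sketch (NumPy; all function names hypothetical). Each trajectory is \(\mathbf{X}_j = \mathbf{1}_{d+1}\mathbf{x}_j^{\mathrm{T}} + \varDelta\mathbf{B}\) with random column complementation and column permutation; the base point \(\mathbf{x}_j\) is drawn at random from grid values \(v\) with \(v + \varDelta \leq 1\), and \(\varDelta = f/\{2(f - 1)\}\). Because consecutive runs differ in exactly one variable, each step yields one elementary effect, with its sign fixed by the direction of the step. The divisor \(r - 1\) in \(\sigma_i^{2}\) is an assumption, and the test function (linear in \(x_1\), quadratic in \(x_2\), an \(x_3x_4\) interaction, \(x_5\) inert) is hypothetical.

```python
import numpy as np


def morris_trajectory(d, f, rng):
    """One randomized OFAAT block 1 x_j^T + Delta B on the grid {0, 1/(f-1), ..., 1}."""
    delta = f / (2 * (f - 1))
    grid = np.arange(f) / (f - 1)
    starts = grid[grid + delta <= 1 + 1e-12]      # keep x_j + Delta inside the grid
    x = rng.choice(starts, size=d)
    B = np.tril(np.ones((d + 1, d)), k=-1)        # row l has ones in its first l - 1 columns
    flip = rng.random(d) < 0.5
    B[:, flip] = 1 - B[:, flip]                   # interchange 0s and 1s in random columns
    B = B[:, rng.permutation(d)]                  # randomize the column order
    return x + delta * B


def elementary_effects(model, X):
    """EE_i from consecutive runs of one block (exactly one variable changes per step)."""
    y = np.array([model(row) for row in X])
    dX = np.diff(X, axis=0)
    var = np.argmax(np.abs(dX), axis=1)           # which variable moved at each step
    ee = np.empty(X.shape[1])
    ee[var] = np.diff(y) / dX[np.arange(len(dX)), var]
    return ee


def morris(model, d, r=20, f=4, seed=0):
    """Sample moments mu, mu_star and sigma over r random trajectories."""
    rng = np.random.default_rng(seed)
    EE = np.array([elementary_effects(model, morris_trajectory(d, f, rng)) for _ in range(r)])
    return EE.mean(axis=0), np.abs(EE).mean(axis=0), EE.std(axis=0, ddof=1)


def model(x):
    # Hypothetical test function.
    return 2 * x[0] + x[1] ** 2 + x[2] * x[3]


mu, mu_star, sigma = morris(model, d=5)
print(np.round(mu_star, 3), np.round(sigma, 3))
```

For \(f = 4\) the grid is \(\{0, 1/3, 2/3, 1\}\) and \(\varDelta = 2/3\), so the linear variable gives \(\sigma_1 = 0\), while the quadratic and interacting variables give \(\sigma_i > 0\).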

Further extensions of the elementary effect methodology include the application to group screening for numerical models with hundreds or thousands of input variables through the study of \(\mu^{\star}\) (33.19) [22], and a sequential experimentation strategy to reduce the number of runs of the numerical model required [12]. This latter approach performs \(r\) OFAAT experiments, one for each trajectory vector, in turn. For the \(j\)th experiment, elementary effects are calculated only for those variables that have not already been identified as having a nonlinear or interaction effect. That is, if \(\sigma_i > \sigma_0\), for some threshold \(\sigma_0\), when \(\sigma_i\) is calculated from \(r_1 < r\) trajectory vectors, the \(i\)th elementary effect is no longer calculated for \(\mathbf{x}_{r_{1}+1},\ldots,\mathbf{x}_{r}\). The threshold \(\sigma_0\) can be elicited directly from subject experts or by using prior knowledge about departures from linearity for the effect of each variable [12]. An obvious generalization of the elementary effect method is to compute sensitivity indices directly from the (averaged local) derivatives (see Chap. 33, “Design of Experiments for Screening”).

Methodology for the design and analysis of screening experiments using elementary effects is available in the R package sensitivity [87].

5 Model Selection Methods

The selection and estimation of a surrogate model (33.1) from data collected using one of the designs discussed in this paper generally requires advanced statistical methods. An exception is a regular fractional factorial design, for which standard linear modeling methods can be used, provided that only one effect from each alias string is included in the model and it is recognized that \(\hat{\bf{\beta }}\) may be biased. A brief description is now given of variable selection methods for (a) linear models with nonregular and supersaturated designs and (b) Gaussian process models.

5.1 Variable Selection for Nonregular and Supersaturated Designs

For designs with complex partial aliasing such as supersaturated and nonregular fractional factorial designs, a wide range of model selection methods have been proposed. An early suggestion was forward stepwise selection [72], but this was shown to have low sensitivity in many situations [1, 67]. More recently, evidence has been provided for the effectiveness of shrinkage regression for the selection of active effects using data from these more complex designs [34, 67, 83], particularly use of the Dantzig selector [24]. For this method, estimators \(\hat{\boldsymbol{\beta }}\) of the parameters in model (33.1) are chosen to satisfy

\[\min_{\hat{\boldsymbol{\beta}}} \|\hat{\boldsymbol{\beta}}\|_{1} \quad \text{subject to} \quad \|\mathbf{H}^{\mathrm{T}}(\mathbf{y} - \mathbf{H}\hat{\boldsymbol{\beta}})\|_{\infty} \le s, \qquad (33.20)\]

with s a tuning constant, \(\|\mathbf{a}\|_{1} = \sum_{i} |a_{i}|\) and \(\|\mathbf{a}\|_{\infty} = \max_{i} |a_{i}|\) for \(\mathbf{a}^{\mathrm{T}} = (a_{1},\ldots,a_{p})\), \(\mathbf{y}\) the vector of responses, and \(\mathbf{H}\) the model matrix whose rows are \(\mathbf{h}^{\mathrm{T}}(\mathbf{x}_{j})\). This formulation balances the desire for a parsimonious model with the need for models that adequately describe the data. The value of s can be chosen via an information criterion [20] (e.g., AIC, AICc, BIC). The solution to (33.20) may be obtained via linear programming; computationally efficient algorithms exist for calculating coefficient paths for varying s [48].
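The Dantzig selector problem, minimize \(\|\boldsymbol{\beta}\|_{1}\) subject to \(\|X^{\mathrm{T}}(\mathbf{y} - X\boldsymbol{\beta})\|_{\infty} \le s\) for a model matrix X and response vector y, becomes a linear program once β is split into nonnegative parts, β = u − v. The sketch below solves it with SciPy's `linprog` (HiGHS method). It is not the path algorithm of [48] nor the R package flare; the function name, the toy design (a 2³ factorial with all interaction columns) and the value s = 4 are illustrative assumptions.

```python
import itertools

import numpy as np
from scipy.optimize import linprog


def dantzig_selector(X, y, s):
    """Solve  min ||b||_1  subject to  ||X^T (y - X b)||_inf <= s  as a linear program.

    Writing b = u - v with u, v >= 0 gives ||b||_1 = sum(u + v) at the optimum.
    """
    p = X.shape[1]
    G, g = X.T @ X, X.T @ y
    c = np.ones(2 * p)                   # objective: sum(u) + sum(v)
    # g - G(u - v) <= s   and   -(g - G(u - v)) <= s
    A_ub = np.vstack([np.hstack([-G, G]), np.hstack([G, -G])])
    b_ub = np.concatenate([s - g, s + g])
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, bounds=(0, None), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:p] - res.x[p:]


# Toy example: 2^3 full factorial, columns A, B, C, AB, AC, BC, ABC (orthogonal).
A, B, C = np.array(list(itertools.product([-1.0, 1.0], repeat=3))).T
X = np.column_stack([A, B, C, A * B, A * C, B * C, A * B * C])
y = 3 * A + 2 * C
beta = dantzig_selector(X, y, s=4.0)
print(np.round(beta, 3))
```

Because \(X^{\mathrm{T}}X = 8I\) here, each estimate is the least-squares estimate shrunk toward zero by s∕8 = 0.5, giving approximately (2.5, 0, 1.5, 0, 0, 0, 0): both active effects are found, but their estimates are biased toward zero.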

The Dantzig selector is applied to choose a subset of potentially active variables, and then standard least squares is used to fit a reduced linear model. The terms in this model whose coefficient estimates exceed, in absolute value, a threshold t elicited from subject experts are declared active. This procedure is known as the Gauss-Dantzig selector [24].
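The refit-and-threshold step of the Gauss-Dantzig selector can be sketched as below. The Dantzig estimates are passed in as a hard-coded, hypothetical vector (shrunk toward zero, as an ℓ1 constraint typically does); the tolerance `tol` used to decide which coefficients are nonzero, the function name, and the value t = 1 are assumptions for illustration.

```python
import itertools

import numpy as np


def gauss_dantzig(X, y, beta_ds, t, tol=1e-8):
    """Refit least squares on the Dantzig-selected columns, then threshold at t.

    beta_ds: Dantzig selector estimates; columns with |beta_ds| > tol are kept.
    Returns the indices declared active and their least-squares estimates.
    """
    keep = np.flatnonzero(np.abs(beta_ds) > tol)
    coef, *_ = np.linalg.lstsq(X[:, keep], y, rcond=None)
    active = np.abs(coef) > t            # threshold elicited from subject experts
    return keep[active], coef[active]


# Toy design: 2^3 factorial with columns A, B, C, AB, AC, BC, ABC.
A, B, C = np.array(list(itertools.product([-1.0, 1.0], repeat=3))).T
X = np.column_stack([A, B, C, A * B, A * C, B * C, A * B * C])
y = 3 * A + 2 * C
beta_ds = np.array([2.5, 0.0, 1.5, 0.0, 0.0, 0.0, 0.0])   # hypothetical shrunken estimates
idx, est = gauss_dantzig(X, y, beta_ds, t=1.0)
print(idx, est)
```

The least-squares refit removes the shrinkage bias on the selected terms, recovering estimates of 3 and 2 for columns A and C.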

Other methods for variable selection that have been effective for these designs include Bayesian methods that use mixture prior distributions for the elements of \(\boldsymbol{\beta }\) [26, 41] and the application of stochastic optimization algorithms [110].

5.2 Variable Selection for Gaussian Process Models

Screening for a Gaussian process model (33.1), with constant mean (h(x) = 1) and ε(x), ε(x′) having correlation (33.7), may be performed by deciding which \(\theta_{i}\) in (33.7) are “large”, using data obtained from an LHS or other space-filling design. Two approaches to this problem are outlined below: stepwise Gaussian process variable selection (SGPVS) [109] and reference distribution variable selection (RDVS) [63].

In the first method, screening is via stepwise selection of \(\theta_{i}\), \(\alpha_{i}\) in (33.7), analogous to forward stepwise selection in linear regression models. The SGPVS algorithm identifies those variables that differ from the majority in their impact on the response, using the following steps:

(i) Find the maximized log-likelihood for model (33.1) subject to \(\theta_{i} = \theta\) and \(\alpha_{i} = \alpha\) for all i = 1, …, d; denote this by \(l_{0}\).

(ii) Set \(\mathcal{E} = \{1,\ldots,d\}\).

(iii) For each \(j \in \mathcal{E}\), find the maximized log-likelihood subject to \(\theta_{k} = \theta\) and \(\alpha_{k} = \alpha\) for all \(k \in \mathcal{E}\setminus \{j\}\); denote the maximized log-likelihood by \(l_{j}\).

(iv) Let \(j^{\star} = \operatorname{arg\,max}_{j\in \mathcal{E}} l_{j}\). If \(l_{j^{\star}} - l_{0} > c\), set \(\mathcal{E} = \mathcal{E}\setminus \{j^{\star}\}\), \(l_{0} = l_{j^{\star}}\) and go to step (iii). Otherwise, stop.

In step (iv), the value c ≈ 6 (the 5% critical value for a \(\chi^{2}_{2}\) distribution) has been suggested [109].

The algorithm starts by assuming an isotropic correlation function and, at each iteration, at most one variable is allocated individual correlation parameters. The initial model contains only four parameters (\(\beta_{0}\), \(\sigma^{2}\), θ and α), and the largest model considered has 2d + 2 parameters. However, factor sparsity suggests that the algorithm usually terminates before models of this size are considered. Hence, smaller experiments can be used than are normally employed for GP regression in d variables (e.g., d = 20 and n = 40 or 50 [109]). Because this approach essentially adds one variable at a time to the model, it potentially shares the weaknesses of stepwise regression for linear models; in particular, the space of possible GP models is not thoroughly explored.
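A compact sketch of steps (i) to (iv) follows, under simplifying assumptions that are not part of [109]: \(\alpha_{i} = 2\) for all i (so only the \(\theta_{i}\) are selected, as in Section 6), correlation \(\exp\{-\sum_{i} \theta_{i}(x_{i} - x_{i}')^{2}\}\), a small fixed nugget for numerical stability, \(\beta_{0}\) and \(\sigma^{2}\) profiled out of the likelihood, and a single L-BFGS-B start on the log θ scale with bounds. Variables in \(\mathcal{E}\) share one θ; each variable removed from \(\mathcal{E}\) gets its own. All function names are hypothetical.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve
from scipy.optimize import minimize


def profile_loglik(log_theta, X, y, nugget=1e-6):
    """Log-likelihood (up to a constant) of a constant-mean GP with correlation
    exp(-sum_i theta_i (x_i - x'_i)^2), with beta0 and sigma^2 profiled out."""
    n = len(y)
    sq = (X[:, None, :] - X[None, :, :]) ** 2
    R = np.exp(-(sq * np.exp(log_theta)).sum(axis=2)) + nugget * np.eye(n)
    cf = cho_factor(R)
    one = np.ones(n)
    beta0 = one @ cho_solve(cf, y) / (one @ cho_solve(cf, one))
    resid = y - beta0
    sigma2 = resid @ cho_solve(cf, resid) / n
    logdet = 2.0 * np.log(np.diag(cf[0])).sum()
    return -0.5 * (n * np.log(sigma2) + logdet)


def max_loglik(X, y, shared):
    """Maximize over theta when the variables in `shared` share one theta."""
    d = X.shape[1]
    own = [i for i in range(d) if i not in shared]

    def expand(eta):
        lt = np.empty(d)
        lt[sorted(shared)] = eta[0]      # one log-theta shared by the set E
        if own:
            lt[own] = eta[1:]            # individual log-thetas
        return lt

    k = 1 + len(own)
    res = minimize(lambda eta: -profile_loglik(expand(eta), X, y), np.zeros(k),
                   method="L-BFGS-B", bounds=[(-6.0, 4.0)] * k)
    return -res.fun


def sgpvs(X, y, c=6.0):
    """Steps (i)-(iv): return the variables given their own theta, in order of entry."""
    E = set(range(X.shape[1]))
    l0 = max_loglik(X, y, E)                                  # step (i)
    entered = []
    while len(E) > 1:     # freeing the last member of E would not change the model
        lj = {j: max_loglik(X, y, E - {j}) for j in E}        # step (iii)
        j_star = max(lj, key=lj.get)                          # step (iv)
        if lj[j_star] - l0 <= c:
            break
        E.discard(j_star)
        entered.append(j_star)
        l0 = lj[j_star]
    return entered


# Toy usage: only the first of three inputs affects the output.
rng = np.random.default_rng(1)
X = rng.uniform(-1, 1, size=(30, 3))
y = np.sin(2 * X[:, 0])
print(sgpvs(X, y))
```

Each pass of step (iii) refits up to |E| models, so the cost grows roughly quadratically in d; with d = 20 a practical implementation would need multiple optimizer starts and analytic gradients.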

The second method, RDVS, is a fully Bayesian approach to the GP variable selection problem. A Bayesian treatment of model (33.1) with correlation function (33.7) requires numerical methods, such as Markov chain Monte Carlo (MCMC), to obtain an approximate joint posterior distribution of \(\theta_{1},\ldots,\theta_{d}\) (in RDVS, \(\alpha_{i} = 2\) for all i). Conjugate prior distributions can be used for \(\beta_{0}\) and \(\sigma^{2}\) to reduce the computational complexity of this approximation. In RDVS, a prior distribution, formed as a mixture of a standard uniform distribution on [0, 1] and a point mass at 1, is assigned to \(\rho _{i} =\exp \left (-0.25\theta _{i}\right )\). This reparameterization of \(\theta_{i}\) aids the implementation of MCMC algorithms and provides an intuitive interpretation: a small value of \(\rho_{i} \in (0, 1]\) corresponds to an active variable, with the response changing rapidly with respect to the ith variable, whereas \(\rho_{i} = 1\) (\(\theta_{i} = 0\)) corresponds to an inert variable.

To screen variables using RDVS, the design matrix \(X_{n}\) for the experiment is augmented by a (d + 1)th column corresponding to an inert variable (having no substantive effect on the response) whose values are set at random. The posterior median of the correlation parameter \(\theta_{d+1}\) of the inert variable is computed for b different randomly generated design matrices, formed by sampling values for the inert variable, to obtain an empirical reference distribution for this posterior median. The percentiles of this reference distribution can then be used to assess the importance of the “real” variables via the sizes of their corresponding correlation parameters.
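Once the posterior medians are available from an MCMC run (not shown), the RDVS decision reduces to a percentile comparison. In the sketch below the medians are hypothetical numbers, the 90th-percentile default mirrors the threshold used in Section 6, and computing the percentile with `np.percentile` (default linear interpolation) is an assumption; the function names are also hypothetical.

```python
import numpy as np


def rho_from_theta(theta):
    """RDVS reparameterization rho_i = exp(-0.25 theta_i); small rho means active."""
    return np.exp(-0.25 * np.asarray(theta, dtype=float))


def rdvs_active(theta_medians, reference_medians, pct=90.0):
    """Declare variable i active if the posterior median of theta_i exceeds the
    pct-th percentile of the reference distribution built from the b posterior
    medians of theta_{d+1} for the randomly generated inert column."""
    cutoff = np.percentile(reference_medians, pct)
    return np.flatnonzero(np.asarray(theta_medians) > cutoff), cutoff


# Hypothetical numbers: b = 10 reference medians and medians for d = 4 variables.
reference = np.linspace(0.1, 1.0, 10)
theta_med = np.array([2.0, 0.5, 0.95, 0.05])
active, cutoff = rdvs_active(theta_med, reference)
print(active, cutoff)
```

Lowering `pct` lowers the cutoff and so declares more variables active, trading a higher type I error rate for higher sensitivity.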

For methods that also incorporate variable selection into the Gaussian process mean function, i.e., incorporating the choice of functions in h(x), see [68, 80].

6 Examples and Comparisons

In this section, six combinations of the design and modeling strategies discussed in this paper are demonstrated and compared for variable screening using two test functions from the literature having d = 20 variables and \(\mathcal{X} = [-1,1]^{d}\). The functions differ in the number of active variables and the strength of influence of these variables on the output.

Example 1.

A function used to demonstrate stepwise Gaussian process variable selection [109]:

where \(w_{i} = 0.5x_{i}\) (i = 1, …, 20). There are six active variables, \(x_{1}\), \(x_{4}\), \(x_{5}\), \(x_{12}\), \(x_{19}\), and \(x_{20}\).

Example 2.

A function used to demonstrate the elementary effect method [74]:

where \(v_{i} = x_{i}\) for i ≠ 3, 5, 7 and \(v_{i} = 11(x_{i} + 1)/(5x_{i} + 6) - 1\) otherwise; \(\beta_{j} = 20\) (j = 1, …, 10), \(\beta_{jk} = -15\) (j, k = 1, …, 6), \(\beta_{jkl} = -10\) (j, k, l = 1, …, 5), and \(\beta_{jklu} = 5\) (j, k, l, u = 1, …, 4). The remaining \(\beta_{j}\) and \(\beta_{jk}\) values are independently generated from a N(0, 1) distribution, and these are used in all the analyses; all other coefficients are set to 0. There are ten active variables, \(x_{1},\ldots,x_{10}\).

There are some important differences between these two examples: function (33.21) has a proportion of active variables (0.3) in line with factor sparsity, but the influence of many of these active variables on the response is only small; function (33.22) is not effect sparse (with 50% of the variables being active), but the active variables have much stronger influence on the response. This second function also contains many more interactions. Thus, the examples present different screening challenges. For these two deterministic functions, a single data set was generated for each design employed.

The screening strategies employ experiment sizes chosen to allow comparison of the different methods. They fall into three classes:

1. Methods using Gaussian processes and space-filling designs:

(a) Stepwise Gaussian process variable selection (SGPVS)

(b) Reference distribution variable selection (RDVS)

These two variable selection methods use n = 16, 41, 84, 200 runs, where n = 200 follows the standard guideline of n = 10d runs for estimating a Gaussian process model [66]. For each value of n, two designs are found: a maximin Latin hypercube sampling design and a maximum projection space-filling design. These designs were generated using the R packages SLHD [5] and MaxPro [7], via simulated annealing and quasi-Newton algorithms. For both methods, \(\alpha_{i} = 2\) in (33.7) for all i = 1, …, d; that is, for SGPVS, stepwise selection is performed for \(\theta_{i}\) only.

2. One-factor-at-a-time methods:

(a) Elementary effect (EE) method

(b) Systematic fractional replicate designs (SFRD)

The elementary effects were calculated using the R package sensitivity [87], with each variable taking f = 4 levels, Δ = 2∕3 and r = 2, 4, 10 randomly generated trajectory vectors \(\mathbf{x}_{1},\ldots,\mathbf{x}_{r}\), giving design sizes of n = r(d + 1) = 42, 84, 210, respectively. A 42-run SFRD was used to calculate the sensitivity indices S(i), Eq. (33.13), for i = 1, …, 20.

3. Linear model methods:

(a) Supersaturated design (SSD)

(b) Definitive screening design (DSD)

The designs used are an SSD with n = 16 runs and a DSD with n = 41 runs. For each design, variable selection is performed using the Dantzig selector as implemented in the R package flare [60] with shrinkage parameter s chosen using AICc. Note that these designs are tailored to screening in linear models and hence may not perform well when the output is inadequately described by a linear model.

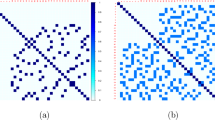

Tables 33.6 and 33.7 summarize the results for Examples 1 and 2, respectively, and present the sensitivity (\(\phi_{s}\)), type I error rate (\(\phi_{I}\)), and false discovery rate (\(\phi_{\mathrm{fdr}}\)) for the six methods. The summaries reported in these tables use automatic selection of tuning parameters in each method; see below. More nuanced screening, for example, using graphical methods, may produce different trade-offs between sensitivity, type I error rate, and false discovery rate. In general, with the exception of the EE method, results are better for Example 1, which obeys factor sparsity, than for Example 2, which does not.
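The three summaries can be computed from the set of truly active variables and the set declared active. The sketch assumes the usual definitions: \(\phi_{s}\) is the proportion of active variables declared active, \(\phi_{I}\) the proportion of inactive variables declared active, and \(\phi_{\mathrm{fdr}}\) the proportion of declared-active variables that are in fact inactive (taken as 0 when nothing is declared active). The function name and the declared set in the usage line are hypothetical and are not results from Tables 33.6 or 33.7.

```python
def screening_rates(active, declared, d):
    """Sensitivity, type I error rate and false discovery rate for one screening run.

    Variables are labelled 1, ..., d.
    """
    active, declared = set(active), set(declared)
    inactive = set(range(1, d + 1)) - active
    true_pos = len(active & declared)
    false_pos = len(declared & inactive)
    phi_s = true_pos / len(active)
    phi_I = false_pos / len(inactive)
    phi_fdr = false_pos / len(declared) if declared else 0.0
    return phi_s, phi_I, phi_fdr


# Example 1 has active variables x1, x4, x5, x12, x19, x20.
# Hypothetical outcome: x20 missed and x2 wrongly declared active.
rates = screening_rates({1, 4, 5, 12, 19, 20}, {1, 2, 4, 5, 12, 19}, d=20)
print(rates)
```

For this hypothetical outcome the rates are 5∕6, 1∕14 and 1∕6; note that \(\phi_{I}\) divides by the 14 inactive variables, whereas \(\phi_{\mathrm{fdr}}\) divides by the 6 declared variables.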

For the Gaussian process methods (SGPVS and RDVS), the assessments presented in Tables 33.6 and 33.7 are the better of the results obtained from the maximin Latin hypercube sampling design and the maximum projection space-filling design. In Example 1, for n = 16, 41, 84 the maximin LHS design gave the better performance, while for n = 200 the maximum projection design was preferred. In Example 2, the maximum projection design was preferred for n = 16, 200 and the maximin LHS design for n = 41, 84. For Example 1, note that, rather counterintuitively, for correlation function (33.7) with \(\alpha_{i} = 2\), SGPVS has lower sensitivity for n = 200 than for n = 41 or n = 84. A working hypothesis to explain this result is that larger designs, with points closer together in the design space, can present challenges in estimating the parameters \(\theta_{1},\ldots,\theta_{d}\) when the correlation function is very smooth. Setting \(\alpha_{i} = 1\), so that the correlation function is less smooth, resulted in better screening for n = 200. The choice of design for Gaussian process screening is an area for further research, as is the application to screening of extensions of the Gaussian process model to high-dimensional inputs [37, 43].

SGPVS and RDVS performed well when used with larger designs (n = 84, 200) for both examples. For Example 1, the effectiveness of SGPVS has already been demonstrated [109] for a Latin hypercube design with n = 50 runs. In the current study, the method was also effective when n = 41. Neither RDVS nor SGPVS provided reliable screening when n = 16. Both methods found Example 2, with a greater proportion of active variables, more challenging; effective screening was only achieved when n = 84, 200.

For RDVS, recall that the empirical distribution of the posterior median of the correlation parameter for the inert variable is used as a reference distribution to assess the size of the correlation parameters for the actual variables. For the assessments in Tables 33.6 and 33.7, a variable was declared active if the posterior median of its correlation parameter exceeded the 90th percentile of the reference distribution. A graphical analysis can provide a more detailed assessment of the method. Figure 33.3 shows the reference distribution and the posterior median of the correlation parameter for each of the 20 variables. Variables declared active have their posterior medians in the right-hand tail of the reference distribution. For both examples, the greater effectiveness of RDVS for larger n is clear. It is interesting to note that choosing a smaller percentile as the threshold to declare a variable active, for example, 80%, would have resulted in considerably higher type I error and false discovery rates for n = 16, 41.