Abstract

This chapter presents a quantitative summary of research on the effects of school size on student achievement and noncognitive outcomes (such as involvement, participation, social cohesion, safety, and attendance). The noncognitive outcomes are widely considered desirable in their own right, but are also often assumed to be conducive to high academic performance.


4.1 General Approach

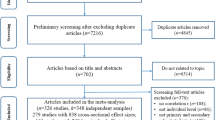

The approach applied in this chapter yields an overall estimate of expected outcomes at a given school size. As such the approach can be considered a type of meta-analysis. However, common meta-analysis methods cannot be applied when dealing with research on the effects of school size. The main reason for this is that the relation between school size and outcomes is not always modeled as a clear-cut difference between small and large schools or as a straightforward linear relationship in studies that treat school size as a continuous variable.

Standard methods for conducting meta-analysis either assume a comparison between an experimental group and a comparison group or an effect measure that expresses a linear relationship. Outcomes from several studies are then standardized so that a weighted average effect can be computed (taking into account differences in sample sizes). The outcomes per study may be a standardized difference between groups (e.g., Cohen’s d) or a statistic that describes the linear relation between an explanatory and a dependent variable (e.g., Fisher Z). Both kinds of measures can be converted to a common metric.
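For contrast with the approach taken in this chapter, the standard procedure described above can be sketched for correlations, assuming each study reports a correlation r and a sample size n. Each r is converted to Fisher Z, the Z values are averaged with the conventional weights n - 3 (the inverse of the approximate sampling variance of Z), and the mean is transformed back. The conversion from Cohen's d assumes two groups of equal size. Function names and study values are illustrative only.

```python
import math

def d_to_r(d):
    """Convert Cohen's d to a correlation, assuming two equally sized groups."""
    return d / math.sqrt(d ** 2 + 4)

def pooled_correlation(rs, ns):
    """Fixed-effect pooled correlation via Fisher's Z transformation."""
    # Fisher Z: z = atanh(r); its sampling variance is approximately 1 / (n - 3)
    zs = [math.atanh(r) for r in rs]
    weights = [n - 3 for n in ns]
    z_bar = sum(w * z for w, z in zip(weights, zs)) / sum(weights)
    # Transform the weighted mean Z back to the correlation metric
    return math.tanh(z_bar)

# Two hypothetical studies: one reports r directly, the other reports d
print(round(pooled_correlation([0.10, d_to_r(0.30)], [203, 103]), 3))
```

This works only because every study is reduced to one number on a common scale, which is exactly what the school size literature does not offer.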

Many different forms besides a straightforward linear relationship (i.e., the smaller/larger the better) are hypothesized and reported in research on the effects of school size, e.g., quadratic and log-linear. In a considerable number of studies, several different size categories are compared. The reason is that researchers want to take into account the possibility that it may be more appropriate to look for an optimal school size than to estimate a linear relationship between size and outcomes. Such a linear relationship would imply that the best results occur if schools were either as large as possible (e.g., one school for an entire district) or as small as possible (e.g., single class schools).

Providing a quantitative summary of school size research is therefore quite complicated. Often more than two school size categories are compared, and the categories used vary between studies. In other cases the relation between school size and outcomes is modeled as a mathematical function (mostly linear, log-linear, or quadratic). The findings from these studies are not only difficult to compare with findings from studies that compare school size categories; the distinct mathematical functions also cannot be converted to a common metric. When the effect of school size is modeled as a quadratic function, two distinct coefficients (linear and quadratic) must be estimated, which by definition precludes converting the findings to a single metric.
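Why a quadratic specification resists conversion to a single effect size can be seen from its slope. For a predicted outcome b0 + b1·size + b2·size², the marginal effect of one extra student is b1 + 2·b2·size, which changes with school size and may even change sign. The coefficients below are hypothetical and chosen only to make that visible.

```python
def quadratic_prediction(size, b0, b1, b2):
    """Predicted outcome under a quadratic school size model."""
    return b0 + b1 * size + b2 * size ** 2

def quadratic_slope(size, b1, b2):
    """Marginal effect of one additional student at a given school size."""
    return b1 + 2 * b2 * size

# Hypothetical coefficients (not taken from any reviewed study)
B0, B1, B2 = -0.5, 0.002, -1e-6

for size in (250, 1000, 1750):
    # The slope is positive for small schools, zero at 1,000, negative beyond
    print(size, quadratic_prediction(size, B0, B1, B2), quadratic_slope(size, B1, B2))
```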

As standard meta-analysis methods are not suitable for drawing up a quantitative summary of the research findings, another approach is used. Based on the findings reported in the reviewed studies, the “predicted” outcomes at a given school size are calculated. To achieve comparability of the results, only the scores on the outcome variables have been standardized to z-scores. There is no need to standardize the explanatory variable as well, because only studies that use the same operationalization of school size (i.e., total number of students enrolled) have been included. Standardization of both the explanatory and the dependent variable is often applied in meta-analysis when the focus is on the relationship between two numerical variables. Often this is the only option available to render findings from different studies comparable, as the operationalizations of both dependent and independent variables tend to vary across studies. In such cases standardized regression coefficients may be the “raw material” processed in the meta-analysis. In the present case standardizing the independent variable is not required, but standardization of the outcomes is unavoidable, as the raw scores are incomparable across studies. Whatever the outcome variable relates to (student achievement, involvement, safety), its operationalization is bound to differ from one study to the next. The approach applied here reports, for specific school sizes, the average standardized outcomes over a number of studies. More details on this method are provided below, where we illustrate how the “predicted” outcomes have been calculated for a couple of studies.

A potential risk of the approach relates to samples with strongly diverging ranges on the explanatory variable. Suppose that one is dealing with two samples. In the first sample, school size ranges from very small (single class schools) to a total enrolment of 500 students, and the average school size is 250. The second sample consists of schools with enrolments ranging from 500 to 1,000 students, and the average school size is 750. If the effect of school size on achievement is identical in both samples (e.g., achievement decreases by one tenth of a standard deviation for every additional 100 students enrolled), one would conclude that achievement is two tenths of a standard deviation above average both in schools with 50 students and in schools with 550 students. This interpretation might be correct, but it might also be mistaken. It is conceivable that average achievement is much higher in the sample with smaller schools. In that case, the previous interpretation is clearly a mistake, because each prediction is expressed relative to the average of its own sample. It is therefore very important to be cautious in drawing conclusions from samples that vary strongly with regard to the range of school sizes. Note that similar risks apply to more commonly applied methods of meta-analysis.
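The two-sample example above can be reproduced in a few lines. Each prediction is centered on its own sample's mean school size, so the same slope yields the same predicted z-score for a 50-student school in the first sample and a 550-student school in the second; nothing in the calculation reflects a possible difference between the two samples' overall achievement levels. The helper name is illustrative.

```python
def predicted_z(size, mean_size, slope_per_100):
    """Predicted standardized outcome relative to the sample's own average.

    slope_per_100 is the change in SD units per 100 additional students.
    """
    return slope_per_100 * (size - mean_size) / 100

SLOPE = -0.1  # 0.1 SD lower per 100 extra students, as in the example

small_sample = predicted_z(50, mean_size=250, slope_per_100=SLOPE)
large_sample = predicted_z(550, mean_size=750, slope_per_100=SLOPE)

# Identical predictions, yet each is relative to a different baseline
print(round(small_sample, 3), round(large_sample, 3))  # 0.2 0.2
```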

4.2 Summarizing the Research Findings

Separate analyses are reported for student achievement and noncognitive outcomes. If an effect of school size is reported for more than one measure of student achievement (e.g., both language and mathematics), the average of these effects is reported in the summary. The same goes for noncognitive outcomes: some studies cover the effect of school size on a wide range of noncognitive outcomes (e.g., involvement, attendance, and safety), and in these cases, too, the average effect is reported in the summary.

Findings will be reported separately for primary and secondary education. The main focus will be on the effect of school size on individual students. The key question addressed is to what extent student scores (cognitive or noncognitive) turn out to be relatively high or low given a certain school size. Student scores are standardized according to the well-known z-score transformation: first the mean is subtracted from each score, and next the resulting difference is divided by the standard deviation. In another frequently applied approach, the result after subtraction is divided not by the standard deviation in student scores, but by the standard deviation in school averages. The main argument for this approach is that school size, being a school level characteristic, can only have an impact on school means. Also, when an analysis is based on data that are aggregated at the school level, it is hardly ever possible to estimate the effect of school size at the student level (unless information is available on the variation among student scores within schools). One highly important consequence of this approach is that it will inevitably yield larger estimates of school size effects. Only in the extreme situation where all variation in student scores is situated at the school level (which would imply a complete absence of differences among students within schools) does this approach yield the same estimate of a school size effect. However, as long as there is some variance between students within schools (which is always the case in real life), the school level variance, and therefore its standard deviation, is smaller than the total variance and standard deviation among students.

The argument outlined above may be illustrated with a simple example. Suppose that the standard deviation of a student achievement score equals 10 (so the variance is 100) and that the percentage of school level variance is 16 (see Footnote 1). This implies a school level variance of 16 and thus a standard deviation of 4 (the square root of 16). Now let us assume that in large schools achievement scores are on average about 2 points lower than in small schools (for the moment, we will not deal with the question of what counts as small and large school size). At the student level, this is a modest effect at best (one fifth of a standard deviation), but if we compare the difference to the standard deviation among school means, the effect looks fairly impressive: the difference between large and small schools then equals half a standard deviation. Note that this inflation of the school size effect becomes even stronger as the percentage of school level variance decreases. In that case the standard deviation among school means also gets smaller, which makes the effect of school size appear larger still. This is particularly relevant for noncognitive outcomes, for which differences between schools have often been reported to be quite modest.
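The arithmetic of this example is easy to reproduce. In the minimal sketch below, the function name is illustrative and `school_share` stands for the proportion of total variance located between schools (0.16 in the example). The second call shows how a smaller school-level share inflates the school-based effect.

```python
import math

def standardized_difference(raw_diff, total_sd, school_share):
    """Express a raw difference relative to the total SD and to the SD of school means.

    school_share is the proportion of total variance located between schools.
    """
    between_sd = math.sqrt(school_share * total_sd ** 2)
    return raw_diff / total_sd, raw_diff / between_sd

# Values from the example: SD 10, 16% school-level variance, 2-point gap
per_student, per_school = standardized_difference(2.0, 10.0, 0.16)
print(round(per_student, 2), round(per_school, 2))  # 0.2 0.5

# With only 4% school-level variance the same gap looks twice as large again
print(round(standardized_difference(2.0, 10.0, 0.04)[1], 2))  # 1.0
```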

In the authors’ opinion, the most appropriate basis for expressing the school size effect is the total amount of variation (i.e., the standard deviation) among student scores. This puts the impact of school size in the right perspective. The impact is limited because it only affects school means. Most of the variation in student scores (both cognitive and noncognitive) is situated within schools. This variation cannot be affected by changes in school size unless school size interacts with a student level variable (e.g., some studies have reported that the effect of school size is relatively strong for socioeconomically disadvantaged students). This natural limit on the impact of school level characteristics should be clearly expressed in an assessment of the effects of school size. However, findings that are standardized by means of the school level standard deviation will be reported as well; otherwise a substantial part of the available research would be discarded.
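The decomposition behind this argument (total variance = variance of school means + average variance within schools) can be checked on toy data. This is a minimal sketch, assuming equal numbers of students per school and population variances; with unequal school sizes the components would need to be weighted. The scores are invented for illustration.

```python
from statistics import mean, pvariance

def decompose_variance(scores_by_school):
    """Split total variance into between-school and within-school parts.

    Assumes every school contributes the same number of students.
    """
    all_scores = [s for school in scores_by_school for s in school]
    school_means = [mean(school) for school in scores_by_school]
    total = pvariance(all_scores)
    between = pvariance(school_means)
    within = mean(pvariance(school) for school in scores_by_school)
    return total, between, within

# Toy data: two schools with two students each
total, between, within = decompose_variance([[1, 3], [5, 7]])
print(total, between, within)  # total equals between + within
```

As long as `within` is greater than zero, `between` (and hence the standard deviation of school means) is smaller than the total.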

If one study covers two or more distinct samples (e.g., primary school students and secondary school students; or samples from different countries or regions) the outcomes per sample will be treated separately when the findings are reviewed. Thus, it is possible that a single study contributes more than one result when summarizing the findings.

Findings on the effect of school size are included in the summary if they meet the following two preconditions. First, sufficient information needs to be provided for calculating the “predicted” outcomes at a given school size. Some authors report only unstandardized regression coefficients without providing information on the mean and standard deviation of the outcome variables. In such cases it is impossible to determine what the standardized outcome will be according to the regression model. In other cases only standardized regression coefficients are reported. In such cases one needs information on the mean and standard deviation of the explanatory variable (i.e., school size) in order to determine what the standardized outcomes will be for a given school size. The second precondition is that findings are only included if prior achievement has been controlled for. This is the case if the analysis is based on growth scores or if student achievement has been adjusted for prior achievement. Note that controlling for cognitive aptitude (e.g., IQ measures) has only been counted as controlling for prior achievement if students took the aptitude test at an earlier point in time. Some studies did control for cognitive aptitude measured during the same period in which the outcome measures were collected. Findings from these studies have not been included in the quantitative summary.

4.3 School Size and Student Achievement in Primary Education

Out of the total number of studies on school size reviewed, five relate to its effect on individual student achievement in primary education and also meet the preconditions specified above. All five studies were conducted in the United States. Basic details about these studies are provided in Table 4.1.

In the studies by Archibald (2006), Holas and Huston (2012), and Maerten-Rivera et al. (2010), the relation between school size and student achievement is modeled as a linear function. Of these, only Archibald (2006) reports a significant (and negative) effect of school size for both reading and mathematics. Maerten-Rivera et al. (2010) also report a negative effect, but a nonsignificant one. Holas and Huston (2012) only report that their analyses failed to reveal a significant relationship; for the quantitative summary of the research findings, it is assumed that they found a zero relationship. In the studies by Lee and Loeb (2000) and by Ready and Lee (2007), different categories of schools are compared. Lee and Loeb (2000) distinguish three categories (less than 400 students, 400–750 students, and over 750 students). Ready and Lee (2007) distinguish five categories (less than 275 students, 275–400 students, 400–600 students, 600–800 students, and over 800 students). Lee and Loeb (2000) report significantly lower performance in the medium category (400–750 students) than in the small category. Ready and Lee (2007) report significantly lower reading performance in first grade in the large schools category (>800 students) than in the medium category (400–600 students). For mathematics in first grade, they report significantly higher performance in the small schools category (<275 students) than in the medium category. No significant effects of school size were found in kindergarten.

A closer look at the findings reported by Archibald (2006) makes their implications more concrete. The reported standardized regression coefficients equal −0.03 and −0.07 for reading and mathematics, respectively. As the mean and standard deviation of school size are reported as well (see Table 4.1), any school size can be transformed into a z-score. The z-scores corresponding to school sizes ranging from 150 to 850 are displayed in Table 4.2. One then only needs to multiply these z-scores by either −0.03 or −0.07 to arrive at the predicted z-scores for reading or mathematics. As Table 4.2 shows, the Archibald findings imply that in a primary school with 800 students, reading scores are on average 0.055 of a standard deviation below average. For mathematics this will be 0.128 of a standard deviation below average. The table also reports the average results across both subjects.
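The two steps just described (convert a school size to a z-score, then multiply by the standardized coefficient) can be written as a small function. Only the coefficients -0.03 and -0.07 come from the text; the mean and standard deviation of school size below are hypothetical placeholders, since the actual values appear in Table 4.1 and are not reproduced here, so the printed numbers will not match Table 4.2.

```python
def predicted_outcome_z(size, beta_std, size_mean, size_sd):
    """Predicted standardized outcome implied by a standardized regression coefficient."""
    size_z = (size - size_mean) / size_sd
    return beta_std * size_z

# Hypothetical school size distribution (placeholder, NOT Archibald's Table 4.1 values)
SIZE_MEAN, SIZE_SD = 450.0, 200.0
BETAS = {"reading": -0.03, "mathematics": -0.07}  # reported by Archibald (2006)

for size in range(150, 851, 100):
    preds = {s: predicted_outcome_z(size, b, SIZE_MEAN, SIZE_SD) for s, b in BETAS.items()}
    average = sum(preds.values()) / len(preds)  # average across both subjects
    print(size, {s: round(v, 3) for s, v in preds.items()}, round(average, 3))
```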

Lee and Loeb (2000) report differences in mathematics achievement between various school size categories after controlling for numerous confounding variables, including prior achievement. The differences reported are standardized by dividing by the standard deviation among school averages. As both within school and between school variances are reported (Lee and Loeb 2000, p. 18), it is possible to rescale the reported differences relative to the total standard deviation in student achievement scores. Lee and Loeb (2000, p. 21) report that math scores are on average 0.073 of a standard deviation higher in small schools than in medium schools (less than 400 students versus 400–750 students). The advantage of small over large schools is more modest (0.041 and statistically not significant). Given the information provided in Lee and Loeb (2000) and assuming that the standardized average score must be equal to zero, it is possible to compute the “predicted” average for each school size category. Table 4.3 reports two types of standardized scores: first the scores standardized relative to the standard deviation among school means, and next the scores standardized relative to the total standard deviation in math scores (i.e., taking into account variation within and between schools). The table shows that the highest scores were found in the smallest schools. However, the differences are clearly more modest when they are standardized relative to the standard deviation based on variation both within and between schools. The findings clearly suggest a curvilinear relationship between school size and achievement. Based on the standardized averages per category, a quadratic function has been estimated. This approach has also been applied to the findings reported by Ready and Lee (2007) and, further on, to findings from other studies that focus on differences between three or more school size categories.
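The three steps in this paragraph (center the category differences so their weighted average is zero, rescale from the between-school SD to the total SD, and fit a quadratic through the category averages) can be sketched with NumPy. All inputs are hypothetical: the differences, category weights, and variances are not the values reported by Lee and Loeb (2000), and because open-ended categories such as "over 750" have no midpoint, the representative size assigned to each category is an assumption.

```python
import numpy as np

def center_category_means(diffs_vs_ref, shares):
    """Category means whose share-weighted average is zero.

    diffs_vs_ref: each category's difference from a reference category.
    shares: weight of each category (e.g., number of students).
    """
    diffs = np.asarray(diffs_vs_ref, dtype=float)
    return diffs - np.average(diffs, weights=shares)

def rescale_to_total_sd(z_between, var_between, var_within):
    """Re-express scores standardized on the SD of school means on the total SD."""
    return np.asarray(z_between) * np.sqrt(var_between / (var_between + var_within))

# Hypothetical inputs (illustration only)
sizes = np.array([300.0, 575.0, 900.0])  # assumed representative size per category
diffs = [0.0, -0.20, -0.10]              # differences from the smallest category
shares = [1, 1, 1]

z_between = center_category_means(diffs, shares)
z_total = rescale_to_total_sd(z_between, var_between=16.0, var_within=84.0)

# Quadratic through the category averages: coefficients for size**2, size, constant
coefs = np.polyfit(sizes, z_total, deg=2)
print(z_between, z_total, np.polyval(coefs, 700.0))
```

With three categories the quadratic passes exactly through the category averages; with more categories `np.polyfit` returns a least-squares fit.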

Table 4.4 reports the main findings from all five studies on the school size effect in primary education based on student level findings. For each study, the predicted standardized achievement scores at the student level are reported. All five studies report outcomes within the range from 200 to 850 students enrolled. For school sizes within this range, a weighted average across all five studies has been calculated, with the outcomes per study weighted by the number of students (see Footnote 2). Figure 4.1 provides a graphical display of the findings. On average, a slightly negative effect of school size on student achievement scores is detected. It should be noted, though, that the difference in student achievement between primary schools with 200 and 850 students enrolled is still below one tenth of a standard deviation.
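The weighting step can be sketched as follows, assuming the predicted z-scores of each study at one school size and the number of students per study are available. The study values are hypothetical; in the chapter the average is only computed for sizes covered by all studies.

```python
import numpy as np

def weighted_average_prediction(predictions, n_students):
    """Student-weighted mean of predicted z-scores across studies at one school size."""
    return float(np.average(predictions, weights=n_students))

# Hypothetical predictions from three studies at the same school size
preds_at_500 = [-0.02, 0.01, -0.05]
students = [10_000, 2_500, 7_500]
print(round(weighted_average_prediction(preds_at_500, students), 4))
```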

4.4 School Size and School Mean Achievement in Primary Education

As already mentioned, it is also customary to standardize school size effects relative to the standard deviation among school means. This is the only option available when the analyses are based on aggregated school data. When multilevel analyses are conducted, it is possible to compute both types of standardized scores, provided that the necessary information on variance within and between schools on the outcome variable is reported. This is the case for three of the studies discussed in the previous section (Archibald 2006; Lee and Loeb 2000; Maerten-Rivera et al. 2010). See Table 4.5 for basic details on these studies. One additional study on school size and student achievement in primary education is included in Table 4.5 (Fernandez 2011). This study is based on aggregated school data. Like the other studies discussed so far, it relates to American schools (Nevada). The reported effect of school size on achievement is not significant, and the standardized regression coefficient shows no noticeable deviation from zero. The study by Fernandez also includes high schools and middle schools, but the effects of school size are controlled for school type.

Appendix 4.1 presents the predicted standardized school means per school size for these studies. Figure 4.2 provides a graphical display of the findings. The figures in Appendix 4.1 also illustrate to what extent school size effects “increase” when the standardization is based on variation between school means. In the Archibald study the predicted standardized student scores range from 0.127 in schools with 200 students to −0.110 in schools with 800. The predicted standardized school means in the same study range from 0.296 to −0.257. Similar increases can be observed for the studies by Lee and Loeb (2000) and Maerten-Rivera et al. (2010). The impact of school size clearly appears to be more impressive if one compares the differences between large and small schools to the standard deviation of the school averages. Still, it is our opinion that the effects reported in Table 4.4 (i.e., impact on student scores) provide a more appropriate description of the impact of school size.
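Because the two Archibald series express the same predicted differences over two different denominators, the ratio of student-level to school-level values should be roughly constant, and its square approximates the proportion of outcome variance located between schools. A quick back-of-the-envelope check using only the four values quoted above, assuming both series were derived from the same predictions; since the reported values are rounded, treat the result as approximate.

```python
# Standardized predictions quoted in the text for the Archibald (2006) study
student_level = {200: 0.127, 800: -0.110}  # divided by the total SD
school_level = {200: 0.296, 800: -0.257}   # divided by the SD of school means

for size in student_level:
    # Same raw difference, two denominators: the ratio is SD_between / SD_total
    ratio = student_level[size] / school_level[size]
    # Squaring gives the implied share of outcome variance located between schools
    print(size, round(ratio, 3), round(ratio ** 2, 3))
```

Both sizes give a ratio of about 0.43, so the school-based figures are roughly 2.3 times the student-based ones.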

4.5 School Size and Student Achievement in Secondary Education

Six studies have been found that relate to the effect of school size on individual student achievement in secondary education and also meet our preconditions. Of these, five relate to secondary schools in the United States; the study by Ma and McIntyre (2005) deals with the situation in Canada (Alberta). Basic details are reported in Table 4.6. Except in the study by Ma and McIntyre (2005), the effect of school size is analyzed through a comparison of different categories. However, there is little similarity in the categorizations applied. The number of categories ranges from 4 (Carolan 2012; Rumberger and Palardy 2005) to 8 (Lee and Smith 1997). See Table 4.6 for more details.

Most of the studies included in Table 4.6 report differences in student achievement between school categories. In those cases, a quadratic function has been estimated to describe the relation between school size and student achievement. This function is based on the standardized averages per category. There are two exceptions. The first is the study by Luyten (1994), which only reports that no significant differences between categories were found. The other exception is the study by Ma and McIntyre (2005). Here a linear relation between school size and achievement is estimated, but the authors only report a significant interaction effect of taking math courses with school size on the mathematics post-test (the effect of taking math courses is weaker in larger schools; in other words, students who take math courses get higher scores if they attend smaller schools). No main effect of school size on math achievement is reported, so for this review it is assumed that the main school size effect is not statistically significant in this study. As no further details are reported, it is assumed for the summary of the research findings that both Luyten (1994) and Ma and McIntyre (2005) found a zero relationship.

Appendix 4.2 reports the predicted standardized achievement scores per school size in secondary education for individual student achievement. Weighted averages for school sizes within the range from 400 to 1,900 students enrolled are presented as well. Note that the studies by Luyten (1994) and Ma and McIntyre (2005) do not fully cover this range. The zero effects that are reported in these studies are assumed to extend beyond the exact ranges covered in these studies. In contrast to primary education, the findings suggest a curvilinear relation between school size and student achievement. The lowest scores are found in small secondary schools (−0.050). In schools with enrolments ranging from 1,200 to 1,600, the scores are at least one-tenth of a standard deviation higher. When schools get larger, the predicted scores decrease somewhat. Figure 4.3 provides a graphical display of the findings.
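Where a quadratic function describes the relation, the location of the optimum follows directly from its coefficients: the predicted outcome b0 + b1·size + b2·size² peaks at size = -b1 / (2·b2) when b2 is negative. A minimal sketch with hypothetical coefficients; these are not the values underlying Appendix 4.2.

```python
def optimum_size(b1, b2):
    """School size at which b0 + b1*size + b2*size**2 reaches its maximum (requires b2 < 0)."""
    if b2 >= 0:
        raise ValueError("no interior maximum unless the quadratic term is negative")
    return -b1 / (2 * b2)

# Hypothetical coefficients for illustration only
print(optimum_size(b1=5.6e-4, b2=-2e-7))
```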

4.6 School Size and School Mean Achievement in Secondary Education

Appendix 4.3 reports the predicted standardized achievement scores per school size in secondary education for school mean achievement. For four out of the six studies included in Appendix 4.2, it was possible to calculate predicted standardized school means per school size. The study by Fernandez (2011), which makes use of aggregated school-level data (from Nevada, USA), is also included in Appendix 4.3. Again the findings reveal a curvilinear pattern, but now the lowest scores are found in the largest schools and the highest scores in schools with enrolments ranging from 900 to 1,250. This suggests a somewhat smaller optimal school size than the results based on individual achievement data. The findings from Appendix 4.3 are graphically displayed in Fig. 4.4.

4.7 School Size and Noncognitive Outcomes in Primary Education (Individual and School Means)

A wide range of outcome variables is subsumed under the label noncognitive outcomes. Still, the number of studies on school size and noncognitive outcomes in primary education that report sufficient information to calculate the predicted outcomes per school size is quite limited, even though the requirements for inclusion in the quantitative summary are less stringent than for academic achievement. For studies on noncognitive outcomes, controlling for prior achievement was not considered necessary; inclusion of socioeconomic background as a covariate in the analyses was deemed sufficient.

For the summary relating both to individual outcomes and school means five distinct studies are available. Of these, one relates exclusively to the effect of school size on individual outcomes (Holas and Huston 2012), two relate exclusively to school means (Durán-Narucki 2008; Lee and Loeb 2000) and two relate to both levels (Bonnet et al. 2009; Koth et al. 2008). See Table 4.7 for an overview of the studies on school size and noncognitive outcomes in primary education.

Four of the five studies listed in Table 4.7 report on American research; the other one relates to research in the Netherlands. In three studies, the effect of school size is modeled as a linear function (Durán-Narucki 2008; Holas and Huston 2012; Koth et al. 2008). In the other two studies, three categories are compared (Bonnet et al. 2009: <300, 301–500, >500; Lee and Loeb 2000: <400, 400–750, >750). When summarizing the findings, the results reported by Bonnet et al. (2009) have been rescored so that a high score denotes a positive situation (i.e., little peer victimization). These authors report significantly more victimization in the category of large schools (over 500 students). Lee and Loeb (2000) report significantly more positive teacher attitudes about responsibility for student learning in small schools (less than 400 students). Based on the standardized averages per category, a quadratic function has been estimated to describe the relation between school size and noncognitive outcomes in these two studies. Holas and Huston (2012) analyzed the linear relation between school size and three noncognitive outcomes (student perceived self-competence, and school involvement in grade 5 and in grade 6). Only the relation between size and involvement in grade 6 was found to be significant. The predicted scores presented in Appendix 4.4 denote the averages across these three outcomes. The study by Koth et al. (2008) focuses on achievement motivation and student-reported order and discipline. The relation between school size and order and discipline is not significant, but the authors found a significantly negative relation between school size and achievement motivation. In Appendix 4.4, the averages across both outcomes are reported. Durán-Narucki (2008) focused on attendance and found significantly higher attendance in large schools (see Appendix 4.5). This is the only study on noncognitive outcomes in primary education that shows positive effects of large school size.

The weighted average in Appendix 4.4 suggests a somewhat stronger effect of school size on noncognitive student outcomes in primary education as compared to achievement scores (see Table 4.4). The difference between primary schools with 200 versus 600 students is 0.13 standard deviation. With regard to student achievement scores, the difference between schools with 200 versus 600 students equals 0.076 standard deviation. Appendix 4.5 reports the predicted standardized school means per school size. The effect of school size looks stronger when standardized relative to standard deviation among school means. However, the standardization applied in Appendix 4.4 must be considered more appropriate. Graphic displays of the findings on the relation between school size and noncognitive outcomes in primary education are provided in Figs. 4.5 and 4.6.

4.8 School Size and Noncognitive Outcomes in Secondary Education

A relatively large number of studies provide details on the predicted level of noncognitive outcomes per school size in secondary education. Table 4.8 provides basic information about these studies. The total number of studies is 19, but the study by Kirkpatrick Johnson et al. (2001) reports separate findings for middle schools (grades 7 and 8) and high schools (grades 7–12), so the number of samples equals 20.

Twelve samples focus on the relation of school size with student outcomes and seventeen on the relation with school mean scores. Nine samples provide information on both student outcomes and school mean scores. Most research derives from the USA, but seven studies relate to other countries (two Israeli, two Dutch, the remaining three from Australia, Italy, and Taiwan). Many studies focus on the occurrence of incidents and other undesirable phenomena (such as harassment, disorder, theft, and vandalism). All outcomes have been rescored in such a way that low scores denote a negative situation (e.g., high frequencies of vandalism and theft or low levels of safety or involvement). In most studies school size is modeled as a continuous variable. Only five studies make use of school size categories (Bowen et al. 2000; Chen 2008; Chen and Vazsonyi 2013; Dee et al. 2007; Rumberger and Palardy 2005). In the remaining 15 samples, the relation between school size and noncognitive outcomes is mostly modeled as a linear function, but in three cases (Gottfredson and DiPietro 2011; McNeely et al. 2002; Payne 2012) the researchers modeled it as a log-linear function (i.e., outcomes were regressed on the log of school size).
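For the samples in which outcomes were regressed on the log of school size, the predicted outcome at a given size is computed on the log scale. A minimal sketch, assuming a standardized coefficient on ln(school size) together with the mean and standard deviation of ln(school size); all numbers are hypothetical. Under this specification equal proportional increases have equal effects, so an extra 200 students matters more in a small school than in a large one.

```python
import math

def predicted_z_loglinear(size, beta_std, log_mean, log_sd):
    """Predicted standardized outcome when the model uses ln(school size)."""
    return beta_std * (math.log(size) - log_mean) / log_sd

# Hypothetical distribution of ln(school size) and coefficient
LOG_MEAN, LOG_SD, BETA = math.log(800), 0.5, -0.05

# Doubling from 200 to 400 shifts the prediction as much as doubling from 800 to 1,600
gap_small = (predicted_z_loglinear(400, BETA, LOG_MEAN, LOG_SD)
             - predicted_z_loglinear(200, BETA, LOG_MEAN, LOG_SD))
gap_large = (predicted_z_loglinear(1600, BETA, LOG_MEAN, LOG_SD)
             - predicted_z_loglinear(800, BETA, LOG_MEAN, LOG_SD))
print(round(gap_small, 4), round(gap_large, 4))
```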

As shown in Table 4.8, many studies on noncognitive outcomes relate to multiple outcome measures. In these cases, the average effect of school size across the outcome measures involved has been computed. These are the outcomes reported in Appendices 4.6a–c and the corresponding figures.

4.9 School Size and Noncognitive Student Outcomes

Appendix 4.6a presents the findings for the American studies that focus on student outcomes. Appendix 4.6b reports the findings for the non-U.S. studies. The averages across studies (overall and broken down for American and non-U.S. samples) are reported in Appendix 4.6c. Graphic representations of the results are provided in the Figs. 4.7, 4.8 and 4.9.

For three out of the five American samples negative and significant effects on noncognitive outcomes are reported. The study by Gottfredson and DiPietro (2011) has come up with significantly positive effects. Kirkpatrick Johnson et al. (2001) report nonsignificant effects for their sample that focuses on students in middle schools. The strongest effect is reported in the study by Bowen et al. (2000), which reports a difference of about half a standard deviation between the smallest and the largest schools. School size ranges in this study from less than 100 students to nearly 1,400. The outcome measures relate to school satisfaction, safety, and teacher support.

Whereas the American findings mostly show negative effects of large school size on noncognitive student outcomes in secondary education, research conducted outside the U.S. fails to confirm this picture. Appendix 4.6b presents the results from six studies conducted outside the U.S. Of these, three show a negative effect of large school size, but the other three show a positive effect. Two of the negative effects are statistically significant (Attar-Schwartz 2009; Van der Vegt et al. 2005). Only one of the reported positive effects is significant (Mooij et al. 2011). All of these three studies relate to various aspects of school safety. Two of these studies were conducted in the Netherlands. Both reports show significant effects, but in different directions. The finding reported by Vieno et al. (2005) for Italy deserves special mention. The effect in this study appears to be particularly strong, without reaching statistical significance. Perhaps the strong effect is due to over-fitting, as the number of explanatory variables at the school level is quite large relative to the number of schools.

Appendix 4.6c and Fig. 4.9 give the general picture of the relation between school size and noncognitive outcomes at the student level across all twelve samples. The overall effects of school size on noncognitive student outcomes appear to be quite modest, and the findings from the U.S. and from outside the U.S. contradict each other. The average effect in American studies is slightly negative. Studies from other countries (Israel, Italy, the Netherlands, and Taiwan) show, on average, a positive effect of school size. Even when the findings from the study by Vieno et al. (2005) are excluded from the summary, the effect of school size remains positive, although it becomes considerably smaller. School size effects on noncognitive student outcomes must therefore be described as small. The difference between predicted scores in schools with 300 versus 1,100 students is about 0.06 standard deviation (positive or negative). The findings for the U.S. suggest a negative effect of large school size, but this average effect is even smaller than the positive effect found in other countries.
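The 300-versus-1,100 comparison can be reproduced from the weighted averages in Appendix 6c. The Python sketch below is only illustrative. The dictionary keys and the `size_gap` helper are introduced here for the example. The inputs are the published, rounded table values, so the gaps are accurate only to about the third decimal.

```python
# Weighted averages from Appendix 6c (noncognitive student outcomes,
# secondary education), in student-level standard deviations.
pred = {
    "all": {300: -0.0160, 1100: 0.0469},
    "us": {300: 0.0284, 1100: -0.0079},
    "non_us": {300: -0.0454, 1100: 0.0832},
    "non_us_excl_vieno": {300: -0.0294, 1100: 0.0329},
}

def size_gap(series, small=300, large=1100):
    """Predicted score at the large size minus the score at the small size."""
    return series[large] - series[small]

gaps = {key: round(size_gap(series), 3) for key, series in pred.items()}
print(gaps)
# {'all': 0.063, 'us': -0.036, 'non_us': 0.129, 'non_us_excl_vieno': 0.062}
```

A positive gap favors large schools and a negative gap favors small schools. The overall gap of about 0.06 and the U.S. gap of about -0.04 are the figures quoted in the text.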

4.10 School Size and Noncognitive School Mean Scores

The findings on the relation between school size and standardized school mean scores largely replicate those on student outcomes. The main difference is that the effects on school mean scores appear to be stronger. This is largely a statistical artifact. The variation in school means is bound to be smaller than the variation in student scores, so the same difference in predicted scores amounts to more standard deviations when it is divided by the former. Again we see negative but relatively small effects of large school size in the U.S., while non-U.S. research shows the reverse picture. More details are provided in Appendices 7a–c, and Figs. 4.10, 4.11, and 4.12 illustrate the trends described.
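A small numerical sketch shows why standardizing by the standard deviation of school means inflates the apparent effect. All values are hypothetical: a student-level variance of 100, of which 20% lies between schools. The standard deviation of school means is approximated by the square root of the between-school variance component, which ignores sampling error in the school means.

```python
import math

def standardized_gaps(raw_diff, total_var, icc):
    """Express one raw score difference in two standardization units.

    total_var: variance of student scores (hypothetical value)
    icc:       share of that variance lying between schools (hypothetical)
    """
    student_sd = math.sqrt(total_var)
    # SD of school means approximated by the between-school variance component
    school_sd = math.sqrt(icc * total_var)
    return raw_diff / student_sd, raw_diff / school_sd

d_student, d_school = standardized_gaps(raw_diff=1.0, total_var=100.0, icc=0.2)
print(round(d_student, 3), round(d_school, 3))  # 0.1 0.224
```

Under these assumptions, the same raw difference looks about 2.2 times larger (1/√0.2) in school-level units, even though the underlying effect is unchanged.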

4.11 Conclusion

The research synthesis presented in this chapter aimed at a precise specification of the relationship between school size and outcomes (both cognitive and noncognitive) in primary and secondary education. The predicted level of standardized outcomes at a given school size was calculated for dozens of samples, based on the information provided in reports on the effects of school size. The discussion of the findings focuses on outcomes standardized through division by the standard deviation in student scores. The alternative, division by the standard deviation in school means, is considered less appropriate. It is bound to produce results that suggest stronger effects of school size, as the present report confirms. Moreover, it obscures the fact that school size is unlikely to affect variation in student outcomes within schools, whereas the bulk of the variation in student scores (cognitive and noncognitive) is situated within schools.

On average, the review shows a slightly negative relation between school size and both cognitive and noncognitive outcomes in primary education. This finding is almost exclusively based on American research. The difference in predicted scores between very small and large schools is less than one tenth of a standard deviation for cognitive outcomes. It is somewhat larger (0.13 standard deviation) for noncognitive outcomes. The difference in size between the smallest and the largest schools amounts to at least two standard deviations of school size. The effect of school size expressed as a standardized effect size (e.g., Cohen’s d) must therefore be very modest. For noncognitive outcomes, it may still exceed the (very modest) value of 0.05, but for cognitive outcomes the effect is even weaker.
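Converting a difference in predicted scores into an effect size per standard deviation of school size is a single division. The minimal Python sketch below assumes the relation is roughly linear over the range compared. That is an assumption, since several of the relations reviewed are curvilinear. The function names are illustrative. The inputs are the figures quoted above plus a hypothetical school-size standard deviation of 500 students.

```python
def span_in_sd(size_small, size_large, size_sd):
    """Number of school-size standard deviations between two school sizes."""
    return (size_large - size_small) / size_sd

def effect_per_sd(score_diff, span_sd):
    """Score difference (in outcome SDs) per SD of school size.
    Assumes the relation is roughly linear over the span (an assumption)."""
    return score_diff / span_sd

# Primary education: smallest vs largest schools span at least 2 SDs of size
noncog = effect_per_sd(0.13, 2.0)     # noncognitive outcomes
cog_upper = effect_per_sd(0.10, 2.0)  # cognitive: the difference is below 0.10
# Hypothetical: schools 1,000 students apart when the SD of school size is 500
alt = effect_per_sd(0.10, span_in_sd(400, 1400, 500))

print(round(noncog, 3), round(cog_upper, 3), round(alt, 3))  # 0.065 0.05 0.05
```

The span is at least two standard deviations, so these figures are upper bounds. They come to about 0.065 for noncognitive outcomes and below 0.05 for cognitive outcomes.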

For cognitive outcomes in secondary education, a curvilinear pattern emerged from the studies reviewed. The highest scores appear to occur in schools with more than 1,200 but fewer than 1,600 students. Larger schools show lower scores, but the lowest scores are predicted for schools with fewer than 700 students. The difference between the lowest scoring schools (400 students) and the highest scoring schools (1,350–1,500 students) is just over one tenth of a standard deviation. Because the relation between school size and outcomes does not always fit a linear pattern, it is difficult to express it in more common metrics such as Cohen’s d or a correlation coefficient. As a standardized effect size, the difference between the highest scoring (i.e., medium to large) schools and small schools probably amounts to less than 0.10. That would commonly be considered a small effect (i.e., Cohen’s d < 0.20). This assessment rests on the supposition that the difference in size between very small and medium to large schools (approximately 1,000 students) amounts to at least one standard deviation of school size (see Note 3). The findings on cognitive outcomes are based exclusively on research conducted in the U.S.
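The location of the peak and the trough can be read directly off the weighted-average column of Appendix 2. The sketch below copies that column for school sizes 400 to 1,900, the range over which a weighted average is reported, and locates the extremes. The 0.0015 tolerance used to flag sizes close to the top is an arbitrary choice for illustration.

```python
# Weighted-average column of Appendix 2 (secondary education, student level)
sizes = list(range(400, 1901, 50))
weighted = [
    -0.051, -0.041, -0.032, -0.022, -0.014, -0.006, 0.002, 0.009, 0.015,
    0.021, 0.027, 0.032, 0.037, 0.041, 0.044, 0.047, 0.050, 0.052, 0.053,
    0.054, 0.055, 0.054, 0.054, 0.053, 0.051, 0.049, 0.046, 0.043, 0.040,
    0.036, 0.031,
]
assert len(sizes) == len(weighted)

# Lowest and highest predicted scores, with the school size at which they occur
lo_size, lo = min(zip(sizes, weighted), key=lambda p: p[1])
hi_size, hi = max(zip(sizes, weighted), key=lambda p: p[1])
# School sizes whose prediction lies within an (arbitrary) tolerance of the top
near_top = [s for s, v in zip(sizes, weighted) if v >= hi - 0.0015]

print(lo_size, hi_size, round(hi - lo, 3), near_top)
# 400 1400 0.106 [1350, 1400, 1450, 1500]
```

This only reads off the published, rounded averages. It does not re-estimate the underlying curvilinear models.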

For the relation between school size and noncognitive outcomes in secondary education, a substantial share of the results also comes from studies conducted in other countries. Interestingly, American studies and studies from other countries show clearly opposite trends. Across all studies the trend slightly favors large schools. The difference between small secondary schools (300 students) and large ones (1,100 students) amounts to 0.06 standard deviation. For American studies, however, the trend is reversed: small schools show more favorable scores, although the difference between small and large American schools is very modest (0.04 standard deviation). In non-U.S. studies the effect of school size is somewhat stronger and runs in the opposite direction, with more positive scores in large schools.

Notes

- 1.

This is a realistic example. The total variance and percentage of variance at the school level are roughly the same for the standardized test taken in the final year of Dutch primary education (Cito eindtoets).

- 2.

In a meta-analysis based on effect sizes, the results would be weighted by the inverse of the sampling variance. This weighting method cannot be applied in the present case, because none of the publications reviewed reported the sampling variance of the predicted outcomes. Note that the sampling variance is computed as the observed variance in the sample divided by the number of respondents. In the present case we can only take the number of respondents into account. In effect, we assume that the variance does not differ substantially between samples.

- 3.

If the standard deviation in school size were 500 instead of 1,000, a difference of 0.10 would imply an effect size of 0.05.
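The weighting described in Note 2 can be sketched as follows. The function names are hypothetical. The inputs are the predicted values and student numbers reported for a school size of 300 in Appendix 6a. The unit variances passed to the inverse-variance version are an assumption that stands in for the unreported sampling variances. With equal variances, inverse-variance weights reduce to weights proportional to the number of respondents.

```python
def n_weighted_mean(values, ns):
    """Mean of predicted scores weighted by the number of respondents."""
    return sum(v * n for v, n in zip(values, ns)) / sum(ns)

def inverse_variance_mean(values, variances, ns):
    """Classical meta-analytic weighting: weight = 1 / sampling variance,
    where sampling variance = observed variance / n."""
    weights = [n / var for var, n in zip(variances, ns)]
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

# Appendix 6a, school size 300: the six American samples
values = [0.219, 0.093, 0.072, -0.057, 0.000, 0.041]
ns = [945, 9_163, 8_197, 13_597, 2_482, 8_104]

us_300 = n_weighted_mean(values, ns)
print(round(us_300, 4))  # 0.0284, as in the U.S. column of Appendix 6c

# With identical (here hypothetical, unit) variances both schemes coincide
same = inverse_variance_mean(values, [1.0] * len(ns), ns)
print(abs(us_300 - same) < 1e-12)  # True
```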

References

Included in the Quantitative Summary of Research Findings

Archibald, S. (2006). Narrowing in on educational resources that do affect student achievement. Peabody Journal of Education, 81(4), 23–42. doi:10.1207/s15327930pje8104_2

Attar-Schwartz, S. (2009). Peer sexual harassment victimization at school: The roles of student characteristics, cultural affiliation, and school factors. American Journal of Orthopsychiatry, 79(3), 407–420. doi:10.1037/a0016553

Bonnet, M., Gooss, F. A., Willemen, A. M., & Schuengel, C. (2009). Peer victimization in Dutch school classes of four- to five-year-olds: Contributing factors at the school level. The Elementary School Journal, 110(2), 163–177.

Bowen, G. L., Bowen, N. K., & Richman, J. M. (2000). School size and middle school students’ perceptions of the school environment. Social Work in Education, 22(2), 69–82.

Carolan, B. V. (2012). An examination of the relationship among high school size, social capital, and adolescents’ mathematics achievement. Journal of Research on Adolescence, 22(3), 583–595. doi:10.1111/j.1532-7795.2012.00779.x

Chen, G. (2008). Communities, students, schools and school crime—A confirmatory study of crime in US high schools. Urban Education, 43(3), 301–318. doi:10.1177/0042085907311791

Chen, P., & Vazsonyi, A. T. (2013). Future orientation, school contexts, and problem behaviors: A multilevel study. Journal of Youth and Adolescence, 42(1), 67–81. doi:10.1007/s10964-012-9785-4

Dee, T. S., Ha, W., & Jacob, B. A. (2007). The effects of school size on parental involvement and social capital: Evidence from the ELS:2002. Brookings Papers on Education Policy, pp. 77–97.

Durán-Narucki, V. (2008). School building condition, school attendance, and academic achievement in New York City public schools: A mediation model. Journal of Environmental Psychology, 28(3), 278–286. doi:10.1016/j.jenvp.2008.02.008

Fernandez, K. E. (2011). Evaluating school improvement plans and their affect on academic performance. Educational Policy, 25(2), 338–367. doi:10.1177/0895904809351693

Gottfredson, D. C., & DiPietro, S. M. (2011). School size, social capital, and student victimization. Sociology of Education, 84(1), 69–89. doi:10.1177/0038040710392718

Haller, E. J. (1992). High-school size and student indiscipline: Another aspect of the school consolidation issue. Educational Evaluation and Policy Analysis, 14(2), 145–156.

Holas, I., & Huston, A. C. (2012). Are middle schools harmful? The role of transition timing, classroom quality and school characteristics. Journal of Youth and Adolescence, 41(3), 333–345. doi:10.1007/s10964-011-9732-9

Khoury-Kassabri, M., Benbenishty, R., Astor, R. A., & Zeira, A. (2004). The contributions of community, family, and school variables to student victimization. American Journal of Community Psychology, 34(3–4), 187–204.

Kirkpatrick Johnson, M., Crosnoe, R., & Elder, G. H., Jr. (2001). Students’ attachment and academic engagement: The role of race and ethnicity. Sociology of Education, 74(4), 318–340.

Klein, J., & Cornell, D. (2010). Is the link between large high schools and student victimization an illusion? Journal of Educational Psychology, 102(4), 933–946. doi:10.1037/a0019896

Koth, C. W., Bradshaw, C. P., & Leaf, P. J. (2008). A multilevel study of predictors of student perceptions of school climate: The effect of classroom-level factors. Journal of Educational Psychology, 100(1), 96–104. doi:10.1037/0022-0663.100.1.96

Lee, V. E., & Loeb, S. (2000). School size in Chicago elementary schools: Effects on teachers’ attitudes and students’ achievement. American Educational Research Journal, 37(1), 3–31.

Lee, V. E., & Smith, J. B. (1997). High school size: Which works best and for whom? Educational Evaluation and Policy Analysis, 19(3), 205–227.

Luyten, H. (1994). School size effects on achievement in secondary education: Evidence from the Netherlands, Sweden, and the USA. School Effectiveness and School Improvement, 5(1), 75–99. doi:10.1080/0924345940050105

Ma, X., & McIntyre, L. J. (2005). Exploring differential effects of mathematics courses on mathematics achievement. Canadian Journal of Education/Revue Canadienne de l’éducation, 28(4), 827–852.

Maerten-Rivera, J., Myers, N., Lee, O., & Penfield, R. (2010). Student and school predictors of high-stakes assessment in science. Science Education, 94(6), 937–962. doi:10.1002/sce.20408

McNeely, C. A., Nonnemaker, J. M., & Blum, R. W. (2002). Promoting school connectedness: Evidence from the National Longitudinal Study of Adolescent Health. Journal of School Health, 72(4), 138–146.

Mooij, T., Smeets, E., & de Wit, W. (2011). Multi-level aspects of social cohesion of secondary schools and pupils’ feelings of safety. British Journal of Educational Psychology, 81(3), 369–390. doi:10.1348/000709910X526614

Payne, A. A. (2012). Communal school organization effects on school disorder: Interactions with school structure. Deviant Behavior, 33(7), 507–524. doi:10.1080/01639625.2011.636686

Ready, D. D., & Lee, V. E. (2007). Optimal context size in elementary schools: Disentangling the effects of class size and school size. Brookings Papers on Education Policy, pp. 99–135.

Rumberger, R. W., & Palardy, G. J. (2005). Test scores, dropout rates, and transfer rates as alternative indicators of high school performance. American Educational Research Journal, 42(1), 3–42.

Silins, H., & Mulford, B. (2004). Schools as learning organisations—Effects on teacher leadership and student outcomes. School Effectiveness and School Improvement, 15(3–4), 443–466. doi:10.1080/09243450512331383272

van der Vegt, A. L., den Blanken, M., & Hoogeveen, K. (2005). Nationale scholierenmonitor: meting voorjaar 2005 [National pupil monitor: Spring 2005 measurement]. Utrecht: Sardes.

Vieno, A., Perkins, D. D., Smith, T. M., & Santinello, M. (2005). Democratic school climate and sense of community in school: A multilevel analysis. American Journal of Community Psychology, 36(3–4), 327–341. doi:10.1007/s10464-005-8629-8

Wei, H. S., Williams, J. H., Chen, J. K., & Chang, H. Y. (2010). The effects of individual characteristics, teacher practice, and school organizational factors on students’ bullying: A multilevel analysis of public middle schools. Children and Youth Services Review, 32(1), 137–143. doi:10.1016/j.childyouth.2009.08.004

Wyse, A. E., Keesler, V., & Schneider, B. (2008). Assessing the effects of small school size on mathematics achievement: A propensity score-matching approach. Teachers College Record, 110(9), 1879–1900.


Appendices

Appendix 1: Predicted School Mean Achievement (Standardized) Per School Size in Primary Education

School size | Archibald (2006) | Fernandez (2011) | Lee and Loeb (2000) | Maerten-Rivera et al. (2010) | Weighted average
|---|---|---|---|---|---|
Number of schools | 55 | 252 | 264 | 198 | 769
100 | | | | |
150 | 0.339 | | | 0.295 |
200 | 0.296 | 0.000 | 0.073 | 0.272 | 0.116
250 | 0.254 | 0.000 | 0.054 | 0.249 | 0.101
300 | 0.211 | 0.000 | 0.037 | 0.226 | 0.086
350 | 0.168 | 0.000 | 0.022 | 0.204 | 0.072
400 | 0.126 | 0.000 | 0.010 | 0.181 | 0.059
450 | 0.083 | 0.000 | −0.001 | 0.158 | 0.046
500 | 0.041 | 0.000 | −0.009 | 0.135 | 0.035
550 | −0.002 | 0.000 | −0.015 | 0.113 | 0.024
600 | −0.044 | 0.000 | −0.019 | 0.090 | 0.014
650 | −0.087 | 0.000 | −0.021 | 0.067 | 0.004
700 | −0.130 | 0.000 | −0.021 | 0.045 | −0.005
750 | −0.172 | 0.000 | −0.018 | 0.022 | −0.013
800 | −0.215 | 0.000 | −0.014 | −0.001 | −0.020
850 | −0.257 | 0.000 | −0.007 | −0.024 | −0.027
900 | | 0.000 | 0.002 | −0.046 |
950 | | 0.000 | 0.013 | −0.069 |
1,000 | | 0.000 | 0.026 | −0.092 |
1,050 | | 0.000 | | −0.114 |
1,100 | | 0.000 | | −0.137 |
1,150 | | 0.000 | | −0.160 |
1,200 | | 0.000 | | −0.183 |
1,250 | | 0.000 | | −0.205 |
1,300 | | 0.000 | | −0.228 |
1,350 | | 0.000 | | −0.251 |
1,400 | | 0.000 | | −0.274 |
1,450 | | 0.000 | | −0.296 |
1,500 | | 0.000 | | −0.319 |
1,550 | | 0.000 | | −0.342 |
1,600 | | 0.000 | | −0.364 |
1,650 | | 0.000 | | −0.387 |
1,700 | | 0.000 | | −0.410 |
1,750 | | 0.000 | | −0.433 |
1,800 | | 0.000 | | −0.455 |
1,850 | | 0.000 | | −0.478 |
1,900 | | 0.000 | | −0.501 |

Appendix 2: Predicted Student Achievement (Standardized) Per School Size in Secondary Education

School size | Carolan (2012) | Lee and Smith (1997) | Luyten (1994) | Ma and McIntyre (2005) | Rumberger and Palardy (2005) | Wyse et al. (2008) | Weighted average
|---|---|---|---|---|---|---|---|
N (students) | 9,647 | 9,812 | 4,507 | 1,518 | 14,199 | 12,853 | 54,134

100 | −0.144 | 0.000 | |||||

150 | −0.115 | 0.000 | 0.000 | ||||

200 | −0.088 | 0.000 | 0.000 | −0.284 | |||

250 | −0.062 | 0.000 | 0.000 | −0.256 | |||

300 | −0.038 | 0.000 | 0.000 | −0.032 | −0.228 | ||

350 | −0.016 | 0.000 | 0.000 | −0.029 | −0.202 | ||

400 | −0.011 | 0.005 | 0.000 | 0.000 | −0.027 | −0.176 | −0.051 |

450 | −0.011 | 0.024 | 0.000 | 0.000 | −0.025 | −0.152 | −0.041 |

500 | −0.012 | 0.042 | 0.000 | 0.000 | −0.022 | −0.128 | −0.032 |

550 | −0.012 | 0.058 | 0.000 | 0.000 | −0.020 | −0.105 | −0.022 |

600 | −0.013 | 0.072 | 0.000 | 0.000 | −0.018 | −0.083 | −0.014 |

650 | −0.013 | 0.085 | 0.000 | 0.000 | −0.015 | −0.062 | −0.006 |

700 | −0.013 | 0.096 | 0.000 | 0.000 | −0.013 | −0.042 | 0.002 |

750 | −0.013 | 0.106 | 0.000 | 0.000 | −0.011 | −0.023 | 0.009 |

800 | −0.013 | 0.114 | 0.000 | 0.000 | −0.009 | −0.004 | 0.015 |

850 | −0.012 | 0.120 | 0.000 | 0.000 | −0.007 | 0.013 | 0.021 |

900 | −0.012 | 0.125 | 0.000 | 0.000 | −0.005 | 0.029 | 0.027 |

950 | −0.012 | 0.128 | 0.000 | 0.000 | −0.003 | 0.045 | 0.032 |

1,000 | −0.011 | 0.130 | 0.000 | 0.000 | −0.001 | 0.060 | 0.037 |

1,050 | −0.011 | 0.130 | 0.000 | 0.000 | 0.001 | 0.074 | 0.041 |

1,100 | −0.010 | 0.128 | 0.000 | 0.000 | 0.003 | 0.086 | 0.044 |

1,150 | −0.009 | 0.125 | 0.000 | 0.000 | 0.005 | 0.098 | 0.047 |

1,200 | −0.008 | 0.120 | 0.000 | 0.000 | 0.007 | 0.109 | 0.050 |

1,250 | −0.007 | 0.114 | 0.000 | 0.000 | 0.009 | 0.120 | 0.052 |

1,300 | −0.006 | 0.106 | 0.000 | 0.011 | 0.129 | 0.053 | |

1,350 | −0.004 | 0.096 | 0.013 | 0.137 | 0.054 | ||

1,400 | −0.003 | 0.085 | 0.014 | 0.145 | 0.055 | ||

1,450 | −0.002 | 0.072 | 0.016 | 0.151 | 0.054 | ||

1,500 | 0.000 | 0.058 | 0.018 | 0.157 | 0.054 | ||

1,550 | 0.002 | 0.042 | 0.019 | 0.162 | 0.053 | ||

1,600 | 0.003 | 0.024 | 0.021 | 0.165 | 0.051 | ||

1,650 | 0.005 | 0.005 | 0.023 | 0.168 | 0.049 | ||

1,700 | 0.007 | −0.016 | 0.024 | 0.170 | 0.046 | ||

1,750 | 0.009 | −0.038 | 0.026 | 0.171 | 0.043 | ||

1,800 | 0.012 | −0.063 | 0.027 | 0.171 | 0.040 | ||

1,850 | 0.014 | −0.088 | 0.029 | 0.171 | 0.036 | ||

1,900 | 0.016 | −0.115 | 0.030 | 0.169 | 0.031 | ||

1,950 | −0.144 | 0.032 | 0.167 | ||||

2,000 | −0.175 | 0.033 | 0.163 | ||||

2,050 | −0.207 | 0.034 | 0.159 | ||||

2,100 | −0.241 | 0.036 | 0.153 | ||||

2,150 | −0.276 | 0.147 | |||||

2,200 | −0.313 | 0.140 | |||||

2,250 | −0.351 | ||||||

2,300 | −0.391 | ||||||

2,350 | −0.433 | ||||||

2,400 | −0.476 |

Appendix 3: Predicted School Mean Achievement (Standardized) Per School Size in Secondary Education

School size | Carolan (2012) | Fernandez (2011) | Lee and Smith (1997) | Luyten (1994) | Ma and McIntyre (2005) | Rumberger and Palardy (2005) | Weighted average
|---|---|---|---|---|---|---|---|
N (schools) | 579 | 252 | 789 | 116 | 34 | 912 | 2,648

100 | −0.545 | 0.000 | |||||

150 | −0.434 | 0.000 | 0.000 | ||||

200 | −0.329 | 0.000 | 0.000 | ||||

250 | 0.000 | −0.230 | 0.000 | 0.000 | |||

300 | 0.000 | −0.137 | 0.000 | 0.000 | −0.061 | ||

350 | 0.000 | −0.051 | 0.000 | 0.000 | −0.057 | ||

400 | −0.027 | 0.000 | 0.029 | 0.000 | 0.000 | −0.052 | −0.015 |

450 | −0.028 | 0.000 | 0.103 | 0.000 | 0.000 | −0.047 | 0.008 |

500 | −0.029 | 0.000 | 0.171 | 0.000 | 0.000 | −0.042 | 0.030 |

550 | −0.030 | 0.000 | 0.233 | 0.000 | 0.000 | −0.038 | 0.049 |

600 | −0.031 | 0.000 | 0.288 | 0.000 | 0.000 | −0.033 | 0.067 |

650 | −0.031 | 0.000 | 0.337 | 0.000 | 0.000 | −0.029 | 0.083 |

700 | −0.032 | 0.000 | 0.380 | 0.000 | 0.000 | −0.025 | 0.096 |

750 | −0.032 | 0.000 | 0.417 | 0.000 | 0.000 | −0.020 | 0.109 |

800 | −0.031 | 0.000 | 0.448 | 0.000 | 0.000 | −0.016 | 0.120 |

850 | −0.031 | 0.000 | 0.473 | 0.000 | 0.000 | −0.012 | 0.128 |

900 | −0.030 | 0.000 | 0.491 | 0.000 | 0.000 | −0.008 | 0.135 |

950 | −0.029 | 0.000 | 0.503 | 0.000 | 0.000 | −0.004 | 0.140 |

1,000 | −0.028 | 0.000 | 0.509 | 0.000 | 0.000 | 0.000 | 0.144 |

1,050 | −0.026 | 0.000 | 0.509 | 0.000 | 0.000 | 0.004 | 0.145 |

1,100 | −0.024 | 0.000 | 0.502 | 0.000 | 0.000 | 0.008 | 0.145 |

1,150 | −0.022 | 0.000 | 0.490 | 0.000 | 0.000 | 0.012 | 0.143 |

1,200 | −0.020 | 0.000 | 0.471 | 0.000 | 0.000 | 0.015 | 0.139 |

1,250 | −0.017 | 0.000 | 0.446 | 0.000 | 0.000 | 0.019 | 0.134 |

1,300 | −0.014 | 0.000 | 0.415 | 0.000 | 0.022 | 0.127 | |

1,350 | −0.011 | 0.000 | 0.377 | 0.026 | 0.117 | ||

1,400 | −0.008 | 0.000 | 0.334 | 0.029 | 0.106 | ||

1,450 | −0.004 | 0.000 | 0.284 | 0.033 | 0.094 | ||

1,500 | 0.000 | 0.000 | 0.228 | 0.036 | 0.079 | ||

1,550 | 0.004 | 0.000 | 0.166 | 0.039 | 0.063 | ||

1,600 | 0.008 | 0.000 | 0.098 | 0.042 | 0.045 | ||

1,650 | 0.013 | 0.000 | 0.023 | 0.045 | 0.025 | ||

1,700 | 0.018 | 0.000 | −0.057 | 0.048 | 0.003 | ||

1,750 | 0.023 | 0.000 | −0.144 | 0.051 | −0.020 | ||

1,800 | 0.029 | 0.000 | −0.237 | 0.054 | −0.045 | ||

1,850 | 0.034 | 0.000 | −0.336 | 0.057 | −0.072 | ||

1,900 | 0.040 | 0.000 | −0.442 | 0.060 | −0.101 | ||

1,950 | 0.000 | −0.553 | 0.063 | ||||

2,000 | 0.000 | −0.671 | 0.065 | ||||

2,050 | 0.000 | −0.795 | 0.068 | ||||

2,100 | 0.000 | −0.925 | 0.070 | ||||

2,150 | 0.000 | −1.062 | |||||

2,200 | 0.000 | −1.204 | |||||

2,250 | 0.000 | −1.353 | |||||

2,300 | 0.000 | −1.508 | |||||

2,350 | 0.000 | ||||||

2,400 | 0.000 |

Appendix 4: Predicted Noncognitive Student Outcomes (Standardized) Per School Size in Primary Education

School size | Bonnet et al. (2009) | Holas and Huston (2012) | Koth et al. (2008) | Weighted average
|---|---|---|---|---|
N (students) | 2,003 | 855 | 2,468 | 5,326
100 | | 0.073 | |
150 | | 0.065 | |
200 | 0.088 | 0.057 | 0.106 | 0.093
250 | 0.124 | 0.048 | 0.088 | 0.078
300 | 0.131 | 0.040 | 0.069 | 0.062
350 | 0.108 | 0.032 | 0.051 | 0.047
400 | 0.056 | 0.023 | 0.033 | 0.031
450 | −0.025 | 0.015 | 0.014 | 0.014
500 | −0.136 | 0.007 | −0.004 | −0.002
550 | −0.275 | −0.002 | −0.023 | −0.019
600 | −0.444 | −0.010 | −0.041 | −0.036
650 | | −0.018 | −0.059 |
700 | | −0.027 | −0.078 |
750 | | −0.035 | −0.096 |
800 | | −0.043 | −0.115 |
850 | | −0.052 | −0.133 |
900 | | −0.060 | |
950 | | −0.068 | |
1,000 | | −0.077 | |
1,050 | | | |
1,100 | | | |
1,150 | | | |
1,200 | | | |
1,250 | | | |

Appendix 5: Predicted Noncognitive School Mean Outcomes (Standardized) Per School Size in Primary Education

School size | Bonnet et al. (2009) | Durán-Narucki (2008) | Koth et al. (2008) | Lee and Loeb (2000) | Weighted average
|---|---|---|---|---|---|
N (schools) | 23 | 95 | 37 | 264 | 419
100 | | −0.270 | | |
150 | | −0.248 | | |
200 | 0.280 | −0.226 | 0.475 | 0.540 | 0.346
250 | 0.396 | −0.204 | 0.392 | 0.464 | 0.302
300 | 0.418 | −0.182 | 0.310 | 0.392 | 0.256
350 | 0.346 | −0.160 | 0.228 | 0.325 | 0.208
400 | 0.180 | −0.138 | 0.146 | 0.263 | 0.157
450 | −0.079 | −0.116 | 0.063 | 0.205 | 0.104
500 | −0.433 | −0.094 | −0.019 | 0.151 | 0.049
550 | −0.879 | −0.071 | −0.101 | 0.102 | −0.009
600 | −1.420 | −0.049 | −0.184 | 0.058 | −0.069
650 | | −0.027 | −0.266 | 0.018 |
700 | | −0.005 | −0.348 | −0.017 |
750 | | 0.017 | −0.431 | −0.048 |
800 | | 0.039 | −0.513 | −0.074 |
850 | | 0.061 | −0.595 | −0.095 |
900 | | 0.083 | | −0.113 |
950 | | 0.105 | | −0.125 |
1,000 | | 0.127 | | −0.133 |
1,050 | | 0.149 | | |
1,100 | | 0.171 | | |
1,150 | | 0.194 | | |
1,200 | | 0.216 | | |
1,250 | | 0.238 | | |
1,300 | | 0.260 | | |
1,350 | | 0.282 | | |
1,400 | | 0.304 | | |

Appendix 6a: Predicted Noncognitive Student Outcomes Per School Size in Secondary Education; American Studies

School size | Bowen et al. (2000) | Chen and Vazsonyi (2013) | Dee et al. (2007) | Gottfredson and DiPietro (2011) | Kirkpatrick Johnson et al. (2001); middle schools | Kirkpatrick Johnson et al. (2001); high schools
|---|---|---|---|---|---|---|
N (students) | 945 | 9,163 | 8,197 | 13,597 | 2,482 | 8,104
100 | | | | −0.121 | | 0.051
150 | | | | −0.097 | 0.000 | 0.049
200 | | 0.122 | 0.082 | −0.081 | 0.000 | 0.046
250 | | 0.107 | 0.077 | −0.067 | 0.000 | 0.044
300 | 0.219 | 0.093 | 0.072 | −0.057 | 0.000 | 0.041
350 | 0.198 | 0.080 | 0.067 | −0.048 | 0.000 | 0.039
400 | 0.176 | 0.067 | 0.062 | −0.040 | 0.000 | 0.037
450 | 0.153 | 0.055 | 0.057 | −0.033 | 0.000 | 0.034
500 | 0.128 | 0.044 | 0.052 | −0.027 | 0.000 | 0.032
550 | 0.102 | 0.033 | 0.048 | −0.021 | 0.000 | 0.029
600 | 0.075 | 0.022 | 0.043 | −0.016 | 0.000 | 0.027
650 | 0.046 | 0.013 | 0.039 | −0.012 | 0.000 | 0.024
700 | 0.016 | 0.004 | 0.035 | −0.007 | 0.000 | 0.022
750 | −0.015 | −0.004 | 0.031 | −0.003 | 0.000 | 0.019
800 | −0.048 | −0.012 | 0.027 | 0.001 | 0.000 | 0.017
850 | −0.081 | −0.019 | 0.023 | 0.004 | | 0.015
900 | −0.117 | −0.025 | 0.019 | 0.007 | | 0.012
950 | −0.153 | −0.031 | 0.016 | 0.011 | | 0.010
1,000 | −0.191 | −0.036 | 0.013 | 0.014 | | 0.007
1,050 | −0.230 | −0.041 | 0.009 | 0.017 | | 0.005
1,100 | −0.271 | −0.044 | 0.006 | 0.019 | | 0.002
1,150 | −0.313 | −0.047 | 0.003 | 0.022 | | 0.000
1,200 | −0.356 | −0.050 | 0.001 | 0.024 | | −0.003
1,250 | | | −0.002 | 0.027 | | −0.005
1,300 | | | −0.005 | 0.029 | | −0.007
1,350 | | | −0.007 | 0.031 | | −0.010
1,400 | | | −0.009 | 0.033 | | −0.012
1,450 | | | −0.011 | 0.035 | | −0.015
1,500 | | | −0.013 | 0.037 | | −0.017
1,550 | | | −0.015 | 0.039 | | −0.020
1,600 | | | −0.017 | 0.041 | | −0.022
1,650 | | | −0.019 | 0.043 | | −0.025
1,700 | | | −0.020 | 0.045 | | −0.027
1,750 | | | −0.021 | 0.046 | | −0.029
1,800 | | | −0.022 | | | −0.032
1,850 | | | −0.024 | | | −0.034
1,900 | | | −0.024 | | | −0.037
1,950 | | | −0.025 | | | −0.039
2,000 | | | −0.026 | | | −0.042
2,050 | | | −0.026 | | | −0.044
2,100 | | | −0.027 | | | −0.047
2,150 | | | −0.027 | | | −0.049
2,200 | | | −0.027 | | | −0.051
2,250 | | | −0.027 | | | −0.054
2,300 | | | −0.027 | | | −0.056
2,350 | | | −0.026 | | | −0.059
2,400 | | | −0.026 | | | −0.061

Appendix 6b: Predicted Noncognitive Student Outcomes Per School Size in Secondary Education; Studies Outside the U.S.

School size | Attar-Schwartz (2009) | Khoury-Kassabri et al. (2004) | Mooij et al. (2011) | Van der Vegt et al. (2005) | Vieno et al. (2005) | Wei et al. (2010)
|---|---|---|---|---|---|---|
N (students) | 16,604 | 10,400 | 26,162 | 5,206 | 4,733 | 1,172
100 | −0.057 | 0.019 | −0.057 | 0.047 | −0.593 | −0.164
150 | −0.051 | 0.017 | −0.054 | 0.043 | −0.515 | −0.158
200 | −0.045 | 0.015 | −0.050 | 0.040 | −0.437 | −0.153
250 | −0.040 | 0.012 | −0.047 | 0.036 | −0.359 | −0.147
300 | −0.034 | 0.010 | −0.043 | 0.033 | −0.281 | −0.142
350 | −0.029 | 0.007 | −0.040 | 0.030 | −0.203 | −0.136
400 | −0.023 | 0.005 | −0.036 | 0.026 | −0.125 | −0.130
450 | −0.018 | 0.003 | −0.033 | 0.023 | −0.047 | −0.125
500 | −0.012 | 0.000 | −0.029 | 0.019 | 0.031 | −0.119
550 | −0.006 | −0.002 | −0.026 | 0.016 | 0.109 | −0.114
600 | −0.001 | −0.005 | −0.023 | 0.013 | 0.187 | −0.108
650 | 0.005 | −0.007 | −0.019 | 0.009 | 0.265 | −0.103
700 | 0.010 | −0.009 | −0.016 | 0.006 | 0.343 | −0.097
750 | 0.016 | −0.012 | −0.012 | 0.002 | 0.421 | −0.091
800 | 0.021 | −0.014 | −0.009 | −0.001 | 0.500 | −0.086
850 | 0.027 | −0.016 | −0.005 | −0.004 | 0.578 | −0.080
900 | 0.033 | −0.019 | −0.002 | −0.008 | 0.656 | −0.075
950 | 0.038 | −0.021 | 0.002 | −0.011 | 0.734 | −0.069
1,000 | 0.044 | −0.024 | 0.005 | −0.015 | 0.812 | −0.063
1,050 | 0.049 | −0.026 | 0.009 | −0.018 | 0.890 | −0.058
1,100 | 0.055 | −0.028 | 0.012 | −0.021 | 0.968 | −0.052
1,150 | 0.060 | | 0.016 | −0.025 | | −0.047
1,200 | 0.066 | | 0.019 | −0.028 | | −0.041
1,250 | 0.072 | | 0.022 | −0.032 | | −0.035
1,300 | | | 0.026 | −0.035 | | −0.030
1,350 | | | 0.029 | −0.038 | | −0.024
1,400 | | | 0.033 | −0.042 | | −0.019
1,450 | | | 0.036 | −0.045 | | −0.013
1,500 | | | 0.040 | −0.049 | | −0.008
1,550 | | | 0.043 | −0.052 | | −0.002
1,600 | | | 0.047 | −0.055 | | 0.004
1,650 | | | 0.050 | −0.059 | | 0.009
1,700 | | | 0.053 | −0.062 | | 0.015
1,750 | | | 0.057 | −0.066 | | 0.020
1,800 | | | 0.060 | −0.069 | | 0.026
1,850 | | | 0.064 | −0.072 | | 0.032
1,900 | | | 0.067 | −0.076 | | 0.037
1,950 | | | 0.071 | −0.079 | | 0.043
2,000 | | | 0.074 | −0.083 | | 0.048
2,050 | | | | −0.086 | | 0.054
2,100 | | | | −0.089 | | 0.060
2,150 | | | | −0.093 | | 0.065
2,200 | | | | −0.096 | | 0.071
2,250 | | | | | | 0.076
2,300 | | | | | | 0.082
2,350 | | | | | | 0.087
2,400 | | | | | | 0.093

Appendix 6c: Average Noncognitive Student Outcomes Per School Size in Secondary Education

School size | Weighted average (all) | Weighted average (U.S.) | Weighted average (non-U.S.) | Weighted average (non-U.S., excluding Vieno et al.)
|---|---|---|---|---|
N (students) | 106,765 | 42,488 | 64,277 | 59,544
300 | −0.0160 | 0.0284 | −0.0454 | −0.0294
350 | −0.0120 | 0.0266 | −0.0376 | −0.0257
400 | −0.0079 | 0.0246 | −0.0294 | −0.0217
450 | −0.0041 | 0.0222 | −0.0214 | −0.0179
500 | −0.0002 | 0.0198 | −0.0134 | −0.0140
550 | 0.0038 | 0.0173 | −0.0052 | −0.0099
600 | 0.0075 | 0.0149 | 0.0026 | −0.0062
650 | 0.0113 | 0.0120 | 0.0107 | −0.0022
700 | 0.0152 | 0.0099 | 0.0188 | 0.0016
750 | 0.0190 | 0.0073 | 0.0267 | 0.0055
800 | 0.0230 | 0.0051 | 0.0348 | 0.0093
850 | 0.0270 | 0.0027 | 0.0430 | 0.0135
900 | 0.0308 | 0.0002 | 0.0510 | 0.0173
950 | 0.0349 | −0.0016 | 0.0590 | 0.0212
1,000 | 0.0388 | −0.0038 | 0.0670 | 0.0250
1,050 | 0.0429 | −0.0057 | 0.0750 | 0.0288
1,100 | 0.0469 | −0.0079 | 0.0832 | 0.0329

Appendix 7a: Predicted Noncognitive School Mean Scores Per School Size in Secondary Education; American Studies

School size | Chen (2008) | Chen and Vazsonyi (2013) | Chen (2008) | Gottfredson and DiPietro (2011) | Haller (1992) | Kirkpatrick Johnson et al. (2001); middle schools | Kirkpatrick Johnson et al. (2001); high schools | Klein and Cornell (2010) | McNeely et al. (2002) | Payne (2012) | Rumberger and Palardy (2005)
|---|---|---|---|---|---|---|---|---|---|---|---|
N (schools) | 712 | 85 | 212 | 253 | 558 | 45 | 64 | 290 | 127 | 253 | 912
100 | | | 0.157 | −0.702 | 0.175 | | 0.295 | 0.016 | 0.662 | 0.233 |
150 | | | 0.148 | −0.565 | 0.164 | 0.000 | 0.281 | 0.015 | 0.518 | 0.188 |
200 | 0.740 | 0.655 | 0.139 | −0.467 | 0.154 | 0.000 | 0.267 | 0.015 | 0.415 | 0.152 | 0.332
250 | 0.665 | 0.577 | 0.129 | −0.391 | 0.144 | 0.000 | 0.253 | 0.014 | 0.336 | 0.124 | 0.302
300 | 0.593 | 0.501 | 0.120 | −0.329 | 0.134 | 0.000 | 0.239 | 0.013 | 0.271 | 0.100 | 0.274
350 | 0.524 | 0.430 | 0.111 | −0.277 | 0.124 | 0.000 | 0.225 | 0.012 | 0.216 | 0.079 | 0.246
400 | 0.458 | 0.362 | 0.102 | −0.232 | 0.114 | 0.000 | 0.211 | 0.012 | 0.168 | 0.060 | 0.219
450 | 0.394 | 0.297 | 0.093 | −0.192 | 0.104 | 0.000 | 0.197 | 0.011 | 0.127 | 0.043 | 0.193
500 | 0.334 | 0.235 | 0.084 | −0.156 | 0.094 | 0.000 | 0.183 | 0.010 | 0.089 | 0.028 | 0.168
550 | 0.276 | 0.177 | 0.075 | −0.124 | 0.084 | 0.000 | 0.168 | 0.010 | 0.055 | 0.015 | 0.144
600 | 0.221 | 0.123 | 0.066 | −0.094 | 0.073 | 0.000 | 0.154 | 0.009 | 0.024 | 0.002 | 0.121
650 | 0.169 | 0.072 | 0.056 | −0.067 | 0.063 | 0.000 | 0.140 | 0.008 | −0.004 | −0.009 | 0.098
700 | 0.120 | 0.024 | 0.047 | −0.042 | 0.053 | 0.000 | 0.126 | 0.007 | −0.031 | −0.020 | 0.077
750 | 0.074 | −0.020 | 0.038 | −0.018 | 0.043 | 0.000 | 0.112 | 0.007 | −0.055 | −0.030 | 0.057
800 | 0.030 | −0.061 | 0.029 | 0.003 | 0.033 | 0.000 | 0.098 | 0.006 | −0.078 | −0.040 | 0.037
850 | −0.011 | −0.099 | 0.020 | 0.024 | 0.023 | | 0.084 | 0.005 | −0.100 | −0.049 | 0.019
900 | −0.048 | −0.133 | 0.011 | 0.043 | 0.013 | | 0.070 | 0.004 | −0.120 | −0.057 | 0.001
950 | −0.083 | −0.163 | 0.002 | 0.062 | 0.003 | | 0.056 | 0.004 | −0.139 | −0.066 | −0.015
1,000 | −0.116 | −0.190 | −0.007 | 0.079 | −0.008 | | 0.041 | 0.003 | −0.158 | −0.073 | −0.031
1,050 | −0.145 | −0.214 | −0.016 | 0.096 | −0.018 | | 0.027 | 0.002 | −0.175 | −0.081 | −0.046
1,100 | −0.171 | −0.235 | −0.026 | 0.111 | −0.028 | | 0.013 | 0.002 | −0.192 | −0.088 | −0.059
1,150 | −0.195 | −0.251 | −0.035 | 0.127 | −0.038 | | −0.001 | 0.001 | −0.208 | −0.094 | −0.072
1,200 | −0.216 | −0.265 | −0.044 | 0.141 | −0.048 | | −0.015 | 0.000 | −0.223 | −0.101 | −0.084
1,250 | −0.234 | | −0.053 | 0.155 | −0.058 | | −0.029 | −0.001 | −0.237 | −0.107 | −0.095
1,300 | | | −0.062 | 0.168 | −0.068 | | −0.043 | −0.001 | −0.251 | −0.113 | −0.105
1,350 | | | −0.071 | 0.181 | −0.078 | | −0.057 | −0.002 | −0.265 | −0.118 | −0.115
1,400 | | | −0.080 | 0.193 | −0.089 | | −0.071 | −0.003 | −0.278 | −0.124 | −0.123
1,450 | | | −0.089 | 0.205 | −0.099 | | −0.086 | −0.003 | −0.290 | −0.129 | −0.130
1,500 | | | −0.099 | 0.217 | −0.109 | | −0.100 | −0.004 | −0.302 | −0.134 | −0.136
1,550 | | | −0.108 | 0.228 | −0.119 | | −0.114 | −0.005 | −0.314 | −0.139 | −0.142
1,600 | | | −0.117 | 0.239 | −0.129 | | −0.128 | −0.006 | −0.325 | −0.144 | −0.146
1,650 | | | −0.126 | 0.249 | −0.139 | | −0.142 | −0.006 | −0.336 | −0.149 | −0.150
1,700 | | | −0.135 | 0.259 | −0.149 | | −0.156 | −0.007 | −0.347 | −0.154 | −0.152
1,750 | | | −0.144 | 0.269 | −0.159 | | −0.170 | −0.008 | −0.357 | | −0.154
1,800 | | | −0.153 | | −0.169 | | −0.184 | −0.009 | −0.367 | | −0.155
1,850 | | | −0.162 | | −0.180 | | −0.198 | −0.009 | −0.377 | | −0.155
1,900 | | | −0.172 | | −0.190 | | −0.213 | −0.010 | −0.386 | | −0.153
1,950 | | | −0.181 | | −0.200 | | −0.227 | −0.011 | −0.396 | | −0.151
2,000 | | | | | −0.210 | | −0.241 | −0.011 | −0.405 | | −0.148
2,050 | | | | | −0.220 | | −0.255 | −0.012 | −0.413 | | −0.144
2,100 | | | | | −0.230 | | −0.269 | −0.013 | −0.422 | | −0.140
2,150 | | | | | −0.240 | | −0.283 | −0.014 | −0.430 | | −0.134
2,200 | | | | | −0.250 | | −0.297 | −0.014 | −0.438 | | −0.127
2,250 | | | | | −0.261 | | −0.311 | −0.015 | | |
2,300 | | | | | −0.271 | | −0.325 | −0.016 | | |
2,350 | | | | | −0.281 | | −0.340 | −0.017 | | |
2,400 | | | | | −0.291 | | −0.354 | −0.017 | | |

Appendix 7b: Predicted Noncognitive School Mean Scores Per School Size in Secondary Education; Studies Outside the U.S.

| | Attar-Schwarz (2009) | Khoury-Kassabri et al. (2004) | Mooij et al. (2011) | Silins and Mulford (2004) | Vieno et al. (2005) | Wei et al. (2010) |
|---|---|---|---|---|---|---|
| N (schools) | 327 | 162 | 104 | 96 | 134 | 12 |
| School size | | | | | | |
| 100 | −0.220 | 0.060 | −0.272 | 0.437 | −2.967 | −0.423 |
| 150 | −0.198 | 0.053 | −0.255 | 0.396 | −2.577 | −0.408 |
| 200 | −0.177 | 0.045 | −0.239 | 0.355 | −2.187 | −0.394 |
| 250 | −0.155 | 0.038 | −0.222 | 0.314 | −1.796 | −0.379 |
| 300 | −0.133 | 0.031 | −0.206 | 0.272 | −1.406 | −0.365 |
| 350 | −0.112 | 0.023 | −0.189 | 0.231 | −1.016 | −0.351 |
| 400 | −0.090 | 0.016 | −0.173 | 0.190 | −0.625 | −0.336 |
| 450 | −0.068 | 0.008 | −0.156 | 0.149 | −0.235 | −0.322 |
| 500 | −0.047 | 0.001 | −0.140 | 0.108 | 0.155 | −0.307 |
| 550 | −0.025 | −0.007 | −0.124 | 0.067 | 0.546 | −0.293 |
| 600 | −0.003 | −0.014 | −0.107 | 0.026 | 0.936 | −0.279 |
| 650 | 0.018 | −0.021 | −0.091 | −0.015 | 1.327 | −0.264 |
| 700 | 0.040 | −0.029 | −0.074 | −0.056 | 1.717 | −0.250 |
| 750 | 0.062 | −0.036 | −0.058 | −0.097 | 2.107 | −0.235 |
| 800 | 0.083 | −0.044 | −0.041 | −0.138 | 2.498 | −0.221 |
| 850 | 0.105 | −0.051 | −0.025 | −0.179 | 2.888 | −0.207 |
| 900 | 0.127 | −0.059 | −0.009 | −0.220 | 3.278 | −0.192 |
| 950 | 0.148 | −0.066 | 0.008 | −0.261 | 3.669 | −0.178 |
| 1,000 | 0.170 | −0.073 | 0.024 | −0.302 | 4.059 | −0.163 |
| 1,050 | 0.192 | −0.081 | 0.041 | −0.343 | 4.449 | −0.149 |
| 1,100 | 0.213 | −0.088 | 0.057 | −0.384 | 4.840 | −0.135 |
| 1,150 | 0.235 | | 0.074 | −0.425 | | −0.120 |
| 1,200 | 0.257 | | 0.090 | −0.466 | | −0.106 |
| 1,250 | 0.278 | | 0.107 | | | −0.091 |
| 1,300 | | | 0.123 | | | −0.077 |
| 1,350 | | | 0.139 | | | −0.063 |
| 1,400 | | | 0.156 | | | −0.048 |
| 1,450 | | | 0.172 | | | −0.034 |
| 1,500 | | | 0.189 | | | −0.019 |
| 1,550 | | | 0.205 | | | −0.005 |
| 1,600 | | | 0.222 | | | 0.009 |
| 1,650 | | | 0.238 | | | 0.024 |
| 1,700 | | | 0.254 | | | 0.038 |
| 1,750 | | | 0.271 | | | 0.053 |
| 1,800 | | | 0.287 | | | 0.067 |
| 1,850 | | | 0.304 | | | 0.081 |
| 1,900 | | | 0.320 | | | 0.096 |
| 1,950 | | | 0.337 | | | 0.110 |
| 2,000 | | | 0.353 | | | 0.125 |
| 2,050 | | | | | | 0.139 |
| 2,100 | | | | | | 0.153 |
| 2,150 | | | | | | 0.168 |
| 2,200 | | | | | | 0.182 |
| 2,250 | | | | | | 0.197 |
| 2,300 | | | | | | 0.211 |
| 2,350 | | | | | | 0.225 |
| 2,400 | | | | | | 0.240 |

Appendix 7c: Average Noncognitive Outcomes (School Mean Scores) Per School Size in Secondary Education

| | Weighted average (all) | Weighted average (U.S.) | Weighted average (non-U.S.) | Weighted average (non-U.S. excluding Vieno) |
|---|---|---|---|---|
| N (schools) | 4346 | 3511 | 835 | 701 |
| School size | | | | |
| 200 | 0.151 | 0.283 | −0.107 | −0.066 |
| 250 | 0.142 | 0.257 | −0.098 | −0.060 |
| 300 | 0.134 | 0.231 | −0.089 | −0.054 |
| 350 | 0.127 | 0.206 | −0.080 | −0.049 |
| 400 | 0.120 | 0.181 | −0.070 | −0.044 |
| 450 | 0.114 | 0.158 | −0.061 | −0.038 |
| 500 | 0.109 | 0.135 | −0.052 | −0.033 |
| 550 | 0.104 | 0.114 | −0.042 | −0.028 |
| 600 | 0.100 | 0.093 | −0.032 | −0.022 |
| 650 | 0.097 | 0.073 | −0.022 | −0.017 |
| 700 | 0.094 | 0.054 | −0.012 | −0.011 |
| 750 | 0.093 | 0.036 | −0.002 | −0.005 |
| 800 | 0.092 | 0.018 | 0.008 | 0.000 |
| 850 | 0.091 | 0.002 | 0.019 | 0.005 |
| 900 | 0.092 | −0.014 | 0.030 | 0.011 |
| 950 | 0.093 | −0.028 | 0.040 | 0.016 |
| 1,000 | 0.095 | −0.042 | 0.051 | 0.022 |
| 1,050 | 0.097 | −0.055 | 0.062 | 0.027 |
| 1,100 | 0.101 | −0.067 | 0.073 | 0.033 |
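Appendix 7c reports weighted averages of the per-study predictions. As a minimal sketch, assuming each study is weighted by its N (schools) from Appendix 7b, the Python below averages the non-U.S. predictions at four school sizes with Vieno et al. (2005) left out. The weighting rule is an assumption, not a procedure stated in this appendix, and the names `STUDIES`, `SCHOOL_COUNTS`, `PREDICTIONS` and `weighted_average` are illustrative. The sketch is not meant to reproduce the other three columns of Appendix 7c, which may be computed differently.

```python
"""Sketch: school-count-weighted average of per-study predictions.

Assumption (not stated in the appendix): each study is weighted by its
N (schools). Values are copied from Appendix 7b, Vieno et al. (2005) excluded.
"""

# Study order used in SCHOOL_COUNTS and in every row of PREDICTIONS.
STUDIES = [
    "Attar-Schwarz (2009)",
    "Khoury-Kassabri et al. (2004)",
    "Mooij et al. (2011)",
    "Silins and Mulford (2004)",
    "Wei et al. (2010)",
]

# N (schools) per study, in the same order as STUDIES.
SCHOOL_COUNTS = [327, 162, 104, 96, 12]

# Predicted noncognitive school mean scores at selected school sizes,
# one value per study, in the same order as STUDIES.
PREDICTIONS = {
    300: [-0.133, 0.031, -0.206, 0.272, -0.365],
    500: [-0.047, 0.001, -0.140, 0.108, -0.307],
    800: [0.083, -0.044, -0.041, -0.138, -0.221],
    1100: [0.213, -0.088, 0.057, -0.384, -0.135],
}


def weighted_average(values, weights):
    """Return sum(w * v) / sum(w) for paired values and weights."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    total_weight = sum(weights)
    weighted_sum = sum(w * v for w, v in zip(weights, values))
    return weighted_sum / total_weight


if __name__ == "__main__":
    # The weights sum to the N reported for this column of Appendix 7c.
    print("Total weight:", sum(SCHOOL_COUNTS))
    for size, values in PREDICTIONS.items():
        avg = weighted_average(values, SCHOOL_COUNTS)
        print(f"School size {size:>5,}: {avg:.3f}")
```

The weights sum to 701, the N given for the "non-U.S. excluding Vieno" column, and the four averages agree with that column (−0.054, −0.033, 0.000 and 0.033) to within rounding. That agreement is consistent with school-count weighting but does not prove it is the chapter's exact procedure.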


Copyright information

© 2014 The Author(s)

About this chapter

Cite this chapter

Luyten, H. (2014). Quantitative Summary of Research Findings. In: School Size Effects Revisited. SpringerBriefs in Education. Springer, Cham. https://doi.org/10.1007/978-3-319-06814-5_4


DOI: https://doi.org/10.1007/978-3-319-06814-5_4


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-06813-8

Online ISBN: 978-3-319-06814-5

eBook Packages: Humanities, Social Sciences and Law; Education (R0)