Abstract

In this paper, a three-dimensional imaging LIDAR system using two 1×8 Geiger-mode avalanche photodiode (GmAPD) arrays is presented. A passively Q-switched microchip laser is used as the light source, and a compact PCI (cPCI) system, which includes a time-to-digital converter (TDC), is set up for fast signal processing. By using two 1×8 GmAPD arrays to reduce false alarms at the time-of-flight (TOF) data acquisition stage, the proposed LIDAR system obtains clear 3D images with a fast acquisition speed. Software for three-dimensional visualization is developed for the 1×8 GmAPD array system. The range resolution of the system is measured at 100 m, and 3D images acquired by one GmAPD array and by two GmAPD arrays during the daytime are presented.

1 Introduction

Light detection and ranging (LIDAR) has recently become an important method for both distance measurement and the acquisition of 3D images. Many research groups have used a Geiger-mode avalanche photodiode (GmAPD) as the detector in 3D imaging LIDAR systems because of its extremely high detection sensitivity and its simple readout integrated circuit [1–6]. However, a GmAPD has some disadvantages when used in a 3D imaging LIDAR system. First, dark counts arising from thermal noise in the depletion region generate false alarms during signal processing. Second, the output of a GmAPD is independent of the optical intensity, so the detector cannot distinguish between a signal and noise. Therefore, a noise removal process is essential for obtaining clear 3D images with a LIDAR system that uses a GmAPD.

Heriot-Watt University is one of the main research centers using a GmAPD as a detector. There, time-correlated single-photon counting (TCSPC) is used to measure the distance to the object. The method estimates the object distance by thresholding the data at a fixed fraction of the peak height in a TCSPC histogram, but building that histogram is time-consuming because the measurement must be repeated many times (typically 10^4 to 10^6) [5]. MIT Lincoln Laboratory has also developed a 3D imaging LIDAR system with a GmAPD. Their system is compact and lightweight and shows good performance. However, it produces a large amount of noise, and removing that noise to obtain clear 3D images takes considerable time because it is done with a series of image processing algorithms [7].

Noise removal is therefore indispensable for clear 3D images. We developed a low-noise 3D imaging LIDAR system with two 1×8 GmAPD arrays that obtains clear 3D images at a fast acquisition speed. It does so by reducing false alarms at the stage where the raw time-of-flight (TOF) data are acquired, using only a few measurements.

In Sect. 2, both the hardware and the software of the low-noise 3D imaging LIDAR system are described in detail. The range resolution of the system and 3D images acquired by one GmAPD array and by two GmAPD arrays during the daytime are shown in Sect. 3. Section 4 provides some summary remarks.

2 Low-Noise Three-Dimensional Imaging LIDAR System

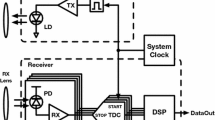

The LIDAR system is divided into two parts: its hardware and its software. Figure 4.1 shows a schematic diagram of the LIDAR system. A laser pulse is emitted from the light source and passes through the optical system. A small portion of the laser pulse is used to generate the start signal, and the rest of the pulse illuminates the target. Part of the scattered laser pulse, together with background light in the field of view (FOV), is collected by the receiving optical system. The laser return pulse and the background light are then split equally in intensity by a beam splitter and routed to the two GmAPD arrays. An AND gate compares the arrival times at the two GmAPD arrays, and a stop signal is generated only if the time difference between the two signals is less than a fixed value. Although the signal at each array is weaker because the energy of the laser return pulse is halved, the number of false alarms decreases drastically because noise, which is distributed randomly in the time domain, is filtered out [8]. The TOF data, that is, the time difference between the start signal and the stop signal, are generated at the TDC during the signal processing step. The TOF data are then converted to distances so that a 3D image can be visualized with the point cloud method in the image processing step.
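The coincidence logic and the TOF-to-distance conversion described above can be illustrated with a minimal sketch. The function names, the 3 ns default window, and the choice to report the mean of the two arrival times as the stop time are assumptions made for illustration only; the actual system performs the comparison in hardware and the paper does not state which arrival time is forwarded.

```python
from typing import Optional

C = 299_792_458.0  # speed of light in vacuum, m/s


def coincidence_stop(tof1_ns: Optional[float],
                     tof2_ns: Optional[float],
                     window_ns: float = 3.0) -> Optional[float]:
    """Return a stop time only if both arrays fired within the window.

    None means "no detection" for an array. Reporting the mean of the
    two arrival times is an assumption of this sketch.
    """
    if tof1_ns is None or tof2_ns is None:
        return None
    if abs(tof1_ns - tof2_ns) < window_ns:
        return 0.5 * (tof1_ns + tof2_ns)
    return None  # uncorrelated events are rejected as noise


def tof_to_range_m(tof_ns: float) -> float:
    """Convert a round-trip time of flight in ns to a one-way range in m."""
    return 0.5 * C * tof_ns * 1e-9


# Two detections 1 ns apart pass the gate; a lone noise count does not.
accepted = coincidence_stop(667.0, 668.0)
rejected = coincidence_stop(667.0, 700.0)
```

A target at 100 m corresponds to a round-trip time of about 667 ns, so `tof_to_range_m(accepted)` is close to 100 m in this example.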

2.1 Hardware

The optical system is shown in Fig. 4.2. A diode-pumped passively Q-switched microchip laser with second-harmonic generation (Alphalas PULSELAS-P-1064-300-FC/SHG) is used as the light source. Because the laser is passively Q-switched, it cannot provide the start signal itself. Therefore, a small portion of the laser pulse is transmitted through a 45° reflection mirror to a photodiode (PD). The PD used for generating the start signal is a Thorlabs high-speed Si detector. The rest of the laser pulse is reflected by the mirror and collimated by lenses L1 and L2. Because the laser has a single polarization, a half-wave plate (HWP) is placed before the polarization beam splitter (PBS) to control the ratio of transmission to reflection of the laser pulses at PBS1. The transmitted laser pulse passes through a beam expander. The FOV of the system, which is determined by the focal lengths of lenses L3 and L4 and by the beam expander, is set to be identical to the laser beam divergence, assuming Gaussian propagation. After the beam expander, the laser pulse is steered to the target by a two-axis galvano scanner and is then scattered. The two-axis galvano scanner, which controls the beam pointing for the 1×8 GmAPD array, is from Cambridge Technology and has a 50 mm aperture mirror. The laser pulse scattered from the target and the background light in the FOV of the system are collected onto the GmAPD arrays (ID Quantique id150-1×8) through the two-axis galvano scanner, a quarter-wave plate (QWP), PBS1, an optical band-pass filter, HWP2, PBS2, and the focusing lenses, in that order. Because the microchip laser has a narrow spectral linewidth and is temperature-stable, an optical band-pass filter centered at a wavelength of 532 nm, with a bandwidth (FWHM) of 10 nm and a maximum transmission of 65.91 %, is used for spectral filtering.

A TDC (Agilent U1051A) with six channels and a timing resolution of 50 ps receives both the start and stop signals and measures the time difference between them. The function of the AND gate is to compare the TOFs measured by GmAPD array 1 and GmAPD array 2. Before the AND operation is applied to the TOFs, calibration is necessary because each pixel in GmAPD arrays 1 and 2 has a different time delay. A time bin is defined as the time interval obtained by dividing the total measurement time by a specific value. The time bin is set to 3 ns, a value determined from the overall timing jitter of the system.
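One way to picture the calibration and time-bin comparison is the sketch below. It subtracts a per-pixel delay from each raw TOF and then treats two events as coincident when they fall into the same 3 ns bin. The per-pixel delay values, the helper names, and the same-bin rule are assumptions for illustration; the paper does not give the calibration table or the exact comparison rule, and a same-bin test will miss events that straddle a bin boundary.

```python
import math
from typing import List, Sequence

BIN_NS = 3.0  # time-bin width stated in the text


def calibrate(raw_tof_ns: Sequence[float],
              pixel_delay_ns: Sequence[float]) -> List[float]:
    """Remove each pixel's fixed delay (hypothetical calibration table)."""
    return [t - d for t, d in zip(raw_tof_ns, pixel_delay_ns)]


def bin_index(tof_ns: float, bin_ns: float = BIN_NS) -> int:
    """Index of the time bin that contains the given TOF."""
    return math.floor(tof_ns / bin_ns)


def and_gate(tofs1_ns: Sequence[float], tofs2_ns: Sequence[float],
             delays1_ns: Sequence[float],
             delays2_ns: Sequence[float]) -> List[bool]:
    """Per-pixel AND: True where both calibrated TOFs share a time bin."""
    cal1 = calibrate(tofs1_ns, delays1_ns)
    cal2 = calibrate(tofs2_ns, delays2_ns)
    return [bin_index(a) == bin_index(b) for a, b in zip(cal1, cal2)]


# Pixel 0 agrees after calibration; pixel 1 holds an unrelated noise count.
mask = and_gate([401.2, 410.0], [402.9, 401.0], [1.0, 0.0], [2.0, 0.0])
```

Without the calibration step, pixel 0 in this example would land in two different bins (401.2 ns and 402.9 ns), which is why the delay correction has to precede the comparison.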

A charge-coupled device (CCD) camera (Pixelink PL-B953U) with a fixed focal length lens is mounted on the optical axis of the system as a boresight camera to photograph the targets. The FOV of the CCD camera approximately matches the FOV of the system.

2.2 Software

The algorithm executes a series of functional steps to convert the TOF data obtained by the system into a 3D image. After receiving the laser pulses, the system provides a two-dimensional depth image that contains angle-angle-depth data for software processing and 3D image visualization. First, the TOF data are converted into Cartesian locations. The XYZ points in Cartesian coordinates are calculated from the scanning angle and the range information of each pixel. Using the XYZ points, the 3D image is visualized with a point cloud scheme, in which each XYZ point in the received data is simply plotted in a 3D Cartesian coordinate system. Various algorithms exist for removing noise at this stage, but they are unnecessary in the proposed system because the noise has already been removed during the acquisition of the raw TOF data.
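The conversion from angle-angle-depth data to XYZ points can be sketched as follows. The axis convention (z along the optical axis, x horizontal, y vertical), the use of azimuth and elevation angles in degrees, and the function names are assumptions of this sketch; the paper does not specify the coordinate convention used by its software.

```python
import math
from typing import Iterable, List, Optional, Tuple

C = 299_792_458.0  # speed of light in vacuum, m/s


def to_xyz(azimuth_deg: float, elevation_deg: float,
           tof_ns: float) -> Tuple[float, float, float]:
    """Map one (angle, angle, TOF) sample to a Cartesian point in metres."""
    r = 0.5 * C * tof_ns * 1e-9          # one-way range
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = r * math.cos(el) * math.sin(az)  # horizontal offset
    y = r * math.sin(el)                 # vertical offset
    z = r * math.cos(el) * math.cos(az)  # distance along the optical axis
    return (x, y, z)


def point_cloud(samples: Iterable[Tuple[float, float, Optional[float]]]
                ) -> List[Tuple[float, float, float]]:
    """Build a point cloud, skipping samples with no accepted stop signal."""
    return [to_xyz(az, el, tof) for az, el, tof in samples if tof is not None]


cloud = point_cloud([(0.0, 0.0, 667.128), (2.0, -1.0, 400.0), (1.0, 1.0, None)])
```

The resulting list of XYZ tuples can be passed to any 3D scatter plotting tool for point cloud visualization.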

3 Range Resolution and 3D Images

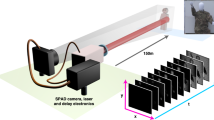

The range resolution of a LIDAR system is its ability to distinguish between targets that are very close in either range or bearing. To obtain the range resolution of the system at 100 m, the standard deviations of the TOFs of the proposed system were measured. As shown in Fig. 4.3, the measurement target was mounted on a motorized translation stage and placed at positions from 99.8 m to 100.2 m in 10 cm steps. The standard deviations were obtained from 10,000 laser pulses at each position.

Figure 4.4 shows the range resolution of one pixel of GmAPD array 1. As Fig. 4.4 shows, target positions of 100 m and 100.1 m can be distinguished. For this pixel, the 2σ value was 7.4 cm. The average range resolution (2σ) over all pixels of the two GmAPD arrays was 7.6 cm at 100 m. The error is defined as the difference between the true value and the measured value. The average error over all pixels of the two GmAPD arrays was 5.8 mm, which is less than the range equivalent of the TDC timing resolution (7.5 mm).
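The quantities reported here can be reproduced from raw TOF samples with a short calculation, sketched below under stated assumptions. The sample standard deviation is used for σ, the function names are illustrative, and the three TOF values are synthetic numbers chosen only to exercise the code, not measured data. The last line checks the arithmetic behind the 7.5 mm figure: a 50 ps timing step corresponds to c × 50 ps / 2 ≈ 7.5 mm of one-way range.

```python
import statistics
from typing import Sequence

C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_to_range_m(tof_ns: float) -> float:
    """Convert a round-trip time in ns to a one-way distance in m."""
    return 0.5 * C * tof_ns * 1e-9


def two_sigma_range_m(tofs_ns: Sequence[float]) -> float:
    """2-sigma spread of repeated TOF measurements, expressed in metres."""
    return 2.0 * tof_to_range_m(statistics.stdev(tofs_ns))


def mean_error_m(tofs_ns: Sequence[float], true_range_m: float) -> float:
    """Absolute difference between the mean measured range and the truth."""
    return abs(tof_to_range_m(statistics.mean(tofs_ns)) - true_range_m)


# Synthetic samples (not measured data) with a 0.2 ns standard deviation.
demo_2sigma = two_sigma_range_m([667.0, 667.2, 667.4])

# Range equivalent of the 50 ps TDC timing resolution, about 7.5 mm.
tdc_step_m = tof_to_range_m(0.050)
```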

Figure 4.5a shows a two-dimensional image of the scene, which was approximately 60 m away. The average noise rates, defined as the sum of the rate of background photons impinging on the detector and the dark count rate of the GmAPD array, were 16 kHz and 20 kHz for GmAPD arrays 1 and 2, respectively. The three-dimensional images were acquired by scanning 1164 points × 1170 points over a field of regard (FOR) of 4°×4°. Figures 4.5b and 4.5c show the 3D images acquired by one GmAPD array and by two GmAPD arrays, respectively. The size of the 3D image file acquired by one GmAPD array was 162 MB, whereas the file acquired by two GmAPD arrays was 3.6 MB, a reduction by a factor of 45.
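A rough estimate shows why requiring a coincidence suppresses noise so strongly at these rates. The sketch below assumes that noise counts in each array follow independent Poisson statistics and ignores detector dead time and any signal photons; these are simplifying assumptions of this illustration, not a model taken from the paper. It computes the probability of at least one noise count in a single 3 ns time bin for each array, and the probability that both arrays register noise in the same bin.

```python
import math

BIN_S = 3e-9          # time-bin width from the text, in seconds
RATE1_HZ = 16e3       # average noise rate of GmAPD array 1
RATE2_HZ = 20e3       # average noise rate of GmAPD array 2


def p_noise_in_bin(rate_hz: float, bin_s: float = BIN_S) -> float:
    """Probability of at least one Poisson noise count within one bin."""
    return -math.expm1(-rate_hz * bin_s)   # equals 1 - exp(-rate * bin)


p1 = p_noise_in_bin(RATE1_HZ)              # about 4.8e-5
p2 = p_noise_in_bin(RATE2_HZ)              # about 6.0e-5

# Independent noise in both arrays landing in the same bin.
p_both = p1 * p2                           # about 2.9e-9
```

Under these assumptions, an accidental coincidence in a given bin is roughly four orders of magnitude less likely than a noise count in a single array, which is consistent in direction with the much smaller file obtained with two arrays.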

4 Summary

This paper describes a low-noise three-dimensional imaging LIDAR system using two 1×8 GmAPD arrays. A passively Q-switched microchip laser is used as the light source, and a cPCI system, which includes a TDC, is set up for fast signal processing. The proposed LIDAR system obtains clear 3D images with a rapid acquisition speed by using two 1×8 GmAPD arrays to reduce false alarms at the TOF data acquisition stage. Software for three-dimensional visualization is developed for the 1×8 GmAPD array system. The range resolution of the system was measured at 100 m, and 3D images acquired by one GmAPD array and by two GmAPD arrays during the daytime are presented.

References

Albota MA, Aull BF, Fouche DG, Heinrichs RM, Kocher DG, Marino RM, Mooney JG, Newbury NR, O’Brien ME, Player BE, Willard BC, Zayhowski JJ (2002) Three-dimensional laser radar with Geiger-mode avalanche photodiode arrays. Linc Lab J 13:2

Aull BF, Loomis AH, Young DJ, Heinrichs RM, Felton BH, Daniels PJ, Landers DJ (2002) Geiger-mode avalanche photodiodes for three-dimensional imaging. Linc Lab J 13:2

Marino RM, Davis WR (2005) Jigsaw: a foliage-penetrating 3D imaging laser radar system. Linc Lab J 15:23

Massa JS, Wallace AM, Buller GS, Fancey SJ, Walker AC (1997) Laser depth measurement based on time-correlated single-photon counting. Opt Lett 22:543

Massa J, Buller G, Walker A, Smith G, Cova S, Umasuthan M, Wallace A (2002) Optical design and evaluation of a three-dimensional imaging and ranging system based on time-correlated single-photon counting. Appl Opt 41(6):1063

McCarthy A, Collins RJ, Krichel NJ, Fernandes V, Wallace AM, Buller GS (2009) Long-range time of flight scanning sensor based on high speed time-correlated photon counting. Appl Opt 48(32):6241

Cho P, Anderson H, Hatch R, Ramaswami P (2006) Real-time 3D ladar imaging. Linc Lab J 16:147

Kong HJ, Kim TH, Jo SE, Oh MS (2011) Smart three-dimensional imaging ladar using two Geiger-mode avalanche photodiodes. Opt Express 19(20):19323

Acknowledgements

This work was supported by the “Dual Use Technology Program” at the Agency for Defense Development (ADD) of the Republic of Korea (UM12012RD1).

Copyright information

© 2014 Springer International Publishing Switzerland

About this paper

Cite this paper

Jo, S.E., Kim, T.H., Kong, H.J. (2014). Development of a Low-Noise Three-Dimensional Imaging LIDAR System Using Two 1×8 Geiger-Mode Avalanche Photodiode Arrays. In: Tutsch, R., Cho, YJ., Wang, WC., Cho, H. (eds) Progress in Optomechatronic Technologies. Lecture Notes in Electrical Engineering, vol 306. Springer, Cham. https://doi.org/10.1007/978-3-319-05711-8_4

DOI: https://doi.org/10.1007/978-3-319-05711-8_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-05710-1

Online ISBN: 978-3-319-05711-8

eBook Packages: Engineering, Engineering (R0)