Abstract

Support vector machines (SVMs) are among the most popular classification techniques adopted in security applications like malware detection, intrusion detection, and spam filtering. However, if SVMs are to be incorporated in real-world security systems, they must be able to cope with attack patterns that can either mislead the learning algorithm (poisoning), evade detection (evasion) or gain information about their internal parameters (privacy breaches). The main contributions of this chapter are twofold. First, we introduce a formal general framework for the empirical evaluation of the security of machine-learning systems. Second, according to our framework, we demonstrate the feasibility of evasion, poisoning and privacy attacks against SVMs in real-world security problems. For each attack technique, we evaluate its impact and discuss whether (and how) it can be countered through an adversary-aware design of SVMs. Our experiments are easily reproducible thanks to open-source code that we have made available, together with all the employed datasets, on a public repository.


4.1 Introduction

Machine-learning and pattern-recognition techniques are increasingly being adopted in security applications like spam filtering, network intrusion detection, and malware detection due to their ability to generalize and to potentially detect novel attacks or variants of known ones. Support vector machines (SVMs) are among the most successful techniques that have been applied for this purpose [28, 55].

However, learning algorithms like SVMs assume stationarity: that is, both the data used to train the classifier and the operational data it classifies are sampled from the same (though possibly unknown) distribution. Meanwhile, in adversarial settings such as the above-mentioned ones, intelligent and adaptive adversaries may purposely manipulate data (violating stationarity) to exploit existing vulnerabilities of learning algorithms and to impair the entire system. This raises several open issues, related to whether machine-learning techniques can be safely adopted in security-sensitive tasks, or if they must (and can) be redesigned for this purpose. In particular, the main open issues to be addressed include:

1. analyzing the vulnerabilities of learning algorithms;
2. evaluating their security by implementing the corresponding attacks; and
3. eventually, designing suitable countermeasures.

These issues are currently addressed in the emerging research area of adversarial machine learning, at the intersection between computer security and machine learning. This field is receiving growing interest from the research community, as witnessed by an increasing number of recent events: the NIPS Workshop on “Machine Learning in Adversarial Environments for Computer Security” [43]; the subsequent Special Issue of the Machine Learning journal titled “Machine Learning in Adversarial Environments” [44]; the 2010 UCLA IPAM workshop on “Statistical and Learning-Theoretic Challenges in Data Privacy”; the ECML-PKDD Workshop on “Privacy and Security issues in Data Mining and Machine Learning” [27]; five consecutive CCS Workshops on “Artificial Intelligence and Security” [2, 3, 19, 22, 34], and the Dagstuhl Perspectives Workshop on “Machine Learning for Computer Security” [37].

In Sect. 4.2, we review the literature of adversarial machine learning, focusing mainly on the issue of security evaluation. We discuss both theoretical work and applications, including examples of how learning can be attacked in practical scenarios, either during its training phase (i.e., poisoning attacks that contaminate the learner’s training data to mislead it) or during its deployment phase (i.e., evasion attacks that circumvent the learned classifier).

In Sect. 4.3, we summarize our recently defined framework for the empirical evaluation of classifiers’ security [12]. It is based on a general model of an adversary that builds on previous models and guidelines proposed in the literature of adversarial machine learning. We expound on the assumptions of the adversary’s goal, knowledge, and capabilities that comprise this model, which also easily accommodate application-specific constraints. Having detailed the assumptions of his/her adversary, a security analyst can formalize the adversary’s strategy as an optimization problem.

We then demonstrate our framework by applying it to assess the security of SVMs. We discuss our recently devised evasion attacks against SVMs [8] in Sect. 4.4, and review and extend our recent work [14] on poisoning attacks against SVMs in Sect. 4.5. We show that the optimization problems corresponding to the above attack strategies can be solved through simple gradient-descent algorithms. The experimental results for these evasion and poisoning attacks show that the SVM is vulnerable to these threats for both linear and nonlinear kernels in several realistic application domains including handwritten digit classification and malware detection for PDF files. We further explore the threat of privacy-breaching attacks aimed at the SVM’s training data in Sect. 4.6 where we apply our framework to precisely describe the setting and threat model.

Our analysis provides useful insights into the potential security threats from the usage of learning algorithms (and, particularly, of SVMs) in real-world applications and sheds light on whether they can be safely adopted for security-sensitive tasks. The presented analysis allows a system designer to quantify the security risk entailed by an SVM-based detector so that he/she may weigh it against the benefits provided by the learning. It further suggests guidelines and countermeasures that may mitigate threats and thereby improve overall system security. These aspects are discussed for evasion and poisoning attacks in Sects. 4.4 and 4.5. In Sect. 4.6 we focus on developing countermeasures for privacy attacks that are endowed with strong theoretical guarantees within the framework of differential privacy. We conclude with a summary and discussion in Sect. 4.7.

In order to support the reproducibility of our experiments, we published all the code and the data employed for the experimental evaluations described in this chapter [24]. In particular, our code is released under an open-source license and carefully documented, with the aim of allowing other researchers not only to reproduce but also to customize, extend, and improve our work.

4.2 Background

In this section, we review the main concepts used throughout this chapter. We first introduce our notation and summarize the SVM learning problem. We then motivate the need for the proper assessment of the security of a learning algorithm so that it can be applied to security-sensitive tasks.

Learning can be generally stated as a process by which data is used to form a hypothesis that performs better than an a priori hypothesis formed without the data. For our purposes, the hypotheses will be represented as functions of the form \(f: \mathcal{X} \rightarrow \mathcal{Y}\), which assign an input sample point \(\mathbf{x} \in \mathcal{X}\) to a class \(y \in \mathcal{Y}\); that is, given an observation from the input space \(\mathcal{X}\), a hypothesis \(f\) makes a prediction in the output space \(\mathcal{Y}\). For binary classification, the output space is binary and we use \(\mathcal{Y} =\{ -1,+1\}\). In the classical supervised learning setting, we are given a paired training dataset \(\{(\mathbf{x}_{i},y_{i})\;\vert \;\mathbf{x}_{i} \in \mathcal{X},y_{i} \in \mathcal{Y}\}_{i=1}^{n}\), we assume each pair is drawn independently from an unknown joint distribution \(P(X,Y)\), and we want to infer a classifier \(f\) able to generalize well on \(P(X,Y)\); i.e., to accurately predict the label \(y\) of an unseen sample \(\mathbf{x}\) drawn from that distribution.

4.2.1 Support Vector Machines

In its simplest formulation, an SVM learns a linear classifier for a binary classification problem. Its decision function is thus \(f(\mathbf{x}) = \mbox{sign}({\mathbf{w}}^{\top }\mathbf{x} + b)\), where \(\mbox{sign}(a) = +1\) if \(a \geq 0\) and \(\mbox{sign}(a) = -1\) if \(a < 0\), and \(\mathbf{w}\) and \(b\) are learned parameters that specify the position of the decision hyperplane in feature space: the hyperplane’s normal \(\mathbf{w}\) gives its orientation and \(b\) is its displacement. The learning task is thus to find a hyperplane that separates the two classes well. While many hyperplanes may suffice for this task, the SVM hyperplane both separates the training samples of the two classes and provides a maximum distance from itself to the nearest training point (this distance is called the classifier’s margin), since maximum-margin learning generally reduces generalization error [66]. Although originally designed for linearly separable classification tasks (hard-margin SVMs), SVMs were extended to nonlinearly separable classification problems by Vapnik [25] (soft-margin SVMs), which allow some samples to violate the margin. In particular, a soft-margin SVM is learned by solving the following convex quadratic program (QP):

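\[
\begin{aligned}
\min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\quad & \frac{1}{2}{\mathbf{w}}^{\top}\mathbf{w} + C\sum_{i=1}^{n}\xi_{i} \\
\text{s.t.}\quad & y_{i}({\mathbf{w}}^{\top}\mathbf{x}_{i} + b) \geq 1 - \xi_{i},\quad \xi_{i} \geq 0,\quad i = 1,\ldots,n,
\end{aligned}
\]

where the margin is maximized by minimizing \(\frac{1} {2}{\mathbf{w}}^{\top }\mathbf{w}\), and the variables \(\xi_{i}\) (referred to as slack variables) represent the extent to which the samples, \(\mathbf{x}_{i}\), violate the margin. The parameter \(C\) tunes the trade-off between minimizing the sum of the slack violation errors and maximizing the margin.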
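As a small worked example of this trade-off, the sketch below evaluates the standard soft-margin objective, \(\frac{1}{2}{\mathbf{w}}^{\top}\mathbf{w} + C\sum_{i}\xi_{i}\), for a fixed hyperplane. It assumes that, for a given \((\mathbf{w}, b)\), each slack takes its smallest feasible value, \(\xi_{i} = \max(0, 1 - y_{i}({\mathbf{w}}^{\top}\mathbf{x}_{i} + b))\). The toy data and the function names are illustrative and are not taken from the chapter's code.

```python
from typing import Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def slack(w: Sequence[float], b: float, x: Sequence[float], label: int) -> float:
    """Smallest feasible slack for one sample: how far it violates the margin."""
    return max(0.0, 1.0 - label * (dot(w, x) + b))


def primal_objective(w, b, samples, labels, C: float) -> float:
    """Soft-margin objective: margin term plus C times the total slack."""
    margin_term = 0.5 * dot(w, w)
    slack_term = sum(slack(w, b, x, y) for x, y in zip(samples, labels))
    return margin_term + C * slack_term


# Hypothetical toy data: the last sample sits on the wrong side of the hyperplane.
samples = [(1.0, 0.0), (-1.0, 0.0), (3.0, 0.0), (0.5, 0.0)]
labels = [+1, -1, +1, -1]
w, b, C = (1.0, 0.0), 0.0, 1.0

slacks = [slack(w, b, x, y) for x, y in zip(samples, labels)]
objective = primal_objective(w, b, samples, labels, C)
print(slacks, objective)
```

Only the misclassified sample contributes slack here, so increasing \(C\) would raise the cost of keeping this hyperplane relative to one that classifies that sample correctly.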

While the primal can be optimized directly, it is often solved via its (Lagrangian) dual problem written in terms of Lagrange multipliers, \(\alpha_{i}\), which are constrained so that \(\sum _{i=1}^{n}\alpha _{i}y_{i} = 0\) and \(0 \leq \alpha_{i} \leq C\) for \(i = 1,\ldots,n\). Solving the dual has a computational complexity that grows according to the size of the training data as opposed to the feature space’s dimensionality. Further, in the dual formulation, both the data and the slack variables become implicitly represented: the data is represented by a kernel matrix, \(\mathbf{K}\), of all inner products between pairs of data points (i.e., \(K_{i,j} = \mathbf{x}_{i}^{\top }\mathbf{x}_{j}\)), and each slack variable is associated with a Lagrange multiplier via the KKT conditions that arise from duality. Using the method of Lagrange multipliers, the dual problem is derived, in matrix form, as

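\[
\begin{aligned}
\min_{\boldsymbol{\alpha}}\quad & \frac{1}{2}{\boldsymbol{\alpha}}^{\top}\mathbf{Q}\boldsymbol{\alpha} - \mathbf{1}_{n}^{\top}\boldsymbol{\alpha} \\
\text{s.t.}\quad & \sum_{i=1}^{n}\alpha_{i}y_{i} = 0,\quad 0 \leq \alpha_{i} \leq C,\quad i = 1,\ldots,n,
\end{aligned}
\]

where \(\mathbf{Q} = \mathbf{K} \circ \mathbf{y}{\mathbf{y}}^{\top }\) (the Hadamard product of \(\mathbf{K}\) and \(\mathbf{y}{\mathbf{y}}^{\top}\)) and \(\mathbf{1}_{n}\) is a vector of \(n\) ones.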
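To make the role of \(\mathbf{Q}\) concrete, the following sketch builds \(\mathbf{Q} = \mathbf{K} \circ \mathbf{y}{\mathbf{y}}^{\top}\) for a linear kernel and evaluates the dual objective on a two-point toy problem. It assumes the standard minimization form of the SVM dual, \(\frac{1}{2}{\boldsymbol{\alpha}}^{\top}\mathbf{Q}\boldsymbol{\alpha} - \mathbf{1}_{n}^{\top}\boldsymbol{\alpha}\), with the constraints stated above. The data, the multiplier values, and the helper names are hypothetical.

```python
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def q_matrix(samples, labels) -> List[List[float]]:
    """Q[i][j] = K[i][j] * y_i * y_j, using the linear kernel K[i][j] = x_i . x_j."""
    return [[dot(xi, xj) * yi * yj for xj, yj in zip(samples, labels)]
            for xi, yi in zip(samples, labels)]


def dual_objective(alphas, samples, labels) -> float:
    """Assumed standard dual (minimization form): 0.5 * a^T Q a - sum(a)."""
    Q = q_matrix(samples, labels)
    n = len(alphas)
    quadratic = sum(alphas[i] * Q[i][j] * alphas[j] for i in range(n) for j in range(n))
    return 0.5 * quadratic - sum(alphas)


def is_dual_feasible(alphas, labels, C: float, tol: float = 1e-12) -> bool:
    """Check sum_i alpha_i * y_i = 0 and 0 <= alpha_i <= C."""
    balanced = abs(sum(a * y for a, y in zip(alphas, labels))) <= tol
    boxed = all(-tol <= a <= C + tol for a in alphas)
    return balanced and boxed


# Hypothetical toy problem: one point per class, placed symmetrically about the origin.
samples = [(1.0, 0.0), (-1.0, 0.0)]
labels = [+1, -1]
alphas = [0.5, 0.5]

Q = q_matrix(samples, labels)
feasible = is_dual_feasible(alphas, labels, C=1.0)
dual_value = dual_objective(alphas, samples, labels)
print(Q, feasible, dual_value)
```

For this toy problem the separating hyperplane \(\mathbf{w} = (1, 0)\), \(b = 0\) needs no slack, so the primal objective is \(\frac{1}{2}{\mathbf{w}}^{\top}\mathbf{w} = 0.5\), which matches the negated dual value.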

Through the kernel matrix, SVMs can be extended to more complex feature spaces (where a linear classifier may perform better) via a kernel function, that is, an implicit inner product from the alternative feature space. If some function \(\phi: \mathcal{X} \rightarrow \varPhi\) maps training samples into a higher-dimensional feature space, then \(K_{i,j}\) is computed via the space’s corresponding kernel function, \(\kappa (\mathbf{x}_{i},\mathbf{x}_{j}) =\phi {(\mathbf{x}_{i})}^{\top }\phi (\mathbf{x}_{j})\). Thus, one need not explicitly know \(\phi\), only its corresponding kernel function.
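The sketch below illustrates this equivalence for one specific, well-known case chosen here for illustration: the homogeneous quadratic kernel \(\kappa(\mathbf{x},\mathbf{z}) = ({\mathbf{x}}^{\top}\mathbf{z})^{2}\) on two-dimensional inputs, whose explicit feature map is \(\phi(\mathbf{x}) = (x_{1}^{2}, \sqrt{2}x_{1}x_{2}, x_{2}^{2})\). The chapter does not prescribe this kernel; the sample points are arbitrary.

```python
import math
from typing import List, Sequence, Tuple


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def phi(x: Tuple[float, float]) -> Tuple[float, float, float]:
    """Explicit feature map of the homogeneous quadratic kernel in two dimensions."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)


def quadratic_kernel(x: Sequence[float], z: Sequence[float]) -> float:
    """kappa(x, z) = (x . z)^2, computed without ever forming phi."""
    return dot(x, z) ** 2


def kernel_matrix(samples, kernel) -> List[List[float]]:
    """K[i][j] = kernel(x_i, x_j) for every pair of samples."""
    return [[kernel(xi, xj) for xj in samples] for xi in samples]


x, z = (1.0, 2.0), (3.0, -1.0)
implicit = quadratic_kernel(x, z)   # inner product via the kernel function
explicit = dot(phi(x), phi(z))      # same inner product via the explicit map
K = kernel_matrix([x, z], quadratic_kernel)
print(implicit, explicit, K)
```

The two quantities agree up to floating-point rounding, which is why the dual only ever needs the kernel matrix rather than the mapped samples.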

Further, the dual problem and its KKT conditions elicit interesting properties of the SVM. First, the optimal primal hyperplane’s normal vector, \(\mathbf{w}\), is a linear combination of the training samples; i.e., \(\mathbf{w} =\sum _{ i=1}^{n}\alpha _{i}y_{i}\mathbf{x}_{i}\). Second, the dual solution is sparse, and only samples that lie on or within the hyperplane’s margin have a nonzero \(\alpha\)-value. Thus, if \(\alpha_{i} = 0\), the corresponding sample \(\mathbf{x}_{i}\) is correctly classified, lies beyond the margin (i.e., \(y_{i}({\mathbf{w}}^{\top }\mathbf{x}_{i} + b) > 1\)), and is called a non-support vector. If \(\alpha_{i} = C\), the \(i\)th sample violates the margin (i.e., \(y_{i}({\mathbf{w}}^{\top }\mathbf{x}_{i} + b) < 1\)) and is an error vector. Finally, if \(0 < \alpha_{i} < C\), the \(i\)th sample lies exactly on the margin (i.e., \(y_{i}({\mathbf{w}}^{\top }\mathbf{x}_{i} + b) = 1\)) and is a support vector. As a consequence, the optimal displacement \(b\) can be determined by averaging \(y_{i} -{\mathbf{w}}^{\top }\mathbf{x}_{i}\) over the support vectors.
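These properties can be sketched in a few lines: given dual variables, recover \(\mathbf{w}\) as the weighted sum of training samples, label each sample by its \(\alpha\)-value, and average \(y_{i} - {\mathbf{w}}^{\top}\mathbf{x}_{i}\) over the samples with \(0 < \alpha_{i} < C\) to obtain \(b\). The toy data and the \(\alpha\)-values below are hypothetical (chosen so that they satisfy the dual constraints), and the function names are illustrative only.

```python
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def recover_w(alphas, samples, labels) -> List[float]:
    """w = sum_i alpha_i * y_i * x_i, computed one coordinate at a time."""
    dim = len(samples[0])
    return [sum(a * y * x[j] for a, y, x in zip(alphas, labels, samples))
            for j in range(dim)]


def sample_role(alpha: float, C: float, tol: float = 1e-12) -> str:
    """Name a sample by its alpha-value, following the terminology in the text."""
    if alpha <= tol:
        return "non-support vector"
    if alpha >= C - tol:
        return "error vector"
    return "support vector"


def recover_b(alphas, samples, labels, w, C: float) -> float:
    """Average y_i - w . x_i over samples lying exactly on the margin."""
    on_margin = [(x, y) for a, x, y in zip(alphas, samples, labels)
                 if sample_role(a, C) == "support vector"]
    return sum(y - dot(w, x) for x, y in on_margin) / len(on_margin)


# Hypothetical dual solution: the third sample lies well beyond the margin.
samples = [(1.0, 0.0), (-1.0, 0.0), (3.0, 0.0)]
labels = [+1, -1, +1]
alphas = [0.5, 0.5, 0.0]
C = 1.0

w = recover_w(alphas, samples, labels)
b = recover_b(alphas, samples, labels, w, C)
roles = [sample_role(a, C) for a in alphas]
print(w, b, roles)
```

Note that `recover_b` would divide by zero if no sample had \(0 < \alpha_{i} < C\); a fuller implementation would need to handle that case separately.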

4.2.2 Machine Learning for Computer Security: Motivation, Trends, and Arms Races

In this section, we motivate the recent adoption of machine-learning techniques in computer security and discuss the novel issues this trend raises. In the last decade, security systems increased in complexity to counter the growing sophistication and variability of attacks; a result of a long-lasting and continuing arms race in security-related applications such as malware detection, intrusion detection, and spam filtering. The main characteristics of this struggle and the typical approaches pursued in security to face it are discussed in Sect. 4.2.3.1. We now discuss some examples that better explain this trend and motivate the use of modern machine-learning techniques for security applications.

In the early years, the attack surface (i.e., the vulnerable points of a system) of most systems was relatively small and most attacks were simple. In this era, signature-based detection systems (e.g., rule-based systems based on string-matching techniques) were considered sufficient to provide an acceptable level of security. However, as the complexity and exposure of sensitive systems increased in the Internet Age, more targets emerged and the incentive for attacking them became increasingly attractive, thus providing a means and motivation for developing sophisticated and diverse attacks. Since signature-based detection systems can only detect attacks matching an existing signature, attackers used minor variations of their attacks to evade detection (e.g., string-matching techniques can be evaded by slightly changing the attack code). To cope with the increasing variability of attack samples and to detect never-before-seen attacks, machine-learning approaches have been increasingly incorporated into these detection systems to complement traditional signature-based detection. These two approaches can be combined to make accurate and agile detection: signature-based detection offers fast and lightweight filtering of most known attacks, while machine-learning approaches can process the remaining (unfiltered) samples and identify new (or less well-known) attacks.

The Quest of Image Spam. A recent example of the above arms race is image spam (see, e.g., [10]). In 2006, to evade the textual-based spam filters, spammers began rendering their messages into images included as attachments, thus producing “image-based spam,” or image spam for short. Due to the massive volume of image spam sent in 2006 and 2007, researchers and spam-filter designers proposed several different countermeasures. Initially, suspect images were analyzed by OCR tools to extract text for standard spam detection, and then signatures were generated to block the (known) spam images. However, spammers immediately reacted by randomly obfuscating images with adversarial noise, both to make OCR-based detection ineffective and to evade signature-based detection. The research community responded with (fast) approaches mainly based on machine-learning techniques using visual features extracted from images, which could accurately discriminate between spam images and legitimate ones (e.g., photographs and plots). Although image spam volumes have since declined, the exact cause for this decrease is debatable—these countermeasures may have played a role, but the image spam were also more costly to the spammer as they required more time to generate and more bandwidth to deliver, thus limiting the spammers’ ability to send a high volume of messages. Nevertheless, had this arms race continued, spammers could have attempted to evade the countermeasures by mimicking the feature values exhibited by legitimate images, which would have, in fact, forced spammers to increase the number of colors and elements in their spam images, thus further increasing the size of such files and the cost of sending them.

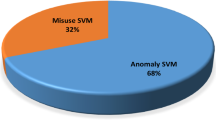

Misuse and Anomaly Detection in Computer Networks. Another example of the above arms race can be found in network intrusion detection, where misuse detection has been gradually augmented by anomaly detection. The former approach relies on detecting attacks on the basis of signatures extracted from (known) intrusive network traffic, while the latter is based upon a statistical model of the normal profile of the network traffic and detects anomalous traffic that deviates from the assumed model of normality. This model is often constructed using machine-learning techniques, such as one-class classifiers (e.g., one-class SVMs), or, more generally, using density estimators. The underlying assumption of anomaly-detection-based intrusion detection, though, is that all anomalous network traffic is, in fact, intrusive. Although intrusive traffic often does exhibit anomalous behavior, the opposite is not necessarily true: some non-intrusive network traffic may also behave anomalously. Thus, accurate anomaly detectors often suffer from high false-alarm rates.

4.2.3 Adversarial Machine Learning

As witnessed by the above examples, the introduction of machine-learning techniques in security-sensitive tasks has many beneficial aspects, and it has been somewhat necessitated by the increased sophistication and variability of recent attacks and zero-day exploits. However, there is good reason to believe that machine-learning techniques themselves will be subject to carefully designed attacks in the near future, as a logical next step in the above-sketched arms race. Since machine-learning techniques were not originally designed to withstand manipulations made by intelligent and adaptive adversaries, it would be reckless to naively trust these learners in a secure system. Instead, one needs to carefully consider whether these techniques can introduce novel vulnerabilities that may degrade the overall system’s security, or whether they can be safely adopted. In other words, we need to address the question raised by Barreno et al. [5]: can machine learning be secure?

At the center of this question is the effect an adversary can have on a learner by violating the stationarity assumption that the training data used to train the classifier comes from the same distribution as the test data that will be classified by the learned classifier. This is a conventional and natural assumption underlying much of machine learning and is the basis for performance-evaluation techniques like cross-validation and bootstrapping as well as for principles like empirical risk minimization (ERM). However, in security-sensitive settings, the adversary may purposely manipulate data to mislead learning. Accordingly, the data distribution is subject to change, thereby potentially violating stationarity, albeit in a limited way subject to the adversary’s assumed capabilities (as we discuss in Sect. 4.3.1.3). Further, as in most security tasks, predicting how the data distribution will change is difficult, if not impossible [12, 36]. Hence, adversarial learning problems are often addressed as a proactive arms race [12], in which the classifier designer tries to anticipate the adversary’s next move by hypothesizing and simulating proper attack scenarios, as discussed in the next section.

4.2.3.1 Reactive and Proactive Arms Races

As mentioned in the previous sections, and highlighted by the examples in Sect. 4.2.2, security problems are often cast as a long-lasting reactive arms race between the classifier designer and the adversary, in which each player attempts to achieve his/her goal by reacting to the changing behavior of his/her opponent. For instance, the adversary typically crafts samples to evade detection (e.g., a spammer’s goal is often to create spam emails that will not be detected), while the classifier designer seeks to develop a system that accurately detects most malicious samples while maintaining a very low false-alarm rate; i.e., by not falsely identifying legitimate examples. Under this setting, the arms race can be modeled as the following cycle [12]. First, the adversary analyzes the existing learning algorithm and manipulates his/her data to evade detection (or more generally, to make the learning algorithm ineffective). For instance, a spammer may gather some knowledge of the words used by the targeted spam filter to block spam and then manipulate the textual content of her spam emails accordingly; e.g., words like “cheap” that are indicative of spam can be misspelled as “che4p.” Second, the classifier designer reacts by analyzing the novel attack samples and updating his/her classifier. This is typically done by retraining the classifier on the newly collected samples, and/or by adding features that can better detect the novel attacks. In the previous spam example, this amounts to retraining the filter on the newly collected spam and, thus, to adding novel words into the filter’s dictionary (e.g., “che4p” may be now learned as a spammy word). This reactive arms race continues in perpetuity as illustrated in Fig. 4.1.

Fig. 4.1 A conceptual representation of the reactive arms race [12]
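One round of the reactive cycle described above can be mimicked with a deliberately simplistic keyword filter. This is only a toy sketch: the `KeywordFilter` class, the `obfuscate` rule (replacing "a" with "4"), and the idea of retraining by adding any token absent from a legitimate vocabulary are assumptions made for illustration, not how a real spam filter works.

```python
from typing import Iterable, Set


def obfuscate(word: str) -> str:
    """Toy obfuscation rule: 'cheap' becomes 'che4p'."""
    return word.replace("a", "4")


class KeywordFilter:
    """Hypothetical filter that flags a message containing any known spammy word."""

    def __init__(self, spammy_words: Iterable[str]) -> None:
        self.spammy_words: Set[str] = set(spammy_words)

    def is_spam(self, message: str) -> bool:
        return any(token in self.spammy_words for token in message.lower().split())

    def retrain(self, new_spam: Iterable[str], legitimate_vocabulary: Set[str]) -> None:
        """Designer's reaction: learn tokens seen in new spam but not in legitimate mail."""
        for message in new_spam:
            for token in message.lower().split():
                if token not in legitimate_vocabulary:
                    self.spammy_words.add(token)


def evade(message: str, known_blocked_words: Set[str]) -> str:
    """Adversary's move: obfuscate only the words known to trigger the filter."""
    return " ".join(obfuscate(t) if t in known_blocked_words else t
                    for t in message.lower().split())


spam_filter = KeywordFilter({"cheap"})
original_spam = "buy cheap watches now"

detected_initially = spam_filter.is_spam(original_spam)
evasive_spam = evade(original_spam, {"cheap"})
detected_after_attack = spam_filter.is_spam(evasive_spam)

spam_filter.retrain([evasive_spam], legitimate_vocabulary={"buy", "watches", "now"})
detected_after_retraining = spam_filter.is_spam(evasive_spam)
print(evasive_spam, detected_initially, detected_after_attack, detected_after_retraining)
```

The adversary could respond to the retrained filter with yet another obfuscation, which is exactly the perpetual loop the reactive arms race describes.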

However, reactive approaches to this arms race do not anticipate the next generation of security vulnerabilities, and thus the system potentially remains vulnerable to new attacks. Instead, computer security guidelines traditionally advocate a proactive approach: the classifier designer should proactively anticipate the adversary’s strategy by (1) identifying the most relevant threats, (2) designing proper countermeasures into his/her classifier, and (3) repeating this process for his/her new design before deploying the classifier. This can be accomplished by modeling the adversary (based on knowledge of the adversary’s goals and capabilities) and using this model to simulate attacks, as depicted in Fig. 4.2 in contrast to the reactive arms race. While such an approach does not account for unknown or changing aspects of the adversary, it can indeed lead to an improved level of security by delaying each step of the reactive arms race, because it should reasonably force the adversary to exert greater effort (in terms of time, skills, and resources) to find new vulnerabilities. Accordingly, proactively designed classifiers should remain useful for a longer time, with less frequent supervision or human intervention and with less severe vulnerabilities.

Fig. 4.2 A conceptual representation of the proactive arms race [12]

Although this approach has been implicitly followed in most of the previous work (see Sect. 4.2.3.2), it has only recently been formalized within a more general framework for the empirical evaluation of a classifier’s security [12], which we summarize in Sect. 4.3. Finally, although security evaluation may suggest specific countermeasures, designing general-purpose secure classifiers remains an open problem.

4.2.3.2 Previous Work on Security Evaluation

Previous work in adversarial learning can be categorized according to the two main steps of the proactive arms race described in the previous section. The first research direction focuses on identifying potential vulnerabilities of learning algorithms and assessing the impact of the corresponding attacks on the targeted classifier; e.g., [4, 5, 18, 36, 40–42, 46]. The second explores the development of proper countermeasures and learning algorithms robust to known attacks; e.g., [26, 41, 58].

Although some prior work does address aspects of the empirical evaluation of classifier security, which is often implicitly defined as the performance degradation incurred under a (simulated) attack, to our knowledge a systematic treatment of this process under a unifying perspective was first described only in our recent work [12]. Previously, security evaluation was generally conducted within a specific application domain such as spam filtering and network intrusion detection (e.g., in [26, 31, 41, 47, 67]), in which different application-dependent criteria were separately defined for each endeavor. Security evaluation was then implicitly undertaken by defining an attack and assessing its impact on the given classifier. For instance, in [31], the authors showed how camouflaged network packets can mimic legitimate traffic to evade detection; and, similarly, in [26, 41, 47, 67], the content of spam emails was manipulated for evasion. Although such analyses provide indispensable insights into specific problems, their results are difficult to generalize to other domains and provide little guidance for evaluating classifier security in a different application. Thus, in a new application domain, security evaluation often must begin anew, and it is difficult to compare it directly with prior studies. This shortcoming highlights the need for a more general set of security guidelines and a more systematic definition of classifier security evaluation, which we began to address in [12].

Apart from application-specific work, several theoretical models of adversarial learning have been proposed [4, 17, 26, 36, 40, 42, 46, 54]. These models frame the secure learning problem and provide a foundation for a proper security evaluation scheme. In particular, we build upon elements of the models of [4, 5, 36, 38, 40, 42], which were used in defining our framework for security evaluation [12]. Below we summarize these foundations.

4.2.3.3 A Taxonomy of Potential Attacks Against Machine Learning Algorithms

A taxonomy of potential attacks against pattern classifiers was proposed in [4, 5, 36] as a baseline to characterize attacks on learners. The taxonomy is based on three main features: the kind of influence of attacks on the classifier, the kind of security violation they cause, and the specificity of an attack. The attack’s influence can be either causative, if it aims to undermine learning, or exploratory, if it targets the classification phase. Accordingly, a causative attack may manipulate both training and testing data, whereas an exploratory attack only affects testing data. Examples of causative attacks include work in [14, 38, 40, 53, 59], while exploratory attacks can be found in [26, 31, 41, 47, 67]. The security violation can be either an integrity violation, if it aims to gain unauthorized access to the system (i.e., to have malicious samples be misclassified as legitimate); an availability violation, if the goal is to generate a high number of errors (both false-negatives and false-positives) such that normal system operation is compromised (e.g., legitimate users are denied access to their resources); or a privacy violation, if it allows the adversary to obtain confidential information from the classifier (e.g., in biometric recognition, this may amount to recovering a protected biometric template of a system’s client). Finally, the attack specificity refers to the samples that are affected by the attack. It ranges continuously from targeted attacks (e.g., if the goal of the attack is to have a specific spam email misclassified as legitimate) to indiscriminate attacks (e.g., if the goal is to have any spam email misclassified as legitimate).

Each portion of the taxonomy specifies a different type of attack as laid out in Barreno et al. [4], and here we outline these with respect to a PDF malware detector. An example of a causative integrity attack is an attacker who wants to mislead the malware detector to falsely classify malicious PDFs as benign. The attacker could accomplish this goal by introducing benign PDFs with malicious features into the training set; the attack would be targeted if the features corresponded to a particular malware, and indiscriminate otherwise. Similarly, the attacker could mount a causative availability attack by injecting malware training examples that exhibit features common to benign PDFs; again, these would be targeted if the attacker wanted a particular set of benign PDFs to be misclassified. A causative privacy attack, however, would require both manipulation of the training data and information obtained from the learned classifier. The attacker could inject malicious PDFs with features identifying a particular author and then subsequently test whether other PDFs with those features are labeled as malicious; this observed behavior may leak private information about the authors of other PDFs in the training set.

In contrast to the causative attacks, exploratory attacks cannot manipulate the learner, but can still exploit the learning mechanism. An example of an exploratory integrity attack involves an attacker who crafts a malicious PDF for an existing malware detector. This attacker queries the detector with candidate PDFs to discover which attributes the detector uses to identify malware, thus allowing him/her to redesign his/her PDF to avoid the detector. This attack would be targeted if it concerns a single PDF exploit, or indiscriminate if a set of possible exploits is considered. An exploratory privacy attack against the malware detector can be conducted in the same way as the causative privacy attack described above, but without first injecting PDFs into the training data. Simply by probing the malware detector with crafted PDFs, the attacker may cause the detector to divulge secrets. Finally, exploratory availability attacks are possible in some applications but are not currently considered to be of interest.
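The three axes of the taxonomy lend themselves to a small data structure. The sketch below is one possible encoding, with hypothetical class and field names; note that it reduces specificity to its two endpoints, whereas the text describes it as ranging continuously from targeted to indiscriminate.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product


class Influence(Enum):
    CAUSATIVE = "causative"        # undermines learning (may alter training data)
    EXPLORATORY = "exploratory"    # targets the classification phase only


class Violation(Enum):
    INTEGRITY = "integrity"        # malicious samples pass as legitimate
    AVAILABILITY = "availability"  # many errors disrupt normal operation
    PRIVACY = "privacy"            # confidential information is obtained


class Specificity(Enum):
    TARGETED = "targeted"
    INDISCRIMINATE = "indiscriminate"


@dataclass(frozen=True)
class AttackProfile:
    """One cell of the taxonomy (illustrative encoding, not from the chapter)."""
    influence: Influence
    violation: Violation
    specificity: Specificity

    def manipulates_training_data(self) -> bool:
        return self.influence is Influence.CAUSATIVE

    def describe(self) -> str:
        return f"{self.specificity.value} {self.influence.value} {self.violation.value} attack"


# The PDF examples from the text, expressed as profiles.
pdf_poisoning = AttackProfile(Influence.CAUSATIVE, Violation.INTEGRITY, Specificity.TARGETED)
pdf_evasion = AttackProfile(Influence.EXPLORATORY, Violation.INTEGRITY, Specificity.INDISCRIMINATE)

# Enumerate every combination of the three axes.
all_profiles = [AttackProfile(i, v, s) for i, v, s in product(Influence, Violation, Specificity)]
print(pdf_poisoning.describe(), "|", pdf_evasion.describe(), "|", len(all_profiles))
```

Enumerating the combinations in this way gives an analyst a simple checklist of attack classes to consider, though not every cell is equally relevant in every application.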

4.3 A Framework for Security Evaluation

In Sects. 4.2.3 and 4.2.3.1, we motivated the need for simulating a proactive arms race as a means for improving system security. We further argued that evaluating a classifier’s security properties through simulations of different potential attack scenarios is a crucial step in this arms race for identifying the most relevant vulnerabilities and for suggesting how to potentially counter them. Here, we summarize our recent work [12] that proposes a new framework for designing proactively secure classifiers by addressing the shortcomings of the reactive security cycle raised above. Namely, our approach allows one to empirically evaluate a classifier’s security during its design phase by addressing the first three steps of the proactive arms race depicted in Fig. 4.2: (1) identifying potential attack scenarios, (2) devising the corresponding attacks, and (3) systematically evaluating their impact. Although it may also suggest countermeasures to the hypothesized attacks, the final step of the proactive arms race remains unspecified as a unique design step that has to be addressed separately in an application-specific manner.

Under our proposed security evaluation process, the analyst must carefully scrutinize the classifier by considering different attack scenarios to investigate a set of distinct potential vulnerabilities. This amounts to performing a more systematic what-if analysis of classifier security [57]. This is an essential step in the design of security systems, as it not only allows the designer to identify the most important and relevant threats, but also forces him/her to consciously decide whether the classifier can be reasonably deployed, after being made aware of the corresponding risks, or whether it is instead better to adopt additional countermeasures to mitigate the attack’s impact before deploying the classifier.

Our proposed framework builds on previous work and attempts to systematize and unify their views under a more coherent perspective. The framework defines how an analyst can conduct a security audit of a classifier, which we detail in the remainder of this section. First, in Sect. 4.3.1, we explain how an adversary model is constructed according to the adversary’s anticipated goals, knowledge, and capabilities. Based on this model, a simulation of the adversary can be conducted to find the corresponding optimal attack strategies and produce simulated attacks, as described in Sect. 4.3.1.4. These simulated attack samples are then used to evaluate the classifier by adding them to either the training or test data, in accordance with the adversary’s capabilities from Sect. 4.3.1.3. We conclude this section by discussing how to exploit our framework in specific application domains in Sect. 4.3.2.

4.3.1 Modeling the Adversary

The proposed model of the adversary is based on specific assumptions about his/her goal, knowledge of the system, and capability to modify the underlying data distribution by manipulating individual samples. It allows the classifier designer to model the attacks identified in the attack taxonomy described in Sect. 4.2.3.3 [4, 5, 36]. However, in our framework, one can also incorporate application-specific constraints into the definition of the adversary’s capability. Therefore, it can be exploited to derive practical guidelines for developing optimal attack strategies and to guide the design of adversarially resilient classifiers.

4.3.1.1 Adversary’s Goal

According to the taxonomy presented first by Barreno et al. [5] and extended by Huang et al. [36], the adversary’s goal should be defined based on the anticipated security violation, which might be an integrity, availability, or privacy violation (see Sect. 4.2.3.3), and also depending on the attack’s specificity, which ranges from targeted to indiscriminate. Further, as suggested by Laskov and Kloft [42] and Kloft and Laskov [40], the adversary’s goal should be defined in terms of an objective function that the adversary is willing to maximize. This allows for a formal characterization of the optimal attack strategy.

For instance, in an indiscriminate integrity attack, the adversary may aim to maximize the number of spam emails that evade detection, while minimally manipulating their content [26, 46, 54], whereas in an indiscriminate availability attack, the adversary may aim to maximize the number of classification errors, thereby causing a general denial-of-service due to an excess of false alarms [14, 53].

4.3.1.2 Adversary’s Knowledge

The adversary’s knowledge of the attacked system can be defined based on the main components involved in the design of a machine-learning system, as described in [29] and depicted in Fig. 4.3.

A representation of the design steps of a machine-learning system [29] that may provide sources of knowledge for the adversary

According to the five design steps depicted in Fig. 4.3, the adversary may have various degrees of knowledge (ranging from no information to complete information) pertaining to the following five components:

- (k.i) the training set (or part of it);

- (k.ii) the feature representation of each sample; i.e., how real objects (emails, network packets, etc.) are mapped into the feature space;

- (k.iii) the learning algorithm and its decision function; e.g., that logistic regression is used to learn a linear classifier;

- (k.iv) the learned classifier’s parameters; e.g., the actual learned weights of a linear classifier;

- (k.v) feedback from the deployed classifier; e.g., the classification labels assigned to some of the samples by the targeted classifier.

These five elements represent different levels of knowledge about the system being attacked. A typical hypothesized scenario assumes that the adversary has perfect knowledge of the targeted classifier (k.iv). Although potentially too pessimistic, this worst-case setting allows one to compute a lower bound on the classifier performance when it is under attack [26, 41]. A more realistic setting is that the adversary knows the (untrained) learning algorithm (k.iii), and he/she may exploit feedback from the classifier on the labels assigned to some query samples (k.v), either to directly find optimal or nearly optimal attack instances [46, 54] or to learn a surrogate classifier, which can then serve as a template to guide the attack against the actual classifier. We refer to this scenario as a limited knowledge setting in Sect. 4.4.

Note that one may also make more restrictive assumptions on the adversary’s knowledge, such as considering partial knowledge of the feature representation (k.ii), or a complete lack of knowledge of the learning algorithm (k.iii). Investigating classifier security against these uninformed adversaries may yield a higher level of security. However, such assumptions would be contingent on security through obscurity; that is, the provided security would rely upon secrets that must be kept unknown to the adversary even though such a high level of secrecy may not be practical. Reliance on unjustified secrets can potentially lead to catastrophic unforeseen vulnerabilities. Thus, this paradigm should be regarded as being complementary to security by design, which instead advocates that systems should be designed from the ground up to be secure and that, if secrets are assumed, they must be well justified. Accordingly, security is often investigated by assuming that the adversary knows at least the learning algorithm and the underlying feature representation.

4.3.1.3 Adversary’s Capability

We now give some guidelines on how the attacker may be able to manipulate samples and the corresponding data distribution. As discussed in Sect. 4.2.3.3 [4, 5, 36], the adversary may control both training and test data (causative attacks), or only test data (exploratory attacks). Further, training and test data may follow different distributions, since they can be manipulated according to different attack strategies by the adversary. Therefore, we should specify:

- (c.i) whether the adversary can manipulate training (TR) and/or testing (TS) data; i.e., the attack influence from the taxonomy in [4, 5, 36];

- (c.ii) whether and to what extent the attack affects the class priors, for TR and TS;

- (c.iii) which and how many samples can be modified in each class, for TR and TS;

- (c.iv) which features of each attack sample can be modified and how these features’ values can be altered; e.g., correlated feature values cannot be modified independently.

Assuming a generative model \(p(\mathbf{X},Y ) = p(Y )p(\mathbf{X}\vert Y )\) (where we use \(p_{\mathrm{tr}}\) and \(p_{\mathrm{ts}}\) for training and test distributions, respectively), assumption (c.ii) specifies how an attack can modify the priors \(p_{\mathrm{tr}}(Y )\) and \(p_{\mathrm{ts}}(Y )\), while assumptions (c.iii) and (c.iv) specify how it can alter the class-conditional distributions \(p_{\mathrm{tr}}(\mathbf{X}\vert Y )\) and \(p_{\mathrm{ts}}(\mathbf{X}\vert Y )\).

To perform security evaluation according to the hypothesized attack scenario, it is thus clear that the collected data and generated attack samples should be resampled according to the above distributions to produce suitable training and test set pairs. This can be accomplished through existing resampling algorithms like cross-validation or bootstrapping, when the attack samples are independently sampled from an identical distribution (i.i.d.). Otherwise, one may consider different sampling schemes. For instance, in Biggio et al. [14] the attack samples had to be injected into the training data, and each attack sample depended on the current training data, which also included past attack samples. In this case, it was sufficient to add one attack sample at a time, until the desired number of samples was reached (see Footnote 3).

4.3.1.4 Attack Strategy

Once specific assumptions on the adversary’s goal, knowledge, and capability are made, one can compute the optimal attack strategy corresponding to the hypothesized attack scenario; i.e., the adversary model. This amounts to solving the optimization problem defined according to the adversary’s goal, under proper constraints defined in accordance with the adversary’s assumed knowledge and capabilities. The attack strategy can then be used to produce the desired attack samples, which then have to be merged consistently with the rest of the data to produce suitable training and test sets for the desired security evaluation, as explained in the previous section. Specific examples of how to derive optimal attacks against SVMs and how to resample training and test data to properly include them are discussed in Sects. 4.4 and 4.5.

4.3.2 How to Use Our Framework

We summarize here the steps that can be followed to correctly use our framework in specific application scenarios:

1. hypothesize an attack scenario by identifying a proper adversary’s goal, according to the taxonomy in [4, 5, 36];

2. define the adversary’s knowledge according to (k.i-v), and capabilities according to (c.i-iv);

3. formulate the corresponding optimization problem and devise the corresponding attack strategy;

4. resample the collected (training and test) data accordingly;

5. evaluate the classifier’s security on the resampled data (including attack samples);

6. repeat the evaluation for different levels of adversary’s knowledge and/or capabilities, if necessary; or hypothesize a different attack scenario.

In the next sections we show how our framework can be applied to investigate three security threats to SVMs: evasion, poisoning, and privacy violations. We then discuss how our findings may be used to improve the security of such classifiers against the considered attacks. For instance, we show how careful kernel parameter selection, which trades off between security against attacks and classification accuracy, may complicate the adversary’s task of subverting the learning process.

4.4 Evasion Attacks Against SVMs

In this section, we consider the problem of SVM evasion at test time; i.e., how to optimally manipulate samples at test time to avoid detection. The problem of evasion at test time has been considered in previous work, albeit limited either to simple decision functions such as linear classifiers [26, 46], or to convex-inducing classifiers [54], which partition the feature space into two sets, one of which is convex, but which do not include most interesting families of nonlinear classifiers such as neural nets or SVMs. In contrast to this prior work, the methods presented in our recent work [8] and in this section demonstrate that evasion of kernel-based classifiers at test time can be realized with a straightforward gradient-descent-based approach derived from Golland’s technique of discriminative directions [33]. As a further simplification of the attacker’s effort, we empirically show that, even if the adversary does not precisely know the classifier’s decision function, he/she can learn a surrogate classifier on a surrogate dataset and reliably evade the targeted classifier.

This section is structured as follows. In Sect. 4.4.1, we define the model of the adversary, including his/her attack strategy, according to our evaluation framework described in Sect. 4.3.1. Then, in Sect. 4.4.2 we derive the attack strategy that will be employed to experimentally evaluate evasion attacks against SVMs. We report our experimental results in Sect. 4.4.3. Finally, we critically discuss and interpret our research findings in Sect. 4.4.4.

4.4.1 Modeling the Adversary

We show here how our framework can be applied to evaluate the security of SVMs against evasion attacks. We first introduce our notation, state our assumptions about the attack scenario, and then derive the corresponding optimal attack strategy.

Notation. We consider a classification algorithm \(f: \mathcal{X}\mapsto \mathcal{Y}\) that assigns samples represented in some feature space \(\mathbf{x} \in \mathcal{X}\) to a label in the set of predefined classes \(y \in \mathcal{Y} =\{ -1,+1\}\), where −1 (+1) represents the legitimate (malicious) class. The label \(f_{\mathbf{x}} = f(\mathbf{x})\) given by a classifier is typically obtained by thresholding a continuous discriminant function \(g: \mathcal{X}\mapsto \mathbb{R}\). Without loss of generality, we assume that \(f(\mathbf{x}) = -1\) if \(g(\mathbf{x}) < 0\), and +1 otherwise. Further, note that we use \(f_{\mathbf{x}}\) to refer to a label assigned by the classifier for the point \(\mathbf{x}\) (rather than the true label y of that point) and the shorthand \(f_{i}\) for the label assigned to the ith training point, \(\mathbf{x}_{i}\).

4.4.1.1 Adversary’s Goal

Malicious (positive) samples are manipulated to evade the classifier. The adversary may be satisfied when a sample x is found such that g(x) < −ε where ε > 0 is a small constant. However, as mentioned in Sect. 4.3.1.1, these attacks may be easily defeated by simply adjusting the decision threshold to a slightly more conservative value (e.g., to attain a lower false negative rate at the expense of a higher false positive rate). For this reason, we assume a smarter adversary, whose goal is to have his/her attack sample misclassified as legitimate with the largest confidence. Analytically, this statement can be expressed as follows: find an attack sample x that minimizes the value of the classifier’s discriminant function g(x). Indeed, this adversarial setting provides a worst-case bound for the targeted classifier.

4.4.1.2 Adversary’s Knowledge

We investigate two adversarial settings. In the first, the adversary has perfect knowledge (PK) of the targeted classifier; i.e., he/she knows the feature space (k.ii) and function g(x) (k.iii-iv). Thus, the labels from the targeted classifier (k.v) are not needed. In the second, the adversary is assumed to have limited knowledge (LK) of the classifier. We assume he/she knows the feature representation (k.ii) and the learning algorithm (k.iii), but that he/she does not know the learned classifier g(x) (k.iv). In both cases, we assume the attacker does not have knowledge of the training set (k.i).

Within the LK scenario, the adversary does not know the true discriminant function \(g(\mathbf{x})\) but may approximate it as \(\hat{g}(\mathbf{x})\) by learning a surrogate classifier on a surrogate training set \(\{(\mathbf{x}_{i},y_{i})\}_{i=1}^{n_{q}}\) of \(n_{q}\) samples. This data may be collected by the adversary in several ways; e.g., he/she may sniff network traffic or collect legitimate and spam emails from an alternate source. Thus, for LK, there are two sub-cases related to assumption (k.v), which depend on whether the adversary can query the classifier. If so, the adversary can build the training set by submitting a set of \(n_{q}\) queries \(\mathbf{x}_{i}\) to the targeted classifier to obtain their classification labels, \(y_{i} = f(\mathbf{x}_{i})\). This is indeed the adversary’s true learning task, but it requires him/her to have access to classifier feedback; e.g., by having an email account protected by the targeted filter (for public email providers, the adversary can reasonably obtain such accounts). If not, the adversary may use the true class labels for the surrogate data, although this may not correctly approximate the targeted classifier (unless it is very accurate).

4.4.1.3 Adversary’s Capability

In the evasion setting, the adversary can only manipulate testing data (c.i); i.e., he/she has no way to influence training data. We further assume here that the class priors cannot be modified (c.ii), and that all the malicious testing samples are affected by the attack (c.iii). In other words, we are interested in simulating an exploratory, indiscriminate attack. The adversary’s capability of manipulating the features of each sample (c.iv) should be defined based on application-specific constraints. However, at a more general level we can bound the attack point to lie within some maximum distance from the original attack sample, \(d_{\mathrm{max}}\), which then is a parameter of our evaluation. Similarly to previous work, the definition of a suitable distance measure \(d: \mathcal{X}\times \mathcal{X}\mapsto \mathbb{R}\) is left to the specific application domain [26, 46, 54]. Note indeed that this distance should reflect the adversary’s effort or cost in manipulating samples, by considering factors that can limit the overall attack impact; e.g., the increase in the file size of a malicious PDF, since larger files will lower the infection rate due to increased transmission times. For spam filtering, distance is often given as the number of modified words in each spam [26, 46, 53, 54], since it is assumed that highly modified spam messages are less effectively able to convey the spammer’s message.

4.4.1.4 Attack Strategy

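Under the attacker’s model described in Sects. 4.4.1.1, 4.4.1.2, and 4.4.1.3, for any target malicious sample \({\mathbf{x}}^{0}\) (the adversary’s true objective), an optimal attack strategy finds a sample \({\mathbf{x}}^{{\ast}}\) to minimize g or its estimate \(\hat{g}\), subject to a bound on its modification distance from \({\mathbf{x}}^{0}\):

\[{\mathbf{x}}^{{\ast}} =\arg \min _{\mathbf{x}}\hat{g}(\mathbf{x})\quad \text{s.t.}\quad d(\mathbf{x},{\mathbf{x}}^{0}) \leq d_{\mathrm{max}}.\]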

For several classifiers, minimizing \(g(\mathbf{x})\) is equivalent to maximizing the estimated posterior \(p(f_{\mathbf{x}} = -1\vert \mathbf{x})\); e.g., for neural networks, since they directly output a posterior estimate, and for SVMs, since their posterior can be estimated as a sigmoidal function of the distance of \(\mathbf{x}\) to the SVM hyperplane [56].

Generally, this is a nonlinear optimization problem, which one may solve with many well-known techniques (e.g., gradient descent, Newton’s method, or BFGS); below we use a gradient-descent procedure. However, if \(\hat{g}(\mathbf{x})\) is not convex, descent approaches may not find a global optimum. Instead, the descent path may lead to a flat region (local minimum) outside of the samples’ support where p(x) ≈ 0 and the classification behavior of g is unspecified, which may stymie evasion attempts (see the upper left plot in Fig. 4.4).

Different scenarios for gradient-descent-based evasion procedures. In each, the function g(x) of the learned classifier is plotted with a color map with high values (red-orange-yellow) corresponding to the malicious class, low values (green-cyan-blue) corresponding to the benign class, and a black decision boundary for the classifier. For every malicious sample, we plot the path of a simple gradient-descent evasion for a classifier with a closed boundary around the malicious class (upper left) or benign class (bottom left). Then, we plot the modified objective function of Eq. (4.1) and the paths of the resulting density-augmented gradient descent for a classifier with a closed boundary around the malicious (upper right) or benign class (bottom right)

Unfortunately, our objective does not utilize the evidence we have about the distribution of data p(x), and thus gradient descent may meander into unsupported regions (p(x) ≈ 0) where g is relatively unspecified. This problem is further compounded since our estimate \(\hat{g}\) is based on a finite (and possibly small) training set, making it a poor estimate of g in unsupported regions, which may lead to false evasion points in these regions. To overcome these limitations, we introduce an additional component into the formulation of our attack objective, which estimates \(p(\mathbf{x}\vert f_{\mathbf{x}} = -1)\) using density-estimation techniques. This second component acts as a penalizer for \(\mathbf{x}\) in low density regions and is weighted by a parameter λ ≥ 0, yielding the following modified optimization problem:

\[\arg \min _{\mathbf{x}}E(\mathbf{x}) =\hat{g}(\mathbf{x}) - \frac{\lambda}{n}\sum _{i\vert f_{i}=-1}k\left (\frac{\mathbf{x} -\mathbf{x}_{i}}{h}\right )\quad \text{s.t.}\quad d(\mathbf{x},{\mathbf{x}}^{0}) \leq d_{\mathrm{max}}, \tag{4.1}\]

where h is a bandwidth parameter for a kernel density estimator (KDE) and n is the number of benign samples (\(f_{\mathbf{x}} = -1\)) available to the adversary. This alternate objective trades off between minimizing \(\hat{g}(\mathbf{x})\) (or, equivalently for several classifiers, maximizing \(p(f_{\mathbf{x}} = -1\vert \mathbf{x})\)) and maximizing the estimated density \(p(\mathbf{x}\vert f_{\mathbf{x}} = -1)\). The extra component favors attack points that imitate features of known samples classified as legitimate, as in mimicry attacks [31]. In doing so, it reshapes the objective function and thereby biases the resulting density-augmented gradient descent towards regions where the negative class is concentrated (see the bottom-right plot in Fig. 4.4).
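The density-augmented objective described above can be sketched in a few lines. This is a minimal illustration, not the authors' released code: the function name `attack_objective`, the callable `g_hat`, and the choice of a Laplacian kernel \(\exp (-\|\mathbf{x} -\mathbf{x}_{i}\|_{1}/h)\) for the density term are assumptions made here for concreteness.

```python
import numpy as np

def attack_objective(x, g_hat, benign, lam, h):
    """Evaluate E(x) = g_hat(x) - (lam / n) * sum_i exp(-||x - x_i||_1 / h).

    g_hat  : callable returning the (surrogate) discriminant value at x
    benign : (n, d) array of samples labelled as legitimate
    lam, h : trade-off weight and KDE bandwidth
    The Laplacian kernel is an assumption of this sketch.
    """
    x = np.asarray(x, dtype=float)
    benign = np.asarray(benign, dtype=float)
    dist = np.abs(x - benign).sum(axis=1)      # Manhattan distance to each benign sample
    density = np.exp(-dist / h).mean()         # KDE estimate of p(x | f_x = -1)
    return float(g_hat(x) - lam * density)
```

With λ = 0 the function reduces to the plain discriminant value; for λ > 0, points close to known benign samples receive a lower objective value than equally scored points far from them.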

Finally, note that this behavior may lead our technique to disregard attack patterns within unsupported regions (p(x) ≈ 0) for which g(x) < 0, when they do exist (see, e.g., the upper right plot in Fig. 4.4). This may limit classifier evasion especially when the constraint \(d(\mathbf{x},{\mathbf{x}}^{0}) \leq d_{\mathrm{max}}\) is particularly strict. Therefore, the trade-off between the two components of the objective function should be carefully considered.

4.4.2 Evasion Attack Algorithm

Algorithm 1 details a gradient-descent method for solving the optimization problem of Eq. (4.1). It iteratively modifies the attack point \(\mathbf{x}\) in the feature space as \({\mathbf{x}}^{{\prime}}\leftarrow \mathbf{x} - t\nabla E\), where ∇E is a unit vector aligned with the gradient of our objective function, and t is the step size. We assume g to be differentiable almost everywhere (subgradients may be used at discontinuities). When g is non-differentiable or is not smooth enough for a gradient descent to work well, it is also possible to rely upon the mimicry/KDE term in the optimization of Eq. (4.1).

Algorithm 1 Gradient-descent attack procedure

Input: \({\mathbf{x}}^{0}\), the initial attack point; t, the step size; λ, the trade-off parameter; and ε > 0.
Output: \({\mathbf{x}}^{{\ast}}\), the final attack point.

1: k ← 0.
2: repeat
3: k ← k + 1
4: Set \(\nabla E({\mathbf{x}}^{k-1})\) to a unit vector aligned with \(\nabla g({\mathbf{x}}^{k-1}) -\lambda \nabla p({\mathbf{x}}^{k-1}\vert f_{\mathbf{x}} = -1)\).
5: \({\mathbf{x}}^{k} \leftarrow {\mathbf{x}}^{k-1} - t\nabla E({\mathbf{x}}^{k-1})\)
6: if \(d({\mathbf{x}}^{k},{\mathbf{x}}^{0}) > d_{\mathrm{max}}\) then
7: Project \({\mathbf{x}}^{k}\) onto the boundary of the feasible region (enforcing application-specific constraints, if any).
8: end if
9: until \(E\left ({\mathbf{x}}^{k}\right ) - E\left ({\mathbf{x}}^{k-1}\right ) <\epsilon\)
10: return: \({\mathbf{x}}^{{\ast}} ={ \mathbf{x}}^{k}\)
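The loop of Algorithm 1 can be sketched as follows. This is an illustrative reading under stated assumptions rather than a definitive implementation: the distance d is taken to be Euclidean so that the projection in step 7 has a closed form, the stopping test uses the absolute change in E, and an iteration cap `max_iter` is added as a safeguard. The names `gradient_descent_attack`, `objective`, and `gradient` are hypothetical.

```python
import numpy as np

def gradient_descent_attack(x0, objective, gradient, d_max,
                            t=0.1, eps=1e-6, max_iter=1000):
    """Descend on E from x0 while staying within distance d_max of x0.

    objective, gradient : callables returning E(x) and its gradient
    Assumptions of this sketch: Euclidean distance, |E_k - E_{k-1}| < eps
    as the stopping test, and a hard cap on the number of iterations.
    """
    x0 = np.asarray(x0, dtype=float)
    x = x0.copy()
    e_prev = objective(x)
    for _ in range(max_iter):
        grad = gradient(x)
        norm = np.linalg.norm(grad)
        if norm == 0.0:
            break                                  # stationary point, nothing to follow
        x = x - t * grad / norm                    # step along the unit descent direction
        delta = x - x0
        dist = np.linalg.norm(delta)
        if dist > d_max:
            x = x0 + delta * (d_max / dist)        # project back onto the l2 ball
        e_new = objective(x)
        if abs(e_new - e_prev) < eps:
            break                                  # objective no longer changes
        e_prev = e_new
    return x
```

For other distances (for example the Manhattan distance used later in the experiments), the projection line would need to be replaced by one suited to that constraint set.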

In the next sections, we show how to compute the components of ∇E; namely, the gradient of the discriminant function g(x) of SVMs for different kernels, and the gradient of the mimicking component (density estimation). We finally discuss how to project the gradient ∇E onto the feasible region in discrete feature spaces.

4.4.2.1 Gradient of Support Vector Machines

For SVMs, \(g(\mathbf{x}) =\sum _{i}\alpha _{i}y_{i}k(\mathbf{x},\mathbf{x}_{i}) + b\). The gradient is thus given by \(\nabla g(\mathbf{x}) =\sum _{i}\alpha _{i}y_{i}\nabla k(\mathbf{x},\mathbf{x}_{i})\). Accordingly, the feasibility of the approach depends on the computability of this kernel gradient \(\nabla k(\mathbf{x},\mathbf{x}_{i})\), which is computable for many numeric kernels. In the following, we report the kernel gradients for three main cases: (a) the linear kernel, (b) the RBF kernel, and (c) the polynomial kernel.

- (a) Linear Kernel. In this case, the kernel is simply given by \(k(\mathbf{x},\mathbf{x}_{i}) =\langle \mathbf{x},\mathbf{x}_{i}\rangle\). Accordingly, \(\nabla k(\mathbf{x},\mathbf{x}_{i}) = \mathbf{x}_{i}\) (we remind the reader that the gradient has to be computed with respect to the current attack sample \(\mathbf{x}\)), and \(\nabla g(\mathbf{x}) = \mathbf{w} =\sum _{i}\alpha _{i}y_{i}\mathbf{x}_{i}\).

- (b) RBF Kernel. For this kernel, \(k(\mathbf{x},\mathbf{x}_{i}) =\exp \{ -\gamma \|\mathbf{x} -\mathbf{x}_{i}\|^{2}\}\). The gradient is thus given by \(\nabla k(\mathbf{x},\mathbf{x}_{i}) = -2\gamma \exp \{ -\gamma \|\mathbf{x} -\mathbf{x}_{i}\|^{2}\}(\mathbf{x} -\mathbf{x}_{i})\).

- (c) Polynomial Kernel. In this final case, \(k(\mathbf{x},\mathbf{x}_{i}) = {(\langle \mathbf{x},\mathbf{x}_{i}\rangle + c)}^{p}\). The gradient is thus given by \(\nabla k(\mathbf{x},\mathbf{x}_{i}) = p{(\langle \mathbf{x},\mathbf{x}_{i}\rangle + c)}^{p-1}\mathbf{x}_{i}\).
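The three kernel gradients above translate directly into code. The sketch below is illustrative only: the function names and the argument layout (support vectors in `sv`, the products \(\alpha _{i}y_{i}\) in `dual_coef`) are assumptions chosen for this example.

```python
import numpy as np

def svm_discriminant(x, sv, dual_coef, b=0.0, kernel="linear",
                     gamma=1.0, c=1.0, p=2):
    """g(x) = sum_i alpha_i y_i k(x, x_i) + b for the three kernels in the text."""
    if kernel == "linear":
        k = sv @ x
    elif kernel == "rbf":
        k = np.exp(-gamma * np.sum((x - sv) ** 2, axis=1))
    elif kernel == "poly":
        k = (sv @ x + c) ** p
    else:
        raise ValueError(f"unknown kernel: {kernel}")
    return float(dual_coef @ k + b)

def svm_gradient(x, sv, dual_coef, kernel="linear", gamma=1.0, c=1.0, p=2):
    """Gradient of g with respect to the attack point x.

    sv        : (m, d) array of support vectors x_i
    dual_coef : (m,) array holding alpha_i * y_i
    """
    if kernel == "linear":
        grad_k = sv                                        # grad k = x_i
    elif kernel == "rbf":
        diff = x - sv                                      # rows are x - x_i
        k = np.exp(-gamma * np.sum(diff ** 2, axis=1))
        grad_k = -2.0 * gamma * k[:, None] * diff
    elif kernel == "poly":
        inner = sv @ x + c
        grad_k = p * inner[:, None] ** (p - 1) * sv
    else:
        raise ValueError(f"unknown kernel: {kernel}")
    return dual_coef @ grad_k                              # sum_i alpha_i y_i grad k
```

A finite-difference comparison between `svm_gradient` and `svm_discriminant` is a convenient sanity check before plugging the gradient into the attack loop.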

4.4.2.2 Gradients of Kernel Density Estimators

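As with SVMs, the gradient of kernel density estimators depends on the gradient of its kernel. We considered generalized RBF kernels of the form

\[k\left (\frac{\mathbf{x} -\mathbf{x}_{i}}{h}\right ) =\exp \left (-\frac{d(\mathbf{x},\mathbf{x}_{i})}{h}\right ),\]

where \(d(\cdot,\cdot )\) is any suitable distance function. We used here the same distance \(d(\cdot,\cdot )\) used in Eq. (4.1), but they can be different, in general. For ℓ2- and ℓ1-norms (i.e., RBF and Laplacian kernels), the KDE (sub)gradients are, respectively, given by:

\[\nabla p(\mathbf{x}\vert f_{\mathbf{x}} = -1) = -\frac{2}{nh}\sum _{i\vert f_{i}=-1}\exp \left (-\frac{\|\mathbf{x} -\mathbf{x}_{i}\|_{2}^{2}}{h}\right )(\mathbf{x} -\mathbf{x}_{i}),\]

\[\nabla p(\mathbf{x}\vert f_{\mathbf{x}} = -1) = -\frac{1}{nh}\sum _{i\vert f_{i}=-1}\exp \left (-\frac{\|\mathbf{x} -\mathbf{x}_{i}\|_{1}}{h}\right )\mathrm{sign}(\mathbf{x} -\mathbf{x}_{i}).\]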

Note that the scaling factor here is \(O( \frac{1} {nh})\). Therefore, to influence gradient descent with a significant mimicking effect, the value of λ in the objective function should be chosen such that the value of \(\frac{\lambda }{nh}\) is comparable to (or higher than) the range of the discriminant function \(\hat{g}(\mathbf{x})\).
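A short sketch of the KDE (sub)gradient may help make the \(\frac{1}{nh}\) scaling concrete. It assumes a kernel of the form \(\exp (-d(\mathbf{x},\mathbf{x}_{i})/h)\), with d either the Manhattan distance (Laplacian kernel) or the squared Euclidean distance (RBF kernel); the function names `kde_value` and `kde_gradient` are hypothetical.

```python
import numpy as np

def kde_value(x, benign, h, norm="l1"):
    """p(x | f_x = -1) estimated as (1/n) * sum_i exp(-d(x, x_i) / h)."""
    diff = x - benign
    if norm == "l1":
        dist = np.abs(diff).sum(axis=1)            # Manhattan distance
    elif norm == "l2":
        dist = (diff ** 2).sum(axis=1)             # squared Euclidean distance
    else:
        raise ValueError(f"unknown norm: {norm}")
    return float(np.exp(-dist / h).mean())

def kde_gradient(x, benign, h, norm="l1"):
    """(Sub)gradient of kde_value with respect to x; note the 1/(n*h) factor."""
    diff = x - benign
    n = benign.shape[0]
    if norm == "l1":
        k = np.exp(-np.abs(diff).sum(axis=1) / h)
        return -(k[:, None] * np.sign(diff)).sum(axis=0) / (n * h)
    if norm == "l2":
        k = np.exp(-(diff ** 2).sum(axis=1) / h)
        return -2.0 * (k[:, None] * diff).sum(axis=0) / (n * h)
    raise ValueError(f"unknown norm: {norm}")
```

Because every term is divided by n·h, the density gradient is typically much smaller than ∇g unless λ is scaled up accordingly, which is the point made in the paragraph above.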

4.4.2.3 Gradient-Descent Attack in Discrete Spaces

In discrete spaces, gradient approaches may lead to a path through infeasible portions of the feature space. In such cases, we need to find feasible neighbors of \(\mathbf{x}\) that yield a steepest descent; i.e., maximally decreasing \(E(\mathbf{x})\). A simple approach to this problem is to probe E at every point in a small neighborhood of \(\mathbf{x}\): \({\mathbf{x}}^{{\prime}} \leftarrow \arg \min _{\mathbf{z}\in \mathcal{N}(\mathbf{x})}E(\mathbf{z})\). However, this approach requires a large number of queries. For classifiers with a differentiable decision function, we can instead use the neighbor whose difference from \(\mathbf{x}\) best aligns with \(\nabla E(\mathbf{x})\); i.e., the update becomes

\[{\mathbf{x}}^{{\prime}}\leftarrow \arg \max _{\mathbf{z}\in \mathcal{N}(\mathbf{x})}\frac{{(\mathbf{x} -\mathbf{z})}^{\top }} {\|\mathbf{x} -\mathbf{z}\|} \nabla E(\mathbf{x}).\]

Thus, the solution to the above alignment is simply to modify a feature that satisfies \(\arg \max _{i}\vert \nabla E(\mathbf{x})_{i}\vert \) for which the corresponding change leads to a feasible state. Note, however, that such a step may sometimes be quite large and may lead the attack out of a local minimum, potentially increasing the objective function. Therefore, one should consider the best alignment that effectively reduces the objective function by disregarding features that lead to states where the objective function is higher.
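One possible reading of this discrete update is sketched below: features are tried in order of decreasing \(\vert \nabla E(\mathbf{x})_{i}\vert \), each is moved by one unit against the sign of its gradient component, and the first neighbor that is both feasible and lowers E is accepted. The unit step, the function name `discrete_descent_step`, and the `is_feasible` callback are assumptions of this sketch.

```python
import numpy as np

def discrete_descent_step(x, objective, gradient, is_feasible, step=1):
    """Return a feasible neighbour of x that lowers E, or x itself if none exists.

    Features are visited from largest to smallest |dE/dx_i|; candidates that are
    infeasible or that would increase the objective are skipped.
    """
    grad = gradient(x)
    e_current = objective(x)
    for i in np.argsort(-np.abs(grad)):
        if grad[i] == 0:
            break                                   # remaining features cannot help
        z = x.copy()
        z[i] = z[i] - step * int(np.sign(grad[i]))  # move against the gradient
        if is_feasible(z) and objective(z) < e_current:
            return z
    return x
```

Calling this function repeatedly until it returns its input unchanged gives a simple coordinate-wise descent over the discrete feature space.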

4.4.3 Experiments

In this section, we first report some experimental results on the MNIST handwritten digit classification task [32, 45] that visually demonstrate how the proposed algorithm modifies digits to mislead classification. This dataset is particularly useful because the visual nature of the handwritten digit data provides a semantic meaning for attacks. We then show the effectiveness of the proposed attack on a more realistic and practical scenario: the detection of malware in PDF files.

4.4.3.1 Handwritten Digits

We first focus on a two-class sub-problem of discriminating between two distinct digits from the MNIST dataset [45]. Each digit example is represented as a grayscale image of 28 × 28 pixels arranged in raster-scan order to give feature vectors of d = 28 × 28 = 784 values. We normalized each feature (pixel) \(x_{f} \in [0,1]\) by dividing its value by 255, and we constrained the attack samples to this range. Accordingly, we optimized Eq. (4.1) subject to \(0 \leq x_{f} \leq 1\) for all f.

For our attacker, we assume the perfect knowledge (PK) attack scenario. We used the Manhattan distance (ℓ1-norm) as the distance function, d, both for the kernel density estimator (i.e., a Laplacian kernel) and for the constraint \(d(\mathbf{x},{\mathbf{x}}^{0}) \leq d_{\mathrm{max}}\) of Eq. (4.1), which bounds the total difference between the gray-level values of the original image \({\mathbf{x}}^{0}\) and the attack image \(\mathbf{x}\). We used an upper bound of \(d_{\mathrm{max}} = \frac{5000} {255}\) to limit the total change in the gray-level values to 5000. At each iteration, we increased the ℓ1-norm value of \(\mathbf{x} -{\mathbf{x}}^{0}\) by \(\frac{10} {255}\), which is equivalent to increasing the difference in the gray-level values by 10. This is effectively the gradient step size.
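As a quick check on these settings (simple arithmetic on the values quoted above, assuming every step moves away from \({\mathbf{x}}^{0}\) and no pixel is clipped to [0, 1]), the number of steps needed to exhaust the distance budget is

\[\frac{d_{\mathrm{max}}}{\text{step}} = \frac{5000/255}{10/255} = 500,\qquad d_{\mathrm{max}} \approx 19.61,\qquad \text{step} \approx 0.0392.\]

Under that assumption, the constraint \(d(\mathbf{x},{\mathbf{x}}^{0}) \leq d_{\mathrm{max}}\) becomes active only at the 500th iteration.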

For the digit discrimination task, we applied an SVM with the linear kernel and C = 1. We randomly chose 100 training samples and applied the attacks to a correctly classified positive sample.

Illustration of the gradient attack on the MNIST digit data, for λ = 0 (top row) and λ = 10 (bottom row). Without a mimicry component (λ = 0) gradient descent quickly decreases g but the resulting attack image does not resemble a “7.” In contrast, the attack minimizes g slower when mimicry is applied (λ = 10), but the final attack image closely resembles a mixture between “3” and “7,” as the term “mimicry” suggests

In Fig. 4.5 we illustrate gradient attacks in which a “3” is to be misclassified as a “7.” The left image shows the initial attack point, the middle image shows the first attack image misclassified as legitimate, and the right image shows the attack point after 500 iterations. When λ = 0, the attack images exhibit only a weak resemblance to the target class “7” but are, nevertheless, reliably misclassified. This is the same effect we observed in the left plot of Fig. 4.4: the classifier is evaded by making the attack sample dissimilar to the malicious class. Conversely, when λ = 10 the attack images strongly resemble the target class because the mimicry term favors samples that are more similar to the target examples. This is the same effect illustrated in the rightmost plot of Fig. 4.4.

4.4.3.2 Malware Detection in PDF Files

We focus now on the problem of discriminating between legitimate and malicious PDF files, a popular medium for disseminating malware [68]. PDF files are excellent vectors for malicious code, due to their flexible logical structure, which can be described by a hierarchy of interconnected objects. As a result, an attack can be easily hidden in a PDF to circumvent file-type filtering. The PDF format further allows a wide variety of resources to be embedded in the document including JavaScript, Flash, and even binary programs. The type of the embedded object is specified by keywords, and its content is in a data stream. Several recent works have proposed machine-learning techniques for detecting malicious PDFs that use the file’s logical structure to accurately identify the malware [50, 63, 64]. In this case study, we use the feature representation of Maiorca et al. [50] in which each feature corresponds to the tally of occurrences of a given keyword in the PDF file. Similar feature representations were also exploited in [63, 64].

The PDF application imposes natural constraints on attacks. Although it is difficult to remove an embedded object (and its corresponding keywords) without corrupting the PDF’s file structure, it is rather easy to insert new objects (and, thus, keywords) through the addition of a new version to the PDF file [1, 49]. In our feature representation, this is equivalent to allowing only feature increments; i.e., requiring \({\mathbf{x}}^{0} \leq \mathbf{x}\) as an additional constraint in the optimization problem given by Eq. (4.1). Further, the total difference in keyword counts between two samples is their Manhattan distance, which we again use for the kernel density estimator and the constraint in Eq. (4.1). Accordingly, \(d_{\mathrm{max}}\) is the maximum number of additional keywords that an attacker can add to the original \({\mathbf{x}}^{0}\).
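The constraints just described can be expressed as a small feasibility test on keyword-count vectors. This is a sketch under assumptions: the function name `is_feasible_pdf_attack` is hypothetical, and the optional per-feature cap `max_count` is an illustrative parameter rather than part of the constraint set stated above.

```python
import numpy as np

def is_feasible_pdf_attack(x, x0, d_max, max_count=None):
    """Check that x is reachable from x0 by keyword insertions only.

    x, x0     : keyword-count vectors of the attack and original sample
    d_max     : maximum number of keywords that may be added in total
    max_count : optional upper bound on each count (illustrative parameter)
    """
    x = np.asarray(x)
    x0 = np.asarray(x0)
    only_increments = np.all(x >= x0)                    # keywords cannot be removed
    within_budget = np.sum(np.abs(x - x0)) <= d_max      # Manhattan distance bound
    within_cap = True if max_count is None else np.all(x <= max_count)
    return bool(only_increments and within_budget and within_cap)
```

Such a predicate can serve as the feasibility check when searching over neighboring feature vectors during the attack.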

Experimental Setup. For experiments, we used a PDF corpus with 500 malicious samples from the Contagio dataset (see Footnote 4) and 500 benign samples collected from the web. We randomly split the data into five pairs of training and testing sets with 500 samples each to average the final results. The features (keywords) were extracted from each training set as described in [50]; on average, 100 keywords were found in each run. Further, we also bounded the maximum value of each feature (keyword count) to 100, as this value was found to be close to the 95th percentile for each feature. This limited the influence of outlying samples having very high feature values.

We simulated the perfect knowledge (PK) and the limited knowledge (LK) scenarios described in Sect. 4.4.1.2. In the LK case, we set the number of samples used to learn the surrogate classifier to \(n_{q} = 100\). The reason is to demonstrate that even with a dataset as small as 20 % of the original training set size, the adversary may be able to evade the targeted classifier with high reliability. Further, we assumed that the adversary uses feedback from the targeted classifier f; i.e., the labels \(\hat{y}_{i} = f_{i} = f(\mathbf{x}_{i})\) for each surrogate sample \(\mathbf{x}_{i}\). Similar results were also obtained using the true labels (without relabeling), since the targeted classifiers correctly classified almost all samples in the test set.

As discussed in Sect. 4.4.2.2, the value of λ is chosen according to the scale of the discriminant function g(x), the bandwidth parameter h of the kernel density estimator, and the number of samples n labeled as legitimate in the surrogate training set. For computational reasons, to estimate the value of the KDE at a given point x in the feature space, we only consider the 50 nearest (legitimate) training samples to x; therefore, n ≤ 50 in our case. The bandwidth parameter was set to h = 10, as this value provided a proper rescaling of the Manhattan distances observed in our dataset for the KDE. We thus set λ = 500 to be comparable with O(nh).
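To make the last step explicit (plain arithmetic on the values stated above, taking n at its maximum of 50), the effective weight of the mimicry gradient is

\[\frac{\lambda }{nh} = \frac{500}{50 \times 10} = 1.\]

For smaller n the ratio grows accordingly, so the mimicry term is of order one or larger; how this compares with the range of \(\hat{g}(\mathbf{x})\) depends on the trained classifier.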

For each targeted classifier and training/testing pair, we learned five different surrogate classifiers by randomly selecting \(n_{q}\) samples from the test set and averaged their results. For SVMs, we sought a surrogate classifier that would correctly match the labels from the targeted classifier; thus, we used parameters C = 100 and γ = 0.1 (for the RBF kernel) to heavily penalize training errors.
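The construction of a surrogate training set from classifier feedback can be sketched as follows. This is an illustration only: `build_surrogate_set` and the `target_classifier` callable are hypothetical names, and the sketch covers just the sampling and relabeling step, not the SVM training itself.

```python
import random


def build_surrogate_set(test_samples, target_classifier, n_q=100, seed=0):
    """Draw n_q samples at random and label them by querying the target.

    `target_classifier` is any callable returning a label for a sample;
    it stands in for the feedback f(x_i) from the targeted classifier.
    """
    rng = random.Random(seed)
    chosen = rng.sample(test_samples, n_q)
    return [(x, target_classifier(x)) for x in chosen]
```

Calling the function with five different seeds would give five surrogate sets per training/testing pair, mirroring the repetition scheme described above.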

Experimental Results. We report our results in Fig. 4.6, in terms of the false negative (FN) rate attained by the targeted classifiers as a function of the maximum allowable number of modifications, \(d_{\max} \in [0, 50]\). We compute the FN rate corresponding to a fixed false positive (FP) rate of FP = 0.5 %. For \(d_{\max} = 0\), the FN rate corresponds to a standard performance evaluation using unmodified PDFs. As expected, the FN rate increases with \(d_{\max}\) as the PDF is increasingly modified, since the adversary has more flexibility in his/her attack. Accordingly, a more secure classifier will exhibit a more graceful increase of the FN rate.
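Measuring the FN rate at a fixed FP rate amounts to choosing the decision threshold on benign scores first. A minimal sketch follows, assuming that a sample is flagged as malicious when its score strictly exceeds the threshold; the function name and this tie-handling convention are assumptions, not details given in the text.

```python
import math


def fn_rate_at_fp(benign_scores, malicious_scores, max_fp=0.005):
    """Return (threshold, FN rate) at an FP rate of at most `max_fp`.

    Assumption: a sample is flagged as malicious if score > threshold.
    """
    ranked = sorted(benign_scores, reverse=True)
    # Number of benign samples we may misclassify (small epsilon guards
    # against floating-point round-off in the product).
    allowed = math.floor(max_fp * len(ranked) + 1e-9)
    threshold = ranked[allowed]
    missed = sum(1 for s in malicious_scores if s <= threshold)
    return threshold, missed / len(malicious_scores)
```

Repeating this computation on the attack samples obtained for each value of the modification budget would give one security evaluation curve.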

Fig. 4.6 Experimental results for SVMs with the linear and the RBF kernel (first and second columns). We report the FN values (attained at FP = 0.5 %) for increasing \(d_{\max}\). For the sake of readability, we report the average FN value ± half standard deviation (shown with error bars). Results for perfect knowledge (PK) attacks with λ = 0 (without mimicry) are shown in the first row, for different values of the considered classifier parameters, i.e., the regularization parameter C for the linear SVM, and the kernel parameter γ for the SVM with RBF kernel (with C = 1). Results for limited knowledge (LK) attacks with λ = 0 (without mimicry) are shown in the second row for the linear SVM (for varying C), and the SVM with RBF kernel (for varying γ, with C = 1). Results for perfect (PK) and limited knowledge (LK) with λ = 500 (with mimicry) are shown in the third row for the linear SVM (with C = 1), and the SVM with RBF kernel (with γ = 1 and C = 1).

Results for λ = 0. We first investigate the effect of the proposed attack in the PK case, without considering the mimicry component (Fig. 4.6, top row), for varying parameters of the considered classifiers. The linear SVM (Fig. 4.6, top-left plot) is almost always evaded with as few as 5–10 modifications, independent of the regularization parameter C. It is worth noting that attacking a linear classifier amounts to always incrementing the value of the same highest-weighted feature (corresponding to the /Linearized keyword in the majority of the cases) until it is bounded. This continues with the next highest-weighted non-bounded feature until termination. This occurs simply because the gradient of g(x) does not depend on x for a linear classifier (see Sect. 4.4.2.1). With the RBF kernel (Fig. 4.6, top-right plot), SVMs exhibit a similar behavior with C = 1 and various values of its γ parameter (see footnote 5), and the RBF SVM provides a higher degree of security compared to linear SVMs (cf. top-left plot and middle-left plot in Fig. 4.6).
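The behavior of the attack against a linear classifier can be sketched as a greedy procedure. The sketch assumes g(x) = w·x + b with samples classified as malicious when g(x) ≥ 0, unit increments, and a common upper bound on every feature; these conventions and the function names are assumptions made for illustration.

```python
def g_linear(x, w, b):
    """Linear discriminant function g(x) = w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def evade_linear(x, w, b, upper=100, d_max=50):
    """Greedy feature-addition attack (assumes malicious iff g(x) >= 0).

    The gradient of g is the constant vector w, so the best single step
    is always to increment the feature with the most negative weight
    until it hits `upper`, and then move on to the next one.
    """
    x = list(x)
    order = sorted((j for j in range(len(w)) if w[j] < 0), key=lambda j: w[j])
    changes = 0
    for j in order:
        while x[j] < upper and changes < d_max and g_linear(x, w, b) >= 0:
            x[j] += 1
            changes += 1
    return x, changes
```

For example, with the hypothetical weights w = [-0.5, 0.3, -0.2], b = 1.0 and start point [0, 2, 0], only the first feature is incremented, because it has the most negative weight and does not reach its bound before g(x) becomes negative.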

In the LK case, without mimicry (Fig. 4.6, middle row), classifiers are evaded with a probability only slightly lower than that found in the PK case, even when only \(n_{q} = 100\) surrogate samples are used to learn the surrogate classifier. This aspect highlights the threat posed by a skilled adversary with incomplete knowledge: only a small set of samples may be required to successfully attack the target classifier using the proposed algorithm.

Results for λ = 500. When mimicry is used (Fig. 4.6, bottom row), the success of the evasion of linear SVMs (with C = 1) decreases both in the PK (e.g., compare the blue curve in the top-left plot with the solid blue curve in the bottom-left plot) and in the LK case (e.g., compare the blue curve in the middle-left plot with the dashed blue curve in the bottom-left plot). The reason is that the computed direction tends to lead to a slower descent; i.e., a less direct path that often requires more modifications to evade the classifier. In the nonlinear case (Fig. 4.6, bottom-right plot), instead, mimicking exhibits some beneficial aspects for the attacker, although the constraint on feature addition may make it difficult to properly mimic legitimate samples. In particular, note how the targeted SVMs with RBF kernel (with C = 1 and γ = 1) in the PK case (e.g., compare the blue curve in the top-right plot with the solid blue curve in the bottom-right plot) are evaded with a significantly higher probability than in the case when λ = 0. The reason is that a pure descent strategy on g(x) may find local minima (i.e., attack samples) that do not evade detection, while the mimicry component biases the descent towards regions of the feature space more densely populated by legitimate samples, where g(x) eventually attains lower values. In the LK case (e.g., compare the blue curve in the middle-right plot with the dashed blue curve in the bottom-right plot), however, mimicking does not exhibit significant improvements.

Analysis. Our attacks raise questions about the feasibility of detecting malicious PDFs solely based on logical structure. We found that /Linearized, /OpenAction, /Comment, /Root and /PageLayout were among the most commonly manipulated keywords. They indeed are found mainly in legitimate PDFs, but can be easily added to malicious PDFs by the versioning mechanism. The attacker can simply insert comments inside the malicious PDF file to augment its /Comment count. Similarly, he/she can embed legitimate OpenAction code to add /OpenAction keywords or he/she can add new pages to insert /PageLayout keywords.

In summary, our analysis shows that even detection systems that accurately classify non-malicious data can be significantly degraded with only a few malicious modifications. This aspect highlights the importance of developing detection systems that are accurate, but also designed to be robust against adversarial manipulation of attack instances.

4.4.4 Discussion

In this section, we proposed a simple algorithm that allows for evasion of SVMs with differentiable kernels, and, more generally, of any classifier with a differentiable discriminant function. We investigated the attack effectiveness in the case of perfect knowledge of the attacked system. Further, we empirically showed that SVMs can still be evaded with high probability even if the adversary can only learn a classifier’s copy on surrogate data (limited knowledge). We believe that the proposed attack formulation can easily be extended to classifiers with non-differentiable discriminant functions as well, such as decision trees and k-nearest neighbors.

Our analysis also suggests some ideas for improving classifier security. In particular, when the classifier tightly encloses the legitimate samples, the adversary must increasingly mimic the legitimate class to evade (see Fig. 4.4), and this may not always be possible; e.g., malicious network packets or PDF files still need to embed a valid exploit, and some features may be immutable. Accordingly, a guideline for designing secure classifiers is that learning should encourage a tight enclosure of the legitimate class; e.g., by using a regularizer that penalizes classifying “blind spots” (regions with low p(x)) as legitimate. Generative classifiers can be modified by explicitly modeling the attack distribution, as in [11], and discriminative classifiers can be modified similarly by adding generated attack samples to the training set. However, these security improvements may incur higher FP rates.

In the above applications, the feature representations were invertible; i.e., there is a direct mapping from the feature vectors x to a corresponding real-world sample (e.g., a spam email, or PDF file). However, some feature mappings cannot be trivially inverted; e.g., n-gram analysis [31]. In these cases, one may modify the real-world object corresponding to the initial attack point at each step of the gradient descent to obtain a sample in the feature space that is as close as possible to the sample that would be obtained at the next attack iteration. A similar technique has already been exploited to address the pre-image problem of kernel methods [14].

Other interesting extensions include (1) considering more effective strategies such as those proposed by [46, 54] to build a small but representative set of surrogate data to learn the surrogate classifier and (2) improving the classifier estimate \(\hat{g}(\mathbf{x})\); e.g., using an ensemble technique like bagging to average several classifiers [16].

4.5 Poisoning Attacks Against SVMs

In the previous section, we devised a simple algorithm that allows for evasion of classifiers at test time and showed experimentally how it can be exploited to evade detection by SVMs and kernel-based classification techniques. Here we present another kind of attack, based on our work in [14]. Its goal is to force the attacked SVM to misclassify as many samples as possible at test time through poisoning of the training data, that is, by injecting well-crafted attack samples into the training set. Note that, in this case, the test data is assumed not to be manipulated by the attacker.

Poisoning attacks are staged during classifier training, and they can thus target adaptive or online classifiers, as well as classifiers that are being re-trained on data collected during test time, especially if in an unsupervised or semi-supervised manner. Examples of these attacks, besides our work [14], can be found in [7, 9, 13, 39, 40, 53, 59]. They include specific application examples in different areas, such as intrusion detection in computer networks [7, 39, 40, 59], spam filtering [7, 53], and, most recently, even biometric authentication [9, 13].

In this section, we follow the same structure as Sect. 4.4. In Sect. 4.5.1, we define the adversary model according to our framework; then, in Sects. 4.5.1.4 and 4.5.2 we, respectively, derive the optimal poisoning attack and the corresponding algorithm; and, finally, in Sects. 4.5.3 and 4.5.4 we report our experimental findings and discuss the results.

4.5.1 Modeling the Adversary

Here, we apply our framework to evaluate security against poisoning attacks. As with the evasion attacks in Sect. 4.4.1, we model the attack scenario and derive the corresponding optimal attack strategy for poisoning.

Notation. In the following, we assume that an SVM has been trained on a dataset \(\mathcal{D}_{\text{tr}} =\{ \mathbf{x}_{i},y_{i}\}_{i=1}^{n}\) with \(\mathbf{x}_{i} \in {\mathbb{R}}^{d}\) and \(y_{i} \in \{-1,+1\}\). The matrix of kernel values between two sets of points is denoted with K, while \(\mathbf{Q} = \mathbf{K} \circ y{y}^{\top }\) denotes its label-annotated version, and α denotes the SVM’s dual variables corresponding to each training point. Depending on the value of \(\alpha_{i}\), the training points are referred to as margin support vectors (\(0 < \alpha_{i} < C\), set \(\mathcal{S}\)), error support vectors (\(\alpha_{i} = C\), set \(\mathcal{E}\)), or reserve vectors (\(\alpha_{i} = 0\), set \(\mathcal{R}\)). In the sequel, the lowercase letters s, e, r are used to index the corresponding parts of vectors or matrices; e.g., \(\mathbf{Q}_{ss}\) denotes the sub-matrix of Q corresponding to the margin support vectors.
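The notation above can be illustrated with a short sketch that partitions the training points by the value of their dual variable and builds the label-annotated kernel matrix. The function names and the numerical tolerance `tol` are assumptions for illustration; the sketch handles only the square case in which K holds kernel values among the training points.

```python
def partition_by_alpha(alpha, C, tol=1e-8):
    """Split training indices into margin SVs (S), error SVs (E), reserve (R)."""
    S, E, R = [], [], []
    for i, a in enumerate(alpha):
        if a <= tol:
            R.append(i)          # alpha_i = 0: reserve vector
        elif a >= C - tol:
            E.append(i)          # alpha_i = C: error support vector
        else:
            S.append(i)          # 0 < alpha_i < C: margin support vector
    return S, E, R


def label_annotated(K, y):
    """Q = K o (y y^T): element-wise product of K with the label outer product."""
    n = len(y)
    return [[K[i][j] * y[i] * y[j] for j in range(n)] for i in range(n)]


def submatrix(Q, rows, cols):
    """Extract a block such as Q_ss = submatrix(Q, S, S)."""
    return [[Q[i][j] for j in cols] for i in rows]
```

With these helpers, `submatrix(Q, S, S)` corresponds to \(\mathbf{Q}_{ss}\) in the notation above.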

4.5.1.1 Adversary’s Goal

For a poisoning attack, the attacker’s goal is to find a set of points whose addition to \(\mathcal{D}_{\text{tr}}\) maximally decreases the SVM’s classification accuracy. For simplicity, we start by considering the addition of a single attack point \(({\mathbf{x}}^{{\ast}},{y}^{{\ast}})\). The choice of its label \({y}^{{\ast}}\) is arbitrary but fixed. We refer to the class of this chosen label as the attacking class and the other as the attacked class.

4.5.1.2 Adversary’s Knowledge

According to Sect. 4.3.1.2, we assume that the adversary knows the training samples (k.i), the feature representation (k.ii), that an SVM learning algorithm is used (k.iii) and the learned SVM’s parameters (k.iv), since they can be inferred by the adversary by solving the SVM learning problem on the known training set. Finally, we assume that no feedback is exploited by the adversary (k.v).

These assumptions amount to considering a worst-case analysis that allows us to compute the maximum error rate that the adversary can inflict through poisoning. This is indeed useful to check whether and under what circumstances poisoning may be a relevant threat for SVMs.

Although having perfect knowledge of the training data is very difficult in practice for an adversary, collecting a surrogate dataset sampled from the same distribution may not be that complicated; for instance, in network intrusion detection an attacker may easily sniff network packets to build a surrogate learning model, which can then be poisoned under the perfect knowledge setting. The analysis of this limited knowledge poisoning scenario is, however, left to future work.

4.5.1.3 Adversary’s Capability

According to Sect. 4.3.1.3, we assume that the attacker can manipulate only training data (c.i), can manipulate the class prior and the class-conditional distribution of the attack point’s class \({y}^{{\ast}}\) by essentially adding a number of attack points of that class into the training data, one at a time (c.ii-iii), and can alter the feature values of the attack sample within some lower and upper bounds (c.iv). In particular, we will constrain the attack point to lie within a box, that is \(\mathbf{x}_{\mathrm{lb}} \leq \mathbf{x} \leq \mathbf{x}_{\mathrm{ub}}\).

4.5.1.4 Attack Strategy

Under the above assumptions, the optimal attack strategy amounts to solving the following optimization problem:

where the hinge loss has to be maximized on a separate validation set \(\mathcal{D}_{\text{val}} =\{ \mathbf{x}_{k},y_{k}\}_{k=1}^{m}\) to avoid considering a further regularization term in the objective function. The reason is that the attacker aims to maximize the SVM generalization error and not only its empirical estimate on the training data.
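Based on the description above (hinge loss summed over the validation set, with the attack point constrained to the box of Sect. 4.5.1.3), the problem can be sketched in the following form. The symbols \(P\), \(f_{{\mathbf{x}}^{{\ast}}}\) and \((\cdot)_{+}\) are notation assumed here for illustration: \(f_{{\mathbf{x}}^{{\ast}}}\) stands for the discriminant function of the SVM trained on \(\mathcal{D}_{\text{tr}} \cup \{({\mathbf{x}}^{{\ast}},{y}^{{\ast}})\}\), and \((a)_{+} = \max(0, a)\).

\[
\max_{{\mathbf{x}}^{{\ast}}} \; P({\mathbf{x}}^{{\ast}}) = \sum_{k=1}^{m} \bigl(1 - y_{k}\, f_{{\mathbf{x}}^{{\ast}}}(\mathbf{x}_{k})\bigr)_{+}
\quad \text{s.t.} \quad \mathbf{x}_{\mathrm{lb}} \leq {\mathbf{x}}^{{\ast}} \leq \mathbf{x}_{\mathrm{ub}}
\]

Each term is zero for a validation sample that is classified correctly with a margin of at least one and grows linearly as the sample moves to the wrong side of the decision boundary, so maximizing the sum pushes as many validation samples as possible toward misclassification.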

4.5.2 Poisoning Attack Algorithm