Abstract

Research in cognitive neuroscience has found distinct emotion-induced cognitive differences between the left and right hemispheres of the brain. In this work, we follow up on this idea by using the Phase-Locking Value (PLV) to investigate EEG-based hemispherical brain connections for the emotion recognition task. PLV features are extracted for two scenarios, within-hemisphere and cross-hemisphere, and are then selected using the maximum relevance-minimum redundancy (mRmR) and chi-square test mechanisms. Using machine learning (ML) classifiers, we evaluate the dimensional model of emotions through binary classification on the valence, arousal, and dominance scales across four frequency bands (theta, alpha, beta, and gamma). The highest accuracies are achieved for the gamma band with mRmR feature selection. The KNN classifier is the most effective of the ML classifiers at this task, achieving the best accuracies of 79.4%, 79.6%, and 79.1% with cross-hemisphere PLVs for valence, arousal, and dominance, respectively. Additionally, we find that cross-hemispherical connections predict emotions slightly better than within-hemispherical ones.

1 Introduction

Emotions play a fundamental role in human communication and interaction, significantly influencing behavior, decision-making, and overall well-being. Accurately detecting and interpreting human emotions has far-reaching applications, ranging from affective computing to mental health monitoring and human-robot interaction. Among the modalities used for emotion analysis, electroencephalography (EEG) stands out as a promising technique for capturing the neural correlates of emotions. EEG offers several advantages for emotion recognition: it is non-invasive and relatively affordable, and its high temporal resolution allows rapid changes in emotional state to be examined precisely. Moreover, EEG captures brain activity associated with both conscious and unconscious emotional processes, offering a comprehensive perspective on emotional experience. Physiological signals from the Autonomic Nervous System (such as heart rate and galvanic skin response) are vulnerable to noise, whereas signals recorded directly from the Central Nervous System, such as EEG, capture emotional experience at its origin. This has sparked extensive research on EEG-based emotion recognition, aiming to harness EEG signals to advance our understanding of emotions and to enable practical applications [10]. Machine learning and pattern recognition techniques make it possible to translate complex EEG data into meaningful emotional states, bridging the gap between neuroscience and technology.

Research in this area has centered on two widely accepted emotion models: discrete and dimensional. The discrete approach categorizes emotions into six basic emotions: sadness, joy, surprise, anger, disgust, and fear. The dimensional approach instead describes emotions along multiple dimensions (valence, arousal, and dominance). Valence is the degree of positivity or negativity an individual experiences, arousal reflects the degree of emotional excitement versus indifference, and dominance spans a range from submissive (lack of control) to dominant (assertive). In practice, emotion recognition relies predominantly on the dimensional approach because it is simpler than the detailed description of discrete basic emotions [13] and also allows quantitative analysis. This work adopts the dimensional model.

Emotion recognition from EEG involves extracting relevant time- or frequency-domain features from responses to stimuli that evoke different emotions. A common limitation of existing methods, however, is that univariate feature extraction ignores the spatial correlation between EEG electrodes. EEG brain network analysis is a valuable way to address this: each EEG channel serves as a node, and the connections between nodes are edges. Brain connectivity encompasses functional connectivity and effective connectivity [1, 2]. Moreover, findings in cognitive neuroscience have provided evidence for structural and functional dissimilarities between the brain hemispheres [5].

To address this limitation and leverage hemispherical functional brain connections for emotion recognition, we use the phase-locking value (PLV) method [9], which enables the investigation of task-induced changes in long-range synchronization of neural activity in EEG data.

Based on this, we investigate connections both within each hemisphere and across hemispheres. This paper therefore proposes an EEG emotion recognition scheme based on significant PLV features extracted from hemispherical brain regions in the EEG data of the DEAP dataset [7], in order to understand the functional connections within the same hemisphere and across hemispheres. By examining how well various machine learning models recognize human emotions from EEG signals, this work sheds light on which rhythmic EEG bands (alpha, beta, theta, gamma, and all bands) and which hemispherical brain connections (within or cross) are most responsive to emotions and most effective for measuring emotional state.

2 Related Work

Many studies have used the DEAP dataset for emotion recognition. Wang et al. (2018) [14] used an EEG-specific 3-D CNN architecture to extract spatio-temporal emotional features for classification. Chen et al. (2015) [4] used connectivity-based feature representations for valence and arousal classification.

Findings in cognitive neuroscience have provided evidence for structural and functional dissimilarities between the brain hemispheres [5]. Zheng et al. (2015) [17] investigated the emotional cognitive characteristics induced by emotional stimuli and revealed distinct cognitive differences between the left and right hemispheres; their study indicated that the right hemisphere is more sensitive to negative emotions. Similarly, Li et al. (2021) [11] computed differential entropy features for pairs of EEG channels positioned symmetrically in the two hemispheres, and used a bi-hemisphere domain adversarial neural network to learn emotional features separately from each hemisphere.

Analyzing EEG signals from both the left and right hemispheres is therefore important for advancing emotion recognition techniques. Following this line of work, Zhang et al. (2022) [16] focused on hemispheric asymmetry and used cross-frequency Granger causality analysis to extract features from the left and right hemispheres. Their work highlights the value of considering functional connectivity between hemispheres and of leveraging cross-frequency interactions to improve EEG-based emotion recognition.

Wang et al. (2019) [15] used the Phase-Locking Value (PLV) to extract information about functional connections, together with the spatial information of electrodes and brain regions.

3 Proposed Method

3.1 Dataset Description

The DEAP (Database for Emotion Analysis using Physiological Signals) dataset [7] consists of data from 32 participants, who were exposed to 40 one-minute video clips with varying emotional content. These video clips were carefully selected to elicit different emotional states.

The EEG signals, recorded at 512 Hz, are used at a down-sampled rate of 128 Hz in this work. Although each video lasted one minute, recording started 3 s before the video, so each recording is 63 s long. The resulting data therefore have dimensions 32 \(\times \) 40 \(\times \) 32 \(\times \) 8064 (participants \(\times \) videos \(\times \) channels \(\times \) EEG sampling points), since 63 s \(\times \) 128 Hz = 8064 samples.
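The dimensions above follow from simple arithmetic. The sketch below checks the 8064-sample trial length and splits one trial into its baseline and stimulus parts, assuming the 3 s baseline occupies the first 3 \(\times \) 128 samples of each recording. The names `FS` and `trial_layout` are illustrative and not taken from the paper.

```python
FS = 128                      # sampling rate after down-sampling (Hz)
BASELINE_S, VIDEO_S = 3, 60   # pre-stimulus baseline and video duration (s)
N_PARTICIPANTS, N_VIDEOS, N_CHANNELS = 32, 40, 32

def trial_layout(fs=FS, baseline_s=BASELINE_S, video_s=VIDEO_S):
    """Return (total, baseline, stimulus) sample counts for one trial."""
    baseline = baseline_s * fs
    stimulus = video_s * fs
    return baseline + stimulus, baseline, stimulus

total, baseline, stimulus = trial_layout()
data_shape = (N_PARTICIPANTS, N_VIDEOS, N_CHANNELS, total)
print(data_shape)            # (32, 40, 32, 8064)
print(baseline, stimulus)    # 384 7680
```

The 7680 stimulus samples are exactly the 60 s that later steps divide into 6 s windows.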

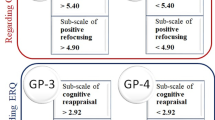

At the end of each trial, the participants self-reported their emotional state as ratings of valence, arousal, dominance, liking, and familiarity. The valence, arousal, and dominance scales range from one (low) to nine (high). To create binary classification tasks, each of these scales is split at a rating of five into a high category (five to nine) and a low category (one to five).
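A minimal sketch of the label binarization, assuming the ratings are held in a NumPy array with one row per trial and columns for valence, arousal, and dominance. Because the two ranges quoted above meet at five, the sketch has to decide where a rating of exactly five goes; here it is assumed to be low, so only ratings strictly above the threshold count as high. The function name `binarize_ratings` is hypothetical.

```python
import numpy as np

def binarize_ratings(ratings, threshold=5.0):
    """Map 1-9 self-assessment ratings to binary labels (1 = high, 0 = low).

    Assumption: a rating equal to the threshold is treated as low.
    """
    ratings = np.asarray(ratings, dtype=float)
    return (ratings > threshold).astype(int)

# Hypothetical ratings for three trials: columns are valence, arousal, dominance.
ratings = np.array([[7.1, 3.0, 5.0],
                    [2.5, 8.2, 6.4],
                    [5.0, 5.1, 1.0]])
labels = binarize_ratings(ratings)
print(labels.tolist())  # [[1, 0, 0], [0, 1, 1], [0, 1, 0]]
```

Each column of `labels` then serves as the target of one of the three binary tasks.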

Figure 1 shows a block diagram of the proposed method, and Algorithms 1 and 2 outline the proposed approach.

3.2 Data Preprocessing

Preprocessing consists of several steps that enhance signal quality and retain the relevant information in the recorded signals, as described below.

First, we apply z-score normalization, which helps eliminate individual variations and biases in the recorded signals. Since recording started 3 s before the video, the signal in the first three seconds is used as the baseline for z-score normalization. For an input signal \(x(i)\), the mean (\(\mu \)) and standard deviation (\(\sigma \)) are computed over the first three seconds (\(N = 3 \times 128 = 384\) samples), and the signal is then normalized as shown in Eq. 1.
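Under the standard z-score definition, the normalization reads \(\hat{x}(i) = (x(i) - \mu )/\sigma \), with \(\mu = \frac{1}{N}\sum _{i=1}^{N} x(i)\) and \(\sigma = \sqrt{\frac{1}{N}\sum _{i=1}^{N} (x(i) - \mu )^2}\) taken over the baseline samples only. The NumPy sketch below implements that reading for a (channels \(\times \) samples) array; the divisor \(N\) (rather than \(N-1\)) and the helper name `baseline_zscore` are assumptions, and the exact form of Eq. 1 may differ.

```python
import numpy as np

FS = 128          # sampling rate (Hz)
BASELINE_S = 3    # pre-stimulus baseline duration (s)

def baseline_zscore(x, fs=FS, baseline_s=BASELINE_S):
    """Z-score each channel using statistics of its first `baseline_s` seconds.

    x: array of shape (..., n_samples); the last axis is time.
    """
    n = baseline_s * fs                                  # N = 3 x 128 = 384 samples
    baseline = x[..., :n]
    mu = baseline.mean(axis=-1, keepdims=True)           # per-channel baseline mean
    sigma = baseline.std(axis=-1, keepdims=True)         # per-channel baseline std (divisor N)
    return (x - mu) / sigma

rng = np.random.default_rng(0)
trial = rng.normal(loc=5.0, scale=2.0, size=(32, 8064))  # synthetic stand-in for one trial
z = baseline_zscore(trial)
```

After this step every channel has zero mean and unit variance over its baseline, while the stimulus portion is expressed relative to that baseline.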

We then apply wavelet-based multiscale PCA [3] to remove noise from the normalized signal. First, the covariance matrix \(C_j\) of the wavelet coefficients at scale \(j\) is computed, as shown in Eq. 2. Eigenvalue decomposition is then performed, and normally only the eigenvectors with the highest eigenvalues are retained. In this work, however, we retain the whole set of principal components instead of a subset.

Finally, the normalized EEG signal is projected at each scale onto the selected eigenvectors \(V_{ij}\) (the eigenvector corresponding to the \(i\)th eigenvalue at scale \(j\)) to obtain the wavelet-based multiscale PCA features, as shown in Eq. 3.
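The per-scale PCA step can be sketched in NumPy as follows. Several details are assumptions not stated in the text: a Haar wavelet (hand-written here so the example needs no wavelet library), four decomposition levels, and a covariance computed across channels at each scale. The helpers `haar_dwt` and `multiscale_pca` are hypothetical. With `n_components=None` every component is kept, as in this work; in that case the projection is an orthogonal change of basis, so the centered coefficients can be recovered exactly from the projected scores.

```python
import numpy as np

def haar_dwt(x, levels):
    """Multilevel Haar DWT along the last axis.

    Returns [d1, d2, ..., dL, aL]: detail coefficients from fine to coarse,
    then the final approximation. The signal length must be divisible by 2**levels.
    """
    coeffs = []
    approx = np.asarray(x, dtype=float)
    for _ in range(levels):
        even, odd = approx[..., 0::2], approx[..., 1::2]
        coeffs.append((even - odd) / np.sqrt(2.0))   # detail coefficients at this scale
        approx = (even + odd) / np.sqrt(2.0)          # approximation passed to the next scale
    coeffs.append(approx)
    return coeffs

def multiscale_pca(eeg, levels=4, n_components=None):
    """PCA across channels of the wavelet coefficients at each scale.

    eeg: array (n_channels, n_samples). n_components=None keeps all components.
    Returns one (eigenvectors V_j, projected scores) pair per scale.
    """
    results = []
    for w in haar_dwt(eeg, levels):
        w_c = w - w.mean(axis=1, keepdims=True)           # center each channel
        cov = w_c @ w_c.T / (w.shape[1] - 1)              # C_j: channel covariance at scale j
        eigvals, eigvecs = np.linalg.eigh(cov)            # eigenvalues in ascending order
        order = np.argsort(eigvals)[::-1][:n_components]  # largest eigenvalues first
        v = eigvecs[:, order]
        results.append((v, v.T @ w_c))                    # project coefficients onto V_j
    return results

rng = np.random.default_rng(0)
eeg = rng.standard_normal((32, 8064))   # synthetic stand-in for one normalized trial
features = multiscale_pca(eeg)          # 4 detail scales + 1 approximation
```

Passing a smaller `n_components` would give the usual subset-based variant that the text mentions before opting for the full set.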

After applying these steps, bandpass filtering is performed on the EEG signals by decomposing them into \(\alpha \) (8–15 Hz), \(\beta \) (16–30 Hz), \(\theta \) (4–7 Hz), and \(\gamma \) (30–45 Hz) bands.
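A possible implementation of the band decomposition with SciPy is sketched below. The filter family and order are assumptions: a Butterworth design with an order-4 prototype (the band-pass transformation doubles it to order 8), applied forward and backward with `sosfiltfilt` for zero phase. The text specifies only the band edges, which are copied into `BANDS`.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 128  # sampling rate (Hz)
# Band edges (Hz) as given in the text.
BANDS = {"theta": (4, 7), "alpha": (8, 15), "beta": (16, 30), "gamma": (30, 45)}

def bandpass(x, low, high, fs=FS, order=4):
    """Zero-phase Butterworth band-pass filter along the last axis."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x, axis=-1)

def decompose_bands(x, fs=FS):
    """Return a dict mapping band name to the band-limited signal."""
    return {name: bandpass(x, lo, hi, fs) for name, (lo, hi) in BANDS.items()}

# Example: a 10 Hz sinusoid should survive in alpha and be strongly attenuated in beta.
t = np.arange(0, 10, 1 / FS)
x = np.sin(2 * np.pi * 10 * t)
bands = decompose_bands(x)
# RMS of each band, ignoring 2 s at each edge to skip filter transients.
rms = {name: np.sqrt(np.mean(sig[2 * FS:-2 * FS] ** 2)) for name, sig in bands.items()}
```

Second-order sections (`output="sos"`) are used because narrow bands such as theta can make the transfer-function form numerically fragile.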

3.3 Feature Extraction

Extracting Phase-Locking Value (PLV) features from each EEG band (\(\alpha \), \(\beta \), \(\theta \), and \(\gamma \)) involves a series of steps that quantify the phase coupling between electrode pairs within each frequency band. The electrodes selected for feature extraction, 14 from the left hemisphere and 14 from the right, are shown in Fig. 2.

The extraction process is as follows. First, the preprocessed EEG signals are segmented into 10 time windows of 6 s each. Next, for each segment, the band-limited EEG signals are passed through the Hilbert transform \(H(x(t))\) to obtain the instantaneous phase, as shown in Eq. 4.
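Segmentation and instantaneous phase can be sketched with NumPy and `scipy.signal.hilbert`, which returns the analytic signal \(x(t) + jH(x(t))\); its angle is the instantaneous phase \(\phi (t) = \arctan \left( H(x(t))/x(t)\right) \), evaluated as a four-quadrant arctangent in \([-\pi , \pi ]\). Since 10 windows of 6 s cover 60 s, the sketch assumes the 3 s baseline has already been dropped from the 63 s recording. The helper names `segment` and `instantaneous_phase` are hypothetical.

```python
import numpy as np
from scipy.signal import hilbert

FS = 128  # sampling rate (Hz)

def segment(x, fs=FS, win_s=6, n_windows=10):
    """Split the last (time) axis into n_windows consecutive windows of win_s seconds."""
    win = int(win_s * fs)                                  # 6 s x 128 Hz = 768 samples
    x = x[..., : win * n_windows]
    return x.reshape(*x.shape[:-1], n_windows, win)

def instantaneous_phase(x):
    """Phase of the analytic signal along the last axis, in radians."""
    return np.angle(hilbert(x, axis=-1))

# Example: 32 band-limited channels, 60 s of stimulus data.
rng = np.random.default_rng(0)
stimulus = rng.standard_normal((32, 60 * FS))
windows = segment(stimulus)                 # shape (32, 10, 768)
phases = instantaneous_phase(windows)       # same shape, values in [-pi, pi]
```

Applying the Hilbert transform per window (rather than once over the whole recording) matches the per-segment description in the text.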

Once the instantaneous phase is obtained, the pairwise phase difference is computed for each electrode pair within the frequency band of interest. Finally, the PLV of each electrode pair is calculated as the absolute value of the average of the complex exponentials of the pairwise phase differences (\(\varDelta \phi _n\)) over the entire segment, as shown in Eq. 5. Examples of PLV features extracted within-hemisphere and cross-hemisphere are shown in Fig. 3.
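In symbols, the standard definition [9] of the PLV over a segment of \(N\) samples is \(\mathrm{PLV} = \frac{1}{N}\left| \sum _{n=1}^{N} e^{j\varDelta \phi _n}\right| \), which lies between 0 (no consistent phase relation) and 1 (constant phase difference). A NumPy sketch with a hypothetical `plv` helper that works on phase arrays such as those produced by a Hilbert-transform step:

```python
import numpy as np

def plv(phase_a, phase_b):
    """Phase-locking value between two phase series along the last axis.

    Averages the unit phasors exp(j * delta_phi) first, then takes the magnitude.
    """
    delta_phi = np.asarray(phase_a) - np.asarray(phase_b)
    return np.abs(np.mean(np.exp(1j * delta_phi), axis=-1))

n = 768                                              # one 6 s window at 128 Hz
rng = np.random.default_rng(0)
base = np.cumsum(rng.normal(0.3, 0.1, n))            # an arbitrary drifting phase
locked = plv(base, base + 0.7)                       # constant lag -> PLV of 1
unlocked = plv(base, rng.uniform(-np.pi, np.pi, n))  # random phases -> PLV near 0
```

The order of operations matters: taking the magnitude of each phasor before averaging would always give 1, so the mean must be taken first.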

Within each frequency band, we calculate PLVs for both the within-hemisphere and cross-hemisphere scenarios, as follows. After removing the four midline electrodes (Fz, Cz, Pz, and Oz), the remaining 28 electrodes are symmetrically placed. To investigate the role of hemispherical functional brain connections, we compute PLVs on these electrodes using two kinds of pairing: (1) within-hemisphere, in which each electrode is paired with every other electrode in the same hemisphere, and (2) cross-hemisphere, in which each electrode in one hemisphere is paired with every electrode in the other hemisphere. The former reflects connections inside each hemisphere, while the latter reflects connections between the left and right hemispheres.

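Since each hemisphere has 14 EEG electrode nodes, the cross-hemisphere case yields 14 \(\times \) 14 = 196 PLV values, while the within-hemisphere case yields 14 \(\times \) 14 \(\times \) 2 = 392 values, counting the full 14 \(\times \) 14 PLV matrix of each hemisphere. Because PLV is symmetric and equals one on the diagonal, 2 \(\times \) 91 = 182 of the within-hemisphere values correspond to distinct electrode pairs.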
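The pair counts can be checked by enumerating the pairs. The sketch below assumes the standard DEAP 10-20 channel labels for the 14 left and 14 right electrodes, listed in homologous order; the actual selection is the one shown in Fig. 2. The helpers `within_pairs` and `cross_pairs` are hypothetical.

```python
from itertools import combinations, product

# Assumed lateral DEAP channels (midline Fz, Cz, Pz, Oz excluded), in homologous order.
LEFT = ["Fp1", "AF3", "F3", "F7", "FC5", "FC1", "C3",
        "T7", "CP5", "CP1", "P3", "P7", "PO3", "O1"]
RIGHT = ["Fp2", "AF4", "F4", "F8", "FC6", "FC2", "C4",
         "T8", "CP6", "CP2", "P4", "P8", "PO4", "O2"]

def within_pairs(full_matrix=True):
    """Electrode pairs inside each hemisphere.

    full_matrix=True: every ordered pair incl. self-pairs (full 14 x 14 matrix per hemisphere).
    full_matrix=False: each unordered pair of distinct electrodes once.
    """
    pairs = []
    for hemi in (LEFT, RIGHT):
        pairs.extend(product(hemi, hemi) if full_matrix else combinations(hemi, 2))
    return pairs

def cross_pairs():
    """Every left electrode paired with every right electrode."""
    return list(product(LEFT, RIGHT))

print(len(within_pairs()), len(within_pairs(full_matrix=False)), len(cross_pairs()))
# 392 182 196
```

Cross-hemisphere pairs need no deduplication, since a left electrode and a right electrode can never form the same pair in two orders within `product(LEFT, RIGHT)`.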

3.4 Feature Selection

We select relevant PLV features for classification using two feature selection methods, mRmR (maximum Relevance-minimum Redundancy) and chi-square, explained as follows. The mRmR algorithm [12] selects features that are highly relevant to the target variable (here, the emotion class label) while minimizing redundancy among the selected features, as shown for a specific feature \(F_i\) in Eq. 6.
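In the common difference form of mRmR [12], a candidate \(F_i\) is scored as \(I(F_i; c) - \frac{1}{|S|}\sum _{F_s \in S} I(F_i; F_s)\), where \(c\) is the class label and \(S\) is the set of features already selected, and features are added greedily. The sketch below follows that form. Estimating mutual information by quantile-binning each feature into 10 levels and using scikit-learn's `mutual_info_score` is an assumption made here, not a detail from the paper; `discretize` and `mrmr_select` are hypothetical helpers.

```python
import numpy as np
from sklearn.metrics import mutual_info_score

def discretize(X, n_bins=10):
    """Quantile-bin each column of X into integer levels 0..n_bins-1."""
    Xd = np.empty(X.shape, dtype=int)
    for j in range(X.shape[1]):
        edges = np.quantile(X[:, j], np.linspace(0, 1, n_bins + 1)[1:-1])
        Xd[:, j] = np.digitize(X[:, j], edges)
    return Xd

def mrmr_select(X, y, k=50, n_bins=10):
    """Greedy mRmR (difference form): maximize relevance minus mean redundancy."""
    Xd = discretize(X, n_bins)
    n_features = Xd.shape[1]
    relevance = np.array([mutual_info_score(y, Xd[:, j]) for j in range(n_features)])
    selected = [int(np.argmax(relevance))]           # start with the most relevant feature
    redundancy_sum = np.zeros(n_features)
    while len(selected) < k:
        last = selected[-1]
        # Accumulate MI between every feature and the most recently selected one.
        redundancy_sum += [mutual_info_score(Xd[:, j], Xd[:, last]) for j in range(n_features)]
        score = relevance - redundancy_sum / len(selected)
        score[selected] = -np.inf                    # never pick a feature twice
        selected.append(int(np.argmax(score)))
    return selected

# Synthetic check: feature 1 duplicates feature 0, feature 2 is pure noise.
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=500)
f0 = y + 0.1 * rng.standard_normal(500)
X = np.column_stack([f0, f0, rng.standard_normal(500)])
print(mrmr_select(X, y, k=2))  # [0, 2]: the redundant duplicate is skipped
```

Keeping a running sum of redundancies means each round computes mutual information only against the newest selected feature, so selecting 50 of 196 PLVs stays inexpensive.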

We also use the chi-square statistic [6] to select the features with the highest statistical significance with respect to the target variable. Its calculation for a specific feature \(F_i\), with \(c\) classes in the target variable and observed and expected frequencies \(O_{ij}\) and \(E_{ij}\), is shown in Eq. 7. We use these methods to identify the fifty most relevant and discriminative PLV features for our task.
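In symbols, \(\chi ^2(F_i) = \sum _{j=1}^{c} \frac{(O_{ij} - E_{ij})^2}{E_{ij}}\). One way to keep the 50 top-scoring features is scikit-learn's `SelectKBest` with `chi2`, sketched below as one possible realization rather than necessarily the authors' implementation. scikit-learn's `chi2` treats non-negative feature values as frequencies, a requirement that PLVs in [0, 1] satisfy. The helper `chi2_select` and the synthetic data are hypothetical.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, chi2

def chi2_select(X, y, k=50):
    """Keep the k features with the highest chi-square scores.

    X must be non-negative (PLVs lie in [0, 1]); returns (selected indices, reduced X).
    """
    selector = SelectKBest(score_func=chi2, k=k).fit(X, y)
    return selector.get_support(indices=True), selector.transform(X)

# Synthetic stand-in: 200 trials x 196 cross-hemisphere PLVs, feature 7 made informative.
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=200)
X = rng.random((200, 196))
X[:, 7] = 0.5 * y + 0.5 * rng.random(200)
idx, X_sel = chi2_select(X, y)
```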

3.5 Classification

PLV features are extracted from each band for both the within-hemisphere and cross-hemisphere scenarios. The fifty most significant features are selected using the mRmR and chi-square methods, and classification is performed on them. We employ several popular machine learning classifiers to learn the underlying patterns and relationships: K-Nearest Neighbors (KNN), Decision Tree, Support Vector Machine (SVM), Random Forest, and AdaBoost. The technical specifications of these models are shown in Table 1. The trained models are evaluated with 10-fold cross-validation, and the mean and standard deviation of the fold accuracies are used as evaluation metrics to compare the models and approaches on the emotion recognition task.
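A sketch of the evaluation loop with scikit-learn, shown for KNN only. The classifier settings of Table 1 are not reproduced here, so `n_neighbors=5`, stratified shuffled folds, and chi-square selection (standing in for either selector) are assumptions. The selector sits inside the pipeline so that it is refitted on each training fold and never sees the held-out fold; that ordering is a choice made in this sketch.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline

def evaluate_knn(X, y, k_features=50, n_neighbors=5, seed=0):
    """10-fold CV accuracy (mean, std) of chi-square selection followed by KNN."""
    model = make_pipeline(
        SelectKBest(score_func=chi2, k=k_features),   # refitted inside each training fold
        KNeighborsClassifier(n_neighbors=n_neighbors),
    )
    cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=seed)
    scores = cross_val_score(model, X, y, cv=cv, scoring="accuracy")
    return scores.mean(), scores.std()

# Synthetic stand-in: 300 trials x 196 PLV features, the first 10 carry the label.
rng = np.random.default_rng(1)
y = rng.integers(0, 2, size=300)
X = rng.random((300, 196))
X[:, :10] = 0.3 * X[:, :10] + 0.7 * y[:, None]
mean_acc, std_acc = evaluate_knn(X, y)
print(f"accuracy: {mean_acc:.3f} +/- {std_acc:.3f}")
```

Swapping `KNeighborsClassifier` for another estimator in `make_pipeline` gives the corresponding evaluation for the other classifiers listed above.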

4 Results and Discussion

Table 2 shows the accuracy of valence classification for within- and cross-hemisphere features. The best valence classification is obtained with gamma-band features selected by mRmR and classified with KNN. PLV features from cross-hemisphere connections perform better (79.4% accuracy) than those from within-hemisphere connections (78.1%).

Table 3 shows the accuracy of arousal classification. Again, gamma-band features with the KNN classifier perform best, here with either mRmR or chi-square selection. Cross-hemisphere PLV features perform slightly better (79.6%) than within-hemisphere features (79.0%).

Table 4 shows the accuracy of dominance classification. Gamma-band features selected by mRmR and classified with KNN perform best, and cross-hemisphere PLV features perform better (79.1%) than within-hemisphere features (77.1%). Compared with state-of-the-art methods (Table 5), our approach achieves higher accuracy.
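To put "slightly" in numbers, the sketch below tabulates the best cross- and within-hemisphere accuracies quoted in this section (gamma band, KNN) and computes the gain in percentage points. It uses only the figures reported above.

```python
# Best accuracies (%) quoted in this section for the gamma band with KNN.
reported = {
    "valence":   {"within": 78.1, "cross": 79.4},
    "arousal":   {"within": 79.0, "cross": 79.6},
    "dominance": {"within": 77.1, "cross": 79.1},
}

# Cross-hemisphere gain over within-hemisphere, in percentage points.
gains = {dim: round(acc["cross"] - acc["within"], 1) for dim, acc in reported.items()}
mean_gain = round(sum(gains.values()) / len(gains), 1)

print(gains)      # {'valence': 1.3, 'arousal': 0.6, 'dominance': 2.0}
print(mean_gain)  # 1.3
```

The gains range from 0.6 to 2.0 percentage points, averaging 1.3.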

Overall, the experimental results show that the gamma band is the most relevant EEG band for emotion recognition and that, among the machine learning classifiers, KNN achieves the best performance on all three ratings. Accuracy also increases slightly when PLV features are taken from cross-hemisphere rather than within-hemisphere connections.

5 Conclusion

In this paper, we have performed emotion recognition based on brain functional connectivity. First, EEG signals are preprocessed and denoised using wavelet-based multiscale PCA. PLV features are then extracted from the processed signals, and mRmR feature selection is applied to examine the performance of within-hemisphere and cross-hemisphere brain connections. The results show that the gamma band is the most effective for the emotion recognition task. The best performance across the three rating dimensions (valence, arousal, and dominance) is achieved with the KNN classifier on cross-hemisphere connections. Although the difference between the two scenarios is very small, we conclude that phase information from cross-hemisphere connections is more reliable than that from within-hemisphere connections. However, connectivity-based features generally require more EEG electrodes to improve emotion recognition performance, which in turn increases the complexity of data acquisition. In future work, we therefore plan to study multivariate phase synchronization, which improves the estimation of region-to-region source-space connectivity with fewer EEG channels while eliminating uninformative electrodes.

References

[1] Cao, J., et al.: Brain functional and effective connectivity based on electroencephalography recordings: a review. Hum. Brain Mapp. 43(2), 860–879 (2021). https://doi.org/10.1002/hbm.25683

[2] Cao, R., et al.: EEG functional connectivity underlying emotional valance and arousal using minimum spanning trees. Front. Neurosci. 14, 355 (2020). https://doi.org/10.3389/fnins.2020.00355

[3] Chavan, A., Kolte, M.: Improved EEG signal processing with wavelet based multiscale PCA algorithm. In: 2015 International Conference on Industrial Instrumentation and Control (ICIC), pp. 1056–1059 (2015). https://doi.org/10.1109/IIC.2015.7150902

[4] Chen, M., Han, J., Guo, L., Wang, J., Patras, I.: Identifying valence and arousal levels via connectivity between EEG channels. In: 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), pp. 63–69 (2015). https://doi.org/10.1109/ACII.2015.7344552

[5] Dimond, S.J., Farrington, L., Johnson, P.: Differing emotional response from right and left hemispheres. Nature 261(5562), 690–692 (1976). https://doi.org/10.1038/261690a0

[6] Pearson, K.: X. On the criterion that a given system of deviations from the probable in the case of a correlated system of variables is such that it can be reasonably supposed to have arisen from random sampling. Lond. Edinburgh Dublin Phil. Mag. J. Sci. 50(302), 157–175 (1900). https://doi.org/10.1080/14786440009463897

[7] Koelstra, S., et al.: DEAP: a database for emotion analysis; using physiological signals. IEEE Trans. Affect. Comput. 3(1), 18–31 (2012). https://doi.org/10.1109/T-AFFC.2011.15

[8] Kumari, N., Anwar, S., Bhattacharjee, V.: Time series-dependent feature of EEG signals for improved visually evoked emotion classification using EmotionCapsNet. Neural Comput. Appl. 34(16), 13291–13303 (2022). https://doi.org/10.1007/s00521-022-06942-x

[9] Lachaux, J.P., Rodriguez, E., Martinerie, J., Varela, F.J.: Measuring phase synchrony in brain signals. Hum. Brain Mapp. 8(4), 194–208 (1999). https://doi.org/10.1002/(sici)1097-0193(1999)8:4<194::aid-hbm4>3.0.co;2-c

[10] Li, X., Hu, B., Sun, S., Cai, H.: EEG-based mild depressive detection using feature selection methods and classifiers. Comput. Methods Programs Biomed. 136, 151–161 (2016). https://doi.org/10.1016/j.cmpb.2016.08.010

[11] Li, Y., Zheng, W., Zong, Y., Cui, Z., Zhang, T., Zhou, X.: A bi-hemisphere domain adversarial neural network model for EEG emotion recognition. IEEE Trans. Affect. Comput. 12(2), 494–504 (2021). https://doi.org/10.1109/taffc.2018.2885474

[12] Peng, H., Long, F., Ding, C.: Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 27(8), 1226–1238 (2005). https://doi.org/10.1109/TPAMI.2005.159

[13] Singh, M.K., Singh, M.: A deep learning approach for subject-dependent and subject-independent emotion recognition using brain signals with dimensional emotion model. Biomed. Signal Process. Control 84, 104928 (2023). https://doi.org/10.1016/j.bspc.2023.104928

[14] Wang, Y., Huang, Z., McCane, B., Neo, P.: EmotioNet: a 3-D convolutional neural network for EEG-based emotion recognition. In: 2018 International Joint Conference on Neural Networks (IJCNN), pp. 1–7 (2018). https://doi.org/10.1109/IJCNN.2018.8489715

[15] Wang, Z., Tong, Y., Heng, X.: Phase-locking value based graph convolutional neural networks for emotion recognition. IEEE Access 7, 93711–93722 (2019). https://doi.org/10.1109/access.2019.2927768

[16] Zhang, J., Zhang, X., Chen, G., Huang, L., Sun, Y.: EEG emotion recognition based on cross-frequency Granger causality feature extraction and fusion in the left and right hemispheres. Front. Neurosci. 16, 974673 (2022). https://doi.org/10.3389/fnins.2022.974673

[17] Zheng, W.L., Lu, B.L.: Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Ment. Dev. 7(3), 162–175 (2015). https://doi.org/10.1109/tamd.2015.2431497


Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Ruchilekha, Srivastava, V., Singh, M.K. (2024). Emotion Recognition Using Phase-Locking-Value Based Functional Brain Connections Within-Hemisphere and Cross-Hemisphere. In: Choi, B.J., Singh, D., Tiwary, U.S., Chung, WY. (eds) Intelligent Human Computer Interaction. IHCI 2023. Lecture Notes in Computer Science, vol 14531. Springer, Cham. https://doi.org/10.1007/978-3-031-53827-8_12


DOI: https://doi.org/10.1007/978-3-031-53827-8_12

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-53826-1

Online ISBN: 978-3-031-53827-8

eBook Packages: Computer Science, Computer Science (R0)