Abstract

The effects of music training are reflected in auditory perception. Behavioral differences, as well as structural and functional brain differences, have been found between musicians and nonmusician controls, demonstrating the effects of music training. Rhythm, musical phrases, and syntax are complex musical elements that involve higher-level auditory processing. This review summarizes and discusses EEG studies of the perception of these elements and of how music training affects it. The EEG features used to compare complex music perception between musicians who received music training and nonmusician controls include early and mid-latency ERP components, the P3a, P3, N5, early right anterior negativity (ERAN), and closure positive shift (CPS), as well as neural entrainment to beat frequencies. Musicians showed more complex ERP responses to rhythm, musical phrases, and syntax, and stronger neural entrainment to musical beats. These results indicate that musicians and nonmusicians process rhythm, musical phrases, and syntax differently at the cortical level, offering a deeper explanation of how music training affects auditory perception.

1 Introduction

Studies have found that musicians outperform nonmusicians behaviorally in many respects, probably owing to enhanced perceptual skills and working memory developed through years of musical training [1, 2]. Changes in brain function and structure may account for these effects of music training. At the neurobiological level, musicians show better information encoding and stronger cross-modal auditory-motor integration, supporting a musician advantage in the corresponding perceptual tasks [3, 4].

Music perception involves cognition, emotion, and other underlying brain mechanisms [5]. Studies of music perception can therefore explain the musician advantage at a more fundamental level. Various neuroimaging techniques have been used to study music perception, each capturing different properties of cortical responses to musical stimuli [6]. Electroencephalography (EEG), a powerful tool in neuroscience, allows continuous processes to be analyzed in the time, frequency, and other domains, and has therefore been used frequently in studies of music perception. EEG offers researchers a range of methods and types of evidence for understanding music perception in depth. Here, we focus on EEG methods and findings on music perception in musicians and nonmusicians.

EEG studies of music perception are shaped by the musical content of interest and the form of the stimulus. The EEG features typically observed, which reveal how the cortex decodes music, depend on the experimental task and paradigm. The perception of basic musical elements, such as pitch and timbre, has been widely studied in recent years, whereas the effects of systematic music training have not been adequately addressed [7]. Rhythm, musical phrases, and syntax are complex musical materials for which EEG paradigms must be carefully designed, and investigating them involves more complex brain activity. The cortical processing of these elements could therefore explain the differences between musicians and nonmusicians at a deeper level. In this review, EEG studies of the effects of musical expertise are summarized according to these three types of musical stimulus.

2 Effects of Music Training on Music Perception

2.1 Perception of Rhythm

Temporal pattern or structure is essential to music perception. A rhythm is a sequence of groups of musical sounds, temporally patterned by duration or stress [8]. In studies of rhythm perception, subjects perceive repeating rhythmic units or beats, and their responses to violations of rhythmic patterns or durations are analyzed using EEG or behavioral measures [9]. Most EEG studies of rhythm perception have investigated either (1) event-related potentials (ERPs) extracted from the EEG recordings or (2) neural entrainment to steady beat stimuli [10]. The study of rhythm perception requires high temporal resolution, and ERPs allow the time course of responses to a changing rhythmic pattern to be examined closely. For example, the P3 component was found to correlate with performance in detecting target beats that sped up or slowed down after a four-beat sequence [11], and different subcomponents of the P3 complex distinguished these two kinds of rhythm violation.
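The ERP approach described above rests on time-locked averaging. The following is a minimal sketch in Python with NumPy, assuming a single EEG channel stored as a 1-D array and event onsets given as sample indices; the function names, the -100 to 600 ms epoch, the search window, and the synthetic data are illustrative assumptions, not the procedure of [11]. Each epoch is baseline-corrected with its pre-stimulus mean, the epochs are averaged, and the peak latency is read out within a P3-like search window.

```python
import numpy as np

def epoch_and_average(eeg, fs, onsets, tmin=-0.1, tmax=0.6):
    """Cut a single-channel recording into epochs around event onsets,
    subtract each epoch's pre-stimulus mean, and average into an ERP."""
    assert tmin < 0, "a pre-stimulus interval is needed for baseline correction"
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    epochs = []
    for onset in onsets:
        if onset + start < 0 or onset + stop > len(eeg):
            continue  # skip events too close to the edges of the recording
        ep = eeg[onset + start:onset + stop].astype(float)
        epochs.append(ep - ep[:-start].mean())  # first -start samples precede onset
    times = np.arange(start, stop) / fs
    return times, np.mean(epochs, axis=0)

def peak_latency(times, erp, window, polarity=1):
    """Latency (s) of the most positive (polarity=1) or most negative
    (polarity=-1) ERP value inside the given time window."""
    mask = (times >= window[0]) & (times <= window[1])
    return times[mask][np.argmax(polarity * erp[mask])]

# Synthetic demo (hypothetical data): noisy EEG with a positive deflection
# peaking 300 ms after each of the events, which occur once per second.
fs = 250
rng = np.random.default_rng(0)
eeg = rng.normal(0, 1.0, fs * 60)
onsets = np.arange(fs, len(eeg) - fs, fs)
t = np.arange(int(0.6 * fs)) / fs
bump = 5.0 * np.exp(-((t - 0.3) ** 2) / (2 * 0.03 ** 2))
for o in onsets:
    eeg[o:o + bump.size] += bump

times, erp = epoch_and_average(eeg, fs, onsets)
lat = peak_latency(times, erp, (0.25, 0.5))
```

Averaging suppresses activity that is not time-locked to the events, which is why the latency of a component such as the P3 can be estimated reliably even when single trials are dominated by noise.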

Geiser et al. investigated the perception of meter and rhythm in musicians and nonmusicians under two attention conditions, using early ERP components within 300 ms [12]. Subjects detected and distinguished changes in meter (the number of beats in a loop) and rhythm (the temporal pattern within a fixed loop) in repeating standard 3-beat rhythmic units. Musicians performed significantly better behaviorally. In the attended condition, both rhythm and meter deviants elicited an early negative deflection, whereas in the unattended condition only rhythm deviants did. The ERP results showed no group effects in any experimental condition, indicating that musicians and nonmusicians process rhythm and meter similarly at this early stage, whether attentively or inattentively. Effects of musicianship emerged in a more complex experiment, reflected in the P3a component [13]. Two modes of rhythm processing were contrasted: a sequential processing hypothesis, in which listeners expect the incoming beat at a fixed position, and a hierarchical processing hypothesis, in which listeners expect incoming beats at all possible positions. A target beat appeared at one of six positions, only a few of which fit the meter, and subjects judged whether the meter had been violated. Musicians showed a significantly longer P3a latency than nonmusicians. Moreover, the correlation coefficients between P3a waveforms from different conditions, which express how similar two P3a responses are, matched the pattern predicted by the sequential hypothesis fairly well for nonmusicians, whereas the musicians' results fit the hierarchical hypothesis better. Taken together, the P3a results suggest that temporal patterns are processed sequentially by nonmusicians and hierarchically by musicians.
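The comparison of P3a correlation coefficients with hypothesis predictions can be illustrated with a similarity-matrix comparison, a technique swapped in here for illustration and not necessarily the analysis used in [13]. The sketch below assumes condition-average waveforms stored in a NumPy array; the four synthetic conditions and the two predicted patterns (`pred_a`, `pred_b`) are hypothetical placeholders, not the predictions actually derived from the sequential and hierarchical hypotheses.

```python
import numpy as np

def waveform_correlations(erps):
    """Pearson correlation between every pair of condition-average
    waveforms; erps has shape (n_conditions, n_times)."""
    return np.corrcoef(erps)

def hypothesis_fit(observed, predicted):
    """Correlate the off-diagonal (upper-triangle) entries of an observed
    and a predicted similarity matrix; a higher value means a better fit."""
    iu = np.triu_indices_from(observed, k=1)
    return np.corrcoef(observed[iu], predicted[iu])[0, 1]

def pattern(pairs, n=4):
    """Hypothetical predicted similarity matrix: 1 for condition pairs
    expected to evoke alike responses, 0 otherwise."""
    m = np.zeros((n, n))
    for i, j in pairs:
        m[i, j] = m[j, i] = 1.0
    return m

# Synthetic demo: conditions 0/1 share one P3a-like waveform, 2/3 another.
rng = np.random.default_rng(0)
times = np.linspace(0.0, 0.6, 301)
early = np.exp(-((times - 0.30) ** 2) / (2 * 0.03 ** 2))
late = np.exp(-((times - 0.40) ** 2) / (2 * 0.03 ** 2))
erps = np.vstack([early, early, late, late]) + rng.normal(0, 0.05, (4, times.size))
observed = waveform_correlations(erps)

pred_a = pattern([(0, 1), (2, 3)])  # hypothetical prediction A
pred_b = pattern([(0, 2), (1, 3)])  # hypothetical prediction B
fit_a = hypothesis_fit(observed, pred_a)
fit_b = hypothesis_fit(observed, pred_b)
```

Here `fit_a` should clearly exceed `fit_b`, because the synthetic data were built to match prediction A; with real data, the better-fitting prediction would point to the processing mode a group relies on.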

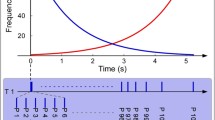

Neural oscillations entrain to stimuli with a fixed period [14]. In music perception, such entrainment usually refers to the synchronization of neural excitability with the underlying musical rhythm [15]. It is analyzed in the same way as steady-state evoked potentials (SSEPs): the amplitude peaks of the EEG frequency spectrum are plotted and compared. Neural entrainment to musical rhythms reveals differences between musicians and nonmusicians in rhythm perception [16]. Stupacher et al. designed a complex experiment in which subjects listened to a quadruple rhythm and to a polyrhythm combining quadruple and triple meters. After a silent interval, subjects judged whether the upcoming stimulus was early, on time, or late, and EEG was recorded throughout. The normalized spectral amplitudes of the EEG during the silent period before the target stimulus showed a significant effect of musical expertise: entrainment at the 3-beat frequencies (1.5 Hz and 3 Hz) was significantly larger in the musician group. This difference during the silent period indicates that beat-related, top-down controlled neural oscillations can persist without continuous stimulation, and that these endogenous oscillations are enhanced by musical expertise. Another study of rhythm perception found stronger neural entrainment to a 3-beat rhythm in musicians than in nonmusicians [17]. Here, rhythmic cues presented from different spatial angular positions induced a sense of 3-beat rhythm. The stronger responses in musicians were observed only in the attentive condition, suggesting that top-down attentional mechanisms are involved in rhythm perception and that musical expertise strengthens neural entrainment to spatially cued rhythms.
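The spectral-peak analysis of neural entrainment can be sketched as follows, assuming a trial-averaged EEG segment in a NumPy array. The neighbour-bin noise subtraction (2 to 5 bins on each side) is a correction of the kind used in EEG frequency-tagging work, but the exact bin range, the function names, and the synthetic signal are assumptions; this is not necessarily the normalization used by Stupacher et al. The 1.5 Hz and 3 Hz frequencies are taken from the study described above.

```python
import numpy as np

def amplitude_spectrum(signal, fs):
    """Single-sided amplitude spectrum of an EEG segment."""
    n = len(signal)
    amps = np.abs(np.fft.rfft(signal)) * 2 / n
    freqs = np.fft.rfftfreq(n, d=1 / fs)
    return freqs, amps

def subtract_neighbours(amps, lo=2, hi=5):
    """Subtract from each bin the mean amplitude of the bins lo..hi
    positions away on both sides (assumed noise estimate)."""
    out = np.empty_like(amps)
    for i in range(len(amps)):
        neigh = [amps[j] for k in range(lo, hi + 1)
                 for j in (i - k, i + k) if 0 <= j < len(amps)]
        out[i] = amps[i] - np.mean(neigh)
    return out

def amplitude_at(freqs, amps, f):
    """Amplitude in the frequency bin closest to f."""
    return amps[np.argmin(np.abs(freqs - f))]

# Synthetic demo: 40 s of noise plus components at 1.5 Hz and 3 Hz.
fs = 250
t = np.arange(40 * fs) / fs  # 40 s gives 0.025 Hz frequency resolution
rng = np.random.default_rng(0)
signal = (np.sin(2 * np.pi * 1.5 * t) + 0.5 * np.sin(2 * np.pi * 3.0 * t)
          + rng.normal(0, 2.0, t.size))
freqs, amps = amplitude_spectrum(signal, fs)
clean = subtract_neighbours(amps)
beat_15 = amplitude_at(freqs, clean, 1.5)
beat_3 = amplitude_at(freqs, clean, 3.0)
control = amplitude_at(freqs, clean, 2.2)  # a frequency not present in the stimulus
```

Choosing a segment length that contains a whole number of beat cycles places each beat frequency exactly on an FFT bin, so its amplitude is not smeared across neighbouring bins; the noise-subtracted value at a non-beat frequency stays close to zero.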

2.2 Perception of Music Phrase and Syntax

Like language, music is organized into phrases. Melodies and harmonic progressions are composed into musical phrases, and, according to music theory, their elements may serve different functions within a phrase. The perception of musical phrases and syntax has been studied [18–20], and it may correlate with musical experience and be reflected in EEG responses. The influences of long-term musical experience on the processing of musical phrases and syntax are summarized below.

A commonly used paradigm for studying music-syntactic perception is a chord sequence containing violations of the expected chord progression [21]. Changing the final chord violates music-syntactic appropriateness and expectancy, which elicits ERP components related to music-syntactic processing. The early right anterior negativity (ERAN) was found to be specifically sensitive to violations of musical regularities. The ERAN has a latency of about 200 ms, similar to that of the MMN, and is usually followed by an N5. The ERAN evoked by harmonically inappropriate chords in a sequence was larger in musicians than in nonmusicians [22], indicating that the automatic neural mechanisms that process music-syntactic irregularities, as reflected by the ERAN, can be modulated by musical expertise. Pages-Portabella et al. investigated the perception of dissonance within chord progressions in both groups. Chords that violated the regularities in the middle or at the end of a sequence evoked P3 and N5 components, whose amplitudes differed between musicians and nonmusicians. Moreover, they found that the predictability of the dissonant chords did not modulate the ERP responses [23].
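Group differences in the ERAN are typically quantified on irregular-minus-regular difference waves. The sketch below assumes per-subject ERPs at one right-anterior channel stored as NumPy arrays of shape (subjects, time points); the 150 to 250 ms window, the Welch t statistic, the group sizes, and the ERAN amplitudes of the synthetic groups are illustrative assumptions, not values reported in [22] or [23].

```python
import numpy as np

def difference_wave(irregular, regular):
    """Irregular-minus-regular ERP per subject; shape (n_subjects, n_times)."""
    return irregular - regular

def mean_amplitude(times, waves, window):
    """Mean amplitude of each subject's wave within a time window (s)."""
    mask = (times >= window[0]) & (times <= window[1])
    return waves[:, mask].mean(axis=1)

def welch_t(a, b):
    """Welch's t statistic for two independent groups."""
    va = a.var(ddof=1) / len(a)
    vb = b.var(ddof=1) / len(b)
    return (a.mean() - b.mean()) / np.sqrt(va + vb)

# Synthetic demo (hypothetical amplitudes): a negativity peaking at 200 ms
# that is, by construction, larger in the "musician" group.
rng = np.random.default_rng(1)
fs = 500
times = np.arange(-50, 300) / fs  # -0.1 s to 0.598 s
shape = np.exp(-((times - 0.2) ** 2) / (2 * 0.03 ** 2))

def simulate_group(n_subjects, eran_amp):
    regular = rng.normal(0, 0.3, (n_subjects, times.size))
    amp = rng.normal(eran_amp, 0.5, (n_subjects, 1))
    irregular = regular + amp * shape + rng.normal(0, 0.3, (n_subjects, times.size))
    return irregular, regular

mus_irr, mus_reg = simulate_group(15, eran_amp=-3.0)
non_irr, non_reg = simulate_group(15, eran_amp=-1.5)
window = (0.15, 0.25)
mus = mean_amplitude(times, difference_wave(mus_irr, mus_reg), window)
non = mean_amplitude(times, difference_wave(non_irr, non_reg), window)
t_stat = welch_t(mus, non)
```

The difference wave isolates activity specific to the syntactic violation, so the same measurement can be repeated for later windows to quantify the N5 or P3.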

More complex phrases have also been used as music-syntactic stimuli. James et al. studied how musical syntax is processed in groups with different levels of expertise, using spatiotemporal ERP analysis, microstate analysis, and ERP source imaging [24]. Subjects listened to ten-second excerpts of expressive string quartet music that ended in either a regular version or one of two transgressed versions, and then indicated whether they found the excerpt satisfactory. The effects of musical expertise on neural processing, revealed by the ERP and microstate analyses, lay in a 300–500 ms window after the onset of the target stimulus. P3b-like components and characteristic microstates distinguished nonmusicians, amateur pianists, and expert pianists from one another, and the sources of these microstates were localized in the right middle temporal gyrus, anterior cingulate, and right parahippocampal areas. Ma et al. examined the neural responses of subjects with different musical experience to musical syntax following a finite state grammar (FSG) or a phrase structure grammar (PSG) [25]. In the FSG condition, the type of final chord was not reflected in the ERPs of the nonmusician group, whereas irregular final chords evoked ERAN-N5 responses in the medium-musician and pro-musician groups. In the PSG condition, irregular final chords evoked ERAN-N5 responses only in the pro-musician group. These results suggest that the effects of musical expertise on the perception of musical phrases depend on syntactic complexity and are reflected in the early ERAN and late N5 components. EEG responses have also been studied during aesthetic judgments of chord sequences with different closures [26]. Musicians showed negative potentials during the beauty judgment task relative to the correctness judgment task, whereas nonmusicians did not. However, during the listening stage, nonmusicians did show differences between the correctness and aesthetic judgment tasks. Thus, the neural correlates of aesthetic music processing are modulated by musical expertise.
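Microstate analysis, mentioned above, describes multichannel EEG as a sequence of quasi-stable scalp topographies. The sketch below shows only one step, back-fitting: assigning each time point to the template map it correlates with best, ignoring polarity as is usual for EEG microstates. It assumes a channels-by-time NumPy array; the template maps here are random placeholders, whereas real templates come from clustering (for example, k-means on maps at global field power peaks). This is not the pipeline of James et al., and source imaging is not covered.

```python
import numpy as np

def global_field_power(eeg):
    """GFP: spatial standard deviation across channels at each time point.
    eeg has shape (n_channels, n_times)."""
    return eeg.std(axis=0)

def backfit(eeg, templates):
    """Label each time point with the best-matching template map.
    Uses the absolute spatial (Pearson) correlation, so polarity is ignored.
    templates has shape (n_maps, n_channels)."""
    x = eeg - eeg.mean(axis=0, keepdims=True)          # average reference
    x = x / np.linalg.norm(x, axis=0, keepdims=True)   # unit-norm topographies
    t = templates - templates.mean(axis=1, keepdims=True)
    t = t / np.linalg.norm(t, axis=1, keepdims=True)
    corr = t @ x                                       # (n_maps, n_times)
    return np.argmax(np.abs(corr), axis=0), corr

# Synthetic demo: 50 samples dominated by map 0, then 50 by map 1,
# each with random polarity and strength plus small noise.
rng = np.random.default_rng(2)
n_channels = 16
templates = rng.normal(size=(2, n_channels))  # placeholder maps
gains = rng.choice([-1.0, 1.0], size=100) * (1.0 + 0.5 * rng.random(100))
labels_true = np.repeat([0, 1], 50)
eeg = templates[labels_true].T * gains + rng.normal(0, 0.05, (n_channels, 100))

labels, corr = backfit(eeg, templates)
gfp = global_field_power(eeg)
```

Once every time point is labelled, group differences can be expressed as differences in how long, or how often, a given map is present within a window such as 300 to 500 ms.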

Another aspect of musical phrase perception that has been studied is the perception of phrase boundaries [27]. A positive ERP wave with a latency of approximately 550 ms after the offset of the phrase boundary was found. This peak resembles a positive component elicited by prosodic phrase boundaries in speech perception [28], which was termed the closure positive shift (CPS) because it correlates with the closure of prosodic phrases. The musical CPS was studied and found to be modulated by musical expertise [27]. Neuhaus et al. used EEG and MEG with melodies containing a phrase boundary in the middle. In musicians, the phrased versions of the melodies evoked an electric CPS and a magnetic CPS (CPSm), whereas nonmusicians showed an early negativity and a smaller CPSm. Zhang et al. designed a complex musical stimulus containing boundaries at three hierarchical levels [29]. In musicians, all three boundaries evoked a CPS whose amplitude was modulated by the hierarchical level. In nonmusicians, only the period boundary (the most salient one) elicited a CPS, and a negativity that did not differ among the three boundaries was induced. These findings suggest that musical phrasing ability may be enhanced by musical expertise, or that musicians and nonmusicians use different perceptual strategies for phrasing.
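Whether a CPS is present can be tested by comparing phrased and unphrased versions of the same material within subjects. The sketch below assumes per-subject ERPs time-locked to the boundary offset and stored as NumPy arrays of shape (subjects, time points); the 450 to 650 ms window around the reported 550 ms latency, the sign-flip permutation test, and the synthetic data are illustrative assumptions, not the statistics used in [27] or [29].

```python
import numpy as np

def cps_effect(times, phrased, unphrased, window=(0.45, 0.65)):
    """Per-subject CPS effect: mean phrased-minus-unphrased amplitude
    in a window after the boundary offset."""
    mask = (times >= window[0]) & (times <= window[1])
    return (phrased - unphrased)[:, mask].mean(axis=1)

def sign_flip_test(effects, n_perm=5000, seed=0):
    """Two-sided one-sample permutation test of mean(effects) != 0,
    built by randomly flipping the sign of each subject's effect."""
    rng = np.random.default_rng(seed)
    observed = effects.mean()
    flips = rng.choice([-1.0, 1.0], size=(n_perm, effects.size))
    null = (flips * effects).mean(axis=1)
    p = (np.sum(np.abs(null) >= abs(observed)) + 1) / (n_perm + 1)
    return observed, p

# Synthetic demo (hypothetical data): a positive shift peaking 550 ms
# after the boundary offset in the phrased condition only.
rng = np.random.default_rng(3)
fs = 250
times = np.arange(-50, 250) / fs  # -0.2 s to ~1.0 s
shape = np.exp(-((times - 0.55) ** 2) / (2 * 0.08 ** 2))
n_subjects = 16
unphrased = rng.normal(0, 0.5, (n_subjects, times.size))
gain = rng.normal(2.0, 0.8, (n_subjects, 1))
phrased = unphrased + gain * shape + rng.normal(0, 0.5, (n_subjects, times.size))

effects = cps_effect(times, phrased, unphrased)
mean_effect, p_value = sign_flip_test(effects)
```

Running the same test separately for each group and boundary level would mirror the comparisons described above, for example a CPS at all boundary levels in one group but only at the most salient boundary in the other.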

3 Summary

In this paper, we reviewed EEG studies of rhythm, musical phrase, and syntax perception in musicians and nonmusicians to show the effects of music training at the behavioral and cortical levels.

In summary, rhythm perception has usually been studied using ERPs or neural entrainment. Neural responses of musicians and nonmusicians were similar in the early stage of rhythm processing and differed in the later stage. The P3a component evoked by meter violations in a beat expectation task demonstrated the effects of musical expertise, with musicians showing a later P3a. Neural oscillations entrain to a rhythmic beat, and this entrainment was stronger in musicians than in nonmusicians. Moreover, endogenous, beat-related, top-down controlled neural oscillations were modulated by musical expertise. These findings suggest that musicians and nonmusicians process complex rhythmic temporal patterns differently.

Musicians and nonmusicians also process musical phrases and syntax differently, as revealed by the evoked responses (ERAN, P3, and N5) to violations in chord sequences. Microstate analysis and ERP source imaging further revealed differences between musicians and nonmusicians in the mid-latency processing mechanisms and the cortical areas involved in the perception of musical syntax. Finally, the CPS, a component first associated with prosodic phrase boundaries, indicated better musical phrasing ability in musicians.

The studies above reveal that the cortical processing of rhythm, musical phrases, and syntax differs between musicians and nonmusicians, reflecting the effects of music training on the perception of complex musical elements. The EEG features discussed and the corresponding results are listed in Table 1. Note that these results depend strongly on the experimental paradigm because of the complexity of the stimuli. Further EEG studies of music perception are recommended to investigate the interplay of these mechanisms, for example, under attentive and inattentive conditions.

References

Parbery-Clark, A., Skoe, E., Lam, C., et al.: Musician enhancement for Speech-In-Noise. Ear Hear. 30(6), 653–661 (2009)

Miendlarzewska, E.A., Trost, W.J.: How musical training affects cognitive development: rhythm, reward and other modulating variables. Front. Neurosci. 7 (2014). https://doi.org/10.3389/fnins.2013.00279

Du, Y., Zatorre, R.J.: Musical training sharpens and bonds ears and tongue to hear speech better. Proc. Natl. Acad. Sci. U.S.A. 114(51), 13579–13584 (2017). https://doi.org/10.1073/pnas.1712223114

Bosnyak, D.J., Eaton, R.A., Roberts, L.E.: Distributed auditory cortical representations are modified when non-musicians are trained at pitch discrimination with 40 Hz amplitude modulated tones. Cereb. Cortex 14(10), 1088–1099 (2004). https://doi.org/10.1093/cercor/bhh068

Koelsch, S.: Neural substrates of processing syntax and semantics in music. Curr. Opin. Neurobiol. 15(2), 207–212 (2005)

González, A., Santapau, M., González Julián, J., et al.: EEG analysis during music Perception. In: Hideki, N (Ed.) Electroencephalography, pp. Ch. 2. IntechOpen, Rijeka (2020)

Koelsch, S.: Toward a neural basis of music perception - a review and updated model. Front. Psychol. 2 (2011)

Krumhansl, C.L.: Rhythm and pitch in music cognition. Psychol. Bull. 126(1), 159–179 (2000)

Phillips-Silver, J., Trainor, L.J.: Feeling the beat: Movement influences infant rhythm perception. Science 308(5727), 1430 (2005)

Nozaradan, S.: Exploring how musical rhythm entrains brain activity with electroencephalogram frequency-tagging. Philos. Trans. R. Soc. B-Biol. Sci., 369(1658), (2014)

Jongsma, M.L.A., Meeuwissen, E., Vos, P.G., et al.: Rhythm perception: Speeding up or slowing down affects different subcomponents of the ERP P3 complex. Biol. Psychol. 75(3), 219–228 (2007). https://doi.org/10.1016/j.biopsycho.2007.02.003

Geiser, E., Ziegler, E., Jancke, L., et al.: Early electrophysiological correlates of meter and rhythm processing in music perception. Cortex 45(1), 93–102 (2009)

Jongsma, M.L.A., Desain, P., Honing, H.: Rhythmic context influences the auditory evoked potentials of musicians and nonmusicians. Biol. Psychol. 66(2), 129–152 (2004)

Winkler, I., Denham, S.L., Nelken, I.: Modeling the auditory scene: predictive regularity representations and perceptual objects. Trends Cogn. Sci. 13(12), 532–540 (2009)

Schroeder, C.E., Lakatos, P.: Low-frequency neuronal oscillations as instruments of sensory selection. Trends Neurosci. 32(1), 9–18 (2009). https://doi.org/10.1016/j.tins.2008.09.012

Stupacher, J., Wood, G., Witte, M.: Neural entrainment to polyrhythms: A comparison of musicians and Non-musicians. Front. Neurosci. 11, 1–17 (2017). https://doi.org/10.3389/fnins.2017.00208

Celma-Miralles, A., Toro, J.M.: Ternary meter from spatial sounds: Differences in neural entrainment between musicians and non-musicians. Brain Cogn. 136 (2019)

Koelsch, S., Gunter, T., Friederici, A.D., et al.: Brain indices of music processing: “Non-musicians” are musical. J. Cogn. Neurosci. 12(3), 520–541 (2000). https://doi.org/10.1162/089892900562183

Koelsch, S., Gunter, T.C., Schroger, E., et al.: Differentiating ERAN and MMN: An ERP study. NeuroReport 12(7), 1385–1389 (2001)

Sun, L.J., Feng, C., Yang, Y.F.: Tension experience induced by nested structures in music. Front. Hum. Neurosci., 14, (2020)

Koelsch, S., Grossmann, T., Gunter, T.C., et al.: Children processing music: electric brain responses reveal musical competence and gender differences. J. Cogn. Neurosci. 15(5), 683–693 (2003). https://doi.org/10.1162/jocn.2003.15.5.683

Koelsch, S., Schmidt, B.H., Kansok, J.: Effects of musical expertise on the early right anterior negativity: An event-related brain potential study. Psychophysiology 39(5), 657–663 (2002)

Pages-Portabella, C., Bertolo, M., Toro, J.M.: Neural correlates of acoustic dissonance in music: The role of musicianship, schematic and veridical expectations. PLoS One 16(12) (2021)

James, C.E., Oechslin, M.S., Michel, C.M., et al.: Electrical neuroimaging of music processing reveals mid-latency changes with level of musical expertise. Front. Neurosci., 11, (2017)

Ma, X., Ding, N., Tao, Y., et al.: Syntactic complexity and musical proficiency modulate neural processing of non-native music. Neuropsychologia 121, 164–174 (2018)

Muller, M., Hofel, L., Brattico, E., et al.: Electrophysiological correlates of aesthetic music processing comparing experts with laypersons. In: Dalla Bella, S., Kraus, N., Overy, K., Pantev, C., Snyder, J.S., Tervaniemi, M., Tillmann, B., Schlaug, G. (eds.) Neurosciences and Music III: Disorders and Plasticity, vol. 1169, pp. 355–358 (2009)

Neuhaus, C., Knosche, T.R., Friederici, A.D.: Effects of musical expertise and boundary markers on phrase perception in music. J. Cogn. Neurosci. 18(3), 472–493 (2006)

Steinhauer, K., Friederici, A.D.: Prosodic boundaries, comma rules, and brain responses: the closure positive shift in ERPs as a universal marker for prosodic phrasing in listeners and readers. J. Psycholinguist. Res. 30(3), 267–295 (2001)

Zhang, J.J., Jiang, C.M., Zhou, L.S., et al.: Perception of hierarchical boundaries in music and its modulation by expertise. Neuropsychologia 91, 490–498 (2016)

Acknowledgment

This work was supported by grants from the Key Technologies Research and Development Program of China (No.2022YFF1202400) and the National Natural Science Foundation of China (No.81971698&No.82202290).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Nie, J., Bai, Y., Zheng, Q., Ni, G. (2024). EEG Studies of the Effects of Music Training on Rhythm, Music Phrases and Syntax Perception. In: Wang, G., Yao, D., Gu, Z., Peng, Y., Tong, S., Liu, C. (eds) 12th Asian-Pacific Conference on Medical and Biological Engineering. APCMBE 2023. IFMBE Proceedings, vol 103. Springer, Cham. https://doi.org/10.1007/978-3-031-51455-5_32

DOI: https://doi.org/10.1007/978-3-031-51455-5_32

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-51454-8

Online ISBN: 978-3-031-51455-5