Abstract

Digital Twin (DT) is recognized as a key enabling technology of Industry 4.0 and 5.0 and can be used in collaborative networks formed to fulfill complex tasks in the manufacturing industry. In recent years, the variety and complexity of DTs have increased significantly with new technologies and smarter solutions. Current DT definitions, such as cognitive, hybrid, and others, embrace a wide range of solutions with different characteristics. In this sense, this article discusses DT definitions and presents a five-dimensional analytical framework to classify the different proposals. Finally, to illustrate the proposal, we analyze 12 articles using the analytical framework. We argue that this research may help researchers and practitioners to better understand digital twins and compare different solutions.

1 Introduction

Industry 5.0 is considered the next industrial evolution, whose objective is to leverage the creativity of human experts in collaboration with efficient, intelligent, and accurate machines in order to obtain manufacturing solutions that are more resource-efficient and user-preferred than those of Industry 4.0 [32]. The enabling technologies of Industry 5.0 are a set of complex systems that combine technological trends such as edge computing, digital twins (DTs), internet of things (IoT), big data analytics, collaborative robots (cobots), 6G networks, and blockchain, which are integrated with cognitive skills and innovation that can help industries increase production and deliver customized products more quickly [32].

Digital twins are recognized as a key enabling technology of Industry 4.0 [38] and Industry 5.0 [32]. DTs provide opportunities for improved product lifecycle management in manufacturing and also represent a significant change from the current methods, processes, and tools used by organizations [29]. DTs can be used collaboratively to solve complex manufacturing problems through a DT ecosystem approach and can form part of collaborative networks in manufacturing. Collaboration between DTs aims to improve the detection of contextual and collective anomalies, which are more challenging to identify using self-reference models [15], and also to facilitate collaborative and distributed autonomous driving [26].

In recent years, the number of publications, works, and applications involving Digital Twins has increased considerably in the manufacturing context. The literature presents projects in different domains that use more intelligent and autonomous DTs, resulting in a growing variety and complexity of proposals, nomenclatures, and types of DTs. On the other hand, this can cause confusion among researchers and practitioners in the field when they refer to their DT proposals. Both the definition of and the expectations for DT functionality have evolved significantly over the past decade. We consider that the variety of DT definitions and types, with different but intersecting characteristics, is a current challenge to the understanding of DT proposals. According to [25], the primary obstacle to broader DT adoption or evolution is its ambiguity. Efforts have been made to define DT, but this is still an open research question.

This paper discusses the different DT definitions and approaches and proposes a five-dimensional analytical framework to cover different DT proposals. The dimensions of analysis are: i) data flow; ii) interoperability level; iii) system level; iv) cognitive process; and v) coverage of the system life cycle. A proof of concept is carried out using 12 DT works. We argue that an analytical framework can help researchers in the field to better understand DTs, since it combines different approaches from the literature to define and categorize a DT proposal, focusing more on the characteristics of DTs than on their definitions. Our approach is expected to clarify, for instance, when a simulation, optimization, or machine-learning model is closer to a DT with cognitive capabilities and when it is closer to a data model.

The paper is organized as follows: Sect. 2 presents the theoretical background, highlighting the types of DTs cited in the literature, aspects of cognition related to the development of DTs, and semantic characteristics that can be included in a proposal of DT; Sect. 3 details the proposed analytical framework, and Sect. 4 shows a proof of concept of the analytical framework. Finally, Sect. 5 presents our concluding remarks and limitations.

2 Background

To help understand the types of DTs and better characterize the approaches proposed in the literature, we analyze these definitions according to the following aspects: data flow automation, system level, coverage of the system lifecycle, cognitive process, semantics, and interoperability level.

One of the first discussions on a distinction among DT types considering the data flow automation was made by [29]. According to these authors, Digital Model is the case where the digital object (a representation of an existing or planned physical object) does not use any form of automated data exchange between it and the physical object. Digital Shadow includes the existence of automated one-way flow between the state of the physical object and the digital object. Digital Twin is used only for the case where the “data flow between physical object and data object are fully integrated into both directions”. Although this typology is still well accepted and referred to in the literature, it contains a certain level of ambiguity as demonstrated by [25].
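The typology of [29] can be read as a decision rule over two yes/no questions about automated data exchange. The following is a minimal sketch of that reading, not code from [29]; the names `DTType` and `classify_by_data_flow` are hypothetical. The case in which only the digital-to-physical flow is automated is not named in the typology as summarized above, so the sketch returns `None` for it rather than guessing.

```python
from enum import Enum
from typing import Optional


class DTType(Enum):
    """The three types distinguished by data flow automation in [29]."""
    DIGITAL_MODEL = "Digital Model"
    DIGITAL_SHADOW = "Digital Shadow"
    DIGITAL_TWIN = "Digital Twin"


def classify_by_data_flow(physical_to_digital: bool,
                          digital_to_physical: bool) -> Optional[DTType]:
    """Classify a proposal by which data flows are automated.

    Each argument states whether that direction of data exchange
    is automated (True) or manual/absent (False).
    """
    if physical_to_digital and digital_to_physical:
        return DTType.DIGITAL_TWIN    # automated flow in both directions
    if physical_to_digital:
        return DTType.DIGITAL_SHADOW  # automated one-way flow from the asset
    if not digital_to_physical:
        return DTType.DIGITAL_MODEL   # no automated exchange at all
    return None                       # assumption: case not named in the typology


if __name__ == "__main__":
    print(classify_by_data_flow(True, False).value)
```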

In turn, [1] propose a typology of DTs based on the data flow that also considers cognitive capacities. These authors present a three-layer approach to the different twins: Digital Twins, Hybrid Twins, and Cognitive Twins. A Digital Twin is considered a digital replica of a physical system that captures the attributes and behaviors of that system. In their view, a DT is typically materialized as a set of multiple isolated models that are either empirical or first-principles based. A Hybrid Twin (HT) is an extension of a DT in which the isolated DT models are intertwined to recognize, forecast, and communicate less optimal (but predictable) behavior of the physical counterpart just before such behavior occurs. An HT integrates data from various sources (sensors, databases, etc.) with the DT models and has predictive capabilities. A Cognitive Digital Twin (CDT) is an extension of an HT that incorporates cognitive features enabling it to sense complex and unpredicted behavior. According to the authors, a CDT is thus a hybrid, self-learning, and proactive system that will optimize its own cognitive capabilities over time based on the data it collects and the experience it gains.

Since the first reference to the term Cognitive Digital Twin at an industrial workshop in 2016 [2], other authors [1, 4, 5, 31] have presented complementary and similar definitions for this kind of DT. The recent definition of [38], derived from a literature review, encompasses the majority of these definitions. According to these authors, a Cognitive Twin is a “digital representation of a physical system that is augmented with certain cognitive capabilities and support to execute autonomous activities; comprises a set of semantically interlinked digital models related to different lifecycle phases of the physical system including its subsystems and components; and evolves continuously with the physical system across the entire lifecycle”. Beyond cognitive capacity, this definition includes characteristics related to multiple system levels and multiple lifecycle phases. While a DT corresponds to a single system (or product, subsystem, component, etc.) and focuses on a single lifecycle phase, a CDT consists of multiple digital models corresponding to different subsystems and components of a complex system, focusing on multiple lifecycle phases of the asset [38].

Other authors present different DT types, such as Enhanced Cognitive Twin [23], Semantic Digital Twin [12, 18], next-generation Digital Twin [25], and the six-type typology (Imaginary, Monitoring, Predictive, Prescriptive, Autonomous, and Recollection) by [6, 35]. These typologies overlap with the DT types mentioned previously in this section. In this sense, we consider that what matters is not the different names given to DTs but the characteristics and aspects included in DT proposals, which enable us to better analyze the proposal and implementation of a given DT.

2.1 Cognition Applied to DTs

Cognitive capabilities are an important topic in modern DT proposals and therefore an aspect of interest when analyzing DT implementations. It is well accepted that the main capabilities related to cognition for DTs are perception, attention, memory, reasoning, problem-solving, and learning [4].

Perception is the process of forming useful representations of data related to the physical twin and its physical environment for further processing, for example through real-time monitoring or data analytics on various data streams, including sensory data [24]. Attention is the process of focusing selectively on a task, a goal, or certain sensory information, either by intent or driven by environmental signals and circumstances [4], for example by using anomaly detection tools (statistical process control, complex event processing, ML-based tools) [24]. Memory corresponds to a single process that includes working, episodic, and semantic memory; encoding and storing information; and information retrieval [33]. Key technologies for the memory of DTs are databases, metadata, ontologies, and knowledge graphs. Reasoning can be defined as drawing conclusions consistent with a starting point: a perception of the physical twin and its environment, a set of assertions, a memory, or some mixture of them [27]. Examples of reasoning capabilities are root-cause analysis tools, simulations of the impact of detected disruptions, and evaluations of the impact of machine malfunctions and crane bottlenecks [24]. Problem-solving can be defined as the process of finding a solution for a given problem or achieving a given goal from a starting point [4]. It is achieved, for instance, using optimization and simulation [24]. Finally, learning is the process of transforming the experience of the physical twin into reusable knowledge for a new experience [4], predicting unwanted events in the operation before they happen and offering the best possible solutions [1]. Key technologies for learning in DTs are ML techniques, neural networks, knowledge graphs, DT models, etc., integrated with the persistence technologies.

These capabilities are required to perform complex tasks, but a Cognitive DT is not required to have all of them. On the other hand, it is not clear what the threshold is below which a DT is not considered cognitive, and the term has been used widely in the recent literature. Implementing and realizing these cognitive capabilities in DTs is far from a simple task. In this sense, [4] argues in favor of three critical operations in the design stage that cognition enables and enhances: (i) search; (ii) share; and (iii) scale. For example, perception and attention allow the search operation to selectively focus on a set of appropriate models. Problem-solving can further improve the search operation by enabling it to identify the most suitable digital twin model. Learning supports the share operation because it enables experience to be transformed into knowledge reusable by a new DT.

2.2 Semantics in DTs

Interoperability problems arise when integrating systems and equipment in the manufacturing industry and are often related to the different models and systems used across the lifecycle of a product. DTs are expected to support the integration of systems and models across different lifecycle phases and to contribute to the level of interoperability. Because of that, the term “semantics” appears in some definitions of DT, such as Cognitive DTs and Semantic DTs. In both cases, the references to semantics in DTs are mainly associated with the key enabling technologies (e.g., semantic modeling, ontologies, knowledge graphs) used to reach semantic interoperability of data, digital models, and information.

Some authors propose the term Semantic Digital Twin. According to [30], a Semantic DT is a semantically enhanced virtual representation of a retail store environment, connecting an ontology-based symbolic knowledge base with a scene graph. The scene graph provides a realistic 3D model of the store, which is enhanced with semantic information about the store, its shelf layout, and the products it contains. [13] highlighted that a Semantic Digital Twin should enable proactive modeling, connections among various planning systems (i.e. heterogeneous datasets), and the tracking and optimization of construction processes and their associated off- and on-site resources. [21] assert that a fully Semantic Digital Twin is capable of leveraging acquired knowledge with the use of AI-enabled agents, agent-driven socio-technical platforms, and a variety of digital technologies and techniques (e.g., machine learning, deep learning, data mining, and data analysis) employed to create a self-reliant, self-updatable, and self-learning DT.

In some definitions of Cognitive DTs it is possible to identify references to semantic capabilities as a necessary requirement for this DT type. [14] defines a next-generation digital twin as a description of a component, product, system, or process by a set of well-aligned, descriptive, and executable models, which is the semantically linked collection of the relevant digital artifacts, including design and engineering data, operational data, and behavioral descriptions. In turn, [31] defines a cognitive twin as a DT with augmented semantic capabilities for identifying the dynamics of virtual model evolution, promoting the understanding of interrelationships between virtual models, and enhancing DT-based decision-making. In the definition of [38], a Cognitive DT “comprises a set of semantically interlinked digital models related to different lifecycle phases of the physical system including its subsystems and components”. In many cases, a Cognitive DT can be constructed by integrating multiple related DTs using ontologies, semantic modeling, knowledge graphs, and lifecycle management technologies.

Considering the literature reviewed and the different concepts used to define Cognitive and Semantic Twins, it is important to highlight that, in our opinion, cognition and semantics are complementary characteristics that are essential for building smart, autonomous, predictive, and self-learning Digital Twins and necessary in the current context of DT applications in Industry 5.0.

3 An Analytical Framework for Digital Twins

The use of new technologies and the growing number of DT applications in Industry 4.0 and 5.0 have increased the complexity of the functions and capabilities of DTs. There is a wide range of reported implementations, from digital models to cognitive DTs, but in many cases it is difficult to clearly understand such reports. It is not unusual for papers to report regular simulation, optimization, and machine-learning models as cognitive digital twins, and the misuse of this term may be related to the ambiguity of DT definitions [25]. We present a conceptual framework of analysis for digital twins in Fig. 1 to help researchers and practitioners understand DT implementations. In this proposal, a DT is analyzed on five dimensions: i) data flow, ii) interoperability level, iii) system level, iv) the cognitive process it performs, and v) how the DT supports the product lifecycle. Unlike other authors, we do not create a categorization of digital twins such as semantic or cognitive DT, digital shadow, and others. Instead, our five-dimensional analytical framework embraces these definitions and allows the specification of a wide range of DT implementations.

Digital twins can be compared regarding their data flow [29]. Considering the model-asset communication, the data can flow automatically in both directions (two-way), only from the asset to the model (one-way), or not at all (no data flow). Prototypes are usually the first model used to simulate a machine’s behavior and act as a proof of concept of the digital twin. These prototypes (or data models) are generally considered DTs with no automatic communication. Finally, when data flows between a DT and other DTs, this DT is considered interconnected.

The interoperability dimension describes how the DT can interpret the data. In our analytical framework, the Levels of Conceptual Interoperability Model (LCIM) [34] is applied to heterogeneous DT communication. LCIM has been applied to different domains [36, 37] and is a useful tool for understanding the capabilities of the digital twin regarding data manipulation. The syntactical level refers to structured data, while the semantic level corresponds to the description (meaning) of the data. The Asset Administration Shell addresses these two interoperability levels. Pragmatic interoperability demands a common understanding of context. Pragmatics in computation addresses the problem of finding the appropriate communication partner and establishing and maintaining an exchange with it [37], which is primarily performed by orchestration methods. Orchestrating digital twins is, however, an open problem. Besides a protocol for negotiating a task, a public catalog of digital twins with a description of their contracts and policies is necessary. Next, the dynamic interoperability level relates to understanding the effect of the exchanged data on the sender and receiver. This requires a shared state model in which the digital twin is capable of understanding its inner state and the state model of its communication partner.
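One way to read the four levels described above is as a cumulative ladder, where each level presupposes the ones below it. The sketch below illustrates that reading only; it is not part of LCIM [34] or of the framework. The class `InteropFeatures`, its boolean fields, the `NONE` level, and the function `achieved_level` are hypothetical names introduced for illustration, and reducing each level to a single yes/no feature is a simplifying assumption.

```python
from dataclasses import dataclass
from enum import IntEnum


class InteropLevel(IntEnum):
    """Levels discussed in the text, ordered from lowest to highest."""
    NONE = 0        # assumption: placeholder for "no level reached"
    SYNTACTIC = 1   # structured data
    SEMANTIC = 2    # meaning of the data is described
    PRAGMATIC = 3   # common understanding of context
    DYNAMIC = 4     # effect of exchanged data is understood


@dataclass(frozen=True)
class InteropFeatures:
    """Hypothetical checklist of what a DT proposal reports."""
    structured_data: bool = False
    data_semantics: bool = False
    catalog_and_negotiation: bool = False  # catalog plus task negotiation protocol
    shared_state_model: bool = False


def achieved_level(features: InteropFeatures) -> InteropLevel:
    """Return the highest level whose prerequisites are all satisfied."""
    ladder = [
        (InteropLevel.SYNTACTIC, features.structured_data),
        (InteropLevel.SEMANTIC, features.data_semantics),
        (InteropLevel.PRAGMATIC, features.catalog_and_negotiation),
        (InteropLevel.DYNAMIC, features.shared_state_model),
    ]
    level = InteropLevel.NONE
    for candidate, satisfied in ladder:
        if not satisfied:
            break  # a missing lower level blocks all higher ones
        level = candidate
    return level


if __name__ == "__main__":
    aas_like = InteropFeatures(structured_data=True, data_semantics=True)
    print(achieved_level(aas_like).name)
```

Under this reading, a proposal that reports a shared state model but no structured data would still be placed at the lowest rung, which is a deliberate choice of the sketch rather than a claim about LCIM.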

DTs can be seen as digital models of a simple asset or a complex machine. Cognitive DTs represent such complex digital models able to communicate with other (generally simpler) DTs to, for instance, identify anomalies in the product line, search for optimized solutions, and negotiate a solution. The system level dimension considers the granularity of the DT regarding the ecosystem to which it belongs: part, component, subsystem, system, or system-of-systems [38].

The capacity of digital twins to handle complex tasks is related to their cognitive capabilities. Implementing and realizing these capabilities is a complex task, and it is frequently difficult to identify them in DT proposals. Some technologies used in the cognitive processes of DTs can encompass two or more capabilities at the same time (e.g., machine learning techniques and knowledge graphs can be used for the memory of DTs and also for reasoning). In this sense, one strategy for identifying cognition in DTs is to associate operations with the capabilities that make the cognitive process possible. We make use of the Information Processing Theory [28] in our analytical framework to define the four stages (see Note 1) of information processing in a digital twin ecosystem: collect, search, share, and scale. Collect is the process of retrieving and gathering the appropriate data from assets to perform an operation; Search is the process of identifying the appropriate digital twin models of a manufacturing system by searching over the Internet through search mechanisms; Share is the process of sharing relevant information gained during the life cycle of the digital twin of a manufacturing system with a new digital twin in its early design, development, and usage stages; and Scale is the process of sharing knowledge across non-overlapping domains of a manufacturing system.

Finally, digital twins can be analyzed according to life cycle management. In the manufacturing industry, it is common for multiple digital models to exist, each related to a different phase (production design, simulation, planning, production, maintenance, recycling, etc.) of the lifecycle of a product. Unfortunately, these different models and systems manifest a low degree of interoperability, and this creates problems, for instance when different enterprises or branches of an enterprise interact. In this sense, more semantic and cognitive DT proposals are expected to support the integration of models across different lifecycle phases [38]. However, evolving digital models to cover the entire life cycle of the asset is not a trivial task and remains a challenge for DTs. In this dimension, we classify DT proposals according to their coverage of the lifecycle of the product (asset) as full, partial, or single lifecycle. DTs are expected to implement technologies capable of covering the different phases of the lifecycle of a product while maintaining a good level of interoperability.
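The full, partial, or single classification can be sketched as a simple count of the lifecycle phases a proposal covers. This is an illustrative assumption, not a rule stated by the framework: the phase list below copies the examples given above (which end with "etc." and so may be incomplete), and the names `LIFECYCLE_PHASES` and `lifecycle_coverage` are hypothetical.

```python
from typing import Iterable

# Assumption: the example phases named in the text, treated here as the full set.
LIFECYCLE_PHASES = (
    "production design",
    "simulation",
    "planning",
    "production",
    "maintenance",
    "recycling",
)


def lifecycle_coverage(covered_phases: Iterable[str]) -> str:
    """Classify a DT proposal as 'single', 'partial', or 'full' coverage."""
    covered = set(covered_phases)
    unknown = covered - set(LIFECYCLE_PHASES)
    if unknown:
        raise ValueError(f"unknown lifecycle phases: {sorted(unknown)}")
    if not covered:
        raise ValueError("at least one lifecycle phase is required")
    if len(covered) == len(LIFECYCLE_PHASES):
        return "full"
    if len(covered) == 1:
        return "single"
    return "partial"


if __name__ == "__main__":
    print(lifecycle_coverage(["production", "maintenance"]))
```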

4 Discussion

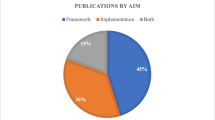

To exemplify how DT proposals can be analyzed with the framework, we compared 12 DT proposals and compiled the results in a radar graph (Fig. 2). The five dimensions were mapped to a five-point scale according to the following values: i) data flow (no data flow = 0; one-way = 1.7; two-way = 3.4; interconnected = 5); ii) system level (part = 1; component = 2; subsystem = 3; system = 4; system-of-systems = 5); iii) cognitive process (collect = 1.25; search = 2.5; share = 3.75; scale = 5); iv) interoperability (syntactic = 1; semantic = 2; pragmatic = 3; dynamic = 4; conceptual = 5); v) lifecycle (single = 1.7; partial = 3.4; full = 5). We show four hybrid DTs [8, 10, 11, 17], four cognitive DTs [3, 7, 9, 23], and four DT proposals [16, 19, 20, 22] whose authors do not specify the type of DT. These works were selected to exemplify how distinct DT proposals can be under the same definition.
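The mapping above can be written as a lookup table that turns a labeled profile into the five values plotted on a radar axis. The sketch below is illustrative only. The numeric values are those listed in the text; the labels are spelled `syntactic` (matching the syntactical level of the interoperability dimension) and `interconnected`; the names `SCALES` and `score_profile` are hypothetical; the example profile is invented and does not correspond to any of the 12 analyzed works. Returning `None` for a dimension a paper does not report is an assumption, since the text does not state how unreported dimensions were plotted.

```python
from typing import Dict, Optional

# Scale values as listed in the text; labels are lower-case keys.
SCALES: Dict[str, Dict[str, float]] = {
    "data_flow": {"no data flow": 0.0, "one-way": 1.7, "two-way": 3.4,
                  "interconnected": 5.0},
    "system_level": {"part": 1.0, "component": 2.0, "subsystem": 3.0,
                     "system": 4.0, "system-of-systems": 5.0},
    "cognitive_process": {"collect": 1.25, "search": 2.5, "share": 3.75,
                          "scale": 5.0},
    "interoperability": {"syntactic": 1.0, "semantic": 2.0, "pragmatic": 3.0,
                         "dynamic": 4.0, "conceptual": 5.0},
    "lifecycle": {"single": 1.7, "partial": 3.4, "full": 5.0},
}


def score_profile(profile: Dict[str, str]) -> Dict[str, Optional[float]]:
    """Map dimension labels to scale values.

    Dimensions missing from `profile` are returned as None
    (assumption: unreported is kept distinct from a score of 0).
    """
    unknown = set(profile) - set(SCALES)
    if unknown:
        raise KeyError(f"unknown dimensions: {sorted(unknown)}")
    scores: Dict[str, Optional[float]] = {}
    for dimension, scale in SCALES.items():
        label = profile.get(dimension)
        scores[dimension] = None if label is None else scale[label]
    return scores


if __name__ == "__main__":
    # Hypothetical profile, not one of the analyzed works.
    example = {"data_flow": "one-way", "cognitive_process": "collect",
               "lifecycle": "single"}
    for dimension, value in score_profile(example).items():
        print(f"{dimension}: {value}")
```

Keeping unreported dimensions as `None` lets a plotting step decide explicitly how to draw them, instead of silently treating a missing description as the lowest score.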

Hybrid DTs (Fig. 2a) are expected to be interconnected, since most authors understand that these DTs should interact with other DTs to perform more complex tasks. Moreover, complex tasks demand a certain level of interoperability and cognition. Although some works present good interoperability and cognitive process levels, this is not the case for all of them. For example, HDT1 presents a solution for the real-time exploration of overlay metal deposition patterns, primarily collecting data from assets and training a knowledge-based DT. Regarding the data flow, however, the DT only collects data from the asset and presents information to engineers. Although the solution could be used in a DT ecosystem, this is not discussed. HDT4 showcases a distributed hybrid DT for predictive maintenance, discussing how a DT can communicate with assets and other DTs. However, the interoperability level is not covered by the authors.

In turn, works proposing cognitive DTs (Fig. 2b) tend to be interconnected and to achieve high levels of interoperability and cognitive processes. More importantly, the majority of the authors discuss more clearly the role of the DT in relation to other systems and twins, i.e. these works are more aware of the system-level dimension. For instance, CDT4 discusses the use of DTs in the context of process industries, where cognition is particularly important due to the continuous, non-linear, and varied nature of the respective production processes. Communication and collaboration among DTs are presented as a way to achieve more complex cognitive processes by using knowledge graphs. CDT3 presents an LSTM neural network-based method for Time-To-Recovery (TTR) prediction within a digital supply chain twin framework to enhance supply chain resilience and improve decision-making under disruption. According to the chart, however, CDT3 shows limited capabilities regarding more complex cognitive processes, interconnectivity with other digital twins, and interoperability levels.

The product lifecycle is not considered in all of these 12 DTs. Lifecycle management is an important characteristic of cognitive digital twins, as stated by [38], but it is not trivial to produce models that cover multiple lifecycle phases, and this is reflected in Fig. 2. Finally, the other DT works (Fig. 2c) are closer to data models (DT1 [16] and DT3 [20]) and digital shadows (DT2 [19] and DT4 [22], which have the same values in all dimensions) according to the definition of [29], since they do not present interoperability and system-level information, perform tasks related to less complex cognitive processes, and do not require communication with other DTs.

It is worth noting how these works present a variety of DT proposals with different characteristics under the same definition (hybrid or cognitive). We argue that it is more useful to understand these proposals using an analytical framework than to create new definitions.

5 Concluding Remarks

The enabling technologies of Industry 5.0 are a set of complex systems that combine technological trends such as digital twins, IoT, collaborative robots, etc. In this paper, we discussed the concept of digital twins with the objective of helping researchers and practitioners to better understand the current variety of DT proposals and their different applications, considering the set of typologies and some implementations found in the literature.

We propose a simple analytical framework to clarify the different DT proposals based not on terminologies but on the characteristics and capabilities of DTs, using five dimensions of analysis: data flow, interoperability, system level, cognitive process, and product lifecycle. The framework embraces the different approaches found in the literature [1, 4, 13, 25, 30, 38] and, being domain-agnostic, allows the specification of a wide range of DT implementations. We argue that the analytical framework can characterize a DT better than the use of terminologies.

It is important to highlight that this research is not intended to exhaust all forms of analysis and characterization of digital twins but to bring some contributions and directions to the current approaches and proposals for digital twins in Industry. In this sense, other researchers can extend the proposed framework by including new dimensions, according to the need of the application domain or new capabilities demanded by Industry 5.0.

As the first validation of the analytical framework, this paper presented a proof of concept from an analysis of a limited set of proposals of DTs extracted from the literature, encompassing 12 articles analyzed under the five dimensions of our framework. A broader study of publications through a systematic review of the subject can provide new insights into the ability of the framework to encompass the variety of existing DTs. Finally, our analysis was based on the DT description of each work but it may be error-prone since some authors are not clear about some aspects we have analyzed.

Notes

1. These stages are strongly motivated by the three processes described in [4].

References

1. Abburu, S., Berre, A.J., Jacoby, M., Roman, D., Stojanovic, L., Stojanovic, N.: Cognitwin - hybrid and cognitive digital twins for the process industry. In: Proceedings - 2020 IEEE International Conference on Engineering, Technology and Innovation, ICE/ITMC 2020 (2020)

2. Adl, A.E.: The cognitive digital twins: vision, architecture framework and categories (2016)

3. Adu-Kankam, K.O., Camarinha-Matos, L.M.: A framework for the integration of IoT components into the household digital twins for energy communities. In: Camarinha-Matos, L.M., Ribeiro, L., Strous, L. (eds.) IFIPIoT 2022. IFIP Advances in Information and Communication Technology, vol. 665, pp. 197–216. Springer, Cham (2022). https://doi.org/10.1007/978-3-031-18872-5_12

4. Al Faruque, M.A., Muthirayan, D., Yu, S.Y., Khargonekar, P.P.: Cognitive digital twin for manufacturing systems. In: Proceedings - Design, Automation and Test in Europe, DATE, vol. 2021-February, pp. 440–445 (2021)

5. Ali, M.I., Patel, P., Breslin, J.G., Harik, R., Sheth, A.: Cognitive digital twins for smart manufacturing. IEEE Intell. Syst. 36(2), 96–100 (2021)

6. Ariesen-Verschuur, N., Verdouw, C., Tekinerdogan, B.: Digital twins in greenhouse horticulture: a review. Comput. Electron. Agric. (2022)

7. Asadi, A.R.: Cognitive ledger project: towards building personal digital twins through cognitive blockchain. In: 2nd International Informatics and Software Engineering Conference, IISEC 2021 (2021)

8. Asadi, M., Fernandez, M., Kashani, M.T., Smith, M.: Machine-learning digital twin of overlay metal deposition for distortion control of panel structures. IFAC-PapersOnLine 54(1), 767–772 (2021)

9. Ashraf, M., Eltawil, A., Ali, I.: Time-to-recovery prediction in a disrupted three-echelon supply chain using LSTM. IFAC-PapersOnLine 55(10), 1319–1324 (2022)

10. Ayyalusamy, V., Sivaneasan, B., Kandasamy, N., Xiao, J., Abidi, K., Chandra, A.: Hybrid digital twin architecture for power system cyber security analysis. In: 2022 IEEE PES Innovative Smart Grid Technologies-Asia, pp. 270–274. IEEE (2022)

11. Azangoo, M., et al.: Hybrid digital twin for process industry using Apros simulation environment. In: 2021 26th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), pp. 01–04. IEEE (2021)

12. Beetz, J.: Semantic digital twins for the built environment-a key facilitator for the European green deal? In: CEUR Workshop Proceedings, vol. 2887 (2021)

13. Boje, C., Kubicki, S., Guerriero, A.: A 4D BIM system architecture for the semantic web. In: Toledo Santos, E., Scheer, S. (eds.) ICCCBE 2020. LNCE, vol. 98, pp. 561–573. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-51295-8_40

14. Boschert, S., Heinrich, C., Rosen, R.: Next generation digital twin. In: Proceedings of TMCE, vol. 2018, pp. 7–11. Las Palmas de Gran Canaria, Spain (2018)

15. Calvo-Bascones, P., Voisin, A., Do, P., Sanz-Bobi, M.A.: A collaborative network of digital twins for anomaly detection applications of complex systems. Snitch digital twin concept. Comput. Ind. 144, 103767 (2023)

16. Cao, Z., Wang, R., Zhou, X., Wen, Y.: Reducio: model reduction for data center predictive digital twins via physics-guided machine learning. In: BuildSys 2022 Proceedings of the 2022 9th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation, pp. 1–10 (2022)

17. Costantini, A., et al.: IoTwins: toward implementation of distributed digital twins in industry 4.0 settings. Computers 11(5), 67 (2022)

18. Diéz, A., De Lara, J.: Semantic digital twins for organizational development. In: CEUR Workshop Proceedings, vol. 2887 (2021)

19. Donkers, A., de Vries, B., Yang, D.: Knowledge discovery approach to understand occupant experience in cross-domain semantic digital twins. In: CEUR Workshop Proceedings, vol. 3213, pp. 77–86 (2022)

20. Dorrer, M.: The prototype of the organizational maturity model’s digital twin of an educational institution. J. Phys.: Conf. Ser. 1691(1) (2020)

21. Douglas, D., Kelly, G., Kassem, M.: BIM, digital twin and cyber-physical systems: crossing and blurring boundaries. arXiv preprint arXiv:2106.11030 (2021)

22. Dröder, K., Bobka, P., Germann, T., Gabriel, F., Dietrich, F.: A machine learning-enhanced digital twin approach for human-robot-collaboration. Procedia CIRP 76, 187–192 (2018)

23. Eirinakis, P., et al.: Enhancing cognition for digital twins. In: Proceedings - 2020 IEEE International Conference on Engineering, Technology and Innovation (2020)

24. Eirinakis, P., et al.: Cognitive digital twins for resilience in production: a conceptual framework. Inf. (Switz.) 13(1), 33 (2022)

25. Falekas, G., Karlis, A.: Digital twin in electrical machine control and predictive maintenance: state-of-the-art and future prospects. Energies 14(18), 5933 (2021)

26. Hui, Y., et al.: Collaboration as a service: Digital-twin-enabled collaborative and distributed autonomous driving. IEEE Internet Things J. 9(19), 18607–18619 (2022)

27. Johnson-Laird, P.N.: Mental models and human reasoning. Proc. Natl. Acad. Sci. 107(43), 18243–18250 (2010)

28. Kmetz, J.L.: The Information Processing Theory of Organization: Managing Technology Accession in Complex Systems. Routledge (2018)

29. Kritzinger, W., Karner, M., Traar, G., Henjes, J., Sihn, W.: Digital twin in manufacturing: a categorical literature review and classification. IFAC-PapersOnLine 51(11), 1016–1022 (2018)

30. Kümpel, M., Mueller, C.A., Beetz, M.: Semantic digital twins for retail logistics. In: Freitag, M., Kotzab, H., Megow, N. (eds.) Dynamics in Logistics, pp. 129–153. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-88662-2_7

31. Lu, J., Zheng, X., Gharaei, A., Kalaboukas, K., Kiritsis, D.: Cognitive twins for supporting decision-makings of internet of things systems. In: Wang, L., Majstorovic, V.D., Mourtzis, D., Carpanzano, E., Moroni, G., Galantucci, L.M. (eds.) Proceedings of 5th International Conference on the Industry 4.0 Model for Advanced Manufacturing. LNME, pp. 105–115. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-46212-3_7

32. Maddikunta, P.K.R., et al.: Industry 5.0: a survey on enabling technologies and potential applications. J. Ind. Inf. Integr. 26, 100257 (2022)

33. McDermott, K.B., Roediger, H.L.: Memory (encoding, storage, retrieval). General Psychology FA2018. Noba Project: Milwaukie, OR, pp. 117–153 (2018)

34. Turnitsa, C.: Extending the levels of conceptual interoperability model. In: Proceedings IEEE Summer Computer Simulation Conference. IEEE CS Press (2005)

35. Verdouw, C., Tekinerdogan, B., Beulens, A., Wolfert, S.: Digital twins in farming systems. Agric. Syst. 189, 103046 (2021)

36. Wang, W., Tolk, A., Wang, W.: The levels of conceptual interoperability model: applying systems engineering principles to M&S. In: Proceedings of the 2009 Spring Simulation Multiconference, pp. 1–9 (2009)

37. Wassermann, E., Fay, A.: Interoperability rules for heterogenous multi-agent systems: levels of conceptual interoperability model applied for multi-agent systems. In: 2017 IEEE 15th International Conference on Industrial Informatics (INDIN), pp. 89–95. IEEE (2017)

38. Zheng, X., Lu, J., Kiritsis, D.: The emergence of cognitive digital twin: vision, challenges and opportunities. Int. J. Prod. Res. 60(24), 7610–7632 (2022)

Acknowledgments

The project TRF4p0-Transformer4.0 leading to this work is co-financed by the ERDF, through COMPETE-POCI and by the Foundation for Science and Technology under the MIT Portugal Program under POCI-01-0247-FEDER-045926.

Copyright information

© 2023 IFIP International Federation for Information Processing

About this paper

Cite this paper

Mendonça, F.M., de Souza, J.F., Soares, A.L. (2023). Making Sense of Digital Twins: An Analytical Framework. In: Camarinha-Matos, L.M., Boucher, X., Ortiz, A. (eds) Collaborative Networks in Digitalization and Society 5.0. PRO-VE 2023. IFIP Advances in Information and Communication Technology, vol 688. Springer, Cham. https://doi.org/10.1007/978-3-031-42622-3_53

DOI: https://doi.org/10.1007/978-3-031-42622-3_53

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-42621-6

Online ISBN: 978-3-031-42622-3

eBook Packages: Computer Science (R0)