Abstract

Public health is an increasingly analytical field, and as the volume of available literature grows, drawing evidence-based conclusions requires specific knowledge and skills. This chapter discusses the importance of evidence-based decision-making and the critical considerations and limitations of conducting systematic reviews and meta-analyses. It also covers the quality assessment of articles, step-by-step procedures for conducting systematic reviews and meta-analyses, and practical examples. Specifically, the objectives of this chapter are (i) understanding the importance of evidence-based decision-making in public health; (ii) acquiring knowledge about the biases, complexities, and limitations involved in conducting systematic reviews and meta-analyses; and (iii) assimilating the analytical approaches involved in conducting a meta-analysis. The most important topics of this chapter are the differences between advocacy and analysis, quality assessment of peer-reviewed and grey literature, publication bias and the winner’s curse, and the implications and ethical challenges of using emerging artificial intelligence software for evidence-based decision-making in public health. The information in this chapter can support evidence-based decision-making in public health surveillance programs, disease prevention, assessment of health status, health protection and promotion, emergency response and preparedness, regulatory regimes, and the design of public health education programs.



Learning Objectives

- Assimilating the importance of evidence-based decision-making in public health
- Learning the various biases, complexities, and limitations involved in conducting systematic reviews and meta-analyses
- Gaining knowledge about analytical approaches for conducting a meta-analysis

1 Introduction: Evidence-Based Decision-Making

There are major differences between advocacy and analysis when drawing conclusions from the literature. An advocate has a particular cause or policy in mind and, starting from that predetermined notion, collects information from the literature to support it [1]. For example, an advocate representing an organization that manufactures conjugated linolenic acid supplements might identify studies highlighting the positive health effects of those supplements. Of course, this approach may not provide a complete picture of the supplement’s health effects, because studies showing no benefit, or showing negative health consequences of conjugated linolenic acid intake, might not be collected. In contrast, evidence-based decision-making is derived from a systematic analysis of the literature [2]. An analyst considers positive results, negative results, and results that show no significant difference. An analyst can reach an evidence-based decision by accounting for publication bias and the winner’s curse, applying inclusion and exclusion criteria to existing studies, and using analytical tools.

Evidence-based decision-making starts with a review of the literature, and various search engines can be used to identify existing studies. Although common search engines such as Google Scholar can identify many existing studies, specialized databases broaden the reach of a literature review. PubMed, a free search engine maintained by the US National Library of Medicine at the National Institutes of Health, is arguably the best source for identifying peer-reviewed medical and public health research articles. Many other databases are valuable as well. For example, AGRICOLA, maintained by the National Agricultural Library of the US Department of Agriculture, is a great resource for identifying articles related to nutrition and food systems. AGRIS, maintained by the Food and Agriculture Organization of the United Nations, can be used to identify literature on nutrition, public health, and global food security, and is also a great resource for research articles published in languages other than English. Ovid, Web of Science, the Cochrane Library, and Scopus are also very common resources for systematic reviews and meta-analyses. A successful literature review requires identifying appropriate keywords and maintaining a search record. More complex searches can use Boolean operators (such as AND, OR, NOT, or AND NOT) as conjunctions to combine and exclude keywords [3]. These operators filter out studies that are irrelevant to the search topic, making the search records more manageable to review and evaluate.
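The Boolean logic described above can be illustrated with a short Python sketch. The helper names `quote` and `build_query` are hypothetical, and the output follows a generic syntax (uppercase AND, OR, NOT with parentheses and quoted phrases); each database, including PubMed, has its own field tags and syntax rules, so the string would need adapting before use. Saving each generated string, together with the database and date searched, is one simple way to keep the search record mentioned above.

```python
from typing import List, Optional


def quote(term: str) -> str:
    """Wrap multi-word phrases in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(concept_groups: List[List[str]], exclude: Optional[List[str]] = None) -> str:
    """Combine keyword groups into one Boolean search string (hypothetical helper).

    Synonyms inside a group are joined with OR, the groups themselves are
    joined with AND, and any excluded terms are appended after NOT.
    """
    blocks = ["(" + " OR ".join(quote(t) for t in group) + ")" for group in concept_groups]
    query = " AND ".join(blocks)
    if exclude:
        query += " NOT (" + " OR ".join(quote(t) for t in exclude) + ")"
    return query


if __name__ == "__main__":
    # Hypothetical concepts for a search on menu labeling and physical activity.
    print(build_query(
        [["calorie labeling", "menu labeling"], ["physical activity", "exercise"]],
        exclude=["animal"],
    ))
```

Grouping synonyms with OR before joining concepts with AND keeps the search broad within each concept while still requiring every concept to appear.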

2 Limitations and Important Considerations for Systematic Review and Meta-Analysis

A successful and comprehensive literature review requires identifying all types of studies associated with a search topic, including the material often called “grey literature.” In contrast to widely disseminated published literature, grey publications are typically not peer-reviewed and may contain results of studies that are not statistically significant. Such negative-outcome studies are very important for controlling publication bias, as discussed below. Unpublished grey literature can also be a good source of pilot projects with very small sample sizes (because of high costs or difficulty recruiting subjects) and of highly innovative studies [4,5,6,7].

Box 16.1 Evidence-Based Decision Making in Public Health

An advocate has a particular cause or policy in mind and, based on that predetermined notion, collects information from the literature. This can lead to major biases in decisions based on the literature. In contrast, an analyst uses a systematic procedure to collect information from the literature, conducts quality assessment, considers both positive and negative results, and, after controlling for biases, summarizes and interprets the results in a systematic review and/or meta-analysis. This approach leads to evidence-based decisions and can be completed by individuals with sufficient training who are intelligent consumers of the literature.

Notably, grey publications such as annual reports, project reports, working papers, government publications, and white papers might not be peer-reviewed, so including them in a systematic review should be done cautiously, after carefully considering the scientific merit of each study. Some researchers place a very heavy emphasis on grey literature; for example, Piggott-McKellar et al. completed a systematic review of only the grey literature associated with climate change [8]. In certain fields of study, information disclosed in patents can also be treated as grey literature and used in a systematic review [9]. In those cases, it is important to discuss information derived from patents in a way that does not violate patent and copyright laws.

A very important consideration during a systematic review is publication bias, which should not be confused with biases in selecting samples and participants in epidemiological studies. According to “A Dictionary of Epidemiology” [10], publication bias is defined as “an editorial predilection for publishing particular findings, e.g., positive results, which leads to the failure of authors to submit negative findings for publication.” This is particularly common among researchers who draw associations from large data sets and may lose interest in pursuing a hypothesis that shows no statistically significant difference. As the definition makes clear, publication bias can also arise systematically when journal editors and peer reviewers show little interest in publishing studies with negative results [11]. Omitting negative results from a systematic review and meta-analysis can substantially affect the outcome of the analysis; some studies indicate that as much as 45% of an observed association could be attributed to publication bias [11, 12]. Therefore, when a negative study is identified, it is very important to give it appropriate weight. Publication bias should also be discussed when interpreting both systematic reviews and meta-analyses.
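To make the effect of publication bias concrete, the following Python sketch simulates a deliberately extreme scenario: every study estimates the same small true effect, but only positive, statistically significant results are "published." The true effect, standard error, and number of studies are arbitrary illustrative values, not data from any cited study, and real publication processes are rarely this strict. Averaging only the published studies substantially overstates the true effect, which is why identifying negative studies matters.

```python
import random
import statistics


def simulate_publication_bias(true_effect=0.1, se=0.15, n_studies=2000, seed=1):
    """Simulate many studies of the same true effect and 'publish' only those
    that are positive and significant (z > 1.96).

    Returns the mean estimate across all studies, the mean across published
    studies, and the number of published studies.
    """
    rng = random.Random(seed)
    # Each study's estimate = true effect + sampling error with standard error `se`.
    estimates = [rng.gauss(true_effect, se) for _ in range(n_studies)]
    # Crude selection model: only positive, significant results reach print.
    published = [e for e in estimates if e / se > 1.96]
    return statistics.mean(estimates), statistics.mean(published), len(published)


if __name__ == "__main__":
    all_mean, published_mean, n_published = simulate_publication_bias()
    print(f"mean of all studies:       {all_mean:.3f}")
    print(f"mean of published studies: {published_mean:.3f} (n = {n_published})")
```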

When conducting and interpreting systematic reviews and meta-analyses, it is also important to consider the winner’s curse. The term comes from competitive auctions, in which the “winner” of an item may have overpaid for it. In scientific settings, the “winner,” i.e., the researcher who first identifies a novel association, may report striking results that later studies show to be weaker or not significant. Because the exposure and outcome of interest have already been defined by the original work, follow-up and confirmatory studies may have larger sample sizes and thus estimate the association more accurately than the original study [11]. This is a concern for genomic research, health policy studies, and randomized and observational public health investigations alike. As such, considering the winner’s curse is an important aspect of conducting and interpreting systematic reviews and meta-analyses. As discussed later in this chapter, a sensitivity analysis comparing the overall effect with and without the original study can be used to investigate the winner’s curse. Careful examination of the normal quantile–quantile plot (QQ plot) is another important diagnostic tool for detecting its potential effect.
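The sensitivity analysis and QQ plot described above can be sketched in Python. The sketch assumes the original study is listed first, uses simple inverse-variance (fixed effect) pooling, and pairs standardized deviations with normal quantiles using (i + 0.5)/n plotting positions. The data are invented for illustration, the function names are hypothetical, and a full analysis would normally use a dedicated meta-analysis package.

```python
import math
from statistics import NormalDist


def pooled_fixed(effects, ses):
    """Inverse-variance (fixed effect) pooled estimate and its standard error."""
    weights = [1 / s ** 2 for s in ses]
    estimate = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return estimate, math.sqrt(1 / sum(weights))


def with_and_without_original(effects, ses):
    """Pool all studies, then pool again without the first (original) study."""
    return pooled_fixed(effects, ses), pooled_fixed(effects[1:], ses[1:])


def normal_qq_points(effects, ses):
    """Pairs of (theoretical normal quantile, observed standardized deviation).

    Each study's deviation from the pooled estimate is divided by its standard
    error; points lying far from the identity line roughly flag studies, such
    as an exaggerated original report, that do not fit the rest.
    """
    pooled, _ = pooled_fixed(effects, ses)
    observed = sorted((y - pooled) / s for y, s in zip(effects, ses))
    n = len(observed)
    theoretical = [NormalDist().inv_cdf((i + 0.5) / n) for i in range(n)]
    return list(zip(theoretical, observed))


# Hypothetical data: a small original study followed by three larger replications.
effects = [0.60, 0.15, 0.10, 0.20]
ses = [0.25, 0.10, 0.08, 0.12]

(with_est, _), (without_est, _) = with_and_without_original(effects, ses)
print(f"pooled with original study:    {with_est:.3f}")
print(f"pooled without original study: {without_est:.3f}")
for theo, obs in normal_qq_points(effects, ses):
    print(f"{theo:+.2f}  {obs:+.2f}")
```

A noticeable drop in the pooled estimate once the original study is removed, together with that study sitting at the far end of the QQ points, is the pattern one would expect under a winner's curse.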

Regarding writing and summarizing material, it is worth noting that artificial intelligence (AI) software such as ChatGPT has progressed greatly in recent years. The use of these applications, and the ethical considerations surrounding them, are still in their infancy. Although AI software could ultimately help evidence-based decision-making, this technology should be used responsibly and ethically. Just as a researcher’s work must be credited by citing it properly, material generated by AI, or work that received assistance from or was derived from AI software, also requires proper citation [13]. Existing similarity-index software, used properly, can help check whether a written summary gives proper credit to other researchers in the field, but at present such software cannot detect work generated by AI applications. However, emerging AI software is becoming available to determine whether an essay was generated by another AI [14, 15].

Box 16.2 Biases and Important Considerations for Systematic Review and Meta-Analysis

For a successful evidence-based systematic review and meta-analysis, grey literature should be considered in addition to peer-reviewed studies. Although many forms of grey literature are not peer-reviewed, and their inclusion in the final systematic review and meta-analysis should be decided carefully after quality assessment, these sources can be of great importance for minimizing the risk of publication bias. Publication bias arises because studies with negative results may not be selected for publication by journal editorial teams or by the researchers themselves, so the published literature tends to overrepresent positive and significant results and underrepresent research with negative outcomes. Considering the winner’s curse is also an important aspect of a successful systematic review and meta-analysis; it can be detected using diagnostic tools such as the quantile–quantile plot (QQ plot).

3 Overview of Step-by-Step Procedures

3.1 Criteria for Inclusion and Exclusion of Studies

In addition to concerns about sample variability, a single study has limitations related to its sample size and the probability of false-negative results. Traditional narrative review articles also have major limitations because they are subjective and prone to errors and biases [16]. In contrast, a well-conducted systematic review and meta-analysis can yield a more precise estimate of treatment effects and reduce the probability of false-negative results.

However, the outcome of a systematic review and meta-analysis could be compromised if “raw materials” are derived from inadequate methodological quality.

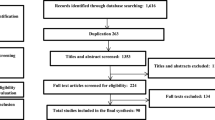

A systematic review and meta-analysis should be very carefully planned. In addition to the considerations discussed earlier for selecting studies, it is important to have robust, transparent, and repeatable inclusion and exclusion criteria and quality assessment procedures [17]. The results of the search and selection process are usually summarized in a PRISMA flow chart. Figure 16.1 is an example of a PRISMA flow chart from a study of racial disparities in respiratory infections among US children [18]. Researchers can draw this flow chart themselves or use a diagram template obtained from reputable sources such as the Cochrane Collaboration and the Cochrane Library [19].

PRISMA flow chart illustrating the article search and inclusion process [18] (Reprinted with permission from the authors)

Systematic reviews and meta-analyses can be completed for both randomized and observational studies. To ensure that biases are controlled in the final analysis, robust inclusion and exclusion criteria are needed for the quality assessment of studies. These criteria can vary depending on the type of studies and outcomes selected and on the extent and availability of literature on the meta-analysis topic, and they need to be predefined. An example of well-defined a priori inclusion/exclusion criteria can be found in the methods section of Desai et al. [20], who applied their criteria to 751 articles and selected 35 studies for their final systematic review.

Although various methodologies can be used to develop inclusion and exclusion criteria, Meline describes a stepwise process for selecting studies for a systematic review [21]: (i) applying the inclusion/exclusion criteria to the titles and abstracts of the identified studies, (ii) eliminating studies that clearly do not meet one or more inclusion criteria or that meet one or more exclusion criteria, (iii) obtaining the full text of the remaining studies, (iv) further evaluating the full texts for inclusion and exclusion, (v) including studies that meet all inclusion criteria and none of the exclusion criteria, (vi) excluding studies during the systematic review only for justified reasons (such as quality concerns), and (vii) accepting all remaining studies for the systematic review.
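The screening steps above can be expressed as a small Python sketch that also produces the counts usually reported in a PRISMA flow chart. All names, criteria, and records are hypothetical. Criteria return None when the needed information is missing, so a study is dropped at the title/abstract stage only when it clearly fails, in the spirit of step (ii). Step (vi), excluding studies for justified quality reasons, is left to human judgment and is not modeled.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A criterion inspects a record's known attributes and returns True or False,
# or None when the information is unavailable (e.g., not stated in the abstract).
Criterion = Callable[[Dict], Optional[bool]]


def clearly_fails(attrs: Dict, inclusion: List[Criterion], exclusion: List[Criterion]) -> bool:
    """True only when an inclusion criterion is definitely unmet or an
    exclusion criterion is definitely met; unknowns are not held against it."""
    return (any(c(attrs) is False for c in inclusion)
            or any(c(attrs) is True for c in exclusion))


def screen(records: List[Dict], inclusion: List[Criterion],
           exclusion: List[Criterion]) -> Tuple[List[Dict], Dict[str, int]]:
    counts = {"identified": len(records)}
    unique = list({r["id"]: r for r in records}.values())  # drop duplicate records
    counts["after_duplicates_removed"] = len(unique)

    # Steps (i)-(ii): screen titles/abstracts and drop clear failures.
    full_text = [r for r in unique if not clearly_fails(r["abstract"], inclusion, exclusion)]
    counts["excluded_at_abstract"] = len(unique) - len(full_text)

    # Steps (iii)-(v): on the full text, keep only records that meet every
    # inclusion criterion and no exclusion criterion.
    included = [r for r in full_text
                if all(c(r["full_text"]) is True for c in inclusion)
                and not any(c(r["full_text"]) is True for c in exclusion)]
    counts["excluded_at_full_text"] = len(full_text) - len(included)
    counts["included"] = len(included)
    return included, counts


# Hypothetical criteria.
def adult(attrs):
    return attrs["age"] >= 18 if "age" in attrs else None


def reports_hr(attrs):
    return attrs.get("reports_hr")


def animal_study(attrs):
    return attrs.get("animal")


# Hypothetical records; "a" appears twice because two databases returned it.
records = [
    {"id": "a", "abstract": {"age": 40}, "full_text": {"age": 40, "reports_hr": True, "animal": False}},
    {"id": "a", "abstract": {"age": 40}, "full_text": {"age": 40, "reports_hr": True, "animal": False}},
    {"id": "b", "abstract": {"animal": True}, "full_text": {"animal": True}},
    {"id": "c", "abstract": {}, "full_text": {"age": 50, "reports_hr": False}},
]
included, counts = screen(records, inclusion=[adult, reports_hr], exclusion=[animal_study])
print(counts)
```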

3.2 Outcome Measures and Displaying Results

After a successful systematic review, it is important to choose the outcome measure(s) to be studied. Outcome measures can be binary data, measures of association, continuous variables, or time-to-event data. They should be easy to interpret and consistent across all selected studies. It is also important to consider the degree of heterogeneity of these outcome measures between the selected studies, and to consider the types of participants and interventions in each study to ensure that a formal meta-analysis of the data is appropriate. A meta-analysis may have primary and secondary outcomes, and the analysis can be done for the main data and for subgroups [22]. Once the outcome measures are identified, they need to be extracted from each study along with the sample size associated with each measurement [23]. Because a meta-analysis aims to calculate the overall (combined) effect of several studies, it uses a weighted mean rather than a simple mean, giving more weight to some studies and less to others based on the size (precision) of each study [24, 25]. Although these calculations can be done manually or in an Excel spreadsheet, many software applications are available for data management and meta-analysis. Cochrane reviews are typically considered the gold standard of quality for systematic reviews and meta-analyses, and the use of software from the Cochrane Library is recommended [26]. Some software, including the Cochrane software, allows users to prepare a protocol that assists in searching for and selecting studies and extracting data [26, 27]. Statistical packages such as SPSS® and programming environments such as SAS®, Stata®, R®, and Python®, to name a few, can also conduct a meta-analysis.
Once the weighted means are calculated, results are typically illustrated in a forest plot. Figure 16.2 is an example of a forest plot for continuous variables.

Forest plot for the effect of physical activity label on calorie reduction by study setting [28] (Reprinted with permission from the authors)
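The weighted mean described above is commonly computed with inverse-variance weights. The Python sketch below assumes that approach (weights of 1/SE²) and uses made-up effect sizes; it prints each study's estimate, 95% confidence interval, and relative weight, which are the same quantities a forest plot displays graphically. Dedicated software such as the Cochrane tools or the packages named above would normally be used in practice.

```python
import math


def inverse_variance_pool(effects, ses, z=1.96):
    """Fixed effect inverse-variance pooling.

    Each study is weighted by 1 / SE^2, so more precise studies (usually the
    larger ones) count more. Returns the pooled estimate, its 95% CI, and the
    relative weight of each study.
    """
    weights = [1.0 / se ** 2 for se in ses]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total
    pooled_se = math.sqrt(1.0 / total)
    ci = (pooled - z * pooled_se, pooled + z * pooled_se)
    return pooled, ci, [w / total for w in weights]


# Hypothetical standardized mean differences and standard errors.
effects = [-0.10, 0.05, -0.02]
ses = [0.10, 0.08, 0.05]

pooled, (lo, hi), rel_weights = inverse_variance_pool(effects, ses)
# A plain-text stand-in for the rows of a forest plot.
for i, (y, se, w) in enumerate(zip(effects, ses, rel_weights), start=1):
    print(f"Study {i}: {y:+.2f} [{y - 1.96 * se:+.2f}, {y + 1.96 * se:+.2f}]  weight {w:.1%}")
print(f"Pooled:  {pooled:+.2f} [{lo:+.2f}, {hi:+.2f}]")
```

Note how the third study, with the smallest standard error, dominates the pooled estimate, which a simple unweighted mean would ignore.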

However, meta-analysis results do not have to be presented in a forest plot; for example, Fig. 16.3 summarizes a dose–response meta-analysis. A very common and important decision when conducting a meta-analysis is choosing between fixed effects and random effects models. A fixed effects model generally assumes that there is one true effect size shared by all included studies. In contrast, a random effects model allows the true effect to vary between studies [24, 25, 30, 31].

Non-linear dose-response plots on the relationship between body mass index (BMI) and mortality in stroke patients. The association of BMI with all-cause mortality (a). The association of BMI with stroke specific mortality (b). Continuous black and medium-dashed orange-red lines represent non-linear and linear plots. 95% confidence intervals are shown by long-dashed maroon lines. Vertical axes are based on the log-scale of the hazard ratios [29]. (Used with permission from the publisher; permission conveyed through Copyright Clearance Center, Inc.)
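The difference between fixed and random effects models shows up in how studies are weighted. The Python sketch below uses the DerSimonian–Laird method, one common (but not the only) estimator of the between-study variance τ² in a random effects model; the data are hypothetical. When τ² is zero the two models coincide, and when studies disagree the random effects standard error, and therefore the confidence interval, becomes wider.

```python
import math


def dersimonian_laird(effects, ses):
    """Fixed effect and DerSimonian-Laird random effects pooled estimates.

    Returns (fixed estimate, random estimate, tau^2, random-effects SE), where
    tau^2 is the estimated between-study variance of the true effects.
    """
    w = [1.0 / s ** 2 for s in ses]
    sw = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sw
    # Cochran's Q: weighted squared deviations from the fixed effect estimate.
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))
    df = len(effects) - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)  # truncated at zero
    # Random effects weights add tau^2 to every study's within-study variance.
    w_star = [1.0 / (s ** 2 + tau2) for s in ses]
    random_est = sum(wi * y for wi, y in zip(w_star, effects)) / sum(w_star)
    return fixed, random_est, tau2, math.sqrt(1.0 / sum(w_star))


# Hypothetical, clearly heterogeneous studies with equal standard errors.
fixed, rand, tau2, rand_se = dersimonian_laird([0.1, 0.5, -0.2, 0.3], [0.1] * 4)
print(f"fixed = {fixed:.3f}, random = {rand:.3f}, tau^2 = {tau2:.4f}, SE = {rand_se:.3f}")
```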

3.3 Validity of Meta-Analysis and Conduct of Sensitivity Analysis

Sensitivity analysis can be defined as “a method to determine the robustness of an assessment by examining the extent to which results are affected by changes in methods, models, values of unmeasured variables, or assumptions [32, 33].” Sensitivity analysis can be either qualitative or quantitative. For example, when formulating a research question before starting a literature review, one can ask “what if” questions (qualitative sensitivity analysis) to explore variations of the research question, since this step can determine the outcome and the analytics needed for a successful systematic review and meta-analysis [34]. However, sensitivity analysis typically refers to quantitative, analytical approaches for examining the robustness of a study. Sensitivity analysis is an excellent option for detecting publication bias, as discussed earlier. Some studies suggest computing worst-case meta-analytic point estimates by conducting a standard meta-analysis of only the nonsignificant and negative studies, to explore the impact of these potentially underrepresented studies on the overall meta-analysis [35]. Funnel plots and QQ plots are also very common and useful diagnostic tools for examining the validity of meta-analyses [32]. These diagnostic tests and sensitivity analyses are important for determining the effects of outliers, missing data, publication bias, the winner’s curse, or baseline imbalances on a meta-analysis’s internal and external validity [36]. They are also very important for identifying unexplained variation among studies (heterogeneity), which can significantly threaten a meta-analysis’s internal and external validity [37].
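Funnel plot asymmetry is often checked formally with Egger's regression test, which is also used in Example 2. The following Python sketch is a minimal implementation of the usual formulation (each study's standardized effect regressed on its precision); the data are invented. It reports a t statistic rather than a p-value because the Python standard library has no t distribution; the statistic would be compared with a t distribution on n − 2 degrees of freedom (for example, with `scipy.stats.t.sf`).

```python
import math


def egger_test(effects, ses):
    """Egger's regression test for funnel plot asymmetry.

    Regresses each study's standard normal deviate (effect / SE) on its
    precision (1 / SE) by ordinary least squares. An intercept far from zero
    suggests small-study effects such as publication bias. Returns the
    intercept, its standard error, the t statistic, and degrees of freedom.
    """
    n = len(effects)
    if n < 3:
        raise ValueError("Egger's test needs at least three studies")
    x = [1.0 / s for s in ses]
    y = [e / s for e, s in zip(effects, ses)]
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sigma2 = sum(r ** 2 for r in residuals) / (n - 2)
    se_intercept = math.sqrt(sigma2 * (1.0 / n + mx ** 2 / sxx))
    return intercept, se_intercept, intercept / se_intercept, n - 2


# Hypothetical data in which the smaller (less precise) studies report larger effects.
effects = [0.10, 0.12, 0.25, 0.40, 0.55]
ses = [0.05, 0.10, 0.20, 0.30, 0.40]
b0, se_b0, t, df = egger_test(effects, ses)
print(f"intercept = {b0:.2f} (SE {se_b0:.2f}), t = {t:.2f} on {df} df")
```

With only a handful of studies the test has little power, so a nonsignificant result is weak evidence that publication bias is absent.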

Overall, a meta-analysis could be evaluated and reviewed for merit and significance by reviewing study questions (clear articulation of objectives, relevance to public health), quality of literature search, data abstraction procedure, evaluation of results and graphical displays, control measures for preventing or minimizing the publication bias, applicability of final results, and the funding source [38, 39]. These sections should be carefully reviewed for choice of analytical methods, risk of biases, inconsistencies, imprecision, indirectness, reporting biases, and confidence in the estimates [40].

4 Practical Examples and Interpretation of Relevant Studies

4.1 Example 1—Physical Activity Equivalent Labeling Versus Calorie Labeling

Several strategies are used in different countries to reduce the rates of overweight and obesity. One of these approaches is nutrition labeling, a policy in which information such as the calorie content of menu items (calorie labeling) is provided to help consumers make healthier food choices. Although this policy contributes to changes in consumers’ food selection and eating behaviors, its effectiveness is controversial, as it sometimes seems to affect eating behaviors in the opposite direction to that intended. An alternative, potentially more effective type of nutrition labeling proposed recently is physical activity equivalent labeling, which shows the amount of physical activity needed to burn the calories in each food item. Seyedhamzeh et al. [28] designed a meta-analysis to compare the effectiveness of physical activity equivalent labeling with calorie-only labeling in changing consumers’ eating behavior. They searched several databases, including PubMed, Scopus, Web of Science, AGRIS, the Cochrane Library, and Google Scholar, to identify scientific papers published between January 2000 and October 2016 that reported findings using either or both labeling methods. The reference lists of these articles were also examined to avoid missing relevant publications, and no language restrictions were applied. Any discrepancies during data extraction were resolved by consensus. The Cochrane risk-of-bias tool could not be used for quality assessment because most of the included studies were performed in non-real-world settings; therefore, the authors designed their own tool and categorized the studies into three groups: high risk of bias, low risk of bias, and unclear. Heterogeneity between studies was evaluated using Cochran’s Q test and the I² statistic.
Heterogeneity was classified as low, moderate, or severe for I² values of less than 25%, between 25% and 50%, and over 50%, respectively. A fixed effects model was used to pool the means and standard deviations of the included studies and to calculate a weighted mean difference in calorie purchases between those exposed to physical activity labeling and those exposed to calorie-only labeling. Consumers exposed to physical activity labeling, rather than calorie-only labeling, ordered slightly fewer calories, but this reduction was not statistically significant whether physical activity was shown in minutes [standardized mean difference (SMD): −0.03; 95% confidence interval (CI): −0.13 to 0.07] or in miles [SMD: −0.02; 95% CI: −0.13 to 0.09]. A subgroup analysis based on quality assessment showed that, in studies with a low risk of bias, calories ordered under physical activity labeling were nonsignificantly lower than under calorie labeling [SMD: −0.04; 95% CI: −0.15 to 0.07]. The authors also found that, compared with calorie labeling, physical activity labeling was associated with an average decrease of 65 calories in food choices (Fig. 16.2). The study concluded that there was no significant difference in calories ordered by consumers exposed to physical activity labeling versus calorie labeling.
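The reported results can be checked with a little arithmetic. The Python sketch below, which is not the authors' analysis, recovers the approximate standard error and p-value implied by each reported SMD and 95% CI (assuming a normal approximation and symmetric intervals, and noting that the published values are rounded), and classifies I² with the thresholds above. The Q value used for the I² example is hypothetical, since no Q statistic is quoted here.

```python
from statistics import NormalDist


def z_test_from_ci(estimate, lower, upper, level=0.95):
    """Recover the standard error, z statistic, and two-sided p-value from a
    reported estimate and its symmetric confidence interval."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% CI
    se = (upper - lower) / (2 * z_crit)
    z = estimate / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return se, z, p


def i_squared(q, df):
    """Higgins' I^2 (%) from Cochran's Q and its degrees of freedom."""
    return max(0.0, (q - df) / q) * 100 if q > 0 else 0.0


def classify_heterogeneity(i2):
    """Thresholds used in Example 1: <25% low, 25-50% moderate, >50% severe."""
    if i2 < 25:
        return "low"
    if i2 <= 50:
        return "moderate"
    return "severe"


# Reported SMDs and 95% CIs from Example 1 (minutes and miles).
for label, smd, lo, hi in [("minutes", -0.03, -0.13, 0.07), ("miles", -0.02, -0.13, 0.09)]:
    se, z, p = z_test_from_ci(smd, lo, hi)
    print(f"{label}: SE = {se:.3f}, z = {z:.2f}, p = {p:.2f}")

# Hypothetical Q statistic for 5 studies (df = 4), for illustration only.
print(classify_heterogeneity(i_squared(q=12.0, df=4)))
```

Both back-calculated p-values are well above 0.05, consistent with the nonsignificant differences reported.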

4.2 Example 2—BMI Impact on Stroke and All-Cause Mortality

The “obesity paradox” centers on the apparently protective role of fat in individuals with metabolic conditions. However, it was unclear whether this effect, also known as reverse epidemiology, applies to all-cause mortality and disease-specific mortality in patients with that particular disease. Therefore, Bagheri et al. [29] designed a dose–response meta-analysis of studies examining the relationship between body mass index (BMI) and both all-cause and stroke-specific mortality in individuals who have had a stroke. PubMed, Ovid, and Scopus were systematically searched for studies published in English before 7 July 2014, and a predefined protocol was followed to select studies. The protocol included studies with (1) an adult population (aged >18 years), (2) BMI measured as the exposure and mortality as the outcome in patients with stroke, (3) findings reported as relative risks (RRs) or hazard ratios (HRs), and (4) the number of cases and controls reported for each BMI class. To include all possible data, the authors of some studies were contacted and asked for additional information. Two authors independently extracted the data, and a third author resolved disagreements by consensus. Heterogeneity was assessed and classified using the method described in Example 1. Based on this protocol, the selected studies on (1) all-cause mortality were eight cohorts comprising 20,807 deaths among 95,651 stroke patients and (2) stroke-specific mortality were nine studies comprising 8087 deaths among 286,270 patients. The authors evaluated the quality of each study on selection, comparability, and outcome using the Newcastle–Ottawa Scale. A two-stage hierarchical regression model was used to estimate the nonlinear dose–response association across all BMI categories.
Spline transformations were used to estimate the dose–response relationship, taking into account both within- and between-study variances. To assess this relationship, the authors used random-effects dose–response models based on the logarithms of HRs and their CIs, together with the numbers of deaths and participants across BMI categories. A linear relationship was also modeled for comparison (Fig. 16.3). The findings revealed a statistically significant nonlinear relationship (P < 0.0001) between BMI and all-cause mortality following stroke. For this analysis, the authors reported a correlation matrix estimate of 0.72 and estimated between-study standard deviations (SDs) of 0.03 and 0.01. BMI values below 25 kg/m² were associated with a protective effect on all-cause mortality, with risk decreasing as BMI increased up to 21 kg/m² and then increasing steeply at BMI levels above 23 kg/m² (Fig. 16.3a). The random-effects dose–response analysis also showed a nonlinear relationship (P = 0.05) between BMI and stroke-specific mortality (the correlation matrix estimate was reported to be −1, and the estimated between-study SDs were 0.05 and 0.02) (Fig. 16.3b). Potential sources of heterogeneity were explored using Cochran’s Q test and the I² statistic. In addition, Egger’s regression test showed no evidence of publication bias among the included cohorts. Based on these findings, an increase in BMI was associated with an increased rate of all-cause mortality and a decreased rate of stroke-specific mortality. Thus, in patients with stroke, all-cause and stroke-specific mortality followed different patterns as BMI increased, suggesting that there might be a paradox within the obesity paradox.
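Spline transformations are what allow a dose–response curve like Fig. 16.3 to bend. As a rough illustration, the Python sketch below builds a restricted cubic spline basis, a common choice in dose–response meta-analysis, for a BMI value. The knot locations are hypothetical, and the summary above does not state which spline specification Bagheri et al. used. In a two-stage analysis, columns like these would typically serve as covariates in each study's model of log HR before the study-specific coefficients are pooled.

```python
def rcs_basis(x, knots):
    """Restricted cubic spline basis (unnormalized Harrell parameterization).

    Returns [x, s_1(x), ..., s_{K-2}(x)] for K knots. The fitted curve is cubic
    between knots but constrained to be linear below the first knot and above
    the last one, which keeps the tails of a dose-response curve stable.
    """
    k = sorted(knots)
    if len(k) < 3:
        raise ValueError("at least three knots are required")
    k_last, k_penult = k[-1], k[-2]

    def cube_pos(u):
        return max(u, 0.0) ** 3  # truncated power function (u)_+^3

    terms = [x]
    for kj in k[:-2]:
        terms.append(
            cube_pos(x - kj)
            - cube_pos(x - k_penult) * (k_last - kj) / (k_last - k_penult)
            + cube_pos(x - k_last) * (k_penult - kj) / (k_last - k_penult)
        )
    return terms


# Hypothetical BMI knots (kg/m^2); the original analysis may have used different ones.
knots = [20.0, 23.0, 26.0, 30.0]
for bmi in (18.0, 22.0, 25.0, 28.0, 32.0):
    print(bmi, [round(t, 2) for t in rcs_basis(bmi, knots)])
```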

4.3 Risk of Bias Assessment

In a meta-analysis, risk of bias assessment is an important quality control step for the studies used in the analysis. In a recently published systematic review and meta-analysis of the safety and efficacy of the post-eradication smallpox vaccine for preventing mpox (monkeypox), the investigators independently assessed the risk of bias in the included studies using predetermined criteria, such as random sequence generation; allocation concealment; blinding of participants, personnel, and outcome assessment; incomplete outcome data; selective outcome reporting; and other sources of bias, following the methods recommended by The Cochrane Collaboration [41]. The results are presented in Fig. 16.4; the risk of bias in the reviewed studies ranged from low (green) to high (red).
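Risk-of-bias figures such as Fig. 16.4 are built from a simple table of judgments per study and domain. The Python sketch below, with hypothetical studies and judgments, tallies the percentage of studies rated low, unclear, or high risk in each domain. The domain labels loosely follow the Cochrane criteria listed above, and the function name is illustrative rather than part of any Cochrane tool.

```python
from collections import Counter

# Domain names follow the criteria listed above; judgments below are hypothetical.
DOMAINS = [
    "random sequence generation",
    "allocation concealment",
    "blinding of participants and personnel",
    "blinding of outcome assessment",
    "incomplete outcome data",
    "selective reporting",
    "other bias",
]
LEVELS = ("low", "unclear", "high")


def summarize_risk_of_bias(judgments):
    """Turn {study: {domain: level}} into {domain: {level: percent of studies}},
    the numbers behind a risk-of-bias summary chart."""
    summary = {}
    for domain in DOMAINS:
        counts = Counter(study[domain] for study in judgments.values())
        n = sum(counts.values())
        summary[domain] = {level: 100.0 * counts[level] / n for level in LEVELS}
    return summary


judgments = {
    "Study A": {d: "low" for d in DOMAINS},
    "Study B": {**{d: "unclear" for d in DOMAINS}, "random sequence generation": "high"},
}
for domain, shares in summarize_risk_of_bias(judgments).items():
    print(f"{domain:40s} " + "  ".join(f"{lvl} {pct:.0f}%" for lvl, pct in shares.items()))
```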

5 Further Practice

1. What are the differences between advocacy and analysis in the context of evidence-based decision-making?

An advocate has a particular cause or policy in mind and based on that predetermined notion collects information from literature. This could lead to major biases in decision-making based on information in the literature. In contrast, an analyst uses a systematic procedure to collect information from the literature, conducts quality assessment, considers both positive and negative results, and after controlling biases could summarize and interpret the results in a systematic review and/or meta-analysis. This approach leads to evidence-based decisions and could be completed by individuals with sufficient training who are intelligent consumers of the literature.

2. What are PubMed, AGRICOLA, and AGRIS, and which institutions maintain these search engines?

PubMed is a free search engine maintained by the US National Library of Medicine at the National Institutes of Health and is arguably the best source for identifying peer-reviewed medical and public health research articles. AGRICOLA is maintained by the National Agricultural Library of the US Department of Agriculture and is a great resource for identifying articles related to nutrition and food systems. AGRIS is maintained by the Food and Agriculture Organization of the United Nations and can be used to identify literature on nutrition, public health, and global food security. AGRIS is also a great resource for research articles published in languages other than English.

3. What are Boolean operators, and why are they important in a successful literature review?

Boolean operators (such as AND, OR, NOT, or AND NOT) are conjunctions that can be used to combine or exclude keywords. Using them makes search records more manageable to review and evaluate by filtering out studies that are not relevant to the search topic.

4.

What are grey publications and what is their importance in a successful systematic review? A successful and comprehensive literature review requires the identification of all types of studies associated with a search topic, including material often called “grey literature.” Unlike widely disseminated published literature, grey publications are typically not peer-reviewed and may contain results of studies that are not statistically significant. These negative-outcome studies are very important for controlling publication bias, as discussed further below. Unpublished grey literature can also be a good source of pilot projects with very small sample sizes (owing to high cost or difficulty in recruiting subjects) and of studies that are highly innovative in nature.

5.

What is publication bias in the context of systematic review and meta-analysis?

A very important consideration during a systematic review is publication bias, which should not be confused with biases in the selection of samples and participants in epidemiological studies. According to “A Dictionary of Epidemiology,” publication bias is “an editorial predilection for publishing particular findings, e.g., positive results, which leads to the failure of authors to submit negative findings for publication” [10]. This is particularly common among researchers who use analytical techniques to draw associations from large data sets and who may not pursue a hypothesis further when no statistically significant difference is found. As the definition makes apparent, publication bias can also be introduced systematically by journal editors and peer reviewers who show little interest in publishing studies with negative results.
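One widely used quantitative check for publication bias is Egger's regression test for funnel-plot asymmetry. The sketch below is a plain-Python illustration under stated assumptions: each study supplies an effect estimate on an additive scale (e.g., a log odds ratio) with its standard error, the standard errors are not all equal, and the example data are hypothetical. It reports the intercept and its t statistic, which would be compared with a t distribution on k − 2 degrees of freedom; a p-value would require a statistics library. Such tests have low power when only a few studies are available, so a non-significant result does not rule out publication bias.

```python
import math


def egger_test(effects, std_errors):
    """Egger's regression test for funnel-plot asymmetry.

    Regresses the standardized effect (effect / SE) on precision (1 / SE)
    by ordinary least squares. An intercept far from zero suggests that
    small, imprecise studies report systematically different effects,
    which is one possible sign of publication bias.
    Returns (intercept, SE of intercept, t statistic, degrees of freedom).
    """
    k = len(effects)
    if k < 3:
        raise ValueError("Egger's test needs at least three studies")
    x = [1.0 / se for se in std_errors]                 # precision
    z = [y / se for y, se in zip(effects, std_errors)]  # standardized effect
    x_bar, z_bar = sum(x) / k, sum(z) / k
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    if s_xx == 0:
        raise ValueError("standard errors must not all be equal")
    s_xz = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z))
    slope = s_xz / s_xx
    intercept = z_bar - slope * x_bar
    # Residual variance on k - 2 degrees of freedom.
    df = k - 2
    rss = sum((zi - intercept - slope * xi) ** 2 for xi, zi in zip(x, z))
    se_intercept = math.sqrt(rss / df * (1.0 / k + x_bar ** 2 / s_xx))
    t_stat = intercept / se_intercept if se_intercept > 0 else float("nan")
    return intercept, se_intercept, t_stat, df


# Hypothetical log odds ratios: the smaller (high-SE) studies report larger effects.
effects = [0.62, 0.45, 0.51, 0.30, 0.28, 0.20, 0.15, 0.12]
ses = [0.35, 0.30, 0.28, 0.20, 0.18, 0.12, 0.10, 0.08]
b0, se_b0, t, df = egger_test(effects, ses)
print(f"intercept = {b0:.2f} (SE {se_b0:.2f}), t = {t:.2f} on {df} df")
```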

6.

What is the winner’s curse effect in the context of systematic review and meta-analysis? It is also important to consider the winner’s curse carefully when conducting and interpreting systematic reviews and meta-analyses. The term winner’s curse comes from competitive auctions, in which the “winner” of an item may have overpaid for it. In scientific settings, the “winner,” i.e., the researcher who first identifies a novel association, may report a strong effect that later studies show to be weaker or not statistically significant, partly because a first study that just crosses the significance threshold tends to overestimate the true effect. Because the exposure and outcome of interest have already been defined by the original work, follow-up and confirmatory studies can often enroll larger samples and therefore estimate the magnitude of the association more accurately than the original study. This is a concern for genomic research and health policy studies, as well as for public health randomized and observational investigations. Consideration of the winner’s curse is therefore an important aspect of the conduct and interpretation of systematic reviews and meta-analyses.
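A small simulation can make the winner’s curse concrete. The sketch below assumes many equally sized studies of the same true effect (the true effect of 0.2 and standard error of 0.15 are arbitrary, hypothetical choices) and keeps only those reaching significance in the positive direction. Because only estimates above the significance threshold survive this filter, their average overstates the true effect, which is the pattern a first “winning” report of an association can show.

```python
import random
import statistics


def simulate_winners_curse(true_effect=0.2, std_error=0.15, n_studies=10_000, seed=1):
    """Simulate many equally sized studies of the same true effect.

    Each study's estimate is drawn from Normal(true_effect, std_error).
    A study counts as a "winner" only if estimate / std_error > 1.96,
    i.e., it is significant at the 5% level in the positive direction.
    Returns (mean of all estimates, mean of winners, share of winners).
    """
    rng = random.Random(seed)  # fixed seed for reproducibility
    estimates = [rng.gauss(true_effect, std_error) for _ in range(n_studies)]
    winners = [e for e in estimates if e / std_error > 1.96]
    return (
        statistics.mean(estimates),
        statistics.mean(winners),
        len(winners) / n_studies,
    )


all_mean, winners_mean, share = simulate_winners_curse()
print("true effect:              0.200")
print(f"mean of all estimates:    {all_mean:.3f}")
print(f"mean of 'winning' ones:   {winners_mean:.3f}")
print(f"share that were winners:  {share:.1%}")
```

Re-running with a smaller std_error (i.e., larger studies) shrinks the gap between the two means, mirroring how larger confirmatory studies tend to move closer to the true effect.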

7.

What are the limitations of a single study and of a traditional review article, and how are these limitations addressed in a systematic review and meta-analysis? In addition to concerns about sampling variability, a single study is limited by its sample size, which raises the probability of false-negative results. Traditional narrative review articles also have major limitations, since they are subjective in nature and prone to errors and biases. In contrast, a well-conducted systematic review and meta-analysis can yield a more precise estimate of treatment effects and reduce the probability of false-negative results. However, the outcome of a systematic review and meta-analysis can be compromised if the “raw materials,” i.e., the included studies, are of inadequate methodological quality.
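To illustrate how pooling can improve precision, the sketch below implements the standard inverse-variance fixed-effect estimate, in which each study is weighted by the reciprocal of its variance. The four log risk ratios and standard errors are hypothetical. None of the studies is significant on its own, yet the pooled standard error is smaller than any single study’s and the pooled 95% confidence interval excludes zero. The fixed-effect model assumes one common true effect; a random-effects model is the usual alternative when studies are heterogeneous. Pooling does not repair biased inputs, which is why the quality of the “raw materials” still matters.

```python
import math


def fixed_effect_pool(effects, std_errors):
    """Inverse-variance fixed-effect meta-analysis.

    Each study is weighted by 1 / SE^2, so more precise studies count more.
    Returns (pooled estimate, pooled SE, 95% confidence interval).
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    total_w = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_w
    pooled_se = math.sqrt(1.0 / total_w)
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci


# Hypothetical log risk ratios from four small studies; each has |effect / SE| < 1.96.
effects = [-0.20, -0.35, -0.10, -0.25]
ses = [0.15, 0.20, 0.12, 0.18]

for y, se in zip(effects, ses):
    print(f"study: {y:+.2f}, z = {y / se:+.2f}")
pooled, pooled_se, (lo, hi) = fixed_effect_pool(effects, ses)
print(f"pooled: {pooled:+.3f} (SE {pooled_se:.3f}), 95% CI {lo:+.3f} to {hi:+.3f}")
```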

8.

What is a PRISMA flow chart? The PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) flow chart summarizes the results of the study identification, screening, and quality assessment activities. This flow chart, which shows the number of studies identified, screened, excluded, and finally selected, can be drawn by the researcher, or a template can be obtained from reputable sources such as the PRISMA statement website, the Cochrane Collaboration, and the Cochrane Library.
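The counts in a PRISMA flow chart must add up from one stage to the next. The sketch below is a simplified, hypothetical bookkeeping helper (the class name, fields, and counts are illustrative assumptions) that derives each stage from the numbers removed at the previous one and rejects impossible totals. The full PRISMA 2020 diagram has additional boxes, such as reports not retrieved and records identified through other methods.

```python
from dataclasses import dataclass


@dataclass
class PrismaCounts:
    """Record counts for a simplified PRISMA-style flow chart."""
    identified: int             # records from database searches
    duplicates_removed: int     # duplicates removed before screening
    excluded_on_screening: int  # excluded after title/abstract screening
    excluded_full_text: int     # full-text articles excluded, with reasons

    @property
    def screened(self) -> int:
        return self.identified - self.duplicates_removed

    @property
    def full_text_assessed(self) -> int:
        return self.screened - self.excluded_on_screening

    @property
    def included(self) -> int:
        return self.full_text_assessed - self.excluded_full_text

    def validate(self) -> None:
        """Raise ValueError if any stage would have a negative count."""
        for name in ("screened", "full_text_assessed", "included"):
            if getattr(self, name) < 0:
                raise ValueError(f"negative count at stage: {name}")

    def summary(self) -> str:
        self.validate()
        return "\n".join([
            f"Records identified:      {self.identified}",
            f"Duplicates removed:      {self.duplicates_removed}",
            f"Records screened:        {self.screened}",
            f"Excluded on screening:   {self.excluded_on_screening}",
            f"Full texts assessed:     {self.full_text_assessed}",
            f"Full texts excluded:     {self.excluded_full_text}",
            f"Studies included:        {self.included}",
        ])


# Hypothetical counts for illustration only.
counts = PrismaCounts(identified=1250, duplicates_removed=310,
                      excluded_on_screening=820, excluded_full_text=95)
print(counts.summary())
```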

9.

What are typical outcome measures in meta-analyses and what software applications are commonly used for conducting a meta-analysis? Outcome measures can be binary (dichotomous) data, measures of association, continuous variables, or time-to-event data. When choosing an outcome measure, it is important to ensure that the estimate is easy to interpret and is consistent across all selected studies.

Cochrane reviews are typically considered the gold standard of quality for systematic reviews and meta-analyses, and the use of Cochrane’s software (Review Manager, RevMan) is recommended. Some software, including the Cochrane software, allows users to prepare a protocol that assists in searching for and selecting studies and in extracting data. General statistical packages such as SPSS® and programming environments such as SAS®, Stata®, R®, and Python®, to name a few, are all capable of conducting a meta-analysis as well.
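For binary outcomes, a common effect measure is the odds ratio, analyzed on the log scale so that studies can be pooled. The sketch below computes a log odds ratio and its standard error from one study’s 2×2 table using only the Python standard library. The counts are hypothetical, and adding 0.5 to every cell when any cell is zero is one common convention among several, not the only correct choice. Exponentiating the log odds ratio returns it to the more interpretable odds-ratio scale.

```python
import math


def log_odds_ratio(events_trt, n_trt, events_ctl, n_ctl):
    """Log odds ratio and its standard error from a single 2x2 table.

    The standard error is the Woolf (logit) formula sqrt(1/a + 1/b + 1/c + 1/d).
    If any cell is zero, 0.5 is added to every cell (a continuity correction)
    so that the logarithm and the standard error are defined.
    """
    a, b = events_trt, n_trt - events_trt   # treatment: events, non-events
    c, d = events_ctl, n_ctl - events_ctl   # control: events, non-events
    if 0 in (a, b, c, d):
        a, b, c, d = [x + 0.5 for x in (a, b, c, d)]
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return log_or, se


# Hypothetical study: 15/100 events with the intervention vs 25/100 without.
log_or, se = log_odds_ratio(15, 100, 25, 100)
lo, hi = log_or - 1.96 * se, log_or + 1.96 * se
# Exponentiate to report on the odds-ratio scale.
print(f"OR = {math.exp(log_or):.2f} (95% CI {math.exp(lo):.2f} to {math.exp(hi):.2f})")
```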

10.

What is sensitivity analysis and why is it important? Sensitivity analysis can be defined as “a method to determine the robustness of an assessment by examining the extent to which results are affected by changes in methods, models, values of unmeasured variables, or assumptions” [32, 33]. Sensitivity analysis can be both quantitative and qualitative in nature. For example, when formulating a research question before starting a literature review, one can ask “what if” questions (a qualitative sensitivity analysis) to explore variations of the research question, since this step determines the outcomes and analyses needed for a successful systematic review and meta-analysis. However, sensitivity analysis typically refers to quantitative, analytical approaches for examining the robustness of a study’s results. Sensitivity analyses can also help assess how robust the pooled results are to potential publication bias.
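A common quantitative sensitivity analysis in meta-analysis is the leave-one-out analysis: the pooled estimate is recomputed k times, omitting one study each time, to see whether any single study drives the result. The sketch below uses inverse-variance fixed-effect pooling and hypothetical data in which one small study reports an unusually large effect. It probes robustness to individual studies only; methods designed specifically for publication bias are more involved than this illustration.

```python
import math


def pooled_estimate(effects, std_errors):
    """Inverse-variance fixed-effect pooled estimate and its standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total
    return estimate, math.sqrt(1.0 / total)


def leave_one_out(effects, std_errors, labels):
    """Re-pool the studies k times, omitting one study each time.

    Returns a list of (omitted study label, pooled estimate, 95% CI).
    A large shift when one study is dropped flags an influential study.
    """
    results = []
    for i, label in enumerate(labels):
        ys = effects[:i] + effects[i + 1:]
        ses = std_errors[:i] + std_errors[i + 1:]
        est, se = pooled_estimate(ys, ses)
        results.append((label, est, (est - 1.96 * se, est + 1.96 * se)))
    return results


# Hypothetical data: study "E" is small and reports an unusually large effect.
labels = ["A", "B", "C", "D", "E"]
effects = [0.10, 0.15, 0.08, 0.12, 0.90]
ses = [0.10, 0.12, 0.09, 0.11, 0.30]

overall, overall_se = pooled_estimate(effects, ses)
print(f"all studies: {overall:.3f}")
results = leave_one_out(effects, ses, labels)
for label, est, (lo, hi) in results:
    print(f"without {label}: {est:.3f} (95% CI {lo:.3f} to {hi:.3f})")
```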

References

Reid EJ. Understanding the word “advocacy”: context and use. In: Structuring the inquiry into advocacy, vol. 1. Urban Institute; 2000. p. 1–7.

Brownson RC, Gurney JG, Land GH. Evidence-based decision making in public health. J Public Health Manag Pract. 1999;5:86–97.

Jesson J, Matheson L, Lacey FM. Doing your literature review: traditional and systematic techniques. Los Angeles: SAGE; 2011.

Conn VS, Valentine JC, Cooper HM, Rantz MJ. Grey literature in meta-analyses. Nurs Res. 2003;52(4):256–61.

Adams J, Hillier-Brown FC, Moore HJ, Lake AA, Araujo-Soares V, White M, Summerbell C. Searching and synthesising “grey literature” and “grey information” in public health: critical reflections on three case studies. Syst Rev. 2016;5(1):1–11.

Hopewell S, McDonald S, Clarke MJ, Egger M. Grey literature in meta-analyses of randomized trials of health care interventions. Cochrane Database Syst Rev. 2007;2000(2):MR000010.

Saleh AA, Ratajeski MA, Bertolet M. Grey literature searching for health sciences systematic reviews: a prospective study of time spent and resources utilized. Evid Based Libr Inf Pract. 2014;9(3):28.

Piggott-McKellar AE, McNamara KE, Nunn PD, Watson JE. What are the barriers to successful community-based climate change adaptation? A review of grey literature. Local Environ. 2019;24(4):374–90.

Fouladkhah A, Berlin D, Bruntz D. High-sodium processed foods: public health burden and sodium reduction strategies for industry practitioners. Food Rev Intl. 2015;31(4):341–54.

Last JM, editor. A dictionary of epidemiology. Oxford: Oxford University Press; 1983. p. 12.

Thornton A, Lee P. Publication bias in meta-analysis: its causes and consequences. J Clin Epidemiol. 2000;53(2):207–16.

Tweedie RL, Scott DJ, Biggerstaff BJ, Mengersen KL. Bayesian meta-analysis, with application to studies of ETS and lung cancer. Lung Cancer. 1996;14:S171–94.

Lund BD, Wang T. Chatting about ChatGPT: how may AI and GPT impact academia and libraries? Libr Hi Tech News. 2023;40:26.

Meo SA, Talha M. Turnitin: is it a text matching or plagiarism detection tool? Saudi J Anaesth. 2019;13(Suppl 1):S48.

Fouladkhah A. Emerging open-access artificial intelligence applications: a peer-to-peer note to teaching colleagues in higher education. LinkedIn. 23 Feb 2023. https://www.linkedin.com/posts/aliyarfouladkhah_artificialintelligence-highereducation-studentsevaluation-activity-7016170362358923264-X96h?utm_source=share&utm_medium=member_desktop.

Teagarden JR. Meta-analysis: whither narrative review? Pharmacotherapy. 1989;9(5):274–84.

Egger M, Smith GD, Phillips AN. Meta-analysis: principles and procedures. BMJ. 1997;315(7121):1533–7.

Jones EA, Mitra AK, Malone S. Racial disparities and common respiratory infectious diseases in children of the United States: a systematic review and meta-analysis. Diseases. 2023;11(1):23.

Clarke M. The Cochrane collaboration and the Cochrane library. Otolaryngol Head Neck Surg. 2007;137(4_suppl):S52–4.

Desai MM, Stauffer BD, Feringa HH, Schreiner GC. Statistical models and patient predictors of readmission for acute myocardial infarction: a systematic review. Circ Cardiovasc Qual Outcomes. 2009;2(5):500–7.

Meline T. Selecting studies for systematic review: inclusion and exclusion criteria. Contemp Issues Commun Sci Disord. 2006;33(Spring):21–7.

Zwahlen M, Renehan A, Egger M. Meta-analysis in medical research: potentials and limitations. Urol Oncol. 2008;26(3):320–9.

Sutton AJ, Abrams KR, Jones DR. An illustrated guide to the methods of meta-analysis. J Eval Clin Pract. 2001;7(2):135–48.

Borenstein M, Hedges LV, Higgins JP, Rothstein HR. A basic introduction to fixed-effect and random-effects models for meta-analysis. Res Synth Methods. 2010;1(2):97–111.

Borenstein M, Hedges L, Rothstein H. Meta-analysis: fixed effect vs. random effects. Meta-analysis.com. 2007. p. 1–30.

Henderson LK, Craig JC, Willis NS, Tovey D, Webster AC. How to write a Cochrane systematic review. Nephrology. 2010;15(6):617–24.

Higgins JP, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, editors. Cochrane handbook for systematic reviews of interventions. Wiley; 2019.

Seyedhamzeh S, Bagheri M, Keshtkar AA, Qorbani M, Viera AJ. Physical activity equivalent labeling vs. calorie labeling: a systematic review and meta-analysis. Int J Behav Nutr Phys Act. 2018;15:1–13.

Bagheri M, Speakman JR, Shabbidar S, Kazemi F, Djafarian K. A dose-response meta-analysis of the impact of body mass index on stroke and all-cause mortality in stroke patients: a paradox within a paradox. Obes Rev. 2015;16(5):416–23.

Nikolakopoulou A, Mavridis D, Salanti G. How to interpret meta-analysis models: fixed effect and random effects meta-analyses. BMJ Ment Health. 2014;17(2):64.

Hunter JE, Schmidt FL. Fixed effects vs. random effects meta-analysis models: implications for cumulative research knowledge. Int J Sel Assess. 2000;8(4):275–92.

Schneeweiss S. Sensitivity analysis and external adjustment for unmeasured confounders in epidemiologic database studies of therapeutics. Pharmacoepidemiol Drug Saf. 2006;15(5):291–303.

Thabane L, Mbuagbaw L, Zhang S, Samaan Z, Marcucci M, Ye C, et al. A tutorial on sensitivity analyses in clinical trials: the what, why, when and how. BMC Med Res Methodol. 2013;13(1):1–12.

Cooper H, Hedges LV, Valentine JC, editors. The handbook of research synthesis and meta-analysis. New York: Russell Sage Foundation; 2019.

Mathur MB, VanderWeele TJ. Sensitivity analysis for publication bias in meta-analyses. J R Stat Soc Ser C Appl Stat. 2020;69(5):1091.

Liu Z, Yao Z, Li C, Liu X, Chen H, Gao C. A step-by-step guide to the systematic review and meta-analysis of diagnostic and prognostic test accuracy evaluations. Br J Cancer. 2013;108(11):2299–303.

Trikalinos TA, Balion CM, Coleman CI, Griffith L, Santaguida PL, Vandermeer B, Fu R. Chapter 8: Meta-analysis of test performance when there is a “gold standard”. J Gen Intern Med. 2012;27:56–66.

Russo MW. How to review a meta-analysis. Gastroenterol Hepatol. 2007;3(8):637.

Crowther M, Lim W, Crowther MA. Systematic review and meta-analysis methodology. Blood. 2010;116(17):3140–6.

Murad MH, Montori VM, Ioannidis JP, Jaeschke R, Devereaux PJ, Prasad K, et al. How to read a systematic review and meta-analysis and apply the results to patient care: users’ guides to the medical literature. JAMA. 2014;312(2):171–9.

Malone SM, Mitra AK, Nwanne A, Onumah NA, et al. Safety and efficacy of post-eradication smallpox vaccine as an mpox vaccine: a systematic review with meta-analysis. Int J Environ Res Public Health. 2023;20:2963. https://doi.org/10.3390/ijerph20042963.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Fouladkhah, A.C., Bagheri, M. (2024). Systematic Review and Meta-Analysis: Evidence-Based Decision-Making in Public Health. In: Mitra, A.K. (eds) Statistical Approaches for Epidemiology. Springer, Cham. https://doi.org/10.1007/978-3-031-41784-9_16

DOI: https://doi.org/10.1007/978-3-031-41784-9_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-41783-2

Online ISBN: 978-3-031-41784-9

eBook Packages: Medicine, Medicine (R0)