Abstract

In this paper we discuss how the Risk Analysis problem has developed, how it offers solutions to crucial problems, and how it provides food for thought for statistical generalisations. We try to explain why a balance between Theory and Practice needs to be kept.

1 Introduction

At its early stage, risk was associated with political or military games, where decisions had to be made with the minimum risk. The pioneering work of Quincy Wright [40] on the study of war was devoted to this line of thought. The Mathematics and Statistics involved could be considered low-level by today's standards, although he eventually applied differential equation theory successfully.

In principle Risk is defined as an exposure to the chance of injury or loss; it is a hazard or dangerous chance for an event under consideration. Therefore the probability of damage for the phenomenon considered (in Politics, Economy, Epidemiology, Food Science, Industry etc.), caused by external or internal factors, has to be evaluated, especially for the factors that essentially influence the Risk. That is why we eventually refer to Relative Risk (RR), as each factor influences the Risk in a different way. The relative risk (RR) is the ratio of the probability of an outcome in an exposed group to the probability of the outcome in an unexposed group. Thus a value of RR = 1 means that the exposure does not affect the outcome, and a “risk factor” is assigned when RR\(>\)1, i.e. when the risk of the outcome is increased by the exposure.
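The definition of RR can be made concrete with a short computation. The following is a minimal sketch, not part of the original paper; the function name `relative_risk` and the counts used are hypothetical and serve only to illustrate the ratio of the two outcome probabilities.

```python
def relative_risk(exposed_cases, exposed_total, unexposed_cases, unexposed_total):
    """Ratio of the outcome probability in the exposed group to that in the unexposed group."""
    if exposed_total <= 0 or unexposed_total <= 0:
        raise ValueError("group sizes must be positive")
    p_exposed = exposed_cases / exposed_total
    p_unexposed = unexposed_cases / unexposed_total
    if p_unexposed == 0:
        raise ValueError("RR is undefined when the unexposed group has no cases")
    return p_exposed / p_unexposed


# Hypothetical counts: 30 of 200 exposed and 10 of 200 unexposed subjects show the outcome.
rr = relative_risk(30, 200, 10, 200)
print(f"RR = {rr:.2f}")  # RR = 3.00
```

With these illustrative counts RR exceeds 1, so the exposure would be treated as a risk factor in the sense described above.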

This is clear in Epidemiological studies, where in principle it is necessary to identify and quantitatively assess the susceptibility of a portion of the population to specific risk factors, so we refer to RR. For a nice introduction to the statistical terminology for RR see Everitt [13, Chapter 12].

An early attempt of this kind was made by John Graunt (1620–1674), founder of Demography, who tried to evaluate the “bills of Mortality”, as he explained in his work “Observations”, while at almost the same time his friend Sir William Petty (1623–1687), economist and Philosopher, published the “Political Anatomy of Ireland”. So there was an early attempt to evaluate Social and Political Risk.

Still, there is a line of thought loyal to the idea that Risk Analysis is related only to political problems, through Decision Theory. William Playfair (1759–1823) was among the first to work with empirical data, publishing in 1796 “For the Use of the Enemies of England: A Real Statement of the Finances and Resources of Great Britain”. Quincy Wright (1890–1970), in his excellent book “A Study of War”, offers a development of simple indexes, evaluating Risk successfully, as has been pointed out, for such an important problem as war.

The statistical work of Florence Nightingale (1820–1910) is essential, as her “Notes on Matters Affecting the Health, Efficiency and Hospital Administration of the British Army” opened the problem of analysing Epidemiological Data, adopting the Statistical methods of that time.

It is really Armitage and Doll [2] who introduced the recent Statistical framework to the Cancer Problem. Later, Crump et al. [11] can be referred to for their work on the carcinogenic process, while Finney [14] provided a global work on Bioassays, and Megill [34] worked on Risk Analysis (RA) for Economical Data. It was emphasised that, at the early stages, the fundamental task in RA was to isolate the variables involved. Still, the Statistical background was not too advanced, but the adoption of the triangle distribution was essentially useful. The triangle distribution has recently been treated under a different statistical background, but the triangle obtained from the minimum value, the mode and the maximum value of the data, together with its height, can still prove very useful, as a special case of trapezoidal distributions; see Appendix 1 for a compact new presentation, while a more general framework was developed by Nguyen and McLachlan [35]. The main characteristic of the triangular distribution is its simplicity, so it can be easily adopted in practice. There are excellent examples with no particular mathematical difficulty in Megill [34].

In Food Science the Risk Assessment problem is easier to understand for those who are not familiar with RA. In the next section we discuss the existing Practical Background, which is not that easy to develop, despite its characterisation as “practical”. Most of the ideas presented are from the area of Food Science, where RA is very clear under a chemical analysis orientation.

In Sect. 3 the existing theoretical insight is discussed briefly and therefore the Discussion in Sect. 4 is based on Sects. 2 and 3.

2 Practical Background

Risk factors can be increased during food processing, and food can be contaminated by filtering and cleaning agents or during packaging and storage. Therefore, in principle, chemical hazards can be divided into two primary categories:

(i) Naturally occurring chemical hazards (mycotoxins, pyrrolizidine alkaloids, polychlorinated biphenyls etc.)

(ii) Added chemical hazards (pesticides, antibiotics, hormones, heavy metals etc.)

The effect of each chemical as a Risk factor has been studied, and we refer briefly to mycotoxins, as dairy products are among the foodstuffs most susceptible to contamination by them (one possible reason being humidity, among others). Such contamination might result from, see Kitsos and Tsaknis [28] among others:

1. Direct contamination

2. Indirect contamination

Example 1 (Indirect Contamination)

Recall that, due to decontamination, bacteria become resistant and therefore interhospital variations can appear. Moreover, a number of countries have introduced or proposed regulations for the control and analysis of aflatoxins in food.

As far as milk is concerned, the EU requires a maximum level of aflatoxin \(M_1\), \(\max M_1\) say, of \(\max M_1=0.5\) mg/kg. The maximum tolerated level for aflatoxin \(M_1\) in dairy products is not the same all over the world, and it is regulated in some countries.

The problem of mixtures has been discussed from a statistical point of view, for the cancer problem, in Kitsos and Edler [23]. In practice the highly carcinogenic polychlorinated biphenyls (PCBs) are mixtures of chlorinated biphenyls with varying percentages of chlorine by weight. It has been noticed, Blüthgen et al. [3], that PCBs led to a worldwide contamination of the environment due to their physical/chemical properties. Moreover PCBs have been classified as probable human carcinogens, while no Tolerable Daily Intake (TDI), the main safety standard, has been established for them. Eventually the production of PCBs was banned in the USA in 1979 and internationally in 2001.

Example 2

Dioxins occur as complex mixtures, Kitsos and Edler [23], whose components act through a common mechanism but vary in their toxic potency. As an example, tetrachlorodibenzo-p-dioxin (TCDD) has been classified as a human carcinogen, as there are epidemiological studies on exposure to 2,3,7,8-tetrachlorodibenzo-p-dioxin and cancer risk. It might not be responsible for producing substantial chronic disability in humans, but there is experimental evidence for its carcinogenicity, McConnell et al. [33].

The TDI for dioxins is 1–4 pg TEQ/kg body-weight/day, which is exceeded in industrialised countries. Recall that the Toxic Equivalence Quotient (TEQ) is used by the USA Environmental Protection Agency (EPA), which sets the threshold for safe dioxin exposure at a Toxicity Equivalence of \(0.7\) picograms per kilogram of body weight per day.

The Lethal Dose LD\({ }_P\) is an index of the lethal toxicity of a given toxic substance or type of radiation, for a given percentage P. LD\({ }_{0.5}\) is the amount of a given material, administered at once, that causes the death of 50% of the group of animals (usually rats and mice) under investigation. The median lethal dose LD\({ }_{0.5}\) is therefore widely used as a measure of this effect in toxicity studies. Not only the median lethal dose but also the low percentiles need special consideration, see Kitsos [18], who suggested a sequential approach to face the problem.
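To see how a percentile LD\({ }_P\) relates to a dose-response curve, the sketch below assumes, purely for illustration, a logit model \(P(d)=1/(1+e^{-(a+bd)})\) with slope \(b>0\). The model choice, the function names and the parameter values are assumptions made here and are not taken from the paper. Under this assumption LD\({ }_P\) is obtained by inverting the curve.

```python
import math


def response(dose, a, b):
    """Assumed logit dose-response curve: probability of death at a given dose."""
    return 1.0 / (1.0 + math.exp(-(a + b * dose)))


def lethal_dose(p, a, b):
    """Dose at which the assumed logit curve reaches probability p (0 < p < 1, b > 0)."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    if b <= 0:
        raise ValueError("the slope b must be positive")
    return (math.log(p / (1.0 - p)) - a) / b


# Hypothetical parameters a = -4, b = 0.8 (dose in arbitrary units).
a, b = -4.0, 0.8
for p in (0.05, 0.5):
    d = lethal_dose(p, a, b)
    print(f"LD_{p}: dose = {d:.3f}, check P(dose) = {response(d, a, b):.3f}")
```

The low percentile lies on the flat part of the curve, where a small change in the fitted parameters moves the estimated dose considerably; this is one way to read the remark that low percentiles need special consideration.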

Now if we assume that two components \(C_1\) and \(C_2\) are identical, except that \(C_1\) is diluted by a factor \(T<1\), then we can replace a dose \(d_1\) of \(C_1\) by an appropriate dose of \(C_2\), namely \(Td_1\), so as to have the same effect as dose \(d_1\). In such a case the effect of a combination of doses \(d_1\) and \(d_2\) of the components \(C_1\) and \(C_2\) is that of a dose \(Td_1+d_2\) of \(C_2\), or equivalently of a dose \(d_1+(1/T)d_2\) of \(C_1\). The factor T is known as the relative potency of \(C_1\) to \(C_2\), and \(\lambda =1/T\) is called the relative potency of \(C_2\) to \(C_1\). Such simple but practical rules are appreciated by experimenters. Another practical problem in RA appears with the study of the covariates involved. The role of covariates, in this context, is of great interest and has been discussed by Kitsos [17].
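The relative potency rule can be checked with a few lines of arithmetic. This is a minimal sketch with hypothetical function names and dose values; it only encodes the two expressions \(Td_1+d_2\) and \(d_1+(1/T)d_2\) given above.

```python
def equivalent_dose_of_c2(d1, d2, T):
    """Dose of C2 alone with the same effect as d1 of C1 combined with d2 of C2."""
    if not 0.0 < T < 1.0:
        raise ValueError("the dilution factor T must satisfy 0 < T < 1")
    return T * d1 + d2


def equivalent_dose_of_c1(d1, d2, T):
    """Dose of C1 alone with the same effect as d1 of C1 combined with d2 of C2."""
    if not 0.0 < T < 1.0:
        raise ValueError("the dilution factor T must satisfy 0 < T < 1")
    return d1 + d2 / T


# Hypothetical doses: d1 = 4 units of C1, d2 = 3 units of C2, relative potency T = 0.5.
as_c2 = equivalent_dose_of_c2(4.0, 3.0, 0.5)
as_c1 = equivalent_dose_of_c1(4.0, 3.0, 0.5)
print(as_c2, as_c1)  # 5.0 10.0
```

The two results differ exactly by the factor T, since \(T\,(d_1+d_2/T)=Td_1+d_2\), which is a quick consistency check on any reported pair of equivalent doses.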

Therefore, in principle, to cover as many sources of risk as possible, we can say that the target in human risk assessment is the estimation of the probability of an adverse effect on human beings, and the identification of its source.

3 Theoretical Insight

In Biostatistics, and in particular in Risk Analysis for the Cancer problem, the evolution of Statistical applications can be traced through the more than 1000 references in Edler and Kitsos [12]. The development of methods, the application of particular probabilistic models, Kopp-Schneider et al. [30], and statistical analyses underwent extended development after 1980. Recently, Stochastic Carcinogenesis Models, Dose Response Models and the Modeling of Lung Cancer Screening are medical ideas with a strong statistical insight which have been adopted by the scientific community, Kitsos [21].

The variance-covariance matrix is related to Fisher’s information matrix and is the basis for evaluating optimal designs in chemical kinetics, Kitsos and Kolovos [24], while for a recent review of the Mathematical models facing breast cancer see Mashekova et al. [32]. Fisher’s information measure appears either in a parametric form or in an entropy type. The former plays an important role in a number of Statistical applications, such as optimal experimental design, the calibration problem, and the variance estimation of the parameters of the Logit model in RA, Cox [9, 10], etc. The latter is fundamental to Information Theory. Both have been extended by Kitsos and Tavoularis [26].

Indeed, with the use of an extra parameter, which influences the “shape” of the distribution, the generalised Normal distribution was introduced. This is useful in cases where “fat tails” exist: the Normal distribution assigns probability 0.05 to the tails, but there are cases where the distribution places more than 0.05 probability in the “tails”. Such cases are covered by the generalised Normal distribution.

The \(\gamma \)-ordered Normal Distribution emerged from the Logarithmic Sobolev Inequality and is a generalisation of the multivariate Normal distribution with an extra parameter \(\gamma \), see the following and also Appendix 2. It can be useful in RA to adopt the general cumulative hazard function, see (2), (3) below. Therefore a strong mathematical background exists, which is certainly difficult to follow for toxicologists, medical doctors, etc. who mainly work on RA. Moreover, no appropriate software has yet been developed for it.

The Normal distribution has been extended by Kitsos and Tavoularis [26], in a rather complicated form, quite the opposite of the easy-to-handle triangular distribution, see Appendix 1.

Let, as usual, \(\Gamma (a)\) be the gamma function and \(\Gamma (x,a)\) the upper incomplete gamma function. Then the cumulative distribution function (cdf) of the \(GN(\mu ,\sigma ^2;\gamma )\), say,

with \(\mu \) the position parameter, \(\sigma \) the scale parameter and \(\gamma \) an extra, shape parameter. In this line of thought Kitsos and Toulias [25] as well as Toulias and Kitsos [37] worked on the Generalised Normal Distribution \(GN(\mu ,\sigma ^2 ; \gamma )\) with \(\gamma \in \mathbf {R}-[0,1]\) being an extra shape parameter. This extra parameter \(\gamma \) makes the difference: when \(\gamma =2\) the usual Normal is obtained, while with \(\gamma >2\) the distribution is still of Normal type but with “heavy tails”.

Under this foundation the cumulative hazard function, \(H(\cdot )\) say, of a random variable \(X\sim GN(\mu ,\sigma ^2 ; \gamma )\) can be proved equal to

while

with

with \(\Gamma (a)\) being the gamma function and \(\Gamma (x,a)\) the upper incomplete gamma function.

Example 3

As \(\gamma \rightarrow \pm \infty \) the Generalised Normal Distribution tends to Laplace, \(L(\mu ,\sigma )\). Then it can be proved that:

while

See Toulias and Kitsos [37] for more examples.

Let X be the rv denoting the time of death. Recall that the future lifetime at a given time \(x_0\) is the remaining time \(X-x_0\) until death. Therefore the expected value, \(E(X)\), of the future lifetime can be evaluated. In principle it has to be a function of the survival function involved, Breslow and Day [5]. This idea can be extended with the \(\gamma \)-order Generalised Normal. Moreover, for the future lifetime rv \(X_0\) at point \(x_0\), with \( X\sim GN(\mu ,\sigma ^2 ; \gamma )\), the density function (df) and the cdf can be evaluated, and the corresponding expected future lifetime is

The above-mentioned results, among others, provide evidence that theoretical insight is moving faster than applications need such results. These comments need special consideration and further analysis, which we attempt in Sect. 4.

4 Discussion

To emphasise how difficult the evaluation of Risk might be, we recall Simpson’s paradox, Blyth [4], when three events \(A,B,C\) are considered. Then if we assume

we might have

Therefore there is, a priori, a scepticism about how “sure” a procedure might be. In Epidemiological studies it is necessary to identify and quantitatively assess the susceptibility of a portion of the population to specific Risk factors. It is assumed that all subjects have been exposed to the same possibly hazardous factors. The difference, at the early stage of the study, lies only in a particular factor which acts as a susceptibility factor. In such cases Statistics provides the evaluation of the RR. That is why J. Graunt was mentioned in Sect. 1.
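A small numerical illustration of Simpson's paradox may help here. In one standard statement of the paradox (cf. Blyth [4]) it is possible to have \(P(A\mid B\cap C) > P(A\mid B^{c}\cap C)\) and \(P(A\mid B\cap C^{c}) > P(A\mid B^{c}\cap C^{c})\), and yet \(P(A\mid B) < P(A\mid B^{c})\). The sketch below uses illustrative counts chosen only to exhibit this reversal; they are not taken from the paper, and the labels and the helper `prob_a_given` are hypothetical.

```python
from fractions import Fraction

# (number of cases of A, group size) for each combination of B / not-B and C / not-C.
counts = {
    ("B", "C"): (81, 87),
    ("notB", "C"): (234, 270),
    ("B", "notC"): (192, 263),
    ("notB", "notC"): (55, 80),
}


def prob_a_given(b, c=None):
    """P(A | b) when c is None, otherwise P(A | b and c), as an exact fraction."""
    cells = [v for (bb, cc), v in counts.items() if bb == b and (c is None or cc == c)]
    cases = sum(k for k, _ in cells)
    total = sum(n for _, n in cells)
    return Fraction(cases, total)


for c in ("C", "notC"):
    print(c, float(prob_a_given("B", c)), float(prob_a_given("notB", c)))
print("pooled", float(prob_a_given("B")), float(prob_a_given("notB")))
```

Within each stratum the conditional probability of A is larger under B, while after pooling over C it is smaller. The reversal arises because the two strata have very different sizes under B and under its complement.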

Concerning the \(2 \times 2\) setup for correlated binary responses, the backbone of medical research, a very practical line of thought with a theoretical background was followed by Mandal et al. [31]; it is exactly the spirit we would like to encourage, following Cox's beliefs, Kitsos [22], on how Statistics can support other Sciences, especially medicine. They provide the appropriate proportions and their variances in a \(2 \times 2\) setup, so that 95% confidence intervals can be constructed. The Binary Response problem was discussed early by Cox [9], while for a theoretical approach to Ca problems see Kitsos [20].

The area of interest of RA is wide; it covers a number of fields, sometimes with completely different backgrounds, such as Politics and Food Science. But Food Science is related to Cancer problems, as we briefly discussed.

Excellent Economical studies with “elementary” statistical work are covered by Megill [34] who provides useful results as Wright [40] did earlier. Therefore we oscillate between Practice and Theory. We have theoretical results, waiting to be applied as in the 60s we had Cancer problems waiting for statistical considerations.

The cancer problem was eventually the problem under consideration, and Sir David Cox provided a number of examples working on it, Cox [8, 9, 10], and offered ideas on how we can proceed in medical data analysis, Kitsos [22], trying to keep it simple. In contrast, Tan [36] offers a completely theoretical approach, understandable perhaps only by mathematicians; Kopp-Schneider [29] reviews the theoretical stochastic models, and to a lesser extent Kitsos [19, 21] and Kitsos and Toulias [25] discuss the appropriate modeling, which is difficult to follow for medical doctors and others.

A compromise between theory and practice has been attempted in Edler and Kitsos [12], where different approaches to facing cancer are discussed, while Cogliano et al. [7] discuss more toxicologically oriented cases. The logit method took some time to be appreciated, but provides a nice tool for estimating Relative Risk, Kitsos [20], among others. The role of covariates in such studies, and not only for cancer, is of great interest and, we believe, needs to be investigated, Kitsos [17]. In this paper we provided food for thought for a comparison of easy-to-understand work with the triangular distribution, see Appendix 1, and the rather complicated Generalised Normal, see Appendix 2. It is not only a matter of choice. It depends heavily on the structure of the data: we would say graph your data and then proceed with your analysis.

The logit methods can be applied in different applied areas. Certain qualities have been adopted for different areas by international organisations, see IARC [16], WHO [39], US EPA [38] among others. As mentioned in Sect. 2, as far as Food Risk Assessment is concerned, Fisher et al. [15], Kitsos and Tsaknis [28], Blüthgen et al. [3] among others, there are more chemical results and guidance for the risk involved, while Amaral-Mendes and Pluygers [1] offer an extensive list of Biomarkers for Cancer Risk Assessment in humans.

In Cancer problems, and not only there, the identification of the hazard function is crucial and only Statistical Analysis can be adopted, Armitage and Doll [2], Crump et al. [11], Cogliano et al. [7], Kitsos [17, 18]. The extended work based on the generalised Normal distribution, mentioned in Sect. 3 in a global form, generalising the hazard function, certainly needs not only appropriate software support but also a bridge between the statistical line of thought and applications.

Meanwhile recent methods can be applied to face cancer, Carayanni and Kitsos [6], where the existing software offers great support. More geometrical knowledge, or even fractals, is needed to describe a tumour. But communication with Medicine might be difficult.

We need to keep the balance in how “Statistics in Action” has to behave, offering solutions to crucial problems of Risk Analysis, see Mandal et al. [31], while the theoretical work of Tan [36] adds a strong background but is not useful for practical problems. Since the time that Cox [10] provided a general solution for hazard functions, there has been an extensive development of Statistical Theory for Risk problems.

It might eventually be helpful to offer results, but we now believe it is also crucial to offer solutions to the corresponding fields working in Risk Analysis. That is, the practical background needs, we believe, to be widely known, as it is easier for practitioners to absorb, and the theoretical framework needs to be supported by appropriate software, so as to bridge the gap with practical applications.

References

1. Amaral-Mendes, J.J., Pluygers, E.: Use of biochemical and molecular biomarkers for cancer risk assessment in humans. In: Perspectives on Biologically Based Cancer Risk Assessment, pp. 81–182. Springer, Boston (1999)

2. Armitage, P., Doll, R.: The age distribution of cancer and a multi-stage theory of carcinogenesis. Br. J. Cancer 8, 1–12 (1954)

3. Blüthgen, A., Burt, R., Heeschen, W.H.: Heavy metals and other trace elements. Monograph on Residues and Contaminants in Milk and Milk Products. IDF Special Issue 9071, 65–73 (1997)

4. Blyth, C.R.: On Simpson’s paradox and the sure-thing principle. J. Am. Stat. Assoc. 67(338), 364–366 (1972)

5. Breslow, N.E., Day, N.E.: Statistical methods in cancer research. Volume I-The analysis of case-control studies. IARC Sci. Publ. (32), 5–338 (1980)

6. Carayanni, V., Kitsos, C.: Model oriented statistical analysis for cancer problems. In: Pilz, J., Oliveira, T., Moder, K., Kitsos, C. (eds.) Mindful Topics on Risk Analysis and Design of Experiments, pp. 37–53. Springer (2022)

7. Cogliano, V.J., Luebeck, E.G., Zapponi, G.A. (eds.): Perspectives on Biologically Based Cancer Risk Assessment (23). Springer Science & Business Media, Berlin (2012)

8. Cox, D.R.: Tests of separate families of hypotheses. In: Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability 1(0), 96 (1961)

9. Cox, D.R.: Analysis of Binary Data. Chapman and Hall, London (1970)

10. Cox, D.R.: Regression models and life-tables. J. R. Stat. Soc. Ser. B Methodol. 34(2), 187–202 (1972)

11. Crump, K.S., Hoel, D.G., Langley, C.H., Peto, R.: Fundamental carcinogenic processes and their implications for low dose risk assessment. Cancer Res. 36(9 Part 1), 2973–2979 (1976)

12. Edler, L., Kitsos, C. (eds.): Recent Advances in Quantitative Methods in Cancer and Human Health Risk Assessment. Wiley (2005)

13. Everitt, B.S.: Statistical Methods for Medical Investigations, pp. 105–115. Edward Arnold, London (1994)

14. Finney, D.J.: Probit Analysis. Cambridge University Press, Cambridge (1971)

15. Fisher, W.J., Trisher, A.M., Schiter, B., Standler, R.J.: Contaminations of milk and dairy products. (A) Contaminants resulting from agricultural and dairy practices. In: Roginski, H., Fuquay, J.W., Fox, P.F. (eds.) Encyclopedia of Dairy Sciences. Academic Press, Oxford (2003)

16. IARC: Polychlorinated dibenzo-paradioxins and polychlorinated dibenzofurans. In: Monographs of the Evaluation of the Carcinogenic Risk of Chemicals to Humans (69). IARC, Lyon (1977)

17. Kitsos, C.P.: The role of covariates in experimental carcinogenesis. Biom. Lett. 35(2), 95–106 (1998)

18. Kitsos, C.: Optimal designs for estimating the percentiles of the risk in multistage models of carcinogenesis. Biom. J.: J. Math. Methods Biosci. 41(1), 33–43 (1999)

19. Kitsos, C.P.: The cancer risk assessment as an optimal experimental design. Folia Histochem. Cytobiol. Suppl. 39(1), 16 (2001)

20. Kitsos, C.P.: On the logit methods for Ca problems. In: Vonta, F. (ed.) Statistical Methods for Biomedical and Technical Systems, pp. 335–340. Limassol, Cyprus (2006)

21. Kitsos, C.P.: Cancer Bioassays: A Statistical Approach. LAMBERT Academic Publisher, Saarbrucken, 326, 110 (2012)

22. Kitsos, C.P.: Sir David Cox: a wise and noble Statistician (1924–2022). EMS Mag. 124, 27–32 (2022)

23. Kitsos, C.P., Edler, L.: Cancer risk assessment for mixtures. In: Edler, L., Kitsos, C. (eds.) Recent Advances in Quantitative Methods in Cancer and Human Health Risk Assessment, pp. 283–298. Wiley (2005)

24. Kitsos, C.P., Kolovos, K.G.: A compilation of the D-optimal designs in chemical kinetics. Chem. Eng. Commun. 200(2), 185–204 (2013)

25. Kitsos, C.P., Toulias, T.L.: Generalized information criteria for the best logit model. In: Oliveira, T., Kitsos, C., Rigas, A., Gulati, S. (eds.) Theory and Practice of Risk Assessment, pp. 3–20. Springer, Cham (2015)

26. Kitsos, C.P., Tavoularis, N.K.: Logarithmic Sobolev inequalities for information measures. IEEE Trans. Inf. Theory 55(6), 2554–2561 (2009)

27. Kitsos, C.P., Toulias, T.L.: On the family of the \(\gamma \)-ordered normal distributions. Far East J. Theor. Stat. 35(2), 95–114 (2011)

28. Kitsos, C.P., Tsaknis, I.: Risk analysis on dairy products. In: Proceedings of the International Conference on Statistical Management of Risk Assessment, Lisbon 30–31 Aug (2007)

29. Kopp-Schneider, A.: Carcinogenesis models for risk assessment. Stat. Methods Med. Res. 6(4), 317–340 (1997)

30. Kopp-Schneider, A., Burkholder, I., Groos, J.: Stochastic carcinogenesis models. In: Edler, L., Kitsos, C. (eds.) Recent Advances in Quantitative Methods in Cancer and Human Health Risk Assessment, pp. 125–135. Wiley (2005)

31. Mandal, S., Biswas, A., Trandafir, P.C., Chowdhury, M.Z.I.: Optimal target allocation proportion for correlated binary responses in a 2\(\times \)2 setup. Stat. Probab. Lett. 83(9), 1991–1997 (2013)

32. Mashekova, A., Zhao, Y., Ng, E.Y., Zarikas, V., Fok, S.C., Mukhmetov, O.: Early detection of the breast cancer using infrared technology–a comprehensive review. Therm. Sci. Eng. Prog. 27, 101142 (2022)

33. McConnell, E.E., Moore, J.A., Haseman, J., Harris, M.W.: The comparative toxicity of chlorinated dibenzo-p-dioxins in mice and guinea pigs. Toxicol. Appl. Pharmacol. 44(2), 335–356 (1978)

34. Megill, R.E.: An Introduction to Risk Analysis. Penn. Well. Pub. Co., Tulsa (1984)

35. Nguyen, H.D., McLachlan, G.J.: Maximum likelihood estimation of triangular and polygonal distributions. Comput. Stat. Data Anal. 102, 23–36 (2016)

36. Tan, W.Y.: Stochastic Models for Carcinogenesis, vol. 116. CRC Press, Boca Raton (1991)

37. Toulias, T.L., Kitsos, C.P.: Hazard rate and future lifetime for the generalized normal distribution. In: Oliveira, T., Kitsos, C., Oliveira, A., Grilo, L. (eds.) Recent Studies on Risk Analysis and Statistical Modeling, pp. 165–180. Springer, Cham (2018)

38. US EPA: Help Manual for Benchmark Dose Software (2000)

39. World Health Organization: Guidelines for air quality (No. WHO/SDE/OEH/00.02). World Health Organization (2000)

40. Wright, Q.: A Study of War. University of Chicago Press, Chicago (1964)

Acknowledgements

I would like to thank the referees for their comments which improved the final version of this paper. The discussions with Associate Professor S. Fatouros are very much appreciated.

Appendices

Appendix 1

Let \(X_1, X_2,..., X_n\) be a set of n independent, identically distributed random variables with minimum value \(m\), mode \(M_0\) and maximum value \(M\).

With these three points \(m,\, M_0,\, M\) we can define a plane triangle with height \(h=\frac {2}{M-m}\) and the points \(m,\, M_0,\, M\) on the base of the triangle. Notice that for a continuous random variable the mode is not the value of X most likely to occur, as is the case for discrete random variables. It is worth noticing that the mode of a continuous random variable corresponds to the x value or values at which the probability density function (pdf) \(f(\cdot )\) reaches a local maximum, or a peak, i.e. it is the solution of the equation \(\frac {df(x)}{dx} = 0\). See Megill [34] for the definitions and a Euclidean Geometry development.

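The probability density function of the triangle distribution is defined as

\[ f(x)= \begin{cases} \dfrac {h}{d_1}\,(x-m), & m\le x\le M_0,\\[4pt] \dfrac {h}{d_2}\,(M-x), & M_0< x\le M,\\[4pt] 0, & \text{otherwise,} \end{cases} \]

with \(d_1=M_0-m,\, d_2=M-M_0\).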

If we let \(v=(m,M_0,M)\) and \(u=(M,m,M_0)\), \(\mathbf {1}=(1,1,1)\), then

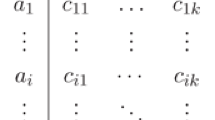

with \(\Vert \cdot \Vert \) the Euclidean norm and \(\langle \cdot ,\cdot \rangle \) the inner product of two vectors (Fig. 1, see also Megill [34]).
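The following sketch illustrates how the triangle distribution can be handled in practice from the three points \(m,\, M_0,\, M\) alone. It uses the standard moments of the triangular distribution, which in the vector notation above can be written as \(E(X)=\langle v,\mathbf {1}\rangle /3\) and \(\mathrm {Var}(X)=(\Vert v\Vert ^2-\langle v,u\rangle )/18\); this way of writing them is our reading of the notation and is an assumption, as are the function names and the numerical values.

```python
import random


def triangle_pdf(x, m, M0, M):
    """Density of the triangle distribution with minimum m, mode M0, maximum M (m < M)."""
    h = 2.0 / (M - m)  # height of the triangle, so that its area equals 1
    if M0 > m and m <= x <= M0:
        return h * (x - m) / (M0 - m)
    if M > M0 and M0 <= x <= M:
        return h * (M - x) / (M - M0)
    return 0.0


def triangle_mean_var(m, M0, M):
    """Mean and variance written with v = (m, M0, M), u = (M, m, M0) and 1 = (1, 1, 1)."""
    v = (m, M0, M)
    u = (M, m, M0)
    norm_sq = sum(a * a for a in v)             # squared Euclidean norm of v
    inner_vu = sum(a * b for a, b in zip(v, u))  # inner product <v, u>
    inner_v1 = sum(v)                            # inner product <v, 1>
    return inner_v1 / 3.0, (norm_sq - inner_vu) / 18.0


# Hypothetical data summary: minimum 1, mode 2, maximum 6.
mean, var = triangle_mean_var(1.0, 2.0, 6.0)
print(mean, var)

# Rough simulation check with the standard library generator (arguments: low, high, mode).
random.seed(0)
sample = [random.triangular(1.0, 6.0, 2.0) for _ in range(100_000)]
print(sum(sample) / len(sample))
```

Only three numbers are needed to specify the model, which is the simplicity referred to in Sect. 1.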

It is helpful that in the triangle distribution the mode lies within the range \(R\simeq 6\sigma \), \(\sigma \) being the standard deviation.

See also Nguyen and McLachlan [35] for a more general analysis for the triangle distribution.

Appendix 2

Let p be the number of parameters involved in the multivariate normal distribution. The \(\gamma \)-ordered Normal distribution \(GN^p(\gamma ;\mu ,\Sigma )\), induced from the Logarithmic Sobolev Inequality (LSI), behaves as a generalized multivariate normal distribution with an extra parameter \(\gamma \in \mathbb {R}\), and it is assumed that \(\gamma _1=\frac {\gamma }{\gamma -1}>0\).

The density function of the \(\gamma \)-ordered Normal is defined as

where

and the normalizing factor \(C(p,\gamma )\) equals

Notice that, from the definition in (2) the second-ordered Normal is the known normal distribution, i.e. \(GN^p(2;\mu , \Sigma )=N(\mu , \Sigma )\). In the spherically contoured case, i.e. when \(\Sigma =\sigma ^2 I_p\), the density \(f_\gamma \) is reduced to the form

For a random variable X following \(GN^p(\gamma ,\mu , \sigma ^2I_p)\) we can evaluate its mode, \(\text{Mode}(X)\), which is achieved, due to symmetry as in the classical Normal distribution, at \(x=\mu \), i.e.

which can be easily verified for the classical normal with \(\gamma =2\) and \(p=1\) (single variable), recall also the symmetry, see Kitsos and Toulias [27] for details.

Theorem 1 (Kitsos and Toulias [27])

The spherically contoured \(\gamma \)-order Generalised Normal distribution coincides with the p-variate normal distribution when \(\gamma =2\), with the p-variate uniform distribution when \(\gamma =1\), and with the p-variate Laplace distribution when \(\gamma =\pm \infty \).
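The limiting cases of Theorem 1 can be explored numerically in the univariate case. The sketch below assumes the univariate (\(p=1\)) density in the form \(f(x)=C\,\sigma ^{-1}\exp \{-g\,|(x-\mu )/\sigma |^{1/g}\}\) with \(g=(\gamma -1)/\gamma \) and \(C=\pi ^{-1/2}\,\Gamma (3/2)\,g^{g}/\Gamma (g+1)\). This form is an assumption on our part, chosen because it integrates to one and reproduces the three cases of the theorem; the function names are hypothetical.

```python
import math


def gn_pdf(x, mu=0.0, sigma=1.0, gamma=2.0):
    """Univariate gamma-ordered Normal density under the form assumed in the lead-in."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    if 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie outside [0, 1]")
    g = (gamma - 1.0) / gamma  # equals 1 / gamma_1 in the notation of Appendix 2
    c = math.gamma(1.5) / (math.sqrt(math.pi) * math.gamma(g + 1.0)) * g ** g
    z = abs(x - mu) / sigma
    try:
        power = z ** (1.0 / g)
    except OverflowError:
        return 0.0  # the exponent is so large that the density is numerically zero
    return c / sigma * math.exp(-g * power)


def integrate(f, a, b, n=20000):
    """Plain midpoint rule, sufficient for a rough normalisation check."""
    step = (b - a) / n
    return sum(f(a + (i + 0.5) * step) for i in range(n)) * step


print(gn_pdf(0.3, gamma=2.0))    # gamma = 2: standard Normal density at 0.3
print(gn_pdf(1.0, gamma=1e6))    # large gamma: close to the Laplace value exp(-1) / 2
print(gn_pdf(0.5, gamma=1.001))  # gamma near 1: close to the uniform value 1 / 2 on (-1, 1)
print(integrate(lambda x: gn_pdf(x, gamma=3.0), -20.0, 20.0))  # should be close to 1
```

Such a few-line implementation is only a starting point, but it suggests that the lack of software for this family is not due to computational difficulty in the univariate case.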

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Kitsos, C.P. (2023). Risk Analysis in Practice and Theory. In: Kitsos, C.P., Oliveira, T.A., Pierri, F., Restaino, M. (eds) Statistical Modelling and Risk Analysis. ICRA 2022. Springer Proceedings in Mathematics & Statistics, vol 430. Springer, Cham. https://doi.org/10.1007/978-3-031-39864-3_9

DOI: https://doi.org/10.1007/978-3-031-39864-3_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-39863-6

Online ISBN: 978-3-031-39864-3

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)