Abstract

We previously defined ItemSB as an extension of the concept of frequent itemsets and as a new interpretation of the association rule expression, in which an itemset has statistical properties as its background. We also proposed a method for discovering ItemSB by applying an evolutionary computation method called GNMiner. ItemSB has the potential to become a new baseline method for data analysis that bridges the gap between conventional data analysis using frequent itemsets and statistical analysis. In this study, we examine the statistical properties of ItemSB in the setting of two continuous variables, including their correlation coefficient, and discuss how to apply ItemSB to data analysis. As an extension of the discovery method, we define contrast ItemSB, an ItemSB that focuses on the existence of differences between two datasets. We further report the results of evaluation experiments that use contrast ItemSB to examine the properties of ItemSB from the perspective of reproducibility and reliability.


1 Introduction

The extraction of frequent itemsets and association rules is widely used as a basic technique in data mining [1]. An association rule expresses the fact that “if an instance (tuple) satisfies P, then it also satisfies Q” in a relational database. It is written as “if P then Q (\(P \rightarrow Q\)),” where P and Q are conditions on database attributes (items). When Q is a class attribute, the rule can be used for rule-based classification. In classification using association rules, the rules are readable and are considered useful for interpreting why an instance is assigned to a class.
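As a minimal sketch of the two standard measures behind a rule \(P \rightarrow Q\), the code below computes support (the fraction of records satisfying a condition) and confidence (the fraction of records satisfying P that also satisfy Q). It assumes binary items stored in dictionaries; the attribute names `A1`, `A2`, and `C` and the toy records are hypothetical.

```python
def support(records, items):
    """Fraction of records in which every attribute in `items` equals 1."""
    hits = sum(1 for r in records if all(r[a] == 1 for a in items))
    return hits / len(records)


def confidence(records, p, q):
    """Estimate of P(Q | P): supp(P and Q) / supp(P); 0.0 if P never occurs."""
    supp_p = support(records, p)
    return support(records, p | q) / supp_p if supp_p > 0 else 0.0


# Hypothetical toy data: binary attributes A1, A2 and a class attribute C.
records = [
    {"A1": 1, "A2": 1, "C": 1},
    {"A1": 1, "A2": 1, "C": 1},
    {"A1": 1, "A2": 0, "C": 0},
    {"A1": 0, "A2": 1, "C": 0},
]
print(support(records, {"A1", "A2"}))            # 0.5
print(confidence(records, {"A1", "A2"}, {"C"}))  # 1.0
```

Here the rule "if (A1 = 1) and (A2 = 1) then C = 1" holds in half of the records and without exception among the records that satisfy its condition.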

A method has been proposed for directly discovering class association rules that satisfy flexible conditions set by the user, without constructing frequent itemsets or going through any manual process, by using a network structure and an evolutionary computation method that accumulates results continuously over generations [2]. An extension of this method that determines an attribute combination P for which Q describes a small region of a continuous variable X has also been reported [3].

When the expression “if P then Q” describes an interesting rule, it indicates that the set of instances satisfying P (the set of records satisfying P, a small subset of the whole data) has a statistical characteristic Q that distinguishes it from the whole data. For example, if Q is a class attribute, the rule indicates that the class membership ratio is characteristic of the population satisfying P. Similarly, when Q describes a small distribution of a continuous quantity, the rule indicates that the values of the continuous variable X follow a characteristic distribution in the population satisfying P. Thus, an association rule can be interpreted as treating the group of records satisfying P as a single cluster and extracting that cluster with P as its label, where Q provides the basis for extracting the cluster. In this study, we regard Q in the expression “if P then Q” as an expression from conventional statistical analysis and treat a method to discover that “a statistical expression Q is found in a population that satisfies P.” Specifically, when Q involves two continuous variables X and Y in the database, this method can reveal that X and Y are correlated in a small population with attribute combination P, even though X and Y are not correlated in the entire database. In this study, we address the correlation between two continuous variables; this setting is expected to extend to conditions on the ratios and distributions of X and Y, and it is positioned as a basic method for linking large-scale data with conventional statistical analysis. We referred to such an attribute combination P as ItemSB (Itemsets with statistically distinctive backgrounds) and proposed a method for its discovery [4]. ItemSB has the potential to become a new baseline method for data analysis that bridges the gap between conventional data analysis using frequent itemsets and statistical analysis.
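The situation described above, where X and Y are uncorrelated in the whole database but correlated in the small population satisfying P, can be illustrated with synthetic data. The sketch below is only an illustration, not the discovery method: the 10% subgroup, the noise levels, and the seed are arbitrary assumptions, and P is represented by a hypothetical 0/1 flag.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


rng = random.Random(0)
records = []
for i in range(1000):
    p = 1 if i < 100 else 0  # 10% of the records satisfy the condition P
    x = rng.gauss(0, 1)
    # Inside P, Y closely tracks X; outside P, Y is independent noise.
    y = x + rng.gauss(0, 0.1) if p else rng.gauss(0, 1)
    records.append((p, x, y))

r_all = pearson([x for _, x, _ in records], [y for _, _, y in records])
sub = [(x, y) for p, x, y in records if p == 1]
r_sub = pearson([x for x, _ in sub], [y for _, y in sub])
print(f"whole database: r = {r_all:.2f}; population satisfying P: r = {r_sub:.2f}")
```

With this construction the whole-database coefficient stays near 0.1, while the coefficient inside P is close to 1, which is the kind of hidden relationship an ItemSB is meant to expose.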

In this paper, to examine the characteristics of ItemSB, we propose a method for discovering ItemSB that focuses on the differences between two datasets, and we report the results of evaluation experiments on the characteristics of ItemSB. In big data analysis, applying conventional statistical analysis methods to an entire dataset can be challenging. Therefore, to apply statistical analysis methods effectively to big data, the analysis should be conducted after establishing strata and dividing the records into subgroups. Typically, this subgrouping is defined a priori by the user, but it is desirable to determine it in an exploratory manner; for example, the user may wish to find a small group in which the two variables of interest are strongly correlated. Techniques for understanding and applying the characteristics of ItemSB are expected to form the basis of big data mining methods that combine such subgrouping with statistical analysis.

2 Contrast ItemSB

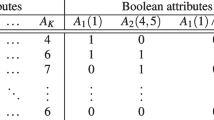

In this study, we define ItemSB as a set of rules that satisfy conditions predetermined by the user [4]. Specifically, the attribute combinations of \(A_i\) in Table 1 that appear to have statistical characteristics regarding X and Y are determined by expressing the following rule:

\[(A_j=1) \wedge \dots \wedge (A_k=1) \rightarrow (m_X, s_X, m_Y, s_Y, R_{X, Y})\]

where \(m_X\) and \(s_X\) are the mean and standard deviation of X, \(m_Y\) and \(s_Y\) are the mean and standard deviation of Y, and \(R_{X, Y}\) is the correlation coefficient between X and Y, all computed over the instances containing the itemset.
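A minimal sketch of how the right-hand side of an ItemSB rule, \((m_X, s_X, m_Y, s_Y, R_{X, Y})\), and the support of the itemset could be computed. The record layout `(items, x, y)`, with `items` holding the indices i for which \(A_i = 1\), and the function name `itemsb_stats` are hypothetical; the choice of the population standard deviation (`statistics.pstdev`) rather than the sample one is also an assumption.

```python
import math
import statistics


def pearson(xs, ys):
    """Pearson correlation; NaN when either variable is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy) if sxx > 0 and syy > 0 else math.nan


def itemsb_stats(records, itemset):
    """Support and (m_X, s_X, m_Y, s_Y, R_XY) over records containing `itemset`.

    Each record is (items, x, y): `items` is the set of indices i with A_i = 1.
    Returns None when fewer than two records contain the itemset.
    """
    sub = [(x, y) for items, x, y in records if itemset <= items]
    if len(sub) < 2:
        return None
    xs = [x for x, _ in sub]
    ys = [y for _, y in sub]
    return {
        "supp": len(sub) / len(records),
        "m_X": statistics.mean(xs),
        "s_X": statistics.pstdev(xs),  # population SD (assumption)
        "m_Y": statistics.mean(ys),
        "s_Y": statistics.pstdev(ys),
        "R_XY": pearson(xs, ys),
    }


records = [({1, 2}, 1.0, 2.0), ({1, 2}, 2.0, 4.0), ({1, 2, 3}, 3.0, 6.0), ({3}, 4.0, 1.0)]
print(itemsb_stats(records, {1, 2}))
```

For the itemset \((A_1=1) \wedge (A_2=1)\), three of the four toy records match, so the support is 0.75, and Y equals 2X within that group, so \(R_{X, Y} = 1\).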

We proposed a method for discovering ItemSBs (GNMiner) that combines the directed-graph network structure of genetic network programming (GNP) with an evolutionary computation method [4]. GNMiner employs an evolutionary strategy that accumulates the outcomes obtained in each generation. Unlike general evolutionary computation methods, the solution is not the best individual of the last generation; rather, numerous interesting rule candidates are represented in, and searched for by, the individuals, and whenever an interesting rule is found, it is stored in a rule library. Consequently, the method has the following characteristics: the setting of the fitness function during evolution has little influence on performance; rule discovery can be terminated at any time, because the method solves the problem cumulatively; and the flexibility of the individual network structure allows a variety of rule expressions. However, the method cannot guarantee the discovery of all the rules that satisfy the given conditions.
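The accumulation idea can be sketched independently of GNP. In the hypothetical code below, `candidate_gen` stands in for the rules represented by the individuals of one generation (here simply random pairs of attribute indices, with no genetic operators), and `toy_condition` stands in for the user's condition. It shows only how a rule library grows across generations and how the search can stop and later resume; it is not an implementation of GNMiner.

```python
import random


def accumulate_rules(candidate_gen, is_interesting, generations, library=None):
    """Store every interesting candidate found in any generation in a rule library.

    candidate_gen(g) returns the candidate itemsets examined in generation g
    (a hypothetical stand-in for the rules represented by GNP individuals).
    """
    library = set() if library is None else library
    for g in range(generations):
        for cand in candidate_gen(g):
            if is_interesting(cand):
                library.add(frozenset(cand))  # a set keeps the library free of duplicates
    return library


rng = random.Random(1)


def random_candidates(g, k=20):
    # Toy candidates: random pairs of attribute indices 1..10 (not evolved).
    return [rng.sample(range(1, 11), 2) for _ in range(k)]


def toy_condition(cand):
    # Hypothetical user condition, used only for illustration.
    return sum(cand) == 11


lib = accumulate_rules(random_candidates, toy_condition, generations=10)
# Anytime property: the search can stop and later resume from the saved library.
more = accumulate_rules(random_candidates, toy_condition, generations=10, library=set(lib))
print(len(lib), len(more))
```

Because the library only grows, stopping after any generation still returns every rule found so far, which mirrors the "terminate at any time" property described above.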

In data mining from dense databases, it is interesting to find differences between two datasets collected for the same subject under different settings (A/B), or between two groups (A/B) of data collected for the same subject. Let \(I=(A_j=1) \wedge \dots \wedge (A_k=1)\) be an ItemSB evaluated in both Databases A and B. We define I as a contrast ItemSB when \(I^A\): \(I \rightarrow (m_X^A, s_X^A, m_Y^A, s_Y^A, R_{X, Y}^A)\) in Database A satisfies the condition given by the user, whereas \(I^B\): \(I \rightarrow (m_X^B, s_X^B, m_Y^B, s_Y^B, R_{X, Y}^B)\) in Database B does not satisfy the same condition. The basic idea of contrast ItemSB discovery was introduced in [4].
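The contrast ItemSB definition can be written as a predicate over the two databases. In the sketch below, the user condition is passed in as a function, so no particular form of the interestingness condition is assumed; the example condition `strong_corr` (\(|R_{X, Y}| \ge 0.8\)), the record layout `(items, x, y)`, and the toy databases are hypothetical. Treating an itemset that is too rare in Database B as "not satisfying" the condition there is also an assumption.

```python
import math


def pearson(xs, ys):
    """Pearson correlation; NaN when either variable is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy) if sxx > 0 and syy > 0 else math.nan


def subgroup_stats(db, itemset):
    """Support, means, and correlation over records (items, x, y) containing `itemset`."""
    sub = [(x, y) for items, x, y in db if itemset <= items]
    if len(sub) < 2:
        return None
    xs, ys = [x for x, _ in sub], [y for _, y in sub]
    return {"supp": len(sub) / len(db), "m_X": sum(xs) / len(xs),
            "m_Y": sum(ys) / len(ys), "R_XY": pearson(xs, ys)}


def is_contrast(db_a, db_b, itemset, condition):
    """True if the user condition holds for `itemset` in Database A but not in Database B.

    Assumption: an itemset with too few records in B counts as not satisfying it there.
    """
    s_a, s_b = subgroup_stats(db_a, itemset), subgroup_stats(db_b, itemset)
    return s_a is not None and condition(s_a) and not (s_b is not None and condition(s_b))


def strong_corr(s):
    # Hypothetical user condition: |R_XY| >= 0.8 (a NaN coefficient compares False).
    return abs(s["R_XY"]) >= 0.8


db_a = [({1}, 1.0, 1.0), ({1}, 2.0, 2.1), ({1}, 3.0, 2.9), ({2}, 0.0, 5.0)]
db_b = [({1}, 1.0, 3.0), ({1}, 2.0, 1.0), ({1}, 3.0, 3.0), ({2}, 0.0, 0.0)]
print(is_contrast(db_a, db_b, {1}, strong_corr))  # True
print(is_contrast(db_b, db_a, {1}, strong_corr))  # False
```

In the toy data, X and Y are almost perfectly correlated among the records with \(A_1 = 1\) in Database A but uncorrelated in Database B, so \(A_1 = 1\) is a contrast ItemSB in the direction A to B only.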

In this study, the interestingness of a contrast ItemSB is defined as follows:

where \(R_{min}\) is a constant provided by the user in advance. In addition, a condition on support (frequency of occurrence) is added to the interestingness criterion:

where \(supp_{min}\) is a constant provided by the user in advance. To evaluate the reproducibility and reliability of ItemSB, we used an interestingness setting different from that in [4].

3 Experimental Results

The YearPredictionMSD (YPMSD) dataset [5] from the UCI Machine Learning Repository [6] was used to evaluate the proposed method.

The characteristics of the YPMSD dataset are summarized as follows:

- the task is to predict the release year of a song from its audio features;
- there are 90 attributes without missing values, T(j) (\(j=0, \dots , 89\)), and the target attribute value (Y) is the year, ranging from 1922 to 2011;
- there are 463,715 instances for training and 51,630 instances for testing.

We set \(X=T(0)\) and discretized the continuous attribute values T(j) (\(j=1, \dots , 89\)) into sets of binary attributes \(A_{3j-2}\), \(A_{3j-1}\), and \(A_{3j}\) that take values of 1 or 0. Discretization was performed in the same manner as in [4]. The resulting 267 transformed attributes (\(A_1, \dots , A_{267}\)) and the continuous attributes X and Y were used. The first 100,000 of the 463,715 training instances were used as Database A, and the 51,630 testing instances were used as Database B. The detailed experimental setup, including the GNP settings, was the same as that in [4]. The final solution was the set of ItemSBs, without duplicates, obtained from 100 independent trials.
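The index mapping from T(j) to \(A_{3j-2}\), \(A_{3j-1}\), and \(A_{3j}\) and the split into Databases A and B can be sketched as follows. The cut points are an assumption: the paper follows the discretization of [4], whereas this hypothetical sketch uses tertiles (equal-frequency bins from `statistics.quantiles`) purely for illustration, so that exactly one of the three derived attributes is 1 for each value.

```python
import statistics


def attribute_indices(j):
    """Indices of the binary attributes derived from T(j): A_{3j-2}, A_{3j-1}, A_{3j}."""
    return (3 * j - 2, 3 * j - 1, 3 * j)


def discretize_column(values, j):
    """For each value of T(j), return the index of the one derived attribute set to 1.

    Hypothetical rule: tertile cut points (equal-frequency bins). The paper
    follows the procedure of [4], which may use different cut points.
    """
    low_cut, high_cut = statistics.quantiles(values, n=3)
    lo, mid, hi = attribute_indices(j)
    return [lo if v <= low_cut else mid if v <= high_cut else hi for v in values]


def split_databases(train, test, n_a=100_000):
    """Database A: first n_a training instances; Database B: all testing instances."""
    return train[:n_a], test


print(attribute_indices(1), attribute_indices(89))  # (1, 2, 3) (265, 266, 267)
print(discretize_column(list(range(1, 10)), j=2))   # [4, 4, 4, 5, 5, 5, 6, 6, 6]
```

The mapping for j = 89 ends at \(A_{267}\), which matches the 267 transformed attributes used in the experiment.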

The following conditions were used for contrast ItemSB discovery:

The purpose of this experiment was to gain insight into the reproducibility of ItemSBs across the two databases so that reliable ItemSB discovery could be performed. The fitness of a GNP individual was defined as

where \(I_c\) is the set of indices of the extracted contrast ItemSBs in the GNP individual that satisfy (4) and (5), \(n_P(I)\) is the number of attributes in I (we set \(n_P(I) \le 6\)), and \(c_{new}(I)\) is a constant representing the novelty of I.

After 100 rounds of discovery, 133,475 ItemSBs were obtained. Figure 1 shows scatter plots of the support value, correlation coefficient, mean values of X and Y, and standard deviations of X and Y for these ItemSBs. Figure 2 shows the corresponding scatter plots for the 9,365 ItemSBs satisfying the additional condition \(supp_{min}=0.015\). As shown in Figs. 1 and 2, contrast ItemSBs tended not to occur among ItemSBs with large support values. In addition, the values of the correlation coefficients tended to differ between Databases A and B, whereas the mean values of X and Y were reproducible. Figure 2 focuses on ItemSBs with relatively large support values; compared with Fig. 1, however, the range of the mean values of X and Y is narrower, resulting in a skewed distribution. This suggests that when ItemSB is used for classification or stratification by subgroup, ItemSBs with a relatively high frequency of occurrence are preferable. However, ItemSBs with a comparatively high frequency of occurrence may not be sufficient to cover all cases. Moreover, even when ItemSBs with large correlation coefficients are found, their frequency of occurrence tends to be low, and their correlation coefficient values may not be reproducible when the ItemSBs are examined in two datasets collected under the same conditions.
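The paper compares Databases A and B visually through scatter plots. As a hedged complement, the hypothetical sketch below filters discovered ItemSBs by \(supp_{min}=0.015\) and summarizes reproducibility as the mean absolute difference of a statistic between the two databases. Measuring support in Database A, the dictionary layout, and the toy values are all assumptions for illustration; they are not results from the experiment.

```python
import math


def filter_by_support(results, supp_min=0.015):
    """Keep ItemSBs whose support is at least supp_min (assumed: support in Database A)."""
    return [r for r in results if r["A"]["supp"] >= supp_min]


def mean_abs_gap(results, key):
    """Average |value in A - value in B| of one statistic; NaN for an empty list."""
    if not results:
        return math.nan
    return sum(abs(r["A"][key] - r["B"][key]) for r in results) / len(results)


# Hypothetical toy values for two ItemSBs (not results from the paper).
results = [
    {"A": {"supp": 0.020, "m_X": 1.0, "R_XY": 0.70}, "B": {"supp": 0.018, "m_X": 1.1, "R_XY": 0.20}},
    {"A": {"supp": 0.005, "m_X": 2.0, "R_XY": 0.90}, "B": {"supp": 0.004, "m_X": 1.0, "R_XY": 0.10}},
]
kept = filter_by_support(results)
print(len(kept), mean_abs_gap(kept, "m_X"), mean_abs_gap(kept, "R_XY"))
```

A small gap for the means alongside a large gap for \(R_{X, Y}\) would correspond to the pattern reported above, in which mean values were reproducible but correlation coefficients were not.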

These results suggest that the frequency of occurrence (support), on which the conventional use of itemsets is based, is reproducible and reliable. The same appears to hold for mean values. However, when a more complex value such as the correlation coefficient is used as a statistical feature of ItemSB, evaluating its reliability during the discovery process remains an issue.

4 Conclusions

As an extension of the concept of frequent itemsets and a new interpretation of the association rule expression, we defined ItemSB as a set of items with statistical properties as its background and discussed a method for finding ItemSB by applying an evolutionary computation method called GNMiner. In this study, we discussed the statistical properties of ItemSB as a basis for big data analysis and its application to forecasting and classification, focusing on the setting in which two continuous variables form the statistical background of ItemSB. The experimental results on publicly available data indicate that, when the correlation coefficient is used as the statistical background of ItemSB, the reproducibility of ItemSB characteristics must be examined, even for small groups defined by the same combination of items. In the future, we aim to establish desirable ItemSB discovery conditions in collaboration with researchers who have expertise in the data to which ItemSB is applied, and to improve the reproducibility and reliability of the ItemSB discovery process.

References

1. Agrawal, R., Srikant, R.: Fast algorithms for mining association rules. In: Proceedings of the 20th International Conference on Very Large Data Bases (VLDB), pp. 487–499 (1994)

2. Shimada, K., Hirasawa, K., Hu, J.: Class association rule mining with chi-squared test using genetic network programming. In: Proceedings of the IEEE Conference on Systems, Man, and Cybernetics, pp. 5338–5344 (2006)

3. Shimada, K., Hanioka, T.: An evolutionary method for associative local distribution rule mining. In: Perner, P. (ed.) ICDM 2013. LNCS (LNAI), vol. 7987, pp. 239–253. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-39736-3_19

4. Shimada, K., Arahira, T., Matsuno, S.: ItemSB: Itemsets with statistically distinctive backgrounds discovered by evolutionary method. Int. J. Semant. Comput. 16(3), 357–378 (2022)

5. Bertin-Mahieux, T., Ellis, D.P.W., Whitman, B., Lamere, P.: The million song dataset. In: Proceedings of the 12th International Society for Music Information Retrieval Conference, pp. 591–596 (2011)

6. Kelly, M., Longjohn, R., Nottingham, K.: The UCI machine learning repository. https://archive.ics.uci.edu

Acknowledgment

This study was partially supported by JSPS KAKENHI under Grant Number JP20K11964.


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Shimada, K., Matsuno, S., Saito, S. (2023). Discovery of Contrast Itemset with Statistical Background Between Two Continuous Variables. In: Wrembel, R., Gamper, J., Kotsis, G., Tjoa, A.M., Khalil, I. (eds) Big Data Analytics and Knowledge Discovery. DaWaK 2023. Lecture Notes in Computer Science, vol 14148. Springer, Cham. https://doi.org/10.1007/978-3-031-39831-5_11


DOI: https://doi.org/10.1007/978-3-031-39831-5_11


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-39830-8

Online ISBN: 978-3-031-39831-5
