Abstract

In recent years, growing public concern about environmental and personal safety has drawn wide attention to image-based detection techniques. Object-detection algorithms can broadly be divided into two categories: traditional approaches, which rely on manually designed features, and deep learning-based approaches, which learn features automatically from images. Because the former demand considerable manpower, material resources, money, and time to screen abnormal images, they no longer meet society's urgent needs. In other words, more intelligent identification systems are required.

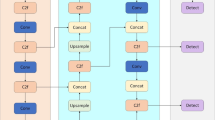

For public security, detecting different types of weapons, such as sticks, knives, and guns, in surveillance images can help prevent people from carrying weapons to commit violent acts or seek revenge. To identify weapons, we need to distinguish them from other objects in surveillance images in real time. However, most cameras have limited computing power, and images captured in the real world suffer from problems such as noise, blur, and rotation jitter, which must be addressed to detect weapons correctly. Therefore, in this study, we develop a weapon detection system for surveillance images based on a deep learning model. The detection model is YOLOv5 (You Only Look Once, version 5), a lightweight architecture in the YOLO series, and the Sohas (Small Objects Handled Similarly to a weapon) dataset is used for the detection comparison. According to our simulation results, we reduced the number of parameters in the YOLOv5s model by substituting the backbone with ShuffleNetV2, replacing the PANet upsample module in the neck with the CARAFE (Content-Aware ReAssembly of FEatures) upsample module, and replacing the SPPF (Spatial Pyramid Pooling-Fast) module with three lightweight options of simp PPF. These changes resulted in a 16.35% reduction in the parameter size of the YOLOv5s model and a 30.38% increase in FLOPS computational efficiency, at the cost of a 0.024 decrease in mAP@0.5.
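The two kinds of figures quoted above, a relative reduction in parameters and mAP@0.5, can be illustrated with a minimal Python sketch. It is not the authors' evaluation code. The function names (`iou`, `is_true_positive`, `relative_reduction`) and all numbers in the usage lines are hypothetical, and boxes are assumed to be given as `(x_min, y_min, x_max, y_max)`. The sketch only shows the usual reading of "@0.5": a predicted box counts as a correct detection when its intersection over union (IoU) with a ground-truth box is at least 0.5.

```python
from typing import Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """A prediction matches a ground-truth box when IoU >= threshold."""
    return iou(pred, truth) >= threshold


def relative_reduction(baseline: float, modified: float) -> float:
    """Percentage by which `modified` is smaller than `baseline`."""
    return 100.0 * (baseline - modified) / baseline


# Hypothetical boxes: the overlap is 80 of a 120-unit union, so IoU is about 0.67.
print(is_true_positive((0, 0, 10, 10), (2, 0, 12, 10)))
# Hypothetical parameter counts, not the paper's actual model sizes.
print(relative_reduction(1000, 800))
```

Average precision is then computed per class from the precision-recall curve of such matches, and mAP@0.5 is the mean over classes; that aggregation step is omitted here to keep the sketch short.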

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Su, TY., Leu, FY. (2023). The Design and Implementation of a Weapon Detection System Based on the YOLOv5 Object Detection Algorithm. In: Barolli, L. (eds) Innovative Mobile and Internet Services in Ubiquitous Computing. IMIS 2023. Lecture Notes on Data Engineering and Communications Technologies, vol 177. Springer, Cham. https://doi.org/10.1007/978-3-031-35836-4_30

DOI: https://doi.org/10.1007/978-3-031-35836-4_30

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-35835-7

Online ISBN: 978-3-031-35836-4

eBook Packages: Intelligent Technologies and Robotics (R0)