Abstract

Disaster management is a crucial process that aims at limiting the consequences of a natural disaster. Disaster-related data, which are heterogeneous and multi-source, should be shared among the different actors involved in the management process to enhance interoperability. In addition, these data can be used to infer new information that helps in decision making. The evacuation of flood victims during a flood disaster is critical and should be simple, rapid and efficient to ensure the victims’ safety. In this paper, we present an ontology that allows integrating flood-related data, sharing them with the various actors involved, and updating them in real time throughout the flood. Furthermore, we propose using the ontology together with semantic reasoning to infer new information representing the evacuation priorities of places impacted by the flood, in order to assist the disaster management process. The evaluation results show that the proposed approach is efficient both for enhancing information interoperability and for inferring evacuation priorities.

1 Introduction

Natural disasters, such as floods, are adverse events resulting from the Earth’s natural processes. They can lead to severe consequences, including threats to people’s lives, disruption of their normal life, and physical damage to property, infrastructure and the economy. Hence the need for a disaster management process to limit these consequences. Disaster-related data can be provided by various data sources, and they are usually stored separately. Since various actors are involved in the disaster management process, interoperability problems are common in such situations. Interoperability is defined as the ability of systems to provide services to and accept services from other systems and to use these services to operate effectively together [16]; its absence impacts the disaster management process and could adversely affect disaster response efforts [3]. The problem thus lies in the lack of formalized and structured knowledge and of proper sharing and propagation of this knowledge among the different parties involved in the flood management process, which results in delayed decision making.

The success of the disaster management process is interpreted as “getting the right resources to the right place at the right time; to provide the right information to the right people to make the right decisions at the right level and the right time” [16]. Disaster management is defined as a lifecycle of four phases: mitigation, preparedness, response and recovery [4]. The safety of the population is the most important concern during a disaster; therefore, the evacuation of victims in the response phase should take place immediately. This process is handled by domain experts, namely the firefighters responsible for taking evacuation actions. It is a critical process that must satisfy constraints of efficiency, simplicity and speed to ensure the population’s safety.

This work is conducted within the framework of the “ANR inondations” e-flooding project (Footnote 1). This project focuses on the mitigation and response phases. It aims at integrating expertise from several disciplines to prevent flash floods and at experimenting with the effects of decision making on two timescales, short-term and long-term. The short-term timescale aims at optimizing the disaster management process during the disaster, while the long-term timescale aims at improving the territories’ resilience for risk prevention five to ten years after the disaster. Our work lies in the short-term timescale of the project, where we propose to assist in the flood disaster response phase. The objective of our work is two-fold. First, we aim at solving the interoperability problem by managing flood-related data and sharing them among the different actors involved in the management process. Second, we aim at assisting the experts in making decisions about victims’ evacuation by proposing evacuation priorities for the demand points in a flooded area. We define a demand point as a place that can be impacted by a flood and thus needs to be evacuated if it contains population.

Heterogeneous multi-source data can be structured using ontologies. An ontology is defined as a formal, explicit specification of a shared conceptualization [14]. It allows structuring and logically representing knowledge by defining concepts and relations among them, thus providing a shared vocabulary. Ontology-driven systems have gained popularity as they enable semantic interoperability, flexibility and reasoning support [12]. By integrating data according to the concepts and relations of an ontology, we can form a knowledge graph. Ontology-based approaches have been proposed in the domain of natural disasters both for managing and sharing information among the actors involved in the flood management process and for inferring new information. In this work, we propose an ontology that allows managing and sharing information to enhance interoperability. Using this ontology, we then aim at assisting the firefighters responsible for the evacuation process in taking decisions by proposing evacuation priorities for all demand points in the flooded area.

The paper is organized as follows. Section 2 discusses related work in the domain of flood disasters. Section 3 presents our proposed approach. Enhancing interoperability is discussed in Sect. 4, and inferring evacuation priorities in Sect. 5. The evaluation of our approach is presented in Sect. 6, and Sect. 7 concludes and discusses future work.

2 Related Work

Ontologies have been widely proposed in the literature in various domains including the domain of flood disasters. We distinguish two objectives of the use of ontologies in this domain: information management and sharing as well as reasoning to infer new information. In this section, we discuss the related work for these two objectives.

2.1 Ontologies for Information Management and Sharing

Ontology-based approaches have been proposed in the domain of flood disasters for managing and sharing information among the different actors involved in the disaster management process. In [5], the authors build an ontology for integrating flood-related data to ensure the coordination of response activities among the different agencies involved and to provide up-to-date information that facilitates decision making by the management committee chairman. Another ontology is proposed by [11] for integrating local knowledge into the flood management process; the authors define local knowledge as the knowledge comprising the preferences of stakeholders and decision makers. These preferences are expressed by describing the data through the proposed ontology, which defines concepts describing events and their properties, such as “hazard” and “vulnerability”, as well as concepts describing population, material infrastructure and elements at risk. In [6], the authors present an ontology built to enhance information sharing among different actors relying on different systems within organizations. They manage static data that do not change during the flood as well as dynamic data that evolve throughout it. Static data are described through concepts including “area”, “flood event” and “flood evacuation”, and dynamic data through concepts representing coordination and production acts concerning the disaster. A flood ontology is proposed by [17] to solve problems of data inaccuracy or unavailability among different agencies. The authors build an ontology for each agency and integrate them into a global ontology to allow information sharing among agencies. The ontology of evacuation centers includes concepts describing general data about victims and evacuation centers.

2.2 Ontology-Based Approaches for Inferring New Information

Some ontology-based approaches in the literature infer new information from flood-related data. The flood ontology of [11], presented above, is used in a risk assessment framework to detect flood risks as follows. A user chooses an event type and defines its intensity. The framework then identifies the intensity parameters suitable for this event and infers the elements at risk that are susceptible to it by matching susceptibility functions against the event through the “isSusceptiblityTo” relation, which links each susceptibility function to the respective event types. Another ontology is proposed by [7] to capture dynamically evolving phenomena in order to understand the dynamic spatio-temporal behaviour of a flood disaster. The authors then discuss a reasoning approach that relies on the ontology to infer new information about image regions from their temporal interval relations using SWRL rules (Footnote 2), which are reasoning rules used to infer new information from a knowledge graph. In [15], the authors present a hydrological sensor web ontology that integrates heterogeneous sensor data during a natural disaster. It uses concepts describing sensors and observations and integrates concepts describing temporal and geospatial data. The authors then present a reasoning approach to infer flood phases from the precipitation level and observation data.

The presented approaches show that ontologies are commonly used to integrate and share flood-related data among the actors involved in the flood management process, while some approaches also reason over the ontology to infer new information. Some ontologies define concepts related to victims’ evacuation, such as flood, victim, evacuation areas, resources and centers [6, 17]; however, these concepts are not exploited to infer new information that assists in the evacuation process.

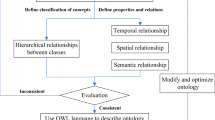

3 Approach Presentation

As previously discussed, the aim of our work is to enhance interoperability among the actors involved in the disaster management process and to assist in the evacuation of flood victims. Our approach is presented in Fig. 1 and detailed as follows. We first propose an ontology that formally defines concepts and relations describing flood-related data. We then rely on the ontology to build a knowledge graph that integrates all the data and is updated regularly with evolving data. This knowledge graph can be shared among the actors involved in the flood management process so that each actor can access the needed data at the right time. We further use this knowledge graph in a reasoning approach to infer new information representing evacuation priorities for all demand points in the study area, in order to help firefighters make rapid and efficient decisions about the evacuation of flood victims.

3.1 Data Description

Our study area concerns the Pyrénées flood that occurred in June 2013 in Bagnères-de-Luchon, south-western France. It was a particularly destructive torrential flood that was dangerous to the population, and it led to many destructive consequences, including damaged houses and farms, cut roads and flooded campsites. In total, 240 people were evacuated from the areas impacted by this flood.

The flood-related data of our study area come from various data sources. These include institutional databases used in disaster management, such as BD TOPO (Footnote 3) and GeoSirene (Footnote 4), which provide data about hazards, vulnerability, damage and resilience. Certain data sources provide the geographical locations of roads, buildings and companies in France. Other sources include sensors providing water levels and flows, a hydrological model computing flood generation and a hydraulic model for flood propagation. Further sources provide resilience data corresponding to actions taken in the past, socio-economic data and population data, together with danger and vulnerability indices of the flood calculated by domain experts. The vulnerability index measures a demand point’s vulnerability and is calculated from topographic and social data such as population density, building quality and socio-economic conditions. The danger index measures the danger level of a demand point and is calculated from the water level and speed obtained from a hydraulic model. These data are classified as static or dynamic: static data include the number of floors and geographic locations, while dynamic data include water levels and the population in a demand point.

4 Enhancing Interoperability

To handle the problem of interoperability among different involved actors in the disaster management process, we have proposed, in a previous work [1], a flood ontology that formally describes and thus provides semantics to the flood-related data.

4.1 Flood Ontology

We define a class (concept) named “demand point” representing an infrastructure. It has four subclasses that represent the evacuation priorities, defined for later use (Sect. 5). The class “Material infrastructure” describes all kinds of infrastructure, including habitats, working places and healthcare facilities. In addition, we define a novel class named “Infrastructure aggregation” that allows managing different kinds of infrastructure in an aggregated manner. This class groups infrastructure into districts, buildings and floors, so that we can describe all the infrastructure that a district contains. For example, using the “has part” relation, we can describe that a district has buildings, a building has floors, and a floor has apartments. Thanks to the “Infrastructure aggregation” class, an infrastructure is described by its own data as well as by the data of the infrastructure that contains it. This is useful when the data about a specific infrastructure are unavailable or uncertain; in this case, the infrastructure can be described by the data of the infrastructure containing it.
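As a rough illustration of this fallback, the plain-Python sketch below climbs the containment hierarchy until some level provides a value. It assumes that each infrastructure records the aggregation containing it (the inverse of “has part”). All identifiers and values are hypothetical, and the ontology itself expresses these links as RDF triples rather than dictionaries.

```python
from typing import Dict, Optional

# Hypothetical containment links (the inverse of "has part"):
# apartment -> floor -> building -> district.
CONTAINED_IN: Dict[str, str] = {
    "apartment_12": "floor_1",
    "floor_1": "building_A",
    "building_A": "district_north",
}

# Hypothetical property values; None marks missing or uncertain data.
PROPERTIES: Dict[str, Dict[str, Optional[float]]] = {
    "apartment_12": {"vulnerability_index": None, "submersion_height_m": 0.3},
    "floor_1": {"vulnerability_index": None},
    "building_A": {"vulnerability_index": 0.7},
    "district_north": {"vulnerability_index": 0.4},
}


def resolve_property(entity: str, prop: str) -> Optional[float]:
    """Return the entity's own value, else the value of the nearest container that has one."""
    current: Optional[str] = entity
    while current is not None:
        value = PROPERTIES.get(current, {}).get(prop)
        if value is not None:
            return value
        current = CONTAINED_IN.get(current)  # climb one level of aggregation
    return None  # no level of the hierarchy knows this property
```

For instance, the apartment has no vulnerability index of its own, so `resolve_property("apartment_12", "vulnerability_index")` falls back to the building’s value, 0.7.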

The class “population” describes the population in an infrastructure, including fragile and non-fragile population. This class is reused from [11] with some additional details. “Fragile population” describes elderly people, children, and persons with disabilities, reduced mobility or illnesses, who should be given a high evacuation priority when a flood occurs. “Non-fragile population” represents all other persons. The relation “is in” states that a population type is in an infrastructure (or an infrastructure aggregation).

In addition to the classes, we define object and data properties. Object properties represent the relations that link classes, and they are divided into static and dynamic object properties. Static object properties represent relations between classes describing static data, such as the “contains” relation that links an infrastructure or an infrastructure aggregation to a population. Data properties describe the classes, and they are likewise divided into static and dynamic data properties. Static data properties include a building’s vulnerability index and number of floors, while dynamic data properties include the danger index, submersion height, flood duration, population count and whether a demand point is inhabited.

Our ontology contains 41 classes, 6 object properties and 23 data properties. It is represented as RDF triples (Footnote 5) and is available online (Footnote 6). A visualization of the ontology classes is presented in Fig. 2, where the rectangles represent the classes of the ontology and the arrows represent the relations between classes.

4.2 Knowledge Graph

We have built our knowledge graph, which integrates the heterogeneous flood-related data, based on the concepts, relations and properties of our proposed ontology [1]. It contains all data instances in RDF format. The static data are first integrated and transformed into RDF triples, which are added to the ontology triples. The dynamic data are then integrated, transformed into RDF triples and added to the ontology and static data triples, and they are updated in real time throughout the flood. The RDF transformation of static and dynamic data is performed in Python using the rdflib library, mapping the data onto the concepts and relations of the ontology (Footnote 7).
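The two-stage construction can be sketched with a tiny in-memory triple set: static triples are added once, and dynamic triples keep being updated. This is a stand-in for the rdflib graph used in the paper, and the `ex:` property names are hypothetical. The key design choice is that a dynamic property is overwritten rather than accumulated, so the graph always holds the latest observation.

```python
from typing import Optional, Set, Tuple

Triple = Tuple[str, str, object]


class TinyKnowledgeGraph:
    """Minimal in-memory triple set standing in for an RDF graph."""

    def __init__(self) -> None:
        self.triples: Set[Triple] = set()

    def add(self, s: str, p: str, o: object) -> None:
        """Add a triple (used for ontology and static data)."""
        self.triples.add((s, p, o))

    def set_value(self, s: str, p: str, o: object) -> None:
        """Replace every existing value of (s, p) with o (used for dynamic data)."""
        self.triples = {t for t in self.triples if (t[0], t[1]) != (s, p)}
        self.triples.add((s, p, o))

    def value(self, s: str, p: str) -> Optional[object]:
        """Return one object for (s, p), or None if absent."""
        return next((o for (ts, tp, o) in self.triples if (ts, tp) == (s, p)), None)


kg = TinyKnowledgeGraph()
# 1) Static data, integrated once.
kg.add("ex:building_A", "rdf:type", "ex:DemandPoint")
kg.add("ex:building_A", "ex:numberOfFloors", 3)
# 2) Dynamic data, updated as new observations arrive during the flood.
kg.set_value("ex:building_A", "ex:submersionHeight", 0.4)
kg.set_value("ex:building_A", "ex:submersionHeight", 1.2)  # later reading replaces 0.4
```

In rdflib, `Graph.set((s, p, o))` provides the same remove-then-add behaviour for a given subject and predicate.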

The knowledge graph is shared among all the actors involved in the management process to enhance information interoperability and ensure that each actor can access the needed data at the right time. To facilitate data access by the actors of the management process, the knowledge graph can be displayed in QGIS (Footnote 8), a cross-platform desktop geographic information system application that supports viewing, editing and analyzing geospatial data. The actors can choose which data to visualize to better understand the flood event and take relevant decisions.

5 Inferring Evacuation Priorities

Beyond information sharing and enhanced interoperability, the knowledge graph can be used to infer new information that is not explicitly present in it, thus enriching it. The enriched knowledge graph can then be shared so that the actors use the new information to take more efficient decisions.

Flood disaster management is a critical process, as it concerns limiting adverse consequences and, most importantly, saving people’s lives. Therefore, the evacuation of victims should be efficient, rapid and simple to ensure their safety. The firefighters involved in the evacuation process are usually not experts in the new automated techniques that could facilitate it, so any automated assistance should respect the delicacy of this process. In our work, we propose assisting the concerned actors in taking evacuation decisions through reasoning approaches that infer new information from the knowledge graph, representing the evacuation priorities of the demand points in the study area. To this end, we evaluate two approaches for inferring the priorities, and we discuss the advantages and limitations of each.

5.1 Evacuation Priorities

The domain experts in our project have defined four evacuation priorities for demand points, independently of the study area: Evacuate immediately, Evacuate in 6 h (hours), Evacuate in 12 h and No evacuation. Each priority corresponds to a set of conditions on the properties of demand points, and a demand point whose properties satisfy the conditions of an evacuation priority is typed with this priority. The properties used to define the evacuation priorities are static and dynamic data properties defined in the ontology to describe demand points. They include the danger and vulnerability indices, flood duration, number of floors, submersion height, housing type and whether the demand point is inhabitable. The conditions are mutually exclusive, to avoid conflicting cases, and they cover all possible property values describing demand points. The conditions defining the priority “Evacuate in 12 h” are displayed in Fig. 3.
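The exclusivity and coverage requirements can be checked mechanically: for any demand point, the conditions of exactly one priority must hold. The sketch below encodes four priorities as Python predicates. The thresholds, the 1 to 4 index scales and the reduced set of properties are hypothetical placeholders, not the experts’ actual conditions (see Fig. 3 for those).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class DemandPoint:
    danger_index: int         # hypothetical scale: 1 (low) to 4 (very high)
    vulnerability_index: int  # hypothetical scale: 1 (low) to 4 (very high)
    inhabited: bool


# Hypothetical, mutually exclusive conditions; NOT the experts' rules from Fig. 3.
PRIORITIES: Dict[str, Callable[[DemandPoint], bool]] = {
    "EvacuateImmediately": lambda d: d.inhabited and d.danger_index >= 3 and d.vulnerability_index >= 3,
    "EvacuateIn6h": lambda d: d.inhabited and d.danger_index >= 3 and d.vulnerability_index < 3,
    "EvacuateIn12h": lambda d: d.inhabited and d.danger_index == 2,
    "NoEvacuation": lambda d: (not d.inhabited) or d.danger_index <= 1,
}


def infer_priority(d: DemandPoint) -> str:
    """Return the single priority whose conditions hold; fail loudly otherwise."""
    matches = [name for name, condition in PRIORITIES.items() if condition(d)]
    if len(matches) != 1:
        raise ValueError(f"conditions not exclusive/exhaustive for {d}: {matches}")
    return matches[0]


# Exhaustive check over the whole (hypothetical) discrete domain: raises on any gap or overlap.
ALL_POINTS: List[DemandPoint] = [
    DemandPoint(d, v, h) for d in range(1, 5) for v in range(1, 5) for h in (True, False)
]
ASSIGNMENTS: List[str] = [infer_priority(p) for p in ALL_POINTS]
```

Enumerating every combination of small, discrete property values in this way is a cheap check for overlapping or missing conditions before any rules are deployed.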

5.2 Reasoning Approaches for Inferring Evacuation Priorities

We evaluate two reasoning approaches to infer the evacuation priorities of demand points in our study area. The first approach is reasoning using SPARQL queries, and the second approach is reasoning using SHACL rules.

Inferring Evacuation Priorities Using SPARQL Queries

SPARQL is an RDF query language used to query and retrieve data. SPARQL queries (Footnote 9) are usually used to extract information from knowledge graphs [15]; however, they can also be used to reason over a knowledge graph and infer new information. We propose using SPARQL queries to infer the evacuation priorities of demand points from the knowledge graph containing the flood-related data.

The first step of this approach consists of storing the knowledge graph in a triplestore (also called an RDF store), a purpose-built database for storing and retrieving triples through semantic queries. We have chosen the Virtuoso triplestore to store knowledge graphs because it has been shown to load large numbers of triples in a relatively short time. In a benchmark, Virtuoso loaded 1 billion RDF triples in 27 min, while other triplestores, including BigData, BigOwlim and TDB, took hours to load them (Footnote 10).

We define SPARQL insert and delete queries encoding the conditions of each evacuation priority, and we execute them on the knowledge graph stored in Virtuoso. For each demand point, the queries infer a new triple typing the demand point with an evacuation priority based on its properties. The SPARQL query defining the priority “Evacuate in 12 h” is displayed in Fig. 4.
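The general shape of such an update can be sketched as a Python function that assembles a SPARQL 1.1 `DELETE`/`INSERT`/`WHERE` request for one priority. The `ex:` namespace, property names and conditions are hypothetical; the actual query for “Evacuate in 12 h” is the one shown in Fig. 4. Using the `DELETE` part to drop a previously inferred priority, so that a demand point is re-typed when dynamic data change, is our assumption.

```python
from typing import List

PREFIXES = (
    "PREFIX ex: <http://example.org/flood#>\n"  # hypothetical namespace
    "PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>\n"
)


def priority_update(priority: str, patterns: List[str], filters: List[str]) -> str:
    """Build a SPARQL 1.1 DELETE/INSERT update typing matching demand points with `priority`.

    The DELETE template removes any priority inferred earlier (priorities are modelled here
    as subclasses of ex:DemandPoint); when ?old is unbound, SPARQL Update skips that triple.
    """
    conditions = "\n  ".join(patterns + [f"FILTER ({f})" for f in filters])
    return (
        PREFIXES
        + "DELETE { ?dp a ?old . }\n"
        + f"INSERT {{ ?dp a ex:{priority} . }}\n"
        + "WHERE {\n"
        + "  ?dp a ex:DemandPoint .\n"
        + "  OPTIONAL { ?dp a ?old . ?old rdfs:subClassOf ex:DemandPoint . }\n"
        + f"  {conditions}\n"
        + "}"
    )


query = priority_update(
    "EvacuateIn12h",
    patterns=["?dp ex:dangerIndex ?danger .", "?dp ex:isInhabited true ."],
    filters=["?danger = 2"],  # hypothetical condition
)
```

The resulting string could be submitted to any SPARQL 1.1 Update endpoint; how the queries are sent to Virtuoso is not covered by this sketch.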

Inferring Evacuation Priorities Using SHACL Rules

Rules are frequently used to reason over knowledge graphs and infer new information. Various kinds of rules are used in disaster management, such as SWRL rules [8]. In this approach, we propose using SHACL rules to infer the evacuation priorities of the demand points in our study area. SHACL (Shapes Constraint Language, Footnote 11) is a World Wide Web Consortium (W3C) standard language that defines an RDF vocabulary for describing shapes, where shapes are collections of constraints that apply to a set of nodes. SHACL rules (Footnote 12) are a recent kind of rule that has several advantages over other kinds and has not yet been used in this domain. Unlike other rules, a SHACL rule is identified by a unique Internationalized Resource Identifier (IRI). It can also be activated or deactivated according to the user’s needs, and a deactivated rule is ignored by the rule engine. In addition, an execution order of the rules can be defined. In our approach, we use SPARQL rules, a kind of SHACL rule written in SPARQL notation, to infer the evacuation priorities of demand points. The rules are defined in a shapes file containing node shapes that represent the classes describing the evacuation priorities as well as the properties used. The SPARQL rule representing the priority “Evacuate in 12 h” is displayed in Fig. 5. The rules are executed on the knowledge graph to infer new triples, each typing a demand point with an evacuation priority according to its properties. The knowledge graph is enriched by adding the inferred triples, and it is then shared among the different actors.
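A tiny rule engine can mimic the three properties highlighted above: rule IRIs, deactivation and execution order. The sketch below is not a SHACL processor. The Python callables stand in for the SPARQL `CONSTRUCT` bodies of `sh:SPARQLRule` resources, and the `order` and `deactivated` fields only imitate `sh:order` and `sh:deactivated` from the SHACL Advanced Features vocabulary. Rule IRIs, property names and conditions are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Set, Tuple

Triple = Tuple[str, str, str]


@dataclass
class Rule:
    iri: str                                         # each rule is identified by its own IRI
    order: float                                     # lower values run first (cf. sh:order)
    construct: Callable[[Set[Triple]], Set[Triple]]  # returns the triples the rule infers
    deactivated: bool = False                        # cf. sh:deactivated true


def run_rules(graph: Set[Triple], rules: List[Rule]) -> Set[Triple]:
    """Apply active rules in ascending order; each rule sees triples inferred by earlier ones."""
    enriched = set(graph)
    for rule in sorted(rules, key=lambda r: r.order):
        if rule.deactivated:
            continue  # a deactivated rule is ignored by the engine
        enriched |= rule.construct(enriched)
    return enriched


def high_danger(g: Set[Triple]) -> Set[Triple]:
    # Hypothetical condition: a "high" danger level types the point as EvacuateImmediately.
    return {(s, "rdf:type", "ex:EvacuateImmediately")
            for (s, p, o) in g if p == "ex:dangerLevel" and o == "high"}


data = {("ex:dp1", "ex:dangerLevel", "high"), ("ex:dp2", "ex:dangerLevel", "low")}
rules = [
    Rule("ex:ruleEvacuateImmediately", 1, high_danger),
    Rule("ex:ruleExperimental", 0, lambda g: {("ex:dp2", "rdf:type", "ex:Test")}, deactivated=True),
]
result = run_rules(data, rules)
```

In this example, the experimental rule would run first because of its lower order, but the engine skips it because it is deactivated.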

6 Approach Evaluation

In this section, we first discuss the evaluation of our ontology; then, we discuss the evaluation of the reasoning approaches for inferring the evacuation priorities.

6.1 Ontology Evaluation

Within our project, we have conducted regular interviews with the domain experts to get their feedback on the ontology vocabulary. According to the experts, the concepts describing infrastructure are sufficient, as they cover all the kinds of infrastructure that can be found in a flooded area. In addition, the concept defining the infrastructure aggregation is important, as it solves the problem of missing or unavailable data. The experts also consider the concepts describing the population and its different categories to be consistent. They find the relations between population and infrastructure important, as these relations allow identifying fragile persons in an infrastructure in order to prioritize their evacuation. The concepts and data properties describing the demand points were found adequate, and they cover all the properties used to define the evacuation priorities. Finally, the concepts describing the four evacuation priorities were found important for planning the evacuation and inferring the priorities of demand points.

Based on the domain experts’ feedback, and since the ontology is further used to infer evacuation priorities of demand points, it would be useful to add concepts describing the human and vehicle resources needed to evacuate flood victims, in order to allow efficient resource management.

6.2 Experimental Evaluation of Reasoning Approaches

In this section, we discuss the experimental evaluations conducted to evaluate the two reasoning approaches for inferring the evacuation priorities of demand points. First, we analyze the impact of the variation of the number of knowledge graph instances on the complexity of the two reasoning approaches, and then we evaluate the complexity of the evacuation priorities.

All the experiments ran in 4 h 1 min on 8 CPUs (Intel Core i7-1185G7) and drew 0.28 kWh. With the computation located in France, this has a carbon footprint of 11.01 g CO2e, which is equivalent to 0.01 tree-months (calculated using green-algorithms.org v2.1 [9]).

Variation of the Number of Demand Points

Evacuation priorities are inferred to the demand points in the study area; therefore, the number of instances of demand points determines the number of evacuation priorities to be inferred, and it represents the number of times that the conditions of each evacuation priority should be tested in order to infer a priority for a demand point. In this experiment, we aim at analyzing the impact of the variation of the number of instances describing the demand points in the knowledge graph on the complexity of the two reasoning approaches for inferring the evacuation priorities in terms of execution time.

Our study area contains 15,078 demand points. A demand point is described by different instances and relations representing its properties. All instances are represented as RDF triples, and the knowledge graph of our study area contains a total of 472,594 triples. To analyze the impact of varying the number of instances, we evaluate the execution time of the reasoning processes with decreasing percentages of demand points in the knowledge graph, from 75% down to 25% of the total number of demand points. Table 1 displays the number of demand points for each percentage. Figure 6 shows the execution times of the two reasoning approaches for these decreasing percentages. Recall that the two approaches reason using SPARQL insert and delete queries and using SHACL rules, respectively.
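The protocol of this experiment can be sketched as follows: shuffle the demand points once, take prefixes of the shuffled list so that every smaller subset is contained in the larger ones, and time the reasoner on each subset. This is an illustration under assumptions. The reasoner is a placeholder (`sorted`), the seed and identifiers are hypothetical, the paper does not state whether its subsets were nested, and rounding down may give counts that differ slightly from Table 1.

```python
import random
import time
from typing import Callable, Dict, List, Sequence


def nested_subsets(points: Sequence[str], percentages: Sequence[int], seed: int = 0) -> Dict[int, List[str]]:
    """Shuffle once, then take prefixes so each smaller subset lies inside the larger ones."""
    shuffled = list(points)
    random.Random(seed).shuffle(shuffled)
    return {pct: shuffled[: len(shuffled) * pct // 100] for pct in percentages}


def time_reasoning(reason: Callable[[List[str]], object], subset: List[str]) -> float:
    """Wall-clock time, in seconds, of one reasoning run over a subset of demand points."""
    start = time.perf_counter()
    reason(subset)
    return time.perf_counter() - start


demand_points = [f"dp{i}" for i in range(15_078)]  # hypothetical identifiers
subsets = nested_subsets(demand_points, [100, 75, 50, 25])
# `sorted` is only a placeholder for a real SPARQL or SHACL reasoning run.
timings = {pct: time_reasoning(sorted, subset) for pct, subset in subsets.items()}
```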

These results show that, for both reasoning approaches, the execution time decreases as the percentage of demand points in the knowledge graph decreases. Inferring the priorities takes significantly less time using SHACL rules (12.86 s for 100% of demand points) than using SPARQL queries (80.62 s), which makes the SHACL approach more efficient. The SPARQL approach requires loading the knowledge graph into the triplestore, which takes 3 to 4.5 s depending on its size, whereas the chosen SHACL implementation does not depend on a triplestore.
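For the full graph (100% of demand points), the reported times translate into the following ratio and throughputs. This is simple arithmetic on the figures above, and it treats the reported SPARQL time as given, without assuming whether the 3 to 4.5 s loading step is included.

```latex
\frac{t_{\mathrm{SPARQL}}}{t_{\mathrm{SHACL}}} = \frac{80.62\,\mathrm{s}}{12.86\,\mathrm{s}} \approx 6.3,
\qquad
\frac{15{,}078}{12.86\,\mathrm{s}} \approx 1{,}172\ \text{demand points/s},
\qquad
\frac{15{,}078}{80.62\,\mathrm{s}} \approx 187\ \text{demand points/s}.
```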

Our approach for inferring evacuation priorities is intended for real flood disasters; therefore, it must be reactive enough to help firefighters take their decisions. Since our data represent a real flood disaster, these results indicate that both reasoning approaches are reactive in real use cases, with the SHACL approach being more efficient than the SPARQL approach in terms of time. Furthermore, our approach is efficient for our study area, which covers a relatively large area of 52.80 km² containing 15,078 demand points and 2,384 inhabitants (2015). The results for decreasing percentages of demand points suggest that the reasoning approaches remain reactive for varying numbers of demand points, and thus for flood areas of different sizes.

Evaluation of Priorities’ Complexity

We define the complexity of an evacuation priority as the complexity, in terms of time, of the reasoning process when this priority is executed on the knowledge graph. The number of conditions defining a priority impacts its complexity. We define the worst-case scenario of an evacuation priority, representing its highest complexity, as the case where all its conditions must be tested for each demand point. Knowing the complexity of each evacuation priority is useful for the actors involved in the evacuation process, as it allows them to estimate the cost of inferring evacuation priorities under any flood condition.
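A simple cost model makes this worst case explicit. The model assumes, as a simplification not stated in the paper, that testing one condition on one demand point takes roughly constant time \(\tau\). Let \(N\) be the number of demand points and \(c_p\) the number of conditions of priority \(p\) (Table 2):

```latex
C_p^{\mathrm{worst}} = N \, c_p,
\qquad
T_p \approx \tau \, N \, c_p,
\qquad
T_{\mathrm{all}} \approx \tau \, N \sum_{p=1}^{4} c_p .
```

The model ignores query planning, indexing and early termination when a condition fails. It should therefore be read as an upper-bound intuition, not as a prediction of measured times.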

The four evacuation priorities are defined by different numbers of conditions. Table 2 shows the number of conditions defining each priority. We evaluate the complexity of each evacuation priority using the two reasoning approaches for inferring the priorities of demand points.

To compare the complexity of the evacuation priorities in terms of execution time, the same number of demand points should be typed with each evacuation priority. This is not the case in our study area, where different numbers of demand points are typed with each priority. We therefore evaluate the complexity using a synthetically generated knowledge graph that satisfies this condition while considering the worst-case scenario. This knowledge graph contains 16,000 demand points, 4,000 of which are typed with each evacuation priority; that is, their properties satisfy the conditions of this priority. It is built by generating data representing the demand points with their properties and transforming these data into RDF triples using the ontology. The RDF triples constituting the knowledge graph are generated using the Apache Jena Java library (Footnote 13).
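The balanced generation step can be sketched as follows. The paper uses the Apache Jena Java library; this sketch swaps in plain Python tuples as triples. The 1 to 4 index ranges per priority, the property names and the `ex:expectedPriority` ground-truth label are hypothetical. They were chosen only so that each block of 4,000 demand points satisfies one made-up condition set.

```python
import random
from collections import Counter
from typing import Dict, List, Tuple

Triple = Tuple[str, str, object]

# Hypothetical (danger, vulnerability) ranges on a 1-4 scale, chosen so that the
# generated values satisfy one made-up, mutually exclusive condition set per priority.
RANGES: Dict[str, Tuple[Tuple[int, int], Tuple[int, int]]] = {
    "EvacuateImmediately": ((3, 4), (3, 4)),
    "EvacuateIn6h": ((3, 4), (1, 2)),
    "EvacuateIn12h": ((2, 2), (1, 4)),
    "NoEvacuation": ((1, 1), (1, 4)),
}


def generate_synthetic_graph(per_priority: int = 4_000, seed: int = 7) -> List[Triple]:
    """Generate the same number of demand points for every priority, as triples."""
    rng = random.Random(seed)
    triples: List[Triple] = []
    for priority, ((d_lo, d_hi), (v_lo, v_hi)) in RANGES.items():
        for i in range(per_priority):
            dp = f"ex:{priority}_dp{i}"
            triples += [
                (dp, "rdf:type", "ex:DemandPoint"),
                (dp, "ex:dangerIndex", rng.randint(d_lo, d_hi)),
                (dp, "ex:vulnerabilityIndex", rng.randint(v_lo, v_hi)),
                (dp, "ex:isInhabited", True),
                # Ground-truth label for checking the reasoners; strip it before reasoning.
                (dp, "ex:expectedPriority", priority),
            ]
    return triples


graph = generate_synthetic_graph()
expected = Counter(o for (_, p, o) in graph if p == "ex:expectedPriority")
```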

Figure 7 presents the experimental results in terms of execution time (in seconds) for each evacuation priority using the two approaches for inferring the priorities from the synthetic knowledge graph.

We notice from the results that the execution time increases as the number of conditions defining each priority increases in both approaches (refer to Table 2 for the number of conditions defining each priority). This confirms that the number of conditions defining an evacuation priority impacts its complexity. In addition, inferring the priorities using SHACL rules takes less time than using SPARQL queries for all evacuation priorities.

Although the execution time increases with the complexity of the evacuation priorities, this increase remains reasonable. According to the domain experts, the results show that the reasoning approaches are efficient enough to infer the evacuation priorities of demand points in the different possible flood disaster scenarios, with the SHACL approach remaining more efficient than the SPARQL approach in terms of time.

6.3 Discussion

In our experiments, inferring the evacuation priorities of demand points using SHACL rules was more efficient than using SPARQL queries, as it inferred the priorities in a shorter time for different flood disaster scenarios. In addition, the complexity of the evacuation priorities and the increase in the number of priorities to infer have a lower impact on the SHACL approach than on the SPARQL approach.

The delicacy of the evacuation process calls for solutions that are not only efficient and rapid but also simple, so that they facilitate the process. The inferred evacuation priorities can be accessed and visualized graphically in QGIS, where each demand point of the study area is displayed with its evacuation priority and its properties, such as the water level. In addition, the inferred priorities can be used in a vehicle routing algorithm that optimizes the routes of the various vehicles used to evacuate flood victims [2]. According to the domain experts, this would assist the disaster response phase by optimizing the routes of evacuation vehicles based on the priorities of the different demand points. It would also help in the preparedness phase through simulations conducted by the concerned actors to improve the evacuation process.
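The paper does not say how the inferred priorities are exported to QGIS. One plausible route, sketched here, is a CSV file with a WKT geometry column, which QGIS can open as a delimited text layer. The column names and the coordinates in the example are hypothetical.

```python
import csv
import io
from typing import Iterable, Tuple

Row = Tuple[str, float, float, str, float]  # (id, longitude, latitude, priority, water level in m)


def priorities_to_csv(rows: Iterable[Row]) -> str:
    """Serialize demand points as CSV with a WKT POINT column (x = longitude first)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["demand_point", "wkt", "priority", "water_level_m"])
    for dp_id, lon, lat, priority, water_level in rows:
        writer.writerow([dp_id, f"POINT({lon} {lat})", priority, water_level])
    return buffer.getvalue()


csv_text = priorities_to_csv([
    ("dp1", 0.59, 42.79, "EvacuateIn12h", 0.4),  # hypothetical coordinates
    ("dp2", 0.60, 42.80, "NoEvacuation", 0.0),
])
```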

The SPARQL queries defining the evacuation priorities could be integrated into a tool in which a natural language query about an evacuation priority, written by the user, is transformed into a SPARQL query using existing query transformation approaches [10, 13]. Since SHACL rules are based on the SPARQL query language, natural language queries could likewise be transformed into rules and integrated into a tool that users employ to infer information. Integrating SHACL rules into such a tool would also allow users to activate or deactivate rules and to set the execution order of the rules according to their needs.
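The benefit of letting users activate, deactivate and order rules can be illustrated with a small Python rule runner. It is a simplified stand-in, not a SHACL engine. Its `order` and `deactivated` fields only mirror, in spirit, the `sh:order` and `sh:deactivated` properties that SHACL Advanced Features defines for rules. The rule names, the types `FloodedPoint` and `Priority1`, and the property values are hypothetical. The second rule depends on the output of the first, which is why execution order matters.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List, Set

# Hypothetical in-memory "graph": each demand point holds its properties
# and the set of types inferred for it so far.
Graph = Dict[str, dict]


@dataclass
class Rule:
    name: str
    order: float        # lower values run first (in the spirit of sh:order)
    deactivated: bool   # skipped when True (in the spirit of sh:deactivated)
    body: Callable[[dict], Set[str]]  # returns the types to add to a point


def run_rules(graph: Graph, rules: List[Rule]) -> Graph:
    """Apply each active rule once, in ascending order, to every point."""
    for rule in sorted(rules, key=lambda r: r.order):
        if rule.deactivated:
            continue
        for point in graph.values():
            point["types"] |= rule.body(point)
    return graph


def sample_graph() -> Graph:
    # Hypothetical demand points; values are illustrative only.
    return {
        "dp1": {"water_level": 1.1, "nb_vulnerable": 2, "types": set()},
        "dp2": {"water_level": 0.0, "nb_vulnerable": 4, "types": set()},
    }


def flooded_body(p: dict) -> Set[str]:
    return {"FloodedPoint"} if p["water_level"] > 0 else set()


def priority1_body(p: dict) -> Set[str]:
    # Depends on the type inferred by the "flooded" rule.
    if "FloodedPoint" in p["types"] and p["nb_vulnerable"] > 0:
        return {"Priority1"}
    return set()


flooded = Rule("flooded", 1, False, flooded_body)
urgent = Rule("priority1", 2, False, priority1_body)

# Normal order: dp1 is typed FloodedPoint, then Priority1.
print(sorted(run_rules(sample_graph(), [flooded, urgent])["dp1"]["types"]))
# Running "priority1" before "flooded" infers no priority for dp1.
print(sorted(run_rules(sample_graph(), [flooded, replace(urgent, order=0)])["dp1"]["types"]))
```

Deactivating the second rule with `replace(urgent, deactivated=True)` likewise leaves `dp1` typed only as `FloodedPoint`.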

7 Conclusion and Future Work

In this paper, we have discussed our proposed solutions for enhancing interoperability in a flood disaster and for inferring the evacuation priorities of demand points in a flooded area in order to assist the evacuation process. We have proposed an ontology and a knowledge graph that allow managing and integrating flood-related data and sharing them among the different actors involved in the flood management process [1]. The experts' feedback has shown that this solution enhances interoperability. Furthermore, we have discussed two reasoning approaches for inferring the evacuation priorities of demand points in our study area: reasoning with SPARQL queries and reasoning with SHACL rules. The experimental results have shown that both approaches can be used to infer the priorities. The SHACL approach is more efficient, since it infers the priorities in a shorter time, thus assisting the involved actors in making rapid and efficient decisions.

As future work, we first aim to transfer this work to an industrial setting through an interface that integrates SHACL rules and transforms users' natural language queries into rules in order to infer new information. We also aim to rely on the ontology to infer new information concerning the management of the human and vehicle resources needed for the evacuation process. Beyond inferring information that assists the disaster response phase, we aim to infer information that capitalizes on the experience of past disasters. We thus aim to propose a learning approach that learns from past disaster data and adjusts the values of the properties that define the conditions of the evacuation priorities.


References

1. Bu Daher, J., Huygue, T., Stolf, P., Hernandez, N.: An ontology and a reasoning approach for evacuation in flood disaster response. In: 17th International Conference on Knowledge Management 2022. University of North Texas (UNT) Digital Library (2022)

2. Dubois, F., Renaud-Goud, P., Stolf, P.: Capacitated vehicle routing problem under deadlines: an application to flooding crisis. IEEE Access 10, 45629–45642 (2022). https://doi.org/10.1109/ACCESS.2022.3170446

3. Elmhadhbi, L., Karray, M.H., Archimède, B.: A modular ontology for semantically enhanced interoperability in operational disaster response. In: 16th International Conference on Information Systems for Crisis Response and Management-ISCRAM 2019, pp. 1021–1029 (2019)

4. Franke, J.: Coordination of distributed activities in dynamic situations. The case of inter-organizational crisis management. Ph.D. thesis, Université Henri Poincaré-Nancy I (2011)

5. Katuk, N., Ku-Mahamud, K.R., Norwawi, N., Deris, S.: Web-based support system for flood response operation in Malaysia. Disaster Prev. Manag. Int. J. 18(3), 327–337 (2009)

6. Khantong, S., Sharif, M.N.A., Mahmood, A.K.: An ontology for sharing and managing information in disaster response: an illustrative case study of flood evacuation. Int. Rev. Appl. Sci. Eng. (2020)

7. Kurte, K.R., Durbha, S.S., King, R.L., Younan, N.H., Potnis, A.V.: A spatio-temporal ontological model for flood disaster monitoring. In: 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), pp. 5213–5216. IEEE (2017)

8. Kurte, K.R., Durbha, S.S., King, R.L., Younan, N.H., Vatsavai, R.: Semantics-enabled framework for spatial image information mining of linked earth observation data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 10(1), 29–44 (2016)

9. Lannelongue, L., Grealey, J., Inouye, M.: Green algorithms: quantifying the carbon footprint of computation. Adv. Sci. 8(12), 2100707 (2021)

10. Ochieng, P.: PAROT: translating natural language to SPARQL. Expert Syst. Appl. X 5, 100024 (2020)

11. Scheuer, S., Haase, D., Meyer, V.: Towards a flood risk assessment ontology-knowledge integration into a multi-criteria risk assessment approach. Comput. Environ. Urban Syst. 37, 82–94 (2013)

12. Schulz, S., Martínez-Costa, C.: How ontologies can improve semantic interoperability in health care. In: Riaño, D., Lenz, R., Miksch, S., Peleg, M., Reichert, M., ten Teije, A. (eds.) KR4HC/ProHealth -2013. LNCS (LNAI), vol. 8268, pp. 1–10. Springer, Cham (2013). https://doi.org/10.1007/978-3-319-03916-9_1

13. Shaik, S., Kanakam, P., Hussain, S.M., Suryanarayana, D.: Transforming natural language query to SPARQL for semantic information retrieval. Int. J. Eng. Trends Technol. 7, 347–350 (2016)

14. Studer, R., Benjamins, V.R., Fensel, D.: Knowledge engineering: principles and methods. Data Knowl. Eng. 25(1–2), 161–197 (1998)

15. Wang, C., Chen, N., Wang, W., Chen, Z.: A hydrological sensor web ontology based on the SSN ontology: a case study for a flood. ISPRS Int. J. Geo Inf. 7(1), 2 (2018)

16. Xu, W., Zlatanova, S.: Ontologies for disaster management response. In: Li, J., Zlatanova, S., Fabbri, A.G. (eds.) Geomatics Solutions for Disaster Management. LNGC, pp. 185–200. Springer, Heidelberg (2007). https://doi.org/10.1007/978-3-540-72108-6_13

17. Yahya, H., Ramli, R.: Ontology for evacuation center in flood management domain. In: 2020 8th International Conference on Information Technology and Multimedia (ICIMU), pp. 288–291. IEEE (2020)

Acknowledgments

This work has been funded by the ANR (https://anr.fr/) in the context of the project “i-Nondations” (e-Flooding), ANR-17-CE39-0011.


Copyright information

© 2023 IFIP International Federation for Information Processing

About this paper

Cite this paper

Bu Daher, J., Stolf, P., Hernandez, N., Huygue, T. (2023). Enhancing Interoperability and Inferring Evacuation Priorities in Flood Disaster Response. In: Gjøsæter, T., Radianti, J., Murayama, Y. (eds) Information Technology in Disaster Risk Reduction. ITDRR 2022. IFIP Advances in Information and Communication Technology, vol 672. Springer, Cham. https://doi.org/10.1007/978-3-031-34207-3_3


DOI: https://doi.org/10.1007/978-3-031-34207-3_3


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-34206-6

Online ISBN: 978-3-031-34207-3

eBook Packages: Computer Science, Computer Science (R0)