Abstract

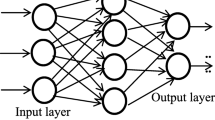

Automatic Language Identification (LID) is the task of automatically identifying the language spoken in a speech signal. Automated LID has many applications, including global customer support systems and voice-based user interfaces for a variety of machines. Hundreds of languages are widely spoken around the world, and learning all of them is practically impossible for anyone. Machine learning methods have been used effectively to automate LID and translation. However, machine learning-based automation of LID relies heavily on handcrafted feature engineering. Manual feature extraction depends on individual expertise and is prone to many deficiencies. Conventional feature extraction not only causes significant delays in the development of automated LID systems but also leads to inaccurate and non-scalable systems. In this paper, a deep learning-based approach using spectrograms is proposed: a Convolutional Neural Network (CNN) model is designed for automatic language identification. The proposed model is trained on a VoxForge dataset of speech in five languages, viz. German, Dutch, English, French, and Portuguese. Accuracy, precision, recall, and F1-score are used as evaluation measures. The proposed approach is compared against traditional approaches as well as other existing deep learning approaches for LID. The proposed model outperforms its competitors, with an average F1-score above 0.9 and an accuracy of 91.5%.
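The abstract states that spectrograms, rather than handcrafted features, are the input to the CNN. The sketch below shows one common way to turn a waveform into a log-magnitude spectrogram: frame the signal, apply a window, take the DFT of each frame, and log-compress the magnitudes. It is an illustration under assumptions, not the authors' preprocessing: the function names `hann` and `log_spectrogram`, the Hann window, the frame length `n_fft`, the hop size `hop`, and the dB-style log scaling are all hypothetical choices. A naive DFT is used so the example needs only the standard library.

```python
import cmath
import math


def hann(n):
    # Periodic Hann window of length n, used to taper each frame (assumed choice)
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def log_spectrogram(signal, n_fft=256, hop=128, eps=1e-10):
    """Return a log-magnitude spectrogram as a list of frames (hypothetical helper).

    Each frame holds n_fft // 2 + 1 values, one per frequency bin from 0 Hz
    up to the Nyquist frequency. A naive O(n_fft^2) DFT is used for clarity;
    a practical pipeline would call an FFT routine instead.
    """
    window = hann(n_fft)
    n_bins = n_fft // 2 + 1
    frames = []
    # Slide a frame of n_fft samples across the signal, advancing by hop samples
    for start in range(0, len(signal) - n_fft + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(n_fft)]
        row = []
        for k in range(n_bins):
            # k-th DFT coefficient of the windowed frame
            coeff = sum(frame[i] * cmath.exp(-2j * math.pi * k * i / n_fft)
                        for i in range(n_fft))
            # Log compression (dB-like), as in typical spectrogram images
            row.append(20 * math.log10(abs(coeff) + eps))
        frames.append(row)
    return frames


if __name__ == "__main__":
    sr = 8000  # assumed sample rate in Hz
    tone = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(2048)]
    spec = log_spectrogram(tone)
    print(len(spec), "frames x", len(spec[0]), "bins")
```

With a sample rate of 8000 Hz and `n_fft=256`, each bin spans 8000 / 256 = 31.25 Hz, so a 1 kHz tone peaks in bin 32 of every frame. Stacking the frames yields a time-by-frequency matrix that a CNN can treat as a single-channel image, which is the general idea behind spectrogram-based LID.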

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Shrawgi, H., Sisodia, D.S., Gupta, P. (2023). Automated Spoken Language Identification Using Convolutional Neural Networks & Spectrograms. In: Garg, L., et al. Key Digital Trends Shaping the Future of Information and Management Science. ISMS 2022. Lecture Notes in Networks and Systems, vol 671. Springer, Cham. https://doi.org/10.1007/978-3-031-31153-6_14

DOI: https://doi.org/10.1007/978-3-031-31153-6_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-31152-9

Online ISBN: 978-3-031-31153-6

eBook Packages: Intelligent Technologies and Robotics; Intelligent Technologies and Robotics (R0)