Abstract

Systematic reviews of interventions aim to synthesise evidence from studies on interventions to provide guidance for decision-making and further research. Systematic reviews use prespecified methods, set out in a review protocol. A systematic search strategy is applied to identify studies by searching electronic databases and possibly other sources. After reviewers reach consensus on the included primary studies, they systematically record detailed information on each study in the data extraction process. Each study is described and assessed regarding key descriptive features, risk of bias and the certainty of the evidence, and its findings. Finally, results from the included primary studies may be combined by pooling data in the context of a statistical meta-analysis or other type of synthesis.



1 Introduction

Clinical decision-making and health policy development should be informed by the best available research evidence. This applies to clinical treatments as well as healthcare delivery models, implementation programmes and other complex interventions. A systematically consolidated evidence base on a specific research question has higher certainty than single studies. Systematic reviews follow systematic, replicable methodological approaches to search, critically appraise and synthesise the available evidence on a defined research question. Single studies may be biased, resulting in misleading conclusions. Acting on such conclusions can lead to inadequate, potentially unstable decisions, which may cause more harm than necessary.

Historically, the statistical approach of ‘meta-analysis’ (as a relevant part of data synthesis in the context of systematic reviews) emerged prior to the broader methodological approach of a systematic review. In 1904, Karl Pearson was the first to publish pooled results, and his publication had a lasting influence on the development of meta-analysis. The concept and technique of meta-analysis were developed further, and the term itself was coined, by Gene Glass in 1976 (Bohlin 2012; Glass 1976). The methods were adopted and further developed in the health sciences, covering (among others) systematic methods for searching studies and the methodological assessment of included studies. Today, the conduct of meta-analysis as part of systematic reviews is an important tool in evidence-based medicine (EbM) (Greco et al. 2013). There is a broad consensus that reviews are required to reduce research waste and support decision-makers with the best available evidence. The number of systematic reviews in health and the methodology for conducting systematic reviews have developed enormously in recent decades. Thirty years ago, very few systematic reviews were conducted, and the systematic review was considered a new methodological approach. Prior to the year 2000, only about 3000 systematic reviews had been indexed in MEDLINE. Now, about 10,000 systematic reviews of health research are published annually (Clarke and Chalmers 2018).

Systematic reviews with homogeneous included studies provide the highest level of evidence and are therefore, compared to other study designs, superior regarding the certainty of the evidence (Howick et al. 2011). The systematic review methodology seeks to provide unbiased evidence by applying a systematic and transparent research methodology. Cochrane Reviews are internationally considered the gold standard of systematic reviews, particularly for randomised trials (and related designs) of interventions. Cochrane Reviews are based on an extensively elaborated and largely standardised set of methods (Higgins et al. 2021). These standards are also captured in the PRISMA 2020 statement (Page et al. 2021). Systematic reviews of interventions are also relevant in health services research (HSR), as this covers the evaluation of healthcare delivery models, implementation programmes and other interventions in real-world healthcare.

Besides the Cochrane style of systematic reviews, there are other types, such as scoping reviews, rapid reviews and qualitative syntheses. Scoping reviews aim to map out the available research in a chosen domain to identify knowledge gaps, clarify concepts, scope literature or investigate research conduct. They are also conducted as precursors to systematic reviews, to confirm eligibility criteria or research questions (Munn et al. 2018). Rapid reviews are conducted in a shorter timeframe than systematic reviews. There is no common methodological approach to conducting a rapid review, and thus, they often differ from each other in the methodologies utilised (Harker and Kleijnen 2012). Specifically, policy makers need a synthesis of the evidence to derive policy actions in short time frames, i.e. within some weeks or months. Rapid reviews often serve this purpose as systematic reviews take at least 12 months (Ganann et al. 2010). Qualitative syntheses address the qualitative research evidence for a topic, focusing on types of phenomena, processes or working mechanisms rather than their numbers. Some Cochrane Reviews are syntheses of qualitative studies.

This chapter provides an introduction to the methods of systematic reviews, focusing largely on reviews of studies on the effects of interventions. To ensure that methods are applied as initially planned and to reduce publication bias, all steps of a systematic review are ideally documented in a protocol that is published prior to the conduct of the review itself (Chandler et al. 2021). The steps of a systematic review include (1) defining a research question; (2) writing a review protocol; (3) developing and applying a systematic search strategy; (4) conducting title, abstract and full text screening; (5) extracting data; (6) assessing the risk of bias; (7) grading the quality of the evidence (GRADE); and (8) synthesising the findings, which may involve statistical meta-analysis. The approach differs according to the design of the studies included in the systematic review; e.g. non-randomised studies need different risk of bias assessments and data extraction tools compared to randomised controlled trials (RCTs).

2 Defining a Research Question and Writing a Review Protocol

Defining the focus of a systematic review by generating a research question is one of the very first steps of a systematic review. The scope of a systematic review is either broad or narrow and is reflected in the research question. A broad research question covers a wide range of topics (e.g. interventions), which may enhance broad relevance, whereas a narrow research question only addresses one topic or a few topics, which may enhance concrete relevance. The scope, either broad or narrow, also depends on time, resources and instructions, among other factors. Systematic reviews aim to support clinical and policy decision-makers and identify knowledge gaps that require further research. The research questions need to be both answerable and not yet answered. The development of a well-formulated research question is time-consuming and needs expert knowledge in the research field of the intended topic of the systematic review. It is recommended to involve relevant stakeholders and apply tools for priority setting to ensure that the review considers all relevant aspects in the field of research. In Cochrane Reviews, research questions are formulated as objectives (Thomas et al. 2021). The James Lind Alliance (JLA) offers priority setting methods for health research. The priority setting process involves patients, clinicians and carers in a priority setting partnership. The JLA developed a detailed guidebook for everyone who wants to establish such a partnership and conduct the process of priority setting in health research (Cowan and Oliver 2013).

Specifically, for systematic reviews of interventions, the application of the ‘PICO’ scheme is recommended; for example, the PRISMA checklist suggests using the PICO scheme if the systematic review aims to investigate the effects of an intervention (Page et al. 2021). Box 16.1 provides an example.

PICO (Population/Patient, Intervention, Comparison/Control, Outcome) is a tool to define the breadth of the review and to set the anchor for the inclusion criteria. Applying the PICO criteria for the definition of the research question means addressing all components of PICO in the research question. One might ask the following questions to define the PICO elements:

- Population/patient: What are the characteristics of the patient or population of interest, e.g. gender, age, etc.? What is the condition or disease of interest (and its severity)? In HSR, the population additionally or primarily concerns healthcare providers.

- Intervention: What is the intervention of interest for the patient or population regarding its effectiveness? In HSR, interventions may also be healthcare delivery models, strategies for improving aspects of care and other typically ‘complex interventions’.

- Comparison/control: What is the alternative to the intervention? In systematic reviews including clinical trials, the comparator usually consists of clinical alternatives to the intervention, e.g. placebo, different drug and surgery. In HSR, the comparator to the intervention is mainly usual care or alternative strategies.

- Outcome: What are relevant outcomes with regard to the condition and intervention? In HSR, a wide range of outcomes may be considered, reflecting aspects of healthcare delivery, costs and health outcomes.

Box 16.1: Example of PICO

When investigating whether the application of media in gynaecological care is effective in improving health behaviours during pregnancy, it is recommended to define a research question according to the PICO scheme: Is the application of media in gynaecological care (intervention) as compared to no media application in gynaecological care (comparator) effective in bringing about improvement in health behaviours (outcome) in adult pregnant women (population)?

Based on the PICO elements, review authors define eligibility criteria for inclusion of studies. Therefore, each element of PICO needs to be defined in sufficient detail, and review authors need to consider the pros, cons, necessity, relevance and consequences of each restriction and allowance, meaning each exclusion and inclusion criterion.
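The PICO elements of Box 16.1 can be written down as a small data structure, which keeps each element explicit before eligibility criteria are derived from it. The following Python sketch is only an illustration; the class name `PICO`, its field names and the method `as_question` are assumptions made for this example and do not belong to any established tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PICO:
    """One research question, split into its four PICO elements (hypothetical helper)."""
    population: str
    intervention: str
    comparison: str
    outcome: str

    def as_question(self) -> str:
        # Assemble the four elements into one answerable research question.
        return (
            f"Is {self.intervention} (intervention) as compared to "
            f"{self.comparison} (comparator) effective in improving "
            f"{self.outcome} (outcome) in {self.population} (population)?"
        )


# The example from Box 16.1, element by element.
question = PICO(
    population="adult pregnant women",
    intervention="the application of media in gynaecological care",
    comparison="no media application in gynaecological care",
    outcome="health behaviours",
)
print(question.as_question())
```

Writing the elements separately makes it easier to see which element a given inclusion or exclusion criterion restricts.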

After the research question has been developed, the methods of the systematic review should be described in a study protocol. This protocol elaborates on the methods in the phases that are presented in this chapter. It is recommended to apply the PRISMA-P (Preferred Reporting Items for Systematic review and Meta-Analysis Protocols) 2015 checklist for recommended items to address in a systematic review protocol (Moher et al. 2015). This checklist can be applied as a guideline for the writing of a review protocol.

3 Search and Select Studies

To identify relevant primary studies for inclusion in a systematic review, it is necessary to develop a systematic search strategy that meets the review’s eligibility criteria as closely as possible. The balance between accuracy and comprehensiveness in literature searches usually leans towards the latter, which means that as many relevant studies as possible are identified in order to reduce selection bias. Systematic review authors need to search various sources, such as electronic databases (see Box 16.2), grey literature databases, internet search engines, trial registers, targeted internet searches of key organisational and institutional websites and other sources. Searching systematic reviews on similar topics and reference lists of included studies is a relevant aspect of the search for eligible studies.

Box 16.2: Databases for Literature Searches

Most relevant databases include Medline (via Ovid or PubMed), EMBASE (Excerpta Medica Database), Cochrane Database of Systematic Reviews, CENTRAL (Cochrane Central Register of Controlled Trials), CINAHL Plus (Cumulative Index to Nursing and Allied Health), Nursing Reference Center Plus, Scopus, Web of Science, PsycINFO, HSTAT (Health Services/Technology Assessment Text), TRoPHI (Trials Register of Promoting Health Interventions), LILACS (Latin American and Caribbean Health Science), AIM (African Index Medicus), CCMed (Current Contents Medicine Database of German and German-Language Journals) and RAND-Health and Health Care.

The selection of relevant databases also depends on the topic of the systematic review as there are many further databases on specific topics, such as sexually transmitted diseases or different aspects of toxins. The review authors need to check the relevance of each database for conducting the systematic review. It is strongly recommended to consult or involve an information specialist to support the development of a search strategy and to conduct searches of electronic databases. The search strategy should at least be peer-reviewed by an information specialist or a librarian before it is run (Lefebvre et al. 2021).

The development of a search strategy for a systematic review of interventions needs to be tied to the main concepts of the review as defined by the PICO scheme. For each concept, it is helpful to identify synonyms, related and international terms, alternative spellings, plurals, etc. and select relevant text words and controlled vocabulary. The application of truncations (used to replace multiple characters, e.g. protect* = protects, protective, protection, etc.) and wildcards (used to replace single characters, e.g. te?t = test, text, etc.) is recommended. The concepts are combined into one search strategy by the application of different Boolean operators (AND, OR, NOT). The search strategy reflects the review’s eligibility criteria.
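How concept blocks, Boolean operators, truncation and wildcards fit together can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the search terms are invented for the Box 16.1 question, the functions `build_query` and `term_to_regex` are hypothetical helpers, and real databases each have their own syntax for phrases, truncation and wildcards, which needs to be checked for every database.

```python
import re

# Hypothetical concept blocks; the terms are illustrative, not a validated strategy.
concepts = {
    "population": ["pregnan*", "antenatal", "prenatal"],
    "intervention": ["media", "video*", "smartphone app*"],
    "outcome": ["health behaviour*", "lifestyle"],
}


def build_query(concepts: dict) -> str:
    """Combine synonyms within a concept with OR, and the concepts with AND."""
    blocks = []
    for terms in concepts.values():
        # Multi-word terms are quoted so that they are searched as phrases.
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        blocks.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(blocks)


def term_to_regex(term: str) -> re.Pattern:
    """Mimic truncation (* = any run of characters) and wildcards (? = one character)."""
    pattern = re.escape(term).replace(r"\*", r"\w*").replace(r"\?", r"\w")
    return re.compile(rf"^{pattern}$", re.IGNORECASE)


print(build_query(concepts))

# The two examples from the text: truncation and a single-character wildcard.
print([w for w in ["protects", "protection", "project"] if term_to_regex("protect*").match(w)])
print([w for w in ["test", "text", "tent", "tet"] if term_to_regex("te?t").match(w)])
```

The regular expressions only serve to show which words a truncated or wildcarded term would retrieve; the search itself is run in the database interface.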

For each database search, the date of search, the search period and the retrieved records need to be documented. The search dates ideally should not be older than 12 (better 6) months prior to publication of the systematic review. Thus, the review team needs to schedule the search and if necessary, update it according to the project timeline and the scheduled submission date.

In addition to electronic database searches, review authors should conduct searches on relevant organisational and institutional websites, on grey literature databases, in search engines and in trial registries. Also, it is recommended to hand search references of included studies.

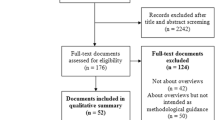

In the next step, duplicates from the different searches need to be removed. Practical experience in HSR suggests that searches may result in 1000–10,000 database hits, ‘records’, with about 0–100 eligible studies. The deduplicated records are screened, ideally by at least two review authors. There are various technical possibilities to screen the titles and abstracts of the records; e.g. Covidence is a recommendable digital screening tool. The full texts of potentially relevant records need to be assessed for eligibility. At least two review authors need to check and decide on their eligibility by screening the full texts. Disagreements in the title, abstract and full text screening should be resolved by a third review author acting as an arbiter.
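The two mechanical parts of this step, removing duplicates and finding the records on which two reviewers disagree, can be sketched as follows. The matching rule (normalised title plus publication year), the record fields and the function names are assumptions made for illustration; reference managers and screening tools use their own, usually more elaborate, matching rules, and the records shown are invented.

```python
import re


def normalise(title: str) -> str:
    """Lower-case a title and collapse punctuation and whitespace to single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records: list) -> list:
    """Keep the first record for each (normalised title, year) pair."""
    seen, unique = set(), []
    for rec in records:
        key = (normalise(rec["title"]), rec["year"])
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


def conflicts(decisions_a: dict, decisions_b: dict) -> list:
    """Return the record ids on which the two reviewers disagree (for the arbiter)."""
    return sorted(rid for rid in decisions_a if decisions_a[rid] != decisions_b[rid])


# Invented records, as they might arrive from two different databases.
records = [
    {"id": 1, "title": "Media use in antenatal care: a trial", "year": 2019},
    {"id": 2, "title": "Media Use in Antenatal Care - A Trial.", "year": 2019},
    {"id": 3, "title": "Video education for pregnant women", "year": 2020},
]
unique = deduplicate(records)
print([rec["id"] for rec in unique])

# Independent screening decisions of two reviewers on the deduplicated records.
reviewer_a = {1: "include", 3: "exclude"}
reviewer_b = {1: "include", 3: "include"}
print(conflicts(reviewer_a, reviewer_b))
```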

4 Data Extraction

Data extraction is the structured collection of data from studies that are included in the systematic review. The following categories of data are usually covered: (a) descriptive information on the study (e.g. author and year of publication), (b) information on study design and methods, (c) main findings and (d) other information (e.g. description of the study setting). It is recommended to use a data extraction form to ensure that all relevant data are extracted by the review authors. The application of a data extraction form simplifies the comparison of extracted data between two review authors. Data can be extracted in paper forms, in electronic forms or in data systems. Regardless of the format, data extraction forms need to be “easy-to-use forms and collect sufficient and unambiguous data that faithfully represent the source in a structured and organized manner” (Li et al. 2021). Data extraction forms should be piloted and adapted before their application in the data extraction process of the review. A well-designed data extraction form captures all relevant details of the study, and in the ideal case, the review authors no longer need to check the original paper in the further process of the review conduct. The Cochrane Collaboration provides data extraction forms for various types of designs.
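An electronic extraction form can be as simple as a record with one field per item, which also makes the comparison between two review authors straightforward. The sketch below is a minimal illustration; the fields loosely follow categories (a) to (d) above, but the class `ExtractionForm`, its field names, the function `discrepancies` and the example values are all assumptions, not a Cochrane form.

```python
from dataclasses import dataclass, fields


@dataclass
class ExtractionForm:
    """A deliberately small, hypothetical extraction form for one study."""
    first_author: str   # (a) descriptive information
    year: int           # (a) descriptive information
    design: str         # (b) study design and methods
    main_finding: str   # (c) main findings
    setting: str        # (d) other information


def discrepancies(a: ExtractionForm, b: ExtractionForm) -> list:
    """List the names of the fields on which two reviewers' extractions differ."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


# Invented extractions of the same study by two reviewers.
reviewer_1 = ExtractionForm("Example", 2020, "RCT", "improved health behaviours", "primary care")
reviewer_2 = ExtractionForm("Example", 2020, "RCT", "improved health behaviours", "hospital")

print(discrepancies(reviewer_1, reviewer_2))
```

Fields flagged in this way are the ones the two reviewers need to discuss and check against the original paper.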

5 Assessment of Risk of Bias and Grading of Certainty

All studies suffer from a degree of bias, which results in deviations from ‘the truth’. As the truth is often unknown, we can only assess the risk of bias based on known characteristics of the included studies. For instance, non-randomised comparisons of intervention outcomes between study groups run the risk that the groups may be different from the start. Many types of risks of bias in studies have been described. They are primarily determined by the study design (e.g. randomised trials involve lower risk of bias by design), but specific aspects of the conduct and analysis of studies can compensate to some extent for weaknesses in study design or increase bias in well-designed studies. Higher risk of bias results in lower certainty of the veracity of the study results. The assessment of the risk of bias and thus the certainty of findings is a key component of systematic reviews.

The domains of assessment, their contents and their labelling differ across study designs. In randomised controlled trials (RCTs), for instance, the risk of bias assessment focuses on seven domains (Sterne et al. 2019) (Table 16.1):

After the identification of specific risks of bias, a structured method may be used to determine an overall assessment of the risk of bias. For instance, the Cochrane Handbook describes a stepwise approach. Using a checklist of domains for risk of bias, each domain receives a judgement on the risk of bias: low, moderate (some concerns) or high. If the judgements across all domains are ‘low risk of bias’, the overall judgement is ‘low risk of bias’. If at least one domain is judged as ‘moderate risk of bias/some concerns’ and no domain is judged as ‘high risk of bias’, the trial is judged as ‘moderate risk of bias/some concerns’. If at least one domain is judged as ‘high risk of bias’ or several domains are assessed as ‘moderate risk of bias/some concerns’, the overall judgement is ‘high risk of bias’ (Higgins et al. 2021).
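The stepwise rule described above can be expressed as a short function. This is a sketch under one explicit assumption: the text does not say how many domains count as ‘several’, so the threshold is exposed as the hypothetical parameter `several` (set to 3 here purely for illustration); in practice this is a matter of reviewer judgement. The domain labels in the example are placeholders.

```python
LOW, SOME, HIGH = "low", "some concerns", "high"


def overall_risk_of_bias(domains: dict, several: int = 3) -> str:
    """Derive an overall judgement from per-domain judgements.

    `several` is an assumed cut-off for 'several domains with some concerns';
    it is not specified in the text and would normally be a judgement call.
    """
    judgements = list(domains.values())
    if HIGH in judgements:
        return HIGH                      # any high-risk domain dominates
    n_some = judgements.count(SOME)
    if n_some >= several:
        return HIGH                      # several domains with some concerns
    if n_some >= 1:
        return SOME                      # at least one concern, none high
    return LOW                           # all domains at low risk


# Placeholder domain labels; the judgements are invented.
example = {"domain 1": LOW, "domain 2": SOME, "domain 3": LOW}
print(overall_risk_of_bias(example))
```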

Systematic reviews may include study designs other than RCTs. In several research fields, specifically in HSR, evidence needs to be derived from non-randomised studies of interventions as there are few RCTs. According to the GRADE working group, individual cross-sectional studies with consistently applied reference standards and blinding, inception cohort studies and observational studies with large effect sizes can provide an evidence level similar to that of RCTs (Schünemann et al. 2013). Well-executed observational studies may provide a high certainty in evidence. Non-randomised studies of interventions are ‘observational studies’. These include different study designs, such as cohort studies, controlled before-and-after studies, case-control studies, interrupted-time-series studies (ITS) and controlled trials (Sterne et al. 2016). Several tools exist to assess the risk of bias for non-randomised studies of interventions. However, the domains of assessment differ across risk of bias assessment tools. A prominent tool, recommended by the Cochrane Collaboration, to assess the risk of bias in non-randomised studies of interventions is the ROBINS-I tool. The ROBINS-I tool also consists of seven domains, including bias due to confounding, bias in selection of participants for the study, bias in classification of interventions, bias due to deviations from intended interventions, bias due to missing data, bias in measurement of outcomes and bias in selection of the reported result (Sterne et al. 2016, 2019). Further information, detailed guidance on the use of the ROBINS-I tool and updates on risk of bias tools can be found at www.riskofbias.info.

To assess the certainty of the total body of evidence (rather than individual studies) regarding intervention outcomes, it is recommended to grade the evidence. GRADE is an internationally and widely used system, which involves an evaluation of the evidence on health interventions for each of the relevant outcomes regarding risk of bias, consistency of effects, directness of comparisons, publication bias and imprecision. The certainty of the evidence can be assessed as very low, low, moderate or high. While a systematic review does not go further, developers of guidance for decision-makers will also consider other factors beyond certainty of evidence, such as cost and implementability of an intervention. Details on the application of GRADE are made available by the GRADE working group at www.gradeworkinggroup.org.

6 Synthesis of Studies

The synthesis of studies is a procedure in which data from all included studies in the systematic review are collected to generate an overall body of evidence from the relevant and included evidence. When the review includes results from two or more studies, the review authors should consider a statistical synthesis of the numerical results. This is only valid if the interventions in included studies are sufficiently homogeneous, which requires expert judgement. The statistical synthesis to estimate the overall intervention effect is conducted by the application of a meta-analysis (Deeks et al. 2021; McKenzie et al. 2021). From a statistical point of view, a meta-analysis provides a weighted average value of the effect estimates as derived from the included studies. Simple counting of studies with ‘positive effects’ can be highly misleading and should not be applied.
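The idea of a weighted average of effect estimates can be made concrete with a small calculation. The sketch below assumes inverse-variance weighting in a fixed-effect model, which is one common choice and not the only one (the text does not prescribe a weighting scheme, and random-effects models are also widely used). The function name `pool_fixed_effect` and the numbers are invented for illustration.

```python
import math


def pool_fixed_effect(estimates: list, standard_errors: list):
    """Inverse-variance weighted average of study effect estimates.

    Assumes a fixed-effect model: each study is weighted by 1 / SE^2.
    Returns the pooled estimate, its standard error and a 95% confidence interval.
    """
    weights = [1.0 / se ** 2 for se in standard_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    se_pooled = math.sqrt(1.0 / total)
    ci = (pooled - 1.96 * se_pooled, pooled + 1.96 * se_pooled)
    return pooled, se_pooled, ci


# Invented effect estimates (e.g. mean differences) and their standard errors.
estimates = [0.20, 0.40, 0.10]
standard_errors = [0.10, 0.20, 0.15]

pooled, se_pooled, ci = pool_fixed_effect(estimates, standard_errors)
print(f"pooled estimate {pooled:.3f}, 95% CI {ci[0]:.3f} to {ci[1]:.3f}")
```

More precise studies (smaller standard errors) receive larger weights, which is why the pooled value is not the simple mean of the study estimates.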

In some cases, conducting a meta-analysis may not be relevant or possible for several reasons and instead, a narrative synthesis of the results is necessary; e.g. when there is limited evidence (no studies or only one study), the reported outcome estimates are incomplete, the effect measures are different and cannot be equalised, there is a huge concern of bias in the evidence or there is large statistical heterogeneity across studies (McKenzie and Brennan 2021). The narrative synthesis is typically a textual description of the effect estimates. The reporting of systematic reviews without meta-analysis should follow the Synthesis Without Meta-analysis (SWiM) guideline. The guideline consists of nine items to improve transparency in reporting (Campbell et al. 2020).

In other types of synthesis, the results of each study are systematically categorised according to prespecified or post hoc developed categories and then described separately as a narration. There are also further statistical methods to synthesise the evidence, e.g. summarising effect estimates, combining p-values and vote counting based on the direction of effect. Those alternative statistical methods result in a more limited body of evidence than meta-analysis. However, compared to narrative approaches, these statistical approaches may be superior.
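Vote counting based on the direction of effect can be illustrated with a sign test: each study contributes only the direction of its estimate, regardless of statistical significance, and the proportion favouring the intervention is compared with the 50% expected if there were no effect. The sketch below is one possible implementation under stated assumptions; the function name `vote_count` and the effect estimates are invented, and the convention that positive values favour the intervention is an assumption of this example.

```python
from math import comb


def vote_count(effects: list):
    """Vote counting on direction of effect with a two-sided exact sign test.

    Assumes positive estimates favour the intervention; estimates of exactly
    zero carry no direction and are left out.
    Returns (studies favouring the intervention, studies counted, p-value).
    """
    directions = [e for e in effects if e != 0]
    n = len(directions)
    k = sum(1 for e in directions if e > 0)
    # Probability of a split at least this one-sided under a 50:50 chance.
    extreme = max(k, n - k)
    tail = sum(comb(n, i) for i in range(extreme, n + 1)) / 2 ** n
    return k, n, min(1.0, 2 * tail)


# Invented effect estimates from eight studies.
effects = [0.1, 0.3, 0.2, -0.1, 0.4, 0.2, 0.5, 0.1]
favouring, counted, p_value = vote_count(effects)
print(f"{favouring} of {counted} studies favour the intervention (p = {p_value:.3f})")
```

Such a result says nothing about the size of the effect, which is one reason why this method yields more limited evidence than a meta-analysis.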

Systematic reviews that integrate qualitative studies need a qualitative evidence synthesis. In qualitative synthesis, qualitative methods of analysis are used, and the focus is on identifying issues rather than quantifying them.

7 Practical Aspects of Systematic Reviews

When conducting a systematic review, it is recommended to build a team with different areas of expertise and to establish a project plan in advance, in which the team defines which persons will be involved in the different stages of the review conduct. One person should take the lead and the coordination of the project, supervise the timeline and manage the team and the tasks. For the team members, it is important to have one contact person in charge of the review conduct. As indicated earlier, it is highly recommendable to have an information specialist in the project team who has access to many databases.

It is recommended to meet up regularly with the team (in person or virtually) to manage and update the project plan, discuss how to proceed, clarify the next steps and solve open issues. It is recommended to work with a Gantt chart to track progress and development and identify potential problems in the progress of the project. Setting fixed deadlines for the finalisation of the single working packages is very helpful. As the team will work together for up to several years, it is helpful to consider elements of team building. When conducting a review with the Cochrane Collaboration, authors have the possibility to contact the review advisory groups, which support Cochrane authors from the beginning of their projects.

Conducting a systematic review is time-consuming. A Cochrane Review may take between 2 and 5 years, depending on the records received, the workforce available to conduct the review and the overall progress of the project. Therefore, it is recommended to check the available tools, test them, agree within the team on which ones are helpful in the context of the specific review, and adapt and apply the tools that ease the process (as exemplified in each section).

8 Conclusions and Perspective

Systematic reviews are often used by decision-makers in clinical practice and health policy, and thus, systematic reviews may have an impact on the health services system, clinical practice guidelines and intervention programmes in healthcare. The methodology for systematic reviews has developed enormously in recent decades. For intervention research, the methodological guidance of the Cochrane Collaboration provides the gold standard, which also applies to HSR. For instance, the Cochrane Effective Practice and Organisation of Care (EPOC) group has conducted many reviews in this field. The COVID-19 pandemic has reinforced the need for high-quality research and systematic reviews. The quality of systematic reviews may be further improved by methodological developments, such as natural language processing methods for literature searches, tools for specification of complex interventions and Bayesian methods for statistical pooling of data from studies.

Recommended Reading

- Higgins, J.P., Thomas, J., Chandler, J., et al. (Eds.). (2019). Cochrane handbook for systematic reviews of interventions. John Wiley & Sons.

- Schünemann, H., Brozek, J., Guyatt, G., et al. (Eds.) (2013). GRADE Handbook for grading the quality of evidence and the strength of recommendations using the GRADE approach version of October 2013. The GRADE Working Group. http://gdt.guidelinedevelopment.org/central_prod/_design/client/handbook/handbook.html

References

Bohlin, I. (2012). Formalizing syntheses of medical knowledge: The rise of meta-analysis and systematic reviews. Perspectives on Science, 20(3), 273-309.

Campbell, M., McKenzie, J. E., Sowden, A., et al. (2020). Synthesis without meta-analysis (SWiM) in systematic reviews: reporting guideline. British Medical Journal, 368, l6890.

Chandler, J., Cumpston, M., Thomas, J., et al. (2021). Chapter I: Introduction. In: Higgins, J.P.T., Thomas, J., Chandler, J., Cumpston, M., Li, T., Page, M.J., Welch, V.A. (Eds). Cochrane Handbook for Systematic Reviews of Interventions version 6.3 (updated February 2022). Cochrane, 2022. Retrieved August 12, 2021, from www.training.cochrane.org/handbook

Clarke, M., & Chalmers, I. (2018). Reflections on the history of systematic reviews. British Medical Journal Evidence-Based Medicine, 23(4), 121–122.

Cowan, K., & Oliver, S. (2013). The James Lind Alliance Guidebook. Southampton: National Institute for Health Research Evaluation, Trials and Studies Coordinating Centre.

Deeks, J. J., Higgins, J. P. T., Altman, D. G. (2021). Chapter 10: Analysing data and undertaking meta-analyses. In: J. P. T. Higgins, J. Thomas, J. Chandler, M. Cumpston, T. Li, M. J. Page, V. A. Welch (Eds.). Cochrane Handbook for Systematic Reviews of Interventions version 6.2 (updated February 2021a). Cochrane, 2021. Retrieved September 21, 2021 from www.training.cochrane.org/handbook

Ganann, R., Ciliska, D., & Thomas, H. (2010). Expediting systematic reviews: methods and implications of rapid reviews. Implementation Science, 5(1), 56.

Glass, G. V. (1976). Primary, secondary, and meta-analysis of research. Educational Researcher, 5(10), 3–8.

Greco, T., Zangrillo, A., Biondi-Zoccai, G., et al. (2013). Meta-analysis: pitfalls and hints. Heart, lung and vessels, 5(4), 219.

Harker, J., & Kleijnen, J. (2012). What is a rapid review? A methodological exploration of rapid reviews in Health Technology Assessments. International journal of evidence-based healthcare, 10(4), 397–410.

Higgins, J. P. T., Savović, J., Page, M. J., et al. (2021). Chapter 8: Assessing risk of bias in a randomized trial. In: J. P. T. Higgins, J. Thomas, J. Chandler, M. Cumpston, T. Li, M. J. Page, V. A. Welch (Eds.). Cochrane Handbook for Systematic Reviews of Interventions version 6.2 (updated February 2021b). Cochrane, 2021. Retrieved August 28, 2021, from www.training.cochrane.org/handbook

Howick, J., Chalmers, I., Glasziou, P., et al. (2011). The 2011 Oxford CEBM Levels of Evidence (Introductory Document). Oxford Centre for Evidence-Based Medicine. Retrieved August 21, 2021, from https://www.cebm.ox.ac.uk/resources/levels-of-evidence/ocebm-levels-of-evidence

Lefebvre, C., Glanville, J., Briscoe, S., et al. (2021). Chapter 4: Searching for and selecting studies. In: J. P. T. Higgins, J. Thomas, J. Chandler, M. Cumpston, T. Li, M. J. Page, V. A. Welch (Eds.). Cochrane Handbook for Systematic Reviews of Interventions version 6.2 (updated February 2021c). Cochrane, 2021. Retrieved October 5, 2021, from www.training.cochrane.org/handbook

Li, T., Higgins, J. P. T., & Deeks, J. J. (2021). Chapter 5: Collecting data. In: J. P. T. Higgins, J. Thomas, J. Chandler, M. Cumpston, T. Li, M. J. Page, V. A. Welch (Eds.). Cochrane Handbook for Systematic Reviews of Interventions version 6.2 (updated February 2021d). Cochrane, 2021. Retrieved September 13, 2021, from www.training.cochrane.org/handbook

McKenzie, J. E., & Brennan, S. E. (2021). Chapter 12: Synthesizing and presenting findings using other methods. In: J. P. T. Higgins, J. Thomas, J. Chandler, M. Cumpston, T. Li, M. J. Page, V. A. Welch (Eds.). Cochrane Handbook for Systematic Reviews of Interventions version 6.2 (updated February 2021e). Cochrane, 2021. Retrieved August 27, 2021, from www.training.cochrane.org/handbook

McKenzie, J. E., Brennan, S. E., Ryan, R. E., et al. (2021). Chapter 9: Summarizing study characteristics and preparing for synthesis. In: J. P. T. Higgins, J. Thomas, J. Chandler, M. Cumpston, T. Li, M. J. Page, V. A. Welch (Eds.). Cochrane Handbook for Systematic Reviews of Interventions version 6.2 (updated February 2021f). Cochrane, 2021. Retrieved October 9, 2021, from www.training.cochrane.org/handbook

Moher, D., Shamseer, L., Clarke, M., et al. (2015). Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Systematic Reviews, 4(1), 1.

Munn, Z., Peters, M., Stern, C., et al. (2018). Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Medical Research Methodology, 18(1), 143.

Page, M. J., McKenzie, J. E., Bossuyt, P. M., et al. (2021). The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ, 372, n71.

Schünemann, H., Brozek, J., Guyatt, G., et al. (Eds.) (2013). GRADE Handbook for grading the quality of evidence and the strength of recommendations using the GRADE approach version of October 2013. The GRADE Working Group. Retrieved September 12, 2021, from http://gdt.guidelinedevelopment.org/central_prod/_design/client/handbook/handbook.html

Sterne, J. A. C., Hernán, M. A., Reeves, B. C., et al. (2016). ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ, 355, i4919.

Sterne, J.A.C., Savović, J., Page, M. J., et al. (2019). RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ, 366, l4898.

Thomas, J., Kneale, D., McKenzie, J. E., et al. (2021). Chapter 2: Determining the scope of the review and the questions it will address. In: J. P. T. Higgins, J. Thomas, J. Chandler, M. Cumpston, T. Li, M. J. Page, V. A. Welch (Eds.). Cochrane Handbook for Systematic Reviews of Interventions version 6.2 (updated February 2021g). Cochrane, 2021.


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Bombana, M. (2023). Systematic Reviews of Health Care Interventions. In: Wensing, M., Ullrich, C. (eds) Foundations of Health Services Research. Springer, Cham. https://doi.org/10.1007/978-3-031-29998-8_16


DOI: https://doi.org/10.1007/978-3-031-29998-8_16


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-29997-1

Online ISBN: 978-3-031-29998-8

eBook Packages: Medicine (R0)