Abstract

Diabetic retinopathy (DR) is a major health problem worldwide and can cause permanent blindness. Symptoms often become apparent only once the illness has advanced to a severe level, which makes diabetic retinopathy difficult to recognize at an early stage. This paper presents automatic retinal blood vessel segmentation based on the U-Net architecture. First, the retinal blood vessels are segmented using a U-Net architecture with an encoder/decoder module built from multiple convolutional layers. Binary conversion techniques are used for segmentation. For classification, several deep learning models are evaluated, namely ResNet50, Inception V3, VGG-16, and a modified CNN. The final results are measured on the standard benchmark DRIVE dataset, which contains 2865 retinal blood vessel images. For image classification, the proposed modified CNN performed best on the DRIVE dataset, with an accuracy of 98%, a precision of 98%, a recall of 94.5%, and an F1-score of 95%. This paper also evaluates the perceptual quality of the segmented retinal images using SSIM, and pixel intensity is measured using RMSE and PSNR to assess the quality of the segmented retinal vessel images.

1 Introduction

Analyzing retinal fundus images has become increasingly important in diabetic retinopathy detection. It yields essential information about the condition of the eyes in diabetic patients and supports early diagnosis of specific illnesses such as cataracts and diabetic retinopathy [1]. The human eye is one of the most important and delicate parts of the human body. The fundus, which contains the retina, optic disc, macula, and fovea, is the interior surface of the eye opposite the lens. During an eye examination, it can be observed by looking through the pupil. The principal structural components apparent in color fundus imaging are the retinal blood vessels [2]. Color fundus imaging can reveal hemorrhages in the retinal arteries, fundus hemorrhage, edema, and other disorders. Medical experts rely on these findings to recognize and effectively treat disorders. Early detection, early diagnosis, and treatment of diabetic retinopathy all require precisely segmented retinal blood vessel images. Manual segmentation is time-consuming, difficult, and requires advanced skills. When different specialists segment identical blood vessel images in medical practice, the outcomes are inconsistent, resulting in inaccuracies in vessel segmentation [3]. Automated retinal vascular segmentation can ease the diagnostic workload of eye specialists and compensate for a lack of experienced medical experts. As a result, automated retinal vascular segmentation is critical for the medical detection and treatment of ocular illnesses.

Segmenting retinal blood vessels is difficult for several reasons: the extremely complicated structure of the retinal vasculature, poor contrast between the target vessels and the background, and noise contamination introduced when the retinal images are acquired. Although there are numerous obstacles to achieving correct retinal vascular segmentation, it is critical for early detection and therapy of DR [4].

The main contributions of the paper are given below.

- We use preprocessing methods, namely image augmentation to increase the number of training instances and data normalization and denoising to support accurate classification, to build a large retinal dataset for training the proposed model.
- We propose the U-Net architecture for retinal blood vessel segmentation to detect DR at an early stage.
- We measure the perceptual quality of the segmented images to improve the accuracy of detecting DR at an early stage after vessel segmentation.
- We train deep learning classifiers (ResNet50, InceptionV3, VGG-16, and a modified CNN) to distinguish the minor variations among retinal images for diabetic retinopathy detection.

2 Related Work

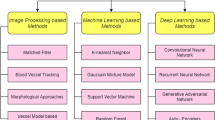

Several investigators have contributed to considerable improvements in approaches for detecting and diagnosing diabetic retinopathy using segmentation strategies. A method for optical disc segmentation based on histogram matching was suggested in [5]. In this approach, portions of the retinal fundus images were used to generate a template, and the mean of the histograms for each color component was then determined to locate the center of the optical disc. The correlation between the RGB components of the source image and the template is also calculated, and the location with the highest correlation is chosen as the optical disc center. The authors of [6] suggested a model for automatic recognition of retinal hard exudates that uses fuzzy c-means clustering and an SVM classifier to classify the retinal images. The model was trained on ophtha EX and tested on the DIARETDB1 dataset, with 10-fold cross-validation. In [7], the authors suggested an automatic diabetic retinopathy diagnosis method that analyzes fundus images at a level comparable to angiograms to improve the visibility of abnormalities. The optical disc is segmented using fast, powerful features, and blood vessels are extracted using morphological image processing methods. For automated optical disc recognition, the researchers in [8] used a clustering technique with a novel correction mechanism and vessel transformation. The suggested approach used scale-space analysis to detect the borders of the optical disc. In [9], a hybrid approach for optical disc segmentation was suggested, based on vessel vector phase portrait analysis with a modified clustering analysis for vessel transformation. In [10], a tree-based graph cut approach was suggested for retinal blood vessel and optic disc segmentation, and it achieved better performance.
A graph cut approach was suggested in [11-12] for extracting the retinal vascular tree, and the blood vessel information was used to locate the disc, which is useful for training the neural network. In [13], several image processing methods were used for detecting the blood vessels and optical disc, with morphological operations and thresholding techniques used for effective performance. In [14], another model for blood vessel segmentation was suggested, based on an adaptive histogram and distance transformation. The adaptive histogram was used for preprocessing and the distance transformation for segmentation. In [15], a convolutional model was suggested that is trained on a number of augmented retinal images preprocessed using normalization and transformation for blood vessel segmentation. The work in [16] presented a deep neural network model for segmentation of retinal blood vessel images that resolves the problem of cross-modality. In [17], deep learning based on the Inception-v3 architecture was suggested for classification of diabetic retinopathy. Other works extract retinal features using local binary patterns and classify the retinal images using convolutional neural networks [18-27]. Another work on retinal image classification used the TLBO [19] approach for binary and multiclass classification. In [20], a hybrid approach combining vessel segmentation and morphological operations was suggested for vessel extraction. Several further models were suggested based on hybrid approaches [21-23]. An examination of these previous studies shows that the poor luminance and resolution of retinal dataset images make them difficult to analyze with image processing methods. As a result, image pre-processing approaches are critical for improving clarity and brightness for subsequent image analysis and interpretation tasks such as optical disc classification and segmentation, optical disc elimination, and retinal blood vessel identification.
Accordingly, the investigators of this study devised a method for optical disc and retinal blood vessel segmentation to aid optometrists in the accurate identification of retinal illnesses using retinal fundus image processing. In this paper, we classify the grade of retinal images, critically analyse segmentation methods, and propose an efficient mathematical-morphology-based extraction of blood vessels. The paper uses the U-Net architecture to segment retinal fundus images and then uses a mathematical model based on Gaussian filters to extract features. Because it extracts only the relevant blood vessels, segmentation enables a more accurate examination of the structural information of vessels, which is one of its main advantages. The fundus images were obtained from the standard, publicly available DRIVE database.

3 Material and Methods

Dataset: This study uses the publicly available benchmark retinal DRIVE [23] dataset, which contains 2865 retinal blood vessel images obtained from the Kaggle website.

Fig. 1 shows the labels assigned to the DR images according to the grades 0, 1, 2, 3, and 4 for recognizing diabetic retinopathy. Each label has a specific meaning, such as mild NPDR, moderate, severe NPDR, and proliferative DR (PDR).

Figure 2 shows the distribution of DR severity in the dataset, that is, the percentage of images at each severity level.

3.1 Preprocessing and Image Augmentation

The original retinal images do not provide the quality needed for a thorough study of retinal blood vessel segmentation because noise is present in the images. Augmentation approaches can be applied to the retinal layers to overcome the unclear edges of blood vessels in the images. The original retinal images have large noise and a low SNR value. Figure 3 shows the schematic block diagram of the proposed model with its several steps. The preprocessing steps of the proposed model are as follows.

1. Removal of noise from the retinal image.
2. Retinal image flattening for contour adjustment on the morphological diversity of blood vessel image scanning.
3. Binary transformation to detect the lower and upper boundaries of vessels and extract the detected vessel boundary.
4. Resizing of the retinal images, which are then provided as input to the U-Net architecture.
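The preprocessing steps listed above can be sketched with NumPy as follows. This is a minimal illustration rather than the authors' implementation: the 3 × 3 median filter, the fixed threshold, and the nearest-neighbour resize are assumed stand-ins for the unspecified denoising, binary transformation, and resizing operations, and the flattening step is omitted because the text does not describe it. All function names are hypothetical.

```python
import numpy as np


def median_denoise(img: np.ndarray) -> np.ndarray:
    """Step 1 (assumed method): 3x3 median filter with replicated borders."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="edge")
    # Stack the nine shifted views and take the per-pixel median.
    windows = [padded[r:r + h, c:c + w] for r in range(3) for c in range(3)]
    return np.median(np.stack(windows), axis=0)


def binarize(img: np.ndarray, threshold: float) -> np.ndarray:
    """Step 3 (assumed method): pixels above the threshold become 1, others 0."""
    return (img > threshold).astype(np.uint8)


def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Step 4 (assumed method): nearest-neighbour resize to the network input size."""
    h, w = img.shape
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return img[rows][:, cols]


def preprocess(gray: np.ndarray, threshold: float, out_size: tuple):
    """Denoise, binarize, and resize a grayscale image; returns (image, mask)."""
    denoised = median_denoise(gray.astype(np.float64))
    mask = binarize(denoised, threshold)
    return resize_nearest(denoised, *out_size), resize_nearest(mask, *out_size)
```

A median filter is chosen here only because it removes isolated noisy pixels while preserving edges; any other denoising filter could be substituted.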

Figure 4 shows the various augmentation techniques used to increase the number of retinal images, reduce over-fitting, and improve the performance of the proposed model. Augmentation assists in creating well-balanced training data and enhances the effectiveness of the model.
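As an illustration of geometric augmentation, the sketch below generates flipped and rotated copies of an image. The specific transforms are an assumption, since the text does not list which techniques Fig. 4 contains, and the function name is hypothetical.

```python
import numpy as np


def augment(img: np.ndarray) -> list:
    """Return the original image plus five simple geometric variants."""
    return [
        img,
        np.fliplr(img),      # horizontal flip
        np.flipud(img),      # vertical flip
        np.rot90(img, k=1),  # 90 degree rotation
        np.rot90(img, k=2),  # 180 degree rotation
        np.rot90(img, k=3),  # 270 degree rotation
    ]
```

For segmentation, the same transform would have to be applied to an image and its vessel mask together so that the pair stays aligned.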

3.2 Feature Extraction Using Modified Gaussian Filter

Because retinal blood vessels contrast strongly with their surroundings, the intensity profile across a vessel in the retinal fundus image can be modeled with a Gaussian function. Several examples of vessel profiles were fitted with Gaussian curves under the least-squares error criterion. The vessel profiles and fitted curves have been inverted for clarity. Equation 1 shows the Gaussian function that was used for fitting.

Here \(x\) is the linear position, \(A\) is the intensity, \(z\) is the position of the profile peak, and \(w\) is the Gaussian parameter that determines the width. The fitted residual errors are smaller than 4 gray levels on average. The error is calculated as \(\left\{\sum |{m}_{i}-{v}_{i}|\right\}/n\), where \({m}_{i}\) and \({v}_{i}\) are the profile values of the model and of the real data, respectively, and \(n\) is the total number of pixels in the blood vessel image.
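The fitting error described above can be sketched in a few lines. Because Equation 1 is not reproduced in this text, the sketch assumes the common form \(A\exp(-(x-z)^2/(2w^2))\) for the Gaussian profile; that form and the function names are assumptions, while the error formula follows the text.

```python
import math


def gaussian_profile(x: float, amplitude: float, center: float, width: float) -> float:
    """Assumed Gaussian vessel profile: A * exp(-(x - z)^2 / (2 w^2))."""
    return amplitude * math.exp(-((x - center) ** 2) / (2.0 * width ** 2))


def mean_abs_error(model: list, actual: list) -> float:
    """Fitting error from the text: sum(|m_i - v_i|) / n."""
    if len(model) != len(actual):
        raise ValueError("profiles must have the same length")
    return sum(abs(m - v) for m, v in zip(model, actual)) / len(model)
```

For example, a model profile can be built with `[gaussian_profile(x, A, z, w) for x in range(n)]` and compared against the measured profile with `mean_abs_error`.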

Equation 2 describes the revised Gaussian filter with values between 0 for vessel identification.

Here \(\sigma \) is the Gaussian filter parameter that regulates the size of the filter, analogous to \(w\). Equation 3 shows how the function \(f(x)\) is updated.

If element is adjusted furthermore to reasonable levels, as illustrated in Eq. 4, the \(\sigma \) amplitude value of element is altered to 3.

This means that, for a given vessel, this filter may provide the best response. As a result, a modified Gaussian filter is better suited to enhancing the vessel. When the peaks of the Gaussian vessel profile and the Gaussian filter overlap, the convolution reaches its maximum. Equation 5 gives the maximum convolution peak.

Here, \(t\) is the amplitude parameter of the filter, which sets the power of the second-order filter, and the vessel amplitude is set to \(1\) for evaluation. Equation 6 can be rearranged by substituting \(1/S2\) for the exponential term.

The first term in the integrand is the second-order generalized Gaussian function, whose integral equals zero. This relation is used as a tool in what follows.

The convolution peak intensity for a blood vessel profile and a second-order Gaussian filter, each with its own width factor, is given in Eq. 8. Equation 9 shows how this value changes as the variable \(t\) varies.

It is simple to determine where the convolution peak value reaches its maximum. The maximum-value conditions for other cases can be determined in the same way, for example when \(t\) is \(5\), \(4\), \(3\), or \(2\). Consequently, when modified Gaussian filters with parameters \(t = 4\) and \(t = 3\) are employed, the corresponding filters yield the highest convolution peaks. Processing is performed with the proposed modified Gaussian filter.

3.3 Segmentation Using U-Net Architecture

Figure 5 shows the U-Net architecture used for retinal blood vessel segmentation. It consists of encoder and decoder blocks, each with four convolutional levels. Each level of the encoder block is connected (copy and crop) to the corresponding level of the decoder block. The initial number of channels is 64, and it increases from 64 to 512 as the convolutions proceed step by step in the downward direction. The arrow shows the max-pooling operation.

In the decoding phase, the number of channels decreases step by step in the upward direction. This up-sampling path increases the size of the feature maps. The arrow indicates the padding operation. The last layer of the U-Net architecture uses a filter for vessel segmentation.
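The channel and resolution progression described for the encoder and decoder can be traced with a short sketch. It assumes that the channel count doubles at each of the four encoder levels (64, 128, 256, 512), that each 2 × 2 max-pooling step halves the spatial size, and that convolutions are padded so only pooling changes the size. These are simplifying assumptions, and the function name and example input size are hypothetical.

```python
def unet_shapes(input_size: int, base_channels: int = 64, levels: int = 4):
    """Return (size, channels) per encoder level and per decoder level."""
    encoder = []
    size = input_size
    for level in range(levels):
        channels = base_channels * 2 ** level  # 64, 128, 256, 512
        encoder.append((size, channels))
        if level < levels - 1:
            size //= 2  # 2x2 max pooling halves height and width
    # The decoder mirrors the encoder: up-sampling doubles the size while the
    # channel count shrinks, and each level receives the matching encoder map.
    decoder = list(reversed(encoder[:-1]))
    return encoder, decoder
```

With a hypothetical 256 × 256 input, the encoder levels are (256, 64), (128, 128), (64, 256), and (32, 512), and the decoder walks back up through (64, 256), (128, 128), and (256, 64).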

All retinal images are first converted to grayscale. A motion filter is then used to approximate linear motion. The difference between the initial image and the filtered image is calculated pixel by pixel, yielding the final output image.

Let \(P\) be the initial image and \(M\) the output of the motion filter. The difference image \(D\) is then \(D = M-P\).
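The difference image \(D = M - P\) can be sketched as follows. The motion filter is assumed here to be a horizontal averaging kernel of odd length, since the text does not give its direction or size; the function names and the default length are hypothetical.

```python
import numpy as np


def motion_filter(img: np.ndarray, length: int = 5) -> np.ndarray:
    """Assumed motion filter: horizontal box blur of odd length."""
    if length % 2 == 0:
        raise ValueError("length must be odd")
    kernel = np.ones(length) / length
    half = length // 2
    # Replicate the left and right borders so the output keeps the input width.
    padded = np.pad(img.astype(np.float64), ((0, 0), (half, half)), mode="edge")
    return np.stack([np.convolve(row, kernel, mode="valid") for row in padded])


def difference_image(img: np.ndarray, length: int = 5) -> np.ndarray:
    """D = M - P, where M is the motion-filtered image and P the input."""
    return motion_filter(img, length) - img
```

On a bright background with a thin dark vessel, the blurred image is brighter than the original at the vessel pixels, so those pixels come out positive in \(D\).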

Image Adjustment: The retinal images were enhanced through image adjustments. Several factors, such as image intensity levels, luminance, and contrast, were modified. These modifications increased the image quality, which leads to better feature extraction. Color casts were removed using the image intensity levels. Simple brightness and contrast adjustments can rapidly change the quality of an image. Color balance enables color changes that rectify white balance issues.

Binary Conversion: In this step, the pre-processed image is transformed into a binary image. A binary image is a digital image in which each pixel takes one of just two distinct values. A binary image is usually made up of two colors, black and white, although other colors may be used. The image intensity determines whether a pixel is black or white, and a threshold is chosen accordingly.

Condition Check: To determine the blood vessels, a condition is examined. The criterion is that a pixel in the normalized difference image should be white, while the same pixel in the binary-converted image should be black. If a pixel meets both of these criteria, it is selected and passed on for subsequent analysis, which involves morphological processing. After erosion is complete, the small regions below a predefined size threshold are deleted, and the remaining pixels are collected.
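The condition check and the removal of small regions can be sketched as below. The threshold on the difference image, the use of 4-connectivity, and the function names are assumptions made for illustration, and the erosion step itself is left out.

```python
from collections import deque

import numpy as np


def select_vessel_candidates(diff_img, binary_img, diff_threshold):
    """Keep pixels that are white in the difference image and black in the binary image."""
    diff_white = np.asarray(diff_img) > diff_threshold
    binary_black = np.asarray(binary_img) == 0
    return diff_white & binary_black


def remove_small_regions(mask: np.ndarray, min_size: int) -> np.ndarray:
    """Delete 4-connected regions that contain fewer than min_size pixels."""
    h, w = mask.shape
    keep = np.zeros((h, w), dtype=bool)
    seen = np.zeros((h, w), dtype=bool)
    for r in range(h):
        for c in range(w):
            if not mask[r, c] or seen[r, c]:
                continue
            # Breadth-first search collects one connected region.
            region, queue = [], deque([(r, c)])
            seen[r, c] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if len(region) >= min_size:
                for y, x in region:
                    keep[y, x] = True
    return keep
```

The breadth-first search is written out explicitly to keep the sketch dependency-free; a connected-component labelling routine from an image processing library would serve the same purpose.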

Figure 6 depicts the final appearance of the retinal image after post-processing. Figure 6(b) has less noise than Fig. 6(a), the image taken before post-processing, and the vessel widths are closer to the actual values.

3.4 Classification

ResNet50: Feature images are fed into the input layer of the ResNet50 architecture and then into convolution block-1, which contains conv2d, batch normalisation, ReLU activation, and max pooling operations. These operations are used to map and filter images in order to detect context similarity. ResNet50 is made up of 50 weighted layers, and each block is connected to the next block in the same way that block-1 is connected to its successor. Internally, the feature dimensions progress through 32, 64, 128, 256, 512, 1024, and 2048. To reduce overfitting in the model, two dense layers with dropout layers have been included. The dense layers use ReLU activation, L2 kernel regularisation with a penalty value of 0.01, and a dropout value of 0.5. Finally, a sigmoid activation function is applied to the output layer. The model is compiled with the Adam optimizer at a learning rate of 0.0001 and the binary cross-entropy loss function. The model is run with a batch size of 32 for 40 epochs, but from about epoch 10 it stagnates and overfits.
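The quantities named for this classification head (sigmoid output, binary cross-entropy loss, and an L2 penalty of 0.01) can be written out in plain Python as a sketch. This shows the definitions only and is not the training code used in the paper; the function names and the clipping constant `eps` are assumptions.

```python
import math


def sigmoid(z: float) -> float:
    """Output activation: maps a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def binary_cross_entropy(y_true: list, y_prob: list, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(y_true)


def l2_penalty(weights: list, penalty: float = 0.01) -> float:
    """L2 kernel regularisation term: penalty * sum of squared weights."""
    return penalty * sum(w * w for w in weights)
```

During training, the regularisation term would be added to the cross-entropy loss for each regularised dense layer.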

InceptionV3: The InceptionV3 architecture feeds feature images from the input layer into a model built from symmetric and asymmetric building blocks, which use convolutions, average pooling, max pooling, concatenations, dropouts, and fully connected layers. Batch normalization is employed extensively throughout the model and is applied to the activation inputs. The structure is made up of 42 weighted layers in which blocks are connected sequentially. Two dense layers with dropout layers are also added to this model to reduce overfitting. The dense layers use ReLU activation, L2 kernel regularization with a penalty value of 0.01, and a dropout value of 0.5.

VGG-16: The VGG-16 design takes feature images of 264 × 264 pixels in the input layer and feeds them to the conv1 layer. The image is passed through a stack of convolutional layers whose filters have a 3 × 3 receptive field, the smallest size that captures the notions of left/right, up/down, and centre. The convolution stride is set to 1 pixel, and the spatial padding of the convolution layer input is set to 1 pixel for the 3 × 3 convolution layers in order to maintain spatial resolution after convolution. Five max-pooling layers, which follow some of the convolutional layers, perform spatial pooling. Max-pooling is done over a 2 × 2-pixel window with stride 2. Three fully connected (FC) layers follow the stack of convolutional layers: the first two have 4096 channels each, and the third performs 1000-way classification and so has 1000 channels. Two dense layers with dropout layers are also added to this model to reduce overfitting. The dense layers use ReLU activation, L2 kernel regularization with a penalty value of 0.01, and a dropout value of 0.5.

Proposed CNN Architecture: The CNN model [24, 25] is made up of eight layers that combine Conv2D, MaxPooling2D, ReLU activation, and Dropout operations, followed by a fully connected layer. A feature image with dimensions (264, 264, 3) is applied to the first convolution layer, which has 32 filters. The convolution layer uses striding and padding to ensure that the output has the same spatial dimensions as the input. The pooling layer receives the output of the convolution layer and reduces the size of the convolved feature map, and hence the computational cost. This is accomplished by reducing the connections between layers and operating independently on each feature map. In this study, the MaxPooling2D operation with pool size (2, 2) is used to select the largest element from each region of the feature map. Following pooling, a dropout layer with a dropout value of 0.5 is introduced to reduce overfitting, meaning that 50% of neurons are dropped during the training phase. ReLU activation is used at the convolution layer. The same procedure is used for the other 7 CNN layers, which have 64, 128, 256, 128, 64, 32, and 16 filters. After flattening, one dense layer with ReLU activation and 16 units is employed as the fully connected layer. The model is run with a batch size of 16 for 100 epochs, but it stagnates and overfits around epoch 25.
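The feature-map sizes implied by this description can be traced with a short sketch. It assumes that every one of the eight convolution layers preserves the spatial size and is followed by a 2 × 2 max-pooling step that halves it, rounding down; the text states this only for the first layer and says the same procedure is used for the others. The function name is hypothetical.

```python
def trace_cnn(input_size: int = 264,
              filters: tuple = (32, 64, 128, 256, 128, 64, 32, 16)) -> list:
    """Return the (height, width, channels) shape after each conv + pool stage."""
    shapes = []
    size = input_size
    for n_filters in filters:
        # Same-size convolution keeps the spatial size; 2x2 max pooling halves it.
        size //= 2
        shapes.append((size, size, n_filters))
    return shapes
```

Under these assumptions, a 264 × 264 input shrinks through 132, 66, 33, 16, 8, 4, 2, and 1, so the flattened vector that reaches the dense layer has 16 elements.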

4 Performance Metrics

Accuracy, sensitivity, and specificity are the performance evaluation parameters used to measure the effectiveness of the different deep learning classifiers. Accuracy is defined as the proportion of correctly classified samples among all samples.
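These classification metrics follow the standard confusion-matrix definitions, sketched below together with the precision and F1-score reported elsewhere in the paper. The function name and the example counts are illustrative only and are not results from the study.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Compute standard binary classification metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)  # recall is the same quantity as sensitivity
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": recall,
        "specificity": tn / (tn + fp),
        "precision": precision,
        "f1": 2 * precision * recall / (precision + recall),
    }
```

For example, `classification_metrics(tp=8, tn=9, fp=1, fn=2)` gives an accuracy of 0.85, a sensitivity of 0.8, and a specificity of 0.9.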

In the SSIM definition, \(\mu_x\), \(\mu_y\), \(\sigma_x\), \(\sigma_y\), and \(\sigma_{xy}\) are the local means, standard deviations, and cross-covariance for the input image \(x\) and the output image \(y\). SSIM values greater than 0.95 indicate high perceptual quality in the objective evaluation.
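The three image-quality measures used in this paper can be sketched with NumPy as follows. The SSIM here is computed once over the whole image rather than over local windows, which is a simplification, and the stabilising constants \(C_1 = (0.01L)^2\) and \(C_2 = (0.03L)^2\) are the commonly used defaults rather than values stated in the text. The function names are hypothetical.

```python
import numpy as np


def rmse(x, y) -> float:
    """Root mean squared error between two images of equal shape."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    return float(np.sqrt(np.mean((x - y) ** 2)))


def psnr(x, y, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = rmse(x, y)
    return float("inf") if err == 0 else float(20.0 * np.log10(max_value / err))


def ssim_global(x, y, max_value: float = 255.0) -> float:
    """Single-window SSIM using global means, variances, and covariance."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (0.01 * max_value) ** 2  # assumed default constant
    c2 = (0.03 * max_value) ** 2  # assumed default constant
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

A lower RMSE and a higher PSNR both indicate that the segmented image is closer to the reference, while an SSIM of 1 is reached only when the two images are identical.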

5 Result Analysis

Table 1 and Figs. 8, 9, and 10 show the performance evaluation of the proposed U-Net architecture for retinal blood vessel segmentation. The total area of the segmented image was calculated. Table 2 reports the RMSE, PSNR, SSIM, and total execution time. SSIM expresses the perceptual quality of the segmented retinal image on a scale from 0 to 1, and the maximum value achieved is 0.8781. Pixel intensity is measured using RMSE and PSNR to assess the quality of the segmented retinal vessel images. The evaluation is reported for only 9 of the segmented retinal images in the dataset.

6 Comparative Analysis

Table 3 indicates that the proposed strategy is more accurate in the segmentation phase. This approach also shows that good performance can be obtained without the use of a training stage.

Table 4 shows the comparative analysis of the perceptual quality of the segmented images produced by the proposed model and by previous methods. The proposed model yields a smaller RMSE than the previous methods, which indicates better segmentation performance. The proposed model also gives better PSNR values.

7 Conclusion and Future Scope

This paper presents segmentation techniques for retinal blood vessel images. The modified CNN approach is based on the U-Net deep learning architecture and applies a binary conversion mechanism for segmentation. The experiments were carried out on all the images of the DRIVE dataset obtained from Kaggle. The proposed approach is found to be effective for detecting the blood vessels used for early diagnosis of DR. A major part of this study is the measurement of the perceptual quality of the segmented retinal images using SSIM. Pixel intensity is measured using RMSE and PSNR to assess the quality of the segmented retinal vessel images. The final results are measured on the standard benchmark DRIVE dataset, which contains 2865 retinal blood vessel images. For image classification, the proposed modified CNN performed best on the DRIVE dataset, with an accuracy of 98%, a precision of 98%, a recall of 94.5%, and an F1-score of 95%. Future work will extend the retinal blood vessel analysis to feature selection, extraction, and classification for recognising the optical disc, exudates, HEMs, and similar structures on other standard benchmark datasets for early detection of diabetic retinopathy.

References

Ashraf, M.N., Habib, Z., Hussain, M.: Texture feature analysis of digital fundus images for early detection of diabetic retinopathy. In: 2014 11th International Conference on Computer Graphics, Imaging and Visualization, pp. 57–62 (2014). https://doi.org/10.1109/CGiV.2014.29

Kar, S.S., Maity, S.P.: Automatic detection of retinal lesions for screening of diabetic retinopathy. IEEE Trans. Biomed. Eng. 65(3), 608–618 (2018)

Bai, C., Huang, L., Pan, X., Zheng, J., Chen, S.: Optimization of deep convolutional neural network for large scale image retrieval. Neurocomputing 303(16), 60–67 (2018)

Kar, S.S., Maity, S.P.: Automatic detection of retinal lesions for screening of diabetic retinopathy. IEEE Trans. Biomed. Eng. 65(3), 608–618 (2016)

Dehghani, A., Moghaddam, H., Moin, M.: Optic disc localization in retinal images using histogram matching. EURASIP J. Image Video Process. (1) (2012). https://doi.org/10.1186/1687-5281-2012-19

Wang, S., Yin, Y., Cao, G., Wei, B., Zheng, Y., Yang, G.: Hierarchical retinal blood vessel segmentation based on feature and ensemble learning. Neurocomputing 149, 708–717 (2015)

Manjiri, P., Ramesh, M., Yogesh, R., Manoj, S., Neha, D.: Automated localization of optic disk, detection of microaneurysms and extraction of blood vessels to bypass angiography. In: Satapathy, S.C., Biswal, B.N., Udgata, S.K., Mandal, J.K. (eds.) Proceedings of the 3rd International Conference on Frontiers of Intelligent Computing: Theory and Applications (FICTA) 2014. AISC, vol. 327, pp. 579–587. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-11933-5_65

Muangnak, N., Aimmanee, P., Makhanov, S., Uyyanonvara, B.: Vessel transforms for automatic optic disk detection in retinal images. IET Image Proc. 9(9), 743–750 (2015). https://doi.org/10.1049/iet-ipr.2015.0030

Sreng, S., Maneerat, N., Hamamoto, K., Win, K.Y.: Deep learning for optic disc segmentation and glaucoma diagnosis on retinal images. Appl. Sci. 10(14), 4916 (2020). https://doi.org/10.3390/app10144916

Muangnak, N., Aimmanee, P., Makhanov, S.: Automatic optic disk detection in retinal images using hybrid vessel phase portrait analysis. Med. Biol. Eng. Compu. 56(4), 583–598 (2017). https://doi.org/10.1007/s11517-017-1705-z

Gonzalez, A.S., Kaba, D., Li, Y., Liu, X.: Segmentation of the blood vessels and optic disc in retinal images. IEEE J. Biomed. Health Inform. 18(6), 1874–1886 (2014)

Jestin, V.K.: Extraction of blood vessels and optic disc segmentation for retinal disease classification. In: Recent Advances in Computer Science, pp. 440–444 (2015)

Phyo, O., Khaing, A.S.: Automatic detection of optical disc and blood vessels from retinal images using image processing techniques. Int. J. Res. Eng. Technol. 3(3), 300–307 (2014)

Prakash, R.S., Aditya, R., Sameer, Y., Parameswari, S., Kumar, G.S.: Retinal blood vessel extraction and optical disc removal. Int. J. Res. Eng. Technol. 4(04), 80–83 (2015)

Oliveira, A.F.M., Pereira, S.R.M., Silva, C.A.B.: Retinal vessel segmentation based on fully convolutional neural networks. Expert Syst. Appl. (2018). https://doi.org/10.1016/j.eswa.2018.06.034

Liskowski, P., Krawiec, K.: Segmenting retinal blood vessels with deep neural networks. IEEE Trans. Med. Imaging 35(11), 2369–2380 (2016). https://doi.org/10.1109/TMI.2016.2546227

Fu, H., Xu, Y., Wong, D.W.K., Liu, J.: Retinal vessel segmentation via deep learning network and fully-connected conditional random fields. In: 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), pp. 698–701 (2016). https://doi.org/10.1109/ISBI.2016.7493362

de la Calleja, J., Tecuapetla, L., Auxilio Medina, M., Bárcenas, E., Urbina Nájera, A.B.: LBP and machine learning for diabetic retinopathy detection. In: Corchado, E., Lozano, J.A., Quintián, H., Yin, H. (eds.) IDEAL 2014. LNCS, vol. 8669, pp. 110–117. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10840-7_14

Sidibé, D., Sadek, I., Mériaudeau, F.: Discrimination of retinal images containing bright lesions using sparse coded features and SVM. Comput. Biol. Med. 62, 175–184 (2015). https://doi.org/10.1016/j.compbiomed.2015.04.026

Li, Y.-H., Yeh, N.-N., Chen, S.-J., Chung, Y.-C.: Computer-assisted diagnosis for diabetic retinopathy based on fundus images using deep convolutional neural network. Mob. Inf. Syst. (2019)

Jiang, X., Mojon, D.: Adaptive local thresholding by verification-based multithreshold probing with application to vessel detection in retinal images. IEEE Trans. Pattern Anal. Mach. Intell. 25(1), 131–137 (2003)

Lam, B., Yan, H.: A novel vessel segmentation algorithm for pathological retina images based on the divergence of vector fields. IEEE Trans. Med. Imaging 27(2), 237–246 (2008)

Ghoshal, R., Saha, A., Das, S.: An improved vessel extraction scheme from retinal fundus images. Multimedia Tools Appl. 78(18), 25221–25239 (2019). https://doi.org/10.1007/s11042-019-7719-9

Staal, J.J., Abramoff, M.D., Niemeijer, M., Viergever, M.A., Ginneken, B.V.: Ridge based vessel segmentation in color images of the retina. IEEE Trans. Med. Imaging 23, 501–509 (2004)

Zhexin, J., Zhang, H., Wang, Y., Ko, S.-B.: Retinal blood vessel segmentation using fully convolutional network with transfer learning. Comput. Med. Imaging Graph. (2018). https://doi.org/10.1016/j.compmedimag.2018.04.005

Gadekallu, T.R., Khare, N., Bhattacharya, S., Singh, S., Maddikunta, P.K., Srivastava, G.: Deep neural networks to predict diabetic retinopathy. J. Ambient Intell. Human. Comput. (2020). https://doi.org/10.1007/s12652-020-01963-7

Muhammad, M., Wen, J., Nasrullah, N., Sun, S., Hayat, S.: Exudate detection for diabetic retinopathy using pretrained convolutional neural networks. Complexity, 11 (2020). https://doi.org/10.1155/2020/5801870

Jadhav, A.S., Patil, P.B., Biradar, S.: Optimal feature selection-based diabetic retinopathy detection using improved rider optimization algorithm enabled with deep learning. Evol. Intel. 14(4), 1431–1448 (2020). https://doi.org/10.1007/s12065-020-00400-0

Primitivo, D., et al.: A hybrid method for blood vessel segmentation in images. Biocybern. Biomed. Eng. 39(3), 814–824 (2019). https://doi.org/10.1016/j.bbe.2019.06.009

Bhardwaj, C., Jain, S., Sood, M.: Automated optical disc segmentation and blood vessel extraction for fundus images using ophthalmic image processing. In: Luhach, A.K., Singh, D., Hsiung, P.-A., Hawari, K.B.G., Lingras, P., Singh, P.K. (eds.) ICAICR 2018. CCIS, vol. 955, pp. 182–194. Springer, Singapore (2019). https://doi.org/10.1007/978-981-13-3140-4_17

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

Cite this paper

Deshmukh, S.V., Roy, A. (2023). Retinal Blood Vessel Segmentation Based on Modified CNN and Analyze the Perceptional Quality of Segmented Images. In: Woungang, I., Dhurandher, S.K., Pattanaik, K.K., Verma, A., Verma, P. (eds) Advanced Network Technologies and Intelligent Computing. ANTIC 2022. Communications in Computer and Information Science, vol 1798. Springer, Cham. https://doi.org/10.1007/978-3-031-28183-9_43

DOI: https://doi.org/10.1007/978-3-031-28183-9_43

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-28182-2

Online ISBN: 978-3-031-28183-9

eBook Packages: Computer Science, Computer Science (R0)