Abstract

Brain–Computer Interface (BCI) technology is a promising research area in many domains. Brain activity can be interpreted through both invasive and noninvasive monitoring devices, allowing for novel therapeutic solutions for individuals with disabilities and for other non-medical applications. However, a number of ethical issues arising from the use of BCI technology have been identified. In previous work published in 2020, we reviewed the academic discussion of the ethical implications of BCI technology over the preceding 5 years, using a limited sample to identify trends and areas of concern or debate among researchers and ethicists. In this chapter, we provide an overview of the academic discussion of BCI ethics and report the findings of the next phase of this work, which systematically categorizes the entire sample. The aim of this work is to collect and synthesize all the pertinent academic scholarship on the ethical, legal, and social implications (ELSI) of BCI technology. We hope this study will provide a foundation for future scholars, ethicists, and policy makers to understand the landscape of the relevant ELSI concepts and pave the way for assessing the need for regulatory action. We conclude that some emerging applications of BCI technology, including commercial ventures that seek to meld human intelligence with AI, present new and unique ethical concerns.

Keywords

- Brain–computer interface (BCI)

- Brain–machine interface (BMI)

- Ethical, legal, and social issues (ELSI)

- Neuroethics

- Scoping review

1 Introduction

Although Brain–Computer Interfaces (BCI) have been used for decades, recent advances in the technology and increased private investment into BCI research have led to rapidly broadening and novel applications that have caught the public’s attention. In recent years, BCIs have made headlines and inspired viral coverage of press releases, illustrating the potential applications of the technology and the ambitions of the private and public researchers advancing it [1]. Media coverage has included a monkey playing a video game using its mind alone [2]; prototypes of a mass-produced consumer BCI device that is implanted into a person’s skull by an automated surgical robot, with the aim of introducing economies of scale into the implantation of invasive BCIs and thus aiding widespread adoption [3]; and devices for sale at electronics stores that claim to improve a user’s mood or performance in video games by modulating their brain waves [4, 5]. While many people may already personally know someone with a cochlear implant, an example of a BCI device that helps restore one’s sense of hearing, the technology has already been extended to read the brain activity of a person with paraplegia in order to operate a wheelchair, to allow direct brain-to-brain communication, and even to enable motor control of an insect with an implanted BCI [6]. These are all examples of applications of BCI technology, which is both a well-established and a quickly growing field of research with the potential for therapeutic medical use as well as use as a consumer technology.

At its core, BCI is any technology that can read, interpret, and translate brain activity into a format digestible by a computer. The device can then interpret those brain signals as input to create some sort of output in the form of an interaction with the outside world, or pass information about the outside world back to the user as feedback that the user can then act upon. BCI devices may be generally categorized as active, reactive, or passive. Active BCIs are, as the name suggests, action based. They detect and decode mental commands initiated by the user, and many can even translate these signals into motor outputs. Meanwhile, reactive BCIs are sensory based, modulating user-brain activity based on external stimuli. With both of these BCI types, the user directs BCI output through purposeful commands or attentiveness. Passive BCIs, in contrast, work solely to monitor user-brain activity and provide relevant feedback. In these instances, the BCI device is not modulating or reacting to brain activity, aside from reporting arbitrary measurements. As noted above, current examples include the cochlear implant, which detects and transforms sound into electrical signals that stimulate the cochlear nerve, transmitting auditory information to the brain and allowing the user to hear (reactive BCI), and any device that interprets a user’s brain activity as a means to control an external prosthesis, such as a robotic arm or wheelchair (active BCI) [7]. BCI devices can be noninvasive, utilizing, for example, an electroencephalogram (EEG) skull cap that can read the brain’s electrical activity from outside the skull, or invasive, requiring implantation within the skull and direct contact with the brain.
Due to the interference from the intervening tissue, noninvasive BCIs have a poor signal-to-noise ratio, and as a result the more advanced BCI applications usually involve an invasive device, which increases signal quality but also poses greater risks to the user [8]. Proliferation of BCIs has emerged as a trend, as the initial therapeutic BCI technologies are adapted for general public use as “cool” gadgets or in military applications [9], gaming [10], communication [11], and even performance enhancement [12].

2 Ethical Concerns with BCIs

BCI technology is associated with several ethical and societal implications, including issues of safety, stigma and discrimination, autonomy, and privacy, to name but a few. The assessment of the balance of risks and benefits associated with widespread use of this technology is a complex endeavor and must account for concerns about possibly frequent events (e.g., hacking of BCIs and malevolent use of extracted information) as well as relatively rare but catastrophic events (such as a BCI prosthetics failure leading to a fatal traffic accident). Additionally, the use of BCI may contribute to the stigmatization of disability and may even jeopardize autonomy in specific groups.

One specific form of BCI development, the Brain-to-Brain Interface (BBI), may lead to particularly novel social and ethical concerns. BBI technology combines BCI with Computer-to-Brain Interfaces (CBI). Newer work on multi-brain-to-brain interfaces, such as the study by Jing et al. [12], has demonstrated real-time transfer of information between two subjects.

There have been a number of advances in BCI technology in recent years, including commercial ventures that seek to utilize BCI in novel ways. One such example is the company Neuralink, led by entrepreneur Elon Musk, which aims to achieve “a merger with artificial intelligence” [13]. There has been ample skepticism about Neuralink’s goals and claims, with some referring to the company’s public announcements and demonstrations as “neuroscience theater” [14]. Regardless of whether Neuralink’s stated goals are feasible in the near-term future, the existence of commercial ventures like Neuralink in the BCI field certainly signals new areas of active development and may shed some light on where the technology could be heading.

3 Prior Research into BCI Ethics

Prior research on this topic, apart from our own work [15], includes an earlier scoping review of the pertinent academic discussion [16] as well as analysis of print media reports on ethics of BCI [17]. The scoping review, conducted by Burwell and colleagues in 2016 and reported in a 2017 paper, included the selection of 42 academic articles about BCI that were published before 2016. They found that the majority of articles discussed more than one type of ethical issue associated with BCI use, which demonstrated that there is some cause for concern. Among the most common ethical concerns surrounding the use of BCI were user safety, justice, privacy and security, and balance of risks and benefits. Other, less commonly mentioned concerns include military applications, as well as enhancement and uses of BCI that promote controversial ideologies (e.g., transhumanism).

In their first-of-its-kind analysis of the academic literature on BCI ethics, Burwell and colleagues noted the frequency of concerns does not measure the moral or regulatory significance of the issue; ethical concerns that were mentioned once or rarely may be just as pressing as concerns mentioned with high frequency, or even more so. They also found that the articles that mentioned a vast range of issues failed to provide depth of discussion and may be less suitable for use by ethicists and policy makers as guidance to address specific social problems. While numerous concerns are identified in the literature, the authors found the debate up until the year 2016 to be relatively underdeveloped, and few of the analyzed articles made concrete proposals to address social and ethical issues. All in all, Burwell and colleagues conclude that, based on their results, more high-quality work, including empirical studies, should be conducted on this topic.

Kögel and colleagues [18] then conducted a scoping review of empirical BCI studies in the fields of medicine, psychology, and the social sciences. They sought to understand the empirical methods employed in BCI studies and how these relate to the ethical and social issues discussed, while also identifying relevant ethical and social concerns that were not being discussed. With a sample of 73 studies, Kögel and colleagues found that problems of usability and feasibility, such as user opinion and expectations and technical issues, were frequently addressed. However, potential problems of changes in self-image, user experience, and caregiver perspectives received relatively little attention. Overall, Kögel and colleagues [19] recognize a lack of BCI-centered ethical engagement and exploration among these studies.

A study by Gilbert and colleagues [1] explored how BCI is depicted in the English-speaking media, with emphasis on news outlets. The researchers analyzed 3873 articles by topic and tone. Five major topics were discussed: focus on the future, mention of ethics, sense of urgency, medical applications, and enhancement. As for the tone of print media articles, the researchers contrasted articles that provided positive depictions with those that had negative depictions and reservations about the technology. The authors found that 76.91% (n = 2979) of the 3873 total articles portrayed BCI positively, including 979 articles (25.27%) that had overly positive and enthusiastic narratives. In contrast, 1.6% of articles had a negative tone, with 0.5% of the total articles having an overly negative narrative. Only 2.7% of articles mentioned issues specific to ethical concerns. In terms of article content, 70.64% of total articles discussed BCI with respect to its future potential, 61.16% discussed the medical applications of BCIs, and 26.64% contained claims about BCI enabling enhancement.

Gilbert and colleagues’ analysis of the large sample of mass media articles reveals a disproportional bias in favor of a positive outlook on BCI technology. A positive representation of BCI can be a good thing if the purpose is to highlight the potential to help patients and bring attention to the struggles faced by the people who may benefit from the technology. However, there are adverse effects of positive bias on BCI in the media. The technology is far from perfect, and the disproportionate representation of positive articles could overshadow the risks of BCI, therefore not fairly representing the current capabilities and future potential of this technology within the media, intended for mass public consumption. Gilbert and colleagues suggested that the positive bias in the media misrepresents the state of the technology by disregarding ethical issues, risks, and shortcomings. They conclude that the media seems to lack objective information regarding risks and adverse effects of BCI and disregard the potential impacts of the technology on key topics such as agency, autonomy, responsibility, privacy, and justice.

4 Recent Trends in BCI Ethics

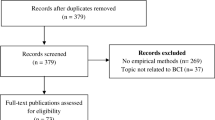

In order to elucidate the ethical issues inherent in the development of BCI technology, we revisit our previously published work [15] that began an in-depth scoping review of the ethics literature concerning BCI. This work has updated the mapping of the BCI ethics literature published since 2016, when the last review of this nature was conducted [16], and updated the coding strategy accordingly. Revisiting the academic literature around BCI ethics just 4 years after the publication of Burwell and colleagues’ first-of-its-kind review was necessary given the rapid growth of the technology in recent years; when reproducing Burwell et al.’s search methodology in 2020, we found that almost as many relevant academic papers discussing BCI ethics had been published in the years since 2016 (n = 34) as Burwell and colleagues had identified in all years prior to 2016 (n = 42). This indicates the body of academic literature on BCI ethics is rapidly growing, and an updated analysis is warranted. Previously, we reviewed a randomly selected statistically significant sample (n = 7, 20.6%) of the 34 academic papers addressing the ethical and social issues of BCI technology published between 2016 and 2020 following a systematic search with inclusion and exclusion criteria. In that paper, we established the continued utility of the coding schema developed by Burwell and colleagues that can continue to be used, with some modifications, to understand the landscape of the academic literature around the ethical, legal, and social issues (ELSI) inherent to BCI technology.

In this chapter, we outline the methodology and findings for the next phase of this work, which systematically categorizes the entire sample. The aim of this work is to collect and synthesize all of the pertinent academic scholarship into the ELSI of BCI technology in order to provide a foundation for future scholars, ethicists, and policy makers to understand the landscape of the relevant ELSI concepts and pave the way for assessing the need for regulatory action.

In this endeavor, we are guided by Blank’s [20] taxonomy of regulatory responses (See Table 1), which mirrors the familiar distinctions in moral philosophy between things that are (a) morally required (and thus should be made mandatory), (b) morally desirable and permissible (and thus should be encouraged), (c) morally neutral and permissible (and thus should be left to the unfettered operation of the market), (d) bad but nevertheless still morally permissible (and thus should be discouraged), and (e) morally impermissible (and thus should be prohibited). Blank’s work provides a specialized outline regarding Neuropolicy, particularly factors guiding regulatory responses of the government. We use this framework to contextualize our discussion of ELSI of BCI technology and how future regulations may be considered. In this work, we do not seek to make specific recommendations about the regulatory response that may be appropriate for the different ethical and social issues that arise from BCI technology, but instead hope to gather and synthesize the relevant salient facts and normative positions in order to propel the debate to a more mature state where policy action is more informed and feasible.

It should be noted that there are different levels of background regulation of BCI technology. For instance, research and development in BCI (both invasive and noninvasive) are currently encouraged via government incentives (e.g., DARPA-supported Brain Initiative and BrainGate in the USA) while certain forms of invasive BCI use are discouraged via the gate-keeper medical model and noninvasive forms are left to market forces. The issue of whether policy change is necessary should reflect an open public discussion where ethical and policy concerns are not only thoroughly mapped, but also ranked for importance (see, e.g., the study by Voarino and colleagues [21]).

5 Materials and Methods

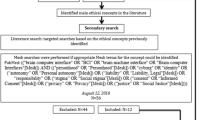

In this study, we have completed an expansion upon our previous research [15] into the academic discussion of BCI technology, which in turn followed from Burwell et al.’s 2016 study. While Burwell et al.’s 2016 study was a first-of-its-kind literature review of the ethics scholarship around BCI, our 2020 study adopted a similar methodology, but looked at the rapidly growing body of literature published in the time since Burwell et al.’s study was conducted. We [15] analyzed a randomly selected pilot sample (20.6%, n = 7) from the original pool of articles (n = 34) published since 2016 in order to identify recent trends in research and ethical debates regarding BCI technology and to assess the continued utility of the coding structure previously established by Burwell, et al. We now evaluate the entire sample. Thus, the same search information and criteria were used. The search was conducted in April of 2020 using PubMed and PhilPapers. Search queries included:

- PubMed: ((“brain computer interface” OR “BCI” OR “brain machine interface” OR “Brain-computer Interfaces”[Mesh]) AND ((“personhood” OR “Personhood”[Mesh]) OR “cyborg” OR “identity” OR (“autonomy” OR “Personal autonomy”[Mesh]) OR (“liability” OR “Liability, Legal”[Mesh]) OR “responsibility” OR (“stigma” OR “Social stigma”[Mesh]) OR (“consent” OR “Informed Consent”[Mesh]) OR (“privacy” OR “Privacy”[Mesh]) OR (“justice” OR “Social Justice”[Mesh]))).

- PhilPapers: ((brain-computer-interface|bci|brain-machine-interface)&(personhood|cyborg|identity|autonomy|legal|liability|responsibility|stigma|consent|privacy|justice)).
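The PubMed query above is a conjunction of two OR-groups: one for the technology terms and one for the ethics terms. As a minimal sketch of how such a query string could be assembled from term lists so that it stays reproducible, consider the following Python snippet. The names `TECHNOLOGY_TERMS`, `ETHICS_TERMS`, `or_group`, and `build_pubmed_query` are illustrative assumptions and not part of the reported search protocol; only the terms themselves are taken from the query as reported, written here with straight quotation marks.

```python
# Illustrative sketch only: helper and variable names are assumptions;
# the search terms are copied from the PubMed query reported above.

TECHNOLOGY_TERMS = [
    '"brain computer interface"',
    '"BCI"',
    '"brain machine interface"',
    '"Brain-computer Interfaces"[Mesh]',
]

# Each inner list is one concept; its free-text and MeSH variants are OR-ed.
ETHICS_TERMS = [
    ['"personhood"', '"Personhood"[Mesh]'],
    ['"cyborg"'],
    ['"identity"'],
    ['"autonomy"', '"Personal autonomy"[Mesh]'],
    ['"liability"', '"Liability, Legal"[Mesh]'],
    ['"responsibility"'],
    ['"stigma"', '"Social stigma"[Mesh]'],
    ['"consent"', '"Informed Consent"[Mesh]'],
    ['"privacy"', '"Privacy"[Mesh]'],
    ['"justice"', '"Social Justice"[Mesh]'],
]


def or_group(terms):
    """Join terms with OR; add parentheses only when there are several."""
    if len(terms) == 1:
        return terms[0]
    return "(" + " OR ".join(terms) + ")"


def build_pubmed_query():
    """Combine the technology group and the ethics group with AND."""
    technology = or_group(TECHNOLOGY_TERMS)
    ethics = or_group([or_group(concept) for concept in ETHICS_TERMS])
    return f"({technology} AND {ethics})"


if __name__ == "__main__":
    print(build_pubmed_query())
```

Keeping each concept as its own list makes it straightforward to add or drop a concept in a later iteration of the review without hand-editing nested parentheses.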

Following our prior work [15], we seek to identify and elaborate on changes in the academic literature on BCI ethics since 2016, particularly the new ethical discussions identified in the pilot sample. Further, we hope to better understand and quantify the preliminary trends observed within the literature using Burwell and colleagues’ ethical framework. As in our prior work [15], the slightly modified search yielded 34 articles since 2016, as compared with Burwell et al.’s original 42 articles. At the full text screening phase, one article was excluded as tangential, leaving a sample of 33 texts. The search followed Burwell et al.’s methodology and inclusion/exclusion criteria, slightly modified and expanded to include applications involving animals and other subjects, such as brain organoids.

As with the pilot sample, the abductive inference approach to qualitative research [22] was applied such that Burwell et al.’s framework was used to identify and map the overarching themes of ethical issues posed by BCIs (see Fig. 1). The map identifies eight specific ethical concerns that define the conceptual space of the ethics of BCI as a field of research. Only one of the ethical concerns refers to physical factors specifically: User Safety. Two are explicitly about psychological factors: Humanity/Personhood and Autonomy, while the remaining five focus on social factors: Stigma and Normality; Responsibility and Regulation; Research Ethics and Informed Consent; Privacy and Security; and Justice. While coding the texts with an eye toward any additional discussions of BCI-related ethical issues not identified in Burwell and colleagues’ framework, we found a recurring theme of Dependence on Technology among our sample. Thus, we decided to add this as an additional ethical concern under the overarching theme “psychological factors.”

Fig. 1 Overarching themes in BCI ethics. Note: Adapted from our prior work [15]
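The coding framework described above can be thought of as a small mapping from overarching themes to specific ethical concerns. The following Python sketch shows one way to represent it and to record the addition of Dependence on Technology. The dictionary layout and the names `BURWELL_FRAMEWORK`, `extend_framework`, `UPDATED_FRAMEWORK`, and `theme_of` are assumptions made for illustration; they do not describe any tool actually used in this study.

```python
# Illustrative sketch: the dictionary layout and function names are assumptions;
# theme and concern labels follow the coding framework described above.

BURWELL_FRAMEWORK = {
    "physical factors": ["User Safety"],
    "psychological factors": ["Humanity and Personhood", "Autonomy"],
    "social factors": [
        "Stigma and Normality",
        "Responsibility and Regulation",
        "Research Ethics and Informed Consent",
        "Privacy and Security",
        "Justice",
    ],
}


def extend_framework(framework, theme, concern):
    """Return a copy of the framework with one concern added under a theme."""
    extended = {name: list(concerns) for name, concerns in framework.items()}
    extended.setdefault(theme, []).append(concern)
    return extended


# The additional concern identified while coding the 2016-2020 sample.
UPDATED_FRAMEWORK = extend_framework(
    BURWELL_FRAMEWORK, "psychological factors", "Dependence on Technology"
)


def theme_of(concern, framework=UPDATED_FRAMEWORK):
    """Look up the overarching theme that a coded concern belongs to."""
    for theme, concerns in framework.items():
        if concern in concerns:
            return theme
    raise KeyError(concern)


if __name__ == "__main__":
    for theme, concerns in UPDATED_FRAMEWORK.items():
        print(f"{theme}: {', '.join(concerns)}")
```

Returning a copy rather than modifying the original mapping keeps the eight-concern framework available for comparison with the extended one.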

6 Results

Similar to our prior published work [15], analysis of the fully updated sample includes discussion of all eight original ethical categories identified by Burwell and colleagues [16], with a notable addition of Dependence on Technology as a growing ethical theme not seen in the BCI literature prior to 2016. Table 2 summarizes our findings in 2021 compared to Burwell et al.’s findings reported in their 2017 paper.

The most frequently discussed categories in our 2021 analysis were Autonomy (n = 26, 78.8%) and Responsibility and Regulation (n = 26, 78.8%), which appeared at equal frequencies in the sample. Discussions of autonomy were primarily concerned with the level of control those with BCI devices have over their actions and decisions. This idea is especially relevant to BCI design as the BCI developers are the ones who decide whether users should be able to override or ignore BCI-mediated behavioral or physiological responses. As one study [21] notes, “Even while performing an action, the users themselves might be uncertain about being the (only) agent of an action, with systems that make autonomous decisions additionally decreasing the users’ own autonomy.”

While most articles discussed benefits in terms of the increases in autonomy and independence gained from using a BCI [10, 17, 23, 24, 25], the potential for autonomy to be compromised was also discussed. For example, Hildt [10] mentions the possibility of taking the information gained from a BCI (or, in this case, a Brain-to-Brain Interface, BBI) from the individual and using it without their consent or knowledge:

Participants in BBI networks depend heavily on other network members and the input they provide. The role of recipients is to rely on the inputs received, to find out who are the most reliable senders, and to make decisions based on the inputs and past experiences. In this, a lot of uncertainty and guessing will be involved, especially as it will often be unclear where the input or information originally came from. For recipients in brain networks, individual or autonomous decision-making seems very difficult if not almost impossible [10].

These ideas were often tied into discussions of Responsibility and Regulation, which was largely concerned with who should be held responsible in the cases of adverse consequences of BCI-mediated actions. The issue at the heart of Responsibility and Regulation can be understood with the hypothetical question: if a negative action was to be carried out by someone using a BCI, would liability fall upon the user of the technology, the technology itself, or perhaps the developers of the technology? For instance, if someone were to use a BCI-controlled prosthetic arm to pull the trigger on a gun and kill another person in the process, is there an argument to be made that the manufacturer of the BCI-prosthetic bears some responsibility for the action? What if the user of the BCI claims that they did not intend to fire the gun, and it was a malfunction of the BCI device? Many researchers claim that our legal system is not yet equipped to deal with such a situation. In the sample of articles, there was contention as to not only the moral and legal challenges associated with determining accountability in these instances, but also as to how to differentiate between responsibility on the part of the user, the machine, or the BCI developer. Rainey and colleagues, for example, state that “on the one hand, having limited control of devices seems to suggest device users ought to be considered less responsible for their actions mediated via BCIs. On the other hand, it is predictable that devices will be only partially controllable” [19]. This then relates to issues of regulation, not only in the development, distribution, and use of BCI devices, but also in how to enforce legal accountability in situations such as these.

Privacy and Security (n = 21, 63.6%) was another commonly discussed issue. The nature of a BCI sending brain signals directly to a computer raises the possibility of hacking, and many sources acknowledged the potential of brain hacking, in which control of a BCI device or access to its data (including the user’s brain activity signals and the BCI’s interpretation of those signals) might be seized by an unauthorized party. This could lead to a host of potential harms and privacy concerns for the user, especially if personal information—including their mental state or truthfulness at a given time—may be accessed in this way. On a related note, there are notable concerns that EEG data might be used to identify users and gain access to sensitive information. This then introduces concerns as to how neural data should be “gathered, collected, and stored,” [26] in addition to “data ownership and privacy concerns” [26, p8]. Some articles [10, 24, 27] talked about the risks of extracting private information from people’s brains and using it without their knowledge or consent, which is a significant concern for BCI technologies. Müller & Rotter connected this issue to User Safety, arguing that the increased fidelity of BCI data yields inherently more sensitive data, and that the “impact of an unintended manipulation of such brain data, or of the control policy applied to them, could be potentially harmful to the patient or his/her environment” [24].

The theme of User Safety (n = 19, 57.6%) tied into this discussion, as concerns for the psychological harms that might arise from brain hacking and privacy breaches were discussed on both an individual and societal level; Müller and Rotter’s warning about unintended manipulation of brain data [24] is one example. Physical harms were also mentioned as a point of ethical contention under this category, as detrimental consequences of BCI malfunctions and risks associated with implantation were taken into account. There was also discussion of the harms that might befall others aside from the BCI user, as in cases of adverse behavioral outcomes resulting from BCI malfunction or user mistakes. In these scenarios, as one source claims, “BCI-mediated action that deviates from standard norms or that leads to some kind of harm ought to be accommodated” [19]. Thus, both psychological and physical harm were explained as serious possibilities that need to be considered [10, 19, 24, 25]. One article discussed the impacts of harm on the results of a BCI study, stressing the importance of stopping a clinical trial if the risks to the individual participants begin to outweigh the potential benefits to science [23].

Research Ethics and Informed Consent (n = 19, 57.6%) were addressed at the same frequency as User Safety. Discussions surrounding this topic were primarily about whether subjects had an in-depth and comprehensive understanding of all associated risks, including the potential for psychological, social, and physical adverse effects involved. Indeed, as Yuste and colleagues point out, current consent practices may become problematic due to their “focus only on the physical risks of surgery, rather than the possible effects of a device on mood, personality or sense of self” [28]. Another point of concern was whether clinicians and researchers were providing an accurate representation of the limitations of BCI, taking care to avoid overhyping its potential among vulnerable or desperate populations. Few mentioned the particular challenges in obtaining informed consent from those in locked-in states. The main consensus among the ethicists that discussed this theme was that it is very important to obtain informed consent and make sure that the subjects are aware of all possible implications of BCI technology before consenting to its use. Additionally, some ethicists warned against the possibility of exploiting potentially vulnerable BCI research subjects. As Klein and Higger note: “the inability to communicate a desire to participate or decline participation in a research trial—when the capacity to form and maintain that desire is otherwise intact—undermines the practice of informed consent. Individuals cannot give an informed consent for research if their autonomous choices cannot be understood by others” [23].

Humanity and Personhood (n = 15, 45.5%) was the next most commonly discussed category. The largest consideration within this topic was the potential for changes to user identity and “sense of self” resulting from BCI use, contributing to a “pressing need to explore and address the potential effects of BCIs as they may impinge on concepts of self, control and identity” [17]. Many sources describe how users grapple with changes to their self-image following therapeutic use of BCI technology, both in terms of their disorder and associated limitations, and the extent to which the BCI technology is a part of them. Some sources also cited changes to personality as a risk associated with BCI technology, a concern arising from the finding that “some people receiving deep-brain stimulation...have reported feeling an altered sense of agency and identity” [28].

This is an important concern since BCIs could impact one’s sense of self. In one specific study of BCI technology used in patients with epilepsy, there were a variety of resulting perspectives on sense of self, with some individuals saying that it made them feel more confident and independent, while others felt like they were not themselves anymore. One patient expressed that the BCI was an “…extension of herself and fused with part of her body…” [17]. Other articles more generally discussed the possibility of the sense of self changing and the ways BCI technology could contribute to this. Sample and colleagues categorized three ways in which one’s sense of self and identity could change: altering the users’ interpersonal and communicative life; altering their connection to legal capacity; and by way of language associated with societal expectations of disability [25]. Meanwhile, Müller and Rotter argue that BCI technology constitutes a fusion of human and machine, stating that “the direct implantation of silicon into the brain constitutes an entirely new form of mechanization of the self… [T]he new union of man and machine is bound to confront us with entirely new challenges as well” [24].

Justice (n = 13, 39.4%) was less frequently discussed among the sample, with the central concern being the potential for the technology to exacerbate existing inequalities, both through inherent design flaws and through distribution processes and barriers to access. There was also some discussion of the potential for the technology to restore basic human rights among populations experiencing debilitating diseases and disorders, prompting the need for fair and ethical advancement of BCI technology. Two texts specifically discussed healthcare coverage of BCI access, noting how “unequal access to BCIs because of personal variations in BCI proficiency might raise questions of healthcare justice” [29]. Another concern was that “through algorithmic discrimination, existing inequalities might be reinforced” [29], disproportionately affecting disadvantaged populations. A further concern related to inequality and injustice arose within BCI research itself: these discussions often turned on questions of when trials would end and whether participants would be permitted to keep the BCI technologies afterward [23].

Stigma and Normality (n = 12, 36.4%) was discussed to nearly the same degree as Justice. These conversations were largely centered around concerns that visible BCIs might make their users targets for discrimination, leading to biased interactions. This could contribute to social isolation and exclusion among these populations. One source, for example, theorizes that technology that “confers disability group identity on the user might validate or otherwise reinforce harmful stigmas that often accompany that disability group identity and isolate, dominate, devalue, and generally oppress disabled people” [30]. Thus, stigma was mainly discussed from the perspective of the device itself carrying a negative stigma and being what stigmatizes the individual [23]. However, it was also mentioned that universalizing the technology, instead of targeting it only toward a group that is considered “disabled,” could reduce or eliminate stigma [25].

Surprisingly, Enhancement (n = 7, 21.2%) was discussed at a greater frequency than Military Applications, diverging from Burwell and colleagues’ sample [16]. Sources mentioned a potential “extended mind” and augmentation capabilities, one going so far as to suggest a future in which “powerful computational systems linked directly to people’s brains aid their interactions with the world such that their mental and physical abilities are greatly enhanced” [28].

Dependence on Technology (n = 6, 18.2%) was a category unique to our sample that seems to have emerged in the ethical discussion of BCI technology since 2016. These discussions were dominated by concerns that BCI users might become dependent on their devices and fail to recognize potential errors in the machine’s decision-making capabilities. Gilbert and colleagues explain that “the ethical problem with over-reliance is that the device ends up supplanting agency rather than supplementing it” [31], which relates back to challenges associated with autonomy. Alternatively, some might become dependent on BCI technology that they are unable to continue using beyond study participation.

Consistent with the findings of Burwell and colleagues [16], Military Applications (n = 3, 9.1%) was a relatively infrequent consideration within our sample, with sources briefly touching on the idea of the military as a relevant target population. Additionally, in our sample, Other Ethical Issues (n = 3, 9.1%) were similarly infrequent, briefly citing the potential for “therapeutic misconception and unrealistic expectations” [32], issues with advance directives among BCI users, and the ethical implications of slowing technology advancement.

7 Discussion

While there have been notable advancements in BCI and BBI technology and the body of literature on the ethical aspects of BCI technology has grown substantially since the original publication of Burwell and colleagues’ research, these findings suggest that the original taxonomy developed by Burwell and colleagues remains a useful framework for understanding the body of literature, specifically on the social factors of the ethics of BCI. Ethicists can use this taxonomy—with some slight modifications, which we outline below—to understand how the body of literature on the ethics of BCI is grappling with ethical issues arising from the applications of this rapidly advancing technology. Articles published since 2016 still mostly conform to the taxonomy and can be categorized using it in future iterations of the scoping review methodology (Fig. 2).

There are, however, some areas within the growing body of literature on BCI ethics that have arisen since the original research was published and that need to be incorporated into the taxonomy. We recommend the following modifications to the conceptual mapping outlined in Fig. 1. First, the discussion of the physical (e.g., harms to test animals) and psychological (e.g., radical psychological distress) effects of BCI technology should be expanded. The publicly available information on commercial BCI endeavors frequently mentions experiments with increasingly complex and even sentient animals, such as Neuralink’s demonstration of their technology on live pigs [14]. The lack of ethical scrutiny of these studies is a significant cause for concern [33]. Thus, ethical discussions should be expanded to include public awareness of private industry research into BCI using animals. Second, while the risks of physical harm from BCI are fairly well understood and covered in the literature, further research is needed to understand emerging psychological factors in BCI ethics, examining how human–AI intelligence symbiosis, brain-to-brain networking, and other novel applications of the technology may affect psychological well-being in humans. For instance, in the interview study by Gilbert and colleagues, one patient mentioned that “she was unable to manage the information load returned by the device,” which led to radical psychological distress [17].

Going forward, it is imperative to expand on the connection between ethics and policy in discussions of BCI technology and to conduct more empirical studies that will help separate non-urgent policy concerns, which are based on theoretical effects of BCI, from the more urgent concerns based on the current state of the science of BCI technology. In this, we echo Voarino and colleagues [21] in stating that we must advance the discussion from merely mapping ethical issues to an informed debate that explains which ethical concerns are high priority, which issues are moderately important, and which constitute a low-priority discussion of possible future developments.

That said, it is important to make sure that the ethics literature keeps pace with engineering advances and that policy does not lag behind. In that vein, following Dubljević [34], we propose that the key ethical question for future work on BCI ethics is:

What would be the most legitimate public policies for regulating the development and use of various BCI neurotechnologies in a reasonably just, though not perfect, democratic society?

Additionally, ethicists need to distinguish between ethical questions regarding BCI technology that engineers and social scientists can answer for policy makers, versus those that cannot be resolved even with extensive research funding [35]. Therefore, following Dubljević and colleagues [36], we posit that these four additional questions need to be answered to ensure that discussions of BCI technology are realistic:

1. What are the criteria for assessing the relevance of BCI cases to be discussed?

2. What are the relevant policy options for targeted regulation (e.g., of research, manufacture, use)?

3. What are the relevant external considerations for policy options (e.g., international treaties)?

4. What are the foreseeable future challenges that public policy might have to contend with?

By providing answers to such questions (and alternate or additional guiding questions proposed by others), ethicists can systematically analyze and rank issues in BCI technology based on an as-yet-undetermined measure of importance to society. While we have not yet completed such analyses, we do provide a blueprint above, based on conceptual mapping and newly emerging evidence, of how this can be done.

8 Conclusion

This chapter builds on, and updates, previous research conducted by Burwell and colleagues [16] to review relevant literature published since 2016 on the ethics of BCI. Although their article is now somewhat outdated in terms of specific references to and details from the relevant literature, the thematic framework, and the map we created—with the eight specific categories that it provides—and the nuanced discussion of overarching social factors have withstood the test of time and remain a valuable tool to scope BCI ethics as an area of research. A growing body of literature focuses on each of the eight categories, contributing to further clarification of existing problems concerning BCI technology. BCI ethics is still in its early stages, and more work needs to be done to provide solutions for how these social and ethical issues should be addressed.

Despite seeing evidence that these eight categories continue to be significant in more recent research, it is worth noting that the distribution of the eight categories was different in recent years, compared with the distribution previously identified by Burwell and colleagues [16] in the literature published before 2016. For instance, in the full sample of articles, we found that the two categories discussed most frequently were Autonomy (n = 26, 78.8%) and Responsibility and Regulation (n = 26, 78.8%), with Privacy and Security being discussed in 63.6% (n = 21) of articles, and User Safety and Research Ethics and Informed Consent each discussed in 19 of the 33 articles analyzed (57.6%). However, despite Responsibility and Regulation being mentioned in 26 of the 33 papers (78.8%), it was not frequently discussed at length. Three of the four most frequently discussed categories identified in this distribution were not among Burwell and colleagues’ top four most frequently mentioned (see Table 2). It seems that while the eight issues mapped are still ethically significant with regard to BCI research, the emphasis among them may be shifting toward concerns of psychological impact.
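The percentages quoted above follow directly from the category counts and the sample size of 33 articles. As a minimal sketch of that arithmetic (not part of the original analysis), the following Python snippet recomputes the shares from the counts reported in this chapter; the names `category_counts`, `share_of_sample`, and `ranked_shares` are hypothetical and introduced only for illustration.

```python
# Category counts as reported in this chapter for the 33-article sample.
# The variable and function names are illustrative assumptions.
SAMPLE_SIZE = 33

category_counts = {
    "Autonomy": 26,
    "Responsibility and Regulation": 26,
    "Privacy and Security": 21,
    "User Safety": 19,
    "Research Ethics and Informed Consent": 19,
    "Justice": 13,
    "Stigma and Normality": 12,
    "Enhancement": 7,
    "Dependence on Technology": 6,
    "Military Applications": 3,
    "Other Ethical Issues": 3,
}


def share_of_sample(count, sample_size=SAMPLE_SIZE):
    """Return the percentage of articles discussing a category, to one decimal."""
    return round(100 * count / sample_size, 1)


def ranked_shares(counts, sample_size=SAMPLE_SIZE):
    """Return (category, count, percent) tuples sorted by descending count."""
    ordered = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    return [(name, n, share_of_sample(n, sample_size)) for name, n in ordered]


if __name__ == "__main__":
    for name, n, pct in ranked_shares(category_counts):
        print(f"{name}: n = {n}, {pct}%")
```

Because the sort is stable, categories with equal counts keep the order in which they are listed, so the ranking among ties carries no meaning.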

On that note, psychological effects (e.g., radical psychological distress) need to be carefully scrutinized in future research on BCI ethics. Additionally, one aspect that was not explicitly captured in the original thematic framework or the map we reconstructed from it is physical harm to animals used in BCI experimentation [33]. Finally, more detailed proposals for BCI policy have not yet become a frequent point of discussion in the relevant literature on BCI ethics, and this should be addressed in future work. We have provided guiding questions that will help ethicists and policy makers grapple with the most important issues first.

References

1. Gilbert F, Pham C, Viana J, Gillam V. Increasing brain-computer interface media depictions: pressing ethical concerns. Brain Computer Interfaces. 2019;6(3):49–70.

2. Wakefield J. Elon Musk’s Neuralink ‘shows monkey playing pong with mind’. BBC News. 2021, April 9. https://www.bbc.com/news/technology-56688812.

3. Etherington D. Take a closer look at Elon Musk’s Neuralink Surgical Robot. TechCrunch. 2020, August 28. https://techcrunch.com/2020/08/28/take-a-closer-look-at-elon-musks-neuralink-surgical-robot/.

4. Childers N. The video game Helmet that can hack your brain. Vice. 2013, June 6. https://www.vice.com/en/article/d77bmx/the-video-game-helmet-that-can-hack-your-brain.

5. Grush L. Those ‘mind-reading’ EEG headsets definitely can’t read your thoughts. The Verge. 2016, January 12. https://www.theverge.com/2016/1/12/10754436/commercial-eeg-headsets-video-games-mind-control-technology.

6. Li G, Zhang D. Brain-computer interface controlled cyborg: establishing a functional information transfer pathway from human brain to cockroach brain. PLoS One. 2016;11(3):e0150667.

7. Aricò P, Borghini G, Di Flumeri G, Sciaraffa N, Babiloni F. Passive BCI beyond the lab: current trends and future directions. Physiol Meas. 2018;39(8):08TR02.

8. Shih J, et al. Brain-computer interfaces in medicine. Mayo Clin Proc. 2012;87(3):268–79.

9. Trimper JB, et al. When ‘I’ Becomes ‘we’: ethical implications of emerging brain-to-brain interfacing technologies. Front Neuroeng. 2014;7:4. www.frontiersin.org/articles/10.3389/fneng.2014.00004/full

10. Hildt E. Multi-person brain-to-brain interfaces: ethical issues. Front Neurosci. 2019;13:1177. www.frontiersin.org/articles/10.3389/fnins.2019.01177/full

11. Schmitz S. The communicative phenomenon of brain-computer interfaces. In: Mattering feminism, science, and materialism. New York/London: New York University Press; 2016. p. 140–58.

12. Coin A, Dubljević V. The authenticity of machine-augmented human intelligence: therapy, enhancement, and the extended mind. Neuroethics. 2020;14(2):283–90. https://doi.org/10.1007/s12152-020-09453-5.

13. Marsh S. Neurotechnology, Elon Musk and the goal of human enhancement. The Guardian. 2018, January 1. https://www.theguardian.com/technology/2018/jan/01/elon-musk-neurotechnology-human-enhancement-brain-computer-interfaces.

14. Regalado A. Elon Musk’s Neuralink is neuroscience theater. In MIT Technology Review. https://www.technologyreview.com/2020/08/30/1007786/elon-musks-neuralink-demo-update-neuroscience-theater/. Accessed 30 Aug 2020.

15. Coin A, Mulder M, Dubljević V. Ethical aspects of BCI technology: what is the state of the art? Philosophies. 2020;5(4):31. https://doi.org/10.3390/philosophies5040031.

16. Burwell S, et al. Ethical aspects of brain computer interfaces: a scoping review. BMC Med Ethics. 2017;18:60.

17. Gilbert F, Cook M, O’Brien T, Illes J. Embodiment and estrangement: results from a first-in-human “intelligent BCI” trial. Sci Eng Ethics. 2019;25(1):83–96.

18. Kögel J, Schmid JR, Jox RJ, et al. Using brain-computer interfaces: a scoping review of studies employing social research methods. BMC Med Ethics. 2019;20:18. https://doi.org/10.1186/s12910-019-0354-1.

19. Rainey S, Maslen H, Savulescu J. When thinking is doing: responsibility for BCI-mediated action. AJOB Neurosci. 2020;11(1):46–58.

20. Blank R. Globalization: pluralist concerns and contexts. In: Giordano J, Gordijn B, editors. Scientific and philosophical perspectives in neuroethics. Cambridge: Cambridge University Press; 2010. p. 321–42.

21. Voarino N, Dubljević V, Racine E. tDCS for memory enhancement: a critical analysis of the speculative aspects of ethical issues. Front Hum Neurosci. 2017;10:678. https://doi.org/10.3389/fnhum.2016.00678.

22. Timmermans S, Tavory I. Theory construction in qualitative research: from grounded theory to abductive analysis. Sociol Theory. 2012;30:167–86.

23. Klein E, Higger M. Ethical considerations in ending exploratory brain–computer interface research studies in locked-in syndrome. Camb Q Healthc Ethics. 2018;27(4):660–74.

24. Müller O, Rotter S. Neurotechnology: current developments and ethical issues. Front Syst Neurosci. 2017;11:93. https://doi.org/10.3389/fnsys.2017.00093.

25. Sample M, Aunos M, Blain-Moraes S, Bublitz C, Chandler JA, Falk TH, et al. Brain–computer interfaces and personhood: interdisciplinary deliberations on neural technology. J Neural Eng. 2019;16(6):063001.

26. Naufel S, Klein E. Brain-computer interface (BCI) researcher perspectives on neural data ownership and privacy. J Neural Eng. 2020;17(1):016039.

27. Agarwal A, Dowsley R, McKinney ND, Wu D, Lin CT, De Cock M, Nascimento AC. Protecting privacy of users in brain-computer interface applications. IEEE Trans Neural Syst Rehabil Eng. 2019;27(8):1546–55.

28. Yuste R, Goering S, Arcas BA, Bi G, Carmena JM, Carter A, Fins JJ, Friesen P, Gallant J, Huggins JE, Illes J, Kellmeyer P, Klein E, Marblestone A, Mitchell C, Parens E, Pham M, Rubel A, Sadato N, et al. Four ethical priorities for neurotechnologies and AI. Nature. 2017;551:159–63.

29. Wolkenstein A, Jox RJ, Friedrich O. Brain-computer interfaces: lessons to be learned from the ethics of algorithms. Camb Q Healthc Ethics. 2018;27(4):635–46.

30. Stramondo JA. The distinction between curative and assistive technology. Sci Eng Ethics. 2019;25(4):1125–45.

31. Gilbert F, O’Brien T, Cook M. The effects of closed-loop brain implants on autonomy and deliberation: what are the risks of being kept in the loop? Camb Q Healthc Ethics. 2018;27(2):316–25.

32. Klein E. Informed consent in implantable BCI research: identifying risks and exploring meaning. Sci Eng Ethics. 2016;22(5):1299–317.

33. Johnson SL, Fenton A, Shriver A, editors. Neuroethics and nonhuman animals. Berlin/Heidelberg: Springer; 2020.

34. Dubljević V. Neuroethics, justice and autonomy: public reason in the cognitive enhancement debate, vol. 19. Springer; 2019.

35. Parens E, Johnston J. Does it make sense to speak of neuroethics? Three problems with keying ethics to hot new science and technology. EMBO Rep. 2007;8(S1):S61–4.

36. Dubljević V, Trettenbach K, Ranisch R. The socio-political roles of neuroethics and the case of Klotho. AJOB Neurosci. 2021;13(1):10–22.

Ethics declarations

No funding (industry or otherwise) was received for this work.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Lang, A., Coin, A., Dubljević, V. (2023). A Scoping Review of the Academic Literature on BCI Ethics. In: Dubljević, V., Coin, A. (eds) Policy, Identity, and Neurotechnology. Advances in Neuroethics. Springer, Cham. https://doi.org/10.1007/978-3-031-26801-4_7

DOI: https://doi.org/10.1007/978-3-031-26801-4_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26800-7

Online ISBN: 978-3-031-26801-4

eBook Packages: Medicine (R0)