Abstract

Gait recognition aims to identify a subject by the way she/he walks without alerting the subject, and it has drawn increasing attention. Gait can now be represented using various data modalities, such as RGB, skeleton, depth, infrared, acceleration, and gyroscope data, each of which has different advantages depending on the application scenario. In this paper, we present a comprehensive survey of recent progress in gait recognition methods, organized by the type of input data modality. Specifically, we review commonly used gait datasets with different data modalities, followed by effective gait recognition methods for both single and multiple data modalities. We also present comparative results of effective gait recognition approaches, together with insightful observations and discussions.

1 Introduction

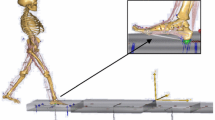

There is a growing demand for human identification in many applications (e.g., secure access control and criminal identification). Biometrics such as face, fingerprint, iris, and palmprint have been used for human identification for years. Gait recognition aims to discriminate a subject by the way she/he walks. Compared with other biometrics, the key advantage of gait recognition is its unobtrusiveness, i.e., the gait video can be captured from a distance without disturbing the subject. Many gait recognition methods have been proposed, and deep learning based gait recognition methods have made great progress in recent years. The general gait recognition framework can be found in Fig. 1. Most existing gait recognition methods use RGB videos as input, as reviewed in [23, 27, 37], because such videos are easy to obtain. We have also witnessed the emergence of works [5, 22, 29, 44, 47] that use other data modalities for gait recognition, such as skeletons, depth images, infrared data, acceleration and gyroscope data. This is mainly due to the development of various affordable sensors, as well as the advantages that different modalities offer in different application scenarios. Figure 2 illustrates different modalities for gait recognition.

Generally, gait data can be roughly divided into two categories: vision-based gait data and non-vision gait data. The former are visually “intuitive” representations of gait, such as RGB images, silhouettes, 2D/3D skeletons [5], and 3D meshes [44]. Vision-based gait data currently play an important and effective role in gait recognition. Among them, RGB video is the most commonly used data modality for gait recognition and has been widely used in surveillance and monitoring systems. Meanwhile, inertial gait data modalities, such as acceleration and gyroscope data, are non-vision gait data, as they are visually non-intuitive representations of human gait. Non-vision gait data modalities can also be used in scenarios that require protecting the privacy of subjects. Figure 2 shows several gait data modalities, and more details of the different modalities are discussed in Sect. 2.

Compared with other biometrics, gait recognition faces many challenges because the data are captured at a long distance and without the cooperation of the subject. Many methods have been proposed to deal with these challenges for robust gait recognition. So far, many gait recognition surveys have been published, such as [23, 25, 27, 37]. However, most recent surveys focus mainly on vision-based deep learning, and non-vision gait recognition has drawn little attention. This paper aims to conduct a comprehensive analysis of the development of gait recognition, including gait datasets, recent gait recognition approaches, and their performance and trends. The main contributions of this work can be summarized as follows.

(1) We comprehensively review gait recognition from the perspective of various data modalities, including RGB, depth, skeleton, infrared sequence, acceleration, and gyroscope data.

(2) We review the advanced gait databases with various data modalities, and provide comparisons and analyses of the existing gait datasets, including the scale of the database, viewing angle, walking speed and other challenges.

(3) We provide comparisons of the advanced deep learning based methods and their performance with brief summaries.

The rest of this paper is organized as follows. Section 2 reviews gait recognition databases with different data modalities. Section 3 introduces related gait recognition methods and their performance. Finally, conclusions are given in Sect. 4.

2 Datasets for Gait Recognition

In this section, we first introduce commonly-used gait datasets with different data modalities: vision-based and non-vision based gait data. Then, we analyze the differences among those gait databases, including the number of subjects, viewing angles, sequences, and the challenges.

2.1 Vision-Based Gait Datasets

Vision-based gait data modalities mainly include RGB images/videos, infrared images, depth images, and skeleton data. So far, RGB image/video data is the most popular modality for gait recognition. The CMU MoBo [8] gait database is an early gait database, which mainly studies the influence of walking speed, carried objects, and viewing angles on gait recognition. The CASIA series of gait databases provides various data types. Specifically, CASIA A [36] was released first, with 20 subjects from 3 different viewing angles, while CASIA B [39] contains 124 subjects with different clothes, carrying conditions and 11 viewing angles, and is one of the most commonly used gait databases. The CASIA C [31] database contains infrared image sequences. Besides, the OU-ISIR Speed [34], OU-ISIR Cloth [11], and OU-ISIR MV [21] gait databases provide gait silhouettes with different walking speeds, clothes and viewing angles.

Unlike early gait databases, OU-LP [13], OU-LP Age [38], OU-MVLP [30], OU-LP Bag [35], and OU-MVLP Pose [2] focus on large-scale gait samples, with 4007, 63846, 10307, 62528 and 10307 subjects, respectively. The scale of gait databases has increased with the development of deep learning technologies. The GREW dataset [45] consists of 26000 identities and 128000 sequences with rich attributes for unconstrained gait recognition. The Gait3D database [44] contains 4000 subjects and over 25000 sequences extracted from 39 cameras in an unconstrained indoor scene. It provides 3D Skinned Multi-Person Linear (SMPL) models recovered from video frames, which offer dense 3D information on body shape, viewpoint, and dynamics. Figure 3 shows several examples from different gait benchmark databases.

2.2 Non-vision Gait Databases

Non-vision gait recognition can be performed with sensors in the floor, in the shoes or on the body. Generally, non-vision gait datasets mainly focus on inertial data. Inertial sensors, such as accelerometers and gyroscopes, are used to record the inertial data generated by the movement of a walking subject. Accelerometers and gyroscopes measure inertial dynamic information in three directions, namely, along the X, Y and Z axes. When the sensors are built into a smartphone, the accelerations in the three directions reflect the changes in the smartphone’s linear velocity in 3D space and thus the movement of the smartphone user. The whuGAIT datasets [47] provide inertial gait data collected in the wild, without the limitations of walking on specific roads or at specific speeds. Alobaidi et al. [1] provide a real-world gait database with 44 subjects recorded over 7–10 days, including normal walking, fast walking, and walking down and up stairs for each subject.
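As a minimal sketch of how such tri-axial recordings might be prepared for a recognizer, the following Python snippet stacks accelerometer and gyroscope readings into a six-channel signal and cuts it into fixed-length overlapping windows. The function name, the window length and the step size are illustrative assumptions and are not taken from any of the cited datasets.

```python
import numpy as np

def window_inertial(acc, gyr, win_len=128, step=64):
    """Cut synchronized tri-axial signals into overlapping windows.

    acc, gyr: arrays of shape (T, 3) holding X, Y, Z readings.
    Returns an array of shape (num_windows, win_len, 6).
    win_len and step are hypothetical values chosen for illustration.
    """
    acc = np.asarray(acc, dtype=np.float64)
    gyr = np.asarray(gyr, dtype=np.float64)
    if acc.shape != gyr.shape or acc.ndim != 2 or acc.shape[1] != 3:
        raise ValueError("acc and gyr must both have shape (T, 3)")
    # Channels 0-2 are acceleration, channels 3-5 are angular velocity.
    signal = np.concatenate([acc, gyr], axis=1)
    starts = range(0, signal.shape[0] - win_len + 1, step)
    windows = [signal[s:s + win_len] for s in starts]
    if not windows:
        # Recording shorter than one window: return an empty batch.
        return np.empty((0, win_len, 6))
    return np.stack(windows)
```

Each window can then be fed to a feature extractor as one fixed-size sample; recordings shorter than one window yield an empty batch rather than an error.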

Table 1 shows some benchmark datasets with various data modalities for gait recognition (R: RGB, S: Skeleton, D: Depth, IR: Infrared, Ac: Acceleration, Gyr: Gyroscope, 3DS: 3D SMPL). It can be observed that, with the development of deep learning technology, the scale of gait databases and the variety of their data modalities have increased rapidly. Real-world large-scale gait recognition databases have drawn much attention.

3 Approaches for Gait Recognition

Many gait recognition approaches based on hand-crafted features, such as the GEI-based subspace projection method [16] and the trajectory-based method [3], were designed for video-based gait recognition. Recently, with the great progress of deep learning techniques, various deep learning architectures with strong representation capability and superior performance have been proposed for gait recognition.

Vision-based gait recognition approaches can be roughly classified into two categories: model-based approaches and appearance-based approaches. Model-based approaches model the human body structure and extract gait features by fitting the model to the observed body data for recognition. Appearance-based approaches extract effective features directly from dynamic gait images. Most current appearance-based approaches use silhouettes extracted from a video sequence to represent gait. Non-vision based gait recognition approaches mainly use inertia-based gait data as input.

3.1 Appearance-Based Approaches

RGB data contain rich appearance information about the captured scene and can be captured easily. Gait recognition from RGB data has been studied for years but still faces many challenges, owing to variations in backgrounds, viewpoints, subject scales and illumination conditions. Besides, RGB videos generally have large data sizes, leading to high computational costs when modeling the spatio-temporal context for gait recognition. Temporal modeling can be divided into single-image, sequence-based, and set-based approaches. Early approaches encode a gait cycle into a single image, e.g., the Gait Energy Image (GEI) [9]. These representations are easy to compute but lose much of the temporal information. Sequence-based approaches process each frame of the sequence as a separate input, and 3D CNNs [12, 17] or LSTMs [18] are used to model the temporal information. They preserve more spatial and temporal information, at a higher computational cost. The set-based approach [4] treats the frames as an unordered set, so it accepts shuffled inputs and requires less computation. GaitPart [6] uses a temporal module that focuses on capturing short-range temporal features. Lin et al. [19] conduct gait recognition via global-local feature representation and local temporal aggregation.
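To make the single-image representation concrete, the sketch below computes a GEI as the pixel-wise mean of a stack of binary silhouettes. It assumes the silhouettes have already been segmented, size-normalized and centered; the function name is hypothetical and the preprocessing steps are not shown.

```python
import numpy as np

def gait_energy_image(silhouettes):
    """Average a stack of aligned binary silhouettes into one GEI.

    silhouettes: array of shape (N, H, W) with values in {0, 1},
    assumed to be size-normalized and centered beforehand.
    Returns an (H, W) array with values in [0, 1].
    """
    frames = np.asarray(silhouettes, dtype=np.float64)
    if frames.ndim != 3 or frames.shape[0] == 0:
        raise ValueError("expected a non-empty (N, H, W) stack")
    # Pixel-wise mean over the frames of the gait cycle.
    return frames.mean(axis=0)
```

Because the mean does not depend on frame order, reversing or shuffling the frames gives the same image, which illustrates why this representation discards temporal information.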

For similarity comparison, current deep learning gait recognition methods often rely on effective loss functions such as contrastive loss, triplet loss [4] and angle center loss [41].
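As an illustration of one of these losses, the following sketch evaluates the basic triplet loss, max(d(a, p) - d(a, n) + m, 0), on embedding vectors with Euclidean distance. It shows the plain formulation only; the margin value is an assumption, and the batch sampling strategies used by specific methods such as [4] are not reproduced here.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Mean triplet loss over a batch of embeddings.

    anchor, positive, negative: arrays of shape (B, D) or (D,).
    positive shares the anchor's identity, negative does not.
    margin=0.2 is an illustrative value, not taken from the paper.
    """
    anchor = np.asarray(anchor, dtype=np.float64)
    positive = np.asarray(positive, dtype=np.float64)
    negative = np.asarray(negative, dtype=np.float64)
    d_pos = np.linalg.norm(anchor - positive, axis=-1)
    d_neg = np.linalg.norm(anchor - negative, axis=-1)
    # Penalize triplets where the negative is not farther than the
    # positive by at least the margin.
    return float(np.mean(np.maximum(d_pos - d_neg + margin, 0.0)))
```

The loss is zero once every negative sample is farther from the anchor than the positive sample by at least the margin, so training pulls sequences of the same subject together and pushes different subjects apart.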

3.2 Model-Based Approaches

In recent years, model-based gait recognition using 2D/3D skeletons has been widely studied through view-invariant modeling and analysis. 2D poses can be obtained by human pose estimation, while 3D dynamic gait features can be extracted from depth data for gait recognition [14]. Liao et al. [18] present a pose-based approach for gait recognition with handcrafted pose features. Teepe et al. [32] use a human pose estimation method to obtain skeleton poses directly from RGB images and then propose the GaitGraph network, which combines skeleton poses with a Graph Convolutional Network for gait recognition.
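The idea of applying a graph convolution to skeleton joints can be sketched as follows. This is a generic single layer with a symmetrically normalized adjacency matrix, not the GaitGraph architecture of [32]; the joint indices, bone list and weight shapes are hypothetical.

```python
import numpy as np

def normalized_adjacency(num_joints, bones):
    """Build D^{-1/2} (A + I) D^{-1/2} for an undirected skeleton graph.

    bones: iterable of (i, j) joint index pairs connected by a bone.
    """
    a = np.eye(num_joints)  # self-loops
    for i, j in bones:
        a[i, j] = a[j, i] = 1.0
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def graph_conv(x, a_hat, weight):
    """One graph convolution layer with ReLU: max(A_hat X W, 0).

    x: (num_joints, in_dim) joint features, e.g. 2D coordinates.
    weight: (in_dim, out_dim) projection matrix (learned in practice).
    """
    return np.maximum(a_hat @ x @ weight, 0.0)
```

Each output row mixes the features of a joint with those of its skeletal neighbors, so stacking such layers lets information propagate along the body structure.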

In Table 2, we compare only several current appearance-based and model-based methods due to space limitations. We can observe that appearance-based gait recognition achieves better performance than model-based approaches. Model-based gait recognition approaches such as [32] have made great progress compared with early model-based gait recognition methods.

3.3 Inertia-Based Approaches

In the past decade, many inertia-based gait recognition methods have been developed [7, 15, 28, 33, 42]. Most of them require inertial sensors to be fastened to specific joints of the human body to obtain gait information, which makes gait data collection inconvenient. Advanced inertial sensors, including accelerometers and gyroscopes, are now integrated into smartphones and can be used to collect inertial gait data [47]. Compared with traditional methods, which often require a person to walk along a specified road and/or at a normal walking speed, Zou et al. [47] collect inertial gait data in unconstrained conditions. To obtain good performance, deep convolutional and recurrent neural networks are used for robust inertial gait feature representation. Non-vision based recognition is becoming more and more important because it better protects people’s privacy.

3.4 Multi-modalities Gait Recognition

Traditionally, gait data are collected by color sensors such as CCD cameras, depth sensors such as Microsoft Kinect devices, or inertial sensors such as accelerometers. Generally, a single type of gait sensor may capture only part of the dynamic gait features, which makes gait recognition sensitive to complex covariate conditions. To solve these problems, approaches based on multiple modalities have been proposed. For example, Zou et al. [46] propose the EigenGait and TrajGait methods to extract gait features from inertial data and RGBD (color and depth) data for effective gait recognition. Yunas et al. [40] propose fusing data obtained by ambulatory inertial sensors and plastic optical fiber-based floor sensors for gait recognition. Multi-modality gait recognition approaches can achieve better performance but require more sensors to obtain the different gait modalities.
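One simple way to combine two modalities is score-level fusion, sketched below: the distances from a probe to each gallery subject are computed per modality, rescaled to a common range, and combined by a weighted sum. This is a generic illustration and not the fusion scheme of [46] or [40]; the function names and the default weight are assumptions.

```python
import numpy as np

def min_max(scores):
    """Rescale a score vector to [0, 1]; constant vectors map to zeros."""
    scores = np.asarray(scores, dtype=np.float64)
    span = scores.max() - scores.min()
    if span == 0:
        return np.zeros_like(scores)
    return (scores - scores.min()) / span

def fuse_distances(dist_a, dist_b, weight_a=0.5):
    """Fuse per-gallery distances from two modalities.

    dist_a, dist_b: distances from one probe to each gallery subject,
    e.g. from a vision-based and an inertial model (hypothetical).
    Returns the fused distances and the index of the best match.
    """
    fused = weight_a * min_max(dist_a) + (1.0 - weight_a) * min_max(dist_b)
    return fused, int(np.argmin(fused))
```

Normalizing before summation keeps a modality with a larger numeric range from dominating the decision; the weight could be tuned on a validation set.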

3.5 Challenges and Future Work

Although significant progress has been achieved in gait recognition, there are still many challenges for robust gait recognition. Generally, gait recognition can be improved in terms of databases, recognition accuracy, computational cost and privacy. For gait databases, it is important to develop more databases captured in real scenarios. More effective gait recognition methods should be developed to deal with challenges such as different viewing angles and carried objects. Besides, computational cost and privacy protection are also important for gait recognition.

4 Conclusions

In this paper, we provide a comprehensive study of gait recognition with various data modalities, including RGB, depth, skeleton, infrared sequences and inertial data. We provide an up-to-date review of existing studies on both vision-based and non-vision based gait recognition approaches. Moreover, we provide an extensive survey of available gait databases with multiple modalities by comparing and analyzing them in terms of subjects, viewing angles, carrying conditions, walking speed and other challenges.

References

Alobaidi, H., Clarke, N., Li, F., Alruban, A.: Real-world smartphone-based gait recognition. Comput. Secur. 113, 102557 (2022)

An, W., et al.: Performance evaluation of model-based gait on multi-view very large population database with pose sequences. IEEE Trans. Biometrics Behav. Ident. Sci. 2(4), 421–430 (2020)

Castro, F.M., Marín-Jiménez, M., Mata, N.G., Muñoz-Salinas, R.: Fisher motion descriptor for multiview gait recognition. Int. J. Pattern Recogn. Artif. Intell. 31(01), 1756002 (2017)

Chao, H., He, Y., Zhang, J., Feng, J.: Gaitset: regarding gait as a set for cross-view gait recognition. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, pp. 8126–8133 (2019)

Choi, S., Kim, J., Kim, W., Kim, C.: Skeleton-based gait recognition via robust frame-level matching. IEEE Trans. Inf. Forensics Secur. 14(10), 2577–2592 (2019)

Fan, C., et al.: Gaitpart: temporal part-based model for gait recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 14225–14233 (2020)

Gafurov, D., Snekkenes, E.: Gait recognition using wearable motion recording sensors. EURASIP J. Adv. Signal Process. 2009, 1–16 (2009)

Gross, R., Shi, J.: The CMU motion of body (MOBO) database (2001)

Han, J., Bhanu, B.: Individual recognition using gait energy image. IEEE Trans. Pattern Anal. Mach. Intell. 28(2), 316–322 (2005)

Hofmann, M., Geiger, J., Bachmann, S., Schuller, B., Rigoll, G.: The tum gait from audio, image and depth (gaid) database: Multimodal recognition of subjects and traits. J. Vis. Commun. Image Represent. 25(1), 195–206 (2014)

Hossain, M.A., Makihara, Y., Wang, J., Yagi, Y.: Clothing-invariant gait identification using part-based clothing categorization and adaptive weight control. Pattern Recogn. 43(6), 2281–2291 (2010)

Huang, Z., et al.: 3D local convolutional neural networks for gait recognition. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 14920–14929 (2021)

Iwama, H., Okumura, M., Makihara, Y., Yagi, Y.: The OU-ISIR gait database comprising the large population dataset and performance evaluation of gait recognition. IEEE Trans. Inf. Forensics Secur. 7(5), 1511–1521 (2012)

John, V., Englebienne, G., Krose, B.: Person re-identification using height-based gait in colour depth camera. In: 2013 IEEE International Conference on Image Processing, pp. 3345–3349. IEEE (2013)

Juefei-Xu, F., Bhagavatula, C., Jaech, A., Prasad, U., Savvides, M.: Gait-id on the move: pace independent human identification using cell phone accelerometer dynamics. In: 2012 IEEE Fifth International Conference on Biometrics: Theory, Applications and Systems (BTAS), pp. 8–15. IEEE (2012)

Li, W., Kuo, C.-C.J., Peng, J.: Gait recognition via GEI subspace projections and collaborative representation classification. Neurocomputing 275, 1932–1945 (2018)

Liao, R., Cao, C., Garcia, E.B., Yu, S., Huang, Y.: Pose-based temporal-spatial network (ptsn) for gait recognition with carrying and clothing variations. In: Zhou, J., et al. (eds.) CCBR 2017. LNCS, vol. 10568, pp. 474–483. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-69923-3_51

Liao, R., Yu, S., An, W., Huang, Y.: A model-based gait recognition method with body pose and human prior knowledge. Pattern Recogn. 98, 107069 (2020)

Lin, B., Zhang, S., Yu, X.: Gait recognition via effective global-local feature representation and local temporal aggregation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 14648–14656 (2021)

Lu, J., Wang, G., Moulin, P.: Human identity and gender recognition from gait sequences with arbitrary walking directions. IEEE Trans. Inf. Forensics Secur. 9(1), 51–61 (2013)

Makihara, Y., Mannami, H., Yagi, Y.: Gait analysis of gender and age using a large-scale multi-view gait database. In: Kimmel, R., Klette, R., Sugimoto, A. (eds.) ACCV 2010. LNCS, vol. 6493, pp. 440–451. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-19309-5_34

Middleton, L., Buss, A.A., Bazin, A., Nixon, M.S.: A floor sensor system for gait recognition. In: Fourth IEEE Workshop on Automatic Identification Advanced Technologies (AutoID 2005), pp. 171–176. IEEE (2005)

Rida, I., Almaadeed, N., Almaadeed, S.: Robust gait recognition: a comprehensive survey. IET Biometrics 8(1), 14–28 (2019)

Sarkar, S., Phillips, P.J., Liu, Z., Vega, I.R., Grother, P., Bowyer, K.W.: The humanid gait challenge problem: data sets, performance, and analysis. IEEE Trans. Pattern Anal. Mach. Intell. 27(2), 162–177 (2005)

Shen, C., Yu, S., Wang, J., Huang, G.Q., Wang, L.: A comprehensive survey on deep gait recognition: algorithms, datasets and challenges. arXiv preprint arXiv:2206.13732 (2022)

Shutler, J.D., Grant, M.G., Nixon, M.S., Carter, J.N.: On a large sequence-based human gait database. In: Lotfi, A., Garibaldi, J.M. (eds.) Applications and Science in Soft Computing, pp. 339–346. Springer, Heidelberg (2004). https://doi.org/10.1007/978-3-540-45240-9_46

Singh, J.P., Jain, S., Arora, S., Singh, U.P.: Vision-based gait recognition: a survey. IEEE Access 6, 70497–70527 (2018)

Sprager, S., Juric, M.B.: An efficient hos-based gait authentication of accelerometer data. IEEE Trans. Inf. Forensics Secur. 10(7), 1486–1498 (2015)

Sun, J., Wang, Y., Li, J., Wan, W., Cheng, D., Zhang, H.: View-invariant gait recognition based on kinect skeleton feature. Multimedia Tools Appl. 77(19), 24909–24935 (2018). https://doi.org/10.1007/s11042-018-5722-1

Takemura, N., Makihara, Y., Muramatsu, D., Echigo, T., Yagi, Y.: Multi-view large population gait dataset and its performance evaluation for cross-view gait recognition. IPSJ Trans. Comput. Vis. Appl. 10(1), 1–14 (2018). https://doi.org/10.1186/s41074-018-0039-6

Tan, D., Huang, K., Yu, S., Tan, T.: Efficient night gait recognition based on template matching. In: 18th International Conference on Pattern Recognition (ICPR 2006), vol. 3, pp. 1000–1003. IEEE (2006)

Teepe, T., Khan, A., Gilg, J., Herzog, F., Hörmann, S., Rigoll, G.: Gaitgraph: graph convolutional network for skeleton-based gait recognition. In: 2021 IEEE International Conference on Image Processing (ICIP), pp. 2314–2318. IEEE (2021)

Trung, N.T., Makihara, Y., Nagahara, H., Mukaigawa, Y., Yagi, Y.: Performance evaluation of gait recognition using the largest inertial sensor-based gait database. In: 2012 5th IAPR International Conference on Biometrics (ICB), pp. 360–366. IEEE (2012)

Tsuji, A., Makihara, Y., Yagi, Y.: Silhouette transformation based on walking speed for gait identification. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 717–722. IEEE (2010)

Uddin, M.Z., et al.: The OU-ISIR large population gait database with real-life carried object and its performance evaluation. IPSJ Trans. Comput. Vis. Appl. 10(1), 1–11 (2018)

Wang, L., Tan, T., Ning, H., Hu, W.: Silhouette analysis-based gait recognition for human identification. IEEE Trans. Pattern Anal. Mach. Intell. 25(12), 1505–1518 (2003)

Wu, Z., Huang, Y., Wang, L., Wang, X., Tan, T.: A comprehensive study on cross-view gait based human identification with deep CNNs. IEEE Trans. Pattern Anal. Mach. Intell. 39(2), 209–226 (2016)

Xu, C., Makihara, Y., Ogi, G., Li, X., Yagi, Y., Lu, J.: The OU-ISIR gait database comprising the large population dataset with age and performance evaluation of age estimation. IPSJ Trans. Comput. Vis. Appl. 9(1), 1–14 (2017)

Yu, S., Tan, D., Tan, T.: A framework for evaluating the effect of view angle, clothing and carrying condition on gait recognition. In: 18th International Conference on Pattern Recognition (ICPR 2006), vol. 4, pp. 441–444. IEEE (2006)

Yunas, S.U., Alharthi, A., Ozanyan, K.B.: Multi-modality sensor fusion for gait classification using deep learning. In: 2020 IEEE Sensors Applications Symposium (SAS), pp. 1–6. IEEE (2020)

Zhang, Y., Huang, Y., Yu, S., Wang, L.: Cross-view gait recognition by discriminative feature learning. IEEE Trans. Image Process. 29, 1001–1015 (2019)

Zhang, Y., Pan, G., Jia, K., Lu, M., Wang, Y., Wu, Z.: Accelerometer-based gait recognition by sparse representation of signature points with clusters. IEEE Trans. Cybern. 45(9), 1864–1875 (2014)

Zhang, Z., et al.: Gait recognition via disentangled representation learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 4710–4719 (2019)

Zheng, J., Liu, X., Liu, W., He, L., Yan, C., Mei, T.: Gait recognition in the wild with dense 3D representations and a benchmark. arXiv preprint arXiv:2204.02569 (2022)

Zhu, Z., et al.: Gait recognition in the wild: a benchmark. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 14789–14799 (2021)

Zou, Q., Ni, L., Wang, Q., Li, Q., Wang, S.: Robust gait recognition by integrating inertial and RGBD sensors. IEEE Trans. Cybern. 48(4), 1136–1150 (2017)

Zou, Q., Wang, Y., Wang, Q., Zhao, Y., Li, Q.: Deep learning-based gait recognition using smartphones in the wild. IEEE Trans. Inf. Forensics Secur. 15, 3197–3212 (2020)

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

Li, W., Song, J., Liu, Y., Zhong, C., Geng, L., Wang, W. (2022). Gait Recognition with Various Data Modalities: A Review. In: Deng, W., et al. Biometric Recognition. CCBR 2022. Lecture Notes in Computer Science, vol 13628. Springer, Cham. https://doi.org/10.1007/978-3-031-20233-9_42

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-20232-2

Online ISBN: 978-3-031-20233-9

eBook Packages: Computer Science, Computer Science (R0)