Abstract

We propose a novel algorithm for monocular depth estimation that decomposes a metric depth map into a normalized depth map and scale features. The proposed network is composed of a shared encoder and three decoders, called G-Net, N-Net, and M-Net, which estimate gradient maps, a normalized depth map, and a metric depth map, respectively. M-Net learns to estimate metric depths more accurately using relative depth features extracted by G-Net and N-Net. The proposed algorithm has the advantage that it can use datasets without metric depth labels to improve the performance of metric depth estimation. Experimental results on various datasets demonstrate that the proposed algorithm not only provides competitive performance to state-of-the-art algorithms but also yields acceptable results even when only a small amount of metric depth data is available for its training.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Monocular depth estimation is a task to predict a pixel-wise depth map from a single image to understand the 3D geometry of a scene. The distance from a scene point to the camera provides essential information in various applications, including 2D-to-3D image/video conversion [52], augmented reality [35], autonomous driving [8], surveillance [22], and 3D CAD model generation [20]. Since only a single camera is available in many applications, monocular depth estimation, which infers the 3D information of a scene without additional equipment, has become an important research topic.

Recently, learning-based monocular depth estimators using convolutional neural networks (CNNs) have shown significant performance improvements, overcoming the intrinsic ill-posedness of monocular depth estimation by exploiting a huge amount of training data [1, 3, 6, 7, 14, 25, 28, 54, 56]. Existing learning-based monocular depth estimators can be classified into two categories according to the properties of estimated depth maps: relative depth estimation and metric depth estimation. Relative depth estimation predicts the relative depth order among pixels [2, 34, 51, 58]. Metric depth estimation, on the other hand, predicts the absolute distance of each scene point from the camera [3, 6, 14, 25, 54], which is a pixel-wise regression problem.

To estimate a metric depth map, a network should learn both the 3D geometry of the scene and the camera parameters. This implies that a metric depth estimator should be trained with a dataset obtained by a specific camera. In contrast, a relative depth estimator can be trained with heterogeneous datasets, e.g., disparity maps from stereo image pairs or even manually labeled pixel pairs. Thus, relative depth estimation is an easier task than metric depth estimation is. Moreover, note that the geometry of a scene can be easily estimated when extra cues are available. For example, depth completion [38, 39, 55], which recovers a dense depth map from sparse depth measurements, can be performed more accurately and more reliably than monocular depth estimation is. Based on these observations, metric depth estimation algorithms using relative depths as extra cues have been developed via fitting [34, 43] or fine-tuning [42].

In this paper, we propose a monocular metric depth estimator that decomposes a metric depth map into a normalized depth map and scale features. As illustrated in Fig. 1, a normalized depth map contains relative depth information, and it is less sensitive to scale variations or camera parameters than a metric depth map is. The proposed algorithm consists of a single shared encoder and three decoders, G-Net, N-Net, and M-Net, which estimate gradient maps, a normalized depth map, and a metric depth map, respectively. M-Net learns to estimate metric depth maps using relative depth features extracted by G-Net and N-Net. To this end, we progressively transfer features from G-Net to N-Net and then from N-Net to M-Net. In addition, we develop the mean depth residual (MDR) block for M-Net to utilize N-Net features more effectively. Because the proposed algorithm learns to estimate metric depths by exploiting gradient maps and relative depths, additional datasets containing only relative depths can be used to improve the metric depth estimation performance further. Experimental results show that the proposed algorithm is competitive with state-of-the-art metric depth estimators, even when it is trained with a smaller metric depth dataset.

This paper has the following contributions:

-

We propose a novel monocular depth estimator, which decomposes a metric depth map into a normalized depth map and relative depth features and then exploits those relative features to improve the metric depth estimation performance.

-

The proposed algorithm can be adapted to a new camera efficiently since it can be trained with a small metric depth dataset together with camera-independent relative depth datasets.

-

The proposed algorithm provides competitive performance to conventional state-of-the-art metric depth estimators and can improve the performance further through joint training using multiple datasets.

2 Related Work

2.1 Monocular Metric Depth Estimation

The objective of monocular metric depth estimation is to predict pixel-wise absolute distances of a scene from a camera using a single image. Since different 3D scenes can be projected onto the same 2D image, monocular depth estimation is ill-posed. Nevertheless, active research has been conducted due to its practical importance. To infer depths, early approaches made prior assumptions on scenes, e.g. box blocks [9], planar regions [44], or particular layout of objects [10]. However, they may provide implausible results, especially in regions with ambiguous colors or small objects.

With recent advances in deep learning, CNN techniques for monocular depth estimation have been developed, yielding excellent performance. Many attempts have been made to find better network architecture [3, 6, 14, 25, 54] or to design more effective loss functions [2, 5, 16, 25]. It has been also demonstrated that the depth estimation performance can be improved by predicting quantized depths through ordinal regression [7], by employing Fourier domain analysis [26], by enforcing geometric constraints of virtual normals [56], or by reweighting multiple loss functions [28]. Recently, the vision transformer [4] was employed for monocular depth estimation [1], improving the performance significantly.

2.2 Relative Depth Estimation

The objective of relative depth estimation is to learn the pairwise depth order [58] or the rank of pixels [2, 51] in an image. Recently, listwise ranking, instead of pairwise ranking, was considered for relative depth estimation [34]. Also, scale-invariant loss [6] and its variants [32, 33, 43, 48] have been used to alleviate the scale ambiguity of depths, thereby improving the performance of relative depth estimation.

Unlike metric depths, relative depth information—or depth order information—is invariant to camera parameters. Therefore, even though a training set is composed of images captured by different cameras, it does not affect the performance of relative depth estimation adversely. Therefore, heterogeneous training data, such as disparity maps from stereo image pairs [48, 50, 51] or videos [43], structure-from-motion reconstruction [32, 33], and ordinal labels [2], have been used to train relative depth estimators.

2.3 Relative vs. Metric Depths

A metric depth map contains relative depth information, whereas relative depth information is not sufficient for reconstructing a metric depth map. However, relative-to-metric depth conversion has been attempted by fitting relative depths to metric depths [34, 43] or by fine-tuning a relative depth estimator for metric depth estimation [42].

On the other hand, relative and metric depths can be jointly estimated to exploit their correlation and to eventually improve the performance of metric depth estimation. To this end, ordinal labels are used with a ranking loss in [2]. Also, in [27], relative and metric depth maps at various scales are first estimated and then optimally combined to yield a final metric depth map.

The proposed algorithm also estimates relative depth information, in addition to metric depths, to improve the performance of metric depth estimation. However, differently from [2, 27], the proposed algorithm better exploits the correlation between relative and metric depths by decomposing a metric depth map. Furthermore, the proposed algorithm can provide promising results even with a small metric depth dataset by exploiting a relative depth dataset additionally.

(a) Overall network architecture of the proposed algorithm and (b) detailed structure of decoders. The proposed algorithm consists of a shared encoder and three decoders: G-Net, N-Net, and M-Net. G-Net predicts horizontal and vertical gradient maps, while N-Net and M-Net estimate normalized and metric depth maps, respectively. Note that G-Net features are fed into N-Net, and N-Net features are fed into M-Net.

3 Proposed Algorithm

Figure 2 is an overview of the proposed algorithm, which consists of a shared encoder and three decoders—G-Net, N-Net, and M-Net. The shared encoder extracts common features that are fed into the three decoders. Then, G-Net predicts horizontal and vertical gradients of depths, while N-Net and M-Net estimate a normalized depth map and a metric depth map, respectively. Note that features extracted by G-Net are fed into N-Net to convey edge information, and those by N-Net are, in turn, fed into M-Net to provide relative depth features. Finally, via the MDR block, M-Net exploits the relative depth features to estimate a metric depth map more accurately.

3.1 Metric Depth Decomposition

Given an RGB image \(I \in \mathbb {R}^{h \times w \times 3}\), the objective is to estimate a metric depth map \(M \in \mathbb {R}^{h \times w}\). However, this is ill-posed because different scenes with different metric depths can be projected onto the same image. Moreover, scale features of depths are hard to estimate from the color information only since they also depend on the camera parameters. To address this issue, we decompose a metric depth map M into a normalized depth map N and scale parameters. The normalized depth map N contains relative depth information, so it is less sensitive to scale variations or camera parameters than the metric depth map M is.

There are several design choices for normalizing a metric depth map, including min-max normalization or ranking-based normalization [11]. However, the min-max normalization is sensitive to outliers, and the ranking-based normalization is unreliable in areas with homogeneous depths, such as walls and floors. Instead, we normalize a metric depth map using the z-score normalization. Given a metric depth map M, we obtain the normalized depth map N by

where \(\mu _M\) and \(\sigma _M\), respectively, denote the mean and standard deviation of metric depths in M. Also, U is the unit matrix whose all elements are 1.

N-Net, denoted by \(f_N\), estimates this normalized depth map, and its estimate is denoted by \(\hat{N}\). When the scale parameters \(\mu _M\) and \(\sigma _M\) are known, the metric depth map M can be reconstructed by

In practice, \(\mu _M\) and \(\sigma _M\) are unknown. Conventional methods in [34, 42, 43] obtain fixed \(\sigma _{M}\) and \(\mu _{M}\) for all images based on the least-squares criterion. In such a case, the accuracy of \(\hat{M}_{\text {direct}}\) in (2) greatly depends on the accuracy of \(\sigma _{M}\) and \(\mu _{M}\). In this work, instead of the direct conversion in (2), we estimate the metric depth map by employing the features \(\psi _N(I)\), which are extracted by the proposed M-Net, \(f_N\), during the estimation of \(\hat{N}\). In other words, the proposed M-Net, \(f_M\), estimates the metric depth map by

For metric depth estimation, structural data (e.g. surface normals or segmentation maps) have been adopted as additional cues [5, 29, 37, 40], or relative depth features have been used indirectly via loss functions (e.g. pairwise ranking loss [2] or scale-invariant loss [1, 6, 30]). In contrast, we convert a metric depth map to a normalized depth map. Then, the proposed N-Net estimates the normalized depth map to extract the features \(\psi _N\), containing relative depth information. Then, the proposed M-Net uses \(\psi _N\) for effective metric depth estimation.

Similarly, we further decompose the normalized depth map N into more elementary data: horizontal and vertical gradients. The horizontal gradient map \(G_x\) is given by

where \(\nabla _x\) is the partial derivative operator computing the differences between horizontally adjacent pixels. The vertical gradient map \(G_y\) is obtained similarly. The proposed G-Net is trained to estimate these gradient maps \(G_x\) and \(G_y\). Hence, G-Net learns edge information in a scene, and its features \(\psi _G\) are useful for inferring the normalized depth map. Therefore, similar to (3), N-Net estimates the normalized depth map via

using the gradient features \(\psi _G(I)\).

3.2 Network Architecture

For the shared encoder in Fig. 2, we adopt EfficientNet-B5 [47] as the backbone network. G-Net and N-Net have an identical structure, consisting of five upsampling blocks. However, G-Net outputs two channels for two gradient maps \(G_x\) and \(G_y\), while N-Net yields a single channel for a normalized depth map N. M-Net also has a similar structure, except for the MDR block, which will be detailed in Sect. 3.3. MDR predicts the mean \(\mu _M\) of M separately, which is added back at the end of M-Net.

The encoder features are fed into the three decoders via skip-connections [13], as shown in Fig. 2(a). To differentiate the encoder features for the different decoders, we apply \(1 \times 1\) convolution to the encoder features before feeding them to each decoder. Also, we apply the channel attention [15] before each skip-connection to each decoder.

We transfer features unidirectionally from G-Net to N-Net and also from N-Net to M-Net to exploit low-level features for the estimation of high-level data. To this end, we fuse features through element-wise addition before each of the first four upsampling blocks in N-Net and M-Net, as shown in Fig. 2(b). Specifically, let \(\psi _G^\textrm{out}\) and \(\psi _N^\textrm{out}\) denote the output features of G-Net and N-Net, respectively. Then, the input feature \(\psi ^\textrm{in}_N\) to the next layer of N-Net is given by

where \(\omega _G\) and \(\omega _N\) are pre-defined weights for \(\psi _G^\textrm{out}\) and \(\psi _N^\textrm{out}\) to control the relative contributions of the two features. For M-Net, the features from N-Net are added similarly. In order to fuse features, we use addition, instead of multiplication or concatenation, for computational efficiency.

3.3 MDR Block

We design the MDR block to utilize the features \(\psi _N\) of N-Net more effectively for the metric depth estimation in M-Net. Figure 3 shows the structure of the MDR block, which applies patchwise attention to an input feature map and estimates the mean \(\mu _M\) of M separately using the transformer encoder [4]. Note that the transformer architecture enables us to use one of the patchwise-attended feature vectors to regress \(\mu _M\).

Specifically, MDR first processes input features using an \(8 \times 8\) convolution layer with a stride of 8 and a \(3 \times 3\) convolution layer, respectively. The patchwise output of the \(8 \times 8\) convolution is added to the positional encodings and then input to the transformer encoder [4]. The positional encodings are learnable parameters, randomly initialized at training. Then, the transformer encoder generates 192 patchwise-attended feature vectors of 128 dimensions. We adopt the mini-ViT architecture [1] for the transformer encoder. The first vector is fed to the regression module, composed of three fully-connected layers, to yield \(\mu _M\). The rest 191 vectors form a matrix, which is multiplied with the output of the \(3 \times 3\) convolution layer to generate \(191 \times 96 \times 128\) output features through reshaping. Finally, those output features are fed to the next upsampling block of M-Net. Also, the estimated \(\mu _M\) is added back at the end of M-Net in Fig. 2(b), which makes the remaining parts of M-Net focus on the estimation of the mean-removed depth map \(M-\mu _M U\) by exploiting the N-Net features \(\psi _N\).

3.4 Loss Functions

Let us describe the loss functions for training the three decoders. For G-Net, we use the \(\ell _1\) loss

where \(\hat{G}_x\) and \(\hat{G}_y\) are predictions of the ground-truth gradient maps \(G_x\) and \(G_y\), respectively. Also, T denotes the number of valid pixels in the ground-truth.

For N-Net, we use two loss terms: the \(\ell _1\) loss and the gradient loss. The \(\ell _1\) loss is defined as

where \(\hat{N}\) and N are predicted and ground-truth normalized depth maps. Note that scale-invariant terms are often adopted to train monocular depth estimators [1, 30, 42]. However, we do not use such scale-invariant losses since normalized depth maps are already scale-invariant. Next, the gradient losses [16, 33, 51] for N in the horizontal direction are defined as

where \(\hat{N}_s\) and \(N_s\) are the bilinearly scaled \(\hat{N}\) and N with a scaling factor s. We compute the gradient losses at various scales, as in [33, 51], by setting s to 0.5, 0.25, and 0.125. The losses \(L_{Ny}\) in the vertical direction are also used.

Similarly, for M-Net, we use the loss terms \(\mathcal {L}_M\), \(\mathcal {L}_{Mx}\), and \(\mathcal {L}_{My}\). In addition, we use two more loss terms. First, \(\mathcal {L}_{\mu _M}\) is defined to train the MDR block, which is given by

where \(\mu (\hat{M})\) denotes the mean of depth values in \(\hat{M}\). Second, we define the logarithmic \(\ell _1\) loss,

In this work, we adopt inverse depth representation of metric depths to match the depth order with a relative depth dataset [51]. In this case, theoretically, a metric depth can have a value in the range \([0, \infty )\). Thus, when a metric depth is near zero, its inverted value becomes too large, which interferes with training. We overcome this problem through a simple modification. Given an original metric depth \(m_o\), its inverted metric depth m is defined as

In this way, inverted metric depth values are within the range of (0, 1] and also are more evenly distributed.

However, using the \(\ell _1\) loss \(\mathcal {L}_M\) on inverse depths has a disadvantage in learning distant depths. Suppose that \(\hat{\chi }\) and \(\chi \) are predicted and ground-truth metric depth values for a pixel, respectively. Then, the \(\ell _1\) error E is given by

As \(\chi \) gets larger, E becomes smaller for the same \(| \hat{\chi } - \chi |\). This means that the network is trained less effectively for distant regions. This problem is alleviated by employing \(\mathcal {L}_{\log M}\) in (11).

4 Experimental Results

4.1 Datasets

We use four depth datasets: one for relative depths [51] and three for metric depths [23, 45, 46]. When relative depth data are used in training, losses are generated from the loss terms for N-Net and G-Net only because the loss terms for M-Net cannot be computed.

HR-WSI [51]: It consists of 20,378 training and 400 test images. The ground-truth disparity maps are generated by FlowNet 2.0 [19]. We use only the training data of HR-WSI. We normalize the disparity maps by (1) and regard them as normalized depth maps.

NYUv2 [45]: It contains 120K video frames for training and 654 frames for test, together with the depth maps captured by a Kinect v1 camera. We use the NYUv2 dataset for both training and evaluation. We construct three training datasets of 51K, 17K, and 795 sizes. Specifically, we extract the 51K and 17K images by sampling video frames uniformly. For the 795 images, we use the official training split of NYUv2. We fill in missing depths using the colorization scheme [31], as in [45].

DIML-Indoor [23]: It consists of 1,609 training images and 503 test images, captured by a Kinect v2 camera.

SUN RGB-D [46]: It consists 5,285 training images and 5,050 test images, obtained by four different cameras: Kinect v1, Kinect v2, RealSense, and Xtion.

4.2 Evaluation Metrics

Metric Depths: We adopt the four evaluation metrics in [6], listed below. Here, \(M_i\) and \(\hat{M}_i\) denote the ground-truth and predicted depths of pixel i, respectively. \(|\cdot |\) denotes the number of valid pixels in a depth map. For the NYUv2 dataset, we adopt the center crop protocol [6].

Relative Depths: we use two metrics for relative depths. First, WHDR (weighted human disagreement rate) [2, 43, 51] measures the ordinal consistency between point pairs. We follow the evaluation protocol of [51] to randomly sample 50,000 pairs in each depth map. However, WHDR is an unstable protocol, under which the performance fluctuates with each measurement. We hence use Kendall’s \(\tau \) [21] additionally, which considers the ordering relations of all pixel pairs. Given a ground-truth normalized depth map D and its prediction \(\hat{D}\), Kendall’s \(\tau \) is defined as

where \(\alpha (\hat{D}, D)\) and \(\beta (\hat{D}, D)\) are the numbers of concordant pairs and discordant pairs between D and \(\hat{D}\), respectively. Note that Kendall’s \(\tau \) can measure the quality of a metric depth map, as well as that of a relative one.

4.3 Implementation Details

Network Architecture: We employ EfficientNet-B5 [47] as the encoder backbone. The encoder takes an \(512 \times 384\) RGB image and generates a \(16 \times 12\) feature with 2,048 channels. The output feature is used as the input to the three decoders. G-Net and N-Net consist of 5 upsampling blocks, each of which is composed of a bilinear interpolation layer and two \(3\times 3\) convolution layers with the ReLU activation. Also, in addition to the 5 upsampling blocks, M-Net includes the MDR block, located between the fourth and fifth upsampling blocks. For feature fusion in (6), \(\omega _G = \omega _N = 1\).

Training: We train the proposed algorithm in two phases. First, we train the network, after removing M-Net, for 20 epochs with an initial learning rate of \(10^{-4}\). The learning rate is decreased by a factor of 0.1 at every fifth epoch. Second, we train the entire network, including all three decoders, jointly for 15 epochs with an initial learning rate of \(10^{-4}\), which is decreased by a factor of 0.1 at every third epoch. We use the Adam optimizer [24] with a weight decay of \(10^{-4}\). If a relative depth is used in the second phase, losses are calculated from the loss terms for N-Net and G-Net only.

4.4 Performance Comparison

Table 1 compares the proposed algorithm with conventional ones on NYUv2 dataset. Some of the conventional algorithms use only NYUv2 training data [1, 3, 6, 7, 12, 15, 18, 25, 28, 30, 56], while the others use extra data [41, 42, 49]. For fair comparisons, we train the proposed algorithm in both ways: ‘Proposed’ uses NYUv2 only, while ‘Proposed\(^\dagger \)’ uses both HR-WSI and NYUv2. The following observations can be made from Table 1.

-

‘Proposed’ outperforms all conventional algorithms in all metrics with no exception. For example, ‘Proposed’ provides a REL score of 0.100, which is 0.003 better than that of the second-best algorithm, Bhat et al. [1]. Note that both algorithms use the same encoder backbone of EfficientNet-B5 [47].

-

‘Proposed\(^\dagger \)’ provides the best results in five out of six metrics. For \(\delta _2\), the proposed algorithm yields the second-best score after Ranftl et al. [42]. It is worth pointing out that Ranftl et al. uses about 20 times more training data than the proposed algorithm does.

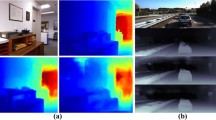

Figure 4 compares the proposed algorithm with the conventional algorithms [1, 3, 6, 7, 30, 42] qualitatively. We see that the proposed algorithm estimates the depth maps more faithfully with smaller errors.

4.5 Various Datasets

Table 2 verifies the effectiveness of the proposed algorithm on various datasets. The first two columns in Table 2 indicate the metric depth dataset and its size. ‘Baseline’ is a bare encoder-decoder for monocular depth estimation. Specifically, we remove G-Net and N-Net, as well as the MDR block in M-Net, from the proposed algorithm in Fig. 2 to construct ‘Baseline.’ For its training, only three loss terms \(\mathcal {L}_M\), \(\mathcal {L}_{Mx}\), and \(\mathcal {L}_{My}\) are used. ‘Proposed’ means the proposed algorithm without employing the 20K HR-WSI training data, while ‘Proposed\(^\dagger \)’ means using the HR-WSI data additionally. The following observations can be made from Table 2.

-

By comparing ‘Proposed’ with ‘Baseline,’ we see that G-Net and N-Net help M-Net improve the performance of metric depth estimation by transferring edge information and relative depth information. Also, ‘Proposed\(^\dagger \)’ meaningfully outperforms ‘Proposed’ by leveraging relative depth training data in HR-WSI, which contain no metric depth labels.

-

Even when only the 795 NYUv2 images are used, the proposed algorithm provides acceptable results. For example, the RMSE score of 0.417 is similar to that of the Hyunh et al.’s estimator [18] in Table 1, which uses 50K metric depth map data. In contrast, the proposed algorithm uses the 795 metric depth maps only.

-

The proposed algorithm also exhibits similar trends in the DIML-Indoor and SUN RGB-D datasets, which are collected using different cameras: the proposed algorithm can be trained effectively even with a small number of metric depth images. This is advantageous in practical applications in which an algorithm should be adapted for various cameras.

Figure 5 compares ‘Baseline’ and ‘Proposed\(^\dagger \)’ qualitatively using the 795 NYUv2, DIML-Indoor, and SUN RGB-D datasets. For all datasets, ‘Proposed\(^\dagger \)’ provides more accurate and more detailed depth maps, especially around chairs, tables, and desks, than ‘Baseline’ does.

4.6 Analysis

Ablation Studies: We conduct ablation studies that add the proposed components one by one in Table 3. Here, the 17K images from NYUv2 are used for training. M, N, and G denote the three decoders. MDR\(^*\) is the MDR block with \(\mu _M\) deactivated. \(\dagger \) indicates the use of relative depth data. We see that all components lead to performance improvements, especially in terms of the two relative depth metrics Kendall’s \(\tau \) and WHDR.

Table 4 shows the effectiveness of the two-phase training scheme of the proposed algorithm. The proposed algorithm, which trains G-Net and N-Net first, shows better results than the single-phase scheme, which trains the entire network at once.

Complexities and Inference Speeds: Table 5 compares the complexities of the proposed algorithm and the Ranftl et al.’s algorithm [42]. The proposed algorithm performs faster with a smaller number of parameters than the Ranftl et al.’s algorithm [42] does. This indicates that the performance gain of the proposed algorithm is not from the increase in complexity but from the effective use of relative depth features. Table 6 lists the complexity of each component of the proposed algorithm. The encoder spends most of the inference time, while the three decoders are relatively fast.

5 Conclusions

We proposed a monocular depth estimator that decomposes a metric depth map into a normalized depth map and scale features. The proposed algorithm is composed of a shared encoder with three decoders, G-Net, N-Net, and M-Net, which estimate gradient maps, a normalized depth map, and a metric depth map, respectively. G-Net features are used in N-Net, and N-Net features are used in M-Net. Moreover, we developed the MDR block for M-Net to utilize N-Net features and improve the metric depth estimation performance. Extensive experiments demonstrated that the proposed algorithm provides competitive performance and yields acceptable results even with a small metric depth dataset.

References

Bhat, S.F., Alhashim, I., Wonka, P.: AdaBins: depth estimation using adaptive bins. In: CVPR, pp. 4009–4018 (2021)

Chen, W., Fu, Z., Yang, D., Deng, J.: Single-image depth perception in the wild. In: NIPS, pp. 730–738 (2016)

Chen, X., Chen, X., Zha, Z.J.: Structure-aware residual pyramid network for monocular depth estimation. In: IJCAI, pp. 694–700 (2019)

Dosovitskiy, A., et al.: An image is worth 16 x 16 words: transformers for image recognition at scale. In: ICLR (2021)

Eigen, D., Fergus, R.: Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In: ICCV, pp. 2650–2658 (2015)

Eigen, D., Puhrsch, C., Fergus, R.: Depth map prediction from a single image using a multi-scale deep network. In: NIPS, pp. 2366–2374 (2014)

Fu, H., Gong, M., Wang, C., Batmanghelich, K., Tao, D.: Deep ordinal regression network for monocular depth estimation. In: CVPR, pp. 2002–2011 (2018)

Godard, C., Aodha, O.M., Brostow, G.J.: Unsupervised monocular depth estimation with left-right consistency. In: CVPR, pp. 270–279 (2017)

Gupta, A., Efros, A.A., Hebert, M.: Blocks world revisited: image understanding using qualitative geometry and mechanics. In: ECCV, pp. 482–496 (2010)

Gupta, A., Hebert, M., Kanade, T., Blei, D.: Estimating spatial layout of rooms using volumetric reasoning about objects and surfaces. In: NIPS (2010)

Han, J., Pei, J., Kamber, M.: Data Mining: Concepts and Techniques. Elsevier (2011)

Hao, Z., Li, Y., You, S., Lu, F.: Detail preserving depth estimation from a single image using attention guided networks. In: 3DV, pp. 304–313 (2018)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR, pp. 770–778 (2016)

Heo, M., Lee, J., Kim, K.R., Kim, H.U., Kim, C.S.: Monocular depth estimation using whole strip masking and reliability-based refinement. In: ECCV, pp. 36–51 (2018)

Hu, J., Shen, L., Sun, G.: Squeeze-and-excitation networks. In: CVPR, pp. 7132–7141 (2018)

Hu, J., Ozay, M., Zhang, Y., Okatani, T.: Revisiting single image depth estimation: toward higher resolution maps with accurate object boundaries. In: WACV, pp. 1043–1051 (2019)

Huang, G., Liu, Z., Van Der Maaten, L., Weinberger, K.Q.: Densely connected convolutional networks. In: CVPR, pp. 4700–4708 (2017)

Huynh, L., Nguyen-Ha, P., Matas, J., Rahtu, E., Heikkilä, J.: Guiding monocular depth estimation using depth-attention volume. In: ECCV, pp. 581–597 (2020)

Ilg, E., Mayer, N., Saikia, T., Keuper, M., Dosovitskiy, A., Brox, T.: FlowNet 2.0: evolution of optical flow estimation with deep networks. In: CVPR, pp. 2462–2470 (2017)

Izadinia, H., Shan, Q., Seitz, S.M.: IM2CAD. In: CVPR, pp. 5134–5143 (2017)

Kendall, M.G.: A new measure of rank correlation. Biometrika 30(1/2), 81–93 (1938)

Kim, H., et al.: Weighted joint-based human behavior recognition algorithm using only depth information for low-cost intelligent video-surveillance system. Expert Syst. Appl. 45, 131–141 (2016)

Kim, Y., Jung, H., Min, D., Sohn, K.: Deep monocular depth estimation via integration of global and local predictions. IEEE Trans. Image Process. 27(8), 4131–4144 (2018)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. In: ICLR (2015)

Laina, I., Rupprecht, C., Belagiannis, V., Tombari, F., Navab, N.: Deeper depth prediction with fully convolutional residual networks. In: 3DV, pp. 239–248 (2016)

Lee, J.H., Heo, M., Kim, K.R., Kim, C.S.: Single-image depth estimation based on Fourier domain analysis. In: CVPR, pp. 330–339 (2018)

Lee, J.H., Kim, C.S.: Monocular depth estimation using relative depth maps. In: CVPR, pp. 9729–9738 (2019)

Lee, J.H., Kim, C.S.: Multi-loss rebalancing algorithm for monocular depth estimation. In: ECCV, pp. 785–801 (2020)

Lee, J.H., Lee, C., Kim, C.S.: Learning multiple pixelwise tasks based on loss scale balancing. In: ICCV, pp. 5107–5116 (2021)

Lee, J.H., Han, M.K., Ko, D.W., Suh, I.H.: From big to small: Multi-scale local planar guidance for monocular depth estimation. arXiv preprint arXiv:1907.10326 (2019)

Levin, A., Lischinski, D., Weiss, Y.: Colorization using optimization. ACM Trans. Graph. 23(3), 689–694 (2004)

Li, Z., et al.: Learning the depths of moving people by watching frozen people. In: CVPR, pp. 4521–4530 (2019)

Li, Z., Snavely, N.: MegaDepth: learning single-view depth prediction from internet photos. In: CVPR, pp. 2041–2050 (2018)

Lienen, J., Hullermeier, E., Ewerth, R., Nommensen, N.: Monocular depth estimation via listwise ranking using the Plackett-Luce model. In: CVPR, pp. 14595–14604 (2021)

Liu, C., Yang, J., Ceylan, D., Yumer, E., Furukawa, Y.: PlaneNet: piece-wise planar reconstruction from a single RGB image. In: CVPR, pp. 2579–2588 (2018)

Liu, C., et al.: Progressive neural architecture search. In: ECCV, pp. 19–34 (2018)

Liu, S., Johns, E., Davison, A.J.: End-to-end multi-task learning with attention. In: CVPR, pp. 1871–1880 (2019)

Ma, F., Karaman, S.: Sparse-to-dense: depth prediction from sparse depth samples and a single image. In: ICRA, pp. 4796–4803 (2018)

Park, J., Joo, K., Hu, Z., Liu, C.K., So Kweon, I.: Non-local spatial propagation network for depth completion. In: ECCV, pp. 120–136 (2020)

Qi, X., Liao, R., Liu, Z., Urtasun, R., Jia, J.: GeoNet: geometric neural network for joint depth and surface normal estimation. In: CVPR, pp. 283–291 (2018)

Ramamonjisoa, M., Lepetit, V.: SharpNet: Fast and accurate recovery of occluding contours in monocular depth estimation. In: ICCVW (2019)

Ranftl, R., Bochkovskiy, A., Koltun, V.: Vision transformers for dense prediction. In: ICCV, pp. 12179–12188 (2021)

Ranftl, R., Lasinger, K., Hafner, D., Schindler, K., Koltun, V.: Towards robust monocular depth estimation: mixing datasets for zero-shot cross-dataset transfer. IEEE Trans. Pattern Anal. Mach. Intell. (2020)

Saxena, A., Sun, M., Ng, A.Y.: Make3D: learning 3D scene structure from a single still image. IEEE Trans. Pattern Anal. Mach. Intell. 31(5), 824–840 (2008)

Silberman, N., Hoiem, D., Kohli, P., Fergus, R.: Indoor segmentation and support inference from RGBD images. In: ECCV, pp. 746–760 (2012)

Song, S., Lichtenberg, S.P., Xiao, J.: SUN RGB-D: a RGB-D scene understanding benchmark suite. In: CVPR, pp. 567–576 (2015)

Tan, M., Le, Q.: EfficientNet: rethinking model scaling for convolutional neural networks. In: ICML, pp. 6105–6114 (2019)

Wang, C., Lucey, S., Perazzi, F., Wang, O.: Web stereo video supervision for depth prediction from dynamic scenes. In: 3DV, pp. 348–357. IEEE (2019)

Wang, P., Shen, X., Lin, Z., Cohen, S., Price, B., Yuille, A.L.: Towards unified depth and semantic prediction from a single image. In: CVPR, pp. 2800–2809 (2015)

Xian, K., et al.: Monocular relative depth perception with web stereo data supervision. In: CVPR, pp. 311–320 (2018)

Xian, K., Zhang, J., Wang, O., Mai, L., Lin, Z., Cao, Z.: Structure-guided ranking loss for single image depth prediction. In: CVPR, pp. 611–620 (2020)

Xie, J., Girshick, R., Farhadi, A.: Deep3D: fully automatic 2D-to-3D video conversion with deep convolutional neural networks. In: ECCV, pp. 842–857 (2016)

Xie, S., Girshick, R., Dollár, P., Tu, Z., He, K.: Aggregated residual transformations for deep neural networks. In: CVPR, pp. 1492–1500 (2017)

Xu, D., Ricci, E., Ouyang, W., Wang, X., Sebe, N.: Multi-scale continuous CRFs as sequential deep networks for monocular depth estimation. In: CVPR, pp. 5354–5362 (2017)

Xu, Y., Zhu, X., Shi, J., Zhang, G., Bao, H., Li, H.: Depth completion from sparse LiDAR data with depth-normal constraints. In: ICCV, pp. 2811–2820 (2019)

Yin, W., Liu, Y., Shen, C., Yan, Y.: Enforcing geometric constraints of virtual normal for depth prediction. In: ICCV, pp. 5684–5693 (2019)

Yu, F., Koltun, V., Funkhouser, T.: Dilated residual networks. In: CVPR, pp. 472–480 (2017)

Zoran, D., Isola, P., Krishnan, D., Freeman, W.T.: Learning ordinal relationships for mid-level vision. In: ICCV, pp. 388–396 (2015)

Acknowledgements

This work was supported by the National Research Foundation of Korea (NRF) grants funded by the Korea government (MSIT) (No. NRF-2021R1A4A1031864 and No. NRF-2022R1A2B5B03002310).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

1 Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Jun, J., Lee, JH., Lee, C., Kim, CS. (2022). Depth Map Decomposition for Monocular Depth Estimation. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13662. Springer, Cham. https://doi.org/10.1007/978-3-031-20086-1_2

Download citation

DOI: https://doi.org/10.1007/978-3-031-20086-1_2

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-20085-4

Online ISBN: 978-3-031-20086-1

eBook Packages: Computer ScienceComputer Science (R0)