Abstract

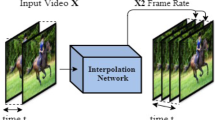

Real-time video frame interpolation (VFI) is very useful in video processing, media players, and display devices. We propose RIFE, a Real-time Intermediate Flow Estimation algorithm for VFI. To realize a high-quality flow-based VFI method, RIFE uses a neural network named IFNet that estimates the intermediate flows end-to-end at much faster speed. A privileged distillation scheme is designed to stabilize IFNet training and improve the overall performance. RIFE does not rely on pre-trained optical flow models and supports arbitrary-timestep frame interpolation via a temporal encoding input. Experiments demonstrate that RIFE achieves state-of-the-art performance on several public benchmarks. Compared with the popular SuperSlomo and DAIN methods, RIFE is 4–27 times faster and produces better results. Furthermore, RIFE can be extended to wider applications thanks to temporal encoding. Code: https://github.com/megvii-research/ECCV2022-RIFE

1 Introduction

Video Frame Interpolation (VFI) aims to synthesize intermediate frames between two consecutive video frames. VFI supports various applications like slow-motion generation, video compression [56], and video frame prediction [57]. Moreover, real-time VFI methods running on high-resolution videos have many potential applications, such as reducing bandwidth requirements for live video streaming, providing video editing services for users with limited computing resources, and video frame rate adaptation on display devices.

VFI is challenging due to the complex, non-linear motions and illumination changes in real-world videos. Recently, flow-based VFI algorithms have offered a framework to address these challenges and achieved impressive results [3, 22, 28, 30, 40, 60, 61]. Common approaches for these methods involve two steps: 1) warping the input frames according to approximated optical flows and 2) fusing the warped frames using Convolutional Neural Networks (CNNs).

Optical flow models cannot be directly used in VFI. Given the input frames \(I_0, I_1\), flow-based methods [3, 22, 30] need to approximate the intermediate flows \(F_{t\rightarrow 0}, F_{t\rightarrow 1}\) from the perspective of the frame \(I_t\) that we expect to synthesize. There is a “chicken-and-egg” problem between intermediate flows and frames because \(I_t\) is not available beforehand, and its estimation is a difficult problem [22, 44]. Many practices [3, 22, 28, 60] first compute bi-directional flows from optical flow models, then reverse and refine them to generate intermediate flows. However, such flows may have flaws at motion boundaries, as the object position changes from frame to frame (the “object shift” problem). Appearance Flow [65], a pioneering work in view synthesis, proposes to estimate flow starting from the target view using CNNs. DVF [30] extends it to the voxel flow of dynamic scenes, jointly modeling the intermediate flow and blend mask and estimating them end-to-end. AdaCoF [27] further extends intermediate flows to adaptive collaborative flows. BMBC [44] designs a bilateral cost volume operator for obtaining more accurate intermediate flows (bilateral motion). In this paper, we aim to build a lightweight pipeline that achieves state-of-the-art (SOTA) performance while maintaining the conciseness of direct intermediate flow estimation. Our pipeline has these main design concepts:

1) Not requiring additional components, like image depth model [3], flow refinement model [22] and flow reversal layer [60], which are introduced to compensate for the defects of intermediate flow estimation. We also want to eliminate reliance on pre-trained SOTA optical flow models that are not tailored for VFI tasks.

2) End-to-end learnable motion estimation: we demonstrate experimentally that instead of introducing some inaccurate motion modeling, it is better to make the CNN learn the intermediate flow end-to-end. This methodology has been proposed [30]. However, the follow-up works do not fully inherit this idea.

3) Providing direct supervision for the approximated intermediate flows: most VFI models are trained with only the final reconstruction loss. Intuitively, propagating gradients of pixel-wise loss across warping operator is not efficient for flow estimation [11, 35, 37]. Lacking supervision explicitly designed for flow estimation degrades the performance of VFI models.

We propose IFNet, which directly estimates intermediate flow from adjacent frames and a temporal encoding input. IFNet adopts a coarse-to-fine strategy [20] with progressively increasing resolution: it iteratively updates the intermediate flows and soft fusion mask via successive IFBlocks. Intuitively, according to the iteratively updated flow fields, we could move corresponding pixels from two input frames to the same location in a latent intermediate frame and use a fusion mask to combine pixels from two input frames. To make our model efficient, unlike most previous optical flow models [15, 19, 20, 53, 55], IFBlocks do not contain expensive operators like cost volume and only use \(3\times 3\) convolution and deconvolution as building blocks, which are suitable for resource-constrained devices. Furthermore, plain convolution is well supported by the NPUs embedded in display devices and provides convenience for customized requirements. We thank related researchers for their exploration of efficient models [14, 36, 47].

Employing intermediate supervision is very important. When training the IFNet end-to-end using the final reconstruction loss, our method produces worse results than SOTA methods because of the inaccurate optical flow estimation. The situation dramatically changes after we design a privileged distillation scheme that employs a teacher model with access to the intermediate frames to guide the student to learn.

Combining these designs, we propose the Real-time Intermediate Flow Estimation (RIFE). RIFE trained from scratch can achieve satisfactory results, without requiring pre-trained models or datasets with optical flow labels. We illustrate RIFE’s performance compared with other methods in Fig. 1.

To sum up, our main contributions include:

- We design an effective IFNet to approximate the intermediate flows and introduce a privileged distillation scheme to improve the performance.

- Our experiments demonstrate that RIFE achieves SOTA performance on several public benchmarks, especially for arbitrary-timestep frame interpolation.

- We show RIFE can be extended to applications such as depth map interpolation and dynamic scene stitching, thanks to its flexible temporal encoding.

2 Related Works

Optical Flow Estimation. Optical flow estimation is a long-standing vision task that aims to estimate the per-pixel motion, useful in many downstream tasks [33, 54, 63, 64]. Since the milestone work of FlowNet [15], flow model architectures have evolved for several years, yielding more accurate results while being more efficient, such as FlowNet2.0 [20], PWC-Net [53] and LiteFlowNet [19]. Recently Teed et al. [55] introduce RAFT, which iteratively updates a flow field through a recurrent unit and achieves a remarkable breakthrough in this field. Another important research direction is unsupervised optical flow estimation [23, 35, 37] which tackles the difficulty of labeling.

Fig. 2. Overview of the RIFE pipeline. Given two input frames \(I_0, I_1\) and temporal encoding t (timestep encoded as a separate channel [18, 39]), we directly feed them into the IFNet to approximate intermediate flows \(F_{t\rightarrow 0}, F_{t\rightarrow 1}\) and the fusion map M. During the training phase, a privileged teacher refines the student’s results based on ground truth \(I_t\) using a special IFBlock.

Video Frame Interpolation. Recently, optical flow has been a prevalent component in video interpolation. In addition to the method of directly estimating the intermediate flow [27, 30, 44], Jiang et al. [22] propose SuperSlomo using the linear combination of the two bi-directional flows as an initial approximation of the intermediate flows and then refining them using U-Net. Reda et al. [49] and Liu et al. [29] propose to improve intermediate frames using cycle consistency. Bao et al. [3] propose DAIN to estimate the intermediate flow as a weighted combination of bidirectional flow. Niklaus et al. [41] propose SoftSplat to forward-warp frames and their feature map using softmax splatting. Xu et al. [60] propose QVI to exploit four consecutive frames and flow reversal filter to get the intermediate flows. Liu et al. [28] further extend QVI with rectified quadratic flow prediction to EQVI.

Along with flow-based methods, flow-free methods have also achieved remarkable progress. Meyer et al. [38] utilize phase information to learn the motion relationship for multiple video frame interpolation. Niklaus et al. [43] formulate VFI as a spatially adaptive convolution whose convolution kernel is generated using a CNN given the input frames. Cheng et al. propose DSepConv [8] to extend kernel-based methods using deformable separable convolution. Choi et al. [10] propose an efficient flow-free method named CAIN, which employs the PixelShuffle operator and channel attention to capture the motion information implicitly. Some works further focus on increasing the resolution and frame rate of the video together and have achieved good visual effects [58, 59]. In addition, large-motion and animation frame interpolation are also fields of great interest [6, 48, 51].

Knowledge Distillation. Our privileged distillation [31] for intermediate flow conceptually belongs to knowledge distillation [17], which originally aims to transfer knowledge from a large model to a smaller one. In privileged distillation, the teacher model gets more input than the student model, such as scene depth, images from other views, and even image annotation. Therefore, the teacher model can provide more accurate representations to guide the student model to learn. This idea is applied to some computer vision tasks, such as hand pose estimation [62], re-identification [45] and video style transfer [7]. Our work is also related to codistillation [1], where the student and teacher have the same architecture and different inputs during training.

3 Method

3.1 Pipeline Overview

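We illustrate the overall pipeline of RIFE in Fig. 2. Given a pair of consecutive RGB frames \(I_0, I_1\) and a target timestep \(t~(0 \le t \le 1)\), our goal is to synthesize an intermediate frame \(\widehat{I}_t\). We estimate the intermediate flows \(F_{t\rightarrow 0}\), \(F_{t\rightarrow 1}\) and fusion map M by feeding the input frames and t as an additional channel into the IFNet. We can get the reconstructed image \(\widehat{I}_t\) using the following formulation:

\[\widehat{I}_t = M \odot \widehat{I}_{t\leftarrow 0} + (1 - M) \odot \widehat{I}_{t\leftarrow 1},\]

\[\widehat{I}_{t\leftarrow 0} = \overleftarrow{\mathcal {W}}(I_0, F_{t\rightarrow 0}), \quad \widehat{I}_{t\leftarrow 1} = \overleftarrow{\mathcal {W}}(I_1, F_{t\rightarrow 1}),\]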

where \(\overleftarrow{\mathcal {W}}\) is the image backward warping, \(\odot \) is an element-wise multiplier, and M is the fusion map \((0 \le M \le 1)\). Following previous methods [22, 41], we use another encoder-decoder CNN named RefineNet to refine the high-frequency areas of \(\widehat{I}_t\) and reduce artifacts of the student model. Its computational cost is similar to that of the IFNet. The RefineNet finally produces a reconstruction residual \(\Delta ~(-1\le \Delta \le 1)\), yielding a refined reconstructed image \(\widehat{I}_t + \Delta \). The detailed architecture of RefineNet is in the Appendix.
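The warping-and-fusion step described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `backward_warp` and `fuse`, the (H, W, 2) flow layout in pixel units, the convention that an output pixel samples the source at its own position plus the flow, and nearest-neighbor sampling (practical pipelines usually sample bilinearly) are all choices made for this sketch.

```python
import numpy as np

def backward_warp(img, flow):
    """Backward-warp img (H, W, C) with flow (H, W, 2) given in pixels.

    Output pixel (y, x) samples img at (y + flow_y, x + flow_x).
    Nearest-neighbor sampling with border clamping is an assumption here.
    """
    h, w = img.shape[:2]
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    src_x = np.clip(np.rint(xs + flow[..., 0]).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(ys + flow[..., 1]).astype(int), 0, h - 1)
    return img[src_y, src_x]

def fuse(i0, i1, flow_t0, flow_t1, mask, residual=None):
    """Blend the two warped frames with a fusion map in [0, 1].

    mask has shape (H, W); residual, if given, plays the role of the
    RefineNet output and is simply added to the blended frame.
    """
    warped0 = backward_warp(i0, flow_t0)
    warped1 = backward_warp(i1, flow_t1)
    m = mask[..., None]
    out = m * warped0 + (1.0 - m) * warped1
    if residual is not None:
        out = out + residual
    return out
```

With zero flows and a constant mask of 0.25, `fuse` reduces to a fixed blend of the two inputs weighted 0.25 and 0.75, which is a quick sanity check for the mask convention.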

3.2 Intermediate Flow Estimation

Some previous VFI methods reverse and refine bi-directional flows [3, 22, 28, 60] as depicted in Fig. 3. The flow reversal process is usually cumbersome due to the difficulty of handling the changes of object positions. Intuitively, the previous flow reversal method hopes to perform spatial interpolation on the optical flow field, which is not trivial because of the “object shift” problem. The role of our IFNet is to directly and efficiently predict \(F_{t\rightarrow 0}, F_{t\rightarrow 1}\) and fusion mask M given two consecutive input frames \(I_0, I_1\) and timestep t. When \(t=0\) or \(t=1\), IFNet is similar to the classical optical flow models.

Fig. 4. Left: The IFNet is composed of several stacked IFBlocks operating at different resolutions. Right: In an IFBlock, we first backward warp the two input frames based on the current approximated flow \(F^{i-1}\). Then the input frames \(I_0, I_1\), warped frames \(\widehat{I}_{t\leftarrow 0}, \widehat{I}_{t\leftarrow 1}\), the previous results \(F^{i-1}, M^{i-1}\) and timestep t are fed into the next IFBlock to approximate the residual of flow and mask. The privileged information \(I_t^{GT}\) is only provided to the teacher.

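To handle the large motion encountered in intermediate flow estimation, we employ a coarse-to-fine strategy with gradually increasing resolution, as illustrated in Fig. 4. Specifically, we first compute a rough prediction of the flow at low resolution, which is believed to capture large motions more easily, then iteratively refine the flow fields with gradually increasing resolution. Following this design, our IFNet has a stacked hourglass structure, where a flow field is iteratively refined via successive IFBlocks (writing \(\widehat{I}^{i-1}\) for the frames warped by \(F^{i-1}\)):

\[\begin{bmatrix} F^{i} \\ M^{i} \end{bmatrix} = \begin{bmatrix} F^{i-1} \\ M^{i-1} \end{bmatrix} + \text {IFB}^{i}\left( \begin{bmatrix} F^{i-1} \\ M^{i-1} \end{bmatrix}, t, \widehat{I}^{i-1}\right) ,\]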

where \(F^{i-1}\) and \(M^{i-1}\) denote the current estimation of the intermediate flows and fusion map from the \((i-1)^{th}\) IFBlock, and \(\text {IFB}^{i}\) represents the \(i^{th}\) IFBlock. We use a total of 3 IFBlocks, and each has a resolution parameter, \((K^0, K^1, K^2)=(4,2,1)\). During inference time, the final estimation is \(F^n\) and \(M^n(n=2)\). Each IFBlock has a feed-forward structure consisting of several convolutional layers and an up-sampling operator. Except for the layer that outputs the optical flow residuals and the fusion map, we use PReLU [16] as the activation function. We avoid the cost volume [15] operator because it is computationally expensive and usually ties the starting point of optical flow to the input image, so it is not directly transferable to intermediate flow estimation.
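The coarse-to-fine residual update can be sketched as a short loop. This is a hedged illustration, not the authors' network: `run_ifnet`, `downsample` and `upsample` are hypothetical names, each block is an arbitrary callable standing in for a learned IFBlock, the warped frames that the paper also feeds to each block are omitted for brevity, and the rescaling of flow values by the resolution parameter assumes flows are expressed in pixels of the current resolution.

```python
import numpy as np

def downsample(x, k):
    """Subsample a (H, W, C) array by an integer factor k (H, W divisible by k)."""
    return x[::k, ::k]

def upsample(x, k):
    """Nearest-neighbor upsampling by an integer factor k."""
    return x.repeat(k, axis=0).repeat(k, axis=1)

def run_ifnet(i0, i1, t, blocks, scales=(4, 2, 1)):
    """Iteratively refine flow and mask with one block per scale.

    Each block maps a low-resolution input tensor to a pair
    (flow_residual, mask_residual) at that same low resolution.
    """
    h, w = i0.shape[:2]
    flow = np.zeros((h, w, 4))   # F_{t->0} and F_{t->1}, two channels each
    mask = np.zeros((h, w, 1))   # fusion map before any squashing to [0, 1]
    t_map = np.full((h, w, 1), float(t))
    history = []
    for block, k in zip(blocks, scales):
        # Flow values are divided by k so they are in low-resolution pixels.
        x = np.concatenate([i0, i1, t_map, flow / k, mask], axis=-1)
        d_flow, d_mask = block(downsample(x, k))
        # Residuals are upsampled; flow is scaled back to full-resolution pixels.
        flow = flow + upsample(d_flow, k) * k
        mask = mask + upsample(d_mask, k)
        history.append((flow, mask))
    return flow, mask, history
```

The `history` list keeps every intermediate prediction, which is convenient when a loss is applied to all stages rather than only the last one.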

Fig. 5. Results of DVF [30] (Vimeo90K). After feeding the edge map of intermediate frames (privileged information) into the model, the estimated flows can be significantly improved, resulting in better reconstruction on the validation set.

We compare the runtime of the SOTA optical flow models [19, 53, 55] and IFNet in Table 1. Current flow-based VFI methods [3, 22, 41] usually need to run their flow models twice and then process the bi-directional flows. Therefore, the intermediate flow estimation in RIFE runs at a faster speed. Although these optical flow models can estimate inter-frame motion accurately, they are not suitable for direct migration to VFI tasks.

3.3 Privileged Distillation for Intermediate Flow

We use an experiment to show that directly approximating the intermediate flows is challenging without access to the intermediate frame. We train a DVF [30] model to estimate intermediate flow on the Vimeo90K [61] dataset. As a comparison, we add an additional input channel to the DVF model, containing the edge map [12] of intermediate frames (denoted as “Privileged DVF”). Figure 5 shows that the quantitative result of Privileged DVF is surprisingly high, while the flows estimated by DVF are blurry. Similar conclusions are also demonstrated in deferred rendering, showing that VFI will be simpler with some intermediate information [6]. This demonstrates that estimating optical flow between two images is easier for the model than estimating intermediate flow. This inspires us to design a privileged model to teach the original model.

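We design a privileged distillation loss for IFNet. We stack an additional IFBlock (teacher model \(\text {IFB}^{Tea}\), \(K^{Tea} = 1\)) that refines the results of IFNet referring to the target frame \(I^{GT}_t\):

\[\begin{bmatrix} F^{Tea} \\ M^{Tea} \end{bmatrix} = \begin{bmatrix} F^{n} \\ M^{n} \end{bmatrix} + \text {IFB}^{Tea}\left( \begin{bmatrix} F^{n} \\ M^{n} \end{bmatrix}, t, \widehat{I}^{n}, I_t^{GT}\right) .\]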

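With access to \(I^{GT}_t\) as privileged information, the teacher model produces more accurate flows. We define the distillation loss \(\mathcal {L}_{dis}\) as follows:

\[\mathcal {L}_{dis} = \sum _{i \in \{0, 1\}} \left\| F_{t\rightarrow i} - F^{Tea}_{t\rightarrow i} \right\| _2.\]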

We apply the distillation loss over the full sequence of predictions generated by the iterative updating process in the student model. The gradient of this loss is not backpropagated to the teacher model. The teacher block is discarded after the training phase, so it incurs no extra cost at inference. The distillation makes training more stable and convergence faster.
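The way the distillation loss is accumulated over every student prediction can be sketched as follows. This is only an illustration under assumptions: `distillation_loss` is a hypothetical name, the per-pixel L2 distance and the mean over pixels are choices made for this sketch, and flows are laid out as (H, W, 4) with channels 0-1 for \(F_{t\rightarrow 0}\) and 2-3 for \(F_{t\rightarrow 1}\). In an autograd framework the teacher flow would additionally be detached so that no gradient reaches the teacher through this term; plain NumPy has no gradients, so the teacher is simply a constant here.

```python
import numpy as np

def distillation_loss(student_flows, teacher_flow):
    """Sum a flow-matching penalty over all student predictions.

    student_flows: list of (H, W, 4) arrays, one per IFBlock output.
    teacher_flow:  (H, W, 4) array, treated as a fixed target.
    """
    teacher = np.asarray(teacher_flow, dtype=np.float64)
    total = 0.0
    for flow in student_flows:
        diff = np.asarray(flow, dtype=np.float64) - teacher
        for i in (0, 1):  # the two intermediate flows t->0 and t->1
            d = diff[..., 2 * i:2 * i + 2]
            # Per-pixel Euclidean distance, averaged over the image (assumption).
            total += np.sqrt((d ** 2).sum(axis=-1)).mean()
    return total
```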

3.4 Implementation Details

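Supervisions. Our training loss \(\mathcal {L}_{total}\) is a linear combination of the reconstruction losses \(\mathcal {L}_{rec}, \mathcal {L}^{Tea}_{rec}\) and privileged distillation loss \(\mathcal {L}_{dis}\):

\[\mathcal {L}_{total} = \mathcal {L}_{rec} + \mathcal {L}^{Tea}_{rec} + \lambda _{d} \mathcal {L}_{dis},\]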

where we set \(\lambda _{d} = 0.01\) to balance the scale of losses.

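The reconstruction loss \(\mathcal {L}_{rec}\) models the reconstruction quality of the intermediate frame. The reconstruction loss has the formulation of:

\[\mathcal {L}_{rec} = d(\widehat{I}_t, I_t^{GT}), \quad \mathcal {L}^{Tea}_{rec} = d(\widehat{I}^{Tea}_t, I_t^{GT}),\]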

where d is often a pixel-wise loss. Following previous work [40, 41], we use \(L_1\) loss between two Laplacian pyramid representations of the reconstructed image and ground truth (denoted as \(L_{Lap}\), the pyramidal level is 5).
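A Laplacian-pyramid \(L_1\) loss of this kind can be sketched in a few lines. The sketch below is a simplification under assumptions rather than the loss used in the paper: it uses 2x2 average pooling as the low-pass filter instead of a Gaussian kernel, keeps the final low-resolution image as an extra pyramid layer, averages the absolute differences per layer, and requires the image height and width to be divisible by \(2^{levels}\). The function names are hypothetical.

```python
import numpy as np

def avg_pool2(x):
    """Halve the resolution of a (H, W, C) array by 2x2 average pooling."""
    h, w = x.shape[:2]
    return x.reshape(h // 2, 2, w // 2, 2, -1).mean(axis=(1, 3))

def upsample2(x):
    """Double the resolution by nearest-neighbor repetition."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

def laplacian_pyramid(img, levels=5):
    """Return `levels` band-pass layers followed by the low-pass residual."""
    pyramid, current = [], img
    for _ in range(levels):
        low = avg_pool2(current)
        pyramid.append(current - upsample2(low))  # detail lost by pooling
        current = low
    pyramid.append(current)
    return pyramid

def lap_l1_loss(pred, target, levels=5):
    """Sum of mean absolute differences between corresponding pyramid layers."""
    p = laplacian_pyramid(pred, levels)
    q = laplacian_pyramid(target, levels)
    return sum(np.abs(a - b).mean() for a, b in zip(p, q))
```

A constant brightness offset between prediction and target only affects the low-pass layer in this construction, so the band-pass layers isolate differences in structure and detail.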

Training Dataset. We use the Vimeo90K dataset [61] to train RIFE. This dataset has 51,312 triplets for training, where each triplet contains three consecutive video frames with a resolution of \(448\times 256\). We randomly augment the training data using horizontal and vertical flipping, temporal order reversing, and rotating by 90\(^{\circ }\).
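The listed augmentations can be sketched for a single triplet as follows. The function name `augment_triplet` and the probability of 0.5 for each augmentation are assumptions of this sketch; the text does not state the sampling probabilities. The same spatial transform is applied to all three frames so that they stay aligned, and temporal reversal swaps the two input frames while the middle frame remains the target.

```python
import numpy as np

def augment_triplet(i0, it, i1, rng):
    """Randomly augment a triplet of (H, W, C) frames consistently."""
    frames = [i0, it, i1]
    if rng.random() < 0.5:   # horizontal flip
        frames = [f[:, ::-1] for f in frames]
    if rng.random() < 0.5:   # vertical flip
        frames = [f[::-1] for f in frames]
    if rng.random() < 0.5:   # temporal order reversal
        frames = frames[::-1]
    if rng.random() < 0.5:   # rotation by 90 degrees in the image plane
        frames = [np.rot90(f) for f in frames]
    return tuple(np.ascontiguousarray(f) for f in frames)
```

A call such as `augment_triplet(i0, it, i1, np.random.default_rng(0))` returns three arrays of identical shape, which is either the original shape or its transpose in the spatial axes.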

Training Strategy. We train RIFE on the Vimeo90K training set and fix \(t=0.5\). RIFE is optimized by AdamW [32] with weight decay \(10^{-4}\) on \(224\times 224\) patches. Our training uses a batch size of 64. We gradually reduce the learning rate from \(10^{-4}\) to \(10^{-5}\) using cosine annealing during the whole training process. We train RIFE on 8 TITAN X (Pascal) GPUs for 300 epochs in 10 h.
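The learning-rate schedule can be written down directly. This is a minimal sketch assuming a per-step cosine decay with no warm-up phase; the function name `cosine_lr` and the step-level granularity are assumptions, while the end points \(10^{-4}\) and \(10^{-5}\) come from the text.

```python
import math

def cosine_lr(step, total_steps, lr_max=1e-4, lr_min=1e-5):
    """Cosine-annealed learning rate going from lr_max to lr_min."""
    progress = min(max(step / total_steps, 0.0), 1.0)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))
```

Halfway through training the schedule sits at the midpoint of the two rates, and it flattens out near both ends.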

We use the Vimeo90K-Septuplet [61] dataset to extend RIFE to support arbitrary-timestep frame interpolation [9, 24]. This dataset has 91,701 sequences with a resolution of \(448\times 256\), each of which contains 7 consecutive frames. For each training sample, we randomly select 3 frames \((I_{n_0}, I_{n_1}, I_{n_2})\) and calculate the target timestep \(t = (n_1 - n_0)/(n_2 - n_0)\), where \(0\le n_0< n_1< n_2 < 7\). We then write this t into RIFE’s temporal encoding, so the model learns to interpolate at arbitrary timesteps. We keep the other training settings unchanged and denote the model trained on Vimeo90K-Septuplet as RIFE\(_m\).
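The sampling of frame indices and the resulting target timestep can be sketched with the standard library. The helper names `timestep` and `sample_timestep` are hypothetical, and uniform sampling of the three indices is an assumption; the formula for t is the one given in the text.

```python
import random

def timestep(n0, n1, n2):
    """Relative position of frame n1 between frames n0 and n2."""
    return (n1 - n0) / (n2 - n0)

def sample_timestep(num_frames=7, rng=random):
    """Pick three distinct frame indices n0 < n1 < n2 and the target t."""
    n0, n1, n2 = sorted(rng.sample(range(num_frames), 3))
    return (n0, n1, n2), timestep(n0, n1, n2)
```

For example, choosing frames 0, 1 and 4 gives t = 0.25, with frames 0 and 4 as inputs and frame 1 as the target.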

4 Experiments

We first introduce the benchmarks for evaluation. Then we provide variants of our models with different computational costs. We compare these models with representative SOTA methods. In addition, we show the capability of generating arbitrary-timestep frames and other applications using RIFE. An ablation study is carried out to analyze our design. Finally, we discuss some limitations of RIFE.

4.1 Benchmarks and Evaluation Metrics

We train our models on the Vimeo90K training dataset and directly test them on the following benchmarks.

Vimeo90K. There are 3,782 triplets in the Vimeo90K testing set [61] with resolution of \(448 \times 256\). This dataset is widely evaluated in recent VFI methods.

UCF101. The UCF101 dataset [52] contains videos with various human actions. There are 379 triplets with a resolution of \(256 \times 256\).

HD. Bao et al. [4] collect 11 videos for evaluation. The HD benchmark consists of four 1080p, three 720p and four \(1280 \times 544\) videos. Following the author of this benchmark, we use the first 100 frames of each video for evaluation.

X4K-1000FPS. A recently released high frame rate 4K dataset [50] containing 15 scenes for testing. We follow the evaluation of [44].

We measure the peak signal-to-noise ratio (PSNR), structural similarity (SSIM), and interpolation error (IE) for quantitative evaluation. All the methods are tested on a TITAN X (Pascal) GPU. To report the runtime, we test all models for processing a pair of \(640\times 480\) images using the same device. Disagreements with some of the published results are explained in the Appendix.
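For reference, PSNR can be computed as below. This is the standard definition, written as a small sketch; the function name `psnr` and the default peak value of 1.0 (images scaled to [0, 1]) are assumptions, and benchmark-specific details such as color space or rounding to 8-bit values are not covered.

```python
import numpy as np

def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_val ** 2 / mse)
```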

4.2 Comparisons with Previous Methods

We compare RIFE with previous VFI models [3, 4, 8,9,10, 13, 27, 41, 43, 44, 61]. These models are officially released except SoftSplat [41]. A recent unofficial reproduction [48] reports that SoftSplat [41] is slower than ABME [44], and we cannot verify it with the available materials. In addition, we train the DVF [30] model and SuperSlomo [22] using our training pipeline on the Vimeo90K dataset because the released models of these methods are trained on earlier datasets.

Interpolating Arbitrary-Timestep Frame. Arbitrary-timestep VFI is important in frame-rate conversion. We apply RIFE\(_m\) to interpolate multiple intermediate frames at different timesteps \(t \in (0, 1)\), as shown in Fig. 6. RIFE\(_m\) can successfully handle \(t=0.125~(8\times )\) which is not included in the training data.

To provide a quantitative comparison of multiple frame interpolation, we further extract every fourth frame of videos from the HD benchmark [4] and use them to interpolate other frames. We divide the HD benchmark into three subsets with different resolutions to test these methods. We show the quantitative PSNR between generated frames and frames of the original videos in Table 2. Note that DAIN [3], BMBC [44] and EDSC\(_m\) [8] can generate a frame at an arbitrary timestep. Some other methods can only interpolate the intermediate frame at \(t=0.5\). Thus we use them recursively to produce \(4\times \) results. Specifically, we first apply the single interpolation method once to get the intermediate frame \(\widehat{I}_{0.5}\). Then we feed \(I_0\) and \(\widehat{I}_{0.5}\) to get \(\widehat{I}_{0.25}\) and so on. Furthermore, we test \(8\times \) interpolation on a recently released dataset, X4K-1000FPS [50]. Overall, RIFE\(_m\) is very effective in multiple frame interpolation.
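The recursive use of a midpoint-only interpolator can be sketched generically. Here `interpolate_mid` is a hypothetical callable standing in for any method that only produces the frame at \(t=0.5\), and `interpolate_recursive` is an illustrative name; a recursion depth of 2 corresponds to \(4\times \) interpolation and a depth of 3 to \(8\times \).

```python
def interpolate_recursive(frame0, frame1, interpolate_mid, depth=2):
    """Return the 2**depth - 1 in-between frames in temporal order."""
    if depth == 0:
        return []
    mid = interpolate_mid(frame0, frame1)
    # Recurse on each half; frames interpolated from interpolated frames
    # may accumulate errors, unlike a direct arbitrary-timestep prediction.
    left = interpolate_recursive(frame0, mid, interpolate_mid, depth - 1)
    right = interpolate_recursive(mid, frame1, interpolate_mid, depth - 1)
    return left + [mid] + right
```

Using scalars as stand-in frames and averaging as the interpolator, `interpolate_recursive(0.0, 1.0, lambda a, b: (a + b) / 2)` yields the values at t = 0.25, 0.5 and 0.75.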

Model Scaling. To scale our models for comparison with existing methods, we introduce two modifications: test-time augmentation and resolution multiplying. 1) We flip the input images horizontally and vertically to get augmented test data. We run inference on both the original and the flipped inputs and average the two results (after flipping the augmented result back). This model is denoted as RIFE-2T. 2) We remove the first downsample layer of IFNet and add a downsample layer before its output to match the original pipeline. We also perform this modification on RefineNet. It enlarges the processing resolution of the feature maps and produces a model named RIFE-2R. We combine these two modifications to extend RIFE to RIFE-Large (2T2R).
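The flip-based test-time augmentation can be sketched as a thin wrapper around any two-frame interpolation function. The name `infer_with_flip_tta` and the `model(i0, i1)` call signature are assumptions of this sketch; frames are (H, W, C) arrays, and a single combined horizontal-and-vertical flip is used as the augmented view.

```python
import numpy as np

def infer_with_flip_tta(model, i0, i1):
    """Average the plain prediction with a flip-augmented prediction."""
    plain = model(i0, i1)
    # Flip both inputs along height and width, run the model, flip back.
    flipped = model(i0[::-1, ::-1], i1[::-1, ::-1])
    return 0.5 * (plain + flipped[::-1, ::-1])
```

For a model that is exactly flip-equivariant the wrapper changes nothing; any benefit comes from averaging out position-dependent errors, at the cost of a second forward pass.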

Middle Timestep Interpolation. We report the performance of middle timestep interpolation in Table 3. For ease of comparison, we group the models by running speed. RIFE achieves very high performance compared to other small models. Meanwhile, RIFE needs only about 3 gigabytes of GPU memory to process 1080p videos. We get a larger version of our model (RIFE-Large) by model scaling, which runs about \(4\times \) faster than ABME [44] with comparable performance. We provide a visual comparison of video clips with large motions from the Vimeo90K testing set in Fig. 7, where SepConv [43] and DAIN [3] produce ghosting artifacts, and CAIN [10] causes missing-parts artifacts. Overall, RIFE (with small computation) can produce more reliable results.

4.3 General Temporal Encoding

In the VFI task, our temporal encoding t is used to control the timestep. To show its generalization capability, we demonstrate that we can control this encoding to implement diverse applications. As shown in Fig. 8, if we input a gradient encoding \(t_p\), RIFE\(_m\) will synthesize the two images from dynamic scenes in a “panoramic” view (using a different timestamp for each column). The position relation of the vehicle in \(\widehat{I}_p\) is between \(I_0\) and \(I_1\). In other words, if \(I_0\), \(I_1\) are from a binocular camera, the shooting time of \(I_1\) is later than that of \(I_0\). \(\widehat{I}_p\) is the result of a wider FOV camera scanning in columns. Similarly, this method may potentially eliminate the rolling shutter effect of videos by using a different timestamp for each horizontal row.
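A gradient temporal encoding of this kind is simply a per-pixel timestep map instead of a constant one. The sketch below builds such maps with NumPy; the function names, the linear ramp, and the (H, W, 1) layout are assumptions made for illustration, and how the map is consumed depends on the model's input format.

```python
import numpy as np

def column_gradient_encoding(height, width, t_start=0.0, t_end=1.0):
    """Timestep map that varies linearly from left to right, shape (H, W, 1)."""
    ramp = np.linspace(t_start, t_end, width)
    return np.tile(ramp, (height, 1))[..., None]

def row_gradient_encoding(height, width, t_start=0.0, t_end=1.0):
    """Timestep map that varies linearly from top to bottom, shape (H, W, 1)."""
    ramp = np.linspace(t_start, t_end, height)[:, None]
    return np.tile(ramp, (1, width))[..., None]
```

The column variant corresponds to the “panoramic” use described above, while the row variant matches the per-row timestamps suggested for rolling shutter correction.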

Fig. 7. Qualitative comparison on the Vimeo90K [61] testing set.

4.4 Image Representation Interpolation

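RIFE\(_m\) can interpolate other image representations using the intermediate flows and fusion map approximated from images. For instance, we interpolate the results of MiDaS [46], which is a popular monocular depth model, as shown in Fig. 9. The synthesis formula is simply as follows:

\[\widehat{D}_t = M \odot \overleftarrow{\mathcal {W}}(D_0, F_{t\rightarrow 0}) + (1 - M) \odot \overleftarrow{\mathcal {W}}(D_1, F_{t\rightarrow 1}),\]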

where \(D_0, D_1\) are estimated by MiDaS [46] and F, M are estimated by RIFE\(_m\). RIFE may potentially be used to extend some models and provide visually plausible effects when we ignore z-axis motion of objects.

4.5 Ablation Studies

We design some ablation studies on the intermediate flow estimation, distillation scheme, model design and loss function, shown in Table 4. These experiments use the same hyper-parameter settings and are evaluated on the Vimeo90K [61] and Middlebury [2] benchmarks.

IFNet vs. Flow Reversal. We compare IFNet with previous intermediate flow estimation methods. Specifically, we use RAFT [55] and PWC-Net [53] with officially pre-trained parameters to estimate the bi-directional flows. Then we implement three flow reversal methods, including linear reversal [22], using a hidden convolutional layer with 128 channels, and the flow reversal layer from EQVI [28]. The optical flow models and flow reversal modules are combined to replace the IFNet. Furthermore, we try to use the forward warping [41] operator to bypass flow reversal. These models are jointly fine-tuned with RefineNet. Because these models cannot directly approximate the fusion map, the fusion map is subsequently approximated by RefineNet. As shown in Table 4, RIFE is more efficient and gets better interpolation performance. These flow models can estimate accurate bi-directional optical flow, but the flow reversal has difficulties in dealing with the object shift problem illustrated in Fig. 3.

Ablation on the Distillation Scheme. We observe that removing the distillation framework sometimes makes model training diverge. Furthermore, we show the importance of the distillation design in the following experiments. a1) Remove the privileged teacher block and use the last IFBlock’s results to guide the first two IFBlocks, denoted as “self-consistency”; a2) Use pre-trained RAFT [55] to estimate the intermediate flows based on the ground truth image, denoted as “RAFT-KD”. This guidance is inspired by the pseudo-labels method [26]. However, this implementation relies on the pre-trained optical flow model and greatly increases the training duration (\(3\times \)). We found that a1 and a2 suffer in quality. These experiments demonstrate the importance of optical flow supervision. Some recent work [25, 34] has also echoed the improvement using suitable optical flow distillation.

Ablation on RIFE’s Architecture and Loss Function. To verify the coarse-to-fine strategy of IFNet, we removed the first IFBlock and the first two IFBlocks in two experiments, respectively. We also try some other popular techniques, such as Batch Normalization (BN) [21]. BN does stabilize the training, but degrades final performance and increases inference overhead. We provide a pair of experiments to show \(L_{Lap}\) [40, 41] is quantitatively better than \(\mathcal {L}_1\).

Limitations. Our work may not cover some practical application requirements. Firstly, RIFE focuses on using two input frames and multi-frame input [24, 28, 60] is left to future work. One straightforward approach is to extend IFNet to use more frames as input. Secondly, most experiments are done with SSIM and PSNR as quantitative indexes. If human perception quality is preferred, RIFE can readily be changed to use the perceptually related losses [5, 42]. Thirdly, additional training data may be necessary for extending RIFE to various applications, such as interpolation for depth map and animation videos [51].

5 Conclusion

We develop an efficient and flexible algorithm for VFI, namely RIFE. A separate neural module IFNet directly estimates the intermediate optical flows, supervised by a privileged distillation scheme, where the teacher model can access the ground truth intermediate frames. Experiments confirm RIFE can effectively process videos of different scenes. Furthermore, an extra input with temporal encoding enables RIFE for arbitrary-timestep frame interpolation. The lightweight nature of RIFE makes it much more accessible for downstream tasks.

References

Anil, R., Pereyra, G., Passos, A., Ormandi, R., Dahl, G.E., Hinton, G.E.: Large scale distributed neural network training through online distillation. In: Proceedings of the International Conference on Learning Representations (ICLR) (2018)

Baker, S., Scharstein, D., Lewis, J., Roth, S., Black, M.J., Szeliski, R.: A database and evaluation methodology for optical flow. In: International Journal of Computer Vision (IJCV) (2011)

Bao, W., Lai, W.S., Ma, C., Zhang, X., Gao, Z., Yang, M.H.: Depth-aware video frame interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2019)

Bao, W., Lai, W.S., Zhang, X., Gao, Z., Yang, M.H.: MEMC-Net: motion estimation and motion compensation driven neural network for video interpolation and enhancement. In: IEEE Transactions on Pattern Analysis and Machine Intelligence (IEEE TPAMI) (2018). https://doi.org/10.1109/TPAMI.2019.2941941

Blau, Y., Michaeli, T.: The perception-distortion tradeoff. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

Briedis, K.M., Djelouah, A., Meyer, M., McGonigal, I., Gross, M., Schroers, C.: Neural frame interpolation for rendered content. ACM Trans. Graph. 40(6), 1–13 (2021)

Chen, X., Zhang, Y., Wang, Y., Shu, H., Xu, C., Xu, C.: Optical flow distillation: Towards efficient and stable video style transfer. In: Proceedings of the European Conference on Computer Vision (ECCV) (2020)

Cheng, X., Chen, Z.: Video frame interpolation via deformable separable convolution. In: AAAI Conference on Artificial Intelligence (2020)

Cheng, X., Chen, Z.: Multiple video frame interpolation via enhanced deformable separable convolution. In: IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) (2021). https://doi.org/10.1109/TPAMI.2021.3100714

Choi, M., Kim, H., Han, B., Xu, N., Lee, K.M.: Channel attention is all you need for video frame interpolation. In: AAAI Conference on Artificial Intelligence (2020)

Danier, D., Zhang, F., Bull, D.: Spatio-temporal multi-flow network for video frame interpolation. arXiv preprint arXiv:2111.15483 (2021)

Ding, L., Goshtasby, A.: On the canny edge detector. Pattern Recogn. 34(3), 721–725 (2001)

Ding, T., Liang, L., Zhu, Z., Zharkov, I.: CDFI: compression-driven network design for frame interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2021)

Ding, X., Zhang, X., Ma, N., Han, J., Ding, G., Sun, J.: RepVGG: making VGG-style convnets great again. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2021)

Dosovitskiy, A., et al.: Learning optical flow with convolutional networks. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV) (2015)

He, K., Zhang, X., Ren, S., Sun, J.: Delving deep into rectifiers: surpassing human-level performance on ImageNet classification. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV) (2015)

Hinton, G., Vinyals, O., Dean, J.: Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531 (2015)

Huang, Z., Heng, W., Zhou, S.: Learning to paint with model-based deep reinforcement learning. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV) (2019)

Hui, T.W., Tang, X., Change Loy, C.: LiteFlowNet: a lightweight convolutional neural network for optical flow estimation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

Ilg, E., et al.: Evolution of optical flow estimation with deep networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

Ioffe, S., Szegedy, C.: Batch normalization: accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167 (2015)

Jiang, H., Sun, D., Jampani, V., Yang, M.H., Learned-Miller, E., Kautz, J.: Super SloMo: high quality estimation of multiple intermediate frames for video interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

Jonschkowski, R., Stone, A., Barron, J.T., Gordon, A., Konolige, K., Angelova, A.: What matters in unsupervised optical flow. In: Proceedings of the European Conference on Computer Vision (ECCV) (2020)

Kalluri, T., Pathak, D., Chandraker, M., Tran, D.: FLAVR: flow-agnostic video representations for fast frame interpolation. arXiv preprint arXiv:2012.08512 (2020)

Kong, L., et al.: IFRNet: intermediate feature refine network for efficient frame interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2022)

Lee, D.H., et al.: Pseudo-label: the simple and efficient semi-supervised learning method for deep neural networks. In: Proceedings of the IEEE International Conference on Machine Learning Workshops (ICMLW) (2013)

Lee, H., Kim, T., Chung, T.y., Pak, D., Ban, Y., Lee, S.: AdaCOF: adaptive collaboration of flows for video frame interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2020)

Liu, Y., Xie, L., Siyao, L., Sun, W., Qiao, Y., Dong, C.: Enhanced quadratic video interpolation. In: Proceedings of the European Conference on Computer Vision (ECCV) (2020)

Liu, Y.L., Liao, Y.T., Lin, Y.Y., Chuang, Y.Y.: Deep video frame interpolation using cyclic frame generation. In: Proceedings of the 33rd Conference on Artificial Intelligence (AAAI) (2019)

Liu, Z., Yeh, R.A., Tang, X., Liu, Y., Agarwala, A.: Video frame synthesis using deep voxel flow. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV) (2017)

Lopez-Paz, D., Bottou, L., Schölkopf, B., Vapnik, V.: Unifying distillation and privileged information. In: Proceedings of the International Conference on Learning Representations (ICLR) (2016)

Loshchilov, I., Hutter, F.: Fixing weight decay regularization in Adam. arXiv preprint arXiv:1711.05101 (2017)

Lu, G., Ouyang, W., Xu, D., Zhang, X., Cai, C., Gao, Z.: DVC: an end-to-end deep video compression framework. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2019)

Lu, L., Wu, R., Lin, H., Lu, J., Jia, J.: Video frame interpolation with transformer. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2022)

Luo, K., Wang, C., Liu, S., Fan, H., Wang, J., Sun, J.: UPFlow: upsampling pyramid for unsupervised optical flow learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2021)

Ma, N., Zhang, X., Zheng, H.T., Sun, J.: ShuffleNet v2: practical guidelines for efficient CNN architecture design. In: Proceedings of the European Conference on Computer Vision (ECCV) (2018)

Meister, S., Hur, J., Roth, S.: UnFlow: unsupervised learning of optical flow with a bidirectional census loss. In: AAAI Conference on Artificial Intelligence (2018)

Meyer, S., Wang, O., Zimmer, H., Grosse, M., Sorkine-Hornung, A.: Phase-based frame interpolation for video. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2015)

Mnih, V., et al.: Playing Atari with deep reinforcement learning. arXiv preprint arXiv:1312.5602 (2013)

Niklaus, S., Liu, F.: Context-aware synthesis for video frame interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

Niklaus, S., Liu, F.: SoftMax splatting for video frame interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2020)

Niklaus, S., Mai, L., Liu, F.: Video frame interpolation via adaptive convolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

Niklaus, S., Mai, L., Liu, F.: Video frame interpolation via adaptive separable convolution. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV) (2017)

Park, J., Lee, C., Kim, C.S.: Asymmetric bilateral motion estimation for video frame interpolation. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV) (2021)

Porrello, A., Bergamini, L., Calderara, S.: Robust re-identification by multiple views knowledge distillation. In: Proceedings of the European Conference on Computer Vision (ECCV) (2020)

Ranftl, R., Lasinger, K., Hafner, D., Schindler, K., Koltun, V.: Towards robust monocular depth estimation: mixing datasets for zero-shot cross-dataset transfer. In: IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) (2020)

Ranjan, A., Black, M.J.: Optical flow estimation using a spatial pyramid network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

Reda, F., Kontkanen, J., Tabellion, E., Sun, D., Pantofaru, C., Curless, B.: Frame interpolation for large motion. arXiv (2022)

Reda, F.A., et al.: Unsupervised video interpolation using cycle consistency. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV) (2019)

Sim, H., Oh, J., Kim, M.: XVFI: extreme video frame interpolation. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV) (2021)

Siyao, L., et al.: Deep animation video interpolation in the wild. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2021)

Soomro, K., Zamir, A.R., Shah, M.: UCF101: a dataset of 101 human actions classes from videos in the wild. arXiv preprint arXiv:1212.0402 (2012)

Sun, D., Yang, X., Liu, M.Y., Kautz, J.: PWC-Net: CNNs for optical flow using pyramid, warping, and cost volume. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

Sun, S., Kuang, Z., Sheng, L., Ouyang, W., Zhang, W.: Optical flow guided feature: a fast and robust motion representation for video action recognition. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

Teed, Z., Deng, J.: RAFT: recurrent all-pairs field transforms for optical flow. In: Proceedings of the European Conference on Computer Vision (ECCV) (2020)

Wu, C.Y., Singhal, N., Krahenbuhl, P.: Video compression through image interpolation. In: Proceedings of the European Conference on Computer Vision (ECCV) (2018)

Wu, Y., Wen, Q., Chen, Q.: Optimizing video prediction via video frame interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2022)

Xiang, X., Tian, Y., Zhang, Y., Fu, Y., Allebach, J.P., Xu, C.: Zooming Slow-Mo: fast and accurate one-stage space-time video super-resolution. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2020)

Xu, G., Xu, J., Li, Z., Wang, L., Sun, X., Cheng, M.: Temporal modulation network for controllable space-time video super-resolution. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2021)

Xu, X., Siyao, L., Sun, W., Yin, Q., Yang, M.H.: Quadratic video interpolation. In: Advances in Neural Information Processing Systems (NIPS) (2019)

Xue, T., Chen, B., Wu, J., Wei, D., Freeman, W.T.: Video enhancement with task-oriented flow. In: International Journal of Computer Vision (IJCV) (2019)

Yuan, S., Stenger, B., Kim, T.K.: RGB-based 3D hand pose estimation via privileged learning with depth images. In: Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW) (2019)

Zhao, Z., Wu, Z., Zhuang, Y., Li, B., Jia, J.: Tracking objects as pixel-wise distributions. In: Proceedings of the European Conference on Computer Vision (ECCV) (2022)

Zhou, M., Bai, Y., Zhang, W., Zhao, T., Mei, T.: Responsive listening head generation: a benchmark dataset and baseline. In: Proceedings of the European Conference on Computer Vision (ECCV) (2022)

Zhou, T., Tulsiani, S., Sun, W., Malik, J., Efros, A.A.: View synthesis by appearance flow. In: Proceedings of the European Conference on Computer Vision (ECCV) (2016)

Acknowledgement

This work is supported by the National Key R&D Program of China (2021ZD0109803) and the National Natural Science Foundation of China under Grant Nos. 62136001 and 62088102.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Huang, Z., Zhang, T., Heng, W., Shi, B., Zhou, S. (2022). Real-Time Intermediate Flow Estimation for Video Frame Interpolation. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13674. Springer, Cham. https://doi.org/10.1007/978-3-031-19781-9_36

DOI: https://doi.org/10.1007/978-3-031-19781-9_36

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19780-2

Online ISBN: 978-3-031-19781-9

eBook Packages: Computer Science, Computer Science (R0)