Abstract

The 2020 update of the European Seismic Hazard Model (ESHM20) is the most recent seismic hazard model of the Euro-Mediterranean region. It was built upon unified and homogenized datasets, including earthquake catalogues, active faults and ground motion recordings, and upon state-of-the-art modelling components, i.e. earthquake rate forecasts and regionally variable ground motion characteristic models. ESHM20 replaces the 2013 European Seismic Hazard Model (ESHM13), and it is the first regional model to provide two informative hazard maps for the next update of the European Seismic Design Code (CEN EC8). ESHM20 is also one of the key components of the first publicly available seismic risk model for Europe. This chapter provides a short summary of ESHM20, highlighting its main features and describing some lessons learned during the model's development.

Keywords

- Seismic hazard

- Seismogenic source

- Earthquake rate forecast

- Active faults

- Ground motion characteristic models

- ESHM20

- ESRM20

- European Seismic Hazard Model

- European Seismic Risk Model

1 The 2020 Update of the Seismic Hazard Model for Europe

The 2020 update of the European Seismic Hazard Model (ESHM20 [1]) was completed within the EU-funded project “Seismology and Earthquake Engineering Research Infrastructure Alliance for Europe” (SERA Project, 2017–2020). ESHM20 was finalized in December 2021 and publicly released in April 2022.

ESHM20 is based on the same principles as the previous generation of pan-European seismic hazard models [2, 3]: state-of-the-art procedures are applied consistently to the input datasets across the entire pan-European region, avoiding inconsistencies at national borders.

A seismotectonic probabilistic framework [4] was used to combine historical evidence of past earthquakes with geological data, each of which captures distinct aspects of how earthquakes occur in a given seismotectonic setting. The resulting seismogenic source model includes fully harmonized, cross-border seismogenic area sources, as well as a hybrid model that incorporates active faults and background smoothed seismicity.

Furthermore, a novel ground motion model was developed to capture source and attenuation properties at the regional scale. This model follows a regional backbone approach [5] to forecast the ground shaking caused by earthquakes from shallow crustal, subduction interface, in-slab, deep and volcanic sources [6, 7].

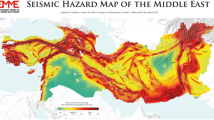

A complex computational pathway handles the inherent uncertainties through logic trees and generates full sets of hazard results (i.e., hazard curves, hazard maps and uniform hazard spectra). Figure 1 illustrates the spatial distribution of ground shaking for the Euro-Mediterranean region, expressed as peak ground acceleration (PGA) on reference rock conditions for a 10% probability of exceedance in 50 years (i.e., a mean return period of 475 years). This is just one example of the multiple sets of results, all of which are available online on the web platform (Footnote 1) of the European Facilities for Earthquake Hazard and Risk (EFEHR, [8]). ESHM20 replaces the 2013 European Seismic Hazard Model (ESHM13, [3]), and it is the first regional model to provide two informative hazard maps for the next update of the European Seismic Design Code (CEN EC8). ESHM20 is also one of the key components of the first openly available seismic risk model for Europe (ESRM20, [9]).
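The correspondence between a 10% probability of exceedance in 50 years and a mean return period of 475 years follows from the usual assumption that exceedances form a Poisson process, so that P = 1 − exp(−t/T). A minimal Python sketch of this conversion (the function names are illustrative only):

```python
import math

def return_period(p_exceed: float, t_years: float) -> float:
    """Mean return period (years) implied by exceedance probability p_exceed
    over an exposure time t_years, assuming Poissonian exceedances."""
    annual_rate = -math.log(1.0 - p_exceed) / t_years
    return 1.0 / annual_rate

def exceedance_probability(return_period_years: float, t_years: float) -> float:
    """Probability of at least one exceedance in t_years (inverse relation)."""
    return 1.0 - math.exp(-t_years / return_period_years)

print(round(return_period(0.10, 50.0)))  # 475
```

The exact value is about 474.6 years, which is conventionally rounded to 475.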

This chapter provides a short summary of ESHM20, highlighting some of its features and describing key lessons learned where applicable. The model components are briefly summarized in sequential order, with an emphasis on key milestones and achievements. We conclude with a summary of the outcomes, focusing on the results and associated uncertainties.

2 Unified Hazard Input: Compilation, Curation and Harmonization

The quality and availability of earthquake data and information are undoubtedly vital components of any probabilistic seismic hazard model. The main datasets supporting the development of ESHM20 are the unified earthquake catalogue, which combines historical [10] and instrumental earthquake catalogues; active fault datasets; and ground motion recordings [11].

In the development cycle of regional seismic hazard models, the collection of datasets should be synchronized with the development of the main model components, such as earthquake sources and ground motion models. This is reasonable in concept, but many difficulties arise when all the ingredients are put together. First, the compilation, curation and harmonization of any earthquake-related dataset is highly sensitive to the quality of the raw data and/or metadata. Second, the density of earthquake data and information is not uniform across Europe: some regions experience more earthquakes than others, resulting in longer historical earthquake records or more ground shaking recordings. Furthermore, geological information about active faults is also incomplete across Europe. Given these constraints, the compilation of the input datasets and the development of the hazard model components are often carried out in parallel, resulting in long development periods.

Finally, the main input datasets (earthquake catalogues, active faults, ground motion recordings, flat files, site models, etc.) should be maintained and updated on a regular basis. In the next few sections, we will discuss a few lessons learned during the data compilation and curation of the main input datasets.

2.1 Historical Earthquake Catalogues

Historical earthquake data are essential to define the long-term characteristics of seismicity, such as seismic activity rates and maximum magnitude, to identify the locations of potential active faults, and to recognize possible seismicity clusters. EPICA (European PreInstrumental earthquake CAtalogue, [10]) is the 1000–1899 earthquake catalogue used to develop ESHM20.

This compilation incorporates the most recent knowledge and data about historical seismicity, included in the European Archive of Historical Earthquake Data – AHEAD [12, 13]. EPICA complies with ESHM20’s requirement that earthquake data, information, and parameters be consistent across countries. This goal was achieved by reassessing, with homogeneous procedures across Europe, the earthquake parameters from raw macroseismic intensity data selected from AHEAD.

EPICA is the new version of SHEEC 1000–1899 [14] and contains 5703 earthquakes in the period 1000–1899 CE with a maximum reported intensity of at least 5 or a moment magnitude Mw of at least 4.0. EPICA has 1035 more earthquakes than SHEEC; these are mostly based on data sources published in the last few years, which also provide new data for half of the earthquakes already included in SHEEC 1000–1899. The revision of the input datasets and the lowering of the intensity/magnitude threshold also added 339 entries, while 49 earthquakes were assessed as fake or duplicate events. Overall, the number of earthquakes increased from 2447 to 3622.

As with the SHEEC catalogue, EPICA relies on AHEAD's knowledge of European historical seismicity, which collects and integrates data from national and local sources of historical earthquake data and from the latest scientific literature. Because it depends on national efforts, this knowledge is uneven across Europe [15], where historical earthquake research has always had differing objectives and timelines. Macroseismic data are available for all historical earthquakes in France and Italy, and for most of those in Spain, the United Kingdom, Switzerland and Greece, but are scarce or nonexistent in Northern and Eastern Europe. In many of these regions, historical earthquake parameters rely on decades-old parametric catalogues [14]. This affects the uniformity and reliability of earthquake parameters at the continental scale. Harmonizing and validating intensity data and deriving parameters from them is a slow process, which should be continuous and carried out in “near real time”.

In addition, the methods for deriving source parameters from intensity data need to be improved and should be standardized at the European scale [16] by means of a common effort. Also, the high uncertainty related to the parameters of pre-instrumental earthquakes is probably not completely explored and should be fully incorporated into the modeling of seismic sources.

Finally, extending EPICA to the pre-instrumental period of the twentieth century would improve long-term seismic data harmonization for the next generation of seismic hazard models.

2.2 Instrumental Earthquake Catalogues

The second part of the unified catalogue covers the period from 1900 CE to the end of 2014 CE, which we refer to as the “instrumental” earthquake catalogue (though this is a slight misnomer, as will be seen in due course). This part of the catalogue builds upon the previous European-Mediterranean Earthquake Catalogue (EMEC) published by [17] and modified for input into ESHM13 by [18]. The full EMEC catalogue spans the period from 1000 CE to the end of 2014 CE; however, only the post-1900 period was adopted for integration with the historical earthquake catalogue in ESHM20. EMEC is a compilation that identifies and incorporates data on earthquakes from local and regional sources across Europe and homogenizes them into a common reference in which each event is represented by a single time, location and harmonized magnitude. The process, described in [17], begins with a broad search of seismological bulletins, previously compiled catalogues, special investigations (such as a detailed seismological analysis of a specific event or sequence), and the bulletin of the International Seismological Centre (ISC, Footnote 2). Once the data are compiled into a large database, erroneous measurements are eliminated and non-tectonic events are separated out. A series of spatio-temporal join operations then identifies common events across data sources.
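The spatio-temporal join step can be illustrated with a minimal sketch that pairs events from two bulletins when their origin times and epicentres fall within fixed windows. The 60 s and 50 km windows, the `Event` record and the function names are hypothetical choices for illustration, not the values or code used for EMEC; a production join would also enforce one-to-one matches and handle agency-specific time and location uncertainties.

```python
import math
from dataclasses import dataclass

@dataclass
class Event:
    source: str     # originating bulletin or catalogue
    time_s: float   # origin time in seconds from a common reference epoch
    lat: float      # degrees
    lon: float      # degrees

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance on a spherical Earth (radius 6371 km)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi, dlmb = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2.0 * 6371.0 * math.asin(math.sqrt(a))

def match_events(cat_a, cat_b, max_dt_s=60.0, max_dist_km=50.0):
    """For each event in cat_a, return the index of the closest-in-time event
    in cat_b lying inside both the time and distance windows.
    The window sizes are hypothetical defaults, not EMEC values."""
    pairs = []
    for i, ea in enumerate(cat_a):
        best = None  # (index in cat_b, time difference in seconds)
        for j, eb in enumerate(cat_b):
            dt = abs(ea.time_s - eb.time_s)
            if dt > max_dt_s:
                continue
            if haversine_km(ea.lat, ea.lon, eb.lat, eb.lon) > max_dist_km:
                continue
            if best is None or dt < best[1]:
                best = (j, dt)
        if best is not None:
            pairs.append((i, best[0]))
    return pairs

# Usage: the first two events describe the same earthquake; the others do not match.
cat_a = [Event("A", 0.0, 42.00, 13.00), Event("A", 1000.0, 45.0, 10.0)]
cat_b = [Event("B", 5.0, 42.05, 13.02), Event("B", 5000.0, 45.0, 10.0)]
print(match_events(cat_a, cat_b))  # [(0, 0)]
```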

In the second step, the Euro-Mediterranean region is subdivided into geographic polygons within which specific hierarchies are applied for the selection of the preferred event location. As the source data contains earthquake magnitudes in various scales and by different agencies, hierarchies of magnitude conversion relations are applied to render the magnitude into a common reference scale equal to (or a proxy for) moment magnitude Mw. For the ESHM20 update to EMEC, we maintained both the conversion equations and the general philosophy of prioritizing special studies and local and national bulletins over regional or global bulletins, except where new data sources prompted changes. Extending the period of coverage to 2014 and incorporating new special studies and national compiled catalogues such as the CPTI15 for Italy [19], F-CAT for France [20], and catalogues of Turkey [21], Slovenia [22], and Romania [23] were priorities.

The catalogue now contains more than 55,700 earthquakes with Mw (or proxy) greater than 3.5 across Europe from 1900 to 2014 CE. In many national catalogues, the parameters of early-20th-century events may be constrained by macroseismic data, instrumental data or a mixture of both, transitioning to fully instrumental data by the mid-20th century.

The EMEC process, and especially its hierarchy selection philosophy, aims to align the European catalogue with the national catalogues within national boundaries. As a consequence, the end date of the compiled catalogue often lags the present by many years. Future catalogue compilation efforts will seek to update the catalogue and to operationalize the process more efficiently, incorporating recent events and allowing near real-time compilation. With the expansion of the European Integrated Data Archive (Footnote 3), we expect to compile a wider range of seismological products for many well-recorded earthquakes and to disseminate more information.

2.3 Fault-Based Seismic Source Model

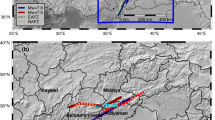

The fault-based seismic source model exploits the 2020 European Fault-Source Model (EFSM20), an update of the European Database of Seismogenic Faults (EDSF13, [24]; Footnote 4), and several other recent compilations of active faults in the Euro-Mediterranean region. The model considers two seismogenic source categories: crustal faults and subduction systems (Fig. 2). The main reasons for this subdivision are the different scaling relationships that govern earthquake ruptures, the different ground motion models, and the need to represent their geometries differently. Crustal faults are meant to capture the seismicity occurring in the brittle crust, either at plate boundaries or within plate interiors. Subduction systems are intended to capture the different types of earthquakes occurring within a subduction zone, including slab interface and intraslab earthquakes. Accretionary wedge and outer-rise seismicity are assumed to be handled by the crustal seismicity models.

For crustal faults, EFSM20 adopts a down-dip planar geometry in which the 3D fault surface is obtained by extrusion from the fault’s upper edge based on the dip and depth values. In addition to the essential geometric parameters (geographic location, depths, strike, dip, length, width), each fault is characterized by behavior parameters: the rake that defines the style of faulting, the slip rate, and the maximum magnitude. The maximum earthquake magnitude is estimated as the magnitude value corresponding to the largest possible rupture that a fault can host based on its dimensions and the magnitude scaling relations by [25, 26]. Based on the EFSM20 set of attributes, we derive a magnitude-frequency distribution (MFD) for each fault source based on the moment conservation principle [27, 28], assuming total seismic efficiency, a proxy of the regional seismicity, and an upper magnitude bound.
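The moment-conservation principle can be sketched as follows: the long-term moment rate of a fault, μ·A·(slip rate), is divided by the mean seismic moment per event of a doubly truncated Gutenberg–Richter MFD to obtain the annual rate of events above the minimum magnitude. This is a minimal illustration, not the ESHM20 implementation; the shear modulus of 3 × 10¹⁰ Pa, the moment–magnitude relation M0 = 10^(1.5·Mw + 9.1) N·m, the fault dimensions and all function names are assumptions, and full seismic efficiency corresponds to `coupling=1.0`.

```python
import math

MU_PA = 3.0e10  # assumed crustal shear modulus (Pa); not given in the text

def moment_nm(mw):
    """Seismic moment (N·m) from moment magnitude (Hanks-Kanamori form)."""
    return 10.0 ** (1.5 * mw + 9.1)

def mean_moment_truncated_gr(b, m_min, m_max, n=2000):
    """Mean seismic moment per event of a doubly truncated GR distribution,
    by midpoint integration of the magnitude probability density."""
    beta = b * math.log(10.0)
    norm = 1.0 - math.exp(-beta * (m_max - m_min))
    dm = (m_max - m_min) / n
    total = 0.0
    for k in range(n):
        m = m_min + (k + 0.5) * dm
        pdf = beta * math.exp(-beta * (m - m_min)) / norm
        total += pdf * moment_nm(m) * dm
    return total

def fault_rate_above_mmin(slip_mm_yr, length_km, width_km, b, m_min, m_max,
                          coupling=1.0):
    """Annual rate of M >= m_min events that releases the fault's long-term
    moment rate mu * A * slip_rate (coupling = 1.0: full seismic efficiency)."""
    area_m2 = (length_km * 1e3) * (width_km * 1e3)
    moment_rate = coupling * MU_PA * area_m2 * slip_mm_yr * 1e-3  # N·m per year
    return moment_rate / mean_moment_truncated_gr(b, m_min, m_max)

def cumulative_rate(m, n_min, b, m_min, m_max):
    """Annual rate of events with magnitude >= m (doubly truncated GR)."""
    if m >= m_max:
        return 0.0
    beta = b * math.log(10.0)
    num = math.exp(-beta * (m - m_min)) - math.exp(-beta * (m_max - m_min))
    return n_min * num / (1.0 - math.exp(-beta * (m_max - m_min)))

# Hypothetical fault: 30 km x 15 km, 1 mm/yr, b = 1.0, Mmin = 5.0.
r_68 = fault_rate_above_mmin(1.0, 30.0, 15.0, 1.0, 5.0, 6.8)
r_72 = fault_rate_above_mmin(1.0, 30.0, 15.0, 1.0, 5.0, 7.2)
print(f"N(M>=5): {r_68:.4f}/yr with Mmax 6.8, {r_72:.4f}/yr with Mmax 7.2")
```

In this sketch the rate scales linearly with slip rate, and raising Mmax lowers the rate of M ≥ 5 events because a larger share of the same moment budget is released by larger earthquakes, consistent with the trade-off discussed in Sect. 3.2.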

For subduction systems, EFSM20 adopts a 3D geometric reconstruction of the slab top surface based on various subsurface datasets (including, but not limited to, seismic reflection and refraction data, tomographic images and seismicity distributions). These reconstructions were truncated at 300 km depth, assuming that deeper subduction earthquakes would not affect the hazard estimates. The seismic interface is assumed to coincide geometrically with the slab top surface within a depth range estimated from data and modelling of the 150 ℃ and 350–450 ℃ isotherms, the seismicity distribution, and the intersection of the slab with the Moho of the upper plate. The behavior of each subduction interface is characterized by a style of faulting fixed as reverse, a convergence rate derived from geodetic observations across the subduction zone, and the maximum magnitude (Mmax). The maximum earthquake magnitude is estimated as the magnitude corresponding to the largest possible rupture that the interface can host, based on its dimensions and the magnitude scaling relations of [29]. Based on the EFSM20 set of attributes, we derive recurrence rate models for each slab interface using the same principle as for crustal faults [27, 28]. Finally, the intraslab geometry is defined by a volume constrained by the slab top surface and the crustal thickness of the lower plate; the intraslab MFD is derived entirely from deep seismicity.

The datasets described here (EFSM20 and the fault-based seismic source model) enrich the EFEHR services portfolio of the EPOS-Seismology community [8]. EFSM20 took advantage of the previous effort in this respect represented by EDSF13, which was likely a one-off occasion to systematically collect and harmonize fault data for earthquake hazard analysis at the European scale.

Today, several regional collections of potential seismogenic faults exist [30,31,32,33], but they still lack systematic updates and extension to regions not yet covered, especially plate interiors. Although the making of EFSM20 largely benefited from these post-EDSF13 advancements, significant effort was required to harmonize data coming from inhomogeneous sources. Examining the active-fault literature of the last decade, it is apparent that research tends to concentrate on the seismic sources of recent earthquakes or on the re-appraisal of older ones, while case studies of “silent” regions lag behind.

As regards the present collection, the most critical parameter is the crustal fault slip rate, which remains a speculative estimate in many instances and has a direct impact on the moment-rate calculations. Also, the seismic efficiency (or long-term coupling) is very critical, but that aspect requires research and development rather than simple data collection. The geometric definition of crustal faults is less critical in a continent-wide hazard model, and the use of more detailed 3D geometries would likely require an exceptionally high computational cost. The simple planar fault model, on the other hand, may cause issues when used at a local scale and in the near-field region of ground motion [34].

3 Advanced Seismogenic Source Models: Consensus and Cross-Border Harmonized

A large-scale seismogenic source model is constructed by combining geological information with observed seismicity within a background tectonic context. The primary goal of such models is to characterize all possible earthquake magnitude-rupture scenarios that can cause ground shaking at a site of interest. In a probabilistic framework, these scenarios describe the size and location of earthquakes, which are combined with their likelihood or frequency of occurrence to forecast how frequently earthquakes will occur. The ESHM20 seismic source model includes two seismogenic source models: an area source model and a hybrid model that combines a smoothed seismicity model (SSM) with shallow fault sources. This is a consolidated approach for modelling epistemic uncertainty in seismic source characterization that has already been used at the national [35,36,37,38,39], regional [3, 40] and global [41] levels. The individual sources of ESHM20 are described by two features, i.e. their geographical (spatial) delineation and their magnitude-frequency distribution. Both features are subject to uncertainties arising from the criteria used to define the earthquake sources, the subjective delineation of source boundaries, information on the prevalent tectonic regimes, the availability of active faults, limited observations, etc. It is difficult to identify which of these factors is most important and which might control the hazard estimates at a given location across Europe, because of the different challenges faced in modelling earthquake occurrence in the various tectonic regions. Even given comparable input datasets and specific assumptions or guidelines for defining earthquake sources, different experts are likely to propose alternative earthquake sources. This is generally the primary reason why regional and national seismic hazard models disagree.

3.1 Seismogenic Source Models: Spatial Variability

To reduce discrepancies between the national and regional models, we built a consensus area source model starting from recent national hazard models (i.e., Italy, Germany, Spain, United Kingdom, Slovenia, Switzerland, Turkey and Romania). We performed a uniform cross-border harmonization based on several layers of information, i.e. tectonic information, geological evidence and earthquake homogeneity [40, 42]. As a result, the consensus area source model reflects the opinions and knowledge of local experts. This should also make it possible to compare it directly with the national models and facilitate sensitivity analyses across national borders.

The second seismogenic source model is a hybrid model that combines active faults with background seismicity spatially distributed using an adaptive kernel. The smoothed seismicity model (SSM) is developed within each tectonic domain by optimizing the adaptive kernel bandwidth, the smoothing parameters, the declustering algorithm and the declustering parameters. Training and validation sets were used to determine the optimal combination of options and parameters: the training set is used to generate various smoothed seismicity models, and the validation set is used to rank them in terms of mutual information gain. It should be noted that in regions where active faults have not been mapped, such as Northern Europe, the spatial pattern of seismicity is constrained only by the area sources and the background smoothed seismicity. The complementary sources include the subduction interfaces, subduction in-slab zones, volcanic sources, and deep seismicity sources in Vrancea (Romania) and Southern Spain.
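An adaptive kernel assigns each epicentre its own smoothing bandwidth, so that dense clusters are smoothed tightly and isolated events broadly. The sketch below uses a common nearest-neighbour choice (bandwidth equal to the distance to the n-th nearest epicentre, with a floor) and an isotropic Gaussian kernel on planar coordinates in km. The kernel shape, `n_neighbour`, `h_min_km` and the function names are assumptions for illustration; in ESHM20 these choices were optimized per tectonic domain as described above.

```python
import math

def adaptive_bandwidths(epicentres, n_neighbour=3, h_min_km=5.0):
    """Per-event bandwidth: distance (km) to the n-th nearest neighbouring
    epicentre, floored at h_min_km. Both parameters are hypothetical here."""
    h = []
    for i, (xi, yi) in enumerate(epicentres):
        d = sorted(math.hypot(xi - xj, yi - yj)
                   for j, (xj, yj) in enumerate(epicentres) if j != i)
        k = min(n_neighbour, len(d)) - 1
        h.append(max(d[k], h_min_km) if d else h_min_km)
    return h

def smoothed_density(epicentres, bandwidths, grid):
    """Sum of isotropic 2-D Gaussian kernels (events per km^2) at each grid
    node. Each kernel integrates to one over the plane."""
    out = []
    for gx, gy in grid:
        s = 0.0
        for (x, y), h in zip(epicentres, bandwidths):
            r2 = (gx - x) ** 2 + (gy - y) ** 2
            s += math.exp(-r2 / (2.0 * h * h)) / (2.0 * math.pi * h * h)
        out.append(s)
    return out

# Usage: a tight cluster of four events plus one isolated event (planar km).
quakes = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (100.0, 100.0)]
bw = adaptive_bandwidths(quakes)
dens = smoothed_density(quakes, bw, [(0.5, 0.5), (50.0, 50.0)])
```

The isolated event receives a bandwidth of roughly 140 km, spreading its contribution over a wide area, while the clustered events are held at the 5 km floor.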

Conceptually, the two seismogenic source models aim at capturing the spatial variability of seismicity, as seen in Fig. 3, which depicts the consensus area source model together with the active faults and the subduction interface sources. The location of an active fault is assumed to provide a spatial proxy for the occurrence of future large earthquakes (M > 6.0); similarly, events with M > 6.5 are assigned to the subduction interfaces. The configuration of the ESHM20 seismogenic source models is an optimal solution for calculating seismic hazard over a large-scale region, and the two models are equally weighted.

3.2 Seismogenic Source Models: Source Characterization

The main source typologies used in ESHM20 are area source zones, point sources, and simple and complex faults, as defined in [41]. We computed earthquake rates for each source in the following steps: 1) select complete events from a declustered earthquake catalogue; 2) compute the seismic activity parameters (i.e., aGR, the seismic productivity, and bGR, the Gutenberg–Richter slope) and their uncertainties; 3) choose a magnitude-frequency distribution; 4) apply statistical tests against observations; and 5) define the epistemic uncertainty model of each source. To characterize the seismic productivity of active faults, the process begins at step 2.
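Step 2 can be illustrated with the maximum-likelihood b-value estimator of Aki (1965), with Utsu's bin-width correction, applied to events above a single completeness magnitude. The text does not state which estimator ESHM20 uses; catalogues with magnitude-dependent completeness periods are commonly handled with a Weichert-type estimator, so this simpler sketch, its synthetic data and function names are assumptions for illustration only.

```python
import math
import random

def aki_utsu_b(mags, mc, dm=0.0):
    """Maximum-likelihood b-value (Aki, 1965) for magnitudes >= mc.
    dm is the magnitude bin width (Utsu's correction; 0 for continuous data)."""
    m = [x for x in mags if x >= mc - 1e-9]  # small tolerance for binned floats
    mean_m = sum(m) / len(m)
    return math.log10(math.e) / (mean_m - (mc - dm / 2.0))

def a_value(mags, mc, b, years):
    """Annual a-value such that log10 N(M >= mc) = a - b * mc."""
    n = sum(1 for x in mags if x >= mc - 1e-9)
    return math.log10(n / years) + b * mc

# Synthetic check: 20,000 continuous magnitudes above mc = 4.0 drawn from an
# exponential distribution equivalent to a Gutenberg-Richter law with b = 1.0.
random.seed(42)
synthetic = [4.0 + random.expovariate(math.log(10.0)) for _ in range(20000)]
b_hat = aki_utsu_b(synthetic, mc=4.0)
a_hat = a_value(synthetic, 4.0, b_hat, years=100.0)
```

With 20,000 events the standard error of the estimated b-value is roughly b/√N ≈ 0.007, so `b_hat` should lie close to the true value of 1.0.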

The magnitude-windowing approach [43] was used to decluster the unified earthquake catalogue of ESHM20. Whether or not to decluster the earthquake catalogue is a source of debate in the seismic hazard community; some argue that aftershocks can cause damage, so removing them may reduce seismic hazard estimates [44,45,46,47,48]. In this regard, we performed a sensitivity analysis and statistical testing of several declustering techniques based on space-time windows, cluster methods and correlation metrics (more details in [1]). Our findings show that both the time-window and cluster methods are suitable, with the former preferred owing to its performance in low-to-moderate seismicity regions.
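Window-based declustering can be sketched as follows: events are visited from largest to smallest magnitude, and each retained event removes the smaller events that follow it within a magnitude-dependent distance and time window. The window parameters of [43] are not given in the text, so this sketch substitutes the widely used Gardner and Knopoff (1974) windows purely as an illustration. Coordinates are planar km, only aftershocks are removed (foreshock handling is omitted), and the function names are hypothetical.

```python
import math

def gk_windows(mag):
    """Gardner & Knopoff (1974) space-time windows in a commonly used
    parameterisation: distance in km and time in days."""
    dist_km = 10.0 ** (0.1238 * mag + 0.983)
    if mag >= 6.5:
        time_days = 10.0 ** (0.032 * mag + 2.7389)
    else:
        time_days = 10.0 ** (0.5409 * mag - 0.547)
    return dist_km, time_days

def decluster(events):
    """events: list of (time_days, x_km, y_km, mag) tuples.
    Returns one flag per event: True = independent (kept), False = removed.
    Events are visited from largest to smallest magnitude; an event removes
    the not-larger events that follow it inside its space-time window."""
    order = sorted(range(len(events)), key=lambda i: -events[i][3])
    keep = [True] * len(events)
    for i in order:
        if not keep[i]:
            continue
        t0, x0, y0, m0 = events[i]
        d_win, t_win = gk_windows(m0)
        for j, (t, x, y, m) in enumerate(events):
            if j == i or not keep[j] or m > m0:
                continue
            if 0.0 <= t - t0 <= t_win and math.hypot(x - x0, y - y0) <= d_win:
                keep[j] = False
    return keep

events = [
    (0.0, 0.0, 0.0, 6.0),       # mainshock
    (10.0, 5.0, 5.0, 4.0),      # inside the M6.0 window -> removed
    (10.0, 500.0, 500.0, 4.5),  # far away -> kept
    (2000.0, 5.0, 5.0, 4.0),    # long after the window closes -> kept
]
flags = decluster(events)
print(flags)  # [True, False, True, True]
```

For an M 6.0 event these windows are about 53 km and 500 days, which is why the last event, 2000 days later at the same location, is retained.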

We also find that declustering has a less significant effect on the estimation of the seismic activity parameters (aGR and bGR) than the completeness magnitude-time intervals do. We therefore developed a quantitative technique for assessing completeness that replaces expert judgment [49] with appropriate statistical testing. We primarily focus on quantifying the temporal course of earthquake frequency, i.e., the TCEF method [50], and combine it with the maximum curvature method [51] to achieve the benefits of both. The novel technique is data-driven and has been applied to large-scale macro-zones similar to those used to compile the EMEC catalogue.
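The maximum curvature component is simple to state: the completeness magnitude is taken as the most populated bin of the non-cumulative frequency-magnitude distribution, optionally shifted by an empirical correction. The minimal sketch below shows only this component; the TCEF analysis [50] and the way ESHM20 combines the two methods are not reproduced, and the function name and example counts are illustrative.

```python
from collections import Counter

def mc_maxc(mags, dm=0.1, correction=0.0):
    """Maximum-curvature estimate of the completeness magnitude: the centre of
    the most populated bin of the non-cumulative magnitude distribution,
    plus an optional empirical correction."""
    bins = Counter(round(round(m / dm) * dm, 6) for m in mags)
    # Highest count wins; ties go to the smaller magnitude.
    mode_bin = max(bins.items(), key=lambda kv: (kv[1], -kv[0]))[0]
    return mode_bin + correction

# Usage: counts rise up to 2.1 and decay above it, so Mc(MAXC) = 2.1.
mags = [2.0] * 5 + [2.1] * 20 + [2.2] * 15 + [2.3] * 10
print(mc_maxc(mags))  # 2.1
```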

Left panel: the seismic productivity of an area source in Southern Spain, described by two alternative MFDs, namely a double-truncated GR distribution (black line) with its uncertainties (dashed blue lines) and the tapered Pareto distribution (green line). Right panel: the completeness intervals.

The activity parameters are computed, considering the uncertainties of the aGR- and bGR parameters, as well as their confidence intervals, for both data and prediction [1]. We bootstrapped one million samples of correlated aGR and bGR values using a multivariate normal distribution based on their values, covariance, and individual standard error. The discrete approximation method of probability distributions [52] is used to derive the lowest, middle, and upper values of the aGR and bGR parameters, representing the 16th, 50th, and 84th percentiles, respectively.
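The sampling step can be sketched with the standard library: correlated (aGR, bGR) pairs are drawn from a bivariate normal distribution via a 2×2 Cholesky factor, and the 16th, 50th and 84th percentiles give the lower, central and upper branch values. The parameter values and correlation are hypothetical, the sample size is reduced from one million to 100,000 for speed, and percentiles are taken from each parameter's marginal distribution; how ESHM20 pairs the a and b branches is described in [1].

```python
import math
import random
import statistics

def sample_ab(a_mean, b_mean, sd_a, sd_b, rho, n=100_000, seed=0):
    """Draw correlated (aGR, bGR) pairs from a bivariate normal distribution,
    built from two independent standard normals via a 2x2 Cholesky factor."""
    rng = random.Random(seed)
    a_s, b_s = [], []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        a_s.append(a_mean + sd_a * z1)
        b_s.append(b_mean + sd_b * (rho * z1 + math.sqrt(1.0 - rho ** 2) * z2))
    return a_s, b_s

def three_branches(samples):
    """Lower, central and upper branch values: 16th, 50th, 84th percentiles."""
    q = statistics.quantiles(samples, n=100)  # q[k - 1] is the k-th percentile
    return q[15], q[49], q[83]

# Hypothetical source: a = 3.5 +/- 0.2, b = 1.0 +/- 0.05, correlation 0.9.
a_s, b_s = sample_ab(3.5, 1.0, 0.2, 0.05, 0.9)
a_lo, a_mid, a_hi = three_branches(a_s)
b_lo, b_mid, b_hi = three_branches(b_s)
```

For a normal distribution the 16th and 84th percentiles lie almost exactly one standard deviation from the median, so the branch spread here is close to ±0.05 for bGR.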

A double-truncated magnitude-frequency distribution (MFD) is used to characterize the annual occurrence rates between the lower and upper magnitude bounds. In addition, we consider a tapered Pareto distribution [53], which decays faster in the proximity of the maximum magnitude than a double-truncated GR distribution. In low-to-moderate seismicity regions, double-truncated exponential recurrence rates may, in some cases, overestimate the observed rates for magnitudes 5 to 6. Figure 4 compares the two recurrence distributions for an area source zone in Spain: they overlap in the low-to-moderate magnitude bins, but the tapered Pareto distribution shows a faster decay of cumulative rates for Mw > 5.8. The corner magnitude of the tapered Pareto distribution allows a new model of Mmax to be defined as the magnitude corresponding to a relevant threshold annual recurrence rate, i.e., 10⁻⁴. Thus, the Mmax of the Pareto distribution is an alternative model to the Mmax of the double-truncated distribution, which is assigned from historical seismicity as in ESHM13 (see Fig. 5).
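The two recurrence models can be compared with a short sketch. The doubly truncated GR cumulative rate is written in magnitude, while the tapered Pareto distribution is written in seismic moment (in Kagan's form), with Pareto exponent 2b/3 so that its untapered part matches the GR slope, and an exponential taper controlled by the corner moment. The Mmax of the tapered model is then read off where the cumulative rate first drops to an annual rate of 10⁻⁴. The moment-magnitude relation, the example zone parameters and all function names are assumptions for illustration, not ESHM20 values.

```python
import math

def moment_nm(mw):
    """Seismic moment (N·m) from moment magnitude."""
    return 10.0 ** (1.5 * mw + 9.1)

def rate_truncated_gr(m, n_min, b, m_min, m_max):
    """Cumulative annual rate N(>= m) for a doubly truncated GR distribution."""
    if m >= m_max:
        return 0.0
    beta = b * math.log(10.0)
    num = math.exp(-beta * (m - m_min)) - math.exp(-beta * (m_max - m_min))
    return n_min * num / (1.0 - math.exp(-beta * (m_max - m_min)))

def rate_tapered_pareto(m, n_min, b, m_min, m_corner):
    """Cumulative annual rate N(>= m) for a tapered Pareto distribution in
    seismic moment (Kagan-type), with Pareto exponent 2b/3."""
    beta = 2.0 * b / 3.0
    m0, mt, mcm = moment_nm(m), moment_nm(m_min), moment_nm(m_corner)
    return n_min * (mt / m0) ** beta * math.exp((mt - m0) / mcm)

def mmax_at_rate(n_min, b, m_min, m_corner, threshold=1e-4, step=0.01):
    """Smallest magnitude (on a step grid) at which the tapered-Pareto
    cumulative rate falls to the threshold annual rate or below."""
    m = m_min
    while rate_tapered_pareto(m, n_min, b, m_min, m_corner) > threshold:
        m += step
    return m

# Hypothetical zone: 1 event/yr with M >= 4.0, b = 1.0, corner M 5.8, Mmax 7.0.
args = (1.0, 1.0, 4.0)
print(rate_truncated_gr(6.5, *args, 7.0), rate_tapered_pareto(6.5, *args, 5.8))
m_max_pareto = mmax_at_rate(*args, 5.8)
```

As in Fig. 4, the two models nearly coincide at low magnitudes but diverge strongly above the corner magnitude; for these hypothetical parameters the tapered model reaches the 10⁻⁴ threshold at about M 6.2.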

For active faults, the geological slip-rates are assumed to span multiple seismic cycles and are used to calculate their seismic productivity. The active faults’ MFD is a double-truncation exponential distribution with a regionally estimated bGR-value. The Mmax of each fault source is obtained using empirical magnitude–geometry scaling laws [26].

The shape of the MFD is highly dependent on slip rate, fault geometry and maximum magnitude (Mmax). For a constant slip rate, increasing Mmax reduces the occurrence rates of low-to-moderate magnitude events: because large earthquakes account for the vast majority of the total seismic moment rate, a higher Mmax requires fewer smaller earthquakes [27]. The fault area, which constrains Mmax, therefore also influences fault productivity, with larger fault areas producing less seismic activity owing to seismic moment conservation. Since the effects of fault area and Mmax are interconnected, only the Mmax effect is retained in the fault characterization. Unlike ESHM13, in which only the epistemic uncertainty in Mmax was incorporated at the individual source level, ESHM20's seismic source characterization combines two sets of parameters per source type: for the area sources, bGR-values and Mmax in two alternative MFDs; for each active fault, aGR-values and Mmax. This is aligned with the present-day state of practice in seismogenic source modelling, which might bring regional models closer to site-specific studies.

Another important aspect of the ESHM20 building process is the integration of statistical testing and sanity checks as a part of the model building process, as the scientific consensus was not always possible [38]. Thus, the objective of the testing phase is to quantify the consistency between the input observations and the forecast model and, by doing so, to eliminate any potential bias and errors introduced by incomplete datasets and/or the use of a uniform framework for the entire model. All phases of the model building, from earthquake catalog analysis to final earthquake rate model, underwent statistical testing, summarized as:

-

A series of likelihood tests to support the selection of the declustering technique, using a target earthquake catalogue (i.e., Mw > 4.5 from 2007 to 2014). These statistical tests are based on estimates of the average-rate-corrected information gain per earthquake [54].

-

Consistency testing of the earthquake rate forecasts yielded by the individual sources, i.e., the area sources and the hybrid faults plus background seismicity. We compared the two activity rate forecasts using the target earthquake catalogue (i.e., Mw > 4.5 from 2007 to 2014) on the basis of (i) their forecasted magnitude distributions and (ii) their forecasted spatial distributions.

-

Statistical scoring of the individual source model in geographical space.

-

Retrospective consistency tests comparing earthquake forecasts to observed seismicity over the entire catalogue’s duration. It is important to note that these consistency tests are retrospective because the input earthquake catalogue was used to characterize the source model.
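The text does not spell out the average-rate-corrected information gain per earthquake [54]. One widely used form, from Rhoades et al. (2011), averages the log ratio of the two forecasts' rates in the bins where target events occurred and subtracts the difference in total forecast numbers per observed event. The sketch below assumes this is the measure meant; the bin layout and example forecasts are hypothetical.

```python
import math

def info_gain_per_eq(rates_a, rates_b, observed_bins):
    """Average-rate-corrected information gain per earthquake of forecast A
    relative to forecast B, in the form used by Rhoades et al. (2011).

    rates_a, rates_b: expected number of target events in each space-magnitude
    bin over the test period; observed_bins: bin index of each target event."""
    n_obs = len(observed_bins)
    log_ratio = sum(math.log(rates_a[k] / rates_b[k]) for k in observed_bins)
    # Penalise a forecast that simply predicts more events overall.
    correction = (sum(rates_a) - sum(rates_b)) / n_obs
    return log_ratio / n_obs - correction

# Usage: two bins, both target events fall in bin 1.
forecast_a = [0.5, 1.5]   # concentrates rate where events occurred
forecast_b = [1.0, 1.0]   # spatially uniform reference forecast
gain = info_gain_per_eq(forecast_a, forecast_b, [1, 1])
```

Both forecasts predict two events in total, so the correction term vanishes and the gain reduces to ln 1.5 ≈ 0.41 in favour of forecast A.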

Some of the challenges faced in the testing phase relate to data independence and limited datasets (i.e., small-to-moderate magnitude events and short observation times). Nonetheless, we believe that statistical testing and sanity checks should become increasingly common in the development of future hazard models.

4 Ground Motion Characteristic Models: Next Generation of Regional Models

4.1 Capturing Epistemic Uncertainty in Ground Motion Models

In a probabilistic framework, the ground motion model (GMM) provides the critical link between the earthquake rupture and the ground shaking expected to occur at the site of interest. Generally, a GMM incorporates the regional features of the source, path and site conditions, as well as their aleatory and epistemic uncertainty. The aleatory uncertainty is represented by the standard deviation (\(\sigma \)) of the GMM and typically comprises several components representing the event-to-event variability (\(\delta {B}_{e}\)), the site-to-site variability (\(\delta S2{S}_{s}\)) and the remaining within-event variability (\(\delta {W}_{es}\)), which have mean values of zero and standard deviations of \(\tau \), \({\phi }_{S2S}\) and \({\phi }_{0}\), respectively. Aleatory variability is explicitly accounted for within the seismic hazard integral.
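In the usual mixed-effects notation, the residual decomposition described above can be written for earthquake e recorded at site s as follows, where \(\mu_{\ln Y}\) is the median prediction of the GMM and \(\tau\) denotes the standard deviation of the event-to-event term. The expression for the total standard deviation assumes the three terms are independent. This is a generic sketch; the exact functional form of the ESHM20 backbone model is given in the cited references.

```latex
\ln Y_{es} = \mu_{\ln Y}(M_e, R_{es}, \ldots) + \delta B_e + \delta S2S_s + \delta W_{es},
\qquad \delta B_e \sim \mathcal{N}(0, \tau^2),\;
\delta S2S_s \sim \mathcal{N}(0, \phi_{S2S}^2),\;
\delta W_{es} \sim \mathcal{N}(0, \phi_0^2),
\qquad \sigma = \sqrt{\tau^2 + \phi_{S2S}^2 + \phi_0^2}
```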

The vast majority of existing national and regional scale seismic hazard models, including ESHM13, have generally captured epistemic uncertainty (or model-to-model uncertainty) by selecting several ground motion models from the available literature. These models have been identified as appropriate alternatives for modelling the ground motion in the target region in question and are thus included as alternative branches within a logic tree.

In recent years, the multi-model approach has been enhanced by using quantitative testing metrics based on the fit of the candidate models to observed data in order to identify better fitting GMMs and weight them accordingly (e.g., [55, 56]).

While the multi-model approach to characterizing epistemic uncertainty is both practical and widely understood by many hazard model end-users, it has theoretical limitations when it comes to the objective of characterizing the “real” epistemic uncertainty in the ground motion predictions [57]. Chief among these are the tendency to select (or give high weighting to) multiple models derived from similar datasets in a region, as well as problems of inconsistency between the source, path, and site parameterizations of the models.

These problems may manifest in several ways, from “pinching” in the spread of expected ground motions for certain magnitudes and distances, to the inherent contradiction that epistemic uncertainty is then modeled as being wider in regions where more data and models are available, and thus narrower in regions with less data, largely by virtue of the availability of models.

Given these limitations, recent developments in GMM characterization have moved toward an alternative approach called the scaled backbone approach, in which the best suited candidate GMM is identified and the epistemic uncertainty in the expected ground motion (and sometimes the aleatory variability) is captured by applying adjustments to the backbone GMM that better reflect the underlying uncertainty in the seismological characteristics of a region.

4.2 A Regionalized Scaled-Backbone Approach

The characterization of the GMM and its epistemic uncertainty in ESHM20 represents a radical departure from its predecessor: it embraces the scaled backbone concept and calibrates the adjustments according to the data available for the region in question. The means to explore region-to-region variability in GMMs in Europe arises from the development of the Engineering Strong Motion (ESM) database and its corresponding harmonized parametric flatfile [11]. The ESM flatfile contains more than 20,000 processed ground motion records from earthquakes in the European and Mediterranean region, almost an order of magnitude more data than was available at the time of the ESHM13 development. From these data we fit our backbone GMM using robust mixed effects regression [58]. Moreover, the large number of records means that we can quantify region-to-region variability in the model by introducing new random effects in addition to those listed above.
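
Why sparsely recorded regions yield weakly constrained random effects can be illustrated with the simplest mixed-effects setting. The sketch below is not the robust regression of [58]; it assumes a one-way random-effects model with known variances, in which each region's effect is the best linear unbiased predictor (BLUP) of its mean residual, shrunk toward zero when few records are available. The function name and the values of `tau` and `phi` are hypothetical.

```python
import numpy as np


def region_random_effects(residuals, regions, tau, phi):
    """BLUPs of per-region random effects in a one-way random-effects model.

    Simplified, hypothetical model: r_ij = delta_j + e_ij, with
    delta_j ~ N(0, tau**2) and e_ij ~ N(0, phi**2); tau and phi are assumed known.
    """
    residuals = np.asarray(residuals, dtype=float)
    regions = np.asarray(regions)
    effects = {}
    for region in np.unique(regions):
        r = residuals[regions == region]
        # Shrinkage factor: close to 1 for well-sampled regions, small for sparse ones
        shrink = tau**2 / (tau**2 + phi**2 / r.size)
        effects[str(region)] = shrink * r.mean()
    return effects


# Two hypothetical regions with the same mean residual but different sample sizes
res = [0.3] * 100 + [0.3] * 2
reg = ["A"] * 100 + ["B"] * 2
print(region_random_effects(res, reg, tau=0.3, phi=0.6))
```

With τ = 0.3 and φ = 0.6, two records with a mean residual of 0.3 give a regional effect of only 0.1, whereas 100 such records give about 0.29: the data, not the analyst, decide how far a region departs from the backbone.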

Specifically, the backbone GMM introduces two region-specific random effects: source-region to source-region variability (\(\delta L2{L}_{l}\)) and residual attenuation region variability (\({\delta }_{c3}\)). These depend on the region in which the earthquake occurs (for \(\delta L2{L}_{l}\)) or in which the station is located (for \({\delta }_{c3}\)), according to prior regionalizations based on the tectonic model and the regionalization of [1]. These additional residual terms allow us to identify regions of Europe in which earthquakes are typically more or less energetic than average, and regions where the attenuation may be faster or slower. As random effects, \(\delta L2{L}_{l}\) and \({\delta }_{c3}\) are normally distributed with means of 0 and standard deviations of \({\tau }_{L2L}\) and \({\tau }_{c3}\), respectively. This allows us to quantify the distributions of these regional properties and represent them in the GMM logic tree by mapping the respective scaling factors and weights to a discrete approximation of the Gaussian distributions [52].
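
Mapping a Gaussian random effect onto a small set of weighted logic-tree branches can be sketched with Gauss-Hermite quadrature, one of the discrete approximations in the family discussed in [52]. This is a minimal sketch assuming a three-point scheme by default; the number of branches and the `tau` value actually used in ESHM20 are not stated here, and the function name is hypothetical.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def gaussian_branches(tau, n_points=3):
    """Discretize N(0, tau**2) into n_points logic-tree branches.

    Uses Gauss-Hermite quadrature for the standard normal (probabilists'
    Hermite polynomials); the resulting branch values and weights reproduce
    the moments of the Gaussian up to order 2 * n_points - 1.
    """
    nodes, raw_weights = hermegauss(n_points)  # weight function exp(-x**2 / 2)
    weights = raw_weights / raw_weights.sum()  # normalize: branch weights sum to 1
    return tau * nodes, weights


# Hypothetical tau_L2L value (natural-log units), for illustration only
values, weights = gaussian_branches(tau=0.4)
for value, weight in zip(values, weights):
    print(f"delta_L2L = {value:+.3f}, weight = {weight:.3f}")
```

With three points the branches sit at 0 and ±√3·τ with weights 2/3 and 1/6 each, which preserves the mean and variance of the regional distribution exactly; more points capture the tails more finely at the cost of more logic-tree branches.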

Full details of this process for shallow crustal seismicity can be found in [6]. The construction of the scaled backbone GMM logic tree for the stable shield area of northeastern Europe is described in [7], while that for the subduction regions in the Mediterranean and the Vrancea deep seismic source is described in [1].

Organizing Uncertainties and Transitioning from Aleatory to Epistemic

The adoption of the regionalized scaled backbone GMM logic tree, and the manner in which it is derived, are intended not only to capture both the aleatory and epistemic uncertainties in ground motion prediction as we currently understand them, but also to put in place a framework through which new data directly refine the calibration of the model and reduce epistemic uncertainty. In ground motion modelling, this process is increasingly described as a move from an ergodic ground motion model (one in which the aleatory variability incorporates all of the region-to-region variability in the underlying dataset) to a partially or fully non-ergodic model, in which systematic source-, path- and site-specific effects in a region are identified and extracted from the aleatory variability. This leaves a reduced event-to-event and within-event variability (\({\tau }_{e0}\) and \({\varepsilon }_{0}\)) that should reflect the irreducible variability at the site.

How and why has this transition been adopted in ESHM20? The expansion of data in ESM includes new records from many new regions and new recording stations that are not present in previous databases. This means that the region-to-region variability within the data has increased, which results in a larger aleatory variability within a truly ergodic framework (i.e., \({\sigma }^{2}={\tau }_{L2L}^{2}+{\tau }_{e0}^{2}+{\tau }_{c3}^{2}+{\phi }_{S2S}^{2}+{\varepsilon }_{0}^{2}\)). The prior regionalizations, which are based on geology and tectonics, provide both a rationale and a practical basis for identifying systematic differences in the ground motion from one region to another (i.e., the \(\delta L2{L}_{l}\) and \({\delta }_{c3}\) terms).

Theoretically, these terms can be fixed for each specific region in question, leaving the aleatory variability as \({\sigma }^{2}={\tau }_{e0}^{2}+{\phi }_{S2S}^{2}+{\varepsilon }_{0}^{2}\), or even simply \({\sigma }^{2}={\tau }_{e0}^{2}+{\varepsilon }_{0}^{2}\) if \(\delta S2{S}_{s}\) is known for a specific site. The epistemic uncertainty would then be limited to the confidence limits of the \(\delta L2{L}_{l}\), \({\delta }_{c3}\) and \(\delta S2{S}_{s}\) values for the region and site in question. In practice, however, we refrain from making this leap in its entirety, as there are many regions for which the region-specific random effects are not constrained by a large number of repeated observations, and differences from one region to another may reflect the dominance of a specific event or station. This is where the framework for the transition emerges: new data from a given region can feed into this process, allowing better constraint of the region-specific parameters and, with time, a narrowing of the confidence limits. What we envisage in the future, therefore, is a continual process of updating and recalibrating both the fixed- and random-effects coefficients of the model as new data emerge, rather than a complete revision or re-selection of models.
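
The variance bookkeeping behind the move from an ergodic to a partially non-ergodic σ can be checked numerically. The sketch below assumes the components are independent, so their variances add, and uses hypothetical standard deviations; removing a component from the sum mirrors treating that regional or site term as known, while its confidence limits (not modelled here) would move into the epistemic logic tree.

```python
import math


def total_sigma(components, fixed=()):
    """Total standard deviation from independent variance components.

    Components listed in `fixed` are treated as known for the region or
    site in question and are therefore removed from the aleatory variability.
    """
    return math.sqrt(sum(s**2 for name, s in components.items() if name not in fixed))


# Hypothetical standard deviations (natural-log units), for illustration only
components = {"tau_L2L": 0.3, "tau_e0": 0.4, "tau_c3": 0.2, "phi_S2S": 0.5, "eps_0": 0.5}

ergodic = total_sigma(components)
regional = total_sigma(components, fixed={"tau_L2L", "tau_c3"})
site_specific = total_sigma(components, fixed={"tau_L2L", "tau_c3", "phi_S2S"})
print(f"{ergodic:.3f} {regional:.3f} {site_specific:.3f}")  # 0.889 0.812 0.640
```

Because the variances add in quadrature, fixing the two regional terms changes σ only modestly in this example, whereas a known site term removes a larger share; the actual proportions depend on the fitted components.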

4.3 Site Amplification

For the corresponding ESRM20, we must characterize the surface ground motion across Europe, taking local site amplification into account. While more than 1,100 stations with three or more recordings are used for the regression of the [58] model, only 419 have a measured Vs30; for the remaining stations, local soil conditions must be inferred from proxies such as topographic slope. The regression analysis undertaken by [58] provides more than 1,000 \(\delta S2{S}_{s}\) values but does not include a site amplification term. To develop a site amplification term for ESHM20 and ESRM20, we use the \(\delta S2{S}_{s}\) values to fit two piecewise linear models: one using only the subset of stations with measured Vs30, and one using the full set of stations with proxy Vs30. The remaining site-to-site variability (\({\phi }_{S2S}\)) is larger when an inferred Vs30 value is assumed, as in the ESRM20 site conditions.
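
Fitting a piecewise linear site term to station terms can be sketched with ordinary least squares. The functional form here is an assumption for illustration only: linear in ln(Vs30) below a hinge at the 800 m/s reference and flat above it; the text does not specify the ESHM20/ESRM20 form, and the function and parameter names are hypothetical. The standard deviation of what remains after the fit plays the role of \({\phi }_{S2S}\).

```python
import numpy as np


def fit_site_term(vs30, ds2s, vs_ref=800.0):
    """Least-squares fit of dS2S = a + b * min(ln(vs30 / vs_ref), 0).

    Hypothetical piecewise linear form: linear in ln(Vs30) below vs_ref and
    constant above it. Returns the intercept a, the slope b and the standard
    deviation of the remaining residuals (an estimate of phi_S2S).
    """
    x = np.minimum(np.log(np.asarray(vs30, dtype=float) / vs_ref), 0.0)
    y = np.asarray(ds2s, dtype=float)
    design = np.column_stack([np.ones_like(x), x])
    (a, b), *_ = np.linalg.lstsq(design, y, rcond=None)
    phi_s2s = np.std(y - (a + b * x), ddof=2)  # two fitted parameters
    return a, b, phi_s2s


# Usage idea: fit once with measured-Vs30 stations and once with all stations
# (proxy Vs30), then compare the two phi_S2S estimates.
```

Running the fit separately on the measured and proxy subsets makes the larger scatter of proxy-based Vs30 show up directly as a larger residual standard deviation.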

The reference ground motion condition required for the ESHM20 is Eurocode 8 class A rock (Vs30 = 800 m/s), and we assume that this Vs30 value corresponds to a measured site condition. Because the hazard serves as input to a design code, we recognize that the code's own amplification factors already contain an appropriate measure of conservatism, so adopting the higher \({\phi }_{S2S}\) value of the inferred proxy would risk double counting uncertainty. In the implementation of the GMMs in the OpenQuake Engine [41], however, the user can choose either to run the model with the ergodic site term that includes \({\phi }_{S2S}\), or to run a partially non-ergodic PSHA that excludes it.

For regional-scale PSHA, where site-specific amplification is unknown, the ergodic version should be used; this flexibility nonetheless allows users to run partially non-ergodic PSHA at the site-specific level, where the amplification function for the site in question can be input explicitly.

4.4 The Future Role for Ground Motion Simulations

In addition to the expansion of observed strong motion records, the last decade has seen rapid developments in strong motion simulation, including improvements to the efficiency and accessibility of state-of-the-art simulation software. Though the ESM database saw an order of magnitude increase in ground motion records, the vast majority of these were for small-to-moderate magnitudes at intermediate to long distances. In Europe, the number of near-field recordings from large earthquakes has not increased nearly as much, and most new records for these scenarios come from a few particularly well-recorded large events. Our understanding of ground motions at short distances from large ruptures, and of their variability, therefore remains limited. This paucity of near-field records from large magnitudes affected the initial large-magnitude scaling term of the [58] GMM, which was found to predict a degree of oversaturation at large magnitudes (i.e., decreasing amplitude with increasing magnitude) that was subsequently corrected in [59]. Ground motion simulations will have a critical role to play in the coming years in helping to constrain and calibrate the GMMs themselves, so that they better capture large-magnitude near-source effects such as hanging wall amplification and rupture directivity.

5 ESHM20: Insights into Result Uncertainties

In developing the ESHM20, we focused on incorporating the spatial and temporal variability of earthquake rate forecasts, as well as the epistemic uncertainty and aleatory variability associated with ground shaking models. To this end, a logic tree was used to explicitly manage alternative models and parameters, each weighted according to the probability, or degree of confidence, that it represents the best interpretation of a model component or of the methodology used to create it.

To make the computation of the regional hazard feasible, the logic tree configuration had to be optimized, and this was done in conjunction with the parameterization of the individual sources. Two important aspects of the model implementation were the use of correlated activity parameters for area sources (i.e., multiple aGR, bGR and Mmax values) and for fault sources (multiple aGR and Mmax values).
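
Correlated activity parameters mean that each branch carries a jointly estimated (aGR, bGR, Mmax) set, rather than every combination of independently varied values; three correlated triplets give three branches instead of 3 × 3 × 3 = 27. A minimal sketch, assuming an upper-truncated Gutenberg-Richter cumulative rate; the branch values and weights below are hypothetical.

```python
import numpy as np


def truncated_gr_rate(m, a, b, mmax):
    """Annual rate of events with magnitude >= m for a Gutenberg-Richter
    relation log10 N = a - b * m truncated at an upper magnitude mmax."""
    m = np.asarray(m, dtype=float)
    rate = 10.0 ** (a - b * m) - 10.0 ** (a - b * mmax)
    return np.where(m < mmax, rate, 0.0)


# Hypothetical correlated (aGR, bGR, Mmax, weight) branches for one area source:
# each triplet is kept together rather than combined with every other value.
branches = [(3.2, 1.05, 6.5, 0.3), (3.0, 1.00, 6.8, 0.4), (2.8, 0.95, 7.1, 0.3)]
for a, b, mmax, w in branches:
    rate_m5 = float(truncated_gr_rate(5.0, a, b, mmax))
    print(f"weight {w}: rate(M >= 5) = {rate_m5:.4f} per year")
```

Keeping aGR and bGR together also preserves their typical trade-off (a higher b-value paired with a higher a-value), which independent sampling would break.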

Another critical aspect was the use of a single depth and faulting style for each source, corresponding to the weighted mean value. Although uncorrelated uncertainties are routinely used in source models for site-specific hazard analyses, they cannot practically be used at the national or regional level. The alternative, generating random source models from uncorrelated logic tree branches, results in thousands of input models that are also difficult to manage.

We pragmatically decided to run the calculations with correlated earthquake rate models, to optimize the computational demand, and to randomly sample the full logic tree. Alternatively, a collapsed weighted rate model might reduce the influence of the extreme upper and lower values, which can have a significant impact on regional seismic risk calculations [9]. The OpenQuake Engine [41] is well suited to handling complex calculations such as those for ESHM20; its libraries are open source, with full support from the GEM hazard experts and IT developers. These features, combined with its standardized and backward-compatible formats, make the OpenQuake Engine a versatile tool for computing seismic hazard and risk worldwide.
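
Random sampling of a full logic tree can be sketched as drawing, for each realization, one branch from every branch set in proportion to its weight; each sampled realization then receives an equal weight of 1/n. This is not the OpenQuake Engine's implementation, and the branch sets, labels and weights below are hypothetical.

```python
import random
from collections import Counter

# Hypothetical logic tree: independent branch sets, each a list of (label, weight)
LOGIC_TREE = {
    "source_model": [("area", 0.5), ("fault_background", 0.5)],
    "rates": [("lower", 0.3), ("central", 0.4), ("upper", 0.3)],
    "gmm_regional": [("lower", 1 / 6), ("central", 2 / 3), ("upper", 1 / 6)],
}


def sample_realizations(tree, n, seed=None):
    """Draw n end-branch paths, choosing one branch per set in proportion to its weight.

    Each sampled path is then given equal weight 1 / n.
    """
    rng = random.Random(seed)
    paths = []
    for _ in range(n):
        path = {}
        for name, branches in tree.items():
            labels = [label for label, _w in branches]
            weights = [w for _label, w in branches]
            path[name] = rng.choices(labels, weights=weights, k=1)[0]
        paths.append(path)
    return paths


samples = sample_realizations(LOGIC_TREE, n=1000, seed=42)
print(Counter(p["rates"] for p in samples))
```

This toy tree has only 18 end branches and could be enumerated directly; sampling pays off when the number of end branches grows multiplicatively across many sources and GMM branch sets.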

Spatial variability is an intrinsic property of regional hazard models and their results, so the uncertainty range is expected to vary regionally and to differ between areas of low-to-moderate and high seismicity, although this difference is not known a priori. We investigated the spatial variability of the hazard results by comparing the upper (84th) and lower (16th) quantiles of the PGA hazard map for the 475-year return period given in Fig. 1. The ratio is calculated as \(100\times {\mathrm{log}}_{10}({\mathrm{PGA}}_{84}/{\mathrm{PGA}}_{16})\) [60]. Figure 6 shows high values of this ratio (70–100%, i.e., a factor of roughly 5 to 10 between the two quantiles) in central Europe, the Baltic region, and a few places in Ireland, Scotland, the Netherlands, and Northern Poland. These regions have limited data and low seismicity. Lower values (20–50%) are observed in the remaining regions, with a median value of 40%, corresponding to a factor of about 2.5 between the 16th and 84th quantiles.
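
The uncertainty ratio and its conversion into a multiplicative factor are simple arithmetic, sketched below. As a simplifying assumption, the quantiles are taken directly over hypothetical per-branch PGA values at one site, whereas quantile hazard maps are normally derived from quantile hazard curves; the function names are illustrative.

```python
import math


def weighted_quantile(values, weights, q):
    """Smallest value whose cumulative normalized weight reaches q
    (inverse CDF of the discrete branch distribution)."""
    pairs = sorted(zip(values, weights))
    total = sum(w for _v, w in pairs)
    cumulative = 0.0
    for value, weight in pairs:
        cumulative += weight / total
        if cumulative >= q:
            return value
    return pairs[-1][0]  # guard against rounding when q is close to 1


def spread_percent(pga_84, pga_16):
    """Uncertainty ratio 100 * log10(PGA_84 / PGA_16), in percent."""
    return 100.0 * math.log10(pga_84 / pga_16)


def spread_to_factor(ratio_percent):
    """Invert the ratio into a multiplicative factor between the two quantiles."""
    return 10.0 ** (ratio_percent / 100.0)


# Hypothetical per-branch PGA values (g) at one site, with branch weights
pga = [0.05, 0.08, 0.10, 0.14, 0.22]
w = [0.1, 0.2, 0.4, 0.2, 0.1]
ratio = spread_percent(weighted_quantile(pga, w, 0.84), weighted_quantile(pga, w, 0.16))
print(f"ratio = {ratio:.0f}%, factor = {spread_to_factor(ratio):.2f}")
```

Inverting the reported median of 40% gives \(10^{0.4}\approx 2.5\), and the 70–100% range corresponds to factors of about 5 to 10.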

Did this uncertainty range decrease over time? Yes: in some regions within Switzerland, Hungary, Spain, Portugal, Italy and Turkey we observe a decrease between ESHM13 and ESHM20, and the ratio has decreased slightly in a few regions of Romania, the Balkans, Cyprus and Greece. However, the uncertainty range in regions of low to moderate seismicity remains quite large. The configuration and implementation of the two models might explain these changes. ESHM13, for example, uses correlated and collapsed weighted rates, whereas ESHM20 uses correlated branches with individual branches for seismicity parameters. Likewise, multiple GMPEs were used in ESHM13, whereas ESHM20 uses backbone ground motion models.

When compared with the ESHM13 pattern, the 84th/16th ratio of ESHM20 appears more uniform across the entire region. Without going into detail, we note that such a ratio is numerically unstable and extremely sensitive to the hazard level chosen (here, the 475-year return period). Because the 475-year hazard values are very low in low-seismicity regions, we observed numerical instability in these ratios there. Longer return periods, e.g., 2500 years, are recommended because the ratio is more stable, although the impact of the aleatory variability of the ground motion models becomes significant at longer return periods. One solution is to separate aleatory variability from epistemic uncertainty, which would also help determine how much of the uncertainty is controlled by the epistemic uncertainties of the earthquake rate forecast and of the ground motion models. This topic will, however, be addressed in a separate investigation.

Furthermore, we investigated the regional variation of a pivotal hazard parameter, the hazard curve decay, also known as the k-value. In log-log space, this parameter characterizes the slope of the relationship between a ground motion level and the annual probability of exceeding it (or the corresponding return period).

The k-values presented here are obtained by fitting the hazard curves for PGA and spectral acceleration (SA) at 1 s between return periods of 475 and 5000 years. A constant k-value of 3 is frequently used as a direct proxy for scaling the reference design acceleration to another return period of interest (EC8, 2004). Figure 7 illustrates the results in exponential form (i.e., as the ratio between the 5000-year and 475-year ground motions, which corresponds to approximately \({10}^{1/k}\) because 5000/475 ≈ 10.5), and it is evident that the approximation k = 3 is not applicable everywhere in the region [61]. [62] made similar observations after examining the k-values of ESHM13.
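
The relationship between k, the hazard curve and return-period scaling can be sketched under the usual power-law assumption \(H(a)={k}_{0}{a}^{-k}\), with the annual exceedance probability taken as approximately 1/T. The sketch estimates k from just two points (a secant slope), a hypothetical simplification of fitting the curve between 475 and 5000 years; the function names and PGA values are illustrative.

```python
import math


def k_value(a1, p1, a2, p2):
    """Slope k of a hazard curve in log-log space, assuming H(a) = k0 * a**(-k).

    (a1, p1) and (a2, p2) are two (ground motion, annual exceedance
    probability) points on the same hazard curve.
    """
    return math.log(p1 / p2) / math.log(a2 / a1)


def scale_to_return_period(a_ref, t_ref, t_target, k=3.0):
    """Scale a ground motion between return periods under the same power law:
    a is proportional to T**(1/k), taking p ~ 1/T for small probabilities."""
    return a_ref * (t_target / t_ref) ** (1.0 / k)


# Hypothetical PGA values (g) read from one hazard curve at 475 and 5000 years
k = k_value(0.10, 1 / 475, 0.30, 1 / 5000)
print(f"k = {k:.2f}")
print(f"5000/475 ratio with k = 3: {scale_to_return_period(1.0, 475, 5000):.2f}")
```

A low k means the ground motion grows quickly with return period, so a fixed k = 3 would underestimate long-return-period demand in the low-k regions described below.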

Low values (i.e., k-values below 1.8 and sometimes down to 1) correspond to regions of low to moderate seismicity in northern and western Europe, including France, Belgium, Germany, Portugal and Spain, whereas high k-values are more frequently observed in regions of moderate-to-high seismicity, such as Romania, Italy, Greece and Turkey. An intriguing observation is that the k-value appears to be lower in the vicinity of active faults, which is most apparent for faults located in regions of low to moderate seismicity, such as southern France, the Rhine Graben in Germany, eastern Austria and Catalonia, Spain. Another intriguing finding is that the hazard curve exponents for 1 s spectral acceleration and PGA are broadly similar, albeit slightly lower for the latter. This is consistent with the fact that uniform hazard spectral shapes do not change significantly as return periods increase. According to preliminary research (not shown here), the hazard exponent values show no obvious correlation with Mmax, the bGR value or attenuation clusters, but are primarily controlled by the hazard level and proximity to active faults. These new findings will help define importance factors for seismic design purposes, and will feed discussions on the return period to consider for reference hazard maps (475 years in Europe, or 2475 years as in the United States [63]).

6 Final Remarks

In this chapter we summarized the key findings, milestones and lessons learned during the development of the 2020 update of the European Seismic Hazard Model, which is the new cross-border reference for seismic hazard models and regional hazard estimates. ESHM20 replaces the 2013 European Seismic Hazard Model (ESHM13, [3]). ESHM20 is the first ever regional hazard model to provide two informative hazard maps for the next update of the European Seismic Design Code (CEN EC8, P. Labbe, this volume), and it serves as the foundation for the first uniform, openly available European Seismic Risk Model [9].

The model, built within a probabilistic framework using advanced datasets and cutting-edge methods, is fully documented and has been available online at hazard.efehr.org since December 2021.

For the first time, a regional seismic hazard model was released not only to the scientific community but also to the general public. For this purpose, a comprehensive set of explanatory materials has been developed addressing different target audiences ranging from disaster risk managers to the interested public.

The website of the European Facilities for Earthquake Hazard and Risk (EFEHR) has been redesigned to serve as the main information hub for general information about earthquakes in Europe, for learning more about earthquake hazard and risk, and for exploring interactive maps and data. Much of the information has been translated into several European languages and was presented at a media event in April 2022. In this way, the wealth of information the models offer has not only been made available to the scientific community, but also provides an important legacy for improving earthquake resilience in all domains.

References

Danciu, L., et al.: The 2020 update of the European Seismic Hazard Model - ESHM20: Model Overview. EFEHR Technical Report 001, v1.0.0 (2021) https://doi.org/10.12686/A15

Jiménez, M., Giardini, D., Grünthal, G.: The ESC-SESAME unified hazard model for the European-Mediterranean region. Bollettino di Geofisica Teorica ed Applicata 42, 3–18 (2003)

Woessner, J., et al.: The 2013 European seismic hazard model: key components and results. Bull. Earthq. Eng. 13(12), 3553–3596 (2015). https://doi.org/10.1007/s10518-015-9795-1

Danciu, L., Giardini, D.: Global seismic hazard assessment program - GSHAP legacy. Annals of Geophysics 58(1), S0109 (2015). https://doi.org/10.4401/ag-6734

Douglas, J.: Capturing geographically-varying uncertainty in earthquake ground motion models or what we think we know may change. In: Pitilakis, K. (ed.) ECEE 2018. GGEE, vol. 46, pp. 153–181. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-75741-4_6

Weatherill, G., Kotha, S.R., Cotton, F.: A regionally-adaptable “scaled backbone” ground motion logic tree for shallow seismicity in Europe: application to the 2020 European seismic hazard model. Bull. Earthq. Eng. 18(11), 5087–5117 (2020). https://doi.org/10.1007/s10518-020-00899-9

Weatherill, G., Cotton, F.: A ground motion logic tree for seismic hazard analysis in the stable cratonic region of Europe: regionalisation, model selection and development of a scaled backbone approach. Bull. Earthq. Eng. 18(14), 6119–6148 (2020). https://doi.org/10.1007/s10518-020-00940-x

Haslinger, F., et al.: Coordinated and interoperable seismological data and product services in Europe: the EPOS thematic core service for seismology. Annals of Geophysics 65(2), DM213–DM213 (2022) https://doi.org/10.4401/ag-8767

Crowley, H., et al.: European Seismic Risk Model (ESRM20) (2021) https://doi.org/10.7414/EUC-EFEHR-TR002-ESRM20

Rovida, A., Antonucci, A., Locati, M.: The European Preinstrumental Earthquake Catalogue EPICA, the 1000–1899 catalogue for the European Seismic Hazard Model 2020. Earth System Science Data Discussions 1–30 (2022) https://doi.org/10.5194/essd-2022-103

Lanzano, G., et al.: The pan-European Engineering Strong Motion (ESM) flatfile: compilation criteria and data statistics. Bull. Earthq. Eng. 17(2), 561–582 (2018). https://doi.org/10.1007/s10518-018-0480-z

Albini, P., Locati, M., Rovida, A., Stucchi, M.: European Archive of Historical EArthquake Data (AHEAD) (2013). https://doi.org/10.6092/INGV.IT-AHEAD

Locati, M., Rovida, A., Albini, P., Stucchi, M.: The AHEAD portal: a gateway to European historical earthquake data. Seismol. Res. Lett. 85, 727–734 (2014)

Stucchi, M., et al.: The SHARE European earthquake catalogue (SHEEC) 1000–1899. Journal of Seismology 17(2), 523–544 (2013)

Rovida, A.N., Albini, P., Locati, M., Antonucci, A.: Insights into preinstrumental earthquake data and catalogs in Europe. Seismol. Res. Lett. 91, 2546–2553 (2020). https://doi.org/10.1785/0220200058

Provost, L., Antonucci, A., Rovida, A., Scotti, O.: Comparison between two methodologies for assessing historical earthquake parameters and their impact on seismicity rates in the western alps. Pure Appl. Geophys. 179(2), 569–586 (2022) https://doi.org/10.1007/s00024-021-02943-4

Grünthal, G., Wahlström, R.: The European-Mediterranean Earthquake Catalogue (EMEC) for the last millennium. J. Seismol. 16, 535–570 (2012). https://doi.org/10.1007/s10950-012-9302-y

Grünthal, G., Wahlström, R., Stromeyer, D.: The SHARE European Earthquake Catalogue (SHEEC) for the time period 1900–2006 and its comparison to the European-Mediterranean Earthquake Catalogue (EMEC). J. Seismol. 17(4), 1339–1344 (2013). https://doi.org/10.1007/s10950-013-9379-y

Rovida, A., Locati, M., Camassi, R., Lolli, B., Gasperini, P.: The Italian earthquake catalogue CPTI15. Bull. Earthq. Eng. 18(7), 2953–2984 (2020). https://doi.org/10.1007/s10518-020-00818-y

Manchuel, K., Traversa, P., Baumont, D., Cara, M., Nayman, E., Durouchoux, C.: The French seismic CATalogue (FCAT-17). Bull. Earthq. Eng. 16(6), 2227–2251 (2017). https://doi.org/10.1007/s10518-017-0236-1

Kadirioğlu, F.T., et al.: An improved earthquake catalogue (M ≥ 4.0) for Turkey and its near vicinity (1900–2012). Bull. Earthq. Eng. 16(8), 3317–3338 (2016). https://doi.org/10.1007/s10518-016-0064-8

Živčić, M., Cecić, I., Čarman, M., Jesenko, T., Pahor, J.: The Earthquake Catalogue of Slovenia and the Surrounding Region (2018)

Raluca, D.: Romanian Earthquake Catalogue (ROMPLUS) (2022) https://data.mendeley.com/datasets/tdfb4fgghy/1 https://doi.org/10.17632/TDFB4FGGHY.1

Basili, R., et al.: European Database of Seismogenic Faults (EDSF) (2013) https://edsf13.ingv.it/ https://doi.org/10.6092/INGV.IT-SHARE-EDSF

Leonard, M.: Earthquake fault scaling: self-consistent relating of rupture length, width, average displacement, and moment release. Bull. Seismol. Soc. Am. 100, 1971–1988 (2010). https://doi.org/10.1785/0120090189

Leonard, M.: Self-consistent earthquake fault-scaling relations: update and extension to stable continental strike-slip faults. Bull. Seismol. Soc. Am. 104, 2953–2965 (2014). https://doi.org/10.1785/0120140087

Youngs, R.R., Coppersmith, K.J.: Implications of fault slip rates and earthquake recurrence models to probabilistic seismic hazard estimates. Bull. Seismol. Soc. Am. 75, 939–964 (1985)

Kagan, Y.Y.: Seismic moment distribution revisited: II. Moment conservation principle. Geophys. J. Int. 149(3), 731–754 (2002). https://doi.org/10.1046/j.1365-246X.2002.01671.x

Allen, T.I., Hayes, G.P.: Alternative rupture-scaling relationships for subduction interface and other offshore environments. Bull. Seismol. Soc. Am. 107, 1240–1253 (2017). https://doi.org/10.1785/0120160255

DISS Working Group: Database of Individual Seismogenic Sources (DISS), version 3.3.0: A compilation of potential sources for earthquakes larger than M 5.5 in Italy and surrounding areas (2021) https://doi.org/10.13127/DISS3.3.0

Caputo, R., Pavlides, S.: Greek Database of Seismogenic Sources (GreDaSS): A compilation of potential seismogenic sources (Mw > 5.5) in the Aegean Region http://gredass.unife.it, (2013) https://doi.org/10.15160/UNIFE/GREDASS/0200

Atanackov, J., et al.: Database of active faults in Slovenia: compiling a new active fault database at the junction between the Alps, the Dinarides and the Pannonian Basin tectonic domains. Front. Earth Sci. 9, 604388 (2021). https://doi.org/10.3389/feart.2021.604388

García-Mayordomo, J., et al.: The Quaternary Active Faults Database of Iberia (QAFI v.2.0). J. Iberian Geology 38(1), 285–302 (2012). https://doi.org/10.5209/rev_JIGE.2012.v38.n1.39219

Passone, L., Mai, P.M.: Kinematic earthquake ground-motion simulations on listric normal faults. Bull. Seismol. Soc. Am. 107, 2980–2993 (2017). https://doi.org/10.1785/0120170111

Wiemer, S., et al.: Seismic Hazard Model 2015 for Switzerland (SUIhaz2015) (2016)

Sesetyan, K., et al.: A probabilistic seismic hazard assessment for the Turkish territory—part I: the area source model. Bull. Earthq. Eng. 16(8), 3367–3397 (2018). https://doi.org/10.1007/s10518-016-0005-6

Grünthal, G., Stromeyer, D., Bosse, C., Cotton, F., Bindi, D.: The probabilistic seismic hazard assessment of Germany—version 2016, considering the range of epistemic uncertainties and aleatory variability. Bull Earthquake Eng. 16(10), 4339–4395 (2018) https://doi.org/10.1007/s10518-018-0315-y

Meletti, C., et al.: The new Italian seismic hazard model (MPS19). Annals of Geophysics 64(1), SE112–SE112 (2021) https://doi.org/10.4401/ag-8579

Šket Motnikar, B., et al.: The 2021 seismic hazard model for Slovenia (SHMS21): overview and results. Bull. Earthq. Eng. (2022). https://doi.org/10.1007/s10518-022-01399-8

Danciu, L., et al.: The 2014 earthquake model of the middle east: seismogenic sources. Bull. Earthq. Eng. 16(8), 3465–3496 (2017). https://doi.org/10.1007/s10518-017-0096-8

Pagani, M., et al.: OpenQuake engine: an open hazard (and risk) software for the global earthquake model. Seismol. Res. Lett. 85, 692–702 (2014). https://doi.org/10.1785/0220130087

Pagani, M., et al.: The 2018 version of the Global Earthquake Model: Hazard component. Earthq. Spectra 36, 226–251 (2020). https://doi.org/10.1177/8755293020931866

Grünthal, G.: The up-dated earthquake catalogue for the German Democratic Republic and adjacent areas - statistical data characteristics and conclusions for hazard assessment. In: Presented at the 3rd International Symposium on the Analysis of Seismicity and on Seismic Risk (Prague 1985) (1985)

Beauval, C., Hainzl, S., Scherbaum, F.: Probabilistic seismic hazard estimation in low-seismicity regions considering non-Poissonian seismic occurrence. Geophys. J. Int. 164, 543–550 (2006). https://doi.org/10.1111/j.1365-246X.2006.02863.x

Marzocchi, W., Taroni, M.: Some thoughts on declustering in probabilistic seismic-hazard analysis. Bull. Seismol. Soc. Am. 104, 1838–1845 (2014). https://doi.org/10.1785/0120130300

Taroni, M., Akinci, A.: Good practices in PSHA: declustering, b-value estimation, foreshocks and aftershocks inclusion; a case study in Italy. Geophys. J. Int. 224, 1174–1187 (2021). https://doi.org/10.1093/gji/ggaa462

Field, E.H., Milner, K.R., Luco, N.: The seismic hazard implications of declustering and poisson assumptions inferred from a fully time-dependent model. Bull. Seismol. Soc. Am. 112, 527–537 (2021). https://doi.org/10.1785/0120210027

Iervolino, I., Chioccarelli, E., Giorgio, M.: Aftershocks’ effect on structural design actions in Italy. Bull. Seismol. Soc. Am. 108, 2209–2220 (2018). https://doi.org/10.1785/0120170339

Stepp, J.: Analysis of completeness of the earthquake sample in the Puget Sound area and its effect on statistical estimates of earthquake hazard. In: Proceedings of the 1st International Conference on Microzonation, Seattle (1972)

Nasir, A., Lenhardt, W., Hintersberger, E., Decker, K.: Assessing the completeness of historical and instrumental earthquake data in Austria and the surrounding areas. Presented at the (2013)

Woessner, J., Wiemer, S.: Assessing the quality of earthquake catalogues: estimating the magnitude of completeness and its uncertainty. Bull. Seismol. Soc. Am. 95, 684–698 (2005). https://doi.org/10.1785/0120040007

Miller, A.C., Rice, T.R.: Discrete approximations of probability distributions. Manage. Sci. 29, 352–362 (1983)

Utsu, T.: Representation and analysis of the earthquake size distribution: a historical review and some new approaches. In: Wyss, M., Shimazaki, K., Ito, A. (eds.): Seismicity Patterns, their Statistical Significance and Physical Meaning, pp. 509–535. Birkhäuser, Basel (1999) https://doi.org/10.1007/978-3-0348-8677-2_15

Rhoades, D.A., Schorlemmer, D., Gerstenberger, M.C., Christophersen, A., Zechar, J.D., Imoto, M.: Efficient testing of earthquake forecasting models. Acta Geophys. 59, 728–747 (2011). https://doi.org/10.2478/s11600-011-0013-5

Delavaud, E., et al.: Toward a ground-motion logic tree for probabilistic seismic hazard assessment in Europe. J. Seismol. 16, 451–473 (2012). https://doi.org/10.1007/s10950-012-9281-z

Lanzano, G., et al.: A revised ground-motion prediction model for shallow crustal earthquakes in Italy. Bull. Seismol. Soc. Am. 109, 525–540 (2019). https://doi.org/10.1785/0120180210

Atkinson, G.M., Bommer, J.J., Abrahamson, N.A.: Alternative approaches to modeling epistemic uncertainty in ground motions in probabilistic seismic-hazard analysis. Seismol. Res. Lett. 85, 1141–1144 (2014)

Kotha, S.R., Weatherill, G., Bindi, D., Cotton, F.: A regionally-adaptable ground-motion model for shallow crustal earthquakes in Europe. Bull. Earthq. Eng. 18(9), 4091–4125 (2020). https://doi.org/10.1007/s10518-020-00869-1

Kotha, S.R., Weatherill, G., Bindi, D., Cotton, F.: Near-source magnitude scaling of spectral accelerations: analysis and update of Kotha et al. (2020) model. Bull. Earthq. Eng. 20(3), 1343–1370 (2022). https://doi.org/10.1007/s10518-021-01308-5

Douglas, J., Ulrich, T., Bertil, D., Rey, J.: Comparison of the ranges of uncertainty captured in different seismic-hazard studies. Seismol. Res. Lett. 85, 977–985 (2014). https://doi.org/10.1785/0220140084

Beauval, C., Bard, P.-Y., Danciu, L.: The influence of source- and ground-motion model choices on probabilistic seismic hazard levels at 6 sites in France. Bull. Earthq. Eng. 18(10), 4551–4580 (2020). https://doi.org/10.1007/s10518-020-00879-z

Weatherill, G., Danciu, L., Crowley, H.: Future directions for seismic input in European design codes in the context of the seismic hazard harmonisation in Europe (SHARE) project. In: Vienna conference on earthquake engineering and structural dynamics, pp. 28–30 (2013)

Luco, N., Ellingwood, B.R., Hamburger, R.O., Hooper, J.D., Kimball, J.K., Kircher, C.A.: Risk-targeted versus current seismic design maps for the conterminous United States (2007)


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Danciu, L. et al. (2022). The 2020 European Seismic Hazard Model: Milestones and Lessons Learned. In: Vacareanu, R., Ionescu, C. (eds) Progresses in European Earthquake Engineering and Seismology. ECEES 2022. Springer Proceedings in Earth and Environmental Sciences. Springer, Cham. https://doi.org/10.1007/978-3-031-15104-0_1


DOI: https://doi.org/10.1007/978-3-031-15104-0_1


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-15103-3

Online ISBN: 978-3-031-15104-0

eBook Packages: Earth and Environmental Science; Earth and Environmental Science (R0)