Abstract

We consider a multistage inventory model. At each stage, a company determines order quantities of several products to satisfy a demand. The demand is described by a discrete random process. If the demand on a product is more than inventory level of this product, the company has to make additional ordering by market price. Otherwise, the company has to hold the rest of this product by a known price. The company can use a storage. The capacity of the storage is bounded. We consider two objective functions in this model. The first objective function is the probability that the losses are less or equal to a desirable level. The second one is the quantile of losses. To solve the considered problem we reduce them to mixed integer linear programming problems. This reduction is based on introducing auxiliary variables. We suggest conditions ensuring the equivalence of the original problems and the reduced ones. Also, we consider the problems when the distribution of the random process is unknown. For this case, we prove the convergence of the sample approximation method. Numerical results are discussed.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Stochastic programming

- Multistage problem

- Inventory model

- Sample approximation

- Quantile criterion

- Probabilistic criterion

1 Introduction

Stochastic programming problems are optimization problems with loss function depending on random parameters. In these problems, the objective function is defined as a functional of the random loss function. The theory of stochastic programming is described in [1,2,3]. The most widely used case of the objective function in stochastic programming is the expectation of losses. To take into account risks, the probabilistic and quantile criteria are used [4]. The probabilistic objective function is defined as the probability that the losses are less than or equal to a desirable level. The quantile objective function is the losses that cannot be exceeded with a fixed probability.

To model sequential decisions based on realizations of several random parameters, multistage stochastic programming problems are used. In these problems, the decision of each stage depends on realizations of random parameters on previous stages. For solving multistage problem with expectation criterion, the Bellman Principle can be applied (see, e.g., [2]). It can be shown that the Bellman Principle is not valid for multistage stochastic problems with quantile criterion. For this reason, other methods should be developed for these problems. By using auxiliary variables, these problems can be reduced to mixed integer programming problems [5, 6].

In this paper, a multistage inventory model is considered. The model is based on the model described in [3]. The review on inventory models can be found in [7]. Unlike the model in [3], we consider probabilistic and quantile objective functions. Also, we take into account the capacity of the storage. Based on ideas suggested in [3, 8, 9] for single-stage and two-stage problems, we suggest a method to reduce the considered problems to mixed integer linear programming problems. We solve these problems by using Gurobi solver [10].

In practice, the distribution of random parameters can be unknown. To solve problems with unknown distribution, sample approximation method can be applied [3]. The method was suggested for expectation optimization problems in [11], for problems with probabilistic constraints in [12], and for problems with probabilistic and quantile criteria in [13]. Application of the sample approximation method for multistage problems with expectation criterion is described in [14]. The convergence of approximations of multistage problems with expectation criterion is proved in [15, 16]. In this paper, the convergence of the sample approximation method is proved for the considered problems with discrete distribution of random process.

2 Problem Statement

We consider a company that has to satisfy demand on n types of products. The demand is described by a random process \(X = (X_t)\), \(t = \overline{1, T}\), taking values in \(\mathcal X \subset \mathbb R^n\) for each t. In this paper, we assume that the set \(\mathcal X\) is finite. At each stage t, the company determines order quantities \(u_t\in \mathbb R^n\) of n products. The unit prices of ordering are known and given by a vector c (\(c_i > 0\)). If the demand on the i-th product is more than inventory level of this product, the company has to make additional ordering by market unit price \(b_i > 0\), \(b = (b_1, \ldots , b_n)\). If the demand on the i-th product is less than inventory level of this product, the company has to hold the rest of this product by price \(h_i > 0\), \(h = (h_1, \ldots , h_n)\). The inventory level is equal to

where \([a]_+ \in \mathbb R^n\) is a vector such that \(\left( [a]_+\right) _i = \max \{a_i, 0\}\), \(x_t\) is the realization of the random vector \(X_t\). The initial values of the inventory level \(z_0 \in \mathbb R^n\) is known. Let us define the loss function

We take into account a constraint on the capacity of the storage:

where V is the capacity of the storage, \(v = (v_1, \ldots , v_n)\) is the vector consisting of the volumes of the product units.

The decision vector of each stage is considered as a function of realizations of the random process \(X_t\) on previous stages. Let us denote this function by \(\mathbf{u}_t:\mathcal X^{t-1} \rightarrow \mathbb R^n\), \(t = \overline{2, T}\). Note that the first stage strategy is deterministic because this is selected before the realization of the demand \(X_1\) is known. Let us introduce the notation

Let us introduce the probability function:

where \(\mathbf{P}\) is a probability, \(\varphi \in \mathbb R\) is a fixed value of losses. Notice that the probability function is the probability that the losses are less than or equal to the value \(\varphi \).

The quantile function is defined by

where \(\alpha \in (0, 1)\) is a fixed value of probability. The quantile function shows the minimal value of losses that cannot be exceeded with probability \(\alpha \).

The set of feasible strategies \(\mathbf{U}\) is defined as the set of \(\mathbf{u}\) with non-negative values such that constraints (1) and (2) are satisfied for \(u_t = \mathbf{u}_t(x_{[t-1]})\), \(t=\overline{2, T}\),

Thus, the probability maximization problem

and the quantile minimization problem

are considered.

Let us notice that problems (4) and (5) can be considered for the random process X with arbitrary distribution of the random parameters. In the next section these problems will be rewritten for the discrete distribution.

3 Equivalent Mixed Integer Problems

We suppose that each random vector \(X_t\) has a finite number of realizations belonging to the set \(\mathcal X = \left\{ x^1, \ldots , x^{M}\right\} \). Then we can use the notation \(u_t^{i_1\ldots i_{t-1}} = \mathbf{u}_t(x^{i_1}, \ldots , x^{i_{t-1}})\), \(t=\overline{2,T}\). To simplify the notation, we denote by \(I_t\) the tuple of indices \((i_1,\ldots , i_t)\). Thus,

We use the notation \(u_1^{I_{0}} = u_1\), \(z_0^{I_0} = z_0\). Let us denote by u the tuple consisting of the variable \(u_1\), M variables \(u_2^{i_1}\), \(M^2\) variables \(u_3^{i_1i_2}\),..., \(M^{T-1}\) variables \(u_T^{i_1\ldots i_{T-1}}\). Let U be the set of tuples u. Let us denote by \(p_{I} = p_{i_1\ldots i_T}\) the probability of the realization \(x^{I} = \left( x^{i_1}, \ldots , x^{i_{T}}\right) \) of the random process X, where \(I = I_T\). Also, we introduce variables \(z_t^{I_t} = z_t^{i_1\ldots i_t}\) corresponding to inventory levels for known realizations of the random process \(X_{[t]}\), \(t=\overline{1,T}\).

Let us notice that the objective functions in problems (4) and (5) can be considered as functions of u:

where

This allows us to replace problems (4) and (5) by problems

subject to

Constraints (8) and (9) follow from (1) and (2).

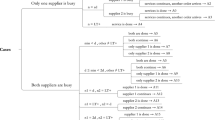

We will reduce problems (6) and (7) to mixed integer programming problems by using a method described in [8] for the discrete distribution. Let us introduce variables \(\delta _I\), \(I \in \{1, \ldots , M\}^T\), corresponding to realizations of the random process X. The value \(\delta _{I} = 1\) if \(\tilde{\varPhi }(u, x^I) \le \varphi \), otherwise \(\delta _{I} = 0\).

The ordered set of \(\delta _I\) is denoted by \(\delta \). Then the probability maximization problem (6) can be reduced to the problem:

subject to

where L is a sufficiently large constant.

The quantile minimization problem (7) is reduced to the problem:

subject to

Let z be the vector consisting of \(z_t^{I_t}\). Then, it follows from (11) that probability maximization problem (6) is equivalent to the problem:

subject to

By using the variables z, we obtain from (12) that problem (5) is equivalent to the problem

subject to (8)–(10), (15), and (13).

Let us introduce an auxiliary vector y consisting of auxiliary variables \(y_{t}^{I_t}\in \mathbb R^n\) to transform the considered problems into linear ones. Under the conditions given bellow in Theorem 1, \(y_{t}^{I_t}\) is equal to the vector whose i-th coordinate is equal to the additional ordering quantity of the i-th product. Let us consider the problem

subject to

Here and below, we write \(a \le b\), were a and b are vectors, if and else if \(a_i \le b_i\) for all i. Problem (17) contains \((n+2nM)(1+M+\ldots + M^{T-1})\) real variables, \(M^T\) integer variables, and \(M^T + (2nM+1)(1+M+\ldots +M^{T-1})\) constraints.

Theorem 1

Suppose that \( b \le h\), each random vector \(X_t\) has a finite number of realizations. Then problems (4) and (17) are equivalent.

Proof

It has been proved above that problem (14) is equivalent to problem (6). Let us notice that a solution to (14) is feasible in problem (17) for \(y_{t}^{I_t} = \left[ x_t^{i_t} - u_t^{I_{t-1}} - z_{t-1}^{I_{t-1}}\right] _+\) because \(z_t^{I_t} = \left[ u_t^{I_{t-1}} - x_t^{i_t} + z_{t-1}^{I_{t-1}}\right] _+\). Since the objective functions (14) and (17) are the same, the optimal objective value in (17) is more than or equal to the optimal one in (14).

Now, we show that there exists a solution to (17) satisfying the equalities

From this, it will follow that the optimal objective value in (17) cannot be more than the optimal one in (14) because in this case the values of \(z_t^{I_t}\) and \(u_t^{I_{t-1}}\) are feasible in (14).

Suppose that (24) is not valid, i.e., \(y_{t}^{I_t} > \left[ x_t^{i_t} - u_t^{I_{t-1}} - z_{t-1}^{I_{t-1}}\right] _+\). Then replacing \(y_{t}^{I_t}\) by

does not reduce the optimal objective value in problem (17). Thus, we can consider that constraint (24) is valid. Now, suppose that (23) is not valid. Let us replace \(z_t^{I_t}\) by \(\tilde{z}_t^{I_t} = z_t^{I_t} - \varDelta \), where

Then, due to (19), we need to change \(y_{t+1}^{I_{t+1}}\) by \(\tilde{y}_{t+1}^{I_{t+1}} = y_{t+1}^{I_{t+1}} + \varDelta \). Since \(b \le h\), from (18) it follows that this changing does not reduce the optimal objective value in problem (17). Therefore, it is proved that there exists a solution to problem (17) satisfying the equalities (23) and (24). Thus, the theorem is proved.

Remark 1

If the condition \(b \le h\) is not satisfied, we cannot guarantee that equality (23) is true. In this case, \(z_t^{I_t}\) is not equal to the inventory level. This implies that the solution to problem (17) is not feasible in problem (11).

In the same way, the quantile minimization problem (5) can be reduced to the mixed integer linear programming problem

subject to (18)–(22) and (13).

Compared to problem (17), problem (25) has one additional variable \(\varphi \) and one additional constraint (13).

Theorem 2

Suppose that \( b \le h\), each random vector \(X_t\) has a finite number of realizations. Then problems (5) and (25) are equivalent.

Proof

We have shown that problem (5) is equivalent to problem (16). Unlike Theorem 1, minimization problems are considered. Since problems (17) and (25) have the same constraints (18)–(19), we conclude that the optimal objective value in (25) is less than or equal to the optimal one in (16). As in the proof of Theorem 2, it can be noticed that there exists a solution to (25) satisfying the equalities (23) and (24). This proves Theorem 2.

4 Sample Approximation

Suppose that the probabilities \(p_I\) are unknown, but there is a sample X(1),..., X(N) of realizations of the random process X. By using the sample, the probabilities \(p_I\) can be estimated:

where N is the sample size, \(m_I\) is number of realization \(x^I\) in the sample, \(I \in \{1, \ldots , M\}^T\). Then the probability function (3) can be estimated by the function

where

The estimator of the quantile function is defined by the rule:

Let us consider the sample approximation of the probability maximization problem

and the sample approximation of the quantile minimization problem

From Theorem 1 and Theorem 2, it follows that problems (26) and (27) can be reduced to mixed integer problems if \(h \ge b\). Thus problem (26) is reduced to the problem

By Theorem 2, problem (27) is equivalent to the problem

Let us notice that the number of variables in the approximation problems (28) and (29) is equal to the number of variables in the mixed integer problems (17) and (25). This number does not depend on the sample size.

5 Convergence of Sample Approximations

Let \(U_\varphi \) be the set of solutions to problem (6), and let \(V_\alpha \) be the set of solutions to problem (7). Let us denote by \(U^N_\varphi \) and \(V^N_\alpha \) the sets of solutions to approximation problems (28) and (29), respectively. The optimal objective values of problems (6), (7), (28), (29) are denoted by \(\alpha ^*\), \(\varphi ^*\), \(\alpha ^*_N\), \(\varphi ^*_N\), respectively. Let

be the deviation of the set A from the set B.

Theorem 3

Suppose that each random vector \(X_t\) has a finite number of realizations. Then

almost surely.

Proof

It easily seen that the function \(\tilde{\varPhi }\) is continuous. From this, it follows [4] that there exists a solution to problem (6). Since \(\lim _{\Vert u\Vert \rightarrow \infty } \varPhi (u, x) = +\infty \), we can suppose that the set of feasible strategies is compact. It was proved in [13] that, under these conditions, the statement of Theorem 3 is valid. The convergence of the set deviations is proved in [17].

Theorem 4

Suppose that each random vector \(X_t\) has a finite number of realizations. Let \(\max _{u\in U} P_{\varphi ^*}(u) > \alpha \); then

almost surely.

Proof

As in the proof of Theorem 3, we can consider the U being compact. Since the function \(\tilde{\varPhi }\) is continuous and \(\max _{u\in U} P_{\varphi ^*}(u) > \alpha \), the conditions of the convergence given in [13] are satisfied for the sample approximations.

Let us notice that the condition \(\max _{u\in U} P_{\varphi ^*}(u) > \alpha \) is satisfied if

for all \(I \in \{1, \ldots , M\}^T\). This means that the conditions of Theorem 4 are not satisfied only for a finite number of values \(\alpha \).

6 Numerical Results

Let us consider the model for 5 types of products with the following data:

The number of stages is equal to 2. We assume that random vectors \(X_1\) and \(X_2\) are independent and identically distributed. The probability maximization problem and the quantile minimization problems have been solved for different number of realizations of the random vectors \(X_1\), \(X_2\). Let us notice that the random process X has \(M^2\) realizations, where M is the number of realizations of \(X_1\). We studied the dependence of the solution on the number M. We considered the following values of M: 5, 7, 9, 12, 13, 15. Realizations are given in Table 1. All realizations are assumed to be equiprobable.

For the computations we used M first values \(x^1, \ldots , x^M\). The computations were made on computer with Intel Core i9-10900 (16 GB RAM, 2.80 GHz) by using Gurobi optimization solver.

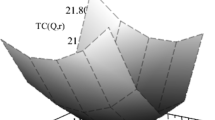

Results of solving the probability maximization problem with \(\varphi = 180\) are given in Table 2. We present the optimal objective values \(\alpha ^*\), optimal solutions of the first stage \(u^*_1\), and computation time \(\tau \) (seconds).

We can see that the probability maximization problem can be successfully solved for 225 realizations of the random process X. Notice that the equivalent mixed integer problem (17) has 2642 constraints and 2706 variables.

Results for the quantile minimization problem with \(\alpha = 0.9\) are given in Table 3, where \(\varphi ^*\) is the optimal objective value, \(u^*_1\) is an optimal solution of the first stage, \(\tau \) is computation time in seconds.

Comparing the results for the two problems, we can see that the quantile minimization requires more computations. It can be noticed that the structure of the optimal solutions are similar for different number of realizations.

Also, the problem of expected losses minimization [3] was solved for these data. The results of solving the problem are presented in Table 4, where \(m^*\) is the minimal expectation of the losses, \(u^*_1\) is an optimal solution of the first stage. The problem of expected losses minimization is reduced to a linear programming problem [3], and it does not require such complex calculations as the quantile and probability ones. From Table 4, it can be seen that the solution differs slightly from the solution of probabilistic and quantile problems. However, the minimal expected losses is less than the minimal quantiles of losses.

7 Conclusion

We suggested a method to solve optimization problems with probabilistic and quantile criteria in the multistage inventory level problem. For the case of discrete distribution, the problem was reduced to a mixed integer problem. However, due to the large dimension of the equivalent problem, the problems are hard for numerical solving. The growth of size is exponential with the number of stages. The numerical results have shown that the problem can be solved for 225 realizations of the process. Therefore, approximation methods for solving these problems can be a topic of additional research. The convergence of the sample approximation method was proved for the discrete distribution of the random parameters, although the continuous distribution is more common for the random demand. Unfortunately, the case of arbitrarily distribution is more complicated because this requires research on the convergence in functional spaces of strategies. This can be studied in future research. Also, the suggested methods can be applied for a wider class of multistage stochastic programming problems.

References

Birge, J.R., Louveaux, F.: Introduction to Stochastic Programming. Springer, NewYork (2011). https://doi.org/10.1007/978-1-4614-0237-4

Kall, P., Wallace, S.W.: Stochastic Programming. Wiley, Chichester (1994)

Shapiro, A., Dentcheva, D., Ruszczyński, A.: Lectures on Stochastic Programming. Modeling and Theory, SIAM, Philadelphia (2014)

Kibzun, A.I., Kan, Y.S.: Stochastic Programming Problems with Probability and Quantile Functions. Wiley, Chichester (1996)

Kibzun, A.I., Khromova, O.M.: On reduction of the multistage problem of stochastic programming with quantile criterion to the problem of mixed integer linear programming. Autom. Remote Control 75(4), 688–699 (2014). https://doi.org/10.1134/S0005117914040092

Kibzun, A.I., Ignatov, A.N.: Reduction of the two-step problem of stochastic optimal control with bilinear model to the problem of mixed integer linear programming. Autom. Remote Control 77(12), 2175–2192 (2016). https://doi.org/10.1134/S0005117916120079

Zipkin, P.H.: Foundations of Inventory Management. McGrow-Hil (2000)

Kibzun, A.I., Naumov, A.V., Norkin, V.I.: On reducing a quantile optimization problem with discrete distribution to a mixed integer programming problem. Autom. Remote Control 74(6), 951–967 (2013). https://doi.org/10.1134/S0005117913060064

Norkin, V.I., Kibzun, A.I., Naumov, A.V.: Reducing two-stage probabilistic optimization problems with discrete distribution of random data to mixed-integer programming problems*. Cybern. Syst. Anal. 50(5), 679–692 (2014). https://doi.org/10.1007/s10559-014-9658-9

Gurobi Optimization. https://www.gurobi.com/. Accessed 27 Feb 2022

Artstein, Z., Wets, R.J.-B.: Consistency of minimizers and the SLLN for stochastic programs. J. Convex Anal. 2, 1–17 (1996)

Pagnoncelli, B.K., Ahmed, S., Shapiro, A.: Sample average approximation method for chance constrained programming: theory and applications. J. Optim. Theory Appl. 142, 399–416 (2009) https://doi.org/10.1007/s10957-009-9523-6

Ivanov, S.V., Kibzun, A.I.: On the convergence of sample approximations for stochastic programming problems with probabilistic criteria. Autom. Remote Control 79(2), 216–228 (2018). https://doi.org/10.1134/S0005117918020029

Shapiro, A.: Inference of statistical bounds for multistage stochastic programming problems. Math. Methods Oper. Res. 58, 57–68 (2003). https://doi.org/10.1007/s001860300280

Pennanen, T.: Epi-convergent discretizations of multistage stochastic programs. Math. Oper. Res. 30(1), 245–256 (2005). https://doi.org/10.1287/moor.1040.0114

Pennanen, T.: Epi-convergent discretizations of multistage stochastic programs via integration quadratures. Math. Program. Ser. B. 116, 461–479 (2009) https://doi.org/10.1007/s10107-007-0113-9

Ivanov, S.V., Ignatov, A.N.: Sample approximations of bilevel stochastic programming problems with probabilistic and quantile criteria. In: Pardalos, P., Khachay, M., Kazakov, A. (eds.) MOTOR 2021. LNCS, vol. 12755, pp. 221–234. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-77876-7_15

Acknowledgements

The study was supported by the Russian Science Foundation (project No. 22-21-00213), https://rscf.ru/project/22-21-00213/.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Ivanov, S.V., Mamchur, A.V. (2022). Multistage Inventory Model with Probabilistic and Quantile Criteria. In: Pardalos, P., Khachay, M., Mazalov, V. (eds) Mathematical Optimization Theory and Operations Research. MOTOR 2022. Lecture Notes in Computer Science, vol 13367. Springer, Cham. https://doi.org/10.1007/978-3-031-09607-5_13

Download citation

DOI: https://doi.org/10.1007/978-3-031-09607-5_13

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-09606-8

Online ISBN: 978-3-031-09607-5

eBook Packages: Computer ScienceComputer Science (R0)