Abstract

Generalized multiprotocol label-switched (GMPLS) optical networks decrease the time taken to exchange the data among various users by generalizing tags of the packets. It has initiated a rebellion in optical systems for source-to-destination communication by providing mechanism for faster transmission of packets through internet protocol (IP) routers. It is being implemented by optical systems as it reduces the indicating and switching overheads than that of packet-switched arrangements. Within this chapter, GMPLS system is anticipated through dynamic clients. The different path computation techniques are being investigated on projected GMPLS networks. The assorted techniques entail round-robin (RR) algorithm, weighted round-robin (WRR) algorithm, and max-min algorithm. The algorithms taken for investigation are extremely accepted, but, as per literature, these techniques have never been implemented on GMPLS optical networks yet which shows the novelty of the work. The network output is judged against each other for abovementioned algorithms with respect to different factors like blocking probability, cost, makespan, etc. The consequences expose that WRR decreases the blocking probability up to <0.05 and energy consumption <30 mJ. This shows that WRR achieves the finest outcomes among the implemented techniques and boosts the quality of service (QoS) with respect to blocking probability, latency, makespan, and energy consumption in GMPLS optical networks.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

8.1 Introduction and Motivation

Speedy progress and the best intensification in the communication expertise have prearranged extent for the advancement of computing knowledge [1]. The major aim of the load equilibrium is to balance the load expeditiously between the nodes in such a way that no nodes are full and below loaded, i.e., the load must not be distributed to the nodes in a nonuniform manner, that one node is getting the load up to its full capacity and the other node is receiving zero load [2]. In the traditional methods, the internet protocol packets to be transported from one node to the other contained a lot of information in their header part like its complete address to which the packet is to be routed [1]. The networks process this complete information at each router occurring in the packet’s path which takes a lot of time, i.e., the speed becomes slow. The method of routing information transfer, to equalize the interchange load, on different paths and routers in the system is known as traffic engineering [3]. Multiprotocol label switching is generally utilized in optical systems to offer QoS assurance to data transfer [4]. MPLS uses ipv4 or ipv6 addresses to identify intermediate switches, routers, and end points. The packet transfers are directed through already defined label-switched paths (LSP) in MPLS. The traffic is distinct, i.e., an identifier or a label is added to the packets to differentiate the LSPs. This mark is used by label-switched router (LSR) to find out the LSP, and after that, it recognizes the requisite LSP in its individual advancing table to identify the finest route to move the packet further and gives a mark to subsequent bound [5, 6]. GMPLS offers improvements to MPLS to maintain system switching for wavelength, time and fiber switching in conjunction with packet switching. The strategy accomplishing GMPLS diminishes costs involving bandwidth, switching, and signaling [7, 8]. There is no need of any switch in GMPLS for reading tag in the frame of every packet. The switch material intrinsically involves label and switching procedures depending on wavelength and timeslot represented in GMPLS packet format in Fig. 8.1.

GMPLS boosts the potential of the entire process by distributing enormous quantity of parallel associations linking users in a method which is the primary requirement in optical communication that may involve hundreds of alike routes between a pair of users.

With the progressive growth, the world is approaching toward 5G revolution that is not only exemplified by the emergent capacity and development of the system performance but is also based on large connectivity and associated with diverse system cooperation capacity and optical networks with a suitable convergence with next-generation networks. For these situations, the entire network management will entail various automatic procedures to distribute appropriate resources according to the requirement of user maintaining high levels of quality of service (QoS), resilience, and quick response times [3]. GMPLS has initiated a rebellion in optical systems for uninterrupted communication. The continuously increasing demand of optical communication has significantly increased the problem of interference and bandwidth scarcity. Many adaptive techniques like design-based routing (DBR) and resource allocation in flexgrid networks have been implemented to increase the overall throughput. But these techniques have not reached their full potential, so these can be extended further to enhance their performance in terms of speed, capacity, path computation flexibility, security, etc. which in turn enhances the QoS. The GMPLS protocol, sprouting from MPLS, concentrates on signaling and routing procedures proposed to computerize the methods involved for the system to handle itself. GMPLS includes time-division multiplexing (TDM) and wavelength and spatial switching in addition to packet switching. GMPLS tries to deal with complications, shorten traffic engineering, and condense network blockages. GMPLS grants two fundamental purposes: (1) It mechanizes the establishment of continuous LSP for an array of switching fields (packet, TDM, lambda, fiber). (2) LSPs are ascertained at operative-described service stages. Thus, traffic engineering and path computing capabilities of GMPLS can prove to be a turning technology in 5G networks with its recognizable capabilities [2].

So far, numerous popular path computation techniques have been put into practice on cloud computing networks, but they have not been applied on GMPLS optical networks yet. The path computation algorithms like throttled load balancing algorithm, WRR algorithm, RR algorithm, randomized algorithm, and max-min algorithm have been executed on cloud computing as per the literature [9,10,11,12,13]. The work has been extended by putting WRR, RR, and max-min algorithms in practice on GMPLS optical networks and acquired excellent consequences with respect to blocking probability, cost, makespan, and throughput. In our earlier papers, the work has been done on developing the models for blocking probability [14]. Furthermore, weighted round-robin algorithm has been implemented on our proposed GMPLS optical network in our conference paper [15]. This chapter extends the work done in our conference paper and also incorporates the execution of other algorithms like RR and max-min algorithm on GMPLS optical system, and then all the implemented algorithms are compared with one another.

8.2 GMPLS Optical Network

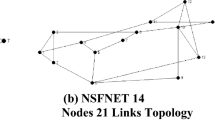

Inside the subsequent fragment, a graph enfolding the complete GMPLS optical network encompassing assorted consumers sited at their locations is offered. Furthermore, the arrangement of servers is shown in the network.

The GMPLS network together with the customers and the link providers is plotted in Fig. 8.2. In addition, needs coming up from one consumer to the adjacent consumers in the network is depicted. The red stars in the arrangement correspond to the site of request consumers make. The blue squares on the right part correspond to the site of servers that distribute wavelength and provide association rates to demanding consumers preserving the routing table and association reliability.

Figure 8.3 exemplifies the server allotment, depending on job carrying-out time taken by that server to the requesting consumers in optical arrangement. When the request from consumer occurs for data transmission, the system is investigated in terms of minimum execution time. Then the server performing the task in minimum is selected and allotted. The elected server dispenses the bandwidth and association rates to the consumers, and information starts transferring. The red lines express the alliance between the consumers and the selected server. This unambiguous outcome shows that second server takes minimum time and, therefore, is chosen for the link establishment.

8.3 Performance Metrics

This investigation of presentation metrics for bandwidth allocation is rooted in blocking probability, cost, latency, makespan, and energy consumption. The performance metrics is conferred as the following:

8.3.1 Blocking Probability

Probability is identified as degree to which an occurrence is expected to happen, calculated by the proportion of constructive cases to the entire amount of cases achievable [16]. Blocking probability for cleared calls is given in Eq. 3.1 [17]:

where μ is mean call rate, M indicates the number of channels, m indicates the number of calls generated in time T, and λ is the wavelength.

8.3.2 Cost

The network cost is defined as proper utilization of the available resources. The main parameters affecting the cost in the network are the network capacity and the transmission speed [18]. If the network capacity increases or the transmission speed increases in the network, then the network cost will decrease. The cost is represented in Eq. 3.2:

where TEx is total energy consumption by the network, Ex(t) is execution time, and N is total number of nodes.

8.3.3 Makespan

Makespan is employed to approximate the greatest task completion instance of the network by assessing terminating time of the most recent task, i.e., once all the jobs get planned. The minimization of makespan for particular network is required to finish the demand on time [19]. It is represented by Eq. 3.3:

where Rx is the number of requests per user.

8.3.4 Energy Consumption

The energy consumption in a system relies on the average rate of power consumption of users during the instance of action [20]. The power used refers to the power depleted in attaining and processing signals besides transmit and receive power. The power consumption is represented in Eq. 3.4:

where is ET(x) is the energy consumed by requesting user.

8.4 Various Path Computation Algorithms Implemented on Proposed GMPLS Optical Network

The basic difficulty in GMPLS optical systems is the process of routing and wavelength distribution. It is being confronted to attain a resourceful exploitation of optical cross connects in optical subsequent invention Internet deploying a GMPLS-based control plane [21]. In GMPLS optical networks, flexibility for computing the network route can be further improved to handle dynamic traffic demands. Different path computation algorithms like restriction-dependent routing techniques have been discussed for GMPLS optical arrangements which included route assignment and bandwidth distribution for data flow management as well as malfunction revival methods [22]. This chapter involves the implementation of various path computation algorithms on GMPLS optical networks like weighted round-robin algorithm, round-robin algorithm, and max-min algorithm. The other path computation algorithms include throttled load balancing algorithm and randomized algorithm. In the throttled load balancing algorithm, the user gives the requirement of the consignment equalizer to verify the exact virtual engine that admits particular packets effortlessly and executes the procedures demanded by the user. The consignment equalizer retains an index table of virtual machines and their accessibility status. Then the suitable virtual machine is assigned to the user for performing the required operations [9]. In randomized algorithm, the path is allocated randomly as soon as the user request occurs. This approach allocates the path quickly but has a limitation of getting slow end-to-end transmission due to the fact that randomly selected path may not be the shortest one. The literature review shows that RR, WRR, and max-min perform better than throttled load balancing algorithm and randomized algorithms [10]. Thus, the performance of RR and max-min is compared with WRR on the proposed GMPLS optical networks.

8.4.1 Round-Robin Algorithm

The round-robin algorithm commenced from the principle in which every person obtains the same part of something in rotations. It is the most traditional, easiest scheduling technique generally employed for multitasking. In this algorithm, every organized job performs one after another merely in a recurring row for a restricted time segment. It is the least complicated process for allocating user demands across a set of servers. The load balancer of round-robin algorithm advances the user demand to every server in a cyclic manner while moving down the record of servers in the set. At the conclusion of set, the load equalizer loops back and moves down the set again. It throws the subsequent demand to initial planned server, the other demand after that to the succeeding server, and so on. This technique also proposes hunger liberated implementation of methods. The CPU is reallocated to subsequent procedure once predetermined period of time is over. This period of time is known as time quantum/time slice. The anticipated procedure is added to the conclusion of the row [11]. It is a real instant technique that reacts to the occurrence within a particular time bound. It contracts with each and every process exclusive of some precedence. The most terrible case reaction time can be imagined if the entire amount of methods on run row is well-known. The round-robin method does not rely on burst time so this is simply executable on the system. Just that once a procedure is performed for a definite set of time, the method is anticipated, and a new procedure performs for that specified time episode. The processor output gets condensed with small slicing time of operating system. The performance of this algorithm profoundly relies on time quantum [10]. No priorities can be set for the methods. The most difficult task in round-robin scheduling is finding a correct time quantum.

8.4.2 Max-Min Algorithm

The max-min technique is generally utilized in the atmosphere where jobs are unscheduled in the beginning. It calculates anticipated implementation matrix and anticipated finishing time of every job on the accessible resources [12]. Then, the assignment having greatest anticipated duration on the whole is chosen and allocated to the resource having least overall implementation time. Lastly, just listed assignment is detached from the complete set of metatasks, the entire calculated times are revised, and the process is repeated awaiting set of tasks to be vacant. It performs better for short-length tasks. This technique selects big assignments first to perform ahead of completing smaller jobs. Now, assignment with greatest value is assigned to the server that takes minimum time to execute that task. As the quantity of time-consuming jobs to be performed becomes greater than less time-consuming jobs, the algorithm takes highest time to carry out assignments entirely present in set of metatasks. Therefore, the finishing time becomes utmost in this situation which makes this algorithm inefficient.

8.4.3 Weighted Round-Robin Algorithm

Weighted round-robin erects on the round-robin load balancing system. In case of WRR, every objective procedure or server is allocated a weight value rooted in criterion selected by the proprietor. The generally employed condition is the traffic handling capability of the server. The weight value for a target operation specifies a priority for each target operation in a group of target operations. The larger weight value indicates that the server entertains bigger fraction of user requests. For illustration, server X is allocated three weights and server Y one weight, and then the load equalizer sends three requests to server X for every one it drives to server Y [13]. When the weight is assigned to a target operation, it indicates the capacity of that target operation in comparison to other target operations within the server group. The transfer of requests is made in a cyclic way only in such a manner that higher weighted servers are allocated more number of sessions in every cycle.

8.5 Comparison of Different Algorithms

Inside this segment, all the three algorithms conversed in the above sections are evaluated with respect to blocking probability, makespan, cost, energy consumption, and throughput.

Figure 8.4 puts side by side the evaluation of GMPLS optical networks with respect to traffic load after executing all three various techniques taken into account. The deviation of blocking probability with escalating traffic load is shown. The graph exposed that the weighted round-robin algorithm is capable of achieving lowest blocking probability with escalating traffic load. This occurs because by employing WRR procedure, additional traffic stack can be involved exclusive of blocking as the path selected by this algorithm is based on the weights and analyzed to be the shortest. Thus, the flexibility in selecting the path increases. This leads to reduction in the data transfer obstacles which in turn lessens the blocking probability. The max-min approach gives the maximum blocking probability as it is least concerned with the computation of shortest path to complete the tasks.

The performance of GMPLS optical networks in terms of makespan and simulation time after implementing three different algorithms is compared in Fig. 8.5. The deviation of makespan with escalating simulation time is shown. The plot illustrates that the data transmission time from the starting user to the receiving user is very small even when the simulation time of the entire procedure is large by using WRR as compared to other algorithms. Thus, WRR is able to achieve lower interruptions in the path and fast speed. As a result, the stability of the network increases by using WRR than RR and max-min.

The variation of cost and simulation time after implementing the three different algorithms is compared for GMPLS optical networks in Fig. 8.6. The network cost is defined as proper utilization of the available resources. The cost should be as minimum as possible. The graph shows that the cost is minimum by using WRR than in cases when RR and max-min are used. This is because by using WRR, the network speed increases which in turn enhances the capacity of the GMPLS optical network. The increased network capacity with limited resources leads to decrease in the network cost. The graph also reveals that max-min gives maximum cost as it assigns the path that is accessible when the demand from user occurs without considering the time taken for completing the assignment by that particular server. This decreases network speed and hence the cost increases in case of max-min.

The variation of energy consumption and simulation time after implementing the three different algorithms is compared for GMPLS optical networks in Fig. 8.7. The energy consumption in a network depends on standard pace of power utilization by users during the instance of procedure. The utilized power refers to the power depleted during attainment and dispensations of signals along with transmit and receive power. It should be as small as possible. The graph shows that max-min consumes maximum energy, while WRR consumes the minimum energy.

The performance of GMPLS optical networks in terms of throughput and simulation time after implementing the three different algorithms is compared in Fig. 8.8. The variation of throughput with increasing simulation time is plotted. Throughput is the rate of successful message delivery over a communication channel. It refers to how much data can be transferred successfully from one location to another in a given amount of time. The plot reveals that WRR provides maximum throughput as compared to RR and max-min. This occurs because WRR gives minimum blocking probability in the system which in turn increases successful traffic handling capacity of the network, whereas max-min gives maximum blocking probability in the system resulting to decreased throughput.

8.6 Conclusion

Within this chapter, GMPLS optical network with users having vibrant arrangements has been anticipated. Subsequently, different path computation algorithms are applied on the anticipated GMPLS optical network. The assorted algorithms employed are round-robin algorithm, weighted round-robin algorithm, and max-min algorithm. These algorithms are taken because of their recognition and effectiveness in cloud computing. Every technique is premeditated and evaluated on the anticipated GMPLS optical network with respect to diverse parameters like blocking probability, cost, makespan, energy consumption, and throughput. The consequences disclose that WRR achieves the best outcomes among all the executed techniques and improves the quality of service in GMPLS optical networks, whereas max-min gives the worst performance among the three algorithms. The significance of our work is that the algorithms employed in our work have not been executed on GMPLS optical networks previously as per the literature. The extension of our work can be done by investigating other algorithms on GMPLS optical network and enhancing the QoS of the entire procedure.

References

S.R. Gundu, C.A. Panem, A. Thimmapuram, Real-time cloud-based load balance algorithms and an analysis. SN Comput. Sci. 1(187), 1–9 (2020)

N. Jerram, A. Ferral, MPLS in Optical Networks, White paper (Metaswitch Networks, 2001)

S. Alouneh, S. Abed, M. Kharbutli, B.J. Mohd, MPLS technology in wireless networks. Wireless Networks 20(5), 1037–1051 (2014) Springer

T.M. Almandhari, F.A. Shiginah, A performance study framework for Multi-Protocol Label Switching (MPLS) networks, in IEEE Conference GCC Conference and Exhibition, (2015), pp. 1–6

I. Hussain, Overview of MPLS technology and traffic engineering applications, in Networking and Communication Conference, (IEEE, 2004), pp. 16–17

H. Tamura, S. Nakazawa, K. Kawahara, Y. Oie, Performance analysis for QoS provisioning in MPLS networks. Telecommunication Systems 25(3), 209–230 (2004) Springer

T. Anjali, C. Scoglio, Threshold based policy for LSP and lightpath setup in GMPLS networks, in IEEE Conference on Communications, vol. 4, (2004), pp. 1927–1931

P. Ho, J. Tapolcai, A. Haque, Spare capacity reprovisioning for shared backup path protection in dynamic generalized multi protocol label switched networks. IEEE Trans. Reliab. 57(4), 551–563 (2008)

R. Panwar, B. Mallick, A comparative study of load balancing algorithms in cloud computing. Int. J. Comput. Appl. 117(24), 33–37 (2015)

M.M. Shahid, N. Islam, M.M. Alam, M.M. Suud, S. Musa, A comprehensive study of load balancing approaches in the cloud computing environment and a novel fault tolerance approach. IEEE Access 8, 130500–130526 (2020)

G.S.N. Rao, N. Srinivasu, S.V.N. Srinivasu, G.R.K. Rao, Dynamic time slice calculation for round robin process scheduling using NOC. Int. J. Electr. Comput. Eng. 5(6), 1480–1485 (2015)

N. Sharma, S. Tyagi, A comparative analysis of min-min and max-min algorithms based on the makespan parameter. Int. J. Adv. Res. Comput. Sci. 8(3) (2017)

F. De Rango, V. Inzillo, A. Ariza Quintana, Exploiting Frame Aggregation and Weighted Round Robin with Beam Forming Smart Antennas for Directional MAC in MANET Environments, Ad Hoc Networks, vol 89 (Elseveir, 2019), pp. 186–203

S.S. Monika, A. Wason, Blocking probability reduction in optical networks to enhance the quality of service, in IEEE 9th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), (University of British Columbia, Canada, November 1–3, 2018)

S.S. Monika, A. Wason, Reduction of blocking probability in GMPLS optical networks using weighted round robin algorithm, in National Conference on Biomedical Engineering (NCBE-2020), (NITTTR, 2020)

B. Berde, H. Adbelkrim, M. Vigoreux, R. Douville, D. Papadimitriou, Improving network performance through policy-based management applied to generalized multi-protocol label switching, in 10th IEEE Symposium on Computers and Communications (ISCC’05), (2005), pp. 739–745

S.S. Monika, A. Wason, Reduction of blocking probability in generalized multi-protocol label switched optical networks. J. Opt. Commun. (2019)

S.H.H. Madni, M.S. Abd Latiff, M. Abdullahi, S.M. Abdulhamid, M.J. Usman, Performance comparison of heuristic algorithms for task scheduling in IaaS cloud computing environment. PLoS One 12(5), 1–26 (2017)

N.R. Tadapaneni, A survey of various load balancing algorithms in cloud computing. Int. J. Sci. Adv. Res. Technol. 6, 1–3 (2020)

P. Ho, H.T. Mouftah, Path selection with tunnel allocation in the optical Internet based on generalized MPLS architecture, in IEEE International Conference on Communications, vol. 5, (2002), pp. 2697–2701

X. Yang, T. Lehman, C. Tracy, J. Sobieski, S. Gong, P. Torab, B. Jabbari, Policy-based resource management and service provisioning in GMPLS networks, in 25TH IEEE International Conference on Computer Communications, Barcelona, (2006), pp. 1–12

T. Eido, A. Mribah, T. Atmaca, Novel constrained-path computation algorithm for failure recovery and CAC in GMPLS optical networks, in 2008. ISCIS ’08. 23rd International Symposium on Computer and Information Sciences, Istanbul, (2008), pp. 1–6

Acknowledgments

This work was supported by Visvesvaraya PhD Scheme for Electronics and IT, Ministry of Electronics and Information Technology, Government of India. The unique awardee number is VISPHD-MEITY-1669.

Author information

Authors and Affiliations

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Monika, Singh, S., Wason, A. (2022). Performance Evaluation of Path Computation Algorithms in Generalized Multiprotocol Label-Switched Optical Networks. In: Singh, S., Kaur, G., Islam, M.T., Kaler, R. (eds) Broadband Connectivity in 5G and Beyond. Springer, Cham. https://doi.org/10.1007/978-3-031-06866-9_8

Download citation

DOI: https://doi.org/10.1007/978-3-031-06866-9_8

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-06865-2

Online ISBN: 978-3-031-06866-9

eBook Packages: Computer ScienceComputer Science (R0)