Abstract

SAE International plays a major role in shaping research and development in the field of automated driving through its SAE J3016 automation taxonomy. Although the taxonomy has contributed significantly to the classification and development of automated driving, it has certain limitations. SAE J3016 implies an “all or nothing” approach for the human operation of the driving task. In this paper, we describe the potential of moving considerations regarding automated driving beyond SAE J3016. To this end, we have taken a structured look at the system consisting of the human driver and the automated vehicle. This paper presents an abstraction hierarchy based on a literature review. The focus lies particularly on the functional purpose of the system under consideration. In particular, optional parts of the functional purpose, such as driver satisfaction, are introduced as a main part of the target function. We extend the classification into optional and mandatory aspects to the lower levels of abstraction within the developed hierarchy. Decisions on movement and dynamics in terms of driving parameters and driving maneuvers, in particular, offer a so far underestimated design space for (optional) driver interventions. This paper reveals that SAE J3016 does not consider this kind of intervention. The identified design space does not replace SAE J3016; it does, however, broaden the perspective provided by this important taxonomy.

Keywords

- Automated driving

- Driving experience

- Interfaces for cooperative driving

- User interaction

- Driver satisfaction

- Abstraction hierarchy

- Design spaces

- Taxonomy

1 Introduction

Many positive effects are anticipated with the introduction of automated driving. In particular, research focuses on increasing safety, reducing driver workload, and enabling more flexible use of driving time. SAE International (Society of Automotive Engineers) plays a major role in shaping research and development in the field of automated driving through its SAE J3016 automation taxonomy [1]. This taxonomy defines six levels of driving automation ranging from no automation to full driving automation. For this classification, the taxonomy divides the dynamic driving task into “sustained lateral and longitudinal vehicle motion control” and “object and event detection and response”. In addition, the responsibility to act as fallback for the driving task and the operational design domain of the automation are used as criteria for the classification. Although the taxonomy has made a valuable contribution to the classification and development of automated driving, it has certain limitations.

The numerical levels are often understood as incremental steps of development [2], even though in recent revisions the taxonomy clarifies that the levels are nominally and not ordinally scaled [1]. Among other effects, this misunderstanding steers development towards a complete replacement of the human being in control of the driving task [2]. Humans are often seen as the single source of potential failures, ignoring the fact that in many situations humans provide an important layer of safety [3]. Reducing the solution space to a few discrete levels can lead to missed opportunities. For example, a missing or wrong definition of a level of the SAE taxonomy has already been described [4]. Levels 1 to 4 can sometimes lead to user confusion [5]. This is aggravated by the various names given to equivalent functions by different manufacturers, which can result in false user expectations [6]. These expectations are linked to the user’s mental model, in which fewer than six levels of driving automation were identified [7, 8].

The limited consideration of user interventions within the six levels implies an “all or nothing” approach for human control. This supports the misconception of a full replacement of the human being in automated driving as the final goal. Existing literature describes interaction concepts for automated vehicles that cannot be adequately categorized by this taxonomy, e.g., maneuver control or haptic shared control [9]. Hence, there are some levels of cooperation between humans and driving automation for which the six levels of SAE J3016 are not suitable. However, more recent revisions of SAE J3016 provide a first starting point for describing user interventions across different levels using a definition of the term “features” [1].

Human control in driving automation is not necessarily a negative aspect. In addition to providing an additional safety layer, manual driving control enables users to perceive an optimized driving experience (DX) through self-determined driving behavior. Today, automated vehicles offer only limited inputs during automated driving modes. Therefore, an individual user-centered adaptation of the journey cannot be achieved in these modes. To find new design spaces for cooperative interaction concepts, it is necessary to understand drivers, including the purposes and goals of their actions. Such concepts have the potential to transfer the advantages of manual driving to automated driving without removing the benefits of automated driving functions. This can lead to an improved user experience of automated driving.

2 Methods

We aim to identify design spaces for novel driving control concepts that go beyond the implied “all or nothing” approach of the SAE J3016. The main focus of this paper lies on DX during an automated drive. To this end, we have taken a structured look at the system consisting of a driver and an automated vehicle. Following Rasmussen’s method of abstraction hierarchy (AH) from the field of work domain analysis [10], the focus lies particularly on the functional purpose of the system under consideration. This method is part of the cognitive work analysis framework, which is suitable for the design of innovative user interfaces [11].

AH as a method has been applied widely in the context of driving [11,12,13,14,15,16]. However, the scope of investigation was mostly manual driving. Unlike previous studies, we consider humans as part of the overall system. This allows non-driving-related tasks (NDRT) to be described as a functionality of the system. An AH divides the functional properties of a system into several layers of abstraction. Based on the functional purpose as the top level of the AH, abstract and concrete functions are arranged in multiple levels [17]. Typically, the AH is composed of five levels connected by means-end relations. Rasmussen’s original definition of these five levels was later generalized for a broader range of applications [18]. The top level presents the functional purpose, followed by values and priority measures on the second level. The second level shows metrics that help to evaluate the fulfillment of the functional purpose. The third level consists of purpose-related functions and the fourth one shows object-related functions. These two levels construct a connection between the upper levels and the basic resources and objects on the lowest level of abstraction. By considering all connections of one node to the level above, it is possible to identify the reason for its existence [11]. The connections to the lower level show what is needed to fulfill the node’s purpose [11]. All connections can be used to validate the AH. This involves checking the described structure and coherence for each node’s connections [11, 19].
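The five-level structure and the validation of nodes via their connections can be illustrated with a small data structure. The following is a minimal sketch, not the tooling used for this paper: the class `AbstractionHierarchy`, its methods, and the example nodes are hypothetical, and the sketch assumes that means-end relations only connect adjacent levels.

```python
from dataclasses import dataclass, field

# The five levels of the generalized abstraction hierarchy, top to bottom.
LEVELS = [
    "functional purpose",
    "values and priority measures",
    "purpose-related functions",
    "object-related functions",
    "basic resources and objects",
]


@dataclass
class AbstractionHierarchy:
    """Hypothetical container for nodes and means-end relations."""
    level_of: dict = field(default_factory=dict)  # node name -> level index
    links: set = field(default_factory=set)       # (upper node, lower node)

    def add_node(self, name, level):
        self.level_of[name] = LEVELS.index(level)

    def add_link(self, upper, lower):
        # Assumption of this sketch: links only connect adjacent levels.
        if self.level_of[lower] - self.level_of[upper] != 1:
            raise ValueError(f"{upper!r} and {lower!r} are not on adjacent levels")
        self.links.add((upper, lower))

    def why(self, node):
        """Connected nodes one level up: the reason the node exists."""
        return sorted(u for u, l in self.links if l == node)

    def how(self, node):
        """Connected nodes one level down: what the node needs."""
        return sorted(l for u, l in self.links if u == node)

    def unconnected(self):
        """Nodes that fail validation because a 'why' or 'how' link is missing."""
        bad = []
        for node, level in self.level_of.items():
            missing_why = level > 0 and not self.why(node)
            missing_how = level < len(LEVELS) - 1 and not self.how(node)
            if missing_why or missing_how:
                bad.append(node)
        return sorted(bad)


# Illustrative nodes only; the real AH contains many more elements.
ah = AbstractionHierarchy()
ah.add_node("transport", "functional purpose")
ah.add_node("travel time", "values and priority measures")
ah.add_node("control of movement", "purpose-related functions")
ah.add_node("control of NDRTs", "purpose-related functions")  # left unlinked on purpose
ah.add_node("driving maneuvers", "object-related functions")
ah.add_node("vehicle", "basic resources and objects")
for upper, lower in [
    ("transport", "travel time"),
    ("travel time", "control of movement"),
    ("control of movement", "driving maneuvers"),
    ("driving maneuvers", "vehicle"),
]:
    ah.add_link(upper, lower)

print(ah.why("driving maneuvers"))  # reason for existence
print(ah.unconnected())             # nodes that still need connections
```

In this toy example, the unlinked node is reported by `unconnected()`, which mirrors the idea of validating each node by its connections to the levels above and below.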

The AH developed uses existing literature as its foundation, which is briefly presented in the respective sub-chapters. The definition of layers and the structure of connections provided by the general method have been used to structure the process of identifying relevant literature. In addition, existing applications of this method within the context of driving [11,12,13,14,15,16] were used as a starting point. The developed AH has been verified using the described method of validating each node based on its connections to other nodes.

AH as a method has limitations concerning automated control systems [20]. Therefore, we considered monitoring failures as another function of the system next to the inclusion of the human operator. For this purpose, we also looked at generic cases of failures based on a simplistic model of information processing and reaction.

By using the aforementioned method, design spaces for new interaction concepts have been identified. This paper provides an overview of the most relevant aspects of the described analysis and provides insights into the literature used. We also describe proposed simplifications and the proposed design spaces.

3 Abstraction Hierarchy

In the following sub-chapters, the AH of the system consisting of the human operator and the automated car is described. For the sake of clarity, a generalized and simplified version of the developed AH is shown in Fig. 1. To this end, some of the functions and objects identified were combined into logical groups. For each group, only a selection of the influencing factors is shown. The relevance of the connections between the individual elements can vary. The numerous connections show the multilayered and complex interrelations existing between the elements of the AH.

3.1 Purpose and Goal Structure

In contrast to a distinct purpose, a goal defines a direction for the considered actions. However, goals and purpose are not always clearly separable. In current literature, identical aspects of driving are partly described as goals and partly as purposes of driving. We define an overall purpose-goal-structure of the system in this section, which is described as:

Safe, time efficient, ecological and satisfactory transport of passengers and/or load from one geographical location to another.

Safe Driving.

Increasing safety is often cited as a reason for introducing automated vehicles [21]. Although users do expect increased safety from automated driving, they are simultaneously expressing concerns [22, 23]. This is particularly associated with the concept of trust in automated driving [22, 24].

Safety is determined, on the one hand, by the absence of harm or damage and, on the other hand, by metrics of criticality. For example, time-to-collision (TTC) is widely used in the automotive context as a metric of criticality. Criticality is linked to the risk of harm or damage involved. Drivers specify a tolerance towards risk through their chosen driving style at the tactical level of the driving task and determine a maximum level of risk at the strategic level of the driving task [25]. This shows that the system partly allows for free choices of actions regarding this goal.
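As an illustration of a criticality metric, TTC in a simple car-following situation can be computed as the gap to the lead vehicle divided by the closing speed. The following is a minimal sketch under the assumption of constant speeds; the function names and the threshold value are hypothetical and not taken from the cited literature.

```python
import math


def time_to_collision(gap_m, ego_speed_mps, lead_speed_mps):
    """TTC in seconds, assuming both vehicles keep their current speed.

    Returns math.inf if the ego vehicle is not closing in on the lead vehicle.
    """
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0:
        return math.inf
    return gap_m / closing_speed


def is_critical(ttc_s, threshold_s=2.0):
    """Flag a situation as critical; the threshold is a hypothetical value."""
    return ttc_s < threshold_s


ttc = time_to_collision(gap_m=30.0, ego_speed_mps=25.0, lead_speed_mps=20.0)
print(ttc, is_critical(ttc))
```

A driver with a higher tolerance towards risk would correspond to a lower threshold in this sketch, which reflects the free choice of actions described above.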

Time Efficient and Ecological Driving.

Users describe time advantages as a reason for choosing a car as a means of transport over public transportation [26]. In automated driving, two aspects of time efficiency must be distinguished: On the one hand, efficiency of travel can be described by the time needed to reach the destination. On the other hand, the possibility of using the time for NDRTs is a new aspect of time efficiency. Depending on the context of the trip and the NDRTs, there can be a large degree of flexibility of actions regarding this goal. A third issue often associated with efficiency is environmental sustainability or ecological driving. Due to climate change and environmental consciousness, a great deal of research is currently being conducted on this topic [27]. Consequently, restrictions are imposed by most countries, which can reduce the free space of possible actions in movement regarding this goal.

Satisfactory Driving.

Evaluation criteria of satisfaction are, among others, UX and DX. The focus of this work is primarily placed on DX regarding the movement rather than on DX regarding the chosen vehicle. However, interactions may occur, e.g., the comfort of a seat depends on the movement of the vehicle [28].

Existing literature describes DX regarding the movement in different ways. In various models, the dimensions of comfort and (subjective) safety are described as relevant factors [29]. Safety has already been addressed partially, whereby the focus is on objective safety rather than on subjective safety. Subjective safety is defined by a feeling of control [30]. In automated driving, the feeling of safety is important across different user groups [31]. Perceived feeling of control is expected to be lower [32] and perceived safety is expected to be higher [33] in automated driving compared to manual driving. This correlates with trust in its functionality [34]. In addition to safety, perceived efficiency can also influence DX.

In the literature, different expected changes of perceived comfort are described, depending on the automation’s characteristics, the scenarios considered, and the individual user [22, 23]. Hartwich et al. [22] describe a dependency of perceived comfort on users’ trust. According to Engeln and Vratil [35], the dimension of comfort can be divided into action comfort, action enjoyment, usage comfort, and usage enjoyment. Enjoyment is characterized by the occurrence of situations driven by intrinsic motivation, while comfort is determined by situations driven by extrinsic motivation [35]. In the case of enjoyment, the action itself creates pleasure, while in the case of comfort, the result of the action creates pleasure [35]. The term driving fun is also often used to describe enjoyment. Automated vehicles are expected to have a positive influence on comfort and a negative influence on driving fun [22, 23, 32]. For some user groups, driving fun can be identified as a crucial factor affecting the selection of automobiles as a preferred means of transportation compared to other options [36]. In addition, boredom arising during automated driving and fun in manual driving are among the reasons for switching off automated driving functions [37]. Users also rate perceived driving pleasure differently when riding along with a human driver compared to automated driving [22].

The described aspects of driver satisfaction are often related to control or perceived control, which are basic psychological needs of the user. Satisfaction allows a higher flexibility in choosing actions compared to other goals. However, satisfaction is more difficult to measure than other goals. One possible approach is an assessment of emotions based on camera footage.

Goal Prioritization and Target Function.

The different aspects of the presented purpose-goal-structure can be prioritized. We propose that the objective elements of the goals of transportation and safety are to be considered as mandatory criteria for the overall goal fulfillment. A larger optional freedom of action with acceptance tolerance can be defined regarding the subjective goals. This means, for example, that efficiency does not necessarily have to be optimized to the maximum. There are possible situations in which users purposefully want to drive inefficiently to increase satisfaction (e.g., a winding mountain road with a beautiful view instead of the faster motorway). The proposed concept of prioritization and categorization into optional and mandatory goals can be transferred to the lower levels of abstraction through the means-end relations of the AH to identify concrete spaces for interactions. The emerging concept of optional and mandatory behavior overlaps with general social behavior theories. For example, following Rosenstiel [38], the behavioral determinants of willing, allowing, intending, and situational enabling could be used in a modified form to further specify freedom of action in (automated) driving regarding the control of movement and the control of non-driving-related tasks.

The prioritization of goals can change over time. This applies, for example, to ecological goals, as laws concerning environmental compatibility are constantly being tightened in some countries, which can reduce flexibility in choosing actions. For simplification, we propose to combine all sub-goals into an overall target function with adaptable weights for the criteria of the second level of the AH.
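One way to picture such a target function is a weighted sum over normalized second-level criteria, with mandatory criteria acting as hard constraints. This is only a sketch under stated assumptions: the linear form, the criteria names, and all numbers are hypothetical illustrations, not values derived in this paper.

```python
def target_function(metrics, weights, mandatory_ok):
    """Score a candidate option; higher is better.

    metrics, weights: dicts keyed by sub-goal, metrics normalized to [0, 1].
    mandatory_ok: False if a mandatory criterion (e.g., safety) is violated.
    """
    if not mandatory_ok:
        return float("-inf")  # mandatory criteria cannot be traded off
    total_weight = sum(weights.values())
    return sum(weights[goal] * metrics[goal] for goal in weights) / total_weight


def preferred_option(options, weights):
    """Return the name of the option with the highest target function value."""
    return max(
        options,
        key=lambda name: target_function(
            options[name]["metrics"], weights, options[name]["mandatory_ok"]
        ),
    )


# Hypothetical ratings for the motorway vs. mountain road example.
ROUTES = {
    "motorway": {
        "metrics": {"time_efficiency": 0.9, "ecology": 0.7, "satisfaction": 0.3},
        "mandatory_ok": True,
    },
    "mountain road": {
        "metrics": {"time_efficiency": 0.4, "ecology": 0.5, "satisfaction": 0.9},
        "mandatory_ok": True,
    },
}
COMMUTE_WEIGHTS = {"time_efficiency": 0.6, "ecology": 0.2, "satisfaction": 0.2}
LEISURE_WEIGHTS = {"time_efficiency": 0.1, "ecology": 0.2, "satisfaction": 0.7}

print(preferred_option(ROUTES, COMMUTE_WEIGHTS))
print(preferred_option(ROUTES, LEISURE_WEIGHTS))
```

Changing only the weights changes the preferred route in this example, which is the kind of adaptation to user and situation that adaptable weights are meant to allow.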

3.2 Control of Movement

General Driving Task.

Control of movement is represented by the driving task. Driving is a necessary task in order to fulfill the general purpose of transport. The individual style of driving influences the different sub-goals. According to Donges [39], the driving task is divided into navigation, guidance and stabilization. Navigation involves route planning, which can be automated by using a navigation system. There are possibilities for user interventions on this layer to adapt the route to the individual target function of the user via a selection of various alternative routes. To fulfill the navigation task, suitable driving maneuvers must be selected at guidance level. The guidance level also includes the selection of trajectories and parameters of driving. Implementation of any change in movement always takes place at the level of stabilization. Normally, a high degree of control over the movement is available here.

There are different driving styles that can be chosen to reach a destination. Multiple types of driving styles can be distinguished based on the choice of maneuvers and driving parameters [e.g., 40, 41]. In automated driving, the manufacturer usually defines the car’s driving style. Numerous studies can be found in the literature in which optimal specifications for maneuvers and parameters in automated driving are defined [e.g., 42]. Here, metrics of the second level of our AH are usually used as design criteria [cf. 42]. Among drivers, the preferred driving style varies based on their personal characteristics and habits [43]. It may also vary depending on the driving environment [44]. In automated driving, the preferred driving styles may differ from those in manual driving [45]. Driving maneuvers, trajectories and driving parameters represent the object-related functions of motion control in the proposed AH. As object-related functions, these aspects in the AH stand above basic objects and resources, which are combined into the three basic groups: passengers, vehicle, and environment.

Driving Maneuvers.

Maneuvers provide a rough scheme of movement. A distinction is made between implicit and explicit maneuvers. Explicit maneuvers are those operation units of the guidance task that are complete on their own (e.g., “changing lanes”), while implicit maneuvers are not [46, 47]. Implicit maneuvers are only completed by the initiation of explicit maneuvers or the end of the journey (e.g., “following lane”) [46]. In some cases, there is optional freedom of choice in the selection of explicit maneuvers. A change of lanes on the motorway, for example, can be considered as an optional action in terms of purpose, which solely influences efficiency and satisfaction. However, there are other situations in which a change of lanes is mandatory, e.g., to take the correct exit to reach the determined destination. Implicit maneuvers are mandatory and can be interrupted by explicit optional maneuvers.

Parameters of Driving.

Maneuvers on their own are not sufficient to fully define the movement of a vehicle. Further parameters must be set to define the exact movement. This coincides with the driving styles mentioned above. A maneuver usually defines the reference systems for relevant driving parameters. In particular, the lane, other road users and environmental factors are possible references. Dynamic references (e.g., a vehicle in front) require a continuous flow of information to control the connected parameters. Primarily velocity, acceleration, distance, and time parameters can be described. Especially for distance parameters, a multitude of alternatives can be described based on varying references. The start or end time of a maneuver or a parameter change is a specific time parameter. Depending on the reference system, the chosen lane on a multi-lane road or the chosen parking space in a parking area, for example, can also be described as parameters of the current maneuver.

At least one alternative of possible parameters can usually be identified as highly relevant for the current maneuver. We propose to categorize parameters into maneuver parameters and movement parameters. For example, steering angle is a basic movement parameter and eccentricity between lane markings is a maneuver parameter for following the course of a road. On a free field, however, steering angle can become the maneuver parameter, as there are no reference points defined for the transverse guidance by the maneuver.

The optional space for choosing parameters varies depending on the driving situation. For example, in the case of following a free lane, the optional space of action regarding the velocity is larger than in the case of following another vehicle. Therefore, the choice of parameters usually offers optional freedom that is restricted by mandatory limits.
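The idea of optional freedom restricted by mandatory limits can be sketched as clamping a user-desired parameter value to the currently admissible range. The sketch below is a simplification: the function names are hypothetical, and the rule that a lead vehicle caps the admissible speed at its own speed is an assumption made only for this example.

```python
def apply_mandatory_limits(desired, lower, upper):
    """Restrict a desired parameter value to the admissible range [lower, upper]."""
    if lower > upper:
        raise ValueError("no admissible value: lower limit exceeds upper limit")
    return min(max(desired, lower), upper)


def admissible_speed_range(speed_limit_mps, lead_speed_mps=None):
    """Hypothetical rule: when following, the lead vehicle caps the speed."""
    if lead_speed_mps is None:
        upper = speed_limit_mps
    else:
        upper = min(speed_limit_mps, lead_speed_mps)
    return 0.0, upper


desired_speed = 30.0  # m/s, chosen by the user

# Following a free lane: the optional space is bounded by the speed limit only.
low, high = admissible_speed_range(speed_limit_mps=33.0)
print(apply_mandatory_limits(desired_speed, low, high))

# Following another vehicle: the optional space is smaller.
low, high = admissible_speed_range(speed_limit_mps=33.0, lead_speed_mps=25.0)
print(apply_mandatory_limits(desired_speed, low, high))
```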

3.3 Control of Non-Driving-Related Tasks

If the automation allows users to perform NDRTs (level 3 or higher of SAE J3016), the driver is usually free to choose which tasks to perform. Activities such as reading, eating and watching a movie are possible tasks that users could perform [48]. The completion of some NDRTs may be mandatory for the user (e.g., work tasks). By completing mandatory NDRTs, overall time efficiency increases. Optional NDRTs can be considered as an influence on user satisfaction. For this reason, controlling NDRTs is considered a purpose-related function in this paper. The actual NDRT is the object-related function or defines the object-related function that utilizes the basic resources.

Each NDRT can require different resources of the driver. Although this may cause a distraction from the driving task, users will usually continue to perceive parts of the movement (e.g., through vestibular perception). During the NDRT of observing the surroundings [49], perception of the movement will be extensive.

The automation can intervene in NDRTs if these take place in controllable areas, e.g., on in-vehicle screens. Otherwise, the automation could only interact with the user via sensory cues (e.g., auditory or visual cues).

3.4 Monitoring, Failures, and Changes in Control

Monitoring Task.

The monitoring of automation by humans is described as a task for the fulfillment of safety goals in case of an automation failure [1]. Monitoring of the human by automation usually means observing the driver’s status to make sure the driver complies with their monitoring task [1]. In addition to monitoring the interaction partner, an agent can also perform self-monitoring. This is mandatory for an automation of level 3 of SAE J3016 or above. Self-monitoring can take place, for example, through redundant system design or plausibility checks using stored knowledge.

For the monitoring task, all relevant information must be accessible by the monitoring agent. If an action with negative effects on the target function is detected, a change in control over the corresponding subtask should be initiated. Based on the prioritization of goals, we also propose to divide the monitoring task into mandatory and optional parts. This adjustment leads to mandatory and optional interventions in case of failures in the mandatory and optional action spaces.

Failures and Interventions.

To avoid failures, the acting agent (human and/or automation) must be fully aware of the overall target function. Within user-centered design, the target function from the user’s point of view should be the criterion used for optimization. The automation must perceive this since the target function varies depending on user and situation. However, the user’s wishes and needs are currently not well measurable and therefore represent the first source of possible failures, which particularly affects the optional goal components.

Despite complete knowledge of the target function, failures can occur for both agents and in each step of information processing and reaction. Therefore, we combined basic information processing models by Parasuraman et al. [50] and Endsley and Kaber [51] in connection with the previous insights. Figure 2 shows the combined process model.

Failures can occur in all steps of the model. In addition to failures, self-monitoring can lead to uncertainty in the execution of a processing step. For example, this could be the case when several layers of the previous step serve as an input and contradict each other. Uncertainties can be treated like (possible) failures, where an active involvement of the interaction partner is possible.

Failures are transmitted through the process model to the following processing steps and only become effective at execution level. Consequently, the user’s target function is influenced only in the last step of the process (cf. Fig. 2). A transmission of failures to the following steps can be prevented by intervening in every process step in which the failure or consequential failures occur. To do this, failures must be identified via monitoring and appropriate correction must be initiated.
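The propagation of failures through consecutive processing steps can be sketched as follows. The stage names follow the four-stage model of Parasuraman et al. [50] and are only a stand-in for the combined model of Fig. 2; the function name and the rule that a single monitored stage at or after the failure is enough to correct it are assumptions of this sketch.

```python
# Stand-in stages; the combined process model of Fig. 2 may differ.
STAGES = [
    "information acquisition",
    "information analysis",
    "decision selection",
    "action implementation",
]


def failure_becomes_effective(failed_stage, monitored_stages):
    """Return True if a failure reaches execution without being corrected.

    failed_stage: stage in which the failure first occurs.
    monitored_stages: stages in which monitoring detects and corrects
    the failure or its consequential failures (assumed to always succeed).
    """
    start = STAGES.index(failed_stage)
    for stage in STAGES[start:]:
        if stage in monitored_stages:
            return False  # corrected here, so nothing is passed on
    return True  # passed through all remaining stages uncorrected


# A faulty analysis is caught when the resulting decision is checked.
print(failure_becomes_effective("information analysis", {"decision selection"}))

# Monitoring an earlier stage does not help against a later failure.
print(failure_becomes_effective("decision selection", {"information acquisition"}))
```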

A distinction must be made between transitions and interventions. Transitions are defined as changing between two different driving states of automated driving [52]. Driving states are usually understood as levels of SAE J3016. Transitions often cause a change of roles. Interventions, on the other hand, only describe a conscious active flow of information from the user towards the automation. An intervention can either require a transition or be necessary at the current driving state. The term takeover is often used in this context.

On decision level, failures can affect the mandatory reduction of action space and/or the decision in the remaining optional solution space (cf. Fig. 2). In addition, failures can occur on maneuver level and/or on parameter level. For example, the optimal maneuver may be known, but the parameterization, e.g., of the start time, may be erroneous.

If a decision is actively not being taken due to uncertainty regarding expected effects on mandatory goals, the resulting effect can have a negative influence on optional goals. If, for example, an overtaking maneuver is not initiated due to safety concerns, this only negatively affects optional goals like efficiency, as no action is being taken.

In research, the most critical failures are often considered because the focus is on safety goals. When viewing the system holistically, different types of failures can be distinguished. This is especially important for failures in the optional action space. Based on the conducted literature review and previous insights, we propose the following dimensions of description:

- Time budget for failure correction: How much time remains before a corrective intervention must be made to be able to correct the failure without causing avoidable secondary failures?

- Information requirements for failure correction: Does the automation require a static or a continuous flow of information from the user to correct the failure?

- Effect of failure on user target function: Does the uncorrected state of driving have a negative effect on optional and/or mandatory parts of the overall target function from the driver’s point of view?

- Initiator of failure correction: Does the human or the automation start the interaction for failure correction through a first active action?
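The four dimensions listed above can be captured in a small record type, for instance to compare or order failures. This is a minimal sketch: the type names, field names, example failures, and the ordering rule (mandatory effects first, then shortest time budget) are hypothetical and not part of the proposed dimensions themselves.

```python
from dataclasses import dataclass
from enum import Enum


class InformationFlow(Enum):
    STATIC = "static"
    CONTINUOUS = "continuous"


class Initiator(Enum):
    HUMAN = "human"
    AUTOMATION = "automation"


@dataclass(frozen=True)
class FailureDescription:
    name: str
    time_budget_s: float               # time budget for failure correction
    information_flow: InformationFlow  # information requirements
    affects_mandatory: bool            # effect on mandatory target components
    affects_optional: bool             # effect on optional target components
    initiator: Initiator               # who starts the correction


def by_urgency(failures):
    """Hypothetical ordering: mandatory effects first, then shortest time budget."""
    return sorted(failures, key=lambda f: (not f.affects_mandatory, f.time_budget_s))


# Invented examples for illustration only.
failures = [
    FailureDescription("missed overtaking opportunity", 20.0,
                       InformationFlow.STATIC, False, True, Initiator.HUMAN),
    FailureDescription("lost lane markings", 4.0,
                       InformationFlow.CONTINUOUS, True, True, Initiator.AUTOMATION),
    FailureDescription("late optional lane change", 2.0,
                       InformationFlow.STATIC, False, True, Initiator.HUMAN),
]

for failure in by_urgency(failures):
    print(failure.name)
```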

These dimensions partly overlap with descriptions of transitions from Lu et al. [52]. Similar distinctions to dimensions three and four are also mentioned by Lu et al. [52] but are defined more broadly here. Additionally, in this work, an intervention is considered as initiated by the automation if the automation asks the user to perform an action. This is described differently by Lu et al. [52].

4 Design Spaces for User Interventions

This chapter describes general design spaces for user interventions, which have been identified using the developed AH and the literature insights described above. Interventions are defined as takeovers of parts of the driving task in a defined driving state. Additionally, we reviewed alternative taxonomies of automation levels to point out possibilities to specify these design spaces and to find consistencies.

4.1 Identified Design Spaces Based on the System Analysis

As in the previous sections, the focus is on interventions into control of movement and optimization of DX. However, the AH can also be used to describe design spaces regarding NDRTs. For example, the automation may intervene in the execution of a secondary task if this is useful for the individual purpose-goal construct.

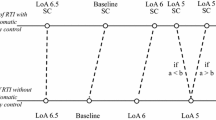

For interventions into control of movement, different layers can be distinguished. We propose the classification of interventions shown in Fig. 3. Interventions at a lower level can influence interventions at a higher level or override them altogether. For example, the basic driving style can be adapted situationally via interventions into the choice of maneuvers and parameters. The level of control of the automation is higher for higher levels of intervention and the level of control of the human driver is higher for lower levels of intervention.

Based on the insights of the previous sections and our classification of interventions (Fig. 3), we propose the following design spaces. An automation should try to optimize movement to the driver’s target function. Therefore, the overall design space is described as follows.

1. The automation should model the driver’s overall target function as closely as possible to be able to make decisions based on it. This should include individual optional components of the target function of the current user.

It cannot be expected that vehicles will be able to reproduce the target function completely in the near future. Intrinsically motivated optional actions are likely to be problematic. Therefore, design space two emerges.

2. The user should be able to adapt the basic target function used by the automation to individual needs.

Normally, the maneuver in execution defines a dominant set of maneuver parameters, which is most relevant for the current situation. For some parameters it is possible to define alternatives. Maneuver parameters are often connected to a dynamic reference target, e.g., distance to another moving vehicle. This design space can be used for a correction of failures in the optional and the mandatory action space, as long as reference targets are perceived correctly by the automation.

Timing parameters open up another space for interaction concepts for an improved DX, which is described by design space three.

3. The time between a decision to perform an action and the point of necessary execution can be used for interactions with the user.

This design space can be used for optional and mandatory interventions as well. The time span to be used can vary between situations. Optional interventions might often offer longer time spans than mandatory interventions. In the case of a fallible automation (levels 2 and 3 of SAE J3016), we propose two generic automation behaviors regarding the use of the optional action spaces.

- Defensive optional decision behavior: The automation avoids optional actions to produce as few mandatory interventions as possible. This tends to make the optional components of the target function less well served or users may have to take advantage of these opportunities on their own.

- Offensive optional decision behavior: The vehicle tends to provoke mandatory interventions through the execution of optional actions. This results in a tendency to serve the optional target components more often, but simultaneously in a tendency to produce more mandatory interventions.

The actual decision behavior might be a combination of both types. However, the types illustrate the connection between optional and mandatory interventions. Both types can be optimized through partial interventions into maneuver and parameter decisions.
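The two generic behaviors can be pictured as different thresholds on the estimated risk that an optional action provokes a mandatory intervention. This is a hedged sketch rather than a proposed implementation: the risk estimates, the threshold values, and all names below are hypothetical.

```python
# Hypothetical thresholds on the estimated probability that executing an
# optional action leads to a mandatory intervention by the user.
DEFENSIVE_THRESHOLD = 0.05
OFFENSIVE_THRESHOLD = 0.40


def execute_optional_action(intervention_risk, threshold):
    """Execute an optional action only if its estimated risk is acceptable."""
    return intervention_risk <= threshold


def executed_actions(candidates, threshold):
    """Names of the optional actions the automation would execute on its own."""
    return [
        name for name, risk in candidates
        if execute_optional_action(risk, threshold)
    ]


# Invented candidate actions with invented risk estimates.
candidates = [
    ("change to faster lane", 0.03),
    ("overtake truck", 0.20),
    ("overtake in dense traffic", 0.60),
]

print(executed_actions(candidates, DEFENSIVE_THRESHOLD))
print(executed_actions(candidates, OFFENSIVE_THRESHOLD))
```

A threshold between the two values corresponds to a combination of both behaviors. Actions that are not executed remain available for optional interventions by the user.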

-

4.

If the automation anticipates a (potential) failure, it can actively request the user to intervene in the intended action at the parameter or maneuver level.

This design space is usable for optional and mandatory actions. If interventions are considered as described here, the sources of (possible) failures could be mapped onto their (possible) effects on specific maneuvers and parameters. Thus, the automation can direct interventions to specific parameters or maneuvers and does not need to trigger a general takeover. One has to differentiate between the impact of a desired action and the impact of the (expected) failure on the target function of the user.
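As a rough sketch of such a mapping, the following example relates failure sources to the maneuvers and parameters they might affect and derives a targeted request from it. The failure names, maneuver names, parameter names and the fallback rule for unmapped failures are assumptions made for illustration only.

```python
# Hypothetical mapping from failure sources to the maneuvers and
# parameters they might affect; all names are illustrative.
FAILURE_EFFECTS = {
    "lead_vehicle_tracking_lost": {
        "maneuvers": {"follow_vehicle"},
        "parameters": {"time_gap"},
    },
    "lane_marking_unclear": {
        "maneuvers": {"keep_lane", "lane_change"},
        "parameters": {"lateral_offset"},
    },
}


def request_intervention(failure_source, active_maneuver):
    """Derive an intervention request for an anticipated failure.

    Assumed rule: an unmapped failure falls back to a general takeover,
    a mapped failure that affects the active maneuver yields a targeted
    request, and a mapped failure that does not affect it yields none.
    """
    effects = FAILURE_EFFECTS.get(failure_source)
    if effects is None:
        return {"type": "general_takeover"}
    if active_maneuver in effects["maneuvers"]:
        return {
            "type": "targeted",
            "maneuver": active_maneuver,
            "parameters": sorted(effects["parameters"]),
        }
    return {"type": "none"}


print(request_intervention("lead_vehicle_tracking_lost", "follow_vehicle"))
print(request_intervention("unknown_sensor_fault", "keep_lane"))
```

The sketch only covers the routing of a request. Whether the requested action is optional or mandatory, and how the failure affects the target function of the user, would have to be evaluated separately.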

In some situations, users or the automation can make wrong decisions. For mistaken decisions, the following design space arises.

-

5.

The user should be able to take back mistaken decisions by canceling the execution of a certain decision on maneuver or parameter level and, if necessary, choose an alternative.

Design space six partly overlaps with previous design spaces. It is also applicable to both optional and mandatory interventions.

The described design spaces often require the possibility of specifying certain target parameters or maneuvers by the user. Based on the previously presented model of information processing, the following more precise design space for optional interventions can be described.

-

6.

The automation can reduce possibilities for optional interventions at parameter and maneuver level through mandatory constraints to avoid a decision with a negative impact on mandatory goals.

If the automation can itself fail when determining the mandatory constraints for this design space, this contradicts design spaces four and five.

For mandatory interventions, the various characteristics of failures based on the proposed dimensions of description can be used to optimize emerging operating concepts.

The lowest level of interventions in Fig. 3 describes direct and continuous interventions by the user via at least one basic movement parameter that does not depend on the surroundings, e.g., the steering wheel angle. This level of intervention is useful if the automation is not able to identify maneuver parameters correctly. In addition, in some maneuvers the basic movement parameters are the only option because there are no reference points that could be used. Design solutions for this level of intervention include, e.g., haptic shared control concepts [56].

4.2 Specification Using Alternative Automation Taxonomies

The general design spaces described in the previous section can be transformed into different interaction concepts. We reviewed alternative taxonomies to the SAE J3016 to discover consistencies with our findings and to point out possibilities to specify these design spaces. This is only presented briefly in this paper as an extension. An overview of different alternative taxonomies is given by Vagia et al. [53]. In this paper we used the taxonomies of Sheridan [54, 55] and Endsley and Kaber [51]. Both taxonomies describe ten levels of human automation interaction. These levels offer various modes of cooperation between both agents. Based on this, we identified the following basic dimensions for the description of user interventions in interaction concepts for automated systems.

-

1.

Informing the user: Is the user actively informed by the automation regarding an intervention?

-

2.

Decision selection by the user: Must or may the user choose one option out of a set of possible actions?

There are two concepts of decision selection. Either the user can choose from the full set of actions, or the automation reduces the solution space to a certain set.

-

3.

Decision reviewing by the user: Does the automation present a concrete decision, which can or should be reviewed by the user?

If a decision is taken by the vehicle, a user intervention can be implemented using one of the following options.

-

The user can cancel or reverse the decision taken by the automation during execution.

-

The user is given a finite period of time to place a veto before the automated execution of the decision taken begins.

-

The user has to agree to the decision taken by the automation before the automated execution will begin.

These three types differ only in the duration given to the user for a potential veto. It is less than or equal to zero in case of the cancellation concept and it is infinite in case of the approval concept.

-

4.

Implementation of user interventions: How are user decisions implemented in relation to decisions taken by the automation?

The following three options show the possible range for this dimension.

-

The decision taken by the human operator is only implemented if the automation agrees.

-

A mixture between the decision taken by the automation and the one taken by the human is implemented.

-

The human’s decision is always implemented, even if the automation does not agree.

The first dimension (informing) is needed for all interventions initiated by the automation. In addition, users can be actively informed if a decision does not reach sufficient confidence and they should consequently review it. This corresponds to design spaces four and five of the previous section. The second dimension overlaps with design spaces two, three and seven. In case of optional interventions, the automation can reduce the set of possible actions by the described mandatory constraints, if it recognizes them correctly. Dimension three corresponds with design space six of the previous section. Because situations in road traffic vary widely, a long time span for placing an optional veto will not be applicable in every situation, which is why all of the described options of this dimension can be relevant. The dimension of implementation is primarily relevant for the lowest level of interventions as defined in Fig. 3, which is not considered in detail in this paper. Design solutions can be created by combining the different dimensions in their various forms. Not every combination is useful, but these descriptive dimensions of interventions can be used to create new design solutions based on the design spaces defined in the previous section.
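Two of the dimensions above lend themselves to a compact sketch: the three review options of dimension three can be expressed through a single veto duration, and the options of dimension four through a single authority weight. The following code is an illustration under these assumptions. The names `ReviewConcept`, `may_start_execution`, `blend` and `human_authority` are hypothetical, and the linear blend is a simplification that only applies to continuous parameters.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewConcept:
    """Decision reviewing, described only by the veto duration in seconds."""
    veto_window_s: float

    @property
    def kind(self):
        if self.veto_window_s <= 0:
            return "cancellation"  # execution starts at once, user may cancel
        if math.isinf(self.veto_window_s):
            return "approval"      # execution waits for explicit agreement
        return "veto"              # execution starts after a finite window


def may_start_execution(concept, elapsed_s, user_vetoed=False, user_approved=False):
    """Decide whether the automated execution may begin."""
    if user_vetoed:
        return False
    if concept.kind == "approval":
        return user_approved
    return elapsed_s >= concept.veto_window_s


def blend(automation_value, human_value, human_authority):
    """Mix both decisions for a continuous parameter (assumed linear blend).

    human_authority = 0.0: only the automation's value is implemented,
    human_authority = 1.0: the human's value is always implemented.
    """
    if not 0.0 <= human_authority <= 1.0:
        raise ValueError("human_authority must lie in [0, 1]")
    return (1.0 - human_authority) * automation_value + human_authority * human_value


for window in (0.0, 3.0, math.inf):
    concept = ReviewConcept(window)
    print(concept.kind, may_start_execution(concept, elapsed_s=1.0))
```

Treating the duration and the authority as continuous values is a design choice of this sketch. It makes intermediate concepts easy to express, but it does not cover discrete maneuver decisions, for which a mixture of both decisions may not be meaningful.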

5 Discussion and Limitations

This paper presents a system of design spaces for user interventions in automated driving. These design spaces derive from theoretical models and literature on human-machine interaction and cognitive engineering. We define the safe, time-efficient, ecological, and satisfying transport of human and/or load as the functional purpose of the system consisting of a driver and an automated vehicle. This definition leads to a large degree of complexity at its level of abstraction while it is still non-exhaustive.

AH as a method has limitations concerning automated control systems [20]. This paper addresses this limitation by considering the user as part of the system and thus raising the analysis to a higher level of abstraction. We also included failures and the monitoring of failures in our analysis.

Since this is a theoretical approach, the most important limitation is the lack of applicable validation of the findings. Real world applications could lead to other findings, even if the current literature reveals a dependency between parameters, maneuvers and the functional purpose. Nevertheless, the results can be used to further structure future developments in this field. In addition, there are numerous statements in existing literature that fully or partially support our results. Also, in some studies, initial concepts for design spaces as described here have already been developed. For example, an option for adjusting driving style was recommended multiple times [42, 56, 57], which is in line with the findings of this work. Hecht et al. [58] describe alternative concepts for settings in route planning, which can also be understood as a concept to optimize the automated drive to the target function of the individual user.

First approaches of maneuver-based control have already been tested [9]. The focus was usually not on optional interventions. This paper reveals a potential in these kinds of interaction concepts for user-centered driving automation. As proposed, the maneuver defines dominant parameters in most cases. Applying these to user interventions is to be considered as a design space. However, it may make sense to partially switch to other parameters e.g., because an alternative parameter might be easier to track for the automation or to reduce the complexity of the interaction concept.

The described design spaces arise because of differences among user characteristics. In turn, this also means that not every design space is useful for every user.

Based on the findings of this work, the criticism towards SAE J3016 presented in the introduction can be expanded. SAE J3016 lacks a detailed description of interventions and of how they affect transitions between different levels. However, this does not argue against SAE J3016 itself, but shows the need for an extension of this taxonomy. It has become clear that the focus must be shifted from safety as the main evaluation criterion to the overall target function of the individual user. Even if the system performs in an ideal way regarding safety, a positive value can be added for some user groups through optional interventions. Therefore, the driving task has to be disassembled into smaller units than currently done by SAE J3016. As described above, it is reasonable to look at the level of driving maneuvers and driving parameters.

With the identified design spaces implemented, complete takeovers of the driving task can potentially be reduced. With this approach, an automation could be created that integrates the driver in a situation-specific, proactive and user-centered manner to (partly) avoid mandatory failures and to achieve optimal DX. For mandatory takeovers, the entire spectrum of failures should be considered. In addition, the driver could exploit the optional space of action at any time, adapting to personal needs.

6 Conclusion

We have identified and described design spaces occurring beyond the scope of SAE J3016. Our findings broaden its perspective and should be used for an extension and not a replacement of the SAE J3016. Based on this, various alternative operating concepts can be developed for (partially) automated vehicles. A concrete implementation of these operating approaches and an evaluation of their real-world applicability is the reasonable next step. We propose that the aim of future driving automation should be a frequent, self-determined and variant-rich use of automation functions in order to fulfill users’ individual and situational needs. Safety is more important than driver satisfaction, but it should not be the only criterion of evaluation. The option to adapt the automated drive to users’ individual target functions via optional interventions can be used to improve the driving experience of at least some user groups at every level of driving automation described by SAE J3016.

References

1. SAE International: Taxonomy and Definitions for Terms Related to On-Road Motor Vehicle Automated Driving Systems: J3016_202104 (2021)

2. Stayton, E., Stilgoe, J.: It’s time to rethink levels of automation for self-driving vehicles [opinion]. IEEE Technol. Soc. Mag. 39(3), 13–19 (2020)

3. Bengler, K., Winner, H., Wachenfeld, W.: No human – no cry? at – Automatisierungstechnik 65, 471–476 (2017). https://doi.org/10.1515/auto-2017-0021

4. Inagaki, T., Sheridan, T.B.: A critique of the SAE conditional driving automation definition, and analyses of options for improvement. Cogn. Technol. Work 21(4), 569–578 (2019). https://doi.org/10.1007/s10111-018-0471-5

5. Seppelt, B., Reimer, B., Russo, L., Mehler, B., Fisher, J., Friedman, D.: Consumer confusion with levels of vehicle automation. Driving Assess. Conf. 10, 391–397 (2019)

6. Abraham, H., Seppelt, B., Mehler, B., Reimer, B.: What’s in a name: vehicle technology branding & consumer expectations for automation. In: Boll, S., Pfleging, B., Donmez, B., Politis, I., Large, D. (eds.) Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications. AutomotiveUI 2017, pp. 226–234. ACM (2017). https://doi.org/10.1145/3122986.3123018

7. Homans, H., Radlmayr, J., Bengler, K.: Levels of driving automation from a user’s perspective: how are the levels represented in the user’s mental model? In: Ahram, T., Taiar, R., Colson, S., Choplin, A. (eds.) IHIET 2019. AISC, vol. 1018, pp. 21–27. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-25629-6_4

8. Zacherl, L., Radlmayr, J., Bengler, K.: Constructing a mental model of automation levels in the area of vehicle guidance. In: Ahram, T., Karwowski, W., Vergnano, A., Leali, F., Taiar, R. (eds.) IHSI 2020. AISC, vol. 1131, pp. 73–79. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-39512-4_12

9. Flemisch, F.O., Bengler, K., Bubb, H., Winner, H., Bruder, R.: Towards cooperative guidance and control of highly automated vehicles: H-Mode and conduct-by-wire. Ergonomics 57(3), 343–360 (2014). https://doi.org/10.1080/00140139.2013.869355

10. Rasmussen, J.: The role of hierarchical knowledge representation in decision making and system management. IEEE Trans. Syst. Man Cybern. 15(2), 234–243 (1985). https://doi.org/10.1109/TSMC.1985.6313353

11. Allison, C.K., Stanton, N.A.: Constraining design: applying the insights of cognitive work analysis to the design of novel in-car interfaces to support eco-driving. Automot. Innov. 3(1), 30–41 (2020). https://doi.org/10.1007/s42154-020-00090-5

12. Lee, S., Nam, T., Myung, R.: Work domain analysis (WDA) for ecological interface design (EID) of vehicle control display. In: ICAI 2008, pp. 387–392 (2008)

13. Li, M., Katrahmani, A., Kamaraj, A.V., Lee, J.D.: Defining a design space of the auto-mobile office: a computational abstraction hierarchy analysis. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 64(1), 293–297 (2020). https://doi.org/10.1177/1071181320641068

14. Stoner, H.A., Wiese, E.E., Lee, J.D.: Applying ecological interface design to the driving domain: the results of an abstraction hierarchy analysis. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 47(3), 444–448 (2003). https://doi.org/10.1177/154193120304700341

15. Birrell, S.A., Young, M.S., Jenkins, D.P., Stanton, N.A.: Cognitive work analysis for safe and efficient driving. Theor. Issues Ergon. Sci. 13(4), 430–449 (2012). https://doi.org/10.1080/1463922X.2010.539285

16. Walker, G., et al.: Modelling driver decision-making at railway level crossings using the abstraction decomposition space. Cogn. Technol. Work 23(2), 225–237 (2021). https://doi.org/10.1007/s10111-020-00659-4

17. Burns, C.M., Vicente, K.J.: Model-based approaches for analyzing cognitive work: a comparison of abstraction hierarchy, multilevel flow modeling, and decision ladder modeling. Int. J. Cogn. Ergon. 5(3), 357–366 (2001). https://doi.org/10.1207/S15327566IJCE0503_13

18. Reising, D.V.C.: The abstraction hierarchy and its extension beyond process control. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 44(1), 194–197 (2000). https://doi.org/10.1177/154193120004400152

19. McIlroy, R.C., Stanton, N.A.: Getting past first base: going all the way with cognitive work analysis. Appl. Ergon. 42(2), 358–370 (2011). https://doi.org/10.1016/j.apergo.2010.08.006

20. Lind, M.: Making sense of the abstraction hierarchy in the power plant domain. Cogn. Tech Work 5, 67–81 (2003). https://doi.org/10.1007/s10111-002-0109-4

21. Chan, C.-Y.: Advancements, prospects, and impacts of automated driving systems. Int. J. Transp. Sci. Technol. 6(3), 208–216 (2017). https://doi.org/10.1016/j.ijtst.2017.07.008

22. Hartwich, F., Schmidt, C., Gräfing, D., Krems, J.F.: In the passenger seat: differences in the perception of human vs. automated vehicle control and resulting HMI demands of users. In: Krömker, H. (ed.) HCII 2020. LNCS, vol. 12212, pp. 31–45. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-50523-3_3

23. Simon, K., Jentsch, M., Bullinger, A.C., Schamber, G., Meincke, E.: Sicher aber langweilig? Auswirkungen vollautomatisierten Fahrens auf den erlebten Fahrspaß. Z. Arb. Wiss. 69, 81–88 (2015). https://doi.org/10.1007/BF03373944

24. Mühl, K., Strauch, C., Grabmaier, C., Reithinger, S., Huckauf, A., Baumann, M.: Get ready for being chauffeured: passenger’s preferences and trust while being driven by human and automation. Hum. Factors 62(8), 1322–1338 (2020). https://doi.org/10.1177/0018720819872893

25. Zhang, Y., Angell, L., Bao, S.: A fallback mechanism or a commander? A discussion about the role and skill needs of future drivers within partially automated vehicles. Transp. Res. Interdiscip. Perspect. 9 (2021). https://doi.org/10.1016/j.trip.2021.100337

26. Gardner, B., Abraham, C.: What drives car use? A grounded theory analysis of commuters’ reasons for driving. Transp. Res. F: Traffic Psychol. Behav. 10(3), 187–200 (2007). https://doi.org/10.1016/j.trf.2006.09.004

27. Sciarretta, A., Vahidi, A.: Energy-Efficient Driving of Road Vehicles. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-24127-8

28. Naddeo, A., Califano, R., Cappetti, N., Vallone, M.: The effect of external and environmental factors on perceived comfort: the car-seat experience. In: Proceedings of the Human Factors and Ergonomics Society Europe, pp. 291–308 (2016)

29. Eberl, T.X.: Charakterisierung und Gestaltung des Fahr-Erlebens der Längsführung von Elektrofahrzeugen. Dissertation, Technische Universität München (2014)

30. Klebelsberg, D.: Das Modell der subjektiven und objektiven Sicherheit. Schweizerische Zeitschrift für Psychologie und ihre Anwendungen, pp. 285–294 (1977)

31. Frison, A.-K., Wintersberger, P., Liu, T., Riener, A.: Why do you like to drive automated? In: Fu, W.-T., Pan, S., Brdiczka, O., Chau, P., Calvary, G. (eds.) Proceedings of the 24th International Conference on Intelligent User Interfaces, IUI 2019, pp. 528–537. ACM, New York (2019). https://doi.org/10.1145/3301275.3302331

32. Rödel, C., Stadler, S., Meschtscherjakov, A., Tscheligi, M.: Towards Autonomous Cars. In: Miller, E. (ed.) Proceedings of the 6th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2014. ACM, New York (2014). https://doi.org/10.1145/2667317.2667330

33. Helgath, J., Braun, P., Pritschet, A., Schubert, M., Böhm, P., Isemann, D.: Investigating the effect of different autonomy levels on user acceptance and user experience in self-driving cars with a VR driving simulator. In: Marcus, A., Wang, W. (eds.) DUXU 2018. LNCS, vol. 10920, pp. 247–256. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-91806-8_19

34. Hartwich, F., Beggiato, M., Krems, J.F.: Driving comfort, enjoyment and acceptance of automated driving - effects of drivers’ age and driving style familiarity. Ergonomics 61(8), 1017–1032 (2018). https://doi.org/10.1080/00140139.2018.1441448

35. Engeln, A., Vratil, B.: Fahrkomfort und Fahrgenuss durch den Einsatz von Fahrerassistenzsystemen. In: Schade, J., Engeln, A. (eds.) Fortschritte der Verkehrspsychologie. VS Verlag für Sozialwissenschaften, pp. 175–288 (2008)

36. Bier, L., Joisten, P., Abendroth, B.: Warum nutzt der Mensch bevorzugt das Auto als Verkehrsmittel? Eine Analyse zum erlebten Fahrspaß unterschiedlicher Verkehrsmittelnutzer. Z. Arb. Wiss. 73, 58–68 (2019). https://doi.org/10.1007/s41449-018-00144-9

37. van Huysduynen, H.H., Terken, J., Eggen, B.: Why disable the autopilot? In: Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2018, pp. 247–257. ACM, New York (2018). https://doi.org/10.1145/3239060.3239063

38. von Rosenstiel, L.: Wertewandel und Kooperation. In: Spieß, E. (ed.) Formen der Kooperation. Bedingungen und Perspektiven. Schriftenreihe Wirtschaftspsychologie, pp. 279–294. Verlag für angewandte Psychologie, Göttingen (1998)

39. Donges, E.: Aspekte der aktiven Sicherheit bei der Führung von Personenkraftwagen. Automobil-Industrie 27(2), 183–190 (1982)

40. Sagberg, F., Selpi, Piccinini, G.F., Engström, J.: A review of research on driving styles and road safety. Hum. Factors 57(7), 1248–1275 (2015). https://doi.org/10.1177/0018720815591313

41. Colombo, T., Panzani, G., Savaresi, S.M., Paparo, P.: Absolute driving style estimation for ground vehicles. In: 2017 IEEE Conference on Control Technology and Applications. CCTA, pp. 2196–2201. IEEE (2017). https://doi.org/10.1109/CCTA.2017.8062777

42. Ossig, J., Cramer, S., Bengler, K.: Concept of an ontology for automated vehicle behavior in the context of human-centered research on automated driving styles. Information 12(1), 21 (2021). https://doi.org/10.3390/info12010021

43. Chen, S.-W., Fang, C.-Y., Tien, C.-T.: Driving behaviour modelling system based on graph construction. Transp. Res. Part C: Emerg. Technol. 26, 314–330 (2013). https://doi.org/10.1016/j.trc.2012.10.004

44. Han, W., Wang, W., Li, X., Xi, J.: Statistical-based approach for driving style recognition using Bayesian probability with kernel density estimation. IET Intell. Transp. Syst. 13, 22–30 (2019). https://doi.org/10.1049/iet-its.2017.0379

45. Craig, J., Nojoumian, M.: Should self-driving cars mimic human driving behaviors? In: Krömker, H. (ed.) HCII 2021. LNCS, vol. 12791, pp. 213–225. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-78358-7_14

46. Schreiber, M., Kauer, M., Schlesinger, D., Hakuli, S., Bruder, R.: Verification of a maneuver catalog for a maneuver-based vehicle guidance system. In: 2010 IEEE International Conference on Systems, Man and Cybernetics, SMC 2010, pp. 3683–3689 (2010). https://doi.org/10.1109/ICSMC.2010.5641862

47. Kauer, M., Schreiber, M., Bruder, R.: How to conduct a car? A design example for maneuver based driver-vehicle interaction. In: 2010 IEEE Intelligent Vehicles Symposium, pp. 1214–1221 (2010). https://doi.org/10.1109/IVS.2010.5548099

48. Naujoks, F., Befelein, D., Wiedemann, K., Neukum, A.: A review of non-driving-related tasks used in studies on automated driving. In: Stanton, N.A. (ed.) AHFE 2017. AISC, vol. 597, pp. 525–537. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-60441-1_52

49. Pfleging, B., Rang, M., Broy, N.: Investigating user needs for non-driving-related activities during automated driving. In: Häkkila, J., Ojala, T. (eds.) Proceedings of the 15th International Conference on Mobile and Ubiquitous Multimedia, MUM 2016, pp. 91–99. ACM (2016). https://doi.org/10.1145/3012709.3012735

50. Parasuraman, R., Sheridan, T.B., Wickens, C.D.: A model for types and levels of human interaction with automation. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 30(3), 286–297 (2000). https://doi.org/10.1109/3468.844354

51. Endsley, M.R., Kaber, D.B.: Level of automation effects on performance, situation awareness and workload in a dynamic control task. Ergonomics 42(3), 462–492 (1999). https://doi.org/10.1080/001401399185595

52. Lu, Z., Happee, R., Cabrall, C.D.D., Kyriakidis, M., de Winter, J.C.F.: Human factors of transitions in automated driving: a general framework and literature survey. Transp. Res. F: Traffic Psychol. Behav. 43, 183–198 (2016). https://doi.org/10.1016/j.trf.2016.10.007

53. Vagia, M., Transeth, A.A., Fjerdingen, S.A.: A literature review on the levels of automation during the years. What are the different taxonomies that have been proposed? Appl. Ergon. 53, 190–202 (2016). https://doi.org/10.1016/j.apergo.2015.09.013

54. Sheridan, T.B., Verplank, W.L.: Human and Computer Control of Undersea Teleoperators (1978)

55. Sheridan, T.B.: Telerobotics, Automation, and Human Supervisory Control. MIT Press, Cambridge (1992)

56. Festner, M., Eicher, A., Schramm, D.: Beeinflussung der Komfort- und Sicherheitswahrnehmung beim hochautomatisierten Fahren durch fahrfremde Tätigkeiten und Spurwechseldynamik. In: 11. Workshop Fahrerassistenzsysteme und automatisiertes Fahren, pp. 63–73 (2017)

57. Beggiato, M., Hartwich, F., Krems, J.: Der Einfluss von Fahrermerkmalen auf den erlebten Fahrkomfort im hochautomatisierten Fahren. at – Automatisierungstechnik 65(7), 512–521 (2017). https://doi.org/10.1515/auto-2016-0130

58. Hecht, T., Sievers, M., Bengler, K.: Investigating user needs for trip planning with limited availability of automated driving functions. In: Stephanidis, C., Antona, M. (eds.) HCII 2020. CCIS, vol. 1226, pp. 359–366. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-50732-9_48

Acknowledgement

This work was funded by the BMW Group.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Steckhan, L., Spiessl, W., Quetschlich, N., Bengler, K. (2022). Beyond SAE J3016: New Design Spaces for Human-Centered Driving Automation. In: Krömker, H. (eds) HCI in Mobility, Transport, and Automotive Systems. HCII 2022. Lecture Notes in Computer Science, vol 13335. Springer, Cham. https://doi.org/10.1007/978-3-031-04987-3_28

DOI: https://doi.org/10.1007/978-3-031-04987-3_28

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-04986-6

Online ISBN: 978-3-031-04987-3

eBook Packages: Computer Science (R0)