Abstract

This paper reviews the integration of Cognitive Neuroscience and Human-Computer Interaction and puts forward the main directions for the future development of Brain-Computer Interaction.

The research status and applications of Human-Computer Interaction based on Cognitive Neuroscience were reviewed through desktop analysis and a literature survey, together with new research trends in Brain-Computer Interface (BCI) at home and abroad. According to the brain signal acquisition method and the application, BCIs are divided into two types: (1) Passive brain-computer interface: exploring and modeling the neural mechanisms related to human interaction and, on that basis, iteratively improving computer design. (2) Initiative brain-computer interface: direct interaction between brain signals and the computer. The main future development directions of the Brain-Computer Interface field are then discussed at three progressive levels: broadening existing interaction channels, improving the reliability of human-computer interaction systems, and improving the interaction experience. Once a series of problems such as scene mining, algorithm optimization and model generalization are overcome, the deep integration and two-way promotion of Cognitive Neuroscience and Human-Computer Interaction will usher in the era of Brain-Computer Interface and the next generation of artificial intelligence.

Keywords

1 The Evolution of HCI

The development of Human-Computer Interaction (HCI) has passed through several stages: the computer-centered Command-Line Interface (CLI), which used computer language to input and output information through punched paper tape; the Graphical User Interface (GUI), represented by WIMP (Windows, Icons, Menus, Pointer); and the Touch-screen User Interface (TUI), which developed as intelligent mobile technology matured. With the boom in perception and computing technology, the interface between human and computer has gradually evolved into the Multimodal User Interface (MMUI), the Perceptual User Interface (PUI), and post-WIMP and non-WIMP interfaces. We call this the natural human-computer interaction phase, in which the five human senses (vision, hearing, taste, smell and touch) and physical data are interpreted in real time as computer input commands and parameters. Natural human-computer interaction has the following characteristics:

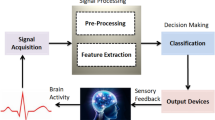

1.1 Provide Multi-modal Interaction Channels

In addition to traditional input methods such as mouse and touch, an intelligent human-computer system also supports eye-movement interaction, voice interaction, somatosensory interaction, brain-computer interaction and other modes of operation. Eye-movement interaction is common in AR/VR devices, where users interact through gaze and blinking. Voice interaction is one of the most rapidly developing human-computer interaction technologies of recent years. Speech recognition and speech synthesis in natural language processing have reached a relatively mature level, which has to some extent catalyzed the development of intelligent voice devices for the home, such as Xiaodu smart speakers, Apple Siri and Microsoft Xiaoice. Somatosensory interaction is applied mostly in entertainment. In Nintendo’s motion-sensing game Ring Fit Adventure, for example, the player performs a series of actions on the fitness ring, such as pushing, pulling and holding it level, to fire, collect coins, jump and so on, defeating monsters and completing a fitness adventure. In addition to motion-sensing games, AR and VR also support gamepads and gestures. Brain-computer interaction is a new type of interaction technology that has emerged in recent years. Its principle is to decode the activity of brain neurons and interpret the user’s intention, emotion, cognitive state and consciousness, either by implanting chips into the brain or through non-invasive EEG signal acquisition equipment, and so realize direct information communication between the brain and the computer. See Fig. 1.

1.2 Support Discrete and Continuous Information Exchange

Traditional human-computer interaction, such as the GUI, supports only discrete user operations on objects such as pictures and text through mouse and keyboard, so the process of information exchange is discrete. A natural human-computer interaction system combines the continuous and the discrete. By acquiring, processing and analyzing users’ multi-channel information continuously and in real time through multiple sensors, it can produce rapid, adaptive output in a timely manner. It is a multi-dimensional interaction mode that integrates the time factor into the human-computer system.

1.3 Allowing for Fuzzy Interaction with Uncertainty

Natural interaction allows users to submit input that is uncertain or imprecise. In physical space, not only are human beings constantly changing, but the spatial and temporal data of the surrounding environment are also constantly updated. How to transform uncertainty into certainty and use it in the human-computer system is an important problem in human-computer integration. Taking eye-movement interaction as an example, research shows that users’ eye-movement behavior differs under different cognitive load conditions [1]. In mouse click tasks, the shape, size, distance and other properties of the target affect the final position of the user’s landing point [2]. In speech interaction, natural language is characterized by a large amount of information, fuzziness, context dependence and other uncertainties. Natural human-computer interaction supports the exploration and modeling of fuzziness and uncertainty in the interaction process, so as to identify and predict users’ real intentions and behavior, improve the support that intelligent systems give to people, and improve user performance.

The era of natural human-computer interaction has promoted the prosperity of multi-channel perception and interaction technology. It emphasizes continuous real-time analysis of human motivation, state, intention and behavior and the generation of appropriate inferences, with the goal of natural and intelligent integration and collaboration between human and computer. The brain oversees the reception, processing and command of external information. The activity of neurons and changes in brain networks reflect the most natural cognitive, emotional and behavioral states quickly, faithfully and in real time, which gives the neural channel advantages that other channels cannot match.

1.4 Owning Rich Perceptual Ability

With the development of hardware technology, computers have acquired a variety of sensory abilities similar to the human eye, ear, nose, tongue and body. Through sensors, an intelligent system “looks” at the user’s facial expressions, body movements and surroundings, “listens” to the user’s voice and the environment, and senses the state of the user’s neural networks and brain neurons. Through technologies such as voice recognition and affective computing, it analyzes the user’s intention, cognitive and emotional state and situational information, makes intelligent recommendations and judgments, adaptively adjusts its own state, and gives appropriate feedback. This paper mainly describes the development of human-computer interaction and neuroscience after the human-computer interaction channel is extended to neural signals.

In 2016, Chinese scientists represented by the Institute of Neuroscience, Chinese Academy of Sciences, jointly published a paper titled “China Brain Project: Basic Neuroscience, Brain Diseases, and Brain Inspired Computing”, which introduced to the world China’s research progress in basic neuroscience, brain diseases and brain-inspired computing, and the China Brain Project was launched [3]. Brain-computer interaction and the brain-computer interface (BCI) have become the emerging representatives of natural interaction in human-computer interaction. Brain-computer interaction refers to information exchange between human and computer through a direct pathway established between the brain and external devices. It is the most natural and harmonious interaction mode in the natural user interface (NUI). It aims to let the brain control the computer by studying the neural mechanisms of the brain’s electrical activity, or to realize brain-to-brain connection through the medium of the computer.

2 Human Data and BCI

In a human-computer interaction system, users’ usage behavior and the computer’s performance are influenced by the interplay between the computer and users’ awareness [4]. In other words, the human plays a vital role in the HCI system. However, the complex human system is changing all the time. How to obtain and analyze human data scientifically is an important challenge in human-computer interaction.

2.1 Approach to Obtain Human Data

Generally, we obtain human data from four channels:

Subjective Review.

Subjective review refers to obtaining users’ insights into the system by means of questionnaires and interviews. Subjective data are collected using an outline or scale drawn up in advance. Classical scales in HCI include the NASA Task Load Index (NASA-TLX), the System Usability Scale (SUS), the User Experience Questionnaire (UEQ), the Self-Assessment Manikin (SAM) for rating emotion, and so on.
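As an illustration of how such scale data become a single number, the sketch below scores the SUS questionnaire with its standard published rule: ten items rated from 1 to 5, odd-numbered items worded positively, even-numbered items worded negatively, and the sum rescaled to 0 to 100. The function name `sus_score` is our own and is not taken from the reviewed literature.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for ten item ratings on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten item ratings")
    total = 0
    for item, rating in enumerate(responses, start=1):
        if not 1 <= rating <= 5:
            raise ValueError("each rating must be between 1 and 5")
        # Odd items contribute (rating - 1), even items contribute (5 - rating).
        total += (rating - 1) if item % 2 == 1 else (5 - rating)
    # The 0-40 raw sum is rescaled to 0-100.
    return total * 2.5


neutral_user = sus_score([3] * 10)
```

A respondent who answers every item with the midpoint lands at the middle of the range, which is a quick sanity check on the reverse-scoring of the even items.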

Behavior Performance Measurement.

Behavioral indicators include user performance (completion time, completion rate, number of errors, etc.) and behavioral data such as eye movement, facial expression, voice and body movement. Taking eye movement as an example, eye-movement behavior includes positional and non-positional eye movement. Positional eye movement includes saccades, smooth pursuit, focusing on depth information, rhythmic eye movement, and physiological tremor of the eye influenced by the vestibular system. Non-positional eye movement includes self-regulating movements such as pupil dilation and lens focusing. The measures commonly used in human-computer interaction are three positional ones (fixation, saccade and scan path) together with pupil diameter from non-positional eye movement [5]. Since behavior can be disguised, the above two methods have some problems with authenticity and validity.

Physiological Monitoring.

Physiological parameter monitoring equipment appeared in the 1950s and can be divided into portable, wearable and desktop devices. The monitored parameters include blood pressure, blood oxygen saturation, electrocardiogram (ECG), heart rate, electrodermal activity, electromyography (EMG), body temperature, respiration and other physiological data.

Neural Signal Detection.

Neural data refers to signals of brain activity collected using techniques such as EEG or fMRI. The cerebral cortex is composed of sensory, motor and association areas. It realizes the perception of events and coordinated action by integrating neuronal assemblies across multiple functional cortical areas. Its activity is highly correlated with inner cognitive processes and human behavior, including sensation, perception, thinking, consciousness and emotion. Detection methods based on neural signals are hard to disguise, authentic, valid and real-time, which makes them an ideal way to study what people think and do in human-computer systems and gives them high research value. In recent years, EEG has gradually begun to cross over and integrate with the field of human-computer interaction, focusing on the evaluation of human state in human-computer systems and on neural feedback from computer performance to the human. Brain-computer interfaces that control the computer through brainwaves have even emerged, widening the interaction channels of traditional human-computer interaction. To some extent, this has promoted the development of human-computer interaction from the perspective of cognitive neuroscience.

Compared with the traditional subjective review method, the physiological index assessment method (heart rate, ECG, EMG, blood pressure, etc.) and the behavioral index assessment method (task completion time, completion rate, number of errors, number of help requests, fixation, saccade, pupil diameter, etc.), the neural detection method interprets the user’s EEG data during interaction with the computer through time-domain, frequency-domain or time-frequency analysis, in terms of cognition, emotion and so on. Setting aside individual differences and the complexity of signal analysis, the cognitive neural assessment method outperforms the other assessment measures because of its authenticity and real-time performance.

Many scholars have devoted themselves to exploring the brain’s cognitive neural mechanisms during human-computer interaction, with the aim of providing a cognitive neuroscience basis for the brain-computer interface and the next generation of artificial intelligence. The theories, research ideas and methods of cognitive neuroscience can help human-computer interaction establish a more accurate and more powerful model of cognitive processing, so as to evaluate the interaction process, predict user interaction behavior, and finally design a suitable human-computer interaction system [6].

Among brain signal acquisition technologies such as fMRI, fNIRS and EEG, EEG is widely used because of its low cost, portability, non-invasiveness and high temporal resolution. The electroencephalogram (EEG) is the overall reflection, on the surface of the cerebral cortex or the scalp, of the electrophysiological activity of brain nerve cells. Sophisticated instruments record the potential difference between scalp electrodes and reference electrodes as a voltage, which is amplified to yield the EEG waveform over time. EEG is regarded as the first technology to realize brain-computer interaction, and it is the main technology discussed in this paper.

According to the integration degree of Cognitive Neuroscience and Human-Computer Interaction, the research on brain-computer systems can be divided into two directions: Passive brain-computer interface and Initiative brain-computer interface.

2.2 Classification of BCI

Passive Brain-Computer Interface.

This kind of brain-computer interface mainly supports the identification and judgment of user state and the improvement of computer design. It requires constant, dynamic and continuous assessment of the user, without the participation of the user’s conscious intent. (1) From the computer’s perspective: evaluate existing systems or prototypes using neural data as evidence, so as to improve computer design, propose iterative design solutions and implement adaptive design. (2) From the user’s perspective: assess the user’s cognitive state when interacting with the human-computer system, such as workload, emotional state and experience level, and explore the user’s neural model related to the human-computer system.

Initiative Brain-Computer Interface.

The initiative brain-computer interface provides direct interaction between brain signals and the computer. It is used when the user intends to operate the computer. The integration of the human neural model and computer design emphasizes one-way input or two-way interaction and cooperation between brain and computer, as well as unilateral control and brain-to-brain interconnection across multiple computers.

3 Three Progressive Levels of Cognitive Neuroscience Used in HCI

Through secondary data research and desktop analysis, and according to the degree of integration of human and computer in the HCI system, the application of cognitive neuroscience to human-computer interaction can be divided into three progressive levels, shown in Fig. 2.

3.1 Explore Neural Mechanisms Related to HCI

In addition to measuring people’s cognitive and emotional performance when they use human-computer systems, in order to assess the rationality of the system design, cognitive neuroscience can also be used for “brain reading”, i.e. analyzing EEG data to determine the user’s current mode. At present, the recognition of human patterns in human-computer interaction based on cognitive neuroscience mainly covers cognitive patterns, interactive intention and affective states. Exploring neural mechanisms and constructing cognitive neural models are as important to brain-computer interaction as the user’s mental model is to design modeling in the design discipline.

User Intent Inference Before Interaction Occurs.

One direction of exploration for cognitive neuroscience in human-computer interaction contexts is to distinguish the differences in brain electrical activity under different intentions, such as click versus no-click intention, aimless browsing versus targeted search intention, decision intention, motor imagery intention and so on. Research results have been applied to selection-intention design in BCI systems, browsing-pattern monitoring in AI systems, intelligent recommendation systems and adaptive computing.

Human-computer interaction intention refers to users’ goals and expectations when operating a computer system. Inferring users’ uncertain behavioral and perceptual information efficiently matters for interaction efficiency, because otherwise an intelligent system consumes a great deal of resources and time in human-computer information exchange. Intent modeling and recognition are used to build human-computer interaction (HCI) and human-robot interaction (HRI) paradigms in cognitive psychology. In psychology, human intention is divided into explicit intention and implicit intention. Explicit intention is expressed through facial expressions, voice and gestures, whereas implicit intention is vague and difficult to interpret. Neural electrical activity can reflect the user’s intention state very well: the cerebral cortex is the command center of the central nervous system, responsible for relaying information and issuing orders, so in time sequence neural activity should precede the other modalities. Therefore, a dynamic intention prediction model can be built from EEG data, the user’s real intention can be inferred from the time series of neural activity, and the behavior of the intelligent system can be adjusted adaptively, which offers certain advantages in time and efficiency.

At present, intention inference has a wide range of potential applications, including human-service robot interaction, traffic monitoring, assisted driving, military and flight monitoring, games and entertainment, assistive systems for the elderly and disabled, virtual reality and other fields. For example, by observing users’ information browsing behavior and brain electrical activity when they use e-commerce websites, we can judge whether users are searching for a target or browsing without a goal. In an intelligent driving system, the EEG characteristics of users during soft braking and emergency braking are obtained to judge the user’s braking intention. In an emergency, the intelligent car can brake first to help the user avoid danger [7]. EEG-based intent reasoning in human-computer interaction can be considered from three aspects: visual search intent, decision intent, and behavioral intent.

Visual Search Intent Reasoning.

Visual search intention refers to the user’s intention to search for information when browsing a website. Most scholars classify it into aimless exploration intention, targeted search intention and transactional intention. With aimless exploration intention, the user is not clear about the target, the search method is uncertain, and the target domain is unknown. The user scans the whole scene to obtain a general picture, and the process is open and dynamic and lacks obvious boundaries. With targeted search intention, users search for specific targets out of interest and motivation. Transactional intent arises when the user needs to accomplish something, such as modifying a remark name. The EEG signals corresponding to different visual search intentions also differ. Scholars have proposed that inferring visual search intention from the perspective of functional connectivity can achieve higher accuracy. For example, the difference in phase locking value (PLV) between browsing and searching intentions, and the characteristics of the intention transition period, can be used to infer the user’s intention when browsing an interface for specific targets [8]. That work computed the PLV of the EEG data, extracted significant electrode pairs as features, and classified browsing intention using SVM, GMM and Bayes classifiers.
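The phase locking value referred to here can be sketched as follows. The sketch assumes that the instantaneous phases of two band-pass filtered channels have already been extracted (for example with a Hilbert transform), and `phase_locking_value` is a hypothetical helper name. The preprocessing and electrode-pair selection of the cited study are not reproduced.

```python
import cmath
import math


def phase_locking_value(phases_a, phases_b):
    """PLV between two channels given instantaneous phases in radians.

    PLV = | mean over samples of exp(i * (phase_a - phase_b)) |,
    from 0 (no consistent phase relation) to 1 (perfect locking).
    """
    if len(phases_a) != len(phases_b) or not phases_a:
        raise ValueError("phase series must be non-empty and equally long")
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b))
    return abs(total) / len(phases_a)


# Toy data (not real EEG): channel B lags channel A by a constant 0.5 rad.
phases_a = [0.1 * t for t in range(200)]
locked_b = [p - 0.5 for p in phases_a]
# Phase differences cycling through 0, pi/2, pi, 3*pi/2 cancel each other out.
unlocked_b = [p - (t % 4) * math.pi / 2 for t, p in enumerate(phases_a)]

plv_locked = phase_locking_value(phases_a, locked_b)
plv_unlocked = phase_locking_value(phases_a, unlocked_b)
```

In a pipeline of the kind described, one PLV per electrode pair would form the feature vector handed to the classifier.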

Decision Intention Reasoning.

Decision intention includes user preference prediction (like/dislike), next-click prediction (click/no click; combined with eye-movement signals, the click location can also be predicted) and next-action prediction (action decision or action direction), etc.

In terms of preference decision-making, when users observe targets at different preference levels, the EEG shows significant differences. Rami N. Khushaba’s team, for example, used EEG and eye-tracking (ET) data to quantify the importance of different shapes, colors and materials, in order to interpret consumer behavior from a neuroscience perspective. They found clear and significant changes in brain waves in the frontal, temporal and occipital lobes when consumers made preference decisions. Using the mutual information (MI) method, they found that the theta band (4–7 Hz) in the frontal, parietal and occipital lobes was most strongly correlated with preference decisions. Alpha (8–12 Hz) in the frontal and parietal lobes and beta (13–30 Hz) in the occipital and temporal lobes were also associated with preference decisions. Based on this neuroscientific evidence, their paper classified consumers as color-dominated or pattern-dominated, compared the differences in mutual information and brain activity between different channel pairs, and built a decision model to infer user preference [9]. On this basis, users’ preferences for target objects can be judged by analyzing EEG data, so as to assist decision-making and reasoning.
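To make the mutual information measure concrete, the following minimal sketch estimates MI between a discretized EEG feature and the choice made on each trial. It uses a simple plug-in (counting) estimator after a median split. The estimator actually used in [9] is not described here, so the discretization, the function names and the toy numbers are all assumptions.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of MI (in bits) between two discrete label sequences."""
    n = len(xs)
    count_x = Counter(xs)
    count_y = Counter(ys)
    count_xy = Counter(zip(xs, ys))
    mi = 0.0
    for (x, y), c in count_xy.items():
        p_joint = c / n
        # p(x, y) / (p(x) * p(y)) written in terms of raw counts
        mi += p_joint * math.log2(c * n / (count_x[x] * count_y[y]))
    return mi


def median_split(values):
    """Label each value 'high' or 'low' relative to the sample median."""
    ordered = sorted(values)
    mid = len(ordered) // 2
    median = ordered[mid] if len(ordered) % 2 else (ordered[mid - 1] + ordered[mid]) / 2
    return ["high" if v > median else "low" for v in values]


# Hypothetical per-trial frontal theta power and the preference expressed.
theta_power = [5.1, 4.8, 1.2, 0.9, 5.5, 1.1, 4.9, 1.0]
choice = ["like", "like", "dislike", "dislike", "like", "dislike", "like", "dislike"]
mi_bits = mutual_information(median_split(theta_power), choice)
```

With these toy values the feature separates the two choices perfectly, so the estimate reaches its maximum of one bit for a binary label. Real EEG features would give much smaller values, and the plug-in estimator is biased upward for small samples.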

In terms of click decision reasoning, studies have shown that when users browse websites, the EEG accompanying click behavior differs significantly from the EEG without click behavior. Temporal statistical features and Hjorth features of the EEG, together with pupil diameter from eye movement, can classify users’ click intention well [10]. Combined with the fixation position from eye movements, the user’s click intention and click target can be inferred before the click occurs. Park et al. fused EEG and eye-movement signals to identify implicit interactive intention during visual search, and found that the recognition accuracy of the fused signals was about 5% higher than that of a single physiological signal, which confirmed that fusing EEG data gives better intent reasoning accuracy. This provides theoretical support for brain-computer interface technology [11].
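The Hjorth features have simple time-domain definitions, sketched below for a single channel: activity is the signal variance, mobility is the square root of the ratio between the variance of the first difference and the variance of the signal, and complexity is the mobility of the first difference divided by the mobility of the signal. This is a generic sketch of the standard definitions, not the feature extraction code of [10], and the test signal is synthetic.

```python
import math


def _variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def _diff(values):
    """First difference, a discrete stand-in for the time derivative."""
    return [b - a for a, b in zip(values, values[1:])]


def hjorth_parameters(signal):
    """Return (activity, mobility, complexity) of one EEG channel."""
    d1 = _diff(signal)
    d2 = _diff(d1)
    activity = _variance(signal)
    mobility = math.sqrt(_variance(d1) / activity)
    complexity = math.sqrt(_variance(d2) / _variance(d1)) / mobility
    return activity, mobility, complexity


# Synthetic check: a pure sine wave has complexity close to 1.
sine = [math.sin(2 * math.pi * 10 * t / 256) for t in range(256)]
activity, mobility, complexity = hjorth_parameters(sine)
```

Because complexity measures how far a waveform departs from a pure sine, noisier or more irregular segments give values above 1.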

Behavioral decision prediction is mainly based on the classical motor imagery paradigm. When users imagine moving different limbs or the tongue, the EEG signals generated differ significantly, and the intended direction of the user’s next movement can then be inferred. The classical motor imagery paradigm induces EEG corresponding to a behavior, and neural modeling is used to find the mapping to the corresponding behavioral intention. It has been widely and successfully used in complex systems and to help people with disabilities interact naturally, for example in brain-controlled drones, brain-controlled aircraft that turn left and right and shoot, and brain-controlled wheelchairs that move or turn. Gino Slanzi et al. explored EEG differences in click intention in an experiment with different information-seeking tasks on five websites. The EEG features were the mean, standard deviation, maximum and minimum, Hjorth features (energy, mobility and complexity), and nonlinear dynamic features such as approximate entropy, Petrosian fractal dimension, Higuchi fractal dimension and Hurst exponent. These were combined with eye-movement features, namely the maximum, minimum, mean and range of pupil diameter. Using the Random Lasso algorithm for feature selection, a linear classifier reached 71% classification accuracy [12].
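Of the nonlinear features listed, the Petrosian fractal dimension is the simplest to compute. The sketch below follows a commonly used formulation based on the number of sign changes in the first difference of the signal. Whether [12] used exactly this variant is an assumption, and `petrosian_fd` is a hypothetical name.

```python
import math


def petrosian_fd(signal):
    """Petrosian fractal dimension of a 1-D sequence.

    PFD = log10(n) / (log10(n) + log10(n / (n + 0.4 * n_delta))),
    where n is the number of samples and n_delta counts sign changes
    in the first difference of the signal.
    """
    n = len(signal)
    diff = [b - a for a, b in zip(signal, signal[1:])]
    n_delta = sum(1 for a, b in zip(diff, diff[1:]) if a * b < 0)
    return math.log10(n) / (math.log10(n) + math.log10(n / (n + 0.4 * n_delta)))


smooth = petrosian_fd(list(range(10)))            # monotonic ramp, no sign changes
jagged = petrosian_fd([0, 1, 0, 1, 0, 1, 0, 1])   # direction flips at every sample
```

A monotonic segment gives exactly 1, and the value grows as the waveform changes direction more often, which is why the feature is used as a cheap irregularity measure.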

Cognitive Pattern Classification.

Researchers design tasks with different cognitive patterns in advance, collect subjects’ EEG under the different tasks, select appropriate features after data processing, and classify the task patterns with machine learning or deep learning algorithms, so as to recognize the patterns of different cognitive tasks.

The most classic task types come from the experiments of Keirn et al. [6]: a resting state, a complex task, a geometric rotation task, a letter composition task and a visual counting task. The general classification accuracy can reach 75–90%. Different scholars have carried out studies on the five-task EEG dataset constructed by Keirn et al. Although Palaniappan achieved a classification accuracy of 97.5% on the best-discriminated two-class task, the model’s generalization ability was insufficient [13]. Anderson et al. also obtained classification accuracy of up to 70% using a neural network and a time-averaging method [14]. Johnny Chung Lee used Keirn’s experimental paradigm, the P3 and P4 electrode channels, and Weka’s CfsSubsetEval feature selection operator to screen out 23 features for the three-class task and 16 features for the classification task, and found that mean spectral power, alpha power and beta-low power played a crucial role in cognitive task classification [15].

Recognition of User Physiological State During and After Interaction.

Affective Pattern Recognition.

Affective pattern recognition is a part of affective computing. Certain areas and neural circuits in the brain are responsible for processing emotional information. Studies have shown that emotion recognition based on EEG signals is more accurate than verbal evaluation, because brain signals do not “camouflage”. It is therefore of great significance for affective computing and artificial intelligence to study the neural mechanisms associated with emotional states. Frontal midline theta (FM-theta) waves in the midfrontal cortex and frontal alpha asymmetry (FAA) are associated with emotional expression in the brain. FM-theta is associated not only with attention but also with pleasurable emotional experiences. The hemispheric valence hypothesis proposes that a decrease in alpha in the left frontal lobe represents positive emotions, while a decrease in alpha in the right frontal lobe indicates negative emotions. The frontal alpha asymmetry index is therefore often used to recognize affective valence. By processing and computing emotion-related EEG features and mapping the result into an emotion space, emotions can be recognized and classified.
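The asymmetry index can be illustrated with a common convention, FAA = ln(right frontal alpha power) − ln(left frontal alpha power), often computed at a homologous electrode pair such as F4 and F3. Under this convention a relative alpha drop on the left gives a positive index. The electrode choice, the zero threshold and the function names below are assumptions for illustration, not a method taken from a specific cited study.

```python
import math


def frontal_alpha_asymmetry(alpha_left, alpha_right):
    """FAA = ln(right alpha power) - ln(left alpha power)."""
    if alpha_left <= 0 or alpha_right <= 0:
        raise ValueError("alpha power must be positive")
    return math.log(alpha_right) - math.log(alpha_left)


def valence_from_faa(faa, margin=0.0):
    """Map FAA to a coarse valence label; `margin` is a hypothetical dead zone."""
    if faa > margin:
        return "positive"
    if faa < -margin:
        return "negative"
    return "neutral"


# Hypothetical alpha band powers: lower on the left than on the right.
faa = frontal_alpha_asymmetry(alpha_left=2.0, alpha_right=4.0)
label = valence_from_faa(faa)
```

In practice the index is usually averaged over many epochs and compared against a per-user baseline rather than against a fixed threshold.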

Koelstra et al. used the DEAP database to classify emotions along the two dimensions of valence and arousal (VA), and reported that arousal was negatively correlated with theta, alpha and gamma, while valence was correlated with all EEG bands. Zheng et al. used machine learning methods to study stable EEG patterns over time, and reported that temporal-lobe beta and gamma were more active in positive emotion, alpha was more active in the inferior occipital region in neutral emotion, and beta in the inferior occipital region and gamma in the prefrontal lobe were more active in negative emotion [16]. M. Stikic et al. extracted the power spectral density (PSD) of 10 standard EEG bandwidths at 20 electrodes and in 10 regions, a total of 300 features, and used Coiflet-based wavelet analysis to extract features for 6 bands at 20 electrodes, a further 120. An F test finally selected 48 features. Most of the features that predicted emotion well came from the frontal and temporal lobes, and the most predictive frequency band was gamma, followed by theta and beta [17].

Cognitive State Calculation.

In the process of human-computer interaction, as human fatigue builds up, a series of physiological reactions appear, such as a raised sensory threshold, reduced movement speed and accuracy, inattention, impaired memory and disordered thinking. These lead to reduced working capacity and more mistakes, and in turn to human-caused accidents. EEG can be used to help monitor the physiological state of the user in a human-computer system. Taking the human-autonomous vehicle system as an example, fatigue detection can help the intelligent vehicle judge the state of the human in real time and take over operation of the vehicle when necessary, so as to ensure the user’s safety and achieve intelligent collaboration between human and computer. For example, some scholars compared the EEG of people in a normal state and in a state of physiological fatigue (no sleep for 40 h), found significant differences in brain networks, proposed an EEG-based fatigue detection method and applied it to detecting physiological fatigue in aircraft pilots. In addition, a number of studies have confirmed the role of EEG in early diagnosis of and intervention in Alzheimer’s disease, depression and personality disorders.

The support that EEG technology gives to the human-computer system during interaction is mainly reflected in real-time monitoring and evaluation of the user’s cognitive load. By observing the EEG data related to the cognitive load generated as the user interacts with the system, the usability of the system is evaluated, design errors are uncovered, and the design of the system is ultimately optimized. Cognitive load includes intrinsic cognitive load, extraneous cognitive load and germane load. Intrinsic load refers to the demand that the inherent complexity of information places on working memory, and is an indicator of how difficult the human-computer system is to use. Extraneous load arises from the form in which information is presented, that is, from the presentation mode of the human-computer interface. Germane load arises from schema construction and automation as users use the system. High cognitive load indicates problems in the use of the system and causes psychological stress for users. Cognitive load is expressed as increases in specific spectral energy in different brain regions. Results show that when cognitive load increases, delta wave activity increases. Theta and alpha waves are related to the cognitive processing of new information, and a higher working memory load leads to enhanced theta and beta [18]. Prefrontal gamma waves are correlated with task difficulty: the more difficult the interactive task, the more active the prefrontal gamma waves [19].
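The spectral energy per band that such load indicators rely on can be estimated from a short EEG segment, as in the minimal sketch below, which uses a plain discrete Fourier transform periodogram. The theta, alpha and beta limits follow the ranges quoted earlier in this paper (4–7, 8–12 and 13–30 Hz). The delta and gamma limits, the function name `band_powers` and the synthetic signal are assumptions, and a real pipeline would normally add filtering, windowing and artifact rejection.

```python
import cmath
import math

# Half-open band limits [low, high) in Hz.
# Delta and gamma limits are assumed conventional values.
BANDS = {
    "delta": (1, 4),
    "theta": (4, 8),
    "alpha": (8, 13),
    "beta": (13, 31),
    "gamma": (31, 45),
}


def band_powers(signal, fs):
    """Mean periodogram power per band for one channel sampled at fs Hz."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]
    collected = {name: [] for name in BANDS}
    for k in range(1, n // 2):
        freq = k * fs / n
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        power = abs(coeff) ** 2 / n
        for name, (low, high) in BANDS.items():
            if low <= freq < high:
                collected[name].append(power)
    return {name: (sum(p) / len(p) if p else 0.0) for name, p in collected.items()}


# Synthetic one-second segment: strong 6 Hz (theta) plus weak 20 Hz (beta).
fs = 128
segment = [math.sin(2 * math.pi * 6 * t / fs) + 0.2 * math.sin(2 * math.pi * 20 * t / fs)
           for t in range(fs)]
powers = band_powers(segment, fs)
dominant = max(powers, key=powers.get)
```

Tracking how these band powers change between an easy and a difficult version of the same task is one simple way to look for the load-related increases described above.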

3.2 Improve Computer Performance Based on Neural Assessment

Among the systematic design methods of Human-Computer Interaction, besides the goal-oriented method and user-centered design method, the design method based on assessment is also widely used because of its efficiency, accuracy and low cost.

Designers usually carry out subjective, physiological or neural assessment of a design prototype or an earlier version, identify where the current design fails to match human behavior and needs, and then iterate on the original design. The general process is shown in Fig. 3. Evaluation thus serves two purposes: it verifies the rationality of a design, and it drives the iterative improvement of that design [18].

During human-computer interaction, aspects of an intelligent system’s performance, such as usability, visual aesthetics and user experience, can be evaluated from brain neural signals.

Real-Time Evaluation.

In the time dimension, EEG-based assessment can be divided into post-stimulus assessment and real-time assessment. Post-stimulus assessment analyzes the differences in EEG between the stimulus state and the resting state after a stimulus related to human-computer interaction has been presented, and then draws conclusions about the difficulty, aesthetic appeal, experience and other aspects of the stimulus. Real-time evaluation relies on the high temporal resolution of EEG: by detecting EEG changes during human-computer interaction, the subject’s neural response to the material can be obtained as it happens. Take the evaluation of human-computer interface design as an example. Icons with different combinations of color, shape, text and graphics were presented to subjects, and EEG signals were used to analyze which design performed better. Similarly, EEG recorded while subjects viewed smartphones of different appearance was used to assess which design was more acceptable to users [20].

Dynamic and Continuous Evaluation.

In terms of the type of result, evaluation based on neural data can yield not only discrete judgments about the stimulus materials, such as difficult versus easy or beautiful versus ugly, but also a continuous time series of neural signals when the stimulus materials themselves change dynamically over time. Combined with Bayesian and hidden Markov algorithms, such time series can also be used for prediction, which is the basis of the intent reasoning mentioned above.
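To make the idea of Bayesian and hidden Markov prediction over a neural time series concrete, here is a minimal sketch of the standard HMM forward (filtering) recursion in plain Python. The two hidden states, the discretized observation symbols and every probability below are invented for illustration and do not come from any cited study.

```python
def forward_filter(observations, states, start_p, trans_p, emit_p):
    """Return P(state_t | obs_1..t) per step and the one-step-ahead prediction."""
    beliefs = []
    prior = dict(start_p)
    for obs in observations:
        # Bayes update: weight the prior by the likelihood of the observation.
        unnorm = {s: prior[s] * emit_p[s][obs] for s in states}
        total = sum(unnorm.values())
        posterior = {s: unnorm[s] / total for s in states}
        beliefs.append(posterior)
        # Predict step: push the posterior through the transition model.
        prior = {
            s2: sum(posterior[s1] * trans_p[s1][s2] for s1 in states)
            for s2 in states
        }
    return beliefs, prior


# Hypothetical model: hidden load state, observed theta level (discretized).
states = ("low_load", "high_load")
start_p = {"low_load": 0.5, "high_load": 0.5}
trans_p = {
    "low_load": {"low_load": 0.9, "high_load": 0.1},
    "high_load": {"low_load": 0.2, "high_load": 0.8},
}
emit_p = {
    "low_load": {"weak": 0.8, "strong": 0.2},
    "high_load": {"weak": 0.3, "strong": 0.7},
}

beliefs, next_step = forward_filter(
    ["weak", "strong", "strong"], states, start_p, trans_p, emit_p
)
```

After two consecutive "strong" observations the filtered belief favors `high_load`, and `next_step` carries that belief one step forward, which is the kind of short-horizon prediction that intent reasoning depends on.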

3.3 Direct Interaction Between Brain Signal and Computer

A brain-computer interface (BCI), also known as a “brain-machine interface”, is a direct one-way or two-way communication link between the human brain and external devices. In a one-way BCI, the computer either receives commands from the brain or sends signals to the brain, but cannot send and receive signals at the same time. A bidirectional BCI allows information to be exchanged in both directions between the brain and external devices to achieve human-computer cooperation.

In a unidirectional brain-computer interface, the EEG serves as a recording device for neural activity and is connected to external devices, which are controlled directly by the recorded signals. The five sensory channels of eye, ear, nose, tongue and body each perform their own function, and the absence of any one of them makes basic daily life inconvenient. In a sense, a brain-computer interface can compensate for a missing channel. For example, research on motor imagery in cognitive neuroscience has identified the brain regions, frequency bands and components related to movement, which provides the basis for controlling movement through imagination. An intelligent medical wheelchair controlled by brainwaves can implement forward, stop and steering functions by detecting the energy of the α, β, θ and δ waves in real time. BCI also gives paralyzed people mind-controlled machinery, for example brainwave-controlled robotic arms that help patients with physical disabilities complete specific tasks, as shown in Fig. 4.
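The text does not say how band energies are mapped to wheelchair commands. As one possible illustration only, the sketch below uses a nearest-centroid classifier, a deliberately simple technique substituted here for whatever decoder the actual system uses. The feature order (relative α, β, θ, δ energy), the command labels and the calibration values are all hypothetical.

```python
import math


def centroid(vectors):
    """Element-wise mean of equally sized feature vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def train(calibration):
    """Map each command label to the centroid of its calibration vectors."""
    return {label: centroid(vectors) for label, vectors in calibration.items()}


def decode(model, features):
    """Return the command whose centroid is nearest in Euclidean distance."""
    return min(model, key=lambda label: math.dist(model[label], features))


# Hypothetical calibration data: [alpha, beta, theta, delta] relative energy.
calibration = {
    "forward": [[0.20, 0.50, 0.20, 0.10], [0.25, 0.45, 0.20, 0.10]],
    "stop": [[0.50, 0.20, 0.20, 0.10], [0.55, 0.15, 0.20, 0.10]],
    "steer": [[0.20, 0.20, 0.50, 0.10], [0.20, 0.25, 0.45, 0.10]],
}

model = train(calibration)
command = decode(model, [0.22, 0.48, 0.20, 0.10])
```

Calibrating the centroids per user reflects the individual differences in brain signals discussed later in the paper; a deployed system would also need artifact rejection and a safe default command when no class is a good match.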

4 Directions of HCI Research Based on Cognitive Neuroscience

Current research in cognitive neuroscience provides a large body of cognitive neural mechanisms for researchers designing brain-computer interfaces (BCI) and the next generation of Artificial Intelligence (AI). The efficiency of BCI recognition and control can be greatly improved by selecting brain regions, channels and EEG features that suit the type of task to be completed. In terms of AI, studies of basic cognitive functions such as attention and cognitive load, and of the neural mechanisms behind advanced cognitive functions such as emotion recognition and empathy, allow AI systems to become more human-centered in self-adaptation, personalization and human-computer collaboration.

4.1 Broaden Existing Interactive Channels

Traditional human-computer interaction relies on the human visual, auditory, voice and tactile channels. These four channels work together to complete basic human-computer interaction and deliver a realistic experience, as in the interaction between a person and a touch-screen mobile phone. With the advent of the era of natural interaction, AR, VR and other intelligent devices have entered people’s lives. Eye movement interaction, somatosensory interaction and voice interaction have gradually become mainstream interaction modes. As a natural interaction mode based on neuroscience, the brain-computer interface (BCI) has attracted extensive attention. A brain-computer interface creates a direct connection between the brain and external devices and gives the human-computer interaction system a new channel for two-way information exchange.

The use of brain-computer interfaces is generally considered in three situations. First, for patients who have lost basic interaction channels, such as patients with total paralysis, BCI provides a way to control computers, products and assistive robots with neural signals and helps them regain the basic skills they need for daily life. With BCI technology, products such as brain-controlled wheelchairs become an extension of the human body. Second, the user may be working in a complex context, for example operating a complex information system in which a large amount of information must be entered, the operations are complicated and every operation is critical. Even when all of the user’s sensory channels are occupied, the interactive task remains difficult to complete. In this case, BCI operation is a good choice. For example, studies have enabled pilots to control military aircraft with brain signals, steering the aircraft left and right and firing artillery shells, with high recognition accuracy [21]. Third, some human-computer interaction tasks require a quick human response. In the military, for example, the recognition and judgment of real and virtual scenes demand quick action. Here the user’s intention is inferred automatically from the brainwaves, so that the computer can respond quickly and complete the task.

4.2 Improve the Reliability of Human-Computer Cooperation

Interaction based on cognitive neuroscience provides a fast and authentic picture of the user’s state and helps the computer judge the situation of the human-computer system. Through adaptive design and augmented-cognition design, the system can exercise automatic control and decide, on the basis of cognitive neuroscience evidence, under what circumstances to offer the user help. When people deal with tasks of different cognitive difficulty, they consume different cognitive resources and carry different cognitive loads. For example, from the perspective of neuroscience, the ratio of beta to alpha band power reflects cognitive load to some extent. The changes and differences in brain electrical activity under different task difficulties can serve as the basis for human-computer cooperation, for instance in determining the division of labor between human and computer during interaction, that is, in which scenes the computer should intervene and in which scenes the human should decide. Recognizing, sensing and monitoring the EEG state in this way improves the reliability, safety and efficiency of the human-computer system.
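A minimal sketch of how a beta-to-alpha ratio could drive the division of labor is given below. The thresholds, the mode names and the use of hysteresis are assumptions chosen for illustration; the source does not specify a decision rule, and real thresholds would have to be calibrated per user and per task.

```python
def load_index(beta_power, alpha_power):
    """Beta-to-alpha power ratio, used here as a rough cognitive-load proxy."""
    if alpha_power <= 0:
        raise ValueError("alpha_power must be positive")
    return beta_power / alpha_power


def allocate(index, current, low=0.8, high=1.5):
    """Decide who leads the task, with hysteresis to avoid rapid switching."""
    if index >= high:
        return "computer_assist"
    if index <= low:
        return "human"
    return current  # inside the dead band: keep the previous allocation


# Hypothetical (beta, alpha) band powers from successive analysis windows.
windows = [(3.5, 5.0), (6.0, 5.0), (9.0, 5.0), (6.0, 5.0), (3.0, 5.0)]

mode = "human"
history = []
for beta, alpha in windows:
    mode = allocate(load_index(beta, alpha), mode)
    history.append(mode)
```

The dead band between `low` and `high` keeps the system from handing control back and forth when the ratio hovers near a single threshold, which matters for the reliability goal of this subsection.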

For example, intelligent driving systems that incorporate intelligent computing use EEG to monitor drowsiness and ensure safe driving. By monitoring the cognitive attraction, cognitive load and emotion reflected in learners’ brainwave characteristics, an online learning system can adjust the learning plan and pace for each individual. Intelligent recommendation systems achieve accurate recommendations from the points of interest revealed by brainwaves. Persuasion robots use affective computing to obtain people’s cognitive models and emotions, help people resolve negative emotions in time, and effectively persuade users to adopt a healthy lifestyle with real-time strategies. Alicia Heraz et al. [22] used the normalized peak characteristics of four EEG frequency bands to classify 8 emotions, including anger, boredom, confusion, contempt, curiosity, delay, surprise and frustration, using WEKA, and designed an intelligent system for emotion detection and intervention. The system consists of four modules: an EEG signal acquisition module, a signal processing module, an emotional state induction module and an emotion classification module. Such a system is expected to benefit learners who have disabilities, are silent or have no interest in learning.

4.3 Optimize the Experience of Human-Computer Interaction

The brain-computer interface, as a new mode of human-computer interaction, brings people new feelings and experiences. User experience is the actions, sensations, considerations, feelings and sense-making of a person when interacting with a technical device or service [23]. It is composed of two main dimensions: hedonic and pragmatic. Brain-computer interfaces bring natural and novel experiences at both the functional and the social-psychological level.

This is especially true in entertainment, where the novel interaction offered by BCI enhances the experience of players. For example, the user’s state of attention can be used to control the flight of a drone: as long as the user stays attentive, the drone continues to fly. Laurent Bonnet et al. designed a two-player soccer game based on motor imagery. Players push the ball toward the left (or right) goal by imagining movement of the left (or right) hand. The game offers both a competitive mode and a cooperative mode, which extends interaction design to multiple brains [24]. Brain-computer devices such as the NextMind, which has 8 electrodes and weighs 60 g, read and analyze brainwaves and convert signals from the cerebral cortex into computer-readable digital commands. These commands can control a TV, enter passwords, switch the color of lights according to the color block the user attends to, and play duck games, VR games and so on. Open-source platforms make it easier for researchers to enter the field, and in the near future brain-computer games are likely to become another emerging NUI game field after AR/VR.

5 Conclusion

Interdisciplinary integration is the general trend in today’s world. Thanks to the authenticity and timeliness of brain signals and the maturation of techniques for acquiring and analyzing them, cognitive neuroscience has been applied fairly widely in human-computer interaction through the efforts of scholars from many fields over more than 30 years.

At the same time, having passed through the CLI, GUI, MMUI, PUI and NUI stages, human-computer interaction has gradually entered a phase of multi-perception, rich modes and real-time, continuous, natural two-way information exchange, and there is a more urgent need for natural and authentic data beyond users’ stated views and physiological data. This article takes EEG, a non-invasive, low-cost method of collecting cerebral cortex signals with high temporal resolution, as an example. The crossover and fusion of EEG and human-computer interaction not only brings the user’s physiological, cognitive, emotional and intentional data to the intelligent human-computer system from the perspective of neuroscience, but also provides a neural basis for adaptive adjustment of the operating state and for user-centered iterative improvement of intelligent systems. Moreover, the deep fusion of brain and computer has given rise to BCI technology. This seamless, direct connection between the human brain and the intelligent system widens the human-computer interaction channel. Especially in complex human-work interaction contexts, the additional channel provided by neural signals can relieve the occupation of the user’s other interaction channels and provide an extra information output channel. The high temporal resolution and authenticity of BCI signals help, to some extent, to ensure that work is completed smoothly and on time. In addition, cognitive neuroscience is of great significance for the evaluation and design of adaptive work systems: it evaluates the work environment in real time, continuously and dynamically, from both the human and the computer side, and provides neuroscientific evidence for the design of adaptive human-work systems.

Today, the design of HCI systems based on cognitive neuroscience is one of the most popular directions in the field of NUI. Studies of the neural mechanisms behind cognitive functions such as attention, memory, emotion and decision making are now comparatively mature, and neural mechanism models and neural assessment methods are widely used in the design of human-computer collaboration systems. In particular, non-invasive brain-computer interfaces have begun to be put into practical application, where they expand human-computer interaction channels, improve system reliability and improve the interaction experience.

However, because brain signals differ between individuals, moving existing cognitive neural mechanisms from individual-dependent computation to generalized models remains both a focus and a difficulty of research. In addition, a more detailed understanding of how neural circuits work during human-computer interaction, better feature extraction, algorithm optimization and the mining of more application scenes are all challenges and opportunities for human-computer interaction based on cognitive neuroscience in the coming era of NUI, BCI and AI. In general, the basic research and application of cognitive neuroscience in human-computer interaction are still in their infancy, and there is a long way to go before the two fields are truly merged.

References

1. Kosch, T., Hassib, M., Woźniak, P.W., et al.: Your eyes tell: leveraging smooth pursuit for assessing cognitive workload. In: Conference on Human Factors in Computing Systems – Proceedings (2018)

2. Ahn, M., Lee, M., Choi, J., Jun, S.C.: A review of brain-computer interface games and an opinion survey from researchers, developers and users. Sensors (Switzerland) 14 (2014). https://doi.org/10.3390/s140814601

3. Mu-ming, P., Du, J.L., Ip, N.Y., et al.: China brain project: basic neuroscience, brain diseases, and brain-inspired computing. Neuron 92, 591–596 (2016)

4. Qin, X., Tan, C.-W., Clemmensen, T.: Unraveling the influence of the interplay between mobile phones’ and users’ awareness on the user experience (UX) of using mobile phones. In: Barricelli, B.R., et al. (eds.) HWID 2018. IAICT, vol. 544, pp. 69–84. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-05297-3_5

5. Cheng, S., Hu, Y., Fan, J., Wei, Q.: Reading comprehension based on visualization of eye tracking and EEG data. Sci. China Inf. Sci. 63(11), 1–3 (2020). https://doi.org/10.1007/s11432-019-1466-7

6. Keirn, Z.A., Aunon, J.I.: A new mode of communication between man and his surroundings. IEEE Trans. Biomed. Eng. 37 (1990). https://doi.org/10.1109/10.64464

7. Wang, X., Bi, L., Fei, W., et al.: EEG-based universal prediction model of emergency braking intention for brain-controlled vehicles. In: International IEEE/EMBS Conference on Neural Engineering, NER (2019)

8. Kang, J.S., Park, U., Gonuguntla, V., et al.: Human implicit intent recognition based on the phase synchrony of EEG signals. Pattern Recogn. Lett. 66 (2015). https://doi.org/10.1016/j.patrec.2015.06.013

9. Khushaba, R.N., Greenacre, L., Kodagoda, S., et al.: Choice modeling and the brain: a study on the Electroencephalogram (EEG) of preferences. Expert Syst. Appl. 39 (2012). https://doi.org/10.1016/j.eswa.2012.04.084

10. Slanzi, G., Balazs, J.A., Velásquez, J.D.: Combining eye tracking, pupil dilation and EEG analysis for predicting web users click intention. Inf. Fusion 35 (2017). https://doi.org/10.1016/j.inffus.2016.09.003

11. Park, U., Mallipeddi, R., Lee, M.: Human implicit intent discrimination using EEG and eye movement. In: Loo, C.K., Yap, K.S., Wong, K.W., Teoh, A., Huang, K. (eds.) ICONIP 2014. LNCS, vol. 8834, pp. 11–18. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-12637-1_2

12. Ko, K., Yang, H.C., Sim, K.B.: Emotion recognition using EEG signals with relative power values and Bayesian network. Int. J. Control Autom. Syst. 7 (2009). https://doi.org/10.1007/s12555-009-0521-0

13. Palaniappan, R.: Brain computer interface design using band powers extracted during mental tasks. In: 2nd International IEEE EMBS Conference on Neural Engineering (2005)

14. Anderson, C.W., Sijercic, Z.: Classification of EEG signals from four subjects during five mental tasks. Advances (1996)

15. Lee, J.C., Tan, D.S.: Using a low-cost electroencephalograph for task classification in HCI research. In: UIST 2006: Proceedings of the 19th Annual ACM Symposium on User Interface Software and Technology (2008)

16. Zheng, W.L., Zhu, J.Y., Lu, B.L.: Identifying stable patterns over time for emotion recognition from EEG. IEEE Trans. Affect. Comput. (2019). https://doi.org/10.1109/TAFFC.2017.2712143

17. Stikic, M., Johnson, R.R., Tan, V., Berka, C.: EEG-based classification of positive and negative affective states. Brain Comput. Interfaces 1 (2014). https://doi.org/10.1080/2326263X.2014.912883

18. Kumar, N., Kumar, J.: Measurement of cognitive load in HCI systems using EEG power spectrum: an experimental study. Procedia Comput. Sci. 84, 70–78 (2016)

19. Bouzekri, E., Canny, A., Martinie, C., Palanque, P., Gris, C.: Using task descriptions with explicit representation of allocation of functions, authority and responsibility to design and assess automation. In: Barricelli, B.R., et al. (eds.) HWID 2018. IAICT, vol. 544, pp. 36–56. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-05297-3_3

20. Ding, Y., Guo, F., Zhang, X., et al.: Using event related potentials to identify a user’s behavioural intention aroused by product form design. Appl. Ergon. 55 (2016). https://doi.org/10.1016/j.apergo.2016.01.018

21. Zhao, M., Gao, H., Wang, W., Qu, J.: Research on human-computer interaction intention recognition based on EEG and eye movement. IEEE Access 8 (2020). https://doi.org/10.1109/ACCESS.2020.3011740

22. Heraz, A., Frasson, C.: Predicting the three major dimensions of the learner’s emotions from brainwaves. Int. J. Electric. Comput. Eng. 2, 3 (2007)

23. Schrepp, M., Held, T., Laugwitz, B.: The influence of hedonic quality on the attractiveness of user interfaces of business management software. Interact. Comput. 18 (2006). https://doi.org/10.1016/j.intcom.2006.01.002

24. Bonnet, L., Lotte, F., Lécuyer, A.: Two brains, one game: design and evaluation of a multiuser BCI video game based on motor imagery. IEEE Trans. Comput. Intell. AI Games 5 (2013). https://doi.org/10.1109/TCIAIG.2012.2237173

Acknowledgments

This study was supported by the Foundation of the Education Department of Inner Mongolia Autonomous Region, China (No. ZSZX21098).

© 2022 IFIP International Federation for Information Processing

Cite this paper

Miao, X., Hou, Wj. (2022). Research on the Integration of Human-Computer Interaction and Cognitive Neuroscience. In: Bhutkar, G., et al. Human Work Interaction Design. Artificial Intelligence and Designing for a Positive Work Experience in a Low Desire Society. HWID 2021. IFIP Advances in Information and Communication Technology, vol 609. Springer, Cham. https://doi.org/10.1007/978-3-031-02904-2_3

DOI: https://doi.org/10.1007/978-3-031-02904-2_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-02903-5

Online ISBN: 978-3-031-02904-2

eBook Packages: Computer Science, Computer Science (R0)