Abstract

Reinforcement learning is a machine learning technique that makes a decision based on a sequence of actions. This allows changing a game agent’s behavior through feedback, such as rewards or penalties for their actions. Recent work has been demonstrating the use of reinforcement learning to train agents capable of playing electronic games and obtain scores even higher than professional human players. These intelligent agents can also assume other roles, such as creating more complex challenges to players, improving the ambiance of more complex interactive games and even testing the behavior of players when the game is in development. Some literature has been using a deep learning technique to process an image of the game. This is known as the deep Q network and is used to create an intermediate representation and then process it by layers of neural network. These layers are capable of mapping game situations into actions that aim to maximize a reward over time. However, this method is not feasible in modern games, rendered in high resolution with an increasing frame rate. In addition, this method does not work for training agents who are not shown on the screen. In this work we propose a reinforcement learning pipeline based on neural networks, whose input is metadata, selected directly in the game state, and the actions are mapped directly into high-level actions by the agent. We propose this architecture for a tower defense player agent, a real time strategy game whose agent is not represented on the screen directly.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

One of the main goals of the Artificial Intelligence (AI) field is to produce fully autonomous agents that interact with their environments to learn optimal behaviors, improving over time through trial and error. Creating AI systems that are responsive and can effectively learn has been a long-standing challenge, from robots, which can perceive and react to the environment around them, to purely software-based agents, who can interact with natural language and multimedia [12].

Within the AI field of study there are various approaches, proposed models and architectures. Supervised machine learning is an approach in which the training process is based on previously annotated training set. The process requires that the agent maps the input with the output, adjusting in accordance to the obtained error. However, in a situation where there is a very large sequence of actions to be labeled, this approach becomes unfeasible.

Another approach is Reinforcement Learning (RL), which can be determined as a mathematical structure based on psychological and neurological principles for autonomous experience-oriented learning. In this situation, labeled input/output pairs are not needed. The network learns by exploiting previous knowledge (through a reward mathematical function) to make decisions in new situations. These have been assuming an important role in many situations, particularly in video games.

Talking about video games, intelligent agents can create more challenging situations for the player. In particular, the elaboration of strategies according to the player’s behavior in the environment can make the actions of the autonomous agents cease to be obvious and predictable.

An interesting category of video games include strategy games and, in particular, of the Tower Defense (TD) genre. This type of game requires strategic thinking over time to formulate a plan that maximizes the player’s survival time. As it is impractical to label the actions that the agent must take at each moment of time, reinforcement learning is a viable learning strategy for the problem.

1.1 Tower Defense

Real-time Strategy (RTS) are games in which the player manages several characters or units with the objective of prevailing in some kind of conflict or obtaining some specific achievement [1]. In general, this challenge is significantly more difficult than the planning challenge in classic board games, such as chess, mainly because several units must be moved at any time and the state space is usually very large. The planning horizon can be extremely long, where the actions taken at the start of a game affect the overall strategy. In addition, there is the challenge of predicting the moves of one or more opponents, who also have multiple units. RTS are games that do not progress in discrete turns, but where actions can be taken at any time. The RTS games add the challenge of prioritizing time to the already substantial challenges of playing strategy games [1].

AI has been used in RTS games for research and innovation, in particular relying in Deep Learning (DL) topologies [2]. TD is a subgenre of RTS of games and it usually consists of a path where some enemies move and, around that path, the player can position some towers, responsible for causing damage to enemies. Enemies usually come in waves, groups of enemies that hoard together to try to complete the path. If an enemy manages to reach the end of the path, the player is usually punished in some way, such as losing life points, losing overall score or other form of punishment.

The work described in this paper led us through the design and development of a TD game to control the variables needed to research the application of RL for an agent. Also we did not find in the literature another implementation of agents to play Tower Defense. The first step is to focus on how to transfer the game state to the agent so that it uses it to perform actions in that environment. Therefore, the construction of the TD is focused on facilitating the provision of data to the agent’s input.

1.2 Using Reinforcement Learning in Games

Traditionally, RL uses the information on screen (pixels) as the source of processing [6, 7]. More recently, other format of data has been using in some games, as an example, in StarCraft [8, 11].

Some research has been done around Atari games to explore RL. In these games, agents often become even better than professional human players, without the need to explicitly code the logic of the game and its rules [9]. In other words, the agent learns by itself just by looking at the pixels of the game, the score and the ability to choose an action (activate buttons on a controller) just like a human player would do [9].

Even though the decision making appears to be simple, it hides the difficult task that any player who has ever played a game has probably noticed, which is the process of making instant decisions based on one of a single combination of pixels in a large number of possibilities that can appear on the screen at any time. In addition, there is also the chance that the player will find new, creative, situations.

Moreover, game environments are usually only partially observable. This is because the player is forced to make choices based on an indirect representation of the game (the screen) instead of knowing the parameters that govern the logic of the game. Thus, the player does not fully know the environment in which he is inserted. In addition, the actions to be taken by the player are also indirect, since the player’s decisions are in terms of buttons to be pressed, not semantic actions in the game [6].

So, the challenge is complex, but definable: how to use the experience of playing a game to make decisions that increase the score and generalize this decision-making process to new situations that didn’t happen before [6]?

Deep Q-network (DQN) take advantage of Artificial Neural Network (ANN) to solve this challenge. The architecture can be divided into two parts. First, a series of convolutional layers learns to detect increasingly abstract features of the game’s entry screen. Then, a dense classifier maps the set of these features present in the current observation to an output layer with a node for each combination of buttons in the controller [6]. A better representation of this process is shown in Fig. 1. The input consists of pixels, which are mapped into sensory characteristics that summarize what is being perceived on the screen through convolutional layers. These characteristics are used by later layers that perform the processing and choose the actions to be taken.

We propose a simplified architecture for the agent. Instead of receiving pixels as input, we propose to use metadata. These metadata consists of relevant information collected directly in the game. In this way, the sensory mapping shown in Fig. 1, which depends on the processing of a large amount of pixels, is replaced by data that is generated by the game itself, significantly reducing the necessary computational power.

2 Implementation

Considering the type of the game, the requirements of the RL agent and the objective of this work, the metadata collected from the game include: Score, number of enemies on the screen, money, lives remaining, the construction positions of the towers. The output was composed of actions such as: building a tower in a certain place, doing nothing, upgrade a tower, destroy a tower (Fig. 2).

This approach has another difference from other works. In TD games there is no real representation of the agent in the world: there is no avatar moving from side to side, taking shots at enemies. Only the results of the actions taken by the agent in the environment are shown, making it impossible to observe which are the places where the agent will build the first towers, which ones he should improve or even destroy. In this work it will be presented only the result of the strategies of the intelligent agent carrying out to reach the final objective of surviving the wave of enemies.

2.1 Tower Defense Project

The software developed was structured into two main modules, the first being TD itself and the second being the agent. As a RTS, the actions taken should be at the game’s execution time, making the agent’s execution to be in sync.

The TD itself is composed of several parts. The loop is responsible for the recognition of the pressed keys, after which comes all the control of the enemies and the towers existing in the game and finally the agent does all its controls, including training the network, generating a output.

All the data that comes from the TD can serve as input for the agent. However, using too much data for the network input can slow the agent’s learning process. Even so, using less data can make the agent never be able to converge, since he does not have enough information to achieve its purpose.

The training process depend on two main factors. The input data and the configuration of the neural network. The variation of the input of the network was a common process in this type of work. There were no values, or ideas for what the ideal input would look like for the network. In relation to the configuration of the neural network, several changes were made to the hidden layers of the network. Sometimes making it deeper and sometimes more shallow. During the development of the work described in this paper, many configurations were tried.

Initially TD was planned with a number of features, such as global improvements to the towers, slowing down enemies, a chance to apply attacks that would do double or triple damage and a means of restoring life. However, during the development process, it was noticed that several concepts initially foreseen should be abandoned due to the increase in the diversity of plays, so the game was simplified.

In a TD game, the assignment of lives to the player is a means of making the game come to an end. All enemies have an amount of damage that is discounted from lives when they reach the end of the path. The agent has a initial value of 20, and when it reaches zero, the game is over. Moreover, in the context of this work, further simplifications were made, restricting to a single, infinite, wave of enemies that are gradually strengthened. In addition, for progressively increasing the difficulty level, there are two mechanisms. The first is to increase the amount of health of new enemies generated as time passes. The second is to unlock more types of enemies as the agent progresses through the game. Initially only slimes are generated and, as time goes by, other enemies are unlocked offering new challenges to the player.

Some definitions that were used to implement the game are:

-

1.

Enemies: responsible for the difficulty factor of the game. Each enemy has information such as: the amount of money he gives when he dies, the amount of points he gives when he dies, the amount of life he takes when crossing the defined path, the amount of life he has and the speed at which the enemy moves. Six types of enemies have been created where each has different attribute values.

-

2.

Path: where enemies travel, from a starting point to an end point.

-

3.

Agent: controls the construction, improvement and sale of the towers. Each of these actions has consequences that may not be immediate, adding to the game’s challenge. The agent must take actions considering what he observes in the environment. The agent’s goal is to achieve the highest score possible before losing all their lives.

-

4.

Towers: responsible for defeating enemies that are walking the path around them. The different types of towers are characterized by information such as the damage done to enemies for each attack it makes, the range of each attack, the price to buy it, the price to make an improvement and the speed at which it executes an attack. The improvement function has been added to the towers, where at a cost of some cash value, the attack range and damage is increased. It is also possible for the towers to be sold, returning part of the money invested in them.

-

5.

Score: represents the agent’s ability to play the game. The higher the score, the better the agent was able to deal with the situations presented during the game. The score is increased by killing enemies throughout the game.

-

6.

Money: resource for building and improving the towers. Money is earned by shooting down enemies or selling towers.

-

7.

Lives: number that represents the agent’s health. Condition of end of the game.

2.2 Parametrization

As RTS game, a balance is necessary for the game to be fun and offer a fair challenge. Therefore, there was a calibration phase of the game parameters in search of this balance. The game was calibrated in a way that allows a challenge to the human, but that is subject to considerable scoring.

The dimension of the enemies was based on mixing some attributes. Each enemy has a unique characteristic and certain combinations in the generation of enemies would create challenging situations. For example, if some enemies are resistant and resilient (have a lot of life) and slow speed, and are spawned simultaneously with several fast and weak enemies, they could take advantage to get to the end of the path, since the towers would be focused on attacking slow enemies that first entered the tower’s reach. Table 1 shows the values given to enemies of TD.

The definition of the number and characteristics of the towers was defined looking for balance in relation to the enemies. Starting with a balanced tower in its attributes and spawning others with an attribute with a high value and another with a low value. Thus, for each tower there were scenarios in which it fit most efficiently. With this, the agent had several strategies to explore, just varying the combination of the towers and their positions. Table 2 shows the characteristics used by the towers.

The data presented in Table 2 are only the base values for each tower. Performing the improvement in the tower causes some of these parameters to be upgraded, allowing the game to be more balanced for the agent’s side. Table 3 presents the data that are added for each improvement made in each type of tower. All towers have a maximum limit of ten improvements.

There are some other responsibilities that have been attributed to the game map, such as generating new enemies. The value for this generation was set at 1.5 s for the graphical version. In addition to generating new enemies, the map is also responsible for gradually increasing the game’s difficulty. The difficulty of the game is increased every 30 s.

2.3 Development

The first developed version of the game has a graphical interface, enabling both the agent and a human to play the game. Also during this version, numerous parameter calibrations were made, based on observations of the game.

Figure 3 shows the game screen where it can be seen that there are eight gray dots and three of them are already occupied with towers. These are the possible points at which the player can build the towers. The number of points and the positions of the towers were maintained in all experiments.

In preliminary tests it was observed that each match with graphical interface took a long time to finish, which would prevent the execution of an appropriate amount of tests. Typically, agents trained by RL in gaming environments need several iterations to converge [10]. Therefore, it was necessary to look for an efficient way to simulate the execution of the game, before proceeding with the development of the agent. Matches took a long time to run due to the graphical interface, but also because the game’s events were based on the real world clock. The reliance on the clock was necessary for humans to be able to play, allowing the observation and calibration of parameters, making the game balanced and capable of formulating strategies. To make executions faster, the game was reimplemented without the graphical interface. Reliance on the game’s real world clock has also been eliminated, for better analysis of the RL training and results.

With this change, TD can be seen as a simulator. In the graphical version, the timing of game events was based on the real world clock. For example, new enemies appeared every 1.5 s from the real world clock. So, no matter how efficient the implementation is, it would be necessary to wait a second and a half for a new enemy to enter the game. Since all the events in the game were tied to the real world clock, this made the execution of a game too long. Although the idea of the simulator is not to depend on the clock in the real world, it is necessary to use some measure of time to synchronize the events that happen in the game. In this way, we call each iteration of the game a cycle.

To preserve the balance of the simulated game with the calibration obtained in the graphical version, it was necessary to find a way to map the clock time in cycles. For this, we created a unit called Epoch, equivalent to one second of the clock time, which consists of 840 cycles.

The transition from the graphics game to the simulation had to be done using some data as a basis. These data were taken from enemy move speeds and are: 0.5; 0.75; 1; 1.25; 1.5; 1.75 and 2. The first step in working with this data was to find a common value among all these data and with this common value future calculations would not result in float values. In order for all of them to become whole values, each of them was multiplied by four, resulting in the values: 2, 3, 4, 5, 6, 7 and 8. With these new values, a minimum common multiple among all was realized. them, resulting in 840, so every 840 cycles we have an Epoch.

In the first version of the simulator, all cycles were performed. In each cycle, several checks were made to determine if it was time to trigger possible events. However, in the vast majority of cycles there was no event to trigger, which made the checks exhaustive and inefficient. As a result, the total of running time wasn’t absurdly lower than in the graphic version of the game.

In a second version of TD without an interface, the idea was to skip cycles that would have no events to run. For this, an execution list was created, where each game object calculated in which cycle the next event would be triggered.

The execution queue consists of a collection of events ordered by the number of cycles until their next execution. The descriptor of each event consists of a tuple containing a reference to the object, the number of cycles until the next execution and the number of cycles between the executions of the events. In the following example, the next event will be triggered by the SLIME_1 object in 10 cycles. This means that it is not necessary to simulate the next 10 cycles as no event will happen. In this way, SLIME_1 triggers its event and 10 cycles are subtracted from the number of cycles for the next execution of all objects in the list. In addition, SLIME_1 is reinserted in the list with 20 cycles remaining until its next run, which corresponds to its period.

Queue before SLIME_1 execution: [[ SLIME_1, 10, 20], [ SCORPION_1, 15, 15], [ BASIC, 420, 840]] Queue after SLIME_1 execution: [[ SCORPION_1, 5, 15], [ SLIME_1, 20, 20], [ BASIC, 410, 840]]

If an enemy dies, or a tower is sold, these objects are immediately removed from the execution list. When a new tower is purchased or a new enemy is generated, they are placed in the execution list in order.

3 Development of the RL Agent

The development of an intelligent agent using a RL approach is an experimental task. This approach requires careful design of system components such as inputs, outputs and the learning mechanism. In addition, there are several components in the system that are configurable, which makes it necessary to carry out several experiments to determine the best combinations of parameters.

There would be countless ways to extract the metadata and use them as input for the agent, like the model proposed for the agent, shown in Fig. 2, which had its input characterized by metadata taken from TD. So we had to test various combinations of metadata. The next step was the definition of a ANN whose learning mechanism should be able to approximate the Q function. Finally, the end point, where the agent could perform actions in the game such as: construction, improvement and towers sales. We did an exploration looking for different ways to represent the way out.

One of the challenges of agents using DQN is that the neural network used tends to forget previous experiences, as they replace them with new experiences [13]. Therefore, it is necessary to maintain a collection of experiences and observe them to train the model with those previous experiences. This collection of experiences is known as memory.

All the agents implemented in this work had their memory with a defined size of 2000. Each piece of memory consists of:

[state, action, reward, next_state, done]

State and next state are the information the agent has about the environment in the current state and the next state, respectively. Each state represents the agent’s entries. Action is the action that the agent performed when it was in state. Reward says how much reward the agent has accumulated between state and next state. Done is used only to inform the end of the game after this action has been taken.

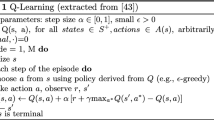

DQN approximates the Q function, which relates a state and an action to the expected reward. To approximate the Q function using a neural network, an error function which can be optimized is necessary. Such a function lists how much a prediction differs from the real value of the function. Equation 1, proposed by [13], presents the error function used in agent training. s is the current state and a is the action taken. s′ is the state reached from the action a in state s. a′ is the action that maximizes the reward in the s′ state. r is the reward between s and s′. The equation is the quadratic error between the prediction Q (s, a), in relation to the value of r + γ Q(s′; a′). In other words, it represents the divergence between the predicted value for the reward when taking the action a in the state s in relation to a precise approximation, obtained after obtaining the reward and forecasting the reward in the next state.

Usually, the reward is tied to the score the agent is getting, [4, 13, 14]. In this work we follow this same idea. The reward was considered in three scenarios. Being a periodic reward, a reward linked to enemies and a penalty when the agent loses the game.

3.1 Game Metadata

The metadata taken from the game varied according to the various tests performed. The agent’s input varied in size and even the form in which the agent’s environment was represented.

Table 4 presents some of the information taken from the game and passed to the agent.

The most relevant point is the diversity of combinations that we can provide to the agent. In this work, we explored only some of the possibilities of the various combinations of information in the game. The various combinations directly impact on how the agent observed the environment, consequently its performance as well.

In the first versions of the game, some humans were put to play and we collected the various scores they obtained. In relation to the implemented agents, the average performance obtained was lower than that of the human. However, it is possible to observe an evolution of the score over the various combinations assembled for the agent to observe the environment. This evolution is shown by Table 5.

The average score that a human reached 18680 points, in a few matches the human already managed to reach high scores. However, the agents performed 2309 matches, and during the various combinations the maximum average increased. Also decreasing the difference between maximum score and the mean score. Keeping this pattern, increasing the amount of executions the agent could get results closer to human.

4 Conclusion

In this work several agents were developed using Reinforcement Learning techniques based on Deep Q-Learning to play Tower Defense. In other jobs using Deep Q-Learning, agents usually receive video frames from the game as input. In this work we investigate the possibility of the agent receiving metadata from the match as input. As we did not find in the literature another implementation of agents to play Tower Defense, the agent was built in an experimental and incremental way.

Initially the game was designed with the aim of encouraging the player to create strategies to overcome the increasingly challenging hordes of enemies. Firstly, the game was implemented with a graphical interface, allowing humans to play. Using this version, the game was calibrated to offer a balance between challenge and fun. Several parameters of the agent were initially adjusted in this version.

As the graphical version was designed for humans to play, the timing of the game’s events was dependent on the real world clock. This caused the matches to take a long time to execute. This would prevent long-term experiments, which is important for assessing the trend of the agent’s progress. The solution found was to re-implement the game in a version that does not depend on the real world clock and that does not use a graphical interface. This implementation was called a simulated version. All other agents were developed in this new version.

This work showed that it is possible to build an agent based on Reinforcement Learning capable of playing Tower Defense. No other work in the literature reports the development of autonomous agents to learn how to play this type of game. In addition, the design of metadata based inputs made it possible to use input data that is simpler than approaches based on video frames. For this reason, this approach is promising to embed agents based on reinforcement learning in devices with less computational power.

The results reported in this work suggest new directions for future work. The agents used in this work do not directly model the temporal relationship between the sequences of actions and their respective consequences. Future work might include the evaluation of how the use of techniques based on temporal patterns, such as Long short-term memory (LSTM)s and hidden Markov chains can be used to consider temporal behavior. Another possible research would be to evaluate the strategies created by the agent. Strategy evaluation is a technique that can help improve the calibration of parameters in other games. Exploring more input variations for agents is also a possible future work, seeking to provide more relevant information for decision making. Finally, a comparison with an agent based on video frames would also be interesting to assess the difference in performance between this approach and the approach presented in this work.

References

Justesen, N., Bontrager, P., Togelius, J., Risi, S.: Deep Learning for Video Game Playing. arxiv (2017). https://arxiv.org/pdf/1708.07902.pdf

Peng, P., et al.: Multiagent Bidirectionally-Coordinated Nets for Learning to Play StarCraft Combat Games. CoRR (2017). http://arxiv.org/abs/1703.10069

Gym library. https://gym.openai.com/. Accessed 16 May 2020

Deep Q-Learning with Keras and Gym. https://keon.github.io/deep-q-learning/. Accessed 20 May 2020

How to teach AI to play Games: Deep Reinforcement Learning. https://towardsdatascience.com/how-to-teach-an-ai-to-play-games-deep-reinforcement-learning-28f9b920440a. Accessed 12 May 2020

Advanced DQNs: Playing Pac-man with Deep Reinforcement Learning. https://towardsdatascience.com/advanced-dqns-playing-pac-man-with-deep-reinforcement-learning-3bd99e0814. Accessed 25 Apr 2020

How to match DeepMind’s Deep Q-Learning score in Breakout. https://towardsdatascience.com/tutorial-double-deep-q-learning-with-dueling-network-architectures-4c1b3fb7f756. Accessed 25 Oct 2019

Justesen, N., Risi, S.: Learning Macromanagement in StarCraft from Replays using Deep Learning. CoRR (2017). https://arxiv.org/pdf/1707.03743.pdf

My first experience with deep reinforcement learning. https://medium.com/ai-society/my-first-experience-with-deep-reinforcement-learning-1743594f0361. Accessed 21 Feb 2020

Mnih, V., et al.: Playing Atari with Deep Reinforcement Learning. arxiv (2013). https://arxiv.org/pdf/1312.5602v1.pdf

Usunier, N., Synnaeve, G., Lin, Z., Chintala, S.: Episodic Exploration for Deep Deterministic Policies: An Application to StarCraft Micromanagement Tasks. CoRR (2016). https://arxiv.org/pdf/1609.02993.pdf

Arulkumaran, K., Deisenroth, M.P., Brundage, M., Bharath, A.A.: A Brief Survey of Deep Reinforcement Learning. arxiv (2017). https://arxiv.org/pdf/1708.05866.pdf

Mnih, V., et al.: Human-level control through deep reinforcement learning. Nature 518, 529 (2015)

Van Hasselt, H., Guez, A., Silver, D.: Deep reinforcement learning with double q-learning. In: Thirtieth AAAI Conference on Artificial Intelligence (2016)

Acknowledgments

This work has been supported by FCT – Fundação para a Ciência e Tecnologia within the Project Scope: UIDB/05757/2020.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Dias, A., Foleiss, J., Lopes, R.P. (2022). Reinforcement Learning in Tower Defense. In: Barbedo, I., Barroso, B., Legerén, B., Roque, L., Sousa, J.P. (eds) Videogame Sciences and Arts. VJ 2020. Communications in Computer and Information Science, vol 1531. Springer, Cham. https://doi.org/10.1007/978-3-030-95305-8_10

Download citation

DOI: https://doi.org/10.1007/978-3-030-95305-8_10

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-95304-1

Online ISBN: 978-3-030-95305-8

eBook Packages: Computer ScienceComputer Science (R0)