Abstract

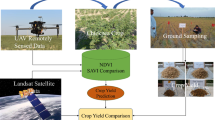

Remote sensing now plays a crucial role in agricultural applications. Spectral indices derived from satellite images, whose ground resolution depends on the satellite of origin, are used in particular as tools to describe the temporal and spatial variability of crops. The same kind of information can also be obtained with sensors mounted on UAVs (Unmanned Aerial Vehicles). In this paper we carry out a detailed analysis of the vigor of a vineyard in the province of Reggio Calabria (Southern Italy). We compare multispectral satellite images (Sentinel-2) with images acquired by low-altitude UAV platforms, using the relationship between the NDVI (Normalized Difference Vegetation Index) and crop vigor as the measure of effectiveness. We also propose a GIS (Geographic Information System) for agricultural land management that can issue alerts when interventions are needed because of crop water stress.




1 Introduction

1.1 Precision Agriculture

In recent years, Precision Agriculture (PA) has received significant attention in the agricultural world. By integrating information technology and agronomic practices, it enables automated management of land at a within-field (sub-plot) scale [1].

Combining the concepts of business management and process automation, precision agriculture is a management strategy [2] that uses information technology to collect data from multiple sources and to use them in decisions about field production activities [3].

Particularly relevant for this purpose is crop monitoring, which relies on observations made directly on standing crops to obtain data on phenological stages, nutritional status [5], phytosanitary status [6], expected production [4, 7], production maps [8], etc. Given the large amount of data to be collected and processed, this monitoring must be automated [9].

Remote-sensing-based crop monitoring relies on the link between a set of canopy parameters [10], which express the vegetative and productive responses of plants, and the different behavior of surfaces and bodies [11] in absorbing or reflecting visible and infrared light [12]. This link makes it possible to evaluate variability across the field.

Since the 1970s, satellite remote sensing has been used to monitor large agricultural areas for production forecasting [13], providing useful data for agriculture itself. Remote sensing techniques are very useful for assessing vegetation health [14] thanks to the particular optical behavior of plants in the infrared band [15]. Expensive flights of aircraft equipped with special cameras were soon replaced by satellites which, continuously orbiting the Earth, acquire data on the electromagnetic radiation reflected or emitted by objects on the Earth's surface, crops included, using multispectral sensors (passive) or radar (active). Passive sensors, however, can only acquire in daylight and are hampered by cloud cover. Moreover, their achievable level of detail does not allow some types of analysis on small plots of land.

UAVs, on the other hand, can collect more detailed georeferenced information with different types of sensors [16,17,18,19, 32].

In viticulture in particular, where crop management must address the difficulties that arise during production cycles, the PA approach ultimately aims to improve vineyard yield and grape quality while reducing waste, costs and environmental impact [20].

Correct knowledge of the spatial variability between and within crops is fundamental for winemakers to estimate yield and quality. Data from multispectral and hyperspectral imaging systems are used to calculate a broad set of crop-related indices (for example the LAI, Leaf Area Index [21]), of which the normalized difference vegetation index (NDVI) is one of the most widely used because it is related to crop vigor and, therefore, to the expected quantity and quality of production.

The MultiSpectral Instrument (MSI) of Sentinel-2 covers large areas, and many satellite programs (e.g., Landsat, Sentinel-1 and Sentinel-2) now supply their datasets free of charge, promoting the use of satellite imagery in many applications, including agriculture and multisensor, multiresolution data fusion [22, 23]. Sentinel-2, operated by the European Space Agency (ESA), offers decameter resolution and a revisit time of five days with its two-satellite constellation, which makes it effective for analyzing crop variability and conditions. For discontinuous crops such as orchards and vineyards, however, remote sensing is more difficult: paths between rows and weedy vegetation within the cultivated land can strongly influence the computed spectral indices, making the assessment of crop status less accurate. To overcome this problem, new approaches and algorithms have been developed that also use multispectral data from UAVs [23, 24].

Low-altitude platforms such as UAVs with airborne sensors acquire high-resolution images with flexible flight planning, which makes it possible to separate pure canopy pixels from other objects and even to classify details within canopies [25].

Specifically, it is possible to successfully combine an unmanned rotary-wing platform with a multispectral sensor in order to detect and monitor water-stressed areas of orchards, vineyards and olive groves [26].

The NDVI, calculated from red and near-infrared images, is widely used in PA because it is directly related to vegetation health and reveals problems such as nutrient deficiency, parasitic infections or water stress. Early detection of such conditions allows targeted and effective intervention, saving costs and increasing crop yield. Infrared detection often reveals problems before they are visible to the naked eye [27, 28].

Multispectral sensors used on UAVs can record at least three channels like a normal camera, but with one of the visible channels replaced by the infrared. Although multispectral sensors can acquire in more than four bands, in this application each image consists of two visible bands plus infrared [29, 30]. The NDVI is thus computed from a single image through a variant of the standard formula. The processing is done automatically in the GIS we used (QGIS). The resulting false-color maps, in which red represents areas of maximum vitality, are called “Vigor Maps” [31, 33].
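As a minimal illustration, the standard NDVI is the normalized difference (NIR − Red)/(NIR + Red) of near-infrared and red reflectance. The paper does not specify the single-image variant applied in QGIS, so the Python sketch below shows only the standard per-pixel formula; the function names and the handling of a zero denominator are our own assumptions, and the reflectance values are hypothetical.

```python
from typing import List


def ndvi(nir: float, red: float) -> float:
    """Standard NDVI = (NIR - Red) / (NIR + Red); lies in [-1, 1] for non-negative reflectances."""
    denominator = nir + red
    if denominator == 0:
        # Assumption: a pixel with no signal in either band is given NDVI 0.
        return 0.0
    return (nir - red) / denominator


def ndvi_image(nir_band: List[List[float]], red_band: List[List[float]]) -> List[List[float]]:
    """Apply ndvi() pixel by pixel to two co-registered bands of equal size."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Hypothetical reflectances for a vigorous canopy pixel and a dry-soil pixel.
print(round(ndvi(0.45, 0.05), 2))  # 0.8
print(round(ndvi(0.25, 0.20), 2))  # 0.11
```

Dense, vigorous canopy gives values close to 1, while bare soil stays near 0, which is what the vigor maps encode in false color.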

In this paper we present an in-depth analysis of a vineyard, comparing multispectral imagery from a decameter-resolution satellite instrument (the Sentinel-2 MSI) with imagery from a low-altitude UAV platform. The effectiveness of the Sentinel-2 MSI and of the very-high-resolution UAV sensor was evaluated through the relationship between crop vigor and NDVI. UAV data were compared with satellite images by calculating three NDVI indices to analyze the contribution of the vineyard components, considering: (i) the whole agricultural area; (ii) only the vine canopies; and (iii) only the inter-row soil [5, 34, 35].

The proposed methodology can be extended to other row crops in which the canopies do not cover the entire area or in which grass or bare soil is significant [6, 36, 37].

2 Materials and Methods

Our study analyzed a vineyard in Bova Superiore, a small municipality in the province of Reggio Calabria (Southern Italy), in the locality of Briga, covering about 0.42 hectares. The cultivated area lies between latitudes 37.9855° and 37.9862° N and longitudes 15.9142° and 15.917719° E and includes a series of parcels cultivated as vineyards, the two most representative of which cover about 0.24 ha and 0.18 ha respectively (Fig. 1).

The vineyard is located on a sloping land with a varied morphology, with an altitude ranging from 600 to 800 m above sea level and an orientation mainly facing south.

The distance between row axes is 2 m: the canopy of each row is about 1 m wide, leaving about 1 m of inter-row space between one row and the next. The vineyard was planted in 2016.
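The row geometry explains why decametric pixels are mixed. With 2 m between row axes and about 1 m of canopy, roughly half of the ground is canopy and about five rows cross a 10 m Sentinel-2 pixel. The sketch below assumes linear (area-weighted) mixing of red and NIR reflectance, a common simplification, and uses hypothetical reflectance values; it only shows how the inter-row fraction pulls a pixel's NDVI away from that of the canopy.

```python
from typing import Tuple

Reflectance = Tuple[float, float]  # (red, nir)


def canopy_fraction(row_spacing_m: float, canopy_width_m: float) -> float:
    """Share of ground covered by canopy for parallel rows, ignoring edge effects."""
    return canopy_width_m / row_spacing_m


def mixed_pixel_ndvi(f_canopy: float, canopy: Reflectance, interrow: Reflectance) -> float:
    """NDVI of a pixel whose red and NIR reflectances are area-weighted mixtures."""
    red = f_canopy * canopy[0] + (1.0 - f_canopy) * interrow[0]
    nir = f_canopy * canopy[1] + (1.0 - f_canopy) * interrow[1]
    return (nir - red) / (nir + red)


f = canopy_fraction(2.0, 1.0)   # 0.5 for the vineyard described above
rows_per_pixel = 10.0 / 2.0     # about 5 rows cross a 10 m Sentinel-2 pixel
vine = (0.05, 0.45)             # hypothetical canopy reflectance (red, nir)
soil = (0.20, 0.25)             # hypothetical dry inter-row reflectance (red, nir)

print(f, rows_per_pixel)                            # 0.5 5.0
print(round(mixed_pixel_ndvi(1.0, vine, soil), 2))  # 0.8  (pure canopy)
print(round(mixed_pixel_ndvi(f, vine, soil), 2))    # 0.47 (mixed 10 m pixel)
```

Under these assumptions, half of the pixel area is enough to lower the NDVI from 0.8 to about 0.47, so changes in the inter-row cover can mask or mimic changes in vine vigor.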

Because of the irregular land morphology, in terms of both elevation and soil characteristics, the vineyard is presumably characterized by variations in vine vigor within and between plots.

To extend the study to different phenological phases of the vine, we conducted survey campaigns (both satellite and drone) from May to September 2020. Since vigor varies throughout the phenological cycle, we acquired images at four stages between flowering and ripening to capture different vegetative states [38]. However, some meteorological trends (including below-average rainfall) stressed the crops and affected plant growth [44].

The satellite data were Sentinel-2 Level-2A images acquired on May 24, July 28, August 27 and September 21, 2020 at 09:40 UTC (an example is shown in Fig. 2); Table 1 reports the image characteristics [39].

The drone multispectral images were acquired with a Parrot Bluegrass drone carrying the Parrot Sequoia multispectral sensor, which is designed for agricultural use and captures crop images in both the visible and the infrared spectrum. The system includes:

- A multispectral sensor recording crop images in four spectral bands: Green (550 nm, bandwidth 40 nm), Red (660 nm, bandwidth 40 nm), Red-edge (735 nm, bandwidth 10 nm) and Near Infrared (790 nm, bandwidth 40 nm).
- An RGB camera (16 MP).
- An integrated 64 GB memory.
- A built-in irradiance sensor (the ‘sunshine’ sensor) that records the lighting conditions and automatically calibrates the four multispectral sensors; it also integrates an SD card slot to expand storage capacity.
- An integrated GPS and IMU (Inertial Measurement Unit).

Table 2 summarizes the characteristics of the UAV, the sensor and the acquired images.

Figure 3 shows the parcels on the ground overlaid with the multispectral camera images; the reference system is WGS84.

Fig. 3 (a) shows, in false colors (NIR, red and green channels), the boundaries of the parcels considered (yellow polygons labeled “Area-A” and “Area-B”), as returned by the UAV. The UAV-based multispectral images were processed with the Agisoft PhotoScan® software, using sequences of more than 1000 images acquired by the Parrot Sequoia® multispectral camera [40,41,42]. UAV flights were conducted on May 6, June 27, August 10 and September 15, 2020, at different times of day.

The UAV route was planned with waypoint sets that kept the flight height at about 30 m above the ground, which yields aerial images with a ground sampling distance (GSD) of about 5 cm (Table 2).
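For a nadir-looking frame camera, the GSD grows linearly with flight height H: GSD = H · p / f, where p is the sensor pixel pitch and f the focal length. Rather than quoting sensor specifications, the sketch below rescales the reported figure (about 5 cm at about 30 m) to other heights and counts how many UAV pixels fit across the 1 m vine canopy and along the side of a 10 m Sentinel-2 pixel. It is an illustration under these assumptions, not the flight-planning tool used in the survey.

```python
def gsd_m(height_m: float, pixel_pitch_m: float, focal_length_m: float) -> float:
    """Ground sampling distance of a nadir frame camera: GSD = H * p / f."""
    return height_m * pixel_pitch_m / focal_length_m


def scale_gsd(gsd_ref_m: float, height_ref_m: float, height_m: float) -> float:
    """For a fixed camera p/f is constant, so GSD scales linearly with height."""
    return gsd_ref_m * height_m / height_ref_m


GSD_REF_M, HEIGHT_REF_M = 0.05, 30.0  # values reported for the survey

for h in (30.0, 60.0, 120.0):
    print(f"{h:.0f} m -> {scale_gsd(GSD_REF_M, HEIGHT_REF_M, h) * 100:.0f} cm")
# 30 m -> 5 cm, 60 m -> 10 cm, 120 m -> 20 cm

print(round(1.0 / GSD_REF_M))   # 20 UAV pixels across a 1 m canopy
print(round(10.0 / GSD_REF_M))  # 200 UAV pixels along a 10 m Sentinel-2 pixel side
```

Twenty pixels across each canopy is what allows pure canopy pixels to be separated from inter-row pixels, something a single 10 m pixel cannot do.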

2.1 Data Processing

We then compared and analyzed the UAV and satellite images, each at its own spatial resolution, after selecting from the Sentinel-2 images the pixels fully enclosed within the boundaries of the two study areas, “Area A” and “Area B” (Fig. 3).
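Keeping only pixels fully enclosed in a study-area boundary avoids mixing in land outside the vineyard. A minimal sketch, assuming the boundary is a convex polygon in projected metric coordinates aligned with a north-up 10 m grid: a grid cell is kept when all four of its corners fall inside the polygon, which is sufficient for convex polygons but not for concave ones. The grid origin, polygon coordinates and function names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def point_in_polygon(pt: Point, poly: List[Point]) -> bool:
    """Ray-casting test; points exactly on the boundary may go either way."""
    x, y = pt
    inside = False
    n = len(poly)
    for k in range(n):
        x1, y1 = poly[k]
        x2, y2 = poly[(k + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray through pt
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def enclosed_pixels(poly: List[Point], x0: float, y0: float, size: float,
                    ncols: int, nrows: int) -> List[Tuple[int, int]]:
    """(i, j) indices of grid cells whose four corners lie inside a CONVEX polygon.

    (x0, y0) is the top-left corner of the grid; row index i increases southwards.
    """
    selected = []
    for i in range(nrows):
        for j in range(ncols):
            left, top = x0 + j * size, y0 - i * size
            corners = [(left, top), (left + size, top),
                       (left, top - size), (left + size, top - size)]
            if all(point_in_polygon(c, poly) for c in corners):
                selected.append((i, j))
    return selected


# Hypothetical study-area rectangle (metres) over a 4 x 3 grid of 10 m cells.
area = [(2.0, -2.0), (33.0, -2.0), (33.0, -28.0), (2.0, -28.0)]
print(enclosed_pixels(area, 0.0, 0.0, 10.0, 4, 3))  # [(1, 1), (1, 2)]
```

For concave boundaries, a library geometry test of the whole cell against the polygon would be the safer choice.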

To this end, we implemented a procedure that automatically: computes the NDVI from the satellite data (NDVIsat); homogenizes the Sentinel-2 and UAV data by downsampling the UAV data so that each satellite pixel s(i,j) corresponds to a set P(i,j) of UAV pixels; computes the NDVI from the UAV data (NDVIuav); and computes both the NDVI of the vine canopies (NDVIvin) and the NDVI of the inter-row area (NDVIint).

The NDVI is the key index for evaluating variability within the vineyard and hence vine vigor. For each pixel s(i,j) of the Sentinel-2 image it is computed from the spectral data in the red (RED) and near-infrared (NIR) bands:

$$\mathrm{NDVI}_{\mathrm{sat}}(i,j) = \frac{s_{NIR}(i,j) - s_{RED}(i,j)}{s_{NIR}(i,j) + s_{RED}(i,j)}$$
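Sentinel-2 Level-2A products store bottom-of-atmosphere reflectance as integers, and band B4 (red) and band B8 (NIR) are the 10 m bands normally used for the NDVI. The sketch below assumes the L2A quantification value of 10000 and an optional additive offset. For 2020 imagery the offset is zero and the scale cancels in the NDVI ratio, whereas products from processing baseline 04.00 onwards declare an offset (BOA_ADD_OFFSET, -1000) in their metadata that must be applied first. The digital numbers are hypothetical, and the offset should always be read from each product's metadata.

```python
QUANTIFICATION_VALUE = 10000.0  # L2A digital-number-to-reflectance scale


def l2a_reflectance(dn: int, add_offset: float = 0.0) -> float:
    """Convert an L2A digital number to BOA reflectance: (DN + offset) / 10000."""
    return (dn + add_offset) / QUANTIFICATION_VALUE


def ndvi_sat(b8_dn: int, b4_dn: int, add_offset: float = 0.0) -> float:
    """NDVI of one Sentinel-2 pixel s(i, j) from band 8 (NIR) and band 4 (red)."""
    nir = l2a_reflectance(b8_dn, add_offset)
    red = l2a_reflectance(b4_dn, add_offset)
    return (nir - red) / (nir + red)


# Hypothetical pixel with NIR reflectance 0.35 and red reflectance 0.06.
print(round(ndvi_sat(3500, 600), 3))                     # 0.707 (zero-offset product)
print(round(ndvi_sat(4500, 1600, add_offset=-1000), 3))  # 0.707 (same pixel, with offset)
print(round(ndvi_sat(4500, 1600), 3))                    # 0.475 (offset ignored: biased)
```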

To compare the UAV-based and satellite MSI data, the high-resolution UAV images were first downsampled. For each satellite pixel s(i,j), we sampled the higher-resolution UAV map D to obtain the set P(i,j) of UAV pixels d(u,v) falling within the footprint of s(i,j):

$$P(i,j) = \{\, d(u,v) \in D : d(u,v) \subset s(i,j) \,\}$$
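Under the simplifying assumption that the UAV orthomosaic has been resampled onto a grid aligned with the Sentinel-2 grid (same origin, north-up), building each P(i,j) reduces to integer division of the UAV pixel indices: with a 5 cm UAV GSD and 10 m satellite pixels, each P(i,j) is a 200 × 200 block of UAV pixels. The sketch is hypothetical and uses a 4 × 4 toy map with 2 × 2 blocks; a real workflow would also have to handle reprojection and partial overlaps.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def block_factor(sat_gsd_m: float, uav_gsd_m: float) -> int:
    """Number of UAV pixels along one side of a satellite pixel."""
    k = round(sat_gsd_m / uav_gsd_m)
    if abs(k * uav_gsd_m - sat_gsd_m) > 1e-6:
        raise ValueError("satellite GSD is not an integer multiple of the UAV GSD")
    return k


def group_uav_pixels(uav: List[List[float]], k: int) -> Dict[Tuple[int, int], List[float]]:
    """Assign each UAV pixel d(u, v) to satellite pixel (i, j) = (u // k, v // k).

    Incomplete blocks at the right and bottom edges are discarded.
    """
    rows, cols = len(uav), len(uav[0])
    groups: Dict[Tuple[int, int], List[float]] = defaultdict(list)
    for u in range(rows - rows % k):
        for v in range(cols - cols % k):
            groups[(u // k, v // k)].append(uav[u][v])
    return dict(groups)


print(block_factor(10.0, 0.05))  # 200

toy = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
print(group_uav_pixels(toy, 2)[(0, 0)])  # [1, 2, 5, 6]
```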

Thus the satellite pixel s(i,j) and the UAV subset P(i,j) depict the same portion of the vineyard. Three NDVIs were then computed from the very high resolution (VHR) data of the multispectral sensor mounted on the UAV and compared with the satellite data, considering:

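(i) the entire cultivated area, i.e. all the pixels of P(i,j):

$$\mathrm{NDVI}_{\mathrm{uav}}(i,j) = \frac{1}{|P(i,j)|} \sum_{d(u,v) \in P(i,j)} \frac{d_{NIR}(u,v) - d_{RED}(u,v)}{d_{NIR}(u,v) + d_{RED}(u,v)}$$

(ii) only the pixels of the vine canopies, the subset Pvin(i,j) ⊂ P(i,j):

$$\mathrm{NDVI}_{\mathrm{vin}}(i,j) = \frac{1}{|P_{\mathrm{vin}}(i,j)|} \sum_{d(u,v) \in P_{\mathrm{vin}}(i,j)} \frac{d_{NIR}(u,v) - d_{RED}(u,v)}{d_{NIR}(u,v) + d_{RED}(u,v)}$$

(iii) only the pixels of the inter-rows, the subset Pint(i,j) ⊂ P(i,j):

$$\mathrm{NDVI}_{\mathrm{int}}(i,j) = \frac{1}{|P_{\mathrm{int}}(i,j)|} \sum_{d(u,v) \in P_{\mathrm{int}}(i,j)} \frac{d_{NIR}(u,v) - d_{RED}(u,v)}{d_{NIR}(u,v) + d_{RED}(u,v)}$$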
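Given the UAV NDVI values of the pixels in one P(i,j) and a canopy/inter-row label for each of them, the three indices are averages over different subsets of the block. This sketch assumes that per-pixel NDVI values are averaged (averaging reflectances first would give slightly different numbers); the labels are taken as given and the values are hypothetical.

```python
from statistics import mean
from typing import Dict, Sequence


def three_ndvis(ndvi_values: Sequence[float], is_canopy: Sequence[bool]) -> Dict[str, float]:
    """Mean NDVI over P(i,j), over its canopy pixels Pvin and over its inter-row pixels Pint."""
    vin = [x for x, c in zip(ndvi_values, is_canopy) if c]
    inter = [x for x, c in zip(ndvi_values, is_canopy) if not c]
    nan = float("nan")  # returned when a block contains no pixel of that class
    return {
        "NDVIuav": mean(ndvi_values),
        "NDVIvin": mean(vin) if vin else nan,
        "NDVIint": mean(inter) if inter else nan,
    }


block = [0.80, 0.78, 0.15, 0.12]     # hypothetical UAV NDVI values in one P(i, j)
labels = [True, True, False, False]  # True = vine canopy, False = inter-row
print({name: round(value, 3) for name, value in three_ndvis(block, labels).items()})
```

An empty block raises `statistics.StatisticsError`, which is the desired behavior for pixels with no UAV coverage.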

In Fig. 4, (a) shows the NDVIsat map obtained from the full set of pixels selected from the satellite imagery; (b) shows an NDVIuav map congruent with the satellite-derived NDVIsat map (correctly aligned and at the same spatial resolution); (c) shows the complete NDVIvin map; and (d) shows the NDVIint map of the inter-row ground, derived by processing the UAV images (Table 3).

Next, as in [5], we consider a small portion of the vineyard, about 10 m × 10 m (the size of one Sentinel-2 pixel), and compute NDVIuav in false color (Fig. 5a), NDVIuav split into the two classes canopy and inter-row (Fig. 5b), the NDVIvin value computed over the set Pvin (Fig. 5c) and NDVIint computed over the set Pint (Fig. 5d).

Fig. 5. Study area with a 10 m × 10 m vineyard selection: (a) enhancement of subset P(8, 20) of UAV map D2 in false colors (Green, Red and NIR); (b) pixels d(u, v) ⊂ P(8, 20) classified into two classes: Pvin (green), vine canopies, and Pint (brown), inter-row ground; (c) NDVI values of Pvin, vine canopies; (d) inter-row ground Pint.
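The paper does not state how the pixels of P(i,j) were split into Pvin and Pint. One common option, swapped in here as an assumption rather than the authors' method, is Otsu's method: choose the NDVI threshold that maximizes the between-class variance, then label pixels above it as canopy. The sketch works on a flat list of NDVI values, and the toy values are hypothetical.

```python
from typing import List, Sequence


def otsu_threshold(values: Sequence[float]) -> float:
    """Otsu's method on 1-D data: the split maximizing w0 * w1 * (mu0 - mu1) ** 2."""
    xs = sorted(values)
    n = len(xs)
    if n == 0:
        raise ValueError("need at least one value")
    total = sum(xs)
    best_t, best_var = xs[0], -1.0
    left_sum = 0.0
    for k in range(1, n):  # left class xs[:k], right class xs[k:]
        left_sum += xs[k - 1]
        if xs[k] == xs[k - 1]:
            continue  # no threshold separates equal values
        w0, w1 = k / n, (n - k) / n
        mu0, mu1 = left_sum / k, (total - left_sum) / (n - k)
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var = var_between
            best_t = (xs[k - 1] + xs[k]) / 2  # midway between the two classes
    return best_t


def classify_canopy(values: Sequence[float]) -> List[str]:
    """Label each UAV pixel 'vin' (canopy) or 'int' (inter-row) with an Otsu threshold."""
    t = otsu_threshold(values)
    return ["vin" if x > t else "int" for x in values]


pixels = [0.78, 0.12, 0.80, 0.15, 0.82, 0.10]  # hypothetical NDVI values
print(round(otsu_threshold(pixels), 3))  # 0.465
print(classify_canopy(pixels))  # ['vin', 'int', 'vin', 'int', 'vin', 'int']
```

With grassy inter-rows the two NDVI populations overlap more, and a purely spectral threshold may need to be complemented by geometric row detection such as that described in [6].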

3 Results

With the NDVI values from both UAV and satellite available, we correlated the satellite data with the NDVIuav, NDVIvin and NDVIint categories to assess whether the two methodologies give comparable responses [43]. To evaluate how effectively satellite and UAV images depict the variability of vigor across the vineyard, we calculated four NDVI maps for each acquisition campaign:

- an NDVIsat map from satellite images;
- (i) an NDVIuav full map (spectral values from all the pixels, showing both inter-row ground and vine canopies);
- (ii) an NDVIvin map of the vine canopies;
- (iii) an NDVIint map of the paths between rows.

For comparison with the satellite images (10 m GSD), the high-resolution UAV images were downsampled as described above.

The agreement between each pair of spatiotemporal maps was examined with statistical comparison techniques, in particular Pearson's correlation coefficient as a measure of map similarity, after a normalization procedure that highlights the differences between the two maps of each pair.
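Pearson's r measures the linear agreement between two co-registered maps flattened into vectors of pixel values. The paper does not detail its normalization step; the sketch assumes a z-score normalization and illustrates a useful property: r is unchanged by any positive linear rescaling of either map, so such a normalization does not alter the coefficient itself. The map values are hypothetical.

```python
from math import sqrt
from statistics import mean, pstdev
from typing import List, Sequence


def zscore(values: Sequence[float]) -> List[float]:
    """Normalize a map to zero mean and unit (population) standard deviation."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long vectors."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


sat_map = [0.42, 0.47, 0.51, 0.39, 0.45]  # hypothetical NDVIsat pixels
int_map = [0.20, 0.26, 0.30, 0.17, 0.22]  # hypothetical NDVIint values, same pixels
r_raw = pearson_r(sat_map, int_map)
r_norm = pearson_r(zscore(sat_map), zscore(int_map))
print(round(r_raw, 3), round(r_norm, 3))  # both print the same coefficient
```

A map with constant values has zero standard deviation, so both functions would divide by zero; such pairs should be excluded before correlating.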

In a preliminary investigation we examined the robustness of the dataset by comparing the NDVIsat map with the NDVIuav full map for the two areas and the four acquisition campaigns. As an example, Fig. 6a–c shows the correlation for the image pair D1/S1, detailed for “Area A” and “Area B”, where the NDVIsat map values (x axis) are correlated with:

- (a) the NDVIuav full map (y axis);
- (b) the NDVI values of the NDVIvin map (y axis);
- (c) the remaining NDVI values of the NDVIint map (y axis).

The values of the Pearson correlation coefficients obtained, referred to as RSat/UAV, confirmed the consistency between the information obtained by the two platforms.

Once the coherence of the dataset was verified, the information provided by the two types of NDVI map on the vegetative condition of the vineyard was tested by comparing the NDVIsat and NDVIuav maps with a three-class field assessment of vigor performed by experienced operators (Fig. 6). Statistical techniques based on analysis of variance did not show a significant difference between vigor groups.
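One-way analysis of variance compares the NDVI values of pixels grouped by the experts' three vigor classes: a large F statistic (between-class variance large relative to within-class variance) indicates that a map separates the classes. Below is a minimal standard-library sketch of the F statistic with hypothetical class values; for the p-value, `scipy.stats.f_oneway` returns both the statistic and the p-value.

```python
from statistics import mean
from typing import Sequence, Tuple


def one_way_anova_f(*groups: Sequence[float]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    values = [v for g in groups for v in g]
    grand_mean = mean(values)
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    df_between, df_within = len(groups) - 1, len(values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


# Hypothetical NDVIvin values of pixels in the low, medium and high vigor classes.
low, medium, high = [0.55, 0.58, 0.52], [0.63, 0.66, 0.61], [0.72, 0.75, 0.70]
f_stat, df_b, df_w = one_way_anova_f(low, medium, high)
print(round(f_stat, 1), df_b, df_w)
```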

Imagery with a resolution of about 30 cm, such as WorldView-3, although not comparable to drone data in resolution, would still allow a better definition of the vigor of vines and, more generally, of row crops.

WorldView-3 offers a 31 cm ground sampling distance (GSD) in the panchromatic band and 124 cm in its 8 multispectral bands. With WorldView-3 imagery, a much more accurate vigor analysis could be carried out by proceeding as we did here with the UAV data. Since the inter-row areas become discriminable at this resolution (whereas in decametric satellite data they distort the perceived vigor of the vines), the results could also be verified with Object Based Image Analysis (OBIA): first segmenting canopies and inter-row areas, then classifying vigor separately from the NDVI of the objects extracted with OBIA. Extracting objects directly from satellite imagery is one of the strengths of OBIA, which is used in a wide range of applications [27].
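A rule-of-thumb check on why the inter-rows become discriminable only at finer resolution: the rows form a periodic pattern with a 2 m period (about 1 m of canopy and 1 m of inter-row), and by the sampling theorem a pixel spacing below half the period (1 m) is needed to resolve it. The sketch applies this criterion to the GSDs mentioned in the text; it ignores the sensor point-spread function, so it is a necessary rather than a sufficient condition.

```python
ROW_PERIOD_M = 2.0    # row spacing: about 1 m of canopy plus 1 m of inter-row
CANOPY_WIDTH_M = 1.0


def rows_resolvable(gsd_m: float, period_m: float = ROW_PERIOD_M) -> bool:
    """Nyquist criterion: the sampling interval must be below half the pattern period."""
    return gsd_m < period_m / 2


SENSORS = {
    "Sentinel-2 (10 m bands)": 10.0,
    "WorldView-3 multispectral": 1.24,
    "WorldView-3 panchromatic": 0.31,
    "UAV (about 30 m flight)": 0.05,
}

for name, gsd in SENSORS.items():
    print(f"{name:27s} {CANOPY_WIDTH_M / gsd:6.1f} px per canopy width, "
          f"resolvable rows: {rows_resolvable(gsd)}")
```

Under this criterion only the panchromatic (or pan-sharpened) WorldView-3 product and the UAV data resolve the rows, consistent with the reference to a resolution of about 30 cm.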

As a result of these analyses we developed a GIS (Fig. 7) for monitoring and managing agricultural land with remote sensing, using as input UAV images and Very High Resolution (VHR) satellite imagery classified with OBIA. The GIS is useful for agriculture in general, not only for vineyard management, and takes into account land geomorphology, climatic conditions (wind, rain, etc.) and soil moisture conditions for the crops. The system can issue alerts when interventions are needed because of crop water stress.
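The paper does not describe the alert logic of the GIS. Purely as a hypothetical illustration, a rule could combine the change in canopy NDVI (NDVIvin) between campaigns with a soil-moisture layer; the thresholds, field names and input values below are invented for the example and would need agronomic calibration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ParcelStatus:
    parcel_id: str
    ndvi_vin: float                     # current canopy NDVI
    ndvi_vin_previous: Optional[float]  # previous campaign, None if unavailable
    soil_moisture: float                # hypothetical volumetric soil-moisture layer


def water_stress_alert(s: ParcelStatus, max_ndvi_drop: float = 0.10,
                       min_soil_moisture: float = 0.15) -> bool:
    """Hypothetical rule: alert when canopy NDVI fell sharply and the soil is dry."""
    if s.ndvi_vin_previous is None:
        return False
    dropped = s.ndvi_vin_previous - s.ndvi_vin > max_ndvi_drop
    return dropped and s.soil_moisture < min_soil_moisture


def alerts(parcels: List[ParcelStatus]) -> List[str]:
    """IDs of the parcels that currently need an intervention."""
    return [p.parcel_id for p in parcels if water_stress_alert(p)]


parcels = [
    ParcelStatus("Area-A", 0.58, 0.72, 0.11),  # hypothetical values
    ParcelStatus("Area-B", 0.66, 0.70, 0.20),
]
print(alerts(parcels))  # ['Area-A']
```

Requiring both conditions keeps a drop in NDVI caused by other factors (for example pruning or disease) from being reported as water stress when the soil is still moist.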

Our analysis of satellite imagery at decametric resolution revealed limitations in describing the condition of vineyards: the signal can be altered by the inter-row soil, which, as in all row crops, can bias the assessment. Indeed, we found a strong correspondence between the NDVIsat map and the NDVIint map obtained from UAV pixels depicting only the inter-rows (Pearson's correlation coefficients above 0.6 for more than 65% of the map pairs and never below 0.52). Conversely, we found a weak relationship between the NDVIsat map and the NDVIvin map, which considers only the UAV pixels of the vine canopies: more than 87% of the Pearson RSat/vin coefficients were less than or equal to 0.42 (Table 4).

This analysis shows that, for crops in which paths and inter-row surfaces cover a significant part of the cultivated land, such as vineyards, the information provided by satellite platforms with decameter spatial resolution is not sufficient to correctly assess crop condition and variability. Depending on the management choices, the inter-row area may be bare soil or covered by grass or by other crops used for integrated pest control. Vine vigor may therefore not match that of the inter-row areas, which biases vigor assessments from decameter-resolution images. We verified the efficacy of the NDVIvin and NDVIsat maps in identifying plant vigor against the expert field assessment, using analysis of variance. The results show that, where inter-row ground is present, decametric satellite data do not allow the state and variability of the crop to be assessed correctly, whereas UAV-borne sensors make this assessment possible and accurate [34, 35]. This additional verification confirmed the main result of the analysis: when inter-row areas cover a significant portion of the cultivated land, as in vineyards, satellite radiometric information may fail to capture crop condition and variability, and high-resolution images are needed to evaluate variation within and between vines.

4 Conclusions

Our article presents an in-depth comparison of multispectral vineyard imagery acquired from decameter-resolution satellite platforms such as Sentinel-2 [36] and from ultra-high-resolution, low-altitude UAV platforms. We evaluated the effectiveness of both image sources in describing vineyard vigor through the NDVI. A farm in Bova Superiore (Calabria, Southern Italy) was selected as the experimental site for four imaging campaigns scheduled according to the main phenological stages of the grapevine.

The outcomes show that in vineyards the data captured by satellite systems at decametric resolution are not adequate to correctly assess crop condition and variability. With Sentinel-2 images, vine vigor may differ from that of the inter-row zones, which leads to erroneous assessments of vineyard vigor. This was demonstrated by analyzing the contribution of the different components of the cultivated land through three distinct NDVI indices computed from the high-resolution UAV images, considering: (i) the entire cropland area; (ii) only the vine canopy; and (iii) only the soil pixels between the rows. The satellite-based NDVI maps were better correlated with the UAV NDVI maps computed only on inter-row surfaces, whereas the UAV NDVI computed only on vine-canopy pixels better describes vineyard vigor. The proposed approach can be extended to other crops grown with substantial inter-row spaces.

The GIS developed for monitoring and managing agricultural land with remote sensing, based on UAV images and VHR satellite imagery classified with OBIA, is very useful for agricultural management and can also issue alerts in case of crop stress.

References

[1] Arnó, J., Martínez-Casasnovas, J.A., Ribes-Dasi, M., Rosell, J.R.: Review, precision viticulture, research topics, challenges and opportunities in site-specific vineyard management. Span. J. Agric. Res. 7, 779–790 (2009)

[2] Silvestroni, O., Lanari, V., Lattanzi, T.: Canopy management strategies to control yield and grape composition of Montepulciano grapevines. Aust. J. Grape Wine Res. 25, 30–42 (2018)

[3] Bramley, R.G.V., Hamilton, R.P.: Understanding variability in winegrape production systems. Aust. J. Grape Wine Res. 10, 32–45 (2004)

[4] Song, J., et al.: Pinot Noir wine composition from different vine vigour zones classified by remote imaging technology. Food Chem. 153, 52–59 (2014)

[5] Khaliq, A., Comba, L., Biglia, A., Ricauda Aimonino, D., Chiaberge, M., Gay, P.: Comparison of satellite and UAV-based multispectral imagery for vineyard variability assessment. Remote Sens. 11, 436 (2019)

[6] Primicerio, J., Gay, P., Ricauda Aimonino, D., Comba, L., Matese, A., Di Gennaro, S.F.: NDVI-based vigour maps production using automatic detection of vine rows in ultra-high resolution aerial images. In: Proceedings of the 10th European Conference on Precision Agriculture, Israel, pp. 465–470 (2015)

[7] Hall, A., Lamb, D.W., Holzapfel, B., Louis, J.: Optical remote sensing applications in viticulture - a review. Aust. J. Grape Wine Res. 8, 36–47 (2002)

[8] Lanjeri, S., Melia, J., Segarra, D.: A multi-temporal masking classification method for vineyard monitoring in central Spain. Int. J. Remote Sens. 22, 3167–3186 (2001)

[9] Bramley, R., Proffitt, A.P.B.: Managing variability in viticultural production. Grapegrow. Winemak. 427, 11–16 (1999)

[10] Enenkel, M., et al.: What rainfall does not tell us—enhancing financial instruments with satellite-derived soil moisture and evaporative stress. Remote Sens. 10, 1819 (2018)

[11] Romero, M., Luo, Y., Su, B., Fuentes, S.: Vineyard water status estimation using multispectral imagery from an UAV platform and machine learning algorithms for irrigation scheduling management. Comput. Electron. Agric. 147, 109–117 (2018)

[12] Comba, L., Biglia, A., Ricauda Aimonino, D., Gay, P.: Unsupervised detection of vineyards by 3D point-cloud UAV photogrammetry for precision agriculture. Comput. Electron. Agric. 155, 84–95 (2018)

[13] Dobrowski, S.Z., Ustin, S.L., Wolpert, J.A.: Remote estimation of vine canopy density in vertically shoot-positioned vineyards: determining optimal vegetation indices. Aust. J. Grape Wine Res. 8, 117–125 (2002)

[14] Sun, L., et al.: Daily mapping of 30 m LAI and NDVI for grape yield prediction in California vineyards. Remote Sens. 9, 317 (2017)

[15] Johnson, L.F.: Temporal stability of an NDVI-LAI relationship in a Napa Valley vineyard. Aust. J. Grape Wine Res. 9, 96–101 (2003)

[16] Johnson, L.F., Bosch, D.F., Williams, D.C., Lobitz, B.M.: Remote sensing of vineyard management zones: Implications for wine quality. Appl. Eng. Agric. 17, 557–560 (2001)

[17] Barrile, V., Candela, G., Fotia, A., Bernardo, E.: UAV survey of bridges and viaduct: workflow and application. In: Misra, S., et al. (eds.) ICCSA 2019. LNCS, vol. 11622, pp. 269–284. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-24305-0_21

[18] Barrile, V., Fotia, A., Bernardo, E.: The submerged heritage: a virtual journey in our seabed. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 42(2/W10), 17–24 (2019)

[19] Robinson, N.P., et al.: A dynamic landsat derived normalized difference vegetation index (NDVI) product for the conterminous United States. Remote Sens. 9, 863 (2017)

[20] Barrile, V., Bilotta, G.: An application of remote sensing: object oriented analysis of satellite data. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 37, 107–114 (2008)

[21] Johnson, L.F., Roczen, D.E., Youkhana, S.K., Nemani, R.R., Bosch, D.F.: Mapping vineyard leaf area with multispectral satellite imagery. Comput. Electron. Agric. 38, 33–44 (2003)

[22] Sentinel-2A Processing Baseline (02.04). https://sentinel.esa.int/web/sentinel/missions/sentinel-2/news/-/article/new-processing-baseline-02-04-for-sentinel-2a-products, Accessed 11 Jan 2020

[23] Borgogno-Mondino, E., Lessio, A., Tarricone, L., Novello, V., de Palma, L.: A comparison between multispectral aerial and satellite imagery in precision viticulture. Prec. Agric. 19(2), 195–217 (2017). https://doi.org/10.1007/s11119-017-9510-0

[24] Senthilnath, J., Kandukuri, M., Dokania, A., Ramesh, K.N.: Application of UAV imaging platform for vegetation analysis based on spectral-spatial methods. Comput. Electron. Agric. 140, 8–24 (2017)

[25] Peña, J.M., Torres-Sánchez, J., de Castro, A.I., Kelly, M., López-Granados, F.: Weed mapping in early-season maize fields using object-based analysis of unmanned aerial vehicle (UAV) images. PLoS ONE 8, e77151 (2013)

[26] Comba, L., Gay, P., Primicerio, J., Ricauda Aimonino, D.: Vineyard detection from unmanned aerial systems images. Comput. Electron. Agric. 114, 78–87 (2015)

[27] Barrile, V., Bilotta, G., Fotia, A., Bernardo, E.: Road extraction for emergencies from satellite imagery. In: Gervasi, O., et al. (eds.) ICCSA 2020. LNCS, vol. 12252, pp. 767–781. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58811-3_55

[28] Barrile, V., Bilotta, G., Fotia, A., Bernardo, E.: Integrated GIS system for post-fire hazard assessments with remote sensing. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. XLIV, 13–20 (2020)

[29] Deng, L., Mao, Z., Li, X., Zhuowei, H., Duan, F., Yan, Y.: UAV-based multispectral remote sensing for precision agriculture: a comparison between different cameras. ISPRS J. Photogramm. Remote Sens. 146, 124–136 (2018). https://doi.org/10.1016/j.isprsjprs.2018.09.008

[30] Navia, J., Mondragon, I., Patino, D., Colorado, J.: Multispectral mapping in agriculture: Terrain mosaic using an autonomous quadcopter UAV. In: 2016 International Conference on Unmanned Aircraft Systems (ICUAS), pp. 1351–1358 (2018)

[31] Hall, A., Louis, J., Lamb, D.W.: A method for extracting detailed information from high resolution multispectral images of vineyards. CiteSeerx 10M (2001)

[32] Barrile, V., Bernardo, E., Candela, G., Bilotta, G., Modafferi, A., Fotia, A.: Road infrastructure heritage: from scan to infrabim. WSEAS Trans. Environ. Dev. 16, 633–642 (2020). https://doi.org/10.37394/232015.2020.16.65

[33] Reza, M.N., Na, I.S., Baek, S.W., Lee, K.-H.: Rice yield estimation based on K-means clustering with graph-cut segmentation using low-altitude UAV images. Biosyst. Eng. 177, 109–121 (2018)

[34] Albetis, J., et al.: Detection of Flavescence dorée grapevine disease using unmanned aerial vehicle (UAV) multispectral imagery. Remote Sens. 9, 308 (2017)

[35] Barrile, V., Fotia, A., Candela, G., Bernardo, E.: Integration of 3D model from UAV survey in BIM environment. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 42(2/W11), 195–199 (2019)

[36] ESA Earth Online. https://earth.esa.int/documents/247904/685211/Sentinel-2_User_Handbook, Accessed 25 Nov 2019

[37] Ducati, J.R., Bombassaro, M.G., Fachel, J.M.G.: Classifying vineyards from satellite images: a case study on burgundy’s côte d’or. Oeno One 48, 247–260 (2014)

[38] Copernicus Open Access Hub. https://scihub.copernicus.eu/dhus/#/home, Accessed 11 Jan 2020

[39] Louis, J., Charantonis, A., Berthelot, B.: Cloud detection for sentinel-2. In: Proceedings of the ESA Living Planet Symposium, Bergen, Norway (2010)

[40] Agisoft©. https://www.agisoft.com, Accessed 11 Jan 2020

[41] Parrot Drones©. https://www.parrot.com/business-solutions-us/agriculture#agriculture, Accessed 11 Jan 2020

[42] MicaSense. https://www.micasense.com/accessories/#!/Calibrated-Reflectance-Panel, Accessed 11 Jan 2020

[43] Jiang, R., et al.: Assessing the operation parameters of a low-altitude UAV for the collection of NDVI values over a paddy rice field. Remote Sens. 12, 1850 (2020)

[44] DatiMeteo. https://datimeteo.it, Accessed 11 Aug 2020


Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Bilotta, G., Bernardo, E. (2022). UAV for Precision Agriculture in Vineyards: A Case Study in Calabria. In: Borgogno-Mondino, E., Zamperlin, P. (eds) Geomatics and Geospatial Technologies. ASITA 2021. Communications in Computer and Information Science, vol 1507. Springer, Cham. https://doi.org/10.1007/978-3-030-94426-1_3


DOI: https://doi.org/10.1007/978-3-030-94426-1_3


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-94425-4

Online ISBN: 978-3-030-94426-1

eBook Packages: Computer Science, Computer Science (R0)