Abstract

In this paper, we consider an infinite-service resource queueing system \(M/GI/\infty \) operating in a random environment. When the environment changes its state, the service time and the occupied resource do not change for the customers already under service, however, for the new customers the arrival rate, the service time distribution, and resource requirements are changed. We apply the dynamic screening method and perform asymptotic analysis to find the approximation of the probability of the total amount of occupied resource.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Network slicing in 5G can be defined as a network configuration that allows multiple networks (virtualized and independent) to be created on top of a common physical infrastructure. This configuration has become an essential component of the overall 5G architectural landscape [17]. Each “slice” or portion of the network can be allocated based on the specific needs of the application, use case or customer.

Network slicing involves dividing the physical architecture of 5G into multiple virtual networks or layers. Each network layer includes control plane functions, user traffic plane functions, and a radio access network. Each layer has its own characteristics and is aimed at solving a particular business problem. 3GPP defines three standard network layers [1]:

-

super-broadband access (eMBB, Enhanced Mobile Broadband) – users of the global Internet, CCTV cameras;

-

ultra-reliability and low latency (URLLC, Ultra Reliable Low Latency Communication) – driverless transport, augmented and virtual reality;

-

low enegry and low latency (IoT, Internet of Things) – millions of devices transmitting small amounts of data from time to time.

Figure 1 shows an example of a network slicing by traffic (service) types. In this paper, it is proposed to consider a resource queueing system operating in a random environment as a mathematical model of such a technology, assuming that service requests occur when the random environment is in the appropriate state. We analyze the system in the limiting condition of extremely frequently changing states of the environment, thus, this will insignificantly affect the main probabilistic and numerical characteristics. We assume that requests for the services form a Poisson process with constant intensity, depending on the type of service (i.e. the state of the random environment). The request service duration and the amount of the provided resource also depend on the type of service and do not change for requests that are in service when the random environment changes its state. We present a detailed description of the model in the Sect. 2.

There are many papers where queues in a random environment were studied. For example, [13] considers queueing system M/M/C, where the arrivals and service rates are modulated by a random environment for which the underlying process C(t) is an irreducible continuous-time Markov chain. B. D’Auria [6] investigated an \(M/M\infty \) queue whose parameters depend on an external random environment that is specified by a semi-Markovian process. Boxma and Kurkova [2] studied an M/M/1 queue with the special feature that the speed of the server alternates between two constant values. There are a lot more papers [3, 4, 7,8,9,10, 12], where authors consider queueing systems operating in a random environment.

Resource queueing systems have been analyzed extensively in recent years. For example, in [15], a model of a multi-server queueing system with losses caused by lack of resources necessary to service claims was considered. In [18], it was investigated a heterogeneous wireless network model in terms of a queueing system with limited resources and signals that trigger the resource reallocation process. In [19] Tikhonenko studied a queueing system with processor sharing and limited resources.

In [16], it was considered an \(M/GI/\infty \) queueing system in a random environment. The dynamic screening method and asymptotic analysis were applied as well as we do in this paper. In [5], a mathematical model of an insurance company in the form of the infinite-server queueing system operating in a random environment was studied using the asymptotic analysis method. Paper [11] considers a non-Markovian infinite-server multi-resource queuing system. The result was found under the asymptotic condition of the growing intensity of the arrival process. All these papers consider an infinite-server queue in a random environment or with requirements for resources. Unlike them, we consider the \(M/GI/\infty \) queueing model with both a random environment and requirements for resources in this paper.

The paper is organized as follows. In Sect. 2, the mathematical model is described and the goal of the study is formulated. In Sect. 3, the dynamic screening method is explained, moreover, balance equations are obtained and written using characteristic functions. The asymptotic analysis and final equations are derived in Sect. 4. Section 5 presents a numerical example and conclusions on the accuracy of the obtained approximation.

2 Mathematical Model

Consider a queueing system with an unlimited number of servers and an unlimited capacity of some resource that operates in a random environment (Fig. 2), such the functioning of the system depends on the environment state. The random environment is specified by a continuous-time Markov chain with a finite number of states \(k\in \{1,\dots ,K\}\) and generator \(\mathbf {Q}=\{q_{k\nu }\},\,k,\nu =1,\dots ,K\). When the process is in state k, the rate of the Poisson arrival process is equal to \(\lambda _k\) and the service time has distribution with CDF \(B_k(x)\). We compose the arrivals rates into diagonal matrix \(\mathbf {\Lambda }=\text {diag}\{\lambda _k\},\,k=1,\dots ,K\). In addition, each arrival occupies a resource of a random size \(v_k>0\) with the CDF \(G_k(y)={\text {P}}\{v_k<y\}\) which depends on the environment state.

When the environment state changes, the resource amounts and the service rates change only for new customers, as for customers already under service, these values stay the same. When a customer completes servicing, it leaves the system and releases the resource that it occupied during the capture. Capture is understood as the moment when the customer arrives, at which the resource is allocated.

A stochastic process \(\left\{ k(t),i(t),v(t)\right\} \) describes the system’s state at time t as follows:

-

the environment state at time t is denoted by \(k(t)\in \{1,\dots ,K\}\),

-

the number of customers in the system at time t is denoted by \(i(t)\in \{0,1,2,\dots \}\),

-

the total amount of occupied resource at time t is denoted by \(v(t) \ge 0\).

Our goal is to find the steady-state probability distribution of the total amount of the occupied resources v(t).

3 Dynamic Screening Method

We consider an infinite-server queue with non-exponential service times. This is the reason why we can not apply some classical methods directly here (for example, the method of supplementary variables). Otherwise, we should deal with the number of variables and equations that are unlimited and changing. To avoid the problem, we apply the dynamic screening method [14] whose modification for the resource queue is described below.

3.1 Method Description

Let at moment \(t_0\) the system is empty. We fix a moment \(T>{{t}_{0}}\) in the future. Let us draw two time axes (Fig. 3). The moments of customers arrivals are marked on the axis 0. We mark on axis 1 only those arrivals that before the moment T have not finished their service. We name the arrivals on axis 1 as “screened”, and the entire point process on axis 1 is named as the “screened process”.

Let us define function \({{S}_{k}}(t)\) that determines the dynamic screening probability on axis 1 as follows:

The customer arrived at the system at the moment \(t<T\) does not finish service before moment T and occupies a certain amount of the resource with probability \({{S}_{k}}(t)\). On the other hand, the customer leaves the system and releases the resource occupied at the arriving with the probability \(1-{{S}_{k}}(t)\), hence it is not considered in the “screened” process. The values of \({{S}_{k}}(t)\) belong to the segment [0, 1]. In Fig. 3, colored areas depict different states of the random environment.

Let us denote the number of screened arrivals, which occurred during time \([{{t}_{0}},t)\) by n(t), and the total amount of resource occupied by the screened customers by w(t).

Basing on the results obtained in [14] and [11], it is not hard to show that

for any non-negative values of m and x. So, we can first study the stochastic process \(\{k(t),n(t),w(t)\}\) instead of process \(\{k(t),i(t),v(t)\}\). After that, we can substitute \(t=T\) into the final expressions and obtain the goal due to the moment T is chosen arbitrarily.

3.2 Balance Equations

We denote the probabilities

For \(P_k(n,w,t)\), we write the following system according to the total probability law and dynamic screening method

We obtain the system of Kolmogorov integro-differential equations

with the initial condition

where \({{r}_{k}}\) is the element of row vector \(\mathbf {r}\), which satisfies the system of equations:

and \(\mathbf {e}\) is a column vector that consists of ones.

3.3 Characteristic Functions

Let us define the partial characteristic functions as

where \(j=\sqrt{-1}\), and we rewrite the system (2)–(3) for functions \(h_k(u,v,t)\)

with the initial condition

where

Then, we rewrite the obtained system as matrix equation

with the initial condition

where

and \(\mathbf {I}\) is identity matrix.

4 Asymptotic Analysis Method

Problem (4)–(5) seems as it can not be solved analytically in a direct way, so, we apply the method of asymptotic analysis to solve it.

4.1 Method Description

Asymptotic analysis method for queueing systems consists of analysis of the equations defining any characteristics of the system and allows to obtain probability distribution and numerical characteristics in the analytical form under some asymptotic condition. We will use the asymptotic analysis method under the condition of growing arrivals rate and extremely frequent changes in the environment state to solve the Eq. (4)–(5). We set

Then (4) can be rewrite as

4.2 First Order Asymptotic

and then it can be rewritten as

In (8) we set \(\varepsilon \rightarrow 0 \) and denote

this yields

it then follows that \(\mathbf {f_1}(x,y,t)\) can be represented as a product

where \({\varPhi }_{1}(x,y,t)\) is a scalar function that satisfies the initial condition \({\varPhi }_{1}(x,y,t_0)=1\).

We multiply (8) by \(\mathbf {e}\), divide by \(\varepsilon \), set \(\varepsilon \rightarrow 0\) and substitute (9):

where

The solution of Eq. (10) is given as

Denoting

and making substitution inverse to (9) and (7), we obtain the first order approximation

4.3 Second Order Asymptotic

In (4), we make a substitution

we obtain

We substitute

into (13), and we obtain

As \(\varepsilon \rightarrow 0\), denoting

we obtain

This yields

where \(\varPhi _2\left( x,y,t\right) \) is a scalar function that satisfies the initial condition \({\varPhi }_{2}(x,y,t_0)=1\). We represent \(\mathbf {f}_{2}\left( x,y,t\right) \) as the expansion

where \(\mathbf {g}\left( t\right) \) is a row vector.

We substitute the first degree Maclaurin expansion of \(e^{z}\) into Eq. (15)

Then we substitute (17) into the obtained equation

We divide both sides by \(\varepsilon \) and set \(\varepsilon \rightarrow 0\)

This yields

Hence, vector \(\mathbf {g}\left( t\right) \) is defined by the inhomogeneous linear system. The solution \(\mathbf {g}\left( t\right) \) of the system (18) we can write as

where c(t) is an arbitrary scalar function and the row vector \(\mathbf {d}\left( t\right) \) is any specific solution to system (18) satisfying a certain condition, for example:

The solution has the form

where

Finally, the solution of (18) has the form

Let us now derive the explicit expression for the function \(\varPhi _2\left( x,y,t\right) \). To do this, we approximate the exponential function in (15) with the second degree Maclaurin expansion and make substitution (17). This yields the equation

where

We then multiply both parts of the obtained equation by vector \(\mathbf {e}\). Due to (18), we can write

Denoting

we obtain the solution

Making substitution inverse to (16), (14) and (12), we obtain the second order approximation

4.4 Main Result

Multiplying by vector \(\mathbf {e}\), we obtain the approximation of the characteristic function of stochastic process \(\{n(t),w(t)\}\)

and going to steady-state regime, we put \(t=T\) and \(t_0\rightarrow -\infty \), using (1), we obtain the approximation for the characteristic function of process \(\{i(t),v(t)\}\)

where

Here notation \(\mathbf {X}\circ \mathbf {Y}\) means Hadamard (element-wise) product of matrices \(\mathbf {X}\) and \(\mathbf {Y}\).

Finally, the steady-state probability distribution of two-dimensional stochastic process \(\{i(t),v(t)\}\) can be approximated by the Gaussian distribution with means vector

and covariance matrix

In particular, the stationary characteristic function of the distribution of the total amount of occupied resource v(t) can be approximated as follows:

So, this distribution can be approximated by the Gaussian one with mean \(Nm_2\) and variance \(NK_{22}\).

5 Numerical Example

Consider the following example. Let the random environment have three states \(\{1,2,3\}\) and be defined by the generator

Let we have Poission arrivals with intensities

Let service times have gamma distribution with the following shape and rate parameters:

and resource requirements, also, have gamma distribution with parameters

Here indices mean the state of the random environment when the customer arrives.

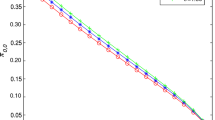

Let us estimate the accuracy of obtained approximation (19) and find a lower bound of parameter N for the applicability of the proposed approximation. To do this, we carried out series of simulation experiments (in each of them \(10^{7}\) arrivals were generated) for increasing values of N and compared the asymptotic distributions with the empiric ones by using the Kolmogorov distance \(\varDelta = \sup \limits _x |F(x)-\tilde{F}(x)|\) as an accuracy measure. Here F(x) is the cumulative distribution function (CDF) built on the basis of simulation results, and \(\tilde{F}(x)\) is the CDF based on Gaussian approximation (19).

Table 1 presents the Kolmogorov distances between the asymptotic and empirical distribution functions of the total amount of resources occupied in the system. We see that the approximation accuracy increases with growing of parameter N, which is also illustrated by Fig. 4.

If we suppose that the error \(\varDelta \le 0.05\) is acceptable, we may conclude that the Gaussian approximation can be applicable for the cases \(N>15\).

6 Conclusion

In the paper, we have studied a resource queueing system \(M/GI/\infty \) operating in a random environment. We have considered the case when the service time and the occupied resource do not change for the customers already under service when the random environment state changes. We have applied the dynamic screening method and the asymptotic analysis method to find the approximation of the probability of the total amount of occupied resource. It has been obtained that this distribution can be approximated by Gaussian distribution under the condition of growing arrival rate and extremely frequent changes of the states of the random environment. The parameters of the corresponding Gaussian distribution have been obtained.

References

3GPP: Study on Enhancement of Network Slicing (Release 16). 3GPP TS 23.740 V16.0.0 (December 2018)

Boxma, O.J., Kurkova, I.A.: The M/M/1 queue in a heavy-tailed random environment. Stat. Neerl. 54(2), 221–236 (2000). https://doi.org/10.1111/1467-9574.00138

Choudhury, G.L., Mandelbaum, A., Reiman, M.I., Whitt, W.: Fluid and diffusion limits for queues in slowly changing environments. Commun. Stat. Stoch. Models 13(1), 121–146 (1997). https://doi.org/10.1080/15326349708807417

Cordeiro, J.D., Kharoufeh, J.P.: The unreliable M/M/1 retrial queue in a random environment. Stoch. Model. 28(1), 29–48 (2012). https://doi.org/10.1080/15326349.2011.614478

Dammer, D.: Research of mathematical model of insurance company in the form of queueing system in a random environment. In: Dudin, A., Nazarov, A., Kirpichnikov, A. (eds.) ITMM 2017. CCIS, vol. 800, pp. 204–214. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-68069-9_17

D’Auria, B.: \(M/M/\infty \) queues in semi-Markovian random environment. Queueing Syst. 58(3), 221–237 (2008). https://doi.org/10.1007/s11134-008-9068-7

D’Auria, B.: Stochastic decomposition of the queue in a random environment. Oper. Res. Lett. 35(6), 805–812 (2007). https://doi.org/10.1016/j.orl.2007.02.007

Jiang, T., Liu, L., Li, J.: Analysis of the M/G/1 queue in multi-phase random environment with disasters. J. Math. Anal. Appl. 430(2), 857–873 (2015). https://doi.org/10.1016/j.jmaa.2015.05.028

Krenzler, R., Daduna, H.: Loss systems in a random environment: steady state analysis. Queueing Syst. 80(1-2), 127–153 (2014). https://doi.org/10.1007/s11134-014-9426-6

Krishnamoorthy, A., Jaya, S., Lakshmy, B.: Queues with interruption in random environment. Ann. Oper. Res. 233(1), 201–219 (2015). https://doi.org/10.1007/s10479-015-1931-4

Lisovskaya, E.Y., Moiseev, A.N., Moiseeva, S.P., Pagano, M.: Modeling of mathematical processing of physics experimental data in the form of a non-Markovian multi-resource queuing system. Russ. Phys. J. 61(12), 2188–2196 (2019). https://doi.org/10.1007/s11182-019-01655-6

Liu, Y., Honnappa, H., Tindel, S., Yip, N.K.: Infinite server queues in a random fast oscillatory environment. Queueing Syst. 98(1-2), 145–179 (2021). https://doi.org/10.1007/s11134-021-09704-z

Liu, Z., Yu, S.: The M/M/C queueing system in a random environment. J. Math. Anal. Appl. 436(1), 556–567 (2016). https://doi.org/10.1016/j.jmaa.2015.11.074

Moiseev, A., Nazarov, A.: Queueing network \(MAP-(GI/\infty )^K\) with high-rate arrivals. Eur. J. Oper. Res. 254(1), 161–168 (2016). https://doi.org/10.1016/j.ejor.2016.04.011

Naumov, V.A., Samuilov, K.E., Samuilov, A.K.: On the total amount of resources occupied by serviced customers. Autom. Remote. Control. 77(8), 1419–1427 (2016). https://doi.org/10.1134/s0005117916080087

Nazarov, A., Baymeeva, G.: The M/G/\(\infty \) queue in random environment. In: Dudin, A., Nazarov, A., Yakupov, R., Gortsev, A. (eds.) ITMM 2014. CCIS, vol. 487, pp. 312–324. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-13671-4_36

Samdanis, K., Costa-Perez, X., Sciancalepore, V.: From network sharing to multi-tenancy: the 5g network slice broker. IEEE Commun. Mag. 54(7), 32–39 (2016). https://doi.org/10.1109/MCOM.2016.7514161

Sopin, E., Vikhrova, O., Samouylov, K.: LTE network model with signals and random resource requirements. In: 2017 9th International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT) (2017). https://doi.org/10.1109/icumt.2017.8255155

Tikhonenko, O.M.: Queuing system with processor sharing and limited resources. Autom. Remote. Control. 71(5), 803–815 (2010). https://doi.org/10.1134/s0005117910050073

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Krishtalev, N., Lisovskaya, E., Moiseev, A. (2021). Resource Queueing System \(M/GI/\infty \) in a Random Environment. In: Vishnevskiy, V.M., Samouylov, K.E., Kozyrev, D.V. (eds) Distributed Computer and Communication Networks: Control, Computation, Communications. DCCN 2021. Lecture Notes in Computer Science(), vol 13144. Springer, Cham. https://doi.org/10.1007/978-3-030-92507-9_18

Download citation

DOI: https://doi.org/10.1007/978-3-030-92507-9_18

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-92506-2

Online ISBN: 978-3-030-92507-9

eBook Packages: Computer ScienceComputer Science (R0)