Abstract

The 3D reconstruction of upper limb anatomy plays a significant role in many biomedical fields, such as ergonomics, motion rehabilitation, and prosthesis design. Conventional manual measurements have been progressively replaced by 3D optical scanning for collecting and storing 3D anatomical data, which increases reliability and data accuracy while shortening the overall acquisition process. However, the real-time scanning of human body parts remains a complex task, since it is challenging to keep the arm in a stable position and avoid artifacts in the collected data. In addition, optical undercut geometries often impair the completeness of the 3D reconstruction. In this paper, a compact and low-cost 3D scanning system has been developed by integrating three D415 Intel RealSense cameras. The three depth cameras have been assembled in a circular rig to define a lightweight handheld scanner capable of carrying out 3D data acquisition in different scenarios. The optical system has been validated through anthropometric measurements on different subjects.

1 Introduction and Background

In the medical field, 3D digital information about the patient's surface anatomy plays a significant role in designing highly customized medical devices [1]. Bespoke orthoses, customized prostheses, and active controllers can enable more effective and comfortable therapies. The rehabilitation of upper limb functionality, for example, may involve working with patients who have musculoskeletal disorders due to strokes, dystonia, or sports injuries. Creating an advanced biomechanical profile of the patient is thus crucial for developing effective bespoke assistive controllers, and the 3D scanning of the patient's upper limb anatomy is the starting point for this purpose.

Body sizes are conventionally measured with a tape, which is an invasive and time-consuming process [2]. Accuracy is also a significant concern, since manual measurements involve a considerable level of subjectivity. The patient's morphology, instead, is usually acquired through plaster molds. Hand-crafting processes are then adopted to customize the medical device and enhance comfort once the patient wears the prototype. The effectiveness of the final appliance therefore depends on both the skills and the expertise of the specialist.

In the last two decades, manual measurements have been replaced by 3D optical scanning in the acquisition of human anatomy, which increases reliability and data accuracy while shortening the overall process. The digital anatomical model created as an output of the acquisition process effectively supports the design of bespoke devices and also allows measurements of interest to be extracted at any time. The standard procedure has been progressively replaced by workflows based on computer-aided design (CAD) and computer-aided engineering (CAE) tools. A significant step forward was made possible by the development of Additive Manufacturing (AM) technologies, which allow the direct fabrication of fully functional parts starting from a 3D model, without involving traditional manufacturing processes [3,4,5].

Capturing the 3D shape and motion of human upper limbs has been the scientific focus of many researchers due to its applications in many engineering fields, such as computer graphics, human-computer interaction, rehabilitation, and robotics. The recent technological advancement in off-the-shelf RGB-D cameras has further increased this interest, especially for virtual and augmented reality applications. Many technologies exist, and the scientific community has proposed a large number of approaches [6, 7].

In this paper, a compact and low-cost 3D scanning system, composed of multiple RGB-D sensors, has been developed to perform real-time acquisitions of upper limb anatomy. Three D415 Intel RealSense cameras have been assembled in a circular rig to define a lightweight handheld scanner. The effectiveness of the system has been assessed by acquiring the upper limb anatomies of different subjects and comparing the resulting anthropometric measurements with conventional tape measurements.

2 Upper Limb 3D Scanning: Main Issues

The 3D scanning of human upper limbs (i.e., hands, fingers, wrists, and forearms) is not a trivial task, since it is not easy for the patient to keep the arm in a stable and static position for the whole duration of the scanning process. Therefore, artifacts due to involuntary movements are usually present in the 3D scanned data and must be handled [8]. For this reason, popular phase shift-based structured light methods are not suitable, since they require at least three images, and real-time single-shot techniques are preferable [9]. However, critical issues remain, since fingers have many degrees of freedom, move quickly, and look similar in color and shape, which makes the tracking process difficult. Moreover, the shape of the hand and fingers leads to self-occlusions, which make the inference of their pose ambiguous.

The technical literature has investigated the use of low-cost sensors, such as the Kinect sensor (Microsoft, Redmond, WA, USA), which tracks full-body motion through depth cameras and a skeleton stream, and the Leap Motion Controller (LMC, Leap Motion, San Francisco, CA, USA) for hand tracking [10].

2.1 Scanning Layout

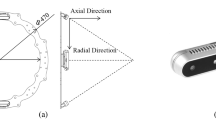

Obtaining a 360° view of the scanned arm raises two issues: long acquisition times and the registration of the different clouds. To solve both issues, a handheld multi-sensor layout was developed, exploiting three D415 Intel RealSense sensors placed at 90° from each other on a circular rig (Fig. 1). A full metrological characterization of the D415 Intel RealSense was performed in [11], demonstrating its effectiveness as a low-cost device for biomedical 3D-scanning applications. In the present work, the three optical sensors are mounted on the rig with both a radial and an axial orientation. This allows the acquisition of target surfaces placed in front of the sensors, even if they do not pass through the ring (e.g., in the case of a patient with impaired mobility). The two diametrically opposite sensors provide a wide acquisition angle around the arm axis, while the third sensor is oriented to acquire some typical optical undercuts of the arm, such as the back surface of the thumb. A similar approach was followed in [7], where eight D415 Intel RealSense sensors were assembled in two circular rigs of four sensors each. That configuration, however, defines a static architecture, which is a limiting factor for the acquisition of patients suffering from partially inhibited musculoskeletal system function (e.g., dystonia disorders).

2.2 Device Calibration

The relative placement of the sensors needs to be calibrated in order to automatically register the three point clouds acquired in each frame during the scanning stage. To achieve this result, a calibration specimen was designed and 3D printed (Fig. 2). The specimen has three color-coded markers, which allow it to be automatically detected and isolated from the background in the scanned data. The specimen is placed in front of the device, approximately at the center of the rig, and acquired by the three sensors.

The markers are then used to obtain a rough alignment of the three point clouds with respect to a reference geometry obtained from the CAD model of the specimen, Fig. 3(a). Finally, the registration of the three point clouds with respect to the reference geometry is refined through an Iterative Closest Point (ICP) algorithm, Fig. 3(b), to obtain the three transformations defining the relative placement of the sensors. It is worth noting that the described calibration procedure is fully automated; the user only has to acquire the specimen, taking care that all the markers are visible to all the sensors.
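The two calibration steps described above (marker-based rough alignment followed by ICP refinement) can be sketched in Python with NumPy. This is only an illustrative sketch and not the implementation used in this work: the function names, the 3 x 3 x 3 grid standing in for the CAD reference geometry, the marker indices, the sensor pose, and the marker detection errors are all assumptions, and the brute-force nearest-neighbour search is only practical for very small clouds.

```python
import numpy as np

def rigid_transform_from_points(source, target):
    """Least-squares rigid transform (R, t) mapping source onto target (Kabsch method)."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    src_centroid = source.mean(axis=0)
    tgt_centroid = target.mean(axis=0)
    # Cross-covariance of the centred point sets
    H = (source - src_centroid).T @ (target - tgt_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Force a proper rotation (determinant +1) instead of a reflection
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_centroid - R @ src_centroid
    return R, t

def icp_refine(source, target, iterations=10):
    """Point-to-point ICP with brute-force nearest neighbours (small clouds only)."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    R_total, t_total = np.eye(3), np.zeros(3)
    moved = source.copy()
    for _ in range(iterations):
        # Pair every moved source point with its closest target point
        distances = np.linalg.norm(moved[:, None, :] - target[None, :, :], axis=2)
        matched = target[distances.argmin(axis=1)]
        R, t = rigid_transform_from_points(moved, matched)
        moved = moved @ R.T + t
        # Accumulate the incremental transform
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total

# Hypothetical reference geometry: a 3 x 3 x 3 grid with 10 mm pitch
axis = np.arange(3) * 10.0
reference = np.array([[x, y, z] for x in axis for y in axis for z in axis])
marker_ids = [0, 8, 18]  # three non-collinear reference points acting as markers

# Hypothetical sensor pose: 30 degrees about z plus a translation (mm)
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([100.0, -40.0, 250.0])
scanned = reference @ R_true.T + t_true

# Marker centres as detected by the sensor, with small made-up detection errors
marker_error = np.array([[0.4, 0.0, 0.0], [0.0, -0.3, 0.0], [0.0, 0.0, 0.5]])
observed_markers = scanned[marker_ids] + marker_error

# Step 1: rough alignment from the three markers
R0, t0 = rigid_transform_from_points(observed_markers, reference[marker_ids])
rough = scanned @ R0.T + t0

# Step 2: ICP refinement against the reference geometry
R1, t1 = icp_refine(rough, reference)
refined = rough @ R1.T + t1
```

In this sketch the composition of the two steps plays the role of one sensor's calibration transformation; repeating it for each of the three sensors would give the three transformations mentioned above.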

2.3 Scanning Workflow

The scanning process can be carried out by the user (e.g., a therapist), who holds the structure and rotates it around the central axis of the patient's extended arm. Preliminary acquisition trials demonstrated that a small number of viewpoints (5 to 8) is enough to achieve a 360° description of the arm surface. Thus, all the acquisitions shown in this paper were carried out with six different viewpoints, requiring an acquisition time of about 55 s. With patients with impaired mobility, some issues could arise, since it may not be possible for the patient to fully extend the arm. In this case (e.g., when the arm is folded close to the body), it is possible to exploit the axial orientation of the sensors to scan the patient frontally, as shown in Fig. 4, even if a 360° scan of the arm can no longer be obtained.

2.4 Point Clouds Elaboration

Once the acquisition stage is completed, three series of point clouds are available, one for each sensor. The elaboration stage requires: 1) registering the three separate point clouds coming from the sensors to a common reference frame, 2) removing noise from the clouds, and 3) registering the subsequent acquisitions with respect to each other. To this end, a semi-automatic procedure was developed. Firstly, the point clouds acquired by the different sensors are registered to a common reference frame by exploiting the roto-translations determined during the calibration stage. This is a straightforward step, which only requires applying three known rigid transformations to the acquired data. Subsequently, this registration can be further refined by applying an automatic ICP algorithm to the three clouds of each viewpoint, thus obtaining their final relative placement. After this step, the point clouds of the three sensors are appropriately aligned, but the subsequent scans (e.g., the six viewpoints) are still noisy and not properly registered, as shown in Fig. 5(a).
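As a minimal sketch of the first elaboration step, the following Python snippet applies one known rigid transformation per sensor and stacks the results into a single cloud for the viewpoint. The helper names `to_common_frame` and `merge_viewpoint`, the 4 x 4 homogeneous-matrix representation, and the numeric values are assumptions made for illustration; the source only states that three known rigid transformations are applied.

```python
import numpy as np

def to_common_frame(points, transform):
    """Apply a 4x4 homogeneous rigid transform to an (N, 3) array of points."""
    points = np.asarray(points, dtype=float)
    rotation = transform[:3, :3]
    translation = transform[:3, 3]
    return points @ rotation.T + translation

def merge_viewpoint(clouds, transforms):
    """Bring each sensor cloud into the common frame and stack them."""
    return np.vstack([to_common_frame(c, T) for c, T in zip(clouds, transforms)])

# Made-up calibration results for three sensors
T_a = np.eye(4)                          # reference sensor
T_b = np.eye(4)
T_b[:3, 3] = [0.0, 0.0, 100.0]           # pure translation along z
T_c = np.eye(4)
T_c[:3, :3] = np.diag([-1.0, -1.0, 1.0]) # 180 degree rotation about z

# One made-up point per sensor, expressed in that sensor's own frame
clouds = [np.array([[1.0, 2.0, 3.0]])] * 3
merged = merge_viewpoint(clouds, [T_a, T_b, T_c])
```

Here `merged` holds the three points expressed in the frame of the reference sensor; the optional ICP refinement mentioned above would then start from this placement.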

The point clouds are then elaborated to remove noise, i.e., to detect and delete outliers. This is performed through an automatic algorithm that detects clusters in the point clouds, separated by a given distance. The points belonging to each detected cluster are counted, and all the clusters with fewer points than a given threshold are deleted. Both the separation distance and the minimum cluster size can be set to achieve a more or less aggressive noise reduction. Finally, the point clouds need to be registered to obtain the full reconstruction of the patient's arm. This is achieved by the conventional 3–2–1 pairwise registration approach [12]: firstly, three corresponding points are selected on consecutive scans to obtain a rough registration; secondly, an ICP algorithm is used to refine the registration. This process is repeated for all six viewpoints, and a final global registration algorithm is then applied to further refine the results, obtaining the 360° point cloud shown in Fig. 5(b).
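The cluster-based outlier removal described above can be illustrated with the following Python sketch. It is a simplified stand-in rather than the algorithm actually used: clusters are taken here to be groups of points connected by chains of neighbours closer than the separation distance, the function name `remove_small_clusters` and both parameter names are hypothetical, and the brute-force distance computation would be too slow for real scans.

```python
import numpy as np

def remove_small_clusters(points, separation, min_points):
    """Label distance-connected clusters and drop those with fewer than min_points."""
    points = np.asarray(points, dtype=float)
    n = len(points)
    labels = np.full(n, -1, dtype=int)
    current = 0
    for seed in range(n):
        if labels[seed] != -1:
            continue  # already assigned to a cluster
        labels[seed] = current
        stack = [seed]
        while stack:
            i = stack.pop()
            dist = np.linalg.norm(points - points[i], axis=1)
            # Unlabelled points within the separation distance join the cluster
            neighbours = np.where((dist <= separation) & (labels == -1))[0]
            labels[neighbours] = current
            stack.extend(neighbours.tolist())
        current += 1
    counts = np.bincount(labels, minlength=current)
    keep = counts[labels] >= min_points
    return points[keep], labels

# Made-up data: a dense 27-point block plus two isolated outliers (units: mm)
block = np.array([[x, y, z] for x in range(3) for y in range(3) for z in range(3)],
                 dtype=float)
outliers = np.array([[50.0, 50.0, 50.0], [51.0, 50.0, 50.0]])
cloud = np.vstack([block, outliers])

clean, labels = remove_small_clusters(cloud, separation=1.5, min_points=5)
```

With these illustrative settings the two outliers form a cluster of their own that falls below the size threshold, so only the dense block is kept. Lowering `separation` or raising `min_points` makes the filter more aggressive.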

After the elaboration, the data consist of the 3D coordinates of the point cloud (de-noised and registered), the RGB texture of each point, and the surface normals. This information is stored in arrays: three columns for the coordinates, three columns for the texture, and three columns for the normals, with the number of rows equal to the number of measured points. The data can then be saved in several formats, such as .txt or other ASCII files.
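The nine-column layout described above can be sketched as follows. The column order (coordinates, then texture, then normals), the helper name `pack_cloud`, the number format, and the header text are assumptions; an in-memory buffer is used in place of a real file so that the example stays self-contained.

```python
import io
import numpy as np

def pack_cloud(xyz, rgb, normals):
    """Stack coordinates, colours, and normals into one (N, 9) array."""
    xyz = np.asarray(xyz, dtype=float)
    rgb = np.asarray(rgb, dtype=float)
    normals = np.asarray(normals, dtype=float)
    if not (xyz.shape == rgb.shape == normals.shape) or xyz.shape[1] != 3:
        raise ValueError("xyz, rgb and normals must all have shape (N, 3)")
    return np.hstack([xyz, rgb, normals])

# Two made-up points
xyz = [[10.0, 20.0, 30.0], [11.0, 21.0, 31.0]]
rgb = [[200.0, 150.0, 120.0], [198.0, 149.0, 121.0]]
normals = [[0.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
packed = pack_cloud(xyz, rgb, normals)

# Write as plain ASCII text and read it back
buffer = io.StringIO()
np.savetxt(buffer, packed, fmt="%.6f", header="x y z r g b nx ny nz")
buffer.seek(0)
restored = np.loadtxt(buffer, ndmin=2)
```

Passing a file name such as a `.txt` path to `np.savetxt` instead of the buffer would write the same content to disk.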

3 Assessment of Anatomical Scanning

The developed scanning system was tested by performing several acquisitions to assess hardware and software functionality, the usability of the system, the effectiveness of the measurement, and the training time needed to become confident with the equipment. To this end, three different researchers performed complete scans of each other's arms. This first assessment also allowed the scanning outcome to be verified against anthropometric measurements taken with rulers. These tests demonstrated that, after about five trials, each researcher was able to successfully perform a complete scan of the human arm. Figure 6 shows the scanning results for one of the researchers from the six different viewpoints. As stated, each viewpoint combines the acquisitions of the three sensors, thus providing wide-angle coverage of the surface. The figure highlights that the full 360° acquisition was achieved, with no gaps or relevant holes in the arm and forearm geometry. Some noise and surface holes can be noted between the fingers, which are the most challenging region to acquire. Nevertheless, the arm and forearm surfaces are smooth. After the training trials, all the researchers could complete the acquisition procedure in less than 55 s.

The good qualitative results of Fig. 6 were quantitatively evaluated by comparing measurements taken at some key points of the arm on the 3D scan and on the actual arm. The comparison results are shown in Table 1.

The first column shows an image of the performed measurements; the second column reports the measurement value obtained from the 3D scan (rounded to the nearest unit); the third column reports the mean value of the corresponding tape measurement over five repetitions; the fourth column shows the standard deviation of the tape measurement; and the fifth column reports the percentage difference between the 3D scan and tape measurements. The assessment produced promising results concerning the usability and reliability of the system. It is worth noting that a high standard deviation was found for the tape measurements, because it was difficult for the operator to repeatedly select the same measurement point on the arm. This also explains the absolute measurement discrepancies, which are to be ascribed to the uncertainty of the tape measurement.
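The quantities reported in the table columns described above can be computed as in the following Python sketch. The numeric values are invented for illustration and are not taken from Table 1. Two further assumptions are made, since the text does not specify them: the standard deviation is the sample standard deviation, and the percentage difference is referred to the mean tape value.

```python
from statistics import mean, stdev

def compare_scan_to_tape(scan_value, tape_values):
    """Return tape mean, tape sample standard deviation, and percentage difference."""
    tape_mean = mean(tape_values)
    tape_std = stdev(tape_values)  # sample standard deviation (assumption)
    # Difference relative to the tape mean (assumption)
    pct_diff = 100.0 * (scan_value - tape_mean) / tape_mean
    return tape_mean, tape_std, pct_diff

# Made-up example: one scan-based value and five tape repetitions (mm)
scan_value = 254
tape_values = [250, 252, 249, 251, 253]
tape_mean, tape_std, pct_diff = compare_scan_to_tape(scan_value, tape_values)
```

For these invented numbers the tape mean is 251 mm, the standard deviation is about 1.58 mm, and the scan differs from the tape mean by about 1.2%.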

3.1 Issues Related to Patient Movement

The previous section demonstrated that a 360° acquisition of the patient's arm is possible when the arm is stretched and still. On the other hand, some issues may arise if the patient cannot stretch the arm or keep it perfectly still. This situation was simulated by repeating the scanning with different degrees of arm movement and different arm positions. The results of the first trial are shown in Fig. 7, which highlights an acquisition issue: the last two frames of the scan captured a wrist movement. Indeed, the subject slightly changed the angle between the hand and the forearm, thus impairing the registration process. Nevertheless, two separate surfaces could be defined, which can be processed separately. Even if the last two views could not be aligned with the previous ones, all the frames were successfully acquired, meaning that no side of the arm is missing from the acquisition. Thus, the 360° information on the arm geometry is still preserved if the two separate clouds are considered.

Some more trials were then performed, simulating the typical movements of dystonia patients. To this end, several tests were repeated, mimicking tremors of the hand and/or of the arm, to assess the impact of these movements on the scanning quality. In particular, two examples are reported. The first case assumes that the patient can keep the arm almost stretched but cannot keep it still. The second case assumes that the patient can neither stretch the arm at all nor keep it still. In the first case, the six acquisitions around the arm could be performed as for a healthy subject, while in the second case the arm was folded too close to the subject's body. Thus, a 360° view was not possible, but several different orientations were acquired to describe as much of the geometry as possible. The same post-processing procedure (cloud registration and denoising) applied to healthy subjects was used in both cases. Figure 8(a) shows the registered point clouds for the first case, while Fig. 8(b) shows the results for the second case. As can be noted, in both cases the different viewpoints cannot be properly registered into a single point cloud because of the uncontrolled movement, which leads to inconsistent clouds between acquisitions. Nevertheless, the single views were elaborated separately to assess whether they are still usable for measurement purposes. Some examples are reported in Fig. 9(a) and (b), which show two different viewpoints of the first and second simulated cases, respectively. The images demonstrate that, even if the full 360° point cloud cannot be achieved, the clouds corresponding to single viewpoints are not impaired by the patient's movement, since the frame rate of the device is high enough to avoid blur-corrupted or noisy acquisitions. As can be seen, each single frame appears consistent and can be used to assess some specific anthropometric measurements or to describe some portions of the geometry.

The issues related to the patient's movement may be mitigated by using fixed supports to constrain the patient's arm. Nevertheless, this could impair the usability of the system for the most fragile users (such as dystonia patients), so this option was discarded at the present research stage.

4 Conclusion

The 3D scanning of upper limb geometry was studied and discussed in this paper. The main issues concerning the acquisition process were discussed, and a scanning device was proposed to accomplish the task. To this end, a compact, low-cost handheld scanner was assembled based on D415 Intel RealSense cameras. Three sensors were mounted on a rig at 90° from each other to allow wide-angle scanning in a single frame. An automatic calibration procedure for the device was proposed, based on a simple calibration specimen with color-coded markers. The scanning workflow was then presented, consisting of acquisition, cloud denoising, and registration. The device was tested, showing promising results in terms of usability (only five training acquisitions were needed), acquisition time (360° acquisitions obtained in about 55 s), and accuracy (good agreement with tape measurements). A more extensive validation campaign with a larger number of patients and a more robust tape measurement procedure is under development for further characterization of the system. Finally, some critical issues of the acquisition process were investigated, which are mainly related to involuntary movements. The research activity demonstrated that the procedure is only partially robust to this noise source: the full 360° acquisition of moving subjects could not be achieved, but the single viewpoints remained usable for further processing.

References

1. Barone, S., Neri, P., Paoli, A., Razionale, A.V.: Design and manufacturing of patient-specific orthodontic appliances by computer-aided engineering techniques. Proc. Inst. Mech. Eng. 232, 54–66 (2018)

2. Xia, S.B., Guo, S.M., Li, J.Y., Istook, C.: Comparison of different body measurement techniques: 3D stationary scanner, 3D handheld scanner, and tape measurement. J. Text. Inst. 110, 1103–1113 (2019)

3. Baronio, G., Harran, S., Signoroni, A.: A critical analysis of a hand orthosis reverse engineering and 3D printing process. Appl. Bionics Biomech. 2016 (2016)

4. Barrios-Muriel, J., Romero-Sanchez, F., Alonso-Sanchez, F.J., Rodriguez Salgado, D.: Advances in orthotic and prosthetic manufacturing: a technology review. Materials 13 (2020)

5. Paterson, A.M., Bibb, R., Campbell, R.I., Bingham, G.: Comparing additive manufacturing technologies for customised wrist splints. Rapid Prototyping J. 21, 230–243 (2015)

6. Zollhofer, M., et al.: State of the art on 3D reconstruction with RGB-D cameras. Comput. Graph. Forum 37, 625–652 (2018)

7. Buonamici, F., Carfagni, M., Puggelli, L., Servi, M., Volpe, Y.: A fast and reliable optical 3D scanning system for human arm. In: Roucoules, L., Paredes, M., Eynard, B., Morer Camo, P., Rizzi, C. (eds.) JCM 2020. LNME, pp. 268–273. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-70566-4_43

8. Paoli, A., Neri, P., Razionale, A.V., Tamburrino, F., Barone, S.: Sensor architectures and technologies for upper limb 3D surface reconstruction: a review. Sensors-Basel 20 (2020)

9. Wang, Z.Z., Zhang, C.S.: Three-dimensional hand reconstruction by single-shot structured light line pattern. IEEE Access 6, 59881–59890 (2018)

10. Guzsvinecz, T., Szucs, V., Sik-Lanyi, C.: Suitability of the Kinect sensor and Leap Motion Controller: a literature review. Sensors-Basel 19 (2019)

11. Carfagni, M., et al.: Metrological and critical characterization of the Intel D415 stereo depth camera. Sensors-Basel 19 (2019)

12. Zhu, H., et al.: A review of point set registration: from pairwise registration to groupwise registration. Sensors-Basel 19 (2019)

Acknowledgement

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 856998.

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Neri, P., Barone, S., Paoli, A., Razionale, A.V., Tamburrino, F. (2022). A Depth-Camera Based System for the Real-Time Scanning of Upper Limb Anatomy. In: Rizzi, C., Campana, F., Bici, M., Gherardini, F., Ingrassia, T., Cicconi, P. (eds) Design Tools and Methods in Industrial Engineering II. ADM 2021. Lecture Notes in Mechanical Engineering. Springer, Cham. https://doi.org/10.1007/978-3-030-91234-5_25

DOI: https://doi.org/10.1007/978-3-030-91234-5_25

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-91233-8

Online ISBN: 978-3-030-91234-5

eBook Packages: Engineering, Engineering (R0)