Abstract

Training is essential for the effective use of electromyography (EMG) prostheses. Because traditional training with physical prostheses is inefficient and tedious, virtual training systems, which offer natural advantages in intuitiveness and interactivity, are increasingly used. In this study, a virtual training system for an intelligent upper limb prosthesis with bidirectional neural channels is developed. The system features motor and sensory neural interaction, realized by an EMG control module and a sense feedback module based on vibration stimulation. Human-machine closed-loop interaction training based on the virtual system is studied. Experiments verify the effectiveness of the virtual system in shortening training time and improving the ability to operate the prosthesis.

1 Introduction

Advanced myoelectric prostheses can provide intuitive multi-DOF control and are expected to integrate sense feedback [1], but effective operation requires complex training [2]. In traditional training, patients must wear a physical prosthesis, which is costly and tedious. Virtual reality uses a computer to simulate a 3-D virtual world and engages the human senses to give users a feeling of immersion [3]. In recent years it has been widely used in medical and military training simulation. Applying virtual reality to prosthetic training can overcome the limitations of environment and equipment and can also greatly increase patients’ enthusiasm and initiative.

The best-known virtual training system is the Virtual Integration Environment (VIE) [4], developed by the Applied Physics Laboratory (APL) of Johns Hopkins University in the second phase of the DARPA Revolutionizing Prosthetics program, as shown in Fig. 1. VIE is built around the modular prosthesis and uses Delta3D as the simulation engine to reproduce the functions of the modular prosthesis in the virtual environment. Limited by the performance of rendering engines and graphics processors at that time, however, VIE needs three desktop computers in a parallel architecture to guarantee real-time performance. In contrast, the Virtual Reality Environment (VRE) [5] of Brown University in the United States adopts human skeleton skinning technology (Fig. 2) and exposes configurable parameters on its interface. With the development of computer hardware, head-mounted displays are also used in virtual training systems. Chau et al. [6] use an HTC Vive head-mounted display to add interactive daily-life scenes to virtual training and to position the amputation end more accurately. Ortiz-Catalan et al. [7] apply augmented reality (AR) technology to the training platform and connect the virtual hand to the end of the patient’s stump.

Fig. 1. A precision grip in VIE [4].

Fig. 2. VRE from front [5].

At present, most virtual rehabilitation systems, including VIE, focus on the forward control channel; few can provide haptic feedback to amputees to form closed-loop control. Two-way information interaction and control is one of the core characteristics of an intelligent prosthesis [8], and perception is a research hotspot in the field. Compared with visual feedback alone, practice with haptic feedback makes control more accurate and natural, enhances the immersion of the virtual training system and improves the user experience. In addition, most virtual training systems include only a virtual prosthetic hand or wrist, which cannot fully meet the needs of patients with upper arm amputation.

The virtual training system established in this paper includes not only a multi-DOF prosthetic hand with independently driven fingers but also a 7-DOF anthropomorphic prosthetic arm, and it considers the cooperative operation of arm and hand. We design a two-way motor-sensory neural interaction module composed of a multi-mode neural control submodule based on EMG and a sense feedback submodule; together they form a control loop and connect the prosthesis with the human nervous system. As a closed-loop interactive training platform, the system is also effective for design debugging and for verifying and optimizing control algorithms during prosthesis development.

2 Overall Description of the Virtual Training System

The training system consists of three functional units: a virtual reality software subsystem, a neural control subsystem and a sense feedback subsystem. The virtual reality software subsystem provides a human-computer interaction interface and a realistic virtual environment. It integrates the EMG control method and the sense feedback strategy, and offers an automatic demonstration mode and a control training mode. The neural control subsystem is mainly responsible for acquiring and decoding the human EMG signal: after filtering and amplification, the data acquisition equipment performs A/D conversion and passes the signal to the system. The sense feedback subsystem feeds back the torque and joint angle arising during virtual operation to the user through vibration stimulation. The overall architecture of the system is shown in Fig. 3.

3 Design of the Virtual Training System

3.1 Upper Limb Prosthetic Model

To give patients better immersion [9], our virtual training system builds the virtual scene from the 3D model data of the actual prosthesis. The virtual prosthesis is composed of the HIT-V hand [10] and a 7-DOF arm, as shown in Fig. 4. HIT-V has five fingers and 11 active joints. The four fingers other than the thumb are modular prosthetic fingers with two knuckles each; the thumb additionally has a pronation/abduction joint. HIT-V is slightly smaller than a normal male hand. The prosthetic arm consists of a wrist, an elbow and a shoulder. The wrist and shoulder joints each have 3 DOF (pitch, lateral swing and rotation), and the elbow joint has 1 DOF (bending).
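The joint counts above can be checked with a small bookkeeping sketch. The finger names, the knuckle labels and the reading that the thumb has two knuckles plus the pronation/abduction joint are illustrative assumptions, not data taken from the prosthesis model.

```python
# Joint layout as described in the text; all labels are hypothetical.
ARM_JOINTS = {
    "shoulder": ("pitch", "lateral swing", "rotation"),
    "elbow": ("bending",),
    "wrist": ("pitch", "lateral swing", "rotation"),
}

HAND_JOINTS = {
    # Assumed: the thumb has two knuckles plus one pronation/abduction joint.
    "thumb": ("knuckle 1", "knuckle 2", "pronation/abduction"),
    "index": ("knuckle 1", "knuckle 2"),
    "middle": ("knuckle 1", "knuckle 2"),
    "ring": ("knuckle 1", "knuckle 2"),
    "little": ("knuckle 1", "knuckle 2"),
}


def count_dof(joints):
    """Return the total number of degrees of freedom in a joint layout."""
    return sum(len(axes) for axes in joints.values())


arm_dof = count_dof(ARM_JOINTS)    # 3 + 1 + 3
hand_dof = count_dof(HAND_JOINTS)  # 3 + 4 * 2
```

Under this reading the arm totals 7 DOF and the hand totals 11 active joints, which agrees with the figures stated in the text.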

HIT-V hand compared with a normal male hand [11].

The virtual prosthesis used in our system is the shell of the 3D upper-limb prosthetic model; that is, only the prosthetic shell and the necessary rotating shafts are retained, which gives the system better execution efficiency and visual quality. The model is built in SolidWorks and read by Open Inventor; the resulting virtual prosthesis display window is shown in Fig. 5.

3.2 Software Subsystem

As shown in Fig. 6, the system interface includes a virtual scene demonstration window, a control interface and a menu bar. In the virtual scene demonstration window, users can zoom in or out to focus on particular joints and change the viewing perspective. The control interface is the main operation platform of the software and contains various indicators and result display options. The menu bar contains operation commands, such as files and views.

Automatic Presentation Mode.

The automatic presentation mode is designed to help users become familiar with the system. It demonstrates the position and rotation range of each joint, the various virtual objects and the correct grasping operations. In this mode, users learn the control information mainly through observation.

The “Auto Demo” and “Stop Demo” buttons control the demo process. When “Auto Demo” is selected, the system state is initialized: the virtual prosthesis is restored to its initial posture, the angle sliders and other controls in the control interface are reset, and edit box controls such as the signal acquisition channel and signal duration are set to zero. The virtual prosthesis then demonstrates six operations preset in the system.

Control Training Mode.

The control training mode implements myoelectric prosthesis training, including multi-mode neural control and sense feedback. To collect EMG, the function “SignalInput” is first called to read the data from the data acquisition card. The “Channel” edit boxes then display the EMG values in real time, and the waveforms of the flexor and extensor signals are drawn with iPlot, as shown in Fig. 7. The classifier output is displayed in the “Classification Results” edit box, and the corresponding control indicator is activated. The “Signal Duration” edit box displays the duration of the EMG signal. The movement speed of the virtual prosthesis can be adjusted with the speed slider in the interactive interface.

When the virtual prosthesis collides with a virtual object, the torque sensor display shows the contact torque value of the fingertip and the rectangular indicator light is activated. The communication serial port is then opened, and the torque information is mapped into control information for the feedback component. The lower computer drives the micro vibration motor and feeds the contact information back to the user.

3.3 Neural Control Subsystem

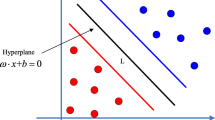

The training system uses surface electromyography (sEMG) as the input control signal and adopts the finite-state-machine control method proposed in [12]. The sEMG of a pair of flexor/extensor muscles on the radial side of the wrist is collected, and the action intention is obtained by classification and decoding. In this way, six typical grasp modes, which together account for more than 80% of grasps in daily life [13], can be controlled.

Two DJ-03 electrodes are attached with appropriate pressure to the muscle bellies of the flexor carpi radialis and the extensor carpi radialis longus. By controlling the state of the forearm muscles, the user produces EMG that can be categorized into four classes: rest, extension, flexion and clench. Figure 8 shows the hand movements and the corresponding EMG; the flexor signal waveform is drawn as a dotted line and the extensor signal waveform as a solid line. By setting a threshold on action duration that separates short from long actions, each of the three active classes is split into a short and a long variant, which together with rest gives seven signals: short extension, short flexion, short clench, long extension, long flexion, long clench and rest. The control state and operation of the prosthesis are then selected according to these seven signals. The coding control process is shown in Fig. 9.
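A minimal sketch of the duration-based coding step is given here. The threshold value, the function name `encode_action` and the string labels are hypothetical; the text gives neither the threshold nor the implementation, and the finite state machine of [12] that consumes these signals is not reproduced.

```python
# Hypothetical threshold separating short from long actions, in seconds.
# The paper does not state the value actually used.
LONG_ACTION_THRESHOLD_S = 1.0

# The three active EMG classes named in the text ("rest" is handled separately).
ACTIVE_CLASSES = ("extension", "flexion", "clench")


def encode_action(emg_class, duration_s, threshold_s=LONG_ACTION_THRESHOLD_S):
    """Map a classified EMG segment and its duration to one of seven signals."""
    if emg_class == "rest":
        return "rest"
    if emg_class not in ACTIVE_CLASSES:
        raise ValueError(f"unknown EMG class: {emg_class!r}")
    prefix = "long" if duration_s >= threshold_s else "short"
    return f"{prefix} {emg_class}"


# Enumerate every signal the encoder can produce.
ALL_SIGNALS = {"rest"} | {
    encode_action(c, d) for c in ACTIVE_CLASSES for d in (0.0, 10.0)
}
```

With three active classes, two duration bands and rest, `ALL_SIGNALS` contains exactly the seven signals listed in the text.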

3.4 Sense Feedback Subsystem

Calculation of Virtual Force.

Contact force is important for force control in prosthetic grasping. In this paper, a spring-damping model is used as the contact force model of the virtual environment. Compared with the commonly used pure stiffness model, it reflects the contact force between finger and object more comprehensively and suits objects with a wider range of characteristics.

To solve for the virtual contact force, the deformation of the virtual object after contact must be determined. When a knuckle of the virtual prosthetic hand contacts the surface of a virtual object, the surface deforms. Once equilibrium is reached, the knuckle is offset relative to the original surface of the virtual object; this offset is recorded as the collision depth \(\Delta x\).

The mechanical properties of virtual objects are described by:

$$f = K\Delta x + B\Delta \dot{x}$$

where \(f\) is the virtual contact force, \(\Delta x\) is the deformation, \(\Delta \dot{x}\) is its rate of change, \(K\) is the stiffness coefficient and \(B\) is the damping coefficient.

Figure 10 is a schematic diagram of the contact force, which is used to calculate the contact force between the knuckle and the virtual object. Point A represents the initial position of the knuckle contact point, and its coordinate is \(\left( {x_{1} ,y_{1} ,z_{1} } \right)\). Point B represents the position of the knuckle contact point after moving, and its coordinate is \(\left( {x_{2} ,y_{2} ,z_{2} } \right)\).

The distance between point \(A\) and point \(B\) is the deformation, expressed by \(\Delta x\). With \(x_{t}\) the actual position and \(x_{e}\) the initial contact position, \(\Delta x = x_{t} - x_{e}\).

According to Hooke’s law, the contact force \(F\) is as follows:
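As an illustration, the collision depth and a spring-damping contact force can be computed as sketched here. The force form `stiffness * depth + damping * depth_rate` is the standard spring-damping expression and is an assumption about the system; the function names and the numeric coefficients are hypothetical.

```python
import math


def collision_depth(point_a, point_b):
    """Euclidean distance between the initial contact point A and the moved point B."""
    return math.dist(point_a, point_b)


def contact_force(depth, depth_rate, stiffness, damping):
    """Spring-damping contact force for a given penetration depth and its rate.

    Returns zero when there is no penetration, so a finger that is not
    touching the object produces no force.
    """
    if depth <= 0.0:
        return 0.0
    return stiffness * depth + damping * depth_rate


# Hypothetical example: the knuckle moves 2 mm into the object along z.
depth = collision_depth((0.0, 0.0, 0.0), (0.0, 0.0, 0.002))
static_force = contact_force(depth, 0.0, stiffness=1000.0, damping=5.0)
moving_force = contact_force(depth, 0.01, stiffness=1000.0, damping=5.0)
```

Setting `damping` to zero reduces the sketch to the pure stiffness model that the text compares against.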

Sense Feedback.

Two-way information interaction and control is one of the core characteristics of an intelligent prosthesis, and perception is currently a hotspot in prosthesis research. Survey results show that sense feedback can effectively improve prosthesis performance. To serve the actual prosthesis, the virtual training system should therefore also include a sense feedback system.

We use vibrotactile feedback, which is relatively simple and easy to implement. The average value of the contact force is selected as the feedback information. According to experimental data from the physical prosthesis, the fingertip force of HIT-V ranges from 0 N to 6.5 N [10]. In our tests, the vibration first becomes perceptible at a duty cycle of 10%, and above 70% further changes in vibration intensity are no longer noticeable. We therefore choose 10%–70% as the output range of the duty cycle and map the contact force received from the training system onto this range.
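One way to realize this mapping is a clamped linear interpolation, sketched here. The text states only the force range and the duty-cycle range; the linear form, the clamping and the function name `force_to_duty_cycle` are assumptions.

```python
# Ranges taken from the text.
FORCE_MIN_N, FORCE_MAX_N = 0.0, 6.5   # HIT-V fingertip force range in newtons
DUTY_MIN_PCT, DUTY_MAX_PCT = 10.0, 70.0  # usable duty-cycle range in percent


def force_to_duty_cycle(force_n):
    """Linearly map a contact force in newtons to a duty cycle in percent.

    Forces outside the fingertip range are clamped to its limits first.
    """
    clamped = min(max(force_n, FORCE_MIN_N), FORCE_MAX_N)
    ratio = (clamped - FORCE_MIN_N) / (FORCE_MAX_N - FORCE_MIN_N)
    return DUTY_MIN_PCT + ratio * (DUTY_MAX_PCT - DUTY_MIN_PCT)


mid_range_duty = force_to_duty_cycle(3.25)  # half of the force range
```

In this sketch a zero force maps to the 10% perception threshold; whether the motor should instead be switched off when there is no contact is a design choice the text does not settle.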

4 Experiments

4.1 Human-Machine Closed-Loop Interactive Experiment

A human-machine closed-loop interactive (HMCLI) experiment is carried out with the virtual training system. We set up two training methods: traditional training and HMCLI training. By comparing the coding success rate and the operation coding time of the two methods, measured against the same indexes, we verify the effectiveness of the system in training patients to use an EMG prosthesis.

Subjects.

Six healthy male subjects, aged between 24 and 30, were recruited for this study. None had experience in EMG control or had used the system before. All signed informed consent. The six subjects were divided into two groups, one for traditional training and one for HMCLI training.

The Process of the Experiments.

In the traditional method, subjects train with the physical prosthesis and judge whether the coding is correct by observation. In HMCLI training, subjects can adjust their actions according to the waveform, classification results and duration of the two-channel EMG displayed on the interactive interface, and can also feel the contact information through vibration feedback, which lets them compare the actual result with the expected result.

After the subjects learn the EMG control coding method, each of the six grasp commands appears five times in random order, and each command must be completed within 10 s. The coding success rate is tested every 15 min, and subjects rest for some time in each round. Each subject trains for 90 min. The successful operation time and the coding time are recorded. The training process is shown in Fig. 11.
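The test round described above can be sketched as a shuffled trial list. This assumes that each of the six commands is presented five times, giving 30 trials per round; the command labels, the function name and the seed parameter are hypothetical.

```python
import random

# Placeholder labels; the text does not list the names of all six grasp commands.
GRASP_COMMANDS = [f"grasp_{i}" for i in range(1, 7)]
REPETITIONS = 5      # presentations per command, as read from the text
TIME_LIMIT_S = 10.0  # time allowed to complete each command


def build_trial_sequence(commands, repetitions=REPETITIONS, seed=None):
    """Return a randomly ordered list in which each command appears `repetitions` times."""
    rng = random.Random(seed)
    trials = [command for command in commands for _ in range(repetitions)]
    rng.shuffle(trials)
    return trials


def is_success(decoded_command, target_command, elapsed_s):
    """A trial counts as correct if the right command is decoded within the time limit."""
    return decoded_command == target_command and elapsed_s <= TIME_LIMIT_S


trials = build_trial_sequence(GRASP_COMMANDS, seed=0)
```

Passing a fixed `seed` makes a round reproducible, which can help when comparing subjects on the same command order.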

The coding success rate and the successful operation time are used as evaluation indexes. The coding success rate \(P\) is defined as follows:

$$P = \frac{n}{N} \times 100\%$$

where \(N\) is the number of tests and \(n\) is the number of correct operations. The successful operation time is measured from the moment the grasp command is clear to the subject until the virtual prosthesis performs the correct grasp.
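A small helper for this index is sketched here, assuming that \(P\) is the ratio of correct operations \(n\) to tests \(N\) expressed as a percentage. The function name and the example counts are hypothetical and are not data from the experiment.

```python
def coding_success_rate(correct, total):
    """Return the coding success rate in percent for `correct` out of `total` tests."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= correct <= total:
        raise ValueError("correct must lie between 0 and total")
    return 100.0 * correct / total


# Hypothetical round: 27 correct operations out of 30 tests.
example_rate = coding_success_rate(27, 30)
```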

Results and Discussion.

Figure 12 shows the average coding success rate of the two groups. The rate for HMCLI training is markedly higher than that for traditional training at the same time points. After training, the average success rate is 73.3% for traditional training and 92.2% for HMCLI training; the latter basically meets the training requirements.

Figure 13 shows the average coding time of the two groups. The time for HMCLI training is markedly shorter than that for traditional training, with the largest difference in the initial stage. After 90 min of training, the average coding times of traditional training and HMCLI training are 5.5 s and 4.8 s, respectively. This indicates that, within the same training time, subjects who used HMCLI training became more proficient in controlling the prosthesis.

The results also show that the coding success rate is negatively correlated with the complexity of the gesture code. For example, in traditional training, the success rate of the cylinder grasp, which is selected by default, is the highest at 96.21%, whereas the rate of the lateral pinch is low. Optimizing the coding algorithm is therefore also an effective way to improve the training effect. Evaluating a new algorithm normally takes a lot of time; with HMCLI training this step can be shortened considerably, so algorithm developers can focus on designing and implementing the algorithm, which speeds up research on physical prosthesis control.

5 Conclusion

To address the low efficiency of training with EMG prostheses, we use virtual reality technology to establish an intelligent upper limb prosthesis training system with motor-sensory bidirectional neural channels. First, we analyze the kinematics of the arm-hand system to control the motion of the upper limb prosthesis. Three functional units are then designed to realize the training function of the system. The virtual reality software subsystem includes an automatic demonstration mode and a control training mode. The neural control subsystem collects EMG signals to obtain the operator’s intention and control the virtual prosthesis. The sense feedback subsystem feeds torque back to the user through vibration stimulation. In addition, we establish an HMCLI platform based on the system and conduct a comparative experiment on training effect. The results demonstrate the effectiveness of the system in shortening training time and improving the ability to operate the prosthesis.

References

1. Dhillon, G.S., Horch, K.W.: Direct neural sensory feedback and control of a prosthetic arm. IEEE Trans. Neural Syst. Rehabil. Eng. 13(4), 468–472 (2005)

2. Perry, B.N., Armiger, R.S., Yu, K.E., et al.: Virtual integration environment as an advanced prosthetic limb training platform. Front. Neurol. 9, 785 (2018)

3. Wang, C.W., Gao, W., Wang, X.R.: The theory, implementation and application of virtual reality technology. Tsinghua University, Beijing (1996)

4. Armiger, R.S., Tenore, F.V., Bishop, W.E., et al.: A real-time virtual integration environment for neuroprosthetics and rehabilitation. J. Hopkins APL Tech. Dig. 30(3), 198–206 (2011)

5. Linda, R., Katherine, E., Lieberman, K.S., Charies, K.: Using virtual reality environment to facilitate training with advanced upper-limb prosthesis. J. Rehabil. Res. Dev. 48(6), 707–718 (2011)

6. Chau, B., Phelan, I., Ta, P., et al.: Immersive virtual reality therapy with myoelectric control for treatment-resistant phantom limb pain: case report. Innov. Clin. Neurosci. 14(7–8), 3–7 (2017)

7. Ortiz, C.M.: Phantom motor execution facilitated by machine learning and augmented reality as treatment for phantom limb pain: a single group, clinical trial in patients with chronic intractable phantom limb pain. Lancet 388(10062), 2885 (2016)

8. Johannes, M.S., Bigelow, J.D., Burck, J.M., et al.: An overview of the developmental process for the modular prosthetic limb. J. Hopkins APL Tech. Dig. 30(3), 207–216 (2011)

9. Hsiu, H., Ulrich, R., Shu, L.: Investigating learners’ attitudes toward virtual reality learning environments: based on a constructivist approach. Comput. Educ. 55, 1171–1182 (2010)

10. Gu, Y., Yang, D., Huang, Q., et al.: Robust EMG pattern recognition in the presence of confounding factors: features, classifiers and adaptive learning. Expert Syst. Appl. 96, 208–217 (2018)

11. Zeng, B., Fan, S., Jiang, L., Liu, H.: Design and experiment of a modular multisensory hand for prosthetic applications. Ind. Robot: Int. J. 44(1), 104–113 (2017)

12. Yang, D., Jiang, L., Zhang, X., Liu, H.: Simultaneous estimation of 2-DOF wrist movements based on constrained non-negative matrix factorization and Hadamard product. Elsevier Ltd. (2020)

13. Taylor, C.L., Schwarz, R.J.: The anatomy and mechanics of the human hand. Artif. Limbs 2, 22–35 (1955)

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China (No. 91948302 and No. 51875120).

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Hu, Y., Jiang, L., Yang, B. (2021). Research on Virtual Training System for Intelligent Upper Limb Prosthesis with Bidirectional Neural Channels. In: Liu, XJ., Nie, Z., Yu, J., Xie, F., Song, R. (eds) Intelligent Robotics and Applications. ICIRA 2021. Lecture Notes in Computer Science(), vol 13015. Springer, Cham. https://doi.org/10.1007/978-3-030-89134-3_29

DOI: https://doi.org/10.1007/978-3-030-89134-3_29

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-89133-6

Online ISBN: 978-3-030-89134-3

eBook Packages: Computer Science, Computer Science (R0)