Abstract

Recently, in the field of surgical visualization, augmented reality technology has shown incomparable advantages in oral and maxillofacial surgery. However, the current augmented reality methods need to develop personalized occlusal splints and perform secondary Computed Tomography (CT) scanning. These unnecessary preparations lead to high cost and extend the time of preoperative preparation. In this paper, we propose an augmented reality surgery guidance system based on 3D scanning. The system innovatively designs a universal occlusal splint for all patients and reconstructs the virtual model of patients with occlusal splints through 3D scanning. During the surgery, the pose relationship between the virtual model and the markers is computed through the marker on the occlusal splint. The proposed method can replace the wearing of occlusal splints for the secondary CT scanning during surgery. Experimental results show that the average target registration error of the proposed method is \(\text{1.38}\pm \text{0.43 mm}\), which is comparable to the accuracy of the secondary CT scanning method. This result suggests the great application potential and value of the proposed method in oral and maxillofacial surgery.

L. Ding and L. Shao—These authors contributed equally to this work and should be considered co-first authors.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The traditional method of oral and maxillofacial surgery performs preoperative planning and operation simulation according to CT images, mainly relying on the prior knowledge and experience of the surgeon [1]. In recent years, researchers have studied several oral and maxillofacial surgical guidance systems based on augmented reality technology [2,3,4,5,6]. Wang et al. [7] used integral imaging technology to realize the 3D display of the mandible virtual model, enhancing the depth perception during the surgical guidance process. Ma et al. [8] proposed an augmented reality display system based on integral videography. The system can provide a 3D image with full parallax and help surgeons directly observe the hidden anatomical structure with naked eyes. Through a half-silvered mirror, observers can directly perceive the real environment while receiving the reflected virtual content, avoiding the problem of hand–eye coordination during surgery. The marker-less image registration method used in AR scenes must usually extract the features of objects [9]. However, the process of extracting the boundary shape is complicated and time-consuming. Zhu et al. [10] designed personalized occlusal splints for each patient, achieving the superimposition of the virtual skull model on the patient by identifying fiducial marks and tracking patient displacement. This method has high precision and real-time performance that satisfy the intraoperative requirements. However, this method requires a significant amount of time to design an occlusal splint based on the CT data of each patient before the operation.

This study proposes an augmented reality system for oral and maxillofacial surgery. The proposed system uses a 3D scanner to perform 3D reconstruction. The virtual model is superimposed on the real object by tracking the reference image marker. The principal prototype of the system was tested on a skull phantom and cadaver mandibles. The results show that the accuracy of the proposed system meets the clinical needs. In addition, our method does not require patients to undergo secondary CT scans. Furthermore, we only need to design a universal occlusal splint for all patients to meet the surgery requirement. We summarize our contributions as follows. (1) A universal occlusal splint is designed for most patients, replacing the design of personalized occlusal splints for each patient, thereby greatly reducing the time and material costs. (2) Instead of secondary CT scanning, the virtual model is reconstructed by 3D scanning to achieve a “seamless” integration of the virtual and real situations. The influence of fusion precision and external factors om accuracy is analyzed from several aspects.

2 Experimental Setup

Figure 1 illustrates the setup of the proposed augmented reality display system. M represents the universal occlusal splint. A marker is pasted on the occlusal splint, which is rigidly fixed to the skull phantom. R represents the skull phantom. The size of the skull phantom is the same as that of a human skull. S represents the 3D scanner (Artec EVA, Canada), which is used to obtain the virtual model for the experiment. C represents the camera (sy8031, Shenzhen, China). The maximum frame number of video processing is 28 frames/s, and the resolution is 1080p. V represents a computer monitor, which is used to obtain the fusion view. Optical tracker N (NDI Polaris, Canada) and navigation tracking probe X are used to verify the fusion error.

3 Methods

3.1 System Framework

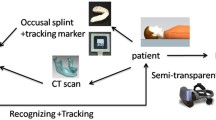

Figure 2 illustrates the framework of the proposed augmented reality display system for oral and maxillofacial surgery. The framework mainly includes the following parts: virtual model production, marker recognition, and fusion view generation.

To obtain the virtual model, a universal occlusal splint with a marker is fixed with a skull phantom or a mandible. The virtual model is reconstructed using a 3D scanner, and the pose of the virtual model is corrected by the ICP registration algorithm. During marker recognition, to obtain the matching region between the marker and the input video stream, the ORB feature points are detected and saved, and the feature descriptor is calculated. The marker coordinate is transformed to the screen coordinate through the marker. To obtain the fusion view, the relative position between the virtual model and the marker is fixed similarly as the real scene. After recognizing the marker, the pose of the marker is calculated in real time, and the virtual object’s pose is updated according to the marker. When measuring the fusion accuracy, quantitative results are obtained by the optical tracker and the navigation tracking probe.

3.2 Virtual Model Production

Universal Occlusal Splint Design

We collected the CT data of mandibles in 100 patients. Three-dimensional models of each mandible were reconstructed by threshold segmentation (Mimics) and surface reconstruction, as shown in Figs. 3(a), (b) and (c). The mandible dataset was constructed as follows:

The 3D models of each mandible are composed of a set of points, where m denotes the total number of vertices on the mandibular model, and each vertex is represented by \({\rm x},{\rm y},{\rm z}\); i indicates an index of 100 cases. The data volume of each mandible is down sampled. The statistical shape is established based on principal component analysis [11]. The average shape model is consequently obtained, as shown in Fig. 3(d), and \(f\) can be used to describe any 3D statistical shape in the mandibular training data set, as shown in Formula (2):

where \(f\) indicates the statistical shape parametric model of 100 mandible cases. \(\overline{f}\) indicates the average 3D statistical shape matrix of the mandible. \({a}_{i}\) indicates the eigenvector matrix describing the morphological changes of the first m – 1 principal components. \({b}_{i}\) indicates the matrix describing the specific model parameters. The statistical shape model contains the structural features of 100 cases and can be used as a standard mandible template. As shown in Fig. 3(e), a 3D groove model, which is suitable for most people and can be fixed with the lower teeth of the template, is designed according to the template. To facilitate the identification of the marker during the virtual reality fusion process, a connecting rod is led out from the groove model. The end of the rod is designed as a square plane where the image marker can be placed. The recognition rate is higher when the marker size is set to 50 mm × 50 mm than other sizes. Therefore, the size of the square plane is designed to be 50 mm × 50 mm. Finally, the universal occlusal splint is 3D-printed, as shown in Fig. 3(f).

Design of universal occlusal splint. (a) Segmentation and reconstruction of case1, (b) Segmentation and reconstruction of case2, (c) Segmentation and reconstruction of case100, (d) Statistical shape model of 100 cases, (e) Design universal occlusal splint based on statistical shape model, (f) 3D printed universal occlusal splint.

Virtual Model Pose Correction

The universal occlusal splint is fixed with the skull phantom to form a whole model, as shown in Fig. 4(a). The virtual skull model reconstructed by 3D scanning, which is consistent with the size of the real skull phantom, is shown in Fig. 4(b). The relative position between the virtual model and the marker is not unified in the virtual and real spaces. Therefore, their position relationship in the virtual and real spaces must be unified before obtaining the fusion view. We set up a reference plane plate with the same size as the square plane of the occlusal splint at the origin of the marker coordinate system. The reference plane plate and the occlusal splint are composed of point sets. We suppose that the point set on the reference plate is \({p}_{i}\), and the point set of the occlusal splint is represented by \({q}_{i}\). The ICP registration algorithm [12] is used to register the square plane of the occlusal splint with the reference plane. Consequently, the conversion matrix that minimizes E in Eq. (3) is obtained:

The virtual skull model is moved to the origin of the reference image marker coordinate system through transformation matrix T, as shown in Fig. 4(c). After identifying the image marker, the virtual model is correctly superimposed on the real environment according to the position and pose of the marker.

3.3 Marker Recognition

The ORB feature points [13, 14] of the image marker are extracted, and the BRIEF algorithm [15] is used to calculate the feature descriptors. All the feature points and the feature descriptors are stored as feature templates for determining whether the marker is included in the video stream frame.

Gray-scale processing is performed on the acquired input frame image to detect the feature points. The Hamming distance is used as the similarity measure to check the corresponding relationship between the marker and the input frame image. Feature matching is carried out afterward. \(Y={y}_{0}{y}_{1}\cdots {y}_{n}\) indicates the ORB descriptor marker. \(X={x}_{0}{x}_{1}\cdots {x}_{n}\) indicates the ORB feature descriptor of the input frame image. The Hamming distance is,

where \(\alpha \) represents the threshold of the Hamming distance. When the Hamming distance between the two descriptors is greater than the threshold, the two images have a large error and the video stream has no marker. When the distance is less than the threshold, the part of the input frame image containing a large amount of feature information in the video stream can be determined, and the position of the marker can be identified.

3.4 Fusion View Generation

For the augmented reality system, the goal is to superimpose the virtual model in real space. Therefore, drawing the virtual model at the target coordinate position accurately is a particularly important step for the augmented reality system. O\(\{{X}_{m},{Y}_{m},{Z}_{m}\}\), C\(\{{X}_{c},{Y}_{c},{Z}_{c}\}\), \({\{x}_{c},{y}_{c}\}\), and \(\{{x}_{d},{y}_{d}\}\) represent the marker, camera, ideal screen, and actual screen coordinates, respectively.

The marker coordinate system takes the center of the image marker as the origin. In Sect. 3.2, the coordinates of the virtual model and the marker are unified. In real space, the relative transformation matrix of the camera and the marker can be estimated by the pose of the marker. The augmented reality registration task is converted to calculate a spatial transformation relationship, mapping the relationship between the marker and screen coordinates. That is, the virtual and real space coordinates are connected. The fusion view is displayed on the actual screen coordinates. The transformation relation between the marker and camera coordinates follows the following formula:

where R denotes the rotation matrix, and T denotes the translation matrix. Ideally, the conversion relationship between the camera and ideal screen coordinates is as follows:

Given the linear distortion of camera lens, the result of pose estimation is the transformation relationship between the camera and actual screen coordinate systems:

where \({h}_{x},{h}_{y}\) indicates the scale factor. \(f\) indicates the camera’s focal length. \(h\) indicates the distortion parameter. \(({u}_{0},{v}_{0})\) indicates the camera’s principal point coordinate. These parameters can be obtained by camera calibration. If \({T}_{c2m}\) can be found by identifying the marker, then an accurate coordinate can be provided for the virtual model drawing [16].

4 Experiments and Results

4.1 Experiment on Skull Phantom

Verification Points Selection

The verification points on the obvious physical feature structure that originally exists must be selected. In addition, the selection of verification points on the skull phantom must consider whether these points have high coverage for direction and face shape when used as evaluation indexes. In the proposed system, the points covering most of the maxillofacial region are selected from the skull phantom. Two of the feature points are not selected as verification points because they are blocked by the square plane of the occlusal splint. The name and label of each verification point selected on the skull phantom are shown in Fig. 6(a). Among the points, points 1, 2, 4, 6, 7, and 9 are the verification points of the right half of the skull phantom, and points 2, 3, 5, 6, 8, and 10 are the verification points of the left half of the skull phantom.

Measurement Scheme

The processed virtual model is imported into the fusion view display module. When the marker is recognized, the virtual model is superimposed onto the real phantom. The initial scene of the skull phantom is shown in Fig. 5(a). For the virtual model that was smoothed in the process of structured light 3D reconstruction, the verification points selected on the real model were difficult to identify. Therefore, 11 verification points were added to the skeletal characteristic position of the skull phantom and highlighted. The superimposed effect is shown in Fig. 5(b). When collecting the virtual and real verification points for precision measurement, the skull virtual model data will affect the recognition of the verification points, which could lead to visual errors. Therefore, unnecessary virtual model data can be deleted, and only the verification points are retained. At this time, the effect after superimposition is shown in Fig. 5(c).

The navigation probe was pointed to each of the verification points under the optical tracker, and the probe tip positions were saved. The virtual coordinate \({P}_{virtual}=({x}_{iv},{y}_{iv},{z}_{iv})\) \( \left( {i = {{1}},{{2}},{{3 \ldots \ldots {\rm n}}}} \right) \) and real coordinate \( P_{{real}} = \left( {x_{{ir}} ,y_{{ir}} ,z_{{ir}} } \right)\left( {i = {{1}},{{2}},{{3 \ldots \ldots {\rm n}}}} \right) \) of each verification point were then recorded. The absolute value of the distance between the virtual and real verification points is taken as the fusion error (target registration error (TRE)) [7, 17, 18] and used to evaluate the accuracy of the superimposed result.

As shown in Fig. 6(b), the probe was first used to select the real space fiducial points, and the real coordinates \({P}_{real}\) were recorded. Then, the corresponding virtual fiducial points were selected according to augmented reality fusion. The virtual coordinates \({P}_{virtual}\) were recorded. We took \({P}_{real}\) and \({P}_{virtual}\) as a set of data, forming a complete set of data by recording each verification point separately in order [3]. In addition, we set the skull phantom rotation according to the angle disc to verify the fusion error under different angles. A complete set of data was obtained through measurements at intervals of 10° from –60° to + 60° (Fig. 6(c)), and all data were recorded and then analyzed and sorted. We took TRE measurements under the same conditions in triplicates, and the mean values were calculated. All measurements were completed by the same operator.

Comparison of the Accuracy in Three Methods

In reference [19], Chen et al. built a virtual skull model and designed a printed personalized occlusal splint based on the patient’s CT images. They generated an integrated virtual model by nesting the virtual occlusal splint on the teeth shape. In reference [10], the skull phantom was fixed with the designed personalized occlusal splint, and the entire CT data was obtained by secondary scanning. The virtual model for the experiment was segmented from CT images, and the artificial marker was used for augmented reality display. Given that each verification point is visible at 0°, the TRE at 0° is the most suitable for verifying the overall average fusion accuracy. The TRE results of the proposed method and the methods in references [19] and [10] are shown in Table 1.

As shown in Table 1, the average fusion error of the proposed system is equivalent to that in reference [19], verifying the feasibility of the proposed system in guiding oral and maxillofacial surgery. The system in reference [10] has the highest fusion accuracy because its integrated model is derived from a second CT scan. However, a second CT scan increases the patient’s radiation risk. The preoperative preparation in references [19] and [10] includes three processes: the CT scan, segmentation, and the reconstruction of the virtual model; personalized occlusal splint design; and printing. The proposed system saves in CT scan and modeling segmentation time. The establishment of the virtual model in the proposed system is completed by 3D scanning. Furthermore, the proposed system can reduce CT radiation exposure and the cost of medical treatment while ensuring accuracy.

Influence of Different Angles on Fusion Accuracy

The TRE of each fiducial point under different angles is measured to verify the influence of the angle om fusion accuracy. Figure 7 (a) shows the TRE of points 1, 2, 4, 6, 7, and 9 during skull phantom rotation from 0° to 60°. Figure 7(b) shows the TRE of points 2, 3, 5, 6, 8, and 10 during skull phantom rotation from −60° to 0°.

Figures 7(a) and 7(b) shows that the error of point 1 decreases as the angle increases, and the errors of points 2 and 6 increase with the angle. This phenomenon occurs because point 1 is located at the edge of the skull, and the angle between point 1 and the center line of the camera’s field of view decreases as the model’s rotation angle increases. The smaller the angle between the verification point and the center line of the camera’s field of view is, the smaller the fusion error of the point will be [19]. Given that point 10 is nearest to the marker, the error range of point 10 is smaller than those of the other points, indicating that the nearer the point to the marker is, the more stable the fusion effect would be.

4.2 Cadaveric Mandible Experiment

Four cadaveric mandible cases used in our experiment are collected from Peking Union Medical College Hospital. The measurement scheme was applied to the mandible of four cases to verify the stability and versatility of the proposed system. The feasibility of this method in practical medical applications was verified by measuring the fusion accuracy on the mandible cases.

Results and Analysis

The superimposition process between the virtual model and the real scene was performed at 0° because all verification points are visible the tracking effect the most stable at the 0° position. We selected several verification points that can be observed at the 0° position on the mandible in advance, as shown in Fig. 8(d). Four cadaveric mandible cases were selected for the experiment, and the measurement scheme employed was the same as that in the skull phantom experiment. The same universal occlusal splint was fixed on the mandible of the four cases. Then, the entire virtual models were reconstructed. After recognizing the image marker, the virtual model was superimposed onto the real environment, forming the “integrated image” on the monitor. The initial states of the mandible and the virtual model reconstructed by structured light are shown in Figs. 8(a) and 8(b), respectively, and the effect of overlay is shown in Fig. 8(c). The feature points are highlighted, the virtual model display is hidden, and only the verification points used to verify the accuracy of virtual real fusion are retained. The fusion effect is shown in Fig. 8(d). The results of TRE are shown in Fig. 9. The TREs of cases 1, 2 and 3 are less than 2 mm, satisfying the surgery requirement. The fluctuation of the TRE curve in Fig. 9 can be attributed to the following: the errors caused by manual measurement, image recognition, and the environmental lighting changes.

5 Conclusion

This paper proposes a new augmented reality display system in oral and maxillofacial surgery. According to the statistical shape model of the mandible of 100 patients, a general occlusal splint was designed. The virtual model of the real phantom and cadaveric mandible cases were obtained by 3D scanning and positioned and tracked in real space based on the image marker. The fusion accuracy and feasibility of the proposed system were verified by comparison with the method in reference [19]. Surgeons can mark the planned surgical plan and the path of the surgery on the patient’s 3D model before the operation using the proposed system. During the operation, the virtual model and the actual surgical area fusion view can be obtained easily when patients wear a universal occlusal splint. The trajectory marked by the doctor in advance will be displayed on the planned position in the real scene to assist the doctor in the operation. Experimental results show that the accuracy of the proposed system is within the allowable range of actual surgical error, with an average fusion error of 1.38 \(\pm \) 0.43, proving the reliability of the proposed system. In addition, personalized splints and secondary CT scanning are no longer necessary in the proposed system. In summary, the proposed system has development potential for assisting surgeons in improving surgical accuracy and reducing operational difficulty.

References

Chana, J.S., Chang, Y.M., Wei, F.C.: Segmental mandibulectomy and immediate free fibula osteoseptocutaneous flap reconstruction with endosteal implants: an ideal treatment method for mandibular ameloblastoma. Plast. Reconstr. Surg. 113(1), 80–87 (2004)

Ma, L., et al.: Augmented reality surgical navigation with accurate CBCT-patient registration for dental implant placement. Med. Biol. Eng. Comput. 57(1), 47–57 (2018). https://doi.org/10.1007/s11517-018-1861-9

Gao, Y., Lin, L., Chai, G.: A feasibility study of a new method to enhance the augmented reality navigation effect in mandibular angle split osteotomy. J. Cranio-Maxillofac. Surg. (2019)

Badiali, G., Ferrari, V., Cutolo, F.: Augmented reality as an aid in maxillofacial surgery: Validation of a wearable system allowing maxillary repositioning. J. Craniomaxillofac. Surg. 42(8), 1970–1976 (2014)

Mamone, V., Ferrari, V., Condino, S.: Projected augmented reality to drive osteotomy surgery: implementation and comparison with video see-through technology. IEEE Access 8, 169024–169035 (2020)

Azimi, E., Song, T., Yang, C., et al.: Endodontic Guided treatment using augmented reality on a head-mounted display system. Healthc. Technol. Lett. 5(5), 201–207 (2018)

Wang, J., Suenaga, H., Liao, H.: Real-time computer-generated integral imaging and 3D image calibration for augmented reality surgical navigation. Comput. Med. Imaging Graph. 40, 147–159 (2015)

Ma, L., Fan, Z., Ning, G., Zhang, X., Liao, H.: 3D visualization and augmented reality for orthopedics. In: Zheng, G., Tian, W., Zhuang, X. (eds.) Intelligent Orthopaedics. Advances in Experimental Medicine and Biology, vol. 1093, pp. 193–205. Springer, Singapore (2018). https://doi.org/10.1007/978-981-13-1396-7_16

Wang, J., Suenaga, H.: Video see-through augmented reality for oral and maxillofacial surgery. Int. J. Med. Robot. Comput. Assist. Surg. (2017)

Zhu, M., Liu, F., Chai, G.: A novel augmented reality system for displaying inferior alveolar nerve bundles in maxillofacial surgery. Sci. Rep. 7, 42365 (2017)

Lüthi, M.: Statismo-a framework for PCA based statistical models. Insight 1,1–18 (2012)

Besl, P.J., Mckay, H.D.: A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 14(2), 239–256 (1992)

Cui, X.: Augmented Reality Assistance System Framework Research Based on Boeing 737 Aircraft Pre-flight Maintenance, pp. 21–27. Civil Aviation University of China, Tianjin (2019)

Rublee, E., Rabaud, V., Konolige, K.: ORB: an efficient alternative to SIFT or SURF. In: International Conference on Computer Vision. IEEE (2012)

Calonder, M., Lepetit, V., Strecha, C., Fua, P.: BRIEF: binary robust independent elementary features. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010. LNCS, vol. 6314, pp. 778–792. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15561-1_56

Fischler, M.A., Bolles, R.C.: Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 24(6), 381–395 (1981)

Jiang, T., Zhu, M., Chai, G., Li, Q.: Precision of a novel craniofacial surgical navigation system based on augmented reality using an occlusal splint as a registration strategy. Sci. Rep. 9(1), 501 (2019)

Murugesan, Y.P., Alsadoon, A., Manoranjan, P.: A novel rotational matrix and translation vector algorithm: geometric accuracy for augmented reality in oral and maxillofacial surgeries. Int. J. Med. Robot. Comput. Assist. Surg. (2018)

Chen, L., Li, H., Shao, L.: An augmented reality surgical guidance method based on 3D model design. In: The 20th National Conference on Image and Graphics (NGIG), vol. 144, pp. 28–30 (2020)

Acknowledgement

This work was supported by the National Key R&D Program of China (2019YFC0119300), the National Science Foundation Program of China (62025104, 61901031), and Beijing Nova Program (Z201100006820004) from Beijing Municipal Science & Technology Commission.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Ethics declarations

The authors declare that there are no conflicts of interest related to this article.

Rights and permissions

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Ding, L. et al. (2021). Novel Augmented Reality System for Oral and Maxillofacial Surgery. In: Peng, Y., Hu, SM., Gabbouj, M., Zhou, K., Elad, M., Xu, K. (eds) Image and Graphics. ICIG 2021. Lecture Notes in Computer Science(), vol 12889. Springer, Cham. https://doi.org/10.1007/978-3-030-87358-5_6

Download citation

DOI: https://doi.org/10.1007/978-3-030-87358-5_6

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-87357-8

Online ISBN: 978-3-030-87358-5

eBook Packages: Computer ScienceComputer Science (R0)