Abstract

As organizations grow, it is often challenging to maintain their level of efficiency. It can also be difficult to identify, prioritize, and resolve inefficiencies in large, hierarchical organizations. Collaborative crowdsourcing systems can enable workers to contribute to improving their own organizations and working conditions, saving costs and increasing worker empowerment. In this paper, we briefly review relevant research and innovations in collaborative crowdsourcing and describe our experience researching and developing a collaborative crowdsourcing system for large organizations. We present the challenges that we faced and the lessons we learned from our effort. We conclude with a set of implications for researchers, leaders, and workers to support the rise of collective intelligence in the workplace.


Keywords

- Crowdsourcing

- Collective intelligence

- Decision support systems

- Collaborative crowdsourcing

- Continuous improvement

- Efficiency

1 Introduction

As organizations grow larger, the layers of administrative, legal, and managerial roles tend to grow proportionately. These added layers inevitably increase complexity and the risk of organizational inefficiencies. As a result, large organizations often hire external assistance to help them operate more efficiently. Public and private organizations spend billions of dollars annually assessing their performance and investing in external entities to identify and resolve organizational inefficiencies. External consulting firms are expensive, require extensive employee time, and see only a snapshot of the organization’s dynamics. Collaborative crowdsourcing systems can enable workers to actively and continuously participate in improving their own organization, saving costs and increasing worker empowerment.

We have developed GECKOS (Generating Employee Crowdsourced Knowledge for Organizational Solutions) to enable large organizations to leverage their own workers to continuously identify and resolve inefficiencies. GECKOS leverages the latest research findings on collaborative crowdsourcing to enable workers to anonymously contribute issues they are experiencing at work and participate in resolving them. Workers can collaborate with one another to prioritize which issues to address first, generate ideas to address the issue(s), select the highest-quality ideas for resolving each issue, and synthesize good ideas into holistic solution proposals.

2 Background: Collaborative Crowdsourcing Research

The development of GECKOS was motivated by recent innovations in collaborative crowdsourcing, which presented a unique opportunity to address the inefficiency challenges faced by large, hierarchical organizations. Our research question lies at the intersection of this problem and opportunity: How can we design a collaborative-crowdsourcing system that successfully leverages workers within an organization to enhance its efficiency?

Crowdsourcing has gained popularity as an approach that enables large numbers of contributors (often from outside an organization) to help solve problems or complete tasks in a variety of domains. Research has found that a crowd can in some instances outperform domain experts, even when crowd members individually do not possess any expertise and are not particularly accurate [1]. Historically, crowdsourcing approaches have primarily focused on eliciting individual, independent inputs that are either aggregated or used to select the best individual contribution within the crowd (e.g., [2]). However, recent research has demonstrated the value of algorithms and workflows that structure and support collaboration among contributors.

Examples of workflows supporting collaboration include MicroTalk, which has been shown to enhance the quality of generated responses by structuring collaboration among contributors [3]. The MIT Collaboratorium provides a platform to support collaborative deliberation around complex challenges [4]. The Collaboratorium provides a structure to organize, think about, and select issues and ideas to address issues. Bag of Lemons is a relatively simple innovation that posits that it is easier for humans to select the worst ideas (i.e., bag of lemons) than it is to select the best ideas (i.e., bag of stars) and this can be leveraged when crowdsourcing idea selection [5]. The Mechanical Novel workflow provides support to enable crowd contributors to collaborate to complete complex, generative tasks like writing a fictional novel [6].
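To make the Bag of Lemons idea concrete, the following Python sketch filters a pool of ideas by counting how many participants flagged each one as a "lemon" and keeping those with the fewest flags. It is a simplified illustration under our own assumptions, not the exact procedure of Klein and Garcia [5] or the GECKOS implementation: the function name `bag_of_lemons`, the `keep_fraction` parameter, and the rule that each participant flags a given idea at most once are hypothetical.

```python
from collections import Counter


def bag_of_lemons(ideas, lemon_ballots, keep_fraction=0.5):
    """Keep the ideas that the fewest participants flagged as "lemons".

    ideas: list of idea IDs in the pool.
    lemon_ballots: one list per participant of the idea IDs they judged worst.
    keep_fraction: hypothetical share of the pool to retain.
    """
    lemon_counts = Counter()
    for ballot in lemon_ballots:
        # Count each idea at most once per participant.
        lemon_counts.update(set(ballot))
    # Fewest lemons first; sorted() is stable, so ties keep their original order.
    ranked = sorted(ideas, key=lambda idea: lemon_counts[idea])
    # Always keep at least one idea.
    n_keep = max(1, round(len(ideas) * keep_fraction))
    return ranked[:n_keep]


ideas = ["a", "b", "c", "d"]
ballots = [["a", "b"], ["a"], ["a", "c"]]
print(bag_of_lemons(ideas, ballots))  # ['d', 'b']
```

Because each participant only needs to spot a few clearly weak ideas rather than rank the whole pool, the task stays light; the retained ideas could then be passed to a more careful rating or deliberation stage.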

Another set of relevant research and innovations comes from anonymity/privacy research. In order for workers to feel comfortable contributing their honest input, they need to trust that they can contribute without fear of repercussion. AnonRep provides an approach to keep users anonymous while also holding them accountable [7]. Anonymous communication networks can also help ensure that workers trust that their contributions cannot be tracked by management. These networks include free and open-source options (e.g., torproject.org) as well as experimental options [8].

3 GECKOS – Generating Employee Crowdsourced Knowledge for Organizational Solutions

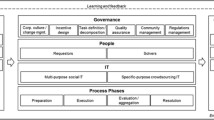

In designing GECKOS, we leveraged existing innovations and research to create a sound, functional tool to support large organizations. As we developed our initial design concepts, we received feedback from partner organizations and from our sponsor. We also interviewed workers at partner organizations to better understand how they identified and resolved issues. Our initial GECKOS concept is shown in Fig. 1.

We incrementally developed an experimental testbed and conducted a series of experiments in real organizations, with real workers, real issues and ideas, and real solutions. These experiments were designed to provide value to the company while also producing sound experimental results that contribute to the scientific body of knowledge. It was critical that the issues (i.e., stimuli) presented to workers (i.e., participants) were real issues that workers would report if the system were implemented in their organization. We elicited over 100 issues from workers at our partner organization and used them to populate an experimental task that mimicked what participants would have generated with a real GECKOS implementation. Ensuring that the results of our experiments provided value to the leadership of our partner organization turned out to be critical to developing an increasingly efficient testbed for researching collaborative crowdsourcing in organizations.

Currently, we have a complete, functional prototype of GECKOS (Fig. 2). The system draws on many of Majchrzak and Malhotra’s insights [9], especially regarding the visibility of other users’ input and its importance to both engagement and collaboration. The laboratory versions of the collaborative-crowdsourcing workflows reviewed in Sect. 2 proved too constraining and brittle to be feasible within GECKOS, but we incorporated features of these research innovations (e.g., argumentation framework, Bag of Lemons, Mechanical Novel). Priority was given to enabling users to engage and disengage as desired while supporting high-quality collective outputs.

4 Challenges and Lessons Learned

In this section, we share some of the lessons we learned from developing and testing GECKOS. We believe our experience and lessons learned can provide valuable information to researchers, leaders, and workers interested in leveraging collaborative crowdsourcing to enhance organizational efficiency.

4.1 Designing a Crowdsourcing System that Can Successfully Support Collective Action by Workers to Improve Their Organization

Translating Laboratory Research Findings into Applied/Practical Design for Organizations.

When we began designing GECKOS, our priority was to support interaction among contributors and to maximize the quality of the collective output. We leveraged innovations like the MicroTalk workflow [3], which has been shown to enhance the quality of the collective output compared with not using argumentation. For example, our original design included a stage in which users who reported similar issues worked together in small groups (e.g., three users) to frame the issue for the rest of the organization. We tested our implementation of the MicroTalk logic for issue framing internally with our research team, and it worked well. While conceptually sound, however, this approach required a level of commitment from users that made it brittle and impractical. Such requirements are too restrictive for a collaborative crowdsourcing system in which users self-select to contribute and may stop contributing at any time. In that setting, we must design for resilience. We achieved this by creating a more open, less structured interface that allowed users to dynamically see contributions from other users and add their own input at a time of their own choosing.

Our original design also prevented users from seeing other participants’ input in an organic manner and constrained when they could contribute. When designing a system in which users must self-select to contribute, it is key to design for engagement. Being able to see other users’ input, even when one is not being asked to contribute, makes the system more attractive and engaging. This visibility also allows workers to self-select into the individual topics that interest them.

A more open, less structured interface also has drawbacks. For example, seeing other users’ input upfront can bias the contributions of subsequent users [10]. Workflows can help strike a balance between minimizing bias and benefiting from others’ responses, but they need to be flexible enough to allow users to disengage without affecting the outcome. This balance can be difficult to strike in collaborative-crowdsourcing systems. In one of our experiments, we explored a workflow that incorporated features of the Bag of Lemons approach [5]. Users in the experimental condition completed the workflow before accessing the workspace. The workflow provided some benefits for idea quality without substantially reducing engagement once users accessed the workspace.

When users self-select to participate, designing for resilience and engagement becomes a higher priority than designing to maximize output quality. Workflows that enhance quality can still be leveraged, but they cannot be too restrictive. The workflow we found useful involved only an extra step before users accessed the open interface.

Balancing User Anonymity with Some Users’ Desire for Recognition.

The ability to contribute anonymously is critical for a system like GECKOS. Users must be able to contribute anonymously and they must trust that this is the case. In our interviews, workers expressed skepticism about the anonymity of existing methods used by their organizations (e.g., employee surveys). While we did not implement all of the anonymity measures during this phase of the effort, we did create a system that prevented us from being able to identify contributors.

While we erred on the side of ensuring anonymity, some users expressed a desire to be identified so that their leaders could recognize them for their contributions. To the extent that the inability to be recognized could reduce participation, this should be taken into consideration. In our current version, we intentionally designed the system so that we could not track users in order to reward them or share their names with leadership. While innovations exist that could enable this (e.g., AnonRep [7]), tracking users to identify them for recognition will negatively affect the trust of users who are concerned about anonymity.

Challenge of Synthesizing Ideas into a Comprehensive Solution.

Collaborative crowdsourcing is best suited to eliciting information from contributors; prioritizing, ranking, or rating; or even deliberating and arguing. However, synthesizing information across ideas into a comprehensive solution is difficult to accomplish through a collaborative crowdsourcing process. A workflow like Mechanical Novel involves too many steps and iterations, with temporal constraints, to be practical in organizational settings. In this effort, we explored a couple of alternatives, including adding a step completed by leadership or by smaller teams of workers, who would create a set of proposals for the crowd to vote on or prioritize. This is an improvement over most existing civic-engagement processes, which typically gather ideas from contributors who then have no voice in selecting among the proposed solutions. More research is needed to develop lean workflows that support solution development.
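When leadership or a small team drafts proposals and the crowd then prioritizes them, the workers’ individual rankings must be combined into a single ordering. The paper does not specify an aggregation rule; the following Python sketch uses a Borda count purely as one common illustrative choice, not as the method used in GECKOS. The function name `borda_count` and the proposal IDs are hypothetical.

```python
from collections import defaultdict


def borda_count(ballots):
    """Aggregate ranked ballots into one ordering using a Borda count.

    ballots: one list per worker of proposal IDs, ordered best-first.
    On a ballot of length n, the proposal in 0-based position i earns n - 1 - i points.
    """
    scores = defaultdict(int)
    for ballot in ballots:
        n = len(ballot)
        for position, proposal in enumerate(ballot):
            scores[proposal] += n - 1 - position
    # Highest total first; ties are broken by proposal ID so the result is deterministic.
    return sorted(scores, key=lambda proposal: (-scores[proposal], proposal))


ballots = [
    ["P1", "P2", "P3"],
    ["P2", "P1", "P3"],
    ["P2", "P3", "P1"],
]
print(borda_count(ballots))  # ['P2', 'P1', 'P3']
```

A Borda count rewards proposals that are ranked consistently high across many workers, rather than only the proposal that is most often a first choice.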

4.2 Conducting Research with, and Providing Value to, Our Partner Organizations and Their Workers

Establishing Partnerships and Developing an Experimental Testbed.

One accomplishment of this effort was establishing an experimental testbed for conducting collaborative-crowdsourcing research with a partner organization. Majchrzak and Malhotra [9] described how challenging and time-consuming it is to create and maintain an experimental testbed for this type of research. We established partnerships with three separate organizations and were able to build a successful experimental testbed with the smallest of the three (i.e., fewer than 1,000 workers). Its size likely played a role in the success of this partnership. In the other two cases, a health-care system with over 10,000 workers and a Fortune 100 company, the same inefficient processes that this effort aims to address (e.g., legal departments with rigid criteria that do not accommodate unusual or innovative agreements) likely prevented the partnership from reaching the experimental testbed stage.

Developing and nurturing this partnership involved a gradual process of gaining buy-in from leadership and workers at the partner organization. Buy-in was hardest to obtain at first, before the system was fully developed. As we experimented with using GECKOS to prioritize issues encountered by employees, generate ideas to resolve top-priority issues, and so on, the value of the system became apparent to leadership, and their support and enthusiasm grew. This experience provided valuable insight into how to develop a fruitful collaboration and set up an experimental testbed within a limited amount of time. We now have a process that allows us to configure and conduct experiments with over 100 workers at this partner organization within a few weeks. This capability is invaluable when developing and testing crowdsourcing tools.

Research Limitations.

Some challenges relate specifically to conducting research with workers within organizations. First, follow-up experiments that explore variations of an experiment, or that probe what its results mean, cannot reuse the same issues and/or ideas as the first experiment; they need at least a separate issue or set of issues. Otherwise, participants would notice the redundancy in the task content and would likely not remain genuinely engaged. For example, if we ran experiments while workers were generating ideas to improve the company’s internal communication strategy, and we wanted to test a follow-up hypothesis with a modified workflow, we could not apply the modified workflow to the same issue. While the value of realistic, motivating stimuli is worth this sacrifice, the limitation makes answering follow-up questions considerably more challenging with a testbed like this one. Ideally, this challenge could be addressed by including a large number of partner organizations in the testbed.

Another important challenge is assessing the quality of the collective output. We wanted to understand which approaches produce better collective outputs, but establishing output quality is not straightforward. For example, participants may generate ideas to resolve an issue they are experiencing at work, or prioritize issues reported by their peers for resolution. What ground truth should the collective output be compared against? We addressed this problem by drawing a new sample from the same population of workers (i.e., a panel of judges) to rank the collective outputs obtained from both methods based on perceived quality (e.g., idea quality, prioritization quality). The results showed some promise. For example, the panels of judges used in our pre-tests with Amazon Mechanical Turk® workers and in our experiments with workers appeared to produce consistent results. In one experiment, we created a ‘ground truth’ for issue priority based on the frequency with which users reported each issue, and the panel-of-judges results were also consistent with this ‘ground truth’. The panel-of-judges approach provides some value, but additional measures of collective-output quality that are usable with real-world stimuli are needed.
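The consistency check between a panel of judges and a frequency-based ‘ground truth’ can be illustrated with a rank correlation. The paper does not state which statistic was used; the following Python sketch assumes Spearman’s rank correlation, ρ = 1 - 6Σd²/(n(n² - 1)), which is valid for rankings without ties. The function names and issue labels are hypothetical, and ties in report frequency are broken alphabetically only to keep the example simple.

```python
from collections import Counter


def frequency_ranking(reports):
    """Rank issues by how often workers reported them, most frequent first.

    Ties are broken alphabetically here for simplicity (an assumption).
    """
    counts = Counter(reports)
    return sorted(counts, key=lambda issue: (-counts[issue], issue))


def spearman_rho(rank_a, rank_b):
    """Spearman's rank correlation between two complete rankings without ties.

    Each ranking is a list of the same item IDs ordered best-first.
    """
    n = len(rank_a)
    if n < 2 or len(set(rank_a)) != n or len(rank_b) != n or set(rank_a) != set(rank_b):
        raise ValueError("rankings must list the same n >= 2 distinct items")
    position_b = {item: i for i, item in enumerate(rank_b)}
    # Sum of squared differences between each item's positions in the two rankings.
    d_squared = sum((i - position_b[item]) ** 2 for i, item in enumerate(rank_a))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Hypothetical data: each entry is one worker's report of an issue.
reports = ["parking", "email", "parking", "badges", "email", "parking"]
ground_truth = frequency_ranking(reports)  # ['parking', 'email', 'badges']
panel = ["parking", "badges", "email"]     # hypothetical panel-of-judges ranking
print(spearman_rho(panel, ground_truth))   # 0.5
```

A value near 1 indicates that the panel’s ranking closely matches the frequency-based ranking, a value near 0 indicates little agreement, and a negative value indicates opposite orderings.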

5 The Future of Collaborative Crowdsourcing in the Workplace

The work described in this paper highlights both the inherent challenge of developing a successful collaborative-crowdsourcing system for large organizations and its potential to democratize management/leadership and support more efficient and adaptive organizations. We present the implications of this work to researchers/technologists, leaders/managers, and workers at organizations separately.

Implications for Researchers and Technologists.

Conducting research on collaborative-crowdsourcing systems using the real issues and ideas of real workers in real organizations poses challenges, limits experimental control, and constrains design. However, partnering with mid-size organizations, which have more flexibility than extremely large ones, can enable researchers and technologists to establish a testbed for experimentation. The stimuli and experiments can be designed so that the organization’s leadership sees the value of the partnership even before the promised system is delivered. Once workers are engaged and are presented with issues and ideas they recognize as important, it is much easier to engage both leadership and workers efficiently.

Implications for Leaders and Managers.

The success of collaborative-crowdsourcing systems within organizations is not merely a technological issue. Leaders and managers within organizations play a key role in enabling these systems to achieve their potential or preventing them from doing so. In the book Team of Teams, General McChrystal discusses what a more distributed, decentralized, adaptive leadership might look like [11]. To fully capture the impact that these systems could have on managing and leading large organizations, leaders and managers will have to be willing to give up some decision-making and problem-solving control and trust the collective wisdom of their workers. Without this mindset in leadership and management, the impact will be dampened and the potential of these systems will not be fully realized.

Implications for Workers and Worker Trust.

Established ways of doing things in organizations and systemic or cultural factors (e.g., an individualistic society) also present challenges regarding how individual users contribute to the system. For example, we need to ensure that workers can trust that their input is anonymous and that it is safe to contribute. Mainstream management practices that concentrate decision-making authority and responsibility in a few hands engender mistrust and limit workers’ ability to truly participate in important decisions, and workers are accustomed to operating in this environment. Just as placing a fishbowl fish in a bathtub does not mean the fish will swim through the whole bathtub, the virtual walls of how workers are used (and expected) to engage within organizations can limit the impact of collaborative-crowdsourcing systems. The success of these systems also relies on workers giving them a genuine try, adopting a collectivistic mindset, and working to advance the collective wellbeing and efficiency of their organization.

Ultimately, the goal of GECKOS is to democratize organizational management in a way that serves both workers and leadership. New technological advances and scientific discoveries can support more inclusive, participative, democratic, and cooperative organizations. Collaborative-crowdsourcing systems like GECKOS can help realize this future.

References

1. Surowiecki, J.: The Wisdom of Crowds. Anchor, Chicago (2005)

2. Jeppesen, L.B., Lakhani, K.R.: Marginality and problem-solving effectiveness in broadcast search. Organ. Sci. 21, 1016–1033 (2010)

3. Drapeau, R., Chilton, L., Bragg, J., Weld, D.: MicroTalk: using argumentation to improve crowdsourcing accuracy. In: Proceedings of the AAAI Conference on Human Computation and Crowdsourcing, vol. 4, no. 1 (2016)

4. Klein, M., Iandoli, L.: Supporting Collaborative Deliberation Using a Large-Scale Argumentation System. MIT Sloan Paper No. 4691–08 (2008). http://ssrn.com/abstract=1099082

5. Klein, M., Garcia, A.C.B.: High-speed idea filtering with the bag of lemons. Decis. Support Syst. 78, 39–50 (2015)

6. Kim, J., Sterman, S., Cohen, A.A.B., Bernstein, M.S.: Mechanical novel: crowdsourcing complex work through reflection and revision. In: Plattner, H., Meinel, C., Leifer, L. (eds.) Design Thinking Research. UI, pp. 79–104. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-60967-6_5

7. Zhai, E., Wolinsky, D.I., Chen, R., Syta, E., Teng, C., Ford, B.: AnonRep: towards tracking-resistant anonymous reputation. In: 13th USENIX Symposium on Networked Systems Design and Implementation (NSDI 16) (2016)

8. Barman, L., et al.: PriFi: a low-latency and tracking-resistant protocol for local-area anonymous communication. In: Proceedings of the 2016 ACM on Workshop on Privacy in the Electronic Society, pp. 181–184 (2016)

9. Majchrzak, A., Malhotra, A.: Unleashing the Crowd. Springer International Publishing, Berlin (2020)

10. Eickhoff, C.: Cognitive biases in crowdsourcing. In: Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining, pp. 162–170 (2018)

11. McChrystal, S., Collins, T., Silverman, D., Fussell, C.: Team of Teams: New Rules of Engagement for a Complex World. Penguin (2015)

Acknowledgments

The work described was funded through an Office of Naval Research (ONR) Small Business Innovation Research grant (N00014–19-C-1011).

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Mateo, J.C., McCloskey, M.J., Grandjean, A., Cone, S.M. (2021). Leveraging Collaborative Crowdsourcing to Empower Workers to Improve Their Organizations. In: Kantola, J.I., Nazir, S., Salminen, V. (eds) Advances in Human Factors, Business Management and Leadership. AHFE 2021. Lecture Notes in Networks and Systems, vol 267. Springer, Cham. https://doi.org/10.1007/978-3-030-80876-1_43

DOI: https://doi.org/10.1007/978-3-030-80876-1_43

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-80875-4

Online ISBN: 978-3-030-80876-1

eBook Packages: Engineering, Engineering (R0)