Abstract

Concepts shape experience and create understanding. Accordingly, a key question is how concepts are created, represented, and used. According to embodied cognition theories, concepts are grounded in neural systems that produce experiential and motor states. Concepts are also contextually situated and thus engage sensorimotor resources in a dynamic, flexible way. Finally, on that framework, conceptual understanding unfolds in time, reflecting embodied as well as linguistic and social influences. In this chapter, we focus on concepts from the domain of affect and emotion. We highlight the context-sensitive nature of embodied conceptual processing by discussing when and how such concepts link to sensorimotor and interoceptive systems. We argue that embodied representations are flexible and context dependent. The degree to which embodied resources are engaged during conceptual processing depends upon multiple factors, including an individual’s task, goals, resources, and situational constraints.

Concepts structure our knowledge, and our knowledge influences how we perceive, interpret, and experience the world. This makes understanding how concepts are created, represented, and used a central issue in psychology and cognitive science. According to theories of embodied cognition, our concepts are grounded in neural systems that produce perceptual and motor states (Barsalou, 1999, 2008). For instance, understanding the concept of APPLE involves accessing modality-specific information about our experiences with apples: what they look like, what they feel like in our hands, the sound they make when we bite into them, their taste, how they influence feelings of hunger, and so on. Understanding emotion concepts likewise involves accessing modality-specific information. For example, the concept of HAPPINESS includes information about how we experience our own internal and external bodily states when we feel happy, as well as how happy people look, sound, and act.

Embodied theories are typically contrasted with the more traditional amodal theories of semantic memory and conceptual processing that developed out of the mind-as-computer metaphor that dominated early cognitive science (e.g., Collins & Loftus, 1975). According to the traditional perspective, modal experiences are transformed into abstract, symbolic representations. On this view, information about what an apple looks or tastes like remains available to conceptual knowledge. Critically, however, that knowledge is not represented in a modal format. Instead, it is represented in an abstract, amodal manner, and the abstractness of the representation is important. Indeed, on the traditional view, the amodal nature of concepts is precisely what makes them powerful (Fodor, 1975). After all, what allows us to understand the idea of an “apple”, “knife”, “anger”, “happiness”, “justice”, or “revenge” is moving beyond our individual experiences of these things to extract their abstract conceptual “cores”. On this amodal view, understanding the essence of “happiness” involves apprehending its abstract features, just as understanding the essence of “even number” disregards whether the number is 2, 18, or 586, whether it is displayed in Roman numerals, or whether it is written in pink.

Conceptual meaning is often indexed by our vocabulary; e.g., the concept of HAPPINESS is indexed by the word “happiness.” One of the challenges for theories of conceptual processing and representation is explaining how arbitrary symbols, such as the word forms “anger” or “happiness”, obtain their meaning. For the conceptual system to support meaning, the symbols in the mind need to be connected to their content; that is, they need to be grounded in some way (Harnad, 1990). On the traditional approach in cognitive science, amodal symbols are meaningful by virtue of their role in a larger compositional system governed by truth-preserving operations (Fodor, 1975). One argument raised against these traditional approaches, however, is that it is not clear how meaning enters the system, as illustrated by Searle’s (1980) Chinese Room thought experiment. Because, on traditional accounts, the meaning of abstract symbols derives from their relationship to other abstract symbols, such accounts have been likened to trying to learn a foreign language from a dictionary that defines new words only in terms of other words from the same unknown language.

We can type “What color are apples?” into a sophisticated chatbot and it can provide a sensible response, such as “Red, but not all apples are red…” However, that knowledge does not seem on par with the understanding of someone who has actually seen apples, because the chatbot’s conceptual meaning is not grounded. Similarly, Mary, the neuroscientist who has never seen color, appears to lack something essential in her understanding of color, even if she knows that firetrucks and stop signs are typically red. Does someone who has never experienced happiness (or pain, love, or sexual desire) truly know its core meaning?

This grounding problem is less acute from an embodied perspective, on which symbols are grounded because they are linked to sensorimotor information (Barsalou, 2008). The relationship to the referent is part of the symbol’s form. The concept of HAPPINESS, for example, recruits neural resources involved in the bodily experience of happiness. When people think about the meaning of happiness, they simulate a relevant experience of happiness, either retrieved from memory or constructed from currently relevant resources. This does not mean that when we think and talk about apples, we activate the entirety of our apple-related sensorimotor information. Nor does it imply that we access a context-invariant set of features that constitutes a semantic core.

Rather, the activation of embodied content varies as a function of contextual factors (Winkielman et al., 2018). Throwing an apple involves different bodily experiences than eating one, and, consequently, so does thinking about throwing an apple versus thinking about eating one. In many (but not all) situations, the goal of conceptualization is simply to perform a task with as little effort as possible. In such cases, we suggest that people typically adopt a situated sensorimotor satisficing strategy, recruiting only as much embodied information as the task requires. This emphasis on the context-dependent nature of embodied information is reflected in the name of our model, CODES, which stands for context-dependent embodied simulation (Winkielman et al., 2018).

In this chapter, we focus primarily on emotion concepts, their grounding, and the context-dependent nature of sensorimotor activations during conceptual processing. Emotion concepts are interesting because they include concrete sensorimotor features as well as abstract relational ones. Emotions have perceptual features associated with external bodily changes, such as action tendencies and facial expressions. Emotions also have features associated with internal bodily changes, such as changes in heart rate or breathing. They certainly have a phenomenal component: privately experienced feelings. Finally, emotions have abstract relational features. For example, a particular feeling of anger has a cause and a result. Likewise, anger has at least one experiencer and often one or more targets or recipients (emotions are about someone or something). We begin below with the grounding problem and a discussion of how emotion concepts can be grounded in bodily experience. Next, we discuss the context-dependent nature of the activation of sensorimotor information in the processing of emotion concepts. Finally, we return to the difficult question of abstraction and non-perceptual aspects of emotion and other concepts (Borghi et al., 2017).

Grounding Emotion Concepts in the Neural States Associated with Action and Perception

Emotion concepts vary in complexity, ranging from the deceptively simple, such as GOOD and BAD, to the cognitively sophisticated, such as SCHADENFREUDE, to the highly abstract, such as BEAUTY. During development, children’s concepts are closely aligned with their rudimentary affective reactions to stimuli (their “yeah” and “yuck” experiences), and their emotion concepts are limited to basic emotions, such as HAPPINESS, ANGER, SADNESS, and DISGUST (Harris, 2008). While these emotion concepts may lack sophistication, they are nonetheless abstract. Children understand both that emotions are mental states and that the same emotion can arise from perceptually dissimilar causes (Harris, 2008). So, whereas concrete concepts such as APPLE can be grounded in relatively similar sensorimotor experiences, abstract emotional concepts such as HAPPINESS cannot.

Note, however, that even though an emotion can be elicited by vastly different stimuli, the same emotion tends to feel similar across its occurrences. There is a family resemblance among the feelings associated with different instances of happiness, for instance. These feelings can be traced to neural substrates involved in the representation of bodily states (Craig, 2008). There is debate about the extent to which self-reported emotional states can be predicted by distinct patterns of autonomic activity (Barrett, 2019; Kragel & LaBar, 2013). Although there is reasonable agreement that consciously experiencing one’s emotional state involves perceiving one’s body, the internal state cannot be the entire story. For one thing, similar physiological states can be construed as different emotions depending on the perceived events linked to them (Schachter & Singer, 1962). For instance, the arousal of fear can be misattributed to sexual arousal (Dutton & Aron, 1974). Accordingly, recent accounts of emotion highlight the role of situated conceptualization in the construction of emotional experience (Wilson-Mendenhall et al., 2013). That is, while HAPPINESS may always be grounded in some embodied experience, the specific combination of modalities that creates the experience varies with the situation (e.g., happiness after scoring an exciting goal differs from happiness on a calm, quiet evening).

Additionally, while the perception of internal states can be an embodied resource for grounding emotion concepts, one needs more than interoceptive information to learn the meaning of emotion words (Pulvermüller, 2018). Although a mother can tell her child that she feels happy, the child cannot directly experience the mother’s happiness. However, because the child can observe the mother’s actions and vocalizations, these observable features may help bridge the gap between consciously perceived internal states, concepts, and language (Pulvermüller, 2018). In fact, because action is a fundamental aspect of emotion, it can be a source for the experiential grounding of emotion concepts.

Different action tendencies are associated with different emotions (Frijda, 1986). For instance, happiness and anger both motivate approach behaviors, while disgust and fear motivate avoidance and withdrawal. The neural organization of motivation is also influenced by hand dominance, a characteristic that determines how we perform many actions. This makes sense if approach motivation is associated with actions of the dominant hand and withdrawal or defensive motivation with actions of the non-dominant hand. Accordingly, in right-handed individuals, approach-related emotions are associated with activity in the left frontotemporal cortex, while withdrawal-related emotions are associated with activity in the right frontotemporal cortex (Harmon-Jones et al., 2010). For left-handers, this motivational lateralization is reversed (Brookshire & Casasanto, 2012). Moreover, hand dominance also predicts the extent to which transcranial magnetic stimulation of the left or right dorsolateral prefrontal cortex increases or decreases feelings of approach-related emotions (Brookshire & Casasanto, 2018).

Another important type of motor activity related to emotions is facial expression. Different facial expressions are associated with different emotions (Ekman & Friesen, 1971), and their motor profiles afford fitness-enhancing behaviors. For example, facial expressions of disgust, such as nose wrinkling, reduce sensory acquisition, while expressions of fear, such as eye widening, enhance it (Susskind et al., 2008). Moreover, the tight relationship between action and emotion means that we can predict people’s emotions by observing their actions. Body postures (Aviezer et al., 2012), facial expressions (Ekman & Friesen, 1971), and even more subtle motor activity around the eyes (Baron-Cohen et al., 2001) provide information that can help an observer identify what another individual is likely feeling. The systematic relationship between external emotional expressions and internal emotional states provides a means of connecting the two, and thus can serve as the basis for the development of emotional concepts.

This connection is made easier by the correspondence between perception and action. Observing others displaying emotions can lead to emotional contagion (Hatfield et al., 1993) and spontaneous facial mimicry (Dimberg, 1982). There are also neurons in the parietal cortex that fire both when observing an action and when performing it (Rizzolatti & Craighero, 2004). Empathizing with another person’s pain activates neural circuits involved in the first-person experience of pain (Cheng et al., 2010). Note that for the grounding problem, it does not matter whether these connections exploit predispositions or are entirely learned (Heyes, 2011). The point is that these mechanisms provide a means of bridging external and internal experiences.

But to have reliable concepts that meaningfully organize our thinking, it helps to have language. As we have discussed, emotion concepts include concrete sensorimotor features associated with internal and external bodily states, and abstract relational features that connect these states to the source of the emotional response. No two experiences of a particular emotion are identical. Rather, the broad range of experiences associated with an emotion share a family resemblance, and the binding of these shared semantic features can be strengthened by their association with words and language (Pulvermüller, 2018).

It should be noted that word forms are typically arbitrarily related to their referents; they are abstract in this regard. Yet they still have embodied features: they are spoken, heard, written, and read. Words and linguistic symbols play an important role in conceptualization. Manipulating access to emotion words through priming or semantic association can facilitate or impair recognition of so-called “basic” emotional–facial expressions (Lindquist et al., 2006). Through language use, words can also help develop more sophisticated concepts such as beauty and immorality. However, even sophisticated, affect-laden abstract concepts can be grounded in embodied experiences. IMMORALITY is associated with, and can be manipulated by, feelings of anger and disgust; BEAUTY involves interoceptive feelings associated with contemplation and wonderment, and is linked to the motivation to approach the object we find beautiful (Fingerhut & Prinz, 2018; Freedberg & Gallese, 2007).

In sum, we have suggested that emotions are closely associated with action and perception, most notably in the context of action tendencies involving approach and avoidance, emotional expressions, and interoception. Although the stimuli that elicit any given emotion are highly variable, the internal and external responses they elicit are less so. For a given emotion, different experiences share a family resemblance and their co-occurrence with particular word forms provides a basis for aggregating across their shared semantic features (see Pulvermüller, 2018). Together, these elements provide a means for grounding emotion concepts in embodied experiences. Tethered to language, emotion concepts can not only get off the ground, but can be used to construct even more sophisticated concepts. Below, we describe empirical research that has been used to support the hypothesis that emotion concepts are grounded in embodied states, as well as alternative interpretations of these data.

Empirical Support for Embodied Emotion Concepts

As we have discussed, embodied theories suggest that neural resources involved in action, perception, and experience provide semantic information and can be recruited during conceptual processing (Barsalou, 2008; Niedenthal, 2007; Niedenthal et al., 2005; Winkielman et al., 2018). During conceptual processing, these somatosensory and motor resources can be used to construct partial simulations or ‘as if’ loops (Adolphs, 2002, 2006). While peripheral activity (e.g., facial expressions) can also be recruited and influence conceptual processing, it is often not necessary. Instead, it is the somatosensory and motor systems in the brain that are critical (Damasio, 1999). Also, the sensorimotor neural resources that are recruited during conceptual processing do not need to exactly match those of actual emotional experiences—they can be partial and need not be consciously engaged (Winkielman et al., 2018).

The simplest way to test whether emotion concepts are embodied is to present single words and to measure the physiological responses they elicit. A commonly used physiological measure of emotional response is facial electromyography (EMG). By placing electrodes on different muscle sites of the face, one can evaluate the expressions participants make, even when those expressions are quite subtle. EMG studies that present words and pictures have found that participants smile to positive stimuli and frown to negative ones, though the effect is weaker for words than for pictures (Larsen et al., 2003). In proper task conditions, concrete verbs associated with emotional expressions (e.g., “smile” or “frown”) elicit robust EMG responses (smiles and frowns, respectively), while abstract adjectives (e.g., “funny”) elicit weaker, affect-congruent responses (Foroni & Semin, 2009). Taboo words and reprimands presented in first and second languages elicit affective facial responses (Baumeister et al., 2017; Foroni, 2015) and increased skin conductance relative to control words (Harris et al., 2003). These effects are greater in the native language, where the affective element of the concepts is arguably more strongly represented. Additionally, multiple neuroimaging studies have found that affectively charged words can activate brain regions associated with the experience of affect and emotion (Citron, 2012; Kensinger & Schacter, 2006).

These studies show a connection between emotion concepts and their associated embodied responses. However, there are multiple reasons that such responses could occur. Consistent with the embodied perspective, it is possible that embodied responses are partially constitutive of emotion concepts, viz. that they play some representational role. From a strong embodiment perspective, this would be because the conceptual and sensorimotor systems are one and the same (Binder & Desai, 2011). From a weak embodiment position, conceptual representations are embodied at different levels of abstraction and the extent to which a concept activates sensorimotor systems at any given time depends upon conceptual familiarity, contextual support, and the current demand for sensorimotor information (Binder & Desai, 2011). Alternatively, embodied activity might be functionally relevant for conceptual processing, but distinct from conceptual representations. For instance, the physiological activity might be the result of elaboration after the concept has been retrieved. Finally, the physiological responses might be completely epiphenomenal, reliably accompanying conceptual activity but playing no functional role. Amodally represented concepts might, as a side effect, trigger affective reactions or spread activation to physiological circuits (Mahon & Caramazza, 2008). It is also possible that some of the embodied activity is representational, some is elaborative, and some is epiphenomenal. Correlational studies cannot adjudicate between these different possibilities (Winkielman et al., 2018).

More compelling evidence in favor of the hypothesis that emotion concepts draw on neural resources involved in action and perception comes from research on individuals with impaired motor function. Individuals with motor neuron disease and Parkinson’s disease have motor deficits, and these deficits are associated with impaired action-word processing (Bak & Chandran, 2012; García & Ibáñez, 2014). Autistic individuals whose motor deficits impair their emotional expression also show abnormal processing of emotion-related words, and the extent of their language processing deficit is predicted by the extent of their motor problems (Moseley & Pulvermüller, 2018).

Complementing the correlational research above are studies that involve experimental manipulation of motor activity in neurotypical subjects in order to measure its impact on conceptual processing. Different emotional–facial expressions involve different patterns of facial activity (Ekman & Friesen, 1971). The Zygomaticus major is involved in pulling the corners of the lips back into a smile. Having participants bite on a pen that is held horizontally between their teeth without moving their lips generates tonic Zygomaticus activity (as measured by facial EMG) and prevents smiling (Davis et al., 2015, 2017; Oberman et al., 2007). Generating tonic muscle activity injects noise into the system while preventing movement mimicry at the periphery. Impairing smiling mimicry slows the detection and recognition of expressions changing between happiness and sadness (Niedenthal et al., 2001). Disrupting the motor system this way also impairs the recognition and categorization of subtle expressions of happiness but not subtle expressions that rely heavily on the motor activity at the brow, such as anger and sadness (Oberman et al., 2007).

These sorts of interference studies reveal a systematic relationship between the targeted muscles and the emotional expressions those muscles mediate: interfering with smiling muscles impairs the recognition of smiles but not frowns. For example, in a study that manipulated tonic motor activity either at the brow or at the mouth, interfering with activity at the brow impaired recognition of expressions that rely heavily on activity on the upper half of the face, such as anger, while interfering with activity at the mouth impaired recognition of expressions such as happiness that rely more on the lower half of the face (Ponari et al., 2012). The claim that different halves of the face provide more diagnostic information about emotional expressions has been validated both by facial EMG (Oberman et al., 2007) and a recognition task that involved composite images that were half emotionally expressive and half neutral (Ponari et al., 2012).

Interfering with the production of facial expressions can also impair language processing in an affectively consistent manner. In an emotion classification task in which participants quickly sorted words into piles associated with different emotions, interfering with motor activity on the lower half of the face slowed the categorization of words associated with HAPPINESS and DISGUST relative to a control condition, but not of words associated with ANGER or of neutral words (Niedenthal et al., 2009). Expressions of happiness and disgust both rely heavily on lower-face muscles, for smiling and nose wrinkling, respectively, while expressions of anger do not. Motor activity has also been manipulated through subcutaneous injections of Botox (a neurotoxin that induces temporary muscular denervation). Botox injections at the Corrugator supercilii, a brow muscle active during frowning and expressions of anger, slowed comprehension of sentences about sad and angry situations but not happy ones (Havas et al., 2010).

These data are compelling both because they use experimental methods and because the observed impairments are selective to specific emotions. The selectivity of the findings rules out the possibility that the manipulations are simply awkward and impair conceptual processing in general. The findings also cannot be explained by epiphenomenal accounts proposing that embodied activity is merely a downstream consequence of conceptual processing, because disrupting downstream consequences should not impair antecedent processes. However, it remains possible that these manipulations impaired cognitive processes that were not semantic in nature but were instead involved in decision-making or elaboration.

These alternative explanations are difficult to rule out with behavioral measures, because categorical behavioral responses involve semantic processes as well as processes related to decision-making. Distinguishing these two sets of processes requires a measure with high temporal resolution, such as event-related brain potentials (ERPs), in conjunction with a paradigm that can separate different stages of processing. Different ERP components are associated with different cognitive processes. The N400 is a negative-going deflection that peaks around 400 ms after stimulus onset and is associated with semantic retrieval. Although stimulus modality (e.g., language versus pictures) influences the scalp topography of the component, a larger (more negative) N400 occurs in response to stimuli that impose greater semantic retrieval demands. Additionally, the N400 dissociates from other cognitive processes, such as those involved in elaboration and decision-making (Kutas & Federmeier, 2011).

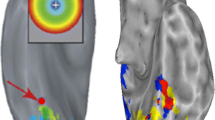

To evaluate whether interfering with embodied resources influenced semantic retrieval, we conducted an N400 ERP study in which we interfered with the smiling muscle using the aforementioned “pen” manipulation (in fact, we used a wooden chopstick) as participants categorized emotional–facial expressions along a dimension of valence (i.e., expressing a very good to very bad feeling). In the control condition, participants loosely held the chopstick horizontally between their lips (see Fig. 2.1 for a depiction of both the interference and the control conditions in these studies). EMG was measured at the cheek and brow as a manipulation check. In the control condition, participants mimicked the expressions. In the interference condition, there were no signs of happiness mimicry, just tonic noise at the cheek (and not the brow). Relative to the control condition, interfering with smiling increased the N400 when participants categorized expressions of low-intensity happiness, but not for expressions of anger (Davis et al., 2017). This suggests that embodied motor resources play a causal role in semantic processes involved in emotion recognition. Moreover, although the interference manipulation affected a neural indicator of semantic retrieval, it did not influence participants’ ratings of emotional valence. So, while these data indicate embodied responses to emotional stimuli facilitate associated semantic retrieval processes, they also suggest that these effects are extremely subtle.

Fig. 2.1 Facial action manipulation used in Davis et al. (2015, 2017). In the interference condition, the chopstick is placed between the teeth and the lips with the mouth closed. In the control condition, it is placed at the front of the lips, not between the teeth. The interference condition involves biting lightly on the chopstick to hold it between the teeth and lips, which generates tonic noise on the lower half of the face (measured at the Zygomaticus major) relative to the control condition, as observed in baseline EMG activity (left; based on data from the manipulation check in Davis et al., 2015; whiskers represent 95% CIs). In addition, the manipulation interferes with smiling mimicry because the chopstick is held toward the back corners of the lips, which makes it difficult to lift the corners of the lips into a smile. The control condition induces significantly less baseline Zygomaticus noise (left), and the location of the chopstick makes it relatively easy to pull the corners of the lips up into a smile (right).

We conducted another experiment, similar to the one just described, in which we presented participants with sentences about positive and negative events rather than facial expressions. The sentences were constructed in positive and negative pairs, such that their valence depended on an affectively charged word that appeared third-to-last in the sentence (e.g., “She reached into the pocket of her coat from last winter and found some (cash/bugs) inside it”). This allowed us to evaluate whether any embodiment effects occurred during lexical retrieval (e.g., of cash or bugs) and/or at a higher level of conceptual processing, during the construction of a situation model at the end of the sentence (Zwaan, 2009). We found an N400 difference as a function of the smiling interference condition for positive but not negative sentences. The N400 difference did not occur at the lexical level (e.g., cash) but instead at the sentence-final word, suggesting that the embodied interference manipulation affected higher-order semantic processes involved in sentence processing. We found no effect of the interference manipulation on participants’ overt ratings of the sentences. Given that the embodiment manipulation influenced neural markers of comprehension at the level of the situation model, but not at the lexical or behavioral level, these data are most consistent with weak embodiment. If the conceptual and sensorimotor systems were one and the same (strong embodiment), one would expect N400 effects at the lexical level at the very least, and plausibly at the behavioral level. Instead, the effects were subtler.

Another indication that embodiment effects can be nuanced and subtle comes from a repetitive transcranial magnetic stimulation (rTMS) emotion detection experiment in which rTMS was applied over right primary motor cortex (M1), right primary somatosensory cortex (S1), or the vertex in the control condition (Korb et al., 2015). Participants viewed videos of facial expressions changing either from neutral to happy or from angry to happy. Their task was to identify when the expression changed. Although the rTMS manipulation had no effects in the males tested, among females, rTMS over M1 and S1 delayed both mimicry and the detection of smiles. These findings suggest a causal connection between activity in the motor and somatosensory cortex and the recognition of happiness, but only among a subset of the participants.

Taken as a whole, these studies support the hypothesis that neural resources involved in action and perception play a functional role in semantic processing of emotion concepts. Processing emotional words and faces can provoke embodied responses in an emotion-specific manner. Persons with motor processing abnormalities show deficits in understanding language about action and emotion. Moreover, interfering with people’s embodied responses to emotional stimuli impacts semantic retrieval in an emotion-specific manner. However, these studies also show that embodiment effects are often subtle and idiosyncratic. We suggest that this is because embodied physiological responses have a diverse array of causes, including accessing conceptual representations, elaborative inferences, and emotional reactions, whose relevance for cognition varies greatly across tasks. In the next section, we focus on the context-dependent nature of embodiment in conceptual processing.

The Context-Dependent Nature of Embodied Emotion Concepts

In our CODES model, we suggest that embodied resources are used to ground the construction of simulations. Importantly, the embodied resources involved in any given simulation are dependent on the context-specific cognitive needs of the individual. Embodied information is most useful in situations that require relatively deep semantic processing and inferential elaboration. For emotion concepts, this is most common in situations that involve attempting to understand or predict the behaviors of others or oneself. This is similar to hypotheses that embodied simulations can be used to create as-needed predictions of interoceptive states (Barrett & Simmons, 2015) and anticipation of emotional consequences (Baumeister et al., 2007). What sets our model apart is its emphasis on the flexible nature of the recruitment of embodied resources during these simulations. For example, when the goal is to cultivate a deep empathic understanding of a loved one’s feelings, sensorimotor recruitment may be quite extensive. In other situations, the recruitment might be quite minimal, akin to sensorimotor satisficing.

One example of how task demands influence embodied recruitment comes from research on the shallow versus deep processing of emotion words (Niedenthal et al., 2009). In these studies, participants viewed words that referred to emotional states (e.g., “foul” or “joyful”), concepts associated with emotional states (e.g., “slug” or “sun”), and neutral control words (e.g., “table” or “cube”). In the shallow processing task, participants judged a superficial feature of the words, namely whether each word appeared in upper or lower case. In the deep processing task, participants judged whether or not the words were associated with an emotion. In both tasks, facial EMG was recorded from muscle sites associated with the expression of positive or negative emotions. Consistent with the cognitive-demand aspect of the CODES model, participants displayed affectively congruent emotional expressions when processing the words for meaning, but not when deciding whether they were printed in upper or lower case. These results argue against the suggestion that embodied responses to words reflect automatic affective reactions to stimuli: if embodied responses were reflexive, they should have been evident in the shallow processing task as well as in the deep one.

However, it could be argued that the shallow task was so shallow that participants did not even read the words. To address this concern, Niedenthal et al. (2009) conducted an additional experiment in which participants were presented with emotion words (e.g., “frustration”) and told to list properties of those words while facial EMG was recorded. Critically, participants were asked to produce properties either for an audience interested in “hot” features of the concepts (such as a good friend who could be told anything) or for one interested in “cold” features (such as a supervisor with whom they had a formal relationship). Both conditions involved deep conceptual processing, and both led to the production of normatively appropriate emotion features. However, the “hot” condition led to greater activation of valence-consistent motor responses. Because simulating an emotional experience is more relevant for generating “hot” emotional features than experientially detached “cold” ones, these data support the context-dependent aspect of the CODES model and suggest that there are multiple routes to representation during conceptual processing.

Another example of emotion cognition without “hot” embodied content is emotion recognition in patients with Möbius syndrome, a congenital form of facial paralysis. Although these patients cannot produce (or mimic) emotional facial expressions, they can still recognize them on par with neurotypical controls (Rives Bogart & Matsumoto, 2010). Such findings undermine strong embodiment views, which predict that emotion concepts should be deficient in people who lack the relevant sensorimotor experience and production capacities. From the perspective of the CODES model, we suggest that while these patients lack experience with mimicry, they do have extensive experience decoding emotional expressions via visual resources. As such, their emotion concepts may be quite different from those of individuals with a lifetime of facial mimicry. Moreover, the data suggest that when asked to draw fine-grained distinctions among emotional expressions, some patients with Möbius syndrome do perform worse than controls (Calder et al., 2000).

More generally, recent research has revealed a potential role for individual differences in the representation and operation of emotion concepts. Above, we reviewed evidence suggesting that brain regions underlying action and perception help to ground emotion concepts, and that peripheral motor activity, in turn, strengthens their activation. As such, mimicry—especially spontaneous mimicry—can reflect weaker or stronger accessibility of emotion concepts. There is now research that reveals individual differences in mimicry elicitation and in its perceived social efficacy (for a review, see Arnold, Winkielman, & Dobkins, 2019).

One example is work on mimicry and loneliness (perceived social isolation), a state associated with negative affect and physiological degradation (Cacioppo & Hawkley, 2009). If physiological and motor (i.e., action) activity is more weakly coupled with emotion concepts in some people than in others, those individuals may suffer, particularly in the social world. Accordingly, we have found that loneliness is associated with impaired spontaneous smile mimicry while viewing video clips of emotional expressions (Arnold & Winkielman, 2020). By contrast, loneliness was unrelated to overt positivity ratings of the smile videos. This reveals a dissociation between perceptual (external) and physiological/behavioral (internal) aspects of the smile, a point to which we return later.

Critically, the influence of loneliness was specific to spontaneous (not deliberate) mimicry of positive (not negative) emotions; depression and extraversion were not associated with any mimicry differences (Arnold & Winkielman, 2020). Further, spontaneous smiling to positively valenced images (e.g., cute puppies) was not affected by loneliness. This suggests that the representation of positive emotion (joy) remains intact in lonely individuals, but that it is perhaps not recruited as readily in social contexts.

Smile mimicry is intrinsically rewarding and facilitates social rapport (Hess & Fischer, 2013), so lonely individuals may be hindered in achieving social connection, the very resource they require for health. Do lonely individuals ground positive emotion concepts such as joy differently in social versus nonsocial domains, and might this representational shift underlie other aspects of loneliness? Social psychologists have suggested that loneliness results in part from an early attentional shift, an implicit hypervigilance for social threat, whereby socially negative stimuli are processed more readily than socially positive ones (Cacioppo & Hawkley, 2009). Thus, it is possible that the implicit potentiation of negative relative to positive emotion concepts within one’s perceived social world contributes to the maintenance of loneliness and the suffering that accompanies it.

Another important domain of individual-difference research concerns interoception, the sense of the physiological condition of the body (Craig, 2008). Interoception is the process of sensing, representing, and regulating internal physiological states in the service of homeostasis. Seminal theories of emotion suggest that bodily responses to ongoing events both contribute to emotional experience and influence behavior (Damasio, 1999; James, 1884), with interoception mediating this translation (for a review, see Critchley & Garfinkel, 2017). Interoception is thus important for numerous subjective feelings, including hunger, fatigue, temperature, pain, arousal, and sensual touch. Measures of interoceptive processing reflect distinct dimensions, including objective interoceptive accuracy, subjective interoceptive sensibility, and metacognitive interoceptive awareness (Garfinkel et al., 2015). The operationalization of these dimensions, together with growing research on their influence on perception and behavior in neurotypical and atypical individuals, reveals the pervasive (if subtle) influence of interoception on experience.

Higher interoceptive accuracy is associated with feeling emotions more strongly (Barrett et al., 2004). By contrast, lower interoceptive accuracy has been linked to difficulty in understanding one’s own emotions (i.e., alexithymia; Brewer et al., 2016) and to deficits in emotion regulation (Kever et al., 2015). Because variations in dimensions of interoception are associated with a myriad of psychological disorders (Khalsa et al., 2018), fluctuations in interoceptive processing may drive (mal)adaptive behavior stemming from a (mis)match between expected and actual feeling states. Recent accounts of interoceptive predictive coding elaborate this idea and highlight its consequences for mental health (Barrett et al., 2016; Seth et al., 2012).

Dysregulated interoception was recently implicated in suboptimal social interaction and loneliness (Arnold et al., 2019; Quadt et al., 2020). One component of these accounts is that interoception confers higher emotional fidelity in a way that has consequences for social interaction. If one can accurately sense and describe their own feelings, they may be able to better represent another’s feelings by using common neural resources in better-defined “as-if” loops (Damasio, 1999), allowing for greater empathy and social connection. Likewise, dysregulated interoception might affect the representation and accessibility of emotion concepts in loneliness as well as more generally.

A recent large-scale norming study suggested that, in addition to the traditional five senses, interoception makes a unique contribution to conceptual grounding (Connell et al., 2018). Participants associated “sensations in the body” with concepts to a degree comparable to the five traditional sensory modalities, and interoception emerged as a relatively distinct modality of conceptual association. Interoceptive grounding contributed more strongly to perceptual strength for abstract than for concrete concepts and was particularly relevant for emotion concepts. Interoceptive strength was also found to facilitate word recognition over and above the other five sensory modalities. Although the link between interoception and conceptual grounding requires more research, extant evidence suggests that interoception may confer a critical “feeling” component on concepts that are important for well-being and social interaction.

In this section, we have reviewed experimental data that reveal considerable variability in the extent of sensorimotor recruitment for emotion concepts. Bodily responses, such as facial mimicry, are not necessarily elicited reflexively; rather, they occur more readily during semantic processing of emotional language, especially when people consider “hot” features of these concepts. Because emotion concepts have many dimensions, sensorimotor recruitment is not strictly necessary to understand them. However, individual differences in facial mimicry of smiles are associated with the capacity for positive social engagement. Individual differences in interoceptive ability are associated with emotional experience and with the ability to reason about one’s own emotions as well as those of others. Finally, we have pointed to a possible link between interoceptive sensations and the grounding of emotion concepts.

The Role of Context

Contemporary accounts of semantic memory provide for some degree of contextual variability for concepts. This assumption is based on a wide range of findings from cognitive neuroscience, cognitive psychology, psycholinguistics, computational linguistics, and semantics (Barsalou, 2008; Barsalou & Medin, 1986; Coulson, 2006; Lebois et al., 2015; Pecher & Zwaan, 2017; Tabossi & Johnson-Laird, 1980; Yee & Thompson-Schill, 2016). Accordingly, embodied simulations highlight different aspects of experience in a context-dependent manner. In a feature-listing task, participants list features such as green and striped for WATERMELON, but red and with seeds for HALF-WATERMELON (Wu & Barsalou, 2009). Presumably, “watermelon” invites a perceptual simulation of the external features of a watermelon, while “half-watermelon” invites a perceptual simulation of its internal features.

Emotional states, too, are multifaceted and, like watermelons, their relevant features are subject to contextual variability. Emotions have internal features, such as the motivational urges they elicit and the way they feel in the moment, as well as external features, such as the actions they elicit and the way they are expressed in the face and the body. When the goal is to take the perspective of an angry person and understand how they are feeling, the internal features may be at the forefront of a simulation. However, if the goal is to anticipate the behaviors of that angry individual, external features might be highlighted. In this section, we describe behavioral and neuroimaging data that reveal how internal and external focus can influence embodied simulations.

In a behavioral study that used a switch-cost paradigm, participants read a series of sentences describing emotional and non-emotional mental states (Oosterwijk et al., 2012). Sentences varied in whether they focused on internal characteristics (e.g., “She was sick with disgust.”) or external ones (e.g., “Her nose wrinkled with disgust.”) and, critically, in whether they were preceded by a sentence with the same or a different focus. We found that sentences were read faster when they followed a sentence with the same focus than when they followed one with a different focus (Oosterwijk et al., 2012). These data suggest that switching from an “internal” to an “external” focus induces a processing cost, just as switching between visual and auditory features does (Collins et al., 2011).
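In a design like this, the switch cost is the difference between mean reading times on switch trials (the sentence’s focus differs from that of the preceding sentence) and repeat trials (same focus). The Python sketch below illustrates only that arithmetic. The trial list and reading times are hypothetical values invented for the example, not data from Oosterwijk et al. (2012), and a real analysis would typically also handle errors, outliers, and participant-level averaging.

```python
from statistics import mean

# Hypothetical trials: (focus of previous sentence, focus of current sentence,
# reading time of current sentence in ms). Illustrative values only.
trials = [
    ("internal", "internal", 1510),
    ("external", "external", 1490),
    ("internal", "external", 1620),
    ("external", "internal", 1600),
    ("internal", "internal", 1530),
    ("external", "internal", 1580),
]


def switch_cost(data):
    """Mean reading time on switch trials minus mean reading time on repeat trials."""
    repeat = [rt for prev, cur, rt in data if prev == cur]
    switch = [rt for prev, cur, rt in data if prev != cur]
    return mean(switch) - mean(repeat)


print(f"Switch cost: {switch_cost(trials):.1f} ms")
```

A positive value means that changing focus slowed reading, which is the pattern the study reports.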

A follow-up fMRI study revealed that even when controlling for particular emotions, reading sentences about internally focused emotional states activated different brain regions than sentences with an external focus (Oosterwijk et al., 2015). More specifically, sentences with an “internal” focus activated the ventromedial prefrontal cortex, a brain region associated with the generation of experiential states, while those with an “external” focus activated a region of the inferior frontal gyrus related to action representation. Consistent with the CODES model, emotion concepts recruit different embodied resources in contexts that highlight internal versus external features.

Further evidence that context influences embodied representations of emotion concepts comes from an fMRI study that manipulated both the aspect of emotion in focus and whether a given emotion pertained to the self or to someone else (Oosterwijk et al., 2017). In this study, participants were asked to read sentences that described different aspects of emotion. The sentences described either actions (e.g., pushing someone away), situations (e.g., being alone in a park), or internal sensations (e.g., increased heart rate). In one task, the participants were asked to imagine themselves experiencing these different aspects of emotions. In keeping with previous research, processing these sentences activated networks of brain regions related to action planning, mentalizing, and somatosensory processing, respectively (Oosterwijk et al., 2017).

In a second task, participants were presented with emotion pictures and asked to focus on the person’s actions (i.e., “HOW” the target person in the picture was expressing their emotion), the situation (i.e., “WHY” the target was feeling the emotion they were expressing), or internal sensations (i.e., “WHAT” the target person was feeling in their body). Interestingly, multi-voxel pattern analysis was able to accurately classify the participants’ task (HOW, WHY, or WHAT) in the picture study based on the patterns of brain activity in the sentence-reading task (Oosterwijk et al., 2017). Conceptualizing emotion in terms of actions, situations, and internal sensations thus involves different neural circuits. However, for any given aspect of an emotion concept (e.g., what the target person was feeling in their body), the neural resources recruited were quite similar, regardless of whether the prompt was a sentence or a picture and regardless of whether the task involved drawing inferences about oneself or others.
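The cross-task logic of this analysis can be sketched in a few lines: a classifier is fit to activity patterns from one task and then tested on patterns from another, and above-chance accuracy suggests that the two tasks share a representational format. The sketch below is a toy built on assumptions, not the study’s pipeline. The four-“voxel” patterns are invented, and a simple nearest-centroid classifier stands in for whatever classifier, feature selection, and cross-validation scheme the authors actually used.

```python
import math
from statistics import mean

# Hypothetical activity patterns over four "voxels" (real studies use thousands).
# Training data: sentence-reading task, one list of trial patterns per condition.
sentence_task = {
    "HOW": [[1.0, 0.2, 0.1, 0.0], [0.9, 0.3, 0.0, 0.1]],
    "WHY": [[0.1, 1.0, 0.2, 0.0], [0.2, 0.8, 0.1, 0.1]],
    "WHAT": [[0.0, 0.1, 1.0, 0.3], [0.1, 0.2, 0.9, 0.2]],
}
# Test data: picture task, as (true condition, pattern) pairs.
picture_task = [
    ("HOW", [0.8, 0.1, 0.2, 0.1]),
    ("WHY", [0.3, 0.9, 0.0, 0.2]),
    ("WHAT", [0.2, 0.0, 0.8, 0.4]),
]


def train(training_data):
    """Nearest-centroid 'training': average each condition's patterns voxel by voxel."""
    return {label: [mean(v) for v in zip(*patterns)]
            for label, patterns in training_data.items()}


def predict(centroids, pattern):
    """Assign the condition whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], pattern))


def cross_task_accuracy(training_data, test_data):
    """Train on one task, test on another; return the proportion classified correctly."""
    centroids = train(training_data)
    hits = [predict(centroids, pattern) == label for label, pattern in test_data]
    return sum(hits) / len(hits)


print(f"Cross-task accuracy: {cross_task_accuracy(sentence_task, picture_task):.2f} (chance = 0.33)")
```

With three conditions, chance accuracy is 1/3, so cross-task decoding is judged against that baseline; real MVPA studies also test significance, for example with permutation tests.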

Conclusion

In sum, research to date suggests that sensorimotor resources are involved in the processing of emotion concepts. However, these findings also underline the context-specific nature of embodied simulations. Individual differences in embodied experiences, differences in task demands, and varying cognitive goals can all influence the extent to which embodied representations either are or are not recruited in a particular situation for a particular individual. This conclusion argues against simplistic models of conceptual embodiment in which the representations are inflexible packages of somatic and motor reactions. It also argues against strong models of embodied emotion that claim that peripheral motor simulation is a necessary component of emotion concepts. Instead, the data suggest that there are multiple ways in which different embodied resources are recruited for conceptual processing.

Importantly, our embrace of the embodiment perspective is compatible with an important role for abstraction in any satisfactory account of emotion concepts. After all, the emergence of concepts like SCHADENFREUDE, APPRECIATION, or even LOVE requires fairly advanced cognitive capacities that may require semantic associations built from linguistic experience. Returning to Mary the color-blind scientist, research comparing color concepts in sighted and congenitally blind participants suggests that semantic associates of color terms lead to highly similar color concepts in these two groups (Saysani et al., 2018). These investigators asked participants to rate the similarity of pairs of color terms and used multidimensional scaling to produce perceptual maps. Remarkably, only minor differences were found between the color maps of sighted and blind participants (Saysani et al., 2018). Clearly, the concept of RED differs somewhat between sighted and congenitally blind participants. But what exactly is missing, and how important the missing part is, again depends on context and on the facet of meaning at issue. In some contexts, understanding LOVE or PAIN seems impossible without the ability to experience them, but in other contexts it does not.
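For readers unfamiliar with multidimensional scaling, the sketch below implements classical (Torgerson) MDS in plain Python: squared dissimilarities are double-centered, and the leading eigenvectors, found here by power iteration with deflation, give the map coordinates. It illustrates the general technique under stated assumptions and is not a reconstruction of Saysani et al.’s analysis, which may have used a different MDS variant. The six color terms and their positions are hypothetical, and similarity ratings like those collected in the study would first need to be converted to dissimilarities (for example, by subtracting each rating from the scale maximum).

```python
import math


def double_center(d):
    """Classical MDS step: B = -1/2 * J D^2 J, with J the centering matrix."""
    n = len(d)
    sq = [[d[i][j] ** 2 for j in range(n)] for i in range(n)]
    row_means = [sum(row) / n for row in sq]  # D is symmetric, so these equal column means
    grand_mean = sum(row_means) / n
    return [[-0.5 * (sq[i][j] - row_means[i] - row_means[j] + grand_mean)
             for j in range(n)] for i in range(n)]


def mat_vec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def top_eigenpair(m, iterations=1000):
    """Dominant eigenvalue and unit eigenvector of a symmetric matrix (power iteration)."""
    v = [math.sin(i + 1.0) for i in range(len(m))]  # arbitrary non-constant start
    for _ in range(iterations):
        w = mat_vec(m, v)
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # nothing left to amplify (e.g., matrix fully deflated)
            return 0.0, v
        v = [x / norm for x in w]
    eigenvalue = sum(a * b for a, b in zip(v, mat_vec(m, v)))  # Rayleigh quotient
    return eigenvalue, v


def classical_mds(d, dims=2):
    """Return one coordinate tuple per item from a symmetric dissimilarity matrix."""
    b = double_center(d)
    n = len(b)
    columns = []
    for _ in range(dims):
        eigenvalue, v = top_eigenpair(b)
        columns.append([math.sqrt(max(eigenvalue, 0.0)) * x for x in v])
        # Deflate so the next power iteration finds the next-largest component.
        b = [[b[i][j] - eigenvalue * v[i] * v[j] for j in range(n)] for i in range(n)]
    return list(zip(*columns))


# Hypothetical positions of six color terms on an elongated color circle; their
# pairwise distances stand in for averaged dissimilarity ratings.
terms = ["red", "orange", "yellow", "green", "blue", "purple"]
points = [(1.5 * math.cos(k * math.pi / 3), math.sin(k * math.pi / 3)) for k in range(6)]
dissimilarity = [[math.dist(p, q) for q in points] for p in points]

coords = classical_mds(dissimilarity)
for term, (x, y) in zip(terms, coords):
    print(f"{term:>7}: ({x:+.2f}, {y:+.2f})")
```

Because these hypothetical dissimilarities are exact two-dimensional distances, the recovered map reproduces them up to rotation and reflection. With real ratings the fit is approximate, and this simple power-iteration approach assumes the leading eigenvalues are positive and reasonably distinct.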

This is what makes emotion concepts fascinating—they require a hybrid approach, which integrates sensorimotor, linguistic, and social inputs (Borghi, 2020). As such, there is much to be learned about this topic. But, for now, it is clear that the embodiment perspective provides a valuable window into the intricate mechanisms of the human mind.

References

Adolphs, R. (2002). Neural systems for recognizing emotion. Current Opinion in Neurobiology, 12, 169–177.

Adolphs, R. (2006). How do we know the minds of others? Domain-specificity, simulation, and enactive social cognition. Brain Research, 1079(1), 25–35.

Arnold, A. J., & Winkielman, P. (2020). Smile (but only deliberately) though your heart is aching: Loneliness is associated with impaired spontaneous smile mimicry. Social Neuroscience, 10, 1–13.

Arnold, A. J., Winkielman, P., & Dobkins, K. (2019). Interoception and social connection. Frontiers in Psychology, 10, 2589. https://doi.org/10.3389/fpsyg.2019.02589

Aviezer, H., Trope, Y., & Todorov, A. (2012). Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science, 338, 1225–1229.

Bak, T. H., & Chandran, S. (2012). What wires together dies together: Verbs, actions and neurodegeneration in motor neuron disease. Cortex, 48, 936–944.

Baron-Cohen, S., Wheelwright, S., Hill, J., Raste, Y., & Plumb, I. (2001). The “Reading the Mind in the Eyes” Test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. The Journal of Child Psychology and Psychiatry and Allied Disciplines, 42, 241–251.

Barrett, L. F. (2019). Search of emotions. Current Biology, 29, R140–R142.

Barrett, L. F., Quigley, K. S., Bliss-Moreau, E., & Aronson, K. R. (2004). Interoceptive sensitivity and self-reports of emotional experience. Journal of Personality and Social Psychology, 87, 684–697.

Barrett, L. F., Quigley, K. S., & Hamilton, P. (2016). An active inference theory of allostasis and interoception in depression. Philosophical Transactions of the Royal Society B: Biological Sciences, 371, 20160011. https://doi.org/10.1098/rstb.2016.0011

Barrett, L. F., & Simmons, W. K. (2015). Interoceptive predictions in the brain. Nature Reviews Neuroscience, 16, 419–429.

Barsalou, L. W. (1999). Perceptual symbol systems. Behavioral and Brain Sciences, 22, 577–660.

Barsalou, L. W. (2008). Grounded cognition. Annual Review of Psychology, 59, 617–645.

Barsalou, L. W., & Medin, D. L. (1986). Concepts: Static definitions or context-dependent representations? Cahiers de Psychologie Cognitive, 6, 187–202.

Baumeister, J. C., Foroni, F., Conrad, M., Rumiati, R. I., & Winkielman, P. (2017). Embodiment and emotional memory in first vs. second language. Frontiers in Psychology, 8, 394.

Baumeister, R. F., Vohs, K. D., DeWall, C. N., & Zhang, L. (2007). How emotion shapes behavior: Feedback, anticipation, and reflection, rather than direct causation. Personality and Social Psychology Review, 11, 167–203.

Binder, J. R., & Desai, R. H. (2011). The neurobiology of semantic memory. Trends in Cognitive Sciences, 15, 527–536.

Borghi, A. M. (2020). A future of words: Language and the challenge of abstract concepts. Journal of Cognition, 3, 42.

Borghi, A. M., Binkofski, F., Castelfranchi, C., Cimatti, F., Scorolli, C., & Tummolini, L. (2017). The challenge of abstract concepts. Psychological Bulletin, 143, 263–292.

Brewer, R., Cook, R., & Bird, G. (2016). Alexithymia: A general deficit of interoception. Royal Society Open Science, 3, 150664. https://doi.org/10.1098/rsos.150664

Brookshire, G., & Casasanto, D. (2012). Motivation and motor control: Hemispheric specialization for approach motivation reverses with handedness. PLoS One, 7, e36036.

Brookshire, G., & Casasanto, D. (2018). Approach motivation in human cerebral cortex. Philosophical Transactions of the Royal Society B: Biological Sciences, 373, 20170141.

Cacioppo, J. T., & Hawkley, L. C. (2009). Perceived social isolation and cognition. Trends in Cognitive Sciences, 13, 447–454.

Calder, A. J., Keane, J., Cole, J., Campbell, R., & Young, A. W. (2000). Facial expression recognition by people with Möbius syndrome. Cognitive Neuropsychology, 17, 73–87.

Cheng, Y., Chen, C., Lin, C. P., Chou, K. H., & Decety, J. (2010). Love hurts: An fMRI study. Neuroimage, 51, 923–929.

Citron, F. M. M. (2012). Neural correlates of written emotion word processing: A review of recent electrophysiological and hemodynamic neuroimaging studies. Brain & Language, 122, 211–226.

Collins, A. M., & Loftus, E. F. (1975). A spreading-activation theory of semantic processing. Psychological Review, 82, 407–428. https://doi.org/10.1037/0033-295X.82.6.407

Collins, J., Pecher, D., Zeelenberg, R., & Coulson, S. (2011). Modality switching in a property verification task: An ERP study of what happens when candles flicker after high heels click. Frontiers in Psychology, 2, 10. https://doi.org/10.3389/fpsyg.2011.00010

Connell, L., Lynott, D., & Banks, B. (2018). Interoception: The forgotten modality in perceptual grounding of abstract and concrete concepts. Philosophical Transactions of the Royal Society B: Biological Sciences, 373, 20170143. https://doi.org/10.1098/rstb.2017.0143

Coulson, S. (2006). Constructing meaning. Metaphor and Symbol, 21, 245–266.

Craig, A. D. (2008). Interoception and emotion: A neuroanatomical perspective. In M. Lewis, J. M. Haviland-Jones, & L. F. Barrett (Eds.), Handbook of emotions (3rd ed., pp. 272–288). The Guilford Press.

Critchley, H. D., & Garfinkel, S. N. (2017). Interoception and emotion. Current Opinion in Psychology, 17, 7–14.

Damasio, A. R. (1999). The feeling of what happens: Body and emotion in the making of consciousness. Houghton Mifflin Harcourt.

Davis, J. D., Winkielman, P., & Coulson, S. (2015). Facial action and emotional language: ERP evidence that blocking facial feedback selectively impairs sentence comprehension. Journal of Cognitive Neuroscience, 27, 2269–2280.

Davis, J. D., Winkielman, P., & Coulson, S. (2017). Sensorimotor simulation and emotion processing: Impairing facial action increases semantic retrieval demands. Cognitive, Affective, & Behavioral Neuroscience, 17, 652–664.

Dimberg, U. (1982). Facial reactions to facial expressions. Psychophysiology, 19, 643–647.

Dutton, D. G., & Aron, A. P. (1974). Some evidence for heightened sexual attraction under conditions of high anxiety. Journal of Personality and Social Psychology, 30, 510–517.

Ekman, P., & Friesen, W. V. (1971). Constants across cultures in the face and emotion. Journal of Personality and Social Psychology, 17, 124–129.

Fingerhut, J., & Prinz, J. J. (2018). Wonder, appreciation, and the value of art. Progress in Brain Research, 237, 107–128.

Fodor, J. A. (1975). The language of thought (Vol. 5). Harvard University Press.

Foroni, F. (2015). Do we embody second language? Evidence for ‘partial’ simulation during processing of a second language. Brain and Cognition, 99, 8–16.

Foroni, F., & Semin, G. R. (2009). Language that puts you in touch with your bodily feelings. Psychological Science, 20, 974–980.

Freedberg, D., & Gallese, V. (2007). Motion, emotion and empathy in esthetic experience. Trends in Cognitive Sciences, 11, 197–203.

Frijda, N. H. (1986). The emotions. Cambridge University Press.

García, A. M., & Ibáñez, A. (2014). Words in motion: Motor-language coupling in Parkinson’s disease. Translational Neuroscience, 5, 152–159.

Garfinkel, S. N., Seth, A. K., Barrett, A. B., Suzuki, K., & Critchley, H. D. (2015). Knowing your own heart: Distinguishing interoceptive accuracy from interoceptive awareness. Biological Psychology, 104, 65–74.

Harmon-Jones, E., Gable, P. A., & Peterson, C. K. (2010). The role of asymmetric frontal cortical activity in emotion-related phenomena: A review and update. Biological Psychology, 84, 451–462.

Harnad, S. (1990). The symbol grounding problem. Physica D: Nonlinear Phenomena, 42, 335–346.

Harris, C. L., Aycicegi, A., & Gleason, J. B. (2003). Taboo words and reprimands elicit greater autonomic reactivity in a first language than in a second language. Applied Psycholinguistics, 24, 561–579.

Harris, P. L. (2008). Children’s understanding of emotion. In M. Lewis, J. M. Haviland-Jones, & L. F. Barrett (Eds.), Handbook of emotions (pp. 320–331). The Guilford Press.

Hatfield, E., Cacioppo, J. T., & Rapson, R. L. (1993). Emotional contagion. Current Directions in Psychological Science, 2, 96–100.

Havas, D. A., Glenberg, A. M., Gutowski, K. A., Lucarelli, M. J., & Davidson, R. J. (2010). Cosmetic use of botulinum toxin-A affects processing of emotional language. Psychological Science, 21, 895–900.

Hess, U., & Fischer, A. (2013). Emotional mimicry as social regulation. Personality and Social Psychology Review, 17, 142–157.

Heyes, C. (2011). Automatic imitation. Psychological Bulletin, 137, 463–468.

James, W. (1884). What is an emotion? Mind, 9, 188–205.

Kensinger, E. A., & Schacter, D. L. (2006). Processing emotional pictures and words: Effects of valence and arousal. Cognitive, Affective, and Behavioral Neuroscience, 6, 110–126.

Kever, A., Pollatos, O., Vermeulen, N., & Grynberg, D. (2015). Interoceptive sensitivity facilitates both antecedent- and response-focused emotion regulation strategies. Personality and Individual Differences, 87, 20–23.

Khalsa, S. S., Adolphs, R., Cameron, O. G., Critchley, H. D., Davenport, P. W., Feinstein, J. S., et al. (2018). Interoception and mental health: A roadmap. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging, 3, 501–513.

Korb, S., Malsert, J., Rochas, V., Rihs, T. A., Rieger, S. W., Schwab, S., & Grandjean, D. (2015). Gender differences in the neural network of facial mimicry of smiles–An rTMS study. Cortex, 70, 101–114.

Kragel, P. A., & LaBar, K. S. (2013). Multivariate pattern classification reveals autonomic and experiential representations of discrete emotions. Emotion, 13, 681–690.

Kutas, M., & Federmeier, K. D. (2011). Thirty years and counting: Finding meaning in the N400 component of the event-related brain potential (ERP). Annual Review of Psychology, 62, 621–647.

Larsen, J. T., Norris, C. J., & Cacioppo, J. T. (2003). Effects of positive and negative affect on electromyographic activity over zygomaticus major and corrugator supercilii. Psychophysiology, 40, 776–785.

Lebois, L. A., Wilson-Mendenhall, C. D., & Barsalou, L. W. (2015). Are automatic conceptual cores the gold standard of semantic processing? The context-dependence of spatial meaning in grounded congruency effects. Cognitive Science, 39, 1764–1801.

Lindquist, K. A., Barrett, L. F., Bliss-Moreau, E., & Russell, J. A. (2006). Language and the perception of emotion. Emotion, 6, 125–138.

Mahon, B. Z., & Caramazza, A. (2008). A critical look at the embodied cognition hypothesis and a new proposal for grounding conceptual content. Journal of Physiology-Paris, 102, 59–70.

Moseley, R. L., & Pulvermüller, F. (2018). What can autism teach us about the role of sensorimotor systems in higher cognition? New clues from studies on language, action semantics, and abstract emotional concept processing. Cortex, 100, 149–190.

Niedenthal, P. M. (2007). Embodying emotion. Science, 316, 1002–1005.

Niedenthal, P. M., Barsalou, L. W., Winkielman, P., Krauth-Gruber, S., & Ric, F. (2005). Embodiment in attitudes, social perception, and emotion. Personality and Social Psychology Review, 9, 184–211.

Niedenthal, P. M., Brauer, M., Halberstadt, J. B., & Innes-Ker, Å. H. (2001). When did her smile drop? Facial mimicry and the influences of emotional state on the detection of change in emotional expression. Cognition & Emotion, 15, 853–864.

Niedenthal, P. M., Winkielman, P., Mondillon, L., & Vermeulen, N. (2009). Embodiment of emotion concepts. Journal of Personality and Social Psychology, 96, 1120–1136.

Oberman, L. M., Winkielman, P., & Ramachandran, V. S. (2007). Face to face: Blocking facial mimicry can selectively impair recognition of emotional expressions. Social Neuroscience, 2, 167–178.

Oosterwijk, S., Mackey, S., Wilson-Mendenhall, C., Winkielman, P., & Paulus, M. P. (2015). Concepts in context: Processing mental state concepts with internal or external focus involves different neural systems. Social Neuroscience, 10, 294–307.

Oosterwijk, S., Snoek, L., Rotteveel, M., Barrett, L. F., & Scholte, H. S. (2017). Shared states: Using MVPA to test neural overlap between self-focused emotion imagery and other-focused emotion understanding. Social Cognitive and Affective Neuroscience, 12, 1025–1035.

Oosterwijk, S., Winkielman, P., Pecher, D., Zeelenberg, R., Rotteveel, M., & Fischer, A. H. (2012). Mental states inside out: Switching costs for emotional and nonemotional sentences that differ in internal and external focus. Memory & Cognition, 40, 93–100.

Pecher, D., & Zwaan, R. A. (2017). Flexible concepts: A commentary on Kemmerer (2016). Language, Cognition and Neuroscience, 32, 444–446.

Ponari, M., Conson, M., D’Amico, N. P., Grossi, D., & Trojano, L. (2012). Mapping correspondence between facial mimicry and emotion recognition in healthy subjects. Emotion, 12, 1398–1403.

Pulvermüller, F. (2018). The case of CAUSE: Neurobiological mechanisms for grounding an abstract concept. Philosophical Transactions of the Royal Society B: Biological Sciences, 373, 20170129.

Quadt, L., Esposito, G., Critchley, H. D., & Garfinkel, S. N. (2020). Brain-body interactions underlying the association of loneliness with mental and physical health. Neuroscience & Biobehavioral Reviews, 116, 283–300.

Rives Bogart, K., & Matsumoto, D. (2010). Facial mimicry is not necessary to recognize emotion: Facial expression recognition by people with Moebius syndrome. Social Neuroscience, 5, 241–251.

Rizzolatti, G., & Craighero, L. (2004). The mirror-neuron system. Annual Review of Neuroscience, 27, 169–192.

Saysani, A., Corballis, M. C., & Corballis, P. M. (2018). Colour envisioned: Concepts of colour in the blind and sighted. Visual Cognition, 26, 382–392.

Schachter, S., & Singer, J. (1962). Cognitive, social, and physiological determinants of emotional state. Psychological Review, 69, 379–399.

Searle, J. (1980). Minds, brains and programs. Behavioral and Brain Sciences, 3, 417–457.

Seth, A. K., Suzuki, K., & Critchley, H. D. (2012). An interoceptive predictive coding model of conscious presence. Frontiers in Psychology, 2, 395. https://doi.org/10.3389/fpsyg.2011.00395

Susskind, J. M., Lee, D. H., Cusi, A., Feiman, R., Grabski, W., & Anderson, A. K. (2008). Expressing fear enhances sensory acquisition. Nature Neuroscience, 11, 843–850.

Tabossi, P., & Johnson-Laird, P. N. (1980). Linguistic context and the priming of semantic information. The Quarterly Journal of Experimental Psychology, 32, 595–603.

Wilson-Mendenhall, C. D., Barrett, L. F., & Barsalou, L. W. (2013). Situating emotional experience. Frontiers in Human Neuroscience, 7, 764.

Winkielman, P., Coulson, S., & Niedenthal, P. (2018). Dynamic grounding of emotion concepts. Philosophical Transactions of the Royal Society B: Biological Sciences, 373, 20170127.

Wu, L. L., & Barsalou, L. W. (2009). Perceptual simulation in conceptual combination: Evidence from property generation. Acta Psychologica, 132, 173–189.

Yee, E., & Thompson-Schill, S. L. (2016). Putting concepts into context. Psychonomic Bulletin & Review, 23, 1015–1027.

Zwaan, R. A. (2009). Mental simulation in language comprehension and social cognition. European Journal of Social Psychology, 39, 1142–1150.

Copyright information

© 2021 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Davis, J.D., Coulson, S., Arnold, A.J., Winkielman, P. (2021). Dynamic Grounding of Concepts: Implications for Emotion and Social Cognition. In: Robinson, M.D., Thomas, L.E. (eds) Handbook of Embodied Psychology. Springer, Cham. https://doi.org/10.1007/978-3-030-78471-3_2

DOI: https://doi.org/10.1007/978-3-030-78471-3_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-78470-6

Online ISBN: 978-3-030-78471-3

eBook Packages: Behavioral Science and Psychology (R0)