Abstract

In the past, several works have considered usability, user experience, and design principles when developing Domain-Specific Languages (DSLs). Understanding those principles when developing and evaluating a DSL is a challenge, since not all design goals have the same relevance in different types of systems or DSL domains. Researchers from the Software Engineering and Human-Computer Interaction fields, for example, have mentioned in the recent past that usability or user experience evaluations usually do not use well-defined techniques to assess the quality of software products, or do not describe them accurately. Therefore, this paper investigates whether usability evaluation methods and techniques are still a trending topic for DSL developers. To do that, we present an update to a Systematic Literature Review on usability evaluation that was performed in 2017. As a result, we identified that usability evaluation of DSLs is not only a trending topic, but that the discussions have also increased in the past years. Furthermore, in this paper, we present an extension of a taxonomy on usability evaluation of DSLs.

Keywords

- Evaluation methods and techniques

- Human-computer interaction

- Domain-specific languages

- Systematic literature review

- Usability evaluation

1 Introduction

A Domain-Specific Language (DSL) is a language with a high level of abstraction optimized for a specific class of problems. It is generally less complex than a General-Purpose Language (GPL), e.g. Python or C# [23]. Commonly, DSLs are developed in coordination with experts in the field for which the DSL is being designed. In many cases, DSLs are meant to be used not by software teams, but by non-programmers who are fluent in the domain of the DSL [9]. The implementation of DSLs can bring several benefits, such as efficiency, clearer thinking, stakeholder integration, and quality [15]. Nonetheless, despite the increase in the number of DSLs, several of them are not successful since their usability is not properly evaluated.

Usability evaluation can improve DSLs since its main goal is to identify problems that might occur during DSL use. Furthermore, usability solutions can address the cognitive, perceptive, or motor capacities of users during system interaction. Usability evaluation is usually treated as a generic process. However, some authors [3, 21] have shown that usability should be related to the evaluated domain, varying the evaluation method, the performance metrics, or even the order of the efficiency, efficacy, and satisfaction metrics, for example, depending on the application area. We believe the same applies to DSL evaluation, since there is no ready-made solution: each DSL varies according to its purpose [30]. Usability is an important requirement in DSL evaluation. Although several authors share that concern [3, 5, 6, 7, 30, 31, 32, 34], we believe there are several trending topics on the usability evaluation of DSLs that should be investigated.

Previously, a Systematic Literature Review (SLR) carried out by Rodrigues et al. [32] identified that DSL designers consider aspects of usability in the creation of DSLs. In that SLR, twelve studies were selected for analysis. Those studies were used to answer three research questions and to understand how usability was dealt with in a DSL project. Furthermore, the SLR also presented the techniques or approaches used to evaluate the usability of DSLs, and identified what kinds of problems were found during the evaluation process.

The results were used to build a taxonomy that helped researchers to design new DSLs and, mainly, to evaluate the usability of DSLs. Besides, the results of that SLR supported the identification of problems and resources for the Usa-DSL Framework [30, 31] to evaluate the usability of a DSL, in addition to assisting in the design of the Usa-DSL Process [29]. Although the SLR showed that most researchers evaluate their DSL on an ad hoc basis, or do not describe the methods used, it was important to obtain the state of the art up to that moment.

Therefore, this paper presents an update on the study from Rodrigues et al. [32]. It improves on the previous study, updating the Usa-DSL Framework and also extending the usability evaluation taxonomy regarding usability heuristics and specific guidelines for DSL evaluation.

For this study, the Systematic Literature Review (SLR) protocol [16] and Thoth [20], a web-based tool to support systematic reviews, were used. The main digital libraries used were ACM, IEEE, ScienceDirect, and Scopus. The SLR searched for papers from June 2016 to September 2020. The study allowed us to identify primary studies in both Human-Computer Interaction (HCI) and Software Engineering (SE).

In order to understand how usability evaluation of DSLs has been investigated in the past few years, we set the following three research questions: i) Was the importance of usability considered during DSL development? ii) What were the evaluation techniques that were applied in the context of DSLs? iii) What were the problems or limitations identified during the DSL usage?

During the analysis of our SLR, we found several papers that consider DSL usability evaluation an important topic [3, 5, 6, 7, 25, 30, 31, 34]. Other papers, although in a general way, present DSL usability evaluation and discuss the importance of preparation, data collection, analysis, and consolidation of results for the evaluation [13, 14, 27]. Some papers propose the use of a framework for DSL usability evaluation, e.g. USE-ME [5], whose main goal is to help DSL developers and which is used in a development life cycle that follows the Agile Manifesto; and Usa-DSL [30, 31], whose main goal is to guide the DSL usability evaluation process at a high level of abstraction regarding the metrics that will be used.

In this paper, we will also show that metrics regarding efficiency, efficacy, simplicity and ease of use, usefulness, and time spent to fulfill tasks are discussed in several papers [2, 18, 19, 28]. Those metrics are aligned with the metrics used in the Usa-DSL framework, i.e., ease of learning, ease of remembering, effort/time, perceived complexity, satisfaction, conclusion rate, task error rate, efficiency, and effectiveness. Furthermore, some papers use questionnaires [26, 33, 35], some are based on cognitive dimensions [7, 14], and others propose manual evaluation techniques [3].

Few works discuss limitations and usability problems in the analyzed DSLs [2, 13, 17, 26]. They usually discuss problems related to lack of expressiveness, either from their grammar or the domain they intend to represent. Another problem that we could identify is related to the subjects that participate in the evaluation. Usually, researchers invite DSL developers and not specialists in the DSL domain or even DSL final users.

Finally, we will present the SLR protocol and discuss the results of the SLR, which point out that usability evaluation of DSLs is still a trending topic and further research is needed. We will also present an extended taxonomy used in the Usa-DSL framework and in the Usa-DSL process to guide the development of DSL usability evaluation artifacts.

This paper is organized as follows. Section 2 presents the SLR protocol. Section 3 presents the SLR analysis and the answers to the research questions. Section 4 discusses the evolution of usability evaluation for DSLs and its trending topics. Section 5 presents a Taxonomy for DSL Evaluation. Section 6 presents the final remarks of this work.

2 Systematic Literature Review

This section presents a Systematic Literature Review (SLR) protocol, in which the main focus was to identify and analyze the trending topics of the evaluation process of DSLs. To assist the SLR protocol we use the Thoth tool. The period in which the SLR was executed was from June 2016 to September 2020. This study allowed us to identify primary studies in both Human-Computer Interaction (HCI) and Software Engineering (SE) fields.

2.1 SLR Planning

In the planning stage, we performed the following activities in order to establish an SLR protocol: the establishment of the research goals and research question, definition of the search strategy, selection of primary studies, quality assessment, definition of the data extraction strategy, and selection of synthesis methods.

The goals of the study were to examine whether HCI aspects were considered during the development of a DSL, to identify the techniques and approaches used to evaluate DSLs, and to determine whether there were problems and limitations regarding DSL evaluation when HCI techniques were used. To achieve those goals, we set the following three research questions:

- RQ1. Was the importance of usability considered during DSL development?

- RQ2. What were the evaluation techniques that were applied in the context of DSLs?

- RQ3. What were the problems or limitations identified during the DSL usage?

Search Strategy: the following digital libraries were used: ACM, IEEE, ScienceDirect, and Scopus.

Selection Criteria: the following inclusion (IC) and exclusion (EC) criteria were used:

- IC1: The study must contain at least one of the terms related to HCI evaluation in DSLs in the title or abstract;

- IC2: The study must present some type of DSL evaluation;

- EC1: The study is about evaluation but not DSLs;

- EC2: The study is not written in English;

- EC3: The study is about DSL but does not present an evaluation;

- EC4: The study is about HCI but not DSL evaluation.
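The criteria above can be read as a simple screening predicate. The following is a minimal sketch in Python, not the procedure implemented by the authors or by the Thoth tool. The `Study` record and all of its field names are hypothetical flags that a reviewer would fill in by hand after reading the title and abstract.

```python
from dataclasses import dataclass


@dataclass
class Study:
    # Hypothetical reviewer-assigned flags; none of these names come from the paper.
    has_hci_term_in_title_or_abstract: bool  # supports IC1
    presents_dsl_evaluation: bool            # supports IC2
    is_about_dsl: bool
    is_about_hci: bool
    presents_evaluation: bool
    in_english: bool


def exclusion_reasons(s: Study) -> list:
    """Return the labels of every exclusion criterion the study triggers."""
    reasons = []
    if s.presents_evaluation and not s.is_about_dsl:
        reasons.append("EC1")  # about evaluation but not DSLs
    if not s.in_english:
        reasons.append("EC2")  # not written in English
    if s.is_about_dsl and not s.presents_evaluation:
        reasons.append("EC3")  # about DSL but no evaluation
    if s.is_about_hci and not s.presents_dsl_evaluation:
        reasons.append("EC4")  # about HCI but not DSL evaluation
    return reasons


def is_included(s: Study) -> bool:
    """A study passes when both inclusion criteria hold and no exclusion applies."""
    return (
        s.has_hci_term_in_title_or_abstract
        and s.presents_dsl_evaluation
        and not exclusion_reasons(s)
    )


# Illustrative records only, not studies from the review.
relevant = Study(True, True, True, True, True, True)
gpl_only = Study(True, False, False, True, True, True)
```

Returning the list of triggered criteria, rather than a single boolean, keeps a record of why each study was rejected, which is useful when two reviewers need to reconcile their decisions.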

2.2 SLR Execution

During this phase, the search string construction, studies selection, quality assessment, data extraction and synthesis were performed. The information produced during the execution of the SLR can be accessed at Zenodo.

Search String Construction: The search string was built based on terms from DSL and HCI, from usage evaluation and usability, and their synonyms. Figure 1 shows the generic string used in the digital libraries.

Quality Assessment: This step was performed by two evaluators, who analyzed each of the studies and answered each quality assessment question with yes, partially, or no. Each answer was graded as follows: 1 for yes, 0.5 for partially, and 0 for no. After answering the 5 quality assessment questions, only studies that scored from 2.5 to 5 were considered for further analysis. Table 1 shows only the articles that were selected to be read. The final quality score is the average of the assessments of the two evaluators. The quality assessment questions were:

- QA1. Did the article make any contribution to HCI?

- QA2. Did the article present any usability evaluation technique?

- QA3. Did the article present the analysis of the results?

- QA4. Did the article describe the evaluated DSL?

- QA5. Did the article describe the encountered usability problems?
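The scoring rule described above can be sketched in a few lines of Python. This is an illustration under one assumption that the text does not settle: the 2.5 threshold is applied to the averaged score of the two evaluators. The function names and the sample answers are hypothetical.

```python
# Grades taken from the text: yes = 1, partially = 0.5, no = 0.
GRADE = {"yes": 1.0, "partially": 0.5, "no": 0.0}


def evaluator_score(answers):
    """Sum one evaluator's grades over the five questions QA1..QA5."""
    if len(answers) != 5:
        raise ValueError("expected exactly five answers (QA1..QA5)")
    return sum(GRADE[a] for a in answers)


def final_score(answers_a, answers_b):
    """Average the totals of the two evaluators."""
    return (evaluator_score(answers_a) + evaluator_score(answers_b)) / 2


def is_selected(score, threshold=2.5):
    """Assumption: the 2.5 to 5 range is checked on the averaged score."""
    return threshold <= score <= 5.0


# Hypothetical answers for a single study, not data from the review.
evaluator_a = ["yes", "yes", "partially", "yes", "no"]        # total 3.5
evaluator_b = ["yes", "partially", "partially", "yes", "no"]  # total 3.0
score = final_score(evaluator_a, evaluator_b)
```

With these sample answers the averaged score is 3.25, so the study would be kept for full reading.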

Primary Studies Selection: The performed search, based on the search string for each database, returned the number of studies presented in Fig. 2.

In the first phase of the SLR, 44 papers returned from ACM, 39 from IEEE, 146 from Scopus, and 14 from ScienceDirect, resulting in 243 papers. When eliminating duplicate papers and applying the inclusion, exclusion, and quality criteria, 21 papers remained, which were thoroughly read. Figure 2 shows the number of papers that were selected after each phase.
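As a quick consistency check on the figures above, the per-library counts can be summed and the share of retained papers computed. This is only arithmetic on the numbers reported in the text; the variable names are illustrative.

```python
# Papers returned per digital library, as reported in the text.
returned = {"ACM": 44, "IEEE": 39, "Scopus": 146, "ScienceDirect": 14}

total_returned = sum(returned.values())   # initial result set
selected = 21                             # papers read in full
retention_pct = round(selected / total_returned * 100, 1)
```

The sum matches the 243 papers reported, and roughly one paper in twelve survived duplicate removal and the inclusion, exclusion, and quality criteria.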

3 SLR Analysis and Answers to Research Questions

This section presents the answers to the research questions from Sect. 1. The answers are based on the 21 studies selected in Sect. 2.2.

RQ1. Was the importance of usability considered during DSL development?

The importance of usability in the development or evaluation of DSLs was discussed in most of the selected studies. However, some studies cite usability evaluation instruments but do not describe the process itself. In a nutshell: i) some of these studies evaluate the environment in which the DSL is developed, without evaluating the language itself; ii) some define usability criteria to evaluate the language, but without relating them to the quality of use criterion; iii) some studies compared a GPL with the DSL, also without discussing the usability process that could be involved in the development and evaluation of these languages. In the next paragraphs we summarize each of the studies analyzed in this paper.

First we mention papers that are related to usability of DSL somehow, but do not consider usability during the DSL development [2, 12, 13, 18, 19, 27, 28, 33].

Alhaag et al. [2] presented a user evaluation to identify language usefulness. The effectiveness and efficiency characteristics were measured based on the results of a task given to the participants and the time spent to complete the task. Furthermore, five other characteristics were evaluated: satisfaction, usefulness, ease of use, clarity, and attractiveness, through a subjective questionnaire created in accordance with the Common Industry Format (CIF) for usability test reports.

Nandra and Gorgan [27] adopted an evaluation process to compare the use of a GPL to a DSL, but there was no discussion on usability criteria. They made a comparison between Python and the WorDeL DSL, using as criteria the average time in which the participants perform a certain task, code correctness, syntax errors, number of interactions in the code editing area (such as mouse clicks and key presses), and task execution precision.

Nosál et al. [28] addressed the user experience, without relating it to usability criteria. This study presented an experiment with participants who had no programming knowledge. The participants were organized into two groups to verify whether a customized IDE would facilitate the comprehension of the syntax of a programming language when compared to a standard IDE.

Finally, Henriques et al. [12] presented a DSL usability evaluation through SUS (System Usability Scale), which is a numerical usability evaluation scale with a focus on effectiveness, efficiency, and user satisfaction; Liu et al. [18] evaluated the web platform that runs a DSL, but not the language itself; and, Rodriguez-Gil [33] used an adaptation of UMUX (Usability Metric for User Experience), which is an adaptation of SUS.
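Since SUS appears in several of the reviewed studies, a short sketch of its standard scoring rule may help. In the standard scheme, the questionnaire has ten items answered on a 1 to 5 scale; odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name is illustrative, and the reviewed papers may have used adapted variants of the questionnaire.

```python
def sus_score(responses):
    """Compute a standard SUS score (0 to 100) from ten answers on a 1 to 5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS has exactly ten items")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item, response in enumerate(responses, start=1):
        if item % 2 == 1:
            total += response - 1   # odd items are positively worded
        else:
            total += 5 - response   # even items are negatively worded
    return total * 2.5


# Hypothetical answer sheets, not data from any reviewed study.
neutral = sus_score([3] * 10)
best = sus_score([5, 1] * 5)
```

A sheet of all-neutral answers yields 50, and the most favorable sheet yields 100, which is why SUS results are usually reported on a 0 to 100 scale rather than as raw sums.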

Regarding usability analysis during the DSL development process, the following studies considered it in their research [3, 5, 6, 7, 11, 14, 17, 25, 26, 30, 31, 34, 35].

Shin-Shing [34] indicated that more studies evaluate usability in terms of effectiveness and efficiency than about other usability criteria. In that study, a measure of the feasibility of Model Driven Architecture (MDA) techniques was also made in terms of effectiveness and efficiency. However, there was no description of the process for usability evaluation.

Cachero et al. [7] presented a performance evaluation between two DSL notations, one textual and one graphical. For their evaluation, a Cognitive Dimensions Framework (CDF) was used. This framework defines a set of constructs to extract values for different notations, so that the differences in language usability are, at least partially, observed. Usability is understood here as the extent to which a product can be used by participants to achieve particular goals with effectiveness, efficiency and satisfaction in a specific use context.

Hoffmann et al. [14] stated that, despite being a very important task, usability evaluation is often overlooked in the development of DSLs. For DSLs that are translated into other languages, a first impression of the efficiency of a DSL can be obtained by comparing the number of lines of code (LoC) to the generated output. In their paper, they presented a heuristic evaluation in which some cognitive dimensions of the CDF are observed. However, an evaluation is not performed.

Msosa [26] observed through a survey of studies that the Computer-Interpretable Guideline (CIG) has no emphasis on the usability of the modeling language, since aspects of usability or human factors are rarely evaluated. This can result in the implementation of inappropriate languages. Furthermore, incompatible domain abstractions between language users and language engineers remain a recurring problem with regard to language usability. To evaluate the presented language, a survey was conducted, using the System Usability Scale (SUS) questionnaire, in order to obtain the participants’ perception of the DSL.

Le Moulec et al. [17] focused on the importance of DSL documentation, which, they claimed, leads to a better understanding of the language. In their study, a tool was proposed to automate the production of documentation based on artifacts generated during the DSL implementation phase, and an experiment was carried out using the tool in two DSLs. Furthermore, they observed the efficiency in automating language documentation and, consequently, improving usability.

Barisic et al. [6] defined DSL usability as the degree to which a language can be used by specific users to reach specific goals with effectiveness, efficiency and satisfaction, within a specific context of use. The authors also mentioned that, although there is a lack of general guidelines and of a properly defined process for conducting language usability evaluation, such evaluation is slowly being recognized as an important step. In another study, Barisic et al. [5] argued that there is still little consideration of user needs when developing a DSL. They also mentioned that, even though the creation of DSLs may seem intuitive, it is necessary to have means to evaluate its impact. This can be performed using a real context of use, with users from the target domain.

Bacíková et al. [3] indicated that DSLs are directly related to the usability of their domain. They also argued that it is important to consider domain-specific concepts, properties and relationships, especially when designing a DSL. The study also considered discussions related to the User Interface.

Poltronieri et al. [31] mentioned that domain engineers aim, through different languages, to mitigate difficulties encountered in the development of applications using traditional GPLs. One way to mitigate these difficulties would be through DSLs. Therefore, DSLs should have their usability adequately evaluated, in order to extract their full potential. In their study, a framework for evaluating the usability of DSLs was proposed.

Mosqueira-Rey and Alonso-Ríos [25] also indicated an increase in research related to the evaluation of usability of DSLs through subjective and empirical methods. Although there are studies based on heuristics for interface evaluation, there is a shortage with regard to heuristic methods.

In order to reflect the user needs, Gilson [11] found that usability related to Software Language Engineering (SLE) has been poorly addressed, despite DSLs directly involving end users. For the author, evaluating the theoretical and technical strength of a DSL structure is very common. Nonetheless, usability issues are often overlooked in these evaluations.

Table 2 presents a summary of the topics that were discussed in each analyzed paper regarding the importance of usability considered during the DSL development.

RQ2. What were the evaluation techniques that were applied in the context of DSLs?

Researchers use quantitative (14/21) or qualitative (13/21) data to analyze the DSL usability. Several use both (8/21) and only in two situations we could not identify the data type that was used by the researchers.
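The counts above overlap, since a paper that uses both data types is counted in both groups. A short inclusion-exclusion check, using only the figures reported in the text, shows that they are consistent with the 21 selected studies. The variable names are illustrative.

```python
total_studies = 21
quantitative = 14   # includes papers that also use qualitative data
qualitative = 13    # includes papers that also use quantitative data
both = 8
unidentified = 2

# Inclusion-exclusion: papers using at least one identified data type.
at_least_one = quantitative + qualitative - both
only_quantitative = quantitative - both
only_qualitative = qualitative - both
```

Here 19 papers use at least one identified data type (6 quantitative only, 5 qualitative only, 8 both), and adding the 2 unidentified cases gives the full set of 21.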

Regarding technique, Usability Evaluation (8/21) was the most used one. Other techniques were also used, i.e., Usability Testing and Heuristic Evaluation. Several papers (8/21) did not describe which technique was used.

Different instruments were applied in the usability evaluation. Questionnaire was the most used instrument (13/21), but other instruments to support data gathering, such as logs, scripts, interviews, audio and video recordings and other tools that observe the tasks performed by the users during the evaluation were also used. Only two papers did not describe the instruments that were used in the usability evaluation.

Table 3 shows a summary of the evaluation techniques, instruments and data types that each study uses.

RQ3. What were the problems or limitations identified during the DSL usage?

The analyzed papers present some limitations or problems regarding either the evaluated DSL or the evaluation process that they performed. Of the 21 selected articles, not all present the problems found in their DSL (see Table 4). Only Hesenius and Gruhn [13], Henriques et al. [12], Liu et al. [18] and Nosál et al. [28] somehow present the encountered problems. The other articles report general limitations, that is, limitations in the designed DSL or in the evaluations. Bacíková et al. [3], Rodriguez et al. [33] and Silva et al. [35] did not present any problem or limitation in their studies.

Regarding the study by Hesenius and Gruhn [13], the GestureCards notation has some limitations in special circumstances, and two potential problems were described: the notation can become voluminous, and there are difficulties in the description of spatial gestures. These problems occur because GestureCards uses spatial positioning to denote temporal relations of partial gestures. Thus, the volume problem occurs when the gestures are composed of multiple partial gestures that are defined separately. It was suggested that, to avoid those problems, the parallel gestures can be combined in a graphic representation when shared with the other features. The authors argue that this will be sufficient for most of the use cases.

Liu et al. [18] discussed the organizational problems for business people, as employees use their own mobile devices to process workflow tasks. Due to that, a middleware-based approach, called MUIT (Mobility, User Interactions and Tasks), was introduced to develop and deploy mobility, user interactions and tasks in Web Services Business Process Execution Language (WS-BPEL) mechanisms. This DSL significantly reduces the manual effort required from developers with regard to user interactions, avoids the use of more than one type of code, and thus offers satisfactory support for user experiences. The authors pointed out that some users from the healthcare area still complain that MUIT touch controls are not good when they process electronic patient records. However, no limitations of the study were pointed out.

Henriques et al. [12] presented the OutSystems platform, a development environment composed of several DSLs, used to specify, build and validate data from Web applications on mobile devices. The DSL for Business Process Technology (BPT) process modeling had a low adoption rate due to usability problems, which increased maintenance costs. Furthermore, they found a limitation related to population selection: due to resource limitations, all participants in the usability experiments were members of OutSystems. The authors believe that business managers should also be invited and that more experiments should therefore be conducted with interested parties.

Mosqueira-Rey and Alonso-Ríos [25] also identified usability problems in their case study, which uses heuristics that help to identify real usability problems. As problems they pointed out that DSL identifiers do not have a clear meaning, some acronyms have no obvious meaning and certain identifiers are difficult to remember.

The results of Nosál et al. [28] indicate that even IDE customizations can significantly alleviate the problems caused by syntactic issues in the language. As limitations, the authors mentioned the low representativeness of the evaluated Embedded DSL (EDSL) when compared to real-world DSLs. An EDSL can be much more complex from a syntactic point of view, as it can include variables, functions, structures, etc. The benefits of the proposed technique (for example, file templates) may become insignificant due to the complexity of the language syntax. Therefore, generalization of the results to all EDSLs is not possible, and a replication of the experiment with a more complex EDSL is necessary. In terms of domain abstractions, the study by Msosa [26] mentioned that the incompatibility of some domain abstractions is a limitation that can present some barriers and negatively impact the usability of a DSL.

Shin-Shing [34] analyzed techniques for reverse engineering and model transformations in Model Driven Architecture (MDA). Their evaluation was performed using usability metrics, i.e. productivity and efficiency. In their paper, they concluded that such techniques are still immature and superficial, requiring further studies in order to improve them. They emphasized that, before performing further usability evaluations, they first needed to pay more attention to the techniques and methods used during the MDA development phases.

Nandra and Gorgan [27] presented and evaluated the interface of the WorDeL DSL, which was designed to facilitate the connection of existing processing operations in high-level algorithms. The authors compared the WorDeL DSL with the Python language. In their comparison, Python showed better results in terms of the time to describe each task. However, the WorDeL DSL produced better results in terms of accuracy, with higher percentages of tasks completed correctly. WorDeL was also better in terms of accuracy when evaluated for its expressiveness.

Hoffmann et al. [14] did not present limitations on the evaluation, but mentioned that the number of lines of code can be considered a problem with regard to DSL usability. The authors mentioned that “For DSLs that are translated into other languages, the efficiency of a DSL can be obtained by comparing the number of lines of code between the source program and the generated output”. They also used McCabe’s cyclomatic complexity [22] to analyze the language. Both lines of code and cyclomatic complexity are quantitative measures that can be applied to roughly estimate the effort to understand, to test and to maintain a program. Furthermore, the DSL was not used by end users.
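Both measures mentioned above are easy to compute. The sketch below is illustrative and is not the tooling used by Hoffmann et al. It uses the standard definition of cyclomatic complexity for a control-flow graph, M = E - N + 2P (edges, nodes, connected components), and a lines-of-code ratio between generated output and DSL source. The direction of the ratio, the rule of skipping blank lines, and all sample data are assumptions made for this example.

```python
def cyclomatic_complexity(nodes, edges, components=1):
    """McCabe's measure for a control-flow graph: M = E - N + 2P."""
    return len(edges) - len(nodes) + 2 * components


def count_loc(text):
    """Count non-blank lines (an assumed, deliberately simple LoC rule)."""
    return sum(1 for line in text.splitlines() if line.strip())


def expansion_ratio(dsl_source, generated_output):
    """Generated LoC divided by DSL LoC; a higher value means a more compact DSL."""
    source_loc = count_loc(dsl_source)
    if source_loc == 0:
        raise ValueError("DSL source has no code lines")
    return count_loc(generated_output) / source_loc


# Control-flow graph of a single if/else statement (hypothetical example).
nodes = ["cond", "then", "else", "exit"]
edges = [("cond", "then"), ("cond", "else"), ("then", "exit"), ("else", "exit")]
m = cyclomatic_complexity(nodes, edges)

# Hypothetical two-line DSL program and its six-line generated output.
dsl = "rule a\nrule b\n"
out = "x = 1\n\ny = 2\nz = 3\nprint(x)\nprint(y)\nprint(z)\n"
ratio = expansion_ratio(dsl, out)
```

For the if/else graph the complexity is 2, matching the two independent paths through the code, and the sample program expands by a factor of 3. As the text notes, such numbers are only rough proxies for effort and say little about how end users experience the language.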

Le Moulec et al. [17] found limitations regarding the contextualization of the documentation. Specifically, the model is customized to correspond to the DSL documentation concept. However, the provided examples had no context that was easily related to the original model. Another limitation was related to code compliance: the generated documentation did not help new users to build the models faster. Furthermore, some participants read all the available documentation before starting the exercises, while others did that when they started to use the editor. Therefore, the subjects spent around 30 min to perform the basic exercise, showing that the initial learning curve is a challenge to be faced in future works. Similarly, Le Moulec et al., and Logre and Déry-Pinna [19], mentioned that the relevance of the participants’ choice was not analyzed, making this a limitation.

Barisic et al. [6] mentioned that the selection of participants might be a limitation in their study. They tried to mitigate the problem through tutors that would help the participants when they needed to answer the questions. This helped to guarantee the validity and integrity of the results. Likewise, the study by Alhaag et al. [2] also presented the background of the participants as a limitation for their study. As a solution to mitigate this threat, Alhaag et al. indicated that the platform should be evaluated by domain experts with little technical knowledge in order to better explore new metadata in their domain. Like the previous two studies, Gilson et al. [11] also described the lack of evaluation with end users as a limitation for their study.

Poltronieri et al. [30, 31] presented a framework focused on evaluating the usability of DSLs, called Usa-DSL framework. The authors pointed out the lack of a flow or process that would help the participants in the creation of the evaluation, guiding their steps, what should be done and at what time to perform a certain activity. Unlike the framework of Poltronieri et al., Barisic et al. [5] focused only on evaluating usability with end users and through experimental studies.

4 Evolution of Usability Evaluation for DSL

This section summarizes the evolution of research on usability evaluation of DSLs since Rodrigues et al. [32]. In the past 5 years there was a significant increase in published studies on usability evaluation of DSLs. The previous Systematic Literature Review (SLR) was performed without limiting the initial year and looked for papers that were published until 2016. In that SLR, 12 papers were selected. The current SLR selected 21 papers that were published from 2016 to 2020. This represents an increase of 75% in the number of selected papers that are somehow related to usability evaluation of DSLs. Hence, usability evaluation of DSLs is still a trending topic. Actually, it seems that research in this area is increasing. Some of the analyzed papers state this more directly [1, 5, 12, 14, 18, 31, 36] than others.
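The 75% figure follows directly from the two paper counts reported above. A minimal sketch of the calculation, with an illustrative function name:

```python
def percent_increase(old, new):
    """Relative growth from old to new, expressed as a percentage."""
    if old <= 0:
        raise ValueError("old count must be positive")
    return (new - old) / old * 100


previous_slr_papers = 12   # selected in the earlier SLR (papers until 2016)
current_slr_papers = 21    # selected in this SLR (2016 to 2020)
increase = percent_increase(previous_slr_papers, current_slr_papers)
```

Note that the two reviews cover periods of different lengths: the earlier one had no lower bound on the publication year, while the current one spans roughly four years.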

One interesting difference between the previous SLR and this one is that, in the previous SLR, authors seemed to be interested in evaluating their DSL without any concern for the protocol, technique and instruments that were used [1, 8, 10]. In the current SLR, there was a clear increase in the concern with the development or organization of the techniques and instruments that are used to evaluate DSL usability [4, 12, 13, 35]. Furthermore, new protocols, frameworks and even processes to evaluate DSL usability have been proposed. For example, Barisic et al. [5] and Poltronieri et al. [31] developed frameworks to evaluate DSL usability focusing on the ease of use. In addition, Bacíková et al. [3] presented ways to automate the evaluation, while Mosqueira-Rey et al. [24] created some heuristics to evaluate DSL usability.

Although authors have become more concerned with using well-defined techniques, instruments and processes to evaluate the usability of DSLs, they still neglect to properly describe the problems or limitations that they find when evaluating DSL usability. Usually, authors describe problems or limitations found when the DSL is used, but neglect to describe the DSL usability problems. It seems that the focus has been on whether users can use the language rather than on identifying the usability problems that the language contains. For example, few studies present user perception, use satisfaction, system intuitiveness, or real-world representation, among others. Some authors [13, 14] even use some terms (i.e. number of lines of code, usage time, efficiency and efficacy) that are not directly related to usability. This might be one of the reasons why several DSLs are not successful.

5 Evolution Taxonomy for DSL Evaluation

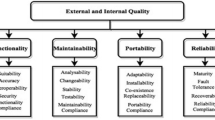

Based on the selected studies and the research questions, this section presents a taxonomy extension of terms used during the evaluation of DSLs. This taxonomy was structured as a conceptual mapping (see Fig. 3). This taxonomy was based on the terms that were mentioned in the studies selected in our previous SLR [32] and in the SLR presented in this paper.

Figure 3 shows the main groups of categories represented as the external rectangles: framework/approaches, data type, usability evaluation methods, instruments, user profile, software engineering evaluation method and evaluation metrics. In each of these groups, there is a set of categories. For example, a user profile can have the following categories: HCI expert, DSL expert, potential user, final user. This figure also presents new categories and one new group (i.e. process) that were not present in the previous taxonomy. These new categories are represented by gray rectangles in the figure. Basically, the new categories are: i) frameworks: Usa-DSL framework and USE-ME; ii) process: Usa-DSL process; iii) instruments: data log, challenge solution, video recording, task recording; iv) Software Engineering Evaluation Method: quasi-experiment; and, v) metrics: conciseness, readability and comprehensibility. Furthermore, the figure also shows some categories represented by dashed round rectangles. These categories are not directly mentioned in the studies presented in either the previous SLR or this one, but are important in the development of a framework and process to evaluate DSLs.
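For readers who want to work with the taxonomy programmatically, the groups and categories named in the paragraph above can be captured in a small data structure. The sketch below is partial by design: it contains only the entries that the text spells out, so the data type and usability evaluation methods groups are omitted and the full Fig. 3 has more categories. The dictionary layout and the helper name are assumptions made for illustration.

```python
# Each group maps category name -> True when the text marks it as new
# in this extension (gray rectangles in Fig. 3), False otherwise.
TAXONOMY = {
    "framework/approaches": {"Usa-DSL framework": True, "USE-ME": True},
    "process": {"Usa-DSL process": True},
    "instruments": {
        "data log": True,
        "challenge solution": True,
        "video recording": True,
        "task recording": True,
    },
    "software engineering evaluation method": {"quasi-experiment": True},
    "evaluation metrics": {
        "conciseness": True,
        "readability": True,
        "comprehensibility": True,
    },
    "user profile": {
        "HCI expert": False,
        "DSL expert": False,
        "potential user": False,
        "final user": False,
    },
}


def new_categories(taxonomy):
    """Return, per group, the sorted categories flagged as new."""
    result = {}
    for group, categories in taxonomy.items():
        added = sorted(name for name, is_new in categories.items() if is_new)
        if added:
            result[group] = added
    return result


added = new_categories(TAXONOMY)
new_count = sum(len(names) for names in added.values())
```

Keeping the "new" flag next to each category makes it straightforward to compare this extension against the earlier taxonomy without maintaining two separate structures.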

Consistent with what we found when answering the research questions presented in this paper, this taxonomy reflects the lack of standards when planning and applying DSL usability evaluation. Many of the authors mention that they do not use standard techniques, methods or instruments when they evaluate their DSL. However, some authors have been developing new frameworks and processes to systematize the evaluation and help language engineers use standard methods, instruments and metrics for the usability evaluation of DSLs.

6 Final Remarks

The literature has shown that issues related to usability, user experience and design principles have been increasingly considered in DSL projects. Recognizing and understanding the relationship among these principles in the development and evaluation of DSLs has been a challenge, as not all interaction design goals have the same relevance in all types of systems or, in the case of DSLs, in all DSL domains.

Therefore, DSL usability evaluation remains an important research topic [3, 5, 6, 25, 30, 31], since there are still several issues that need to be improved. For example, several authors who evaluate DSL usability do not use HCI methods, and several papers do not properly describe how the evaluation was executed [2, 7, 11, 17, 19, 27, 28, 34]. Some authors evaluate usability using well-defined instruments but do not use specific methods, while others use ad-hoc methods [13, 33]. Sometimes this lack of proper evaluation leads to the DSL being unsuccessful: when users are unable to use the language to its full extent, or need to follow many steps and remember different paths, they end up giving up.

Another relevant point is that language developers still do not use the available usability frameworks or processes. Nonetheless, some researchers have proposed new ways to evaluate DSL usability. In this paper we presented an extension to the previously published taxonomy for usability evaluation of DSLs. It is interesting to notice that, five years after the first taxonomy was published, researchers have used new categories when evaluating their DSLs.

Although the focus of this SLR was not to analyze tools to support the usability evaluation of DSLs, this is a subject that should be better investigated. This would allow researchers to understand how usability evaluation of DSLs is planned, executed, analyzed and reported by those tools.

Notes

1. Thoth: http://lesse.com.br/tools/thoth/.
2. ACM: http://portal.acm.org/.
3. IEEE: http://ieeexplore.ieee.org/.
4. ScienceDirect: http://www.sciencedirect.com/.
5. Scopus: https://www.scopus.com/.

References

Albuquerque, D., Cafeo, B., Garcia, A., Barbosa, S., Abrahão, S., Ribeiro, A.: Quantifying usability of domain-specific languages: an empirical study on software maintenance. J. Syst. Softw. 101, 245–259 (2015)

Alhaag, A.A., Savic, G., Milosavljevic, G., Segedinac, M.T., Filipovic, M.: Executable platform for managing customizable metadata of educational resources. Electron. Libr. 36(6), 962–978 (2018)

Bacikova, M., Galko, L., Hvizdova, E.: Manual techniques for evaluating domain usability. In: 14th International Scientific Conference on Informatics, pp. 24–30 (2017)

Bacikova, M., Maricak, M., Vancik, M.: Usability of a domain-specific language for a gesture-driven IDE. In: Federated Conference on Computer Science and Information Systems (FedCSIS), pp. 909–914 (2015)

Barisic, A., Amaral, V., Goulão, M.: Usability driven DSL development with USE-ME. Comput. Lang. Syst. Struct. 51, 118–157 (2018)

Barisic, A., Cambeiro, J., Amaral, V., Goulão, M., Mota, T.: Leveraging teenagers feedback in the development of a domain-specific language: the case of programming low-cost robots. In: 33rd Symposium on Applied Computing (SAC), pp. 1221–1229. SAC, ACM (2018)

Cachero, C., Melia, S., Hermida, J.M.: Impact of model notations on the productivity of domain modelling: an empirical study. Inf. Softw. Technol. 108, 78–87 (2019)

Cuenca, F., Bergh, J., Luyten, K., Coninx, K.: A user study for comparing the programming efficiency of modifying executable multimodal interaction descriptions: a domain-specific language versus equivalent event-callback code. In: 6th Workshop on Evaluation and Usability of Programming Languages and Tools, pp. 31–38. ACM, New York (2015)

Fowler, M.: Domain Specific Languages. 1 edn. Addison-Wesley (2010)

Gibbs, I., Dascalu, S., Harris, F.C., Jr.: A separation-based UI architecture with a DSL for role specialization. J. Syst. Softw. 101, 69–85 (2015)

Gilson, F.: Teaching software language engineering and usability through students peer reviews. In: 21st International Conference on Model Driven Engineering Languages and Systems: Companion Proceedings, pp. 98–105 (2018)

Henriques, H., Lourenço, H., Amaral, V., Goulão, M.: Improving the developer experience with a low-code process modelling language. In: 21st International Conference on Model Driven Engineering Languages and Systems, pp. 200–210 (2018)

Hesenius, M., Gruhn, V.: GestureCards-a hybrid gesture notation. Human-Computer Interaction 3(EICS), 1–35 (2019)

Hoffmann, B., Chalmers, K., Urquhart, N., Guckert, M.: Athos - a model driven approach to describe and solve optimisation problems: an application to the vehicle routing problem with time windows. In: 4th International Workshop on Real World Domain Specific Languages (RWDS). ACM (2019)

Kelly, S., Tolvanen, J.: Domain-Specific Modeling: Enabling Full Code Generation. IEEE Computer Society, Wiley-Interscience, Hoboken (2008)

Kitchenham, B.: Guidelines for performing systematic literature reviews in software engineering. Technical report, Keele University, Durham, UK (2007)

Le Moulec, G., Blouin, A., Gouranton, V., Arnaldi, B.: Automatic production of end user documentation for DSLs. Comput. Lang. Syst. Struct. 54, 337–357 (2018)

Liu, X., Xu, M., Teng, T., Huang, G., Mei, H.: MUIT: a domain-specific language and its middleware for adaptive mobile web-based user interfaces in WS-BPEL. IEEE Trans. Serv. Comput. 12(6), 955–969 (2019)

Logre, I., Déry-Pinna, A.M.: MDE in support of visualization systems design: a multi-staged approach tailored for multiple roles. Hum.-Comput. Interact. 2, 1–17 (2018)

Marchezan, L., Bolfe, G., Rodrigues, E., Bernardino, M., Basso, F.P.: Thoth: a web-based tool to support systematic reviews. In: 2019 International Symposium on Empirical Software Engineering and Measurement, pp. 1–6 (2019)

Mator, J., Lehman, W., Mcmanus, W., Powers, S., Tiller, L., Unverricht, J., Still, J.: Usability: adoption, measurement, value. Hum. Factors J. Hum. Factors Ergon. Soc. (2020)

McCabe, T.J.: A complexity measure. IEEE Trans. Softw. Eng. SE–2(4), 308–320 (1976)

Mernik, M., Heering, J., Sloane, A.: When and how to develop domain-specific languages. ACM Comput. Surv. 37, 316–344 (2005)

Mosqueira-Rey, E., Alonso-Ríos, D.: Usability heuristics for domain-specific languages (DSLs). In: 35th Symposium on Applied Computing, pp. 1340–1343. ACM (2020)

Mosqueira-Rey, E., Alonso-Ríos, D.: Usability heuristics for domain-specific languages. In: 35th Symposium on Applied Computing (SAC), pp. 1340–1343 (2020)

Msosa, Y.J.: FCIG grammar evaluation: a usability assessment of clinical guideline modelling constructs. In: Symposium on Computers and Communications, pp. 1141–1146 (2019)

Nandra, C., Gorgan, D.: Usability evaluation of a domain specific language for defining aggregated processing tasks. In: 15th International Conference on Intelligent Computer Communication and Processing, pp. 87–94 (2019)

Nosal, M., Poruban, J., Sulir, M.: Customizing host IDE for non-programming users of pure embedded DSLs: a case study. Comput. Lang. Syst. Struct. 49, 101–118 (2017)

Poltronieri, I., Zorzo, A.F., Bernardino, M., Medeiros, B., de Borba Campos, M.: Heuristic evaluation checklist for domain-specific languages. In: 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications - Volume 2: HUCAPP, pp. 37–48. SciTePress, INSTICC (2021)

Poltronieri, I., Zorzo, A.F., Bernardino, M., de Borba Campos, M.: USA-DSL: Usability evaluation framework for domain-specific languages. In: 33rd Symposium on Applied Computing (SAC), pp. 2013–2021. ACM (2018)

Poltronieri, I., Zorzo, A.F., Bernardino, M., de Borba Campos, M.: Usability evaluation framework for domain-specific language: a focus group study. ACM SIGAPP Appl. Comput. Rev. 18, 5–18 (2018)

Rodrigues, I., de Borba Campos, M., Zorzo, A.: Usability evaluation of domain-specific languages: a systematic literature review. In: 19th International Conference on Human-Computer Interaction, pp. 522–534 (2017)

Rodríguez-Gil, L., García-Zubia, J., Orduña, P., Villar-Martinez, A., López-De-Ipiña, D.: New approach for conversational agent definition by non-programmers: a visual domain-specific language. IEEE Access 7, 5262–5276 (2019)

Shin, S.S.: Empirical study on the effectiveness and efficiency of model-driven architecture techniques. Softw. Syst. Model. 18(5), 3083–3096 (2019)

Silva, J., et al.: Comparing the usability of two multi-agents systems DSLs: SEA\_ML++ and DSML4MAS study design. In: 3rd International Workshop on Human Factors in Modeling, vol. 2245, pp. 770–777 (2018)

Sinha, A., Smidts, C.: An experimental evaluation of a higher-ordered-typed-functional specification-based test-generation technique. Empir. Softw. Eng. 11, 173–202 (2006)

Acknowledgements

This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001. Avelino F. Zorzo is supported by CNPq (315192/2018-6).


Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Poltronieri, I., Pedroso, A.C., Zorzo, A.F., Bernardino, M., de Borba Campos, M. (2021). Is Usability Evaluation of DSL Still a Trending Topic?. In: Kurosu, M. (eds) Human-Computer Interaction. Theory, Methods and Tools. HCII 2021. Lecture Notes in Computer Science(), vol 12762. Springer, Cham. https://doi.org/10.1007/978-3-030-78462-1_23


DOI: https://doi.org/10.1007/978-3-030-78462-1_23


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-78461-4

Online ISBN: 978-3-030-78462-1

eBook Packages: Computer Science (R0)