Abstract

How do people make decisions, what are the driving forces, and what ethical and moral failings affect them? The explanatory pattern of human rationality is subject to disturbances different from those affecting AI. The concept of human rationality is introduced, along with its importance in modeling and as a basis for argumentation. Its shortcomings are demonstrated with a concrete economic example, showing that economically irrational decisions are prevalent. A set of cognitive biases caused by statistical misinterpretation is elaborated and explained using concrete examples from COVID-19. Humans’ significant tendency to “cheat a little” is examined, and Dan Ariely’s “fudge factor” is explained. In particular, it is demonstrated how this problem of immoral behavior grows with the further rollout of AI technologies. As a further example, an initiative that appears ostensibly praiseworthy in ethical and moral terms, the movement toward inclusive perspectives in the investment industry, is analyzed against the background of limited moral responsibility. Finally, concepts are presented for fostering morality in a sensible manner by taking joint responsibility.


Keywords

- AI

- Base rate fallacy

- Behavioral economics

- CAPM

- Confirmation bias

- COVID-19

- Egocentric bias

- Escape pattern

- Fudge factor

- Group think

- Inclusive capitalism

- Infection rate

- Halo effect

- Homo oeconomicus

- Irrationality

- LCA

- Overconfidence bias

- Prevalence

- Principal–agent theorem

- Rationality

- Reproduction rate

- SAFe

- Stewardship

1 Learning Objectives

1. Identify the importance of a rationality-based view in economic models and approaches.
2. Assess the limitations of this one-sided approach in both economic and ethical terms.
3. Explain deficiencies such as irrationality and cognitive biases, identify their causes, and establish a moral distance from them.
4. Recognize the ethical consequences of misinterpreting or misunderstanding data sets.
5. Reflect on recent trends in the convergence of morals and economics and critically identify the motivation behind them.
6. Realize the additional ethical challenge that AI technologies impose on decision-making as psychological distance increases.

2 The Concept of Rationality

From the discussion of preference utilitarianism (see Sect. 1.4.2), it can be deduced that even in difficult situations (e.g., the “trolley” problem), the best result for the respective context (a minimized negative or maximized positive outcome) can be achieved through rational decision-making. This functional rationalism is inherent in many economic models. It assumes that human beings always act so as to maximize their benefit, i.e., in a purpose-oriented way. This logically founded ideal type, called “homo oeconomicus,” does not exist in reality at all, since humans are not only rational but also driven by emotion. In this respect, a purely economic view is not enough. Therefore, findings from psychology are increasingly being taken into account. Examples are behavioral finance (i.e., the “psychology of investors” and the study of shareholder behavior) and behavioral risk management (Shefrin 2016) (i.e., the “pathology” of organizations in dealing with risks).

The cognitive limitations of individuals and also of groups can lead to misjudgments and thus often to worse results than a rational decision would promise.

Finally, another problem area is that the motivation of individuals is not necessarily congruent with the company’s goals (see also Sect. 1.3, which dealt with the discrepancy between individual and societal perceptions).

In the following, the different problem areas are briefly discussed.

3 Rationality as an Explanatory Pattern

Most management approaches are still based on the assumption of rational behavior. Economic models that remain influential today rest on the assumption of a “homo oeconomicus,” although this is known to be an inadequate description of how people actually act. An example from the financial market illustrates this: Many of the models used today, such as the still predominant capital market theories, are based on Markowitz’s portfolio theory from the 1950s (Brealey et al. 2016) and on the Nobel Prize-winning Capital Asset Pricing Model (“CAPM”) built on it by W.F. Sharpe and others. Without going into detail, the positive relationship between expected return and risk (“no risk, no fun”) is certainly rationally plausible. Yet markets repeatedly behave highly irrationally, as can be seen whenever a company’s share price falls despite good operating results, or vice versa. It is therefore not surprising that a model that was not designed for prediction (but is nevertheless used for it) matches reality only to a limited extent.
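For readers who want to see the “no risk, no fun” relationship in formal terms, a minimal sketch of the textbook CAPM relationship follows: E[R_i] = r_f + β_i (E[R_m] − r_f). The risk-free rate, market return, and beta values are illustrative assumptions, not data from this chapter. The point is that the model makes expected return a strictly linear function of systematic risk, which is exactly the rational idealization that real market behavior keeps violating.

```python
def capm_expected_return(risk_free: float, beta: float, market_return: float) -> float:
    """Expected return of an asset under the CAPM: r_f + beta * (E[R_m] - r_f)."""
    market_risk_premium = market_return - risk_free
    return risk_free + beta * market_risk_premium


if __name__ == "__main__":
    # Illustrative (assumed) inputs: 1% risk-free rate, 7% expected market return.
    for beta in (0.5, 1.0, 1.5):
        expected = capm_expected_return(risk_free=0.01, beta=beta, market_return=0.07)
        print(f"beta = {beta:.1f} -> expected return {expected:.1%}")
```

With these assumed inputs, the loop prints expected returns of 4.0%, 7.0%, and 10.0%. Doubling beta always adds the same market risk premium, regardless of what investors actually feel or do.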

Example of market irrationality in the context of COVID-19: Consider share price developments in Germany during the COVID-19 crisis. On May 11, 2020, the morning briefing of the business newspaper Handelsblatt ran the headline “Economy paradox: The worse the economic data and the profit expectations of the companies, the stronger the share prices currently rise” (Jakobs 2020).

One reason why such one-dimensional rational explanations remain common despite their well-known limitations may be that the relationships they describe can be derived logically and are therefore easy to understand. This creates confidence and orientation. Irrational behavior, which cannot be deduced in this way, would be difficult to convey in explanations and thus hardly acceptable.

In addition, a purely rational approach offers a convenient “escape pattern”: instead of critically weighing the most diverse qualitative and quantitative aspects and making this process of gaining knowledge transparent, traditional statistics are used, sometimes quite unreflectively, to justify ethically difficult decisions. This can have fatal consequences in extreme situations, where good decisions matter more than ever, because the underlying assumptions are wrong. Bent Flyvbjerg sums this up with his concept of “regression to the tail”: in extreme situations such as natural disasters, terrorist attacks, and pandemics, the risk of disaster is very high. “Prudent decision makers will not count on luck—or on conventional Gaussian risk management, which is worse than counting on luck, because it gives a false sense of security—when faced with risks that follow the law of regression to the tail” (Flyvbjerg 2020).
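The difference between Gaussian thinking and fat-tailed risk can be illustrated with a small simulation that tracks the largest value observed as more and more observations arrive. This is a sketch under assumed distributions and is not taken from Flyvbjerg (2020): a standard normal distribution stands in for the “Gaussian” view, and a Pareto distribution with tail index 1.5 (minimum value 1) stands in for a fat-tailed risk. The function name is hypothetical.

```python
import random


def maxima_at_checkpoints(draw, checkpoints, seed=0):
    """Largest value observed after each (ascending) checkpoint number of random draws."""
    rng = random.Random(seed)
    largest, n, results = float("-inf"), 0, []
    for target in checkpoints:
        while n < target:
            largest = max(largest, draw(rng))
            n += 1
        results.append(largest)
    return results


CHECKPOINTS = (100, 1_000, 10_000, 100_000)

# Thin-tailed stand-in: standard normal distribution.
gaussian = maxima_at_checkpoints(lambda rng: rng.gauss(0.0, 1.0), CHECKPOINTS)
# Fat-tailed stand-in (assumption): Pareto distribution, minimum 1, tail index 1.5.
pareto = maxima_at_checkpoints(lambda rng: rng.paretovariate(1.5), CHECKPOINTS)

for n, g, p in zip(CHECKPOINTS, gaussian, pareto):
    print(f"n = {n:>7,}: Gaussian max {g:5.2f}   Pareto max {p:10.1f}")
```

Typically, the Gaussian maximum barely doubles across a thousandfold increase in observations, whereas the Pareto maximum climbs by orders of magnitude. The exact figures depend on the seed. This is the intuition behind “the more observations, the more extreme the extremes,” and it shows why a Gaussian model can convey a false sense of security.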

Example of COVID-19 statistics: The unreflective handling of statistical findings can again be demonstrated very well with the example of COVID-19. In the media in particular, three key figures are presented and serve as arguments and justifications for political decisions, without their explanatory power, inaccuracies, and limitations being critically examined:

- Infection rate (the number of new infections per day)
- Mortality rate (the number of deaths related to COVID-19)
- Reproduction rate R: the (statistical) number of new infections during the current so-called generation period (the mean time from a person’s infection to the infection of the subsequent cases he or she infects; 4 days in Germany as of April 2020) relative to the number of new infections in the previous generation period. A reproduction number of R = 2 corresponds to a doubling of new infections within one generation period, R = 1 to a constant number of new infections (i.e., linear growth of the cumulative total), and R = 0.5 to a halving of new infections (Gigerenzer et al. 2020).
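The ratio definition of R can be sketched in a few lines. This is a simplified illustration that assumes a fixed generation period of 4 days and uses made-up daily counts. The function name is hypothetical, and the corrections for reporting delays used in official nowcasting estimates (an der Heiden and Hamouda 2020) are deliberately left out.

```python
def reproduction_number(daily_new_infections, generation_time=4):
    """R: new infections in the latest generation period divided by those in the period before it."""
    if len(daily_new_infections) < 2 * generation_time:
        raise ValueError("need at least two full generation periods of daily counts")
    current = sum(daily_new_infections[-generation_time:])
    previous = sum(daily_new_infections[-2 * generation_time:-generation_time])
    if previous == 0:
        raise ValueError("no new infections in the previous generation period")
    return current / previous


if __name__ == "__main__":
    # Hypothetical daily counts for the last 8 days (two 4-day generation periods).
    print(reproduction_number([100] * 4 + [200] * 4))  # 2.0: doubling
    print(reproduction_number([100] * 8))              # 1.0: constant new infections
    print(reproduction_number([200] * 4 + [100] * 4))  # 0.5: halving
```

Because R is a ratio of two noisy sums, a small change in reporting (for example, a weekend dip in one period) can move it noticeably. This is one reason the figure should not be presented without its limitations.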

Table 2.1 summarizes the main problem areas in these (statistical) data.

In general, the entire process chain should be examined rationally. The questions shown in Fig. 2.1 can help in this regard. Problems very often arise in sampling, evaluation, and proper communication.

With regard to the ethical context of artificial intelligence, the following can be said about rational behavior, which holds both opportunities and risks:

1. Due to its complexity (social morality vs. individual ethos, socialization and cultural dependencies, generational conflicts, variability, etc.), ethics is already a challenge that cannot be described unambiguously in terms of rationality. This is reflected, among other things, in the different philosophical approaches to ethics (see Chap. 1).
2. Human decision-makers facing ethically relevant questions combine several roles at the same time: A manager is not only a businessperson obliged to protect the company’s interests, but also an individual who brings his or her entire personality into the decision-making process. This personality is shaped by emotionally charged experience, skills, knowledge, and needs, as well as by social and family roles.
3. Non-human AI-supported automation or bots, on the other hand, are trained one-dimensionally for their functional role and the tasks involved. They lack the complexity of human life. Even if it should ever become possible to come close to human empathy (Bak 2016), authentic empathy is not in sight for the foreseeable future, due to the lack of (human) desiderata¹ (Hüther 2018).

From this it can be deduced that a mature AI in a society with clear ethical preferences (e.g., deontological approaches or utilitarianism) could make ethically more consistent decisions than humans, precisely because of its one-dimensionality (see the trolley problem in Sect. 1.4.2). However, this opportunity is offset by the significant risk that, owing to this very limitation, the AI makes decisions that seem emotionless and thus “inhuman” in the true sense of the word.

4 Irrationality and Cognitive Biases

Irrational decisions are often caused by cognitive distortions. The wide field of behavioral economics has by now identified a great variety of effects that promote irrational decisions. Some aspects important for the ethical dimension are listed below, without any claim to completeness. A number of biases, which manifest themselves in various forms, are particularly problematic. Examples include:

- Overconfidence bias: With this phenomenon, also known as hubris, the more difficult the task, the greater the overconfidence. It is particularly noticeable in management circles when decisions are made without a self-critical assessment of the probability of success of a risky venture.

- Overestimating one’s own importance (egocentric bias): This manifests itself, for example, in unrealistically overestimating one’s own contribution to a joint success and marginalizing the contributions of others.

- Tendency to adopt and maintain beliefs even when they are known to be false (confirmation bias): A closely related phenomenon is the so-called anchoring effect. One example occurs when a project is first assessed and a quantification that is not yet reliable at that point, such as “we can save an estimated X million euros with this,” is repeatedly used as a reference later in the project. This continues even though much better findings have since been obtained, perhaps pointing to a much smaller figure than the one first mentioned.

- Base rate fallacy: The problem lies in correctly interpreting probabilities that, expressed as percentages, refer to a base rate. This misinterpretation is widespread, as the experiment at Harvard Medical School shows (Hoffrage et al. 2000):

  Suppose a test to detect an infection with a prevalence of 1/1000 (i.e., one person actually infected per 1000 persons) has a false-positive rate of 5%. This means that 5% of the uninfected persons tested receive a positive result due to measuring/testing errors of the first type, although in reality they are not infected. What is the probability that a person who tests positive is actually infected (assuming that nothing is known about the symptoms or signs of illness of the tested person)? In the classic Harvard case, 27 of 60 respondents (experts, employees, and students), almost half, answered 95%. The correct answer, however, is of a completely different order of magnitude! Had Bayes’ theorem been applied correctly, one would not have succumbed to the “false-positive” paradox.²

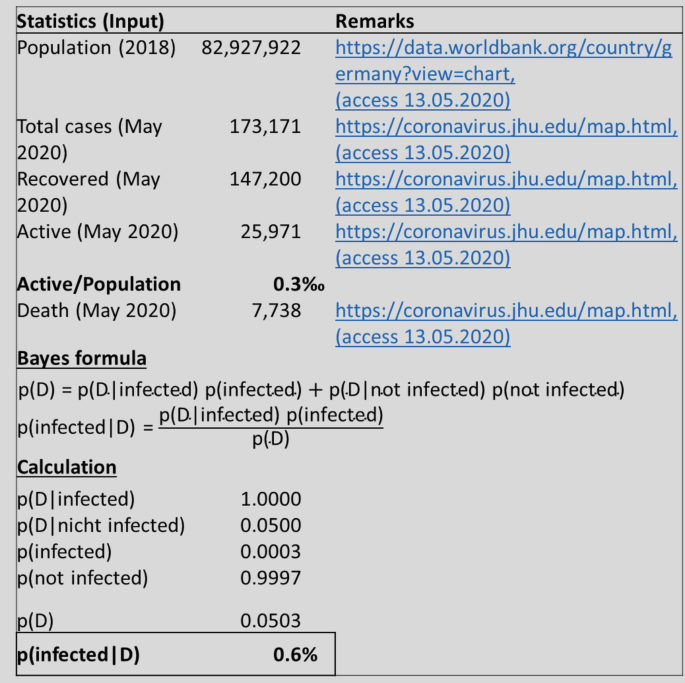

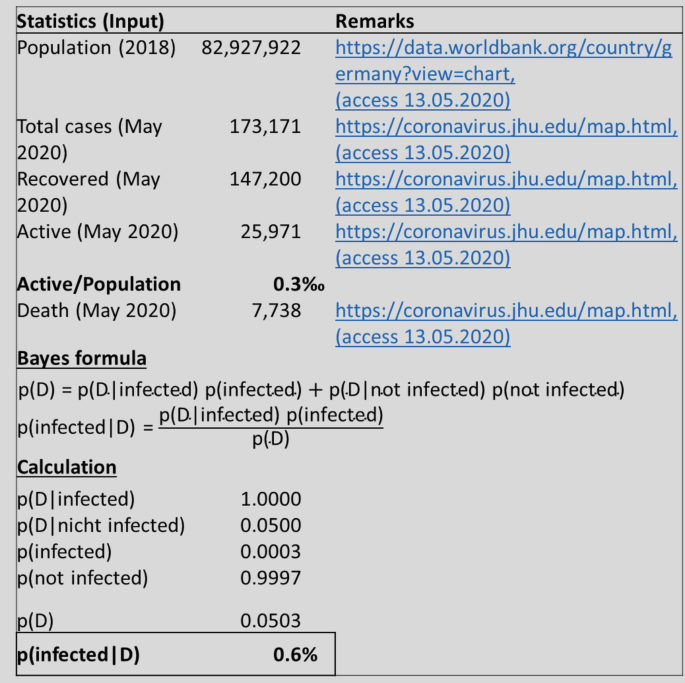

Example of Base Rate Fallacy in COVID-19 Infection Testing in Germany

Suppose a test for COVID-19 infection with a prevalence of 1/1000 (i.e., one person actually infected per 1000 people) has a false-positive rate of 5 percent. This is a Type I error due to measuring/testing errors, whereby 5% of the uninfected persons tested receive a positive result even though there is no actual infection. What is the probability that a person who tests positive is actually infected, assuming that nothing is known about the person’s symptoms or signs of illness (e.g., in a scenario of comprehensive nationwide COVID-19 testing)? The following data and the application of Bayes’ formula yield a probability of 0.6%!

- Distortions due to emotional influences (e.g., looking through rose-colored glasses): This problem, which is related to the halo effect, arises because it is easy to lose sight of the overall context and the critical view (“wishful thinking”) when one characteristic outshines all other circumstances. This can be observed very well in the debate on climate protection and environmentally friendly technologies. For example, one positive aspect of introducing electric vehicles is certainly the reduction of climate-damaging exhaust gases in city centers. However, the necessary electrical energy is not necessarily generated in an environmentally friendly way. In addition, the raw materials for the vehicle’s energy storage are extracted under ethically questionable conditions, and the batteries have considerable power losses. Taking these factors into account, the advantage of higher engine efficiency is quickly put into perspective. A comprehensive assessment of efficiency and life cycle (life cycle assessment, “LCA”) would be appropriate for a realistic evaluation.
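The base rate examples in the list above can be checked with Bayes’ theorem: P(infected | positive) = P(positive | infected) · P(infected) / P(positive). The minimal sketch below assumes a test that detects every true infection (sensitivity 100%), as the classic Harvard problem does. The function name is illustrative. In natural frequencies, the Harvard case works out as follows: of 1,000 people, 1 is infected and tests positive, while about 50 of the 999 uninfected also test positive, so only about 1 in 51 positive results is a true infection.

```python
def positive_predictive_value(prevalence, false_positive_rate, sensitivity=1.0):
    """P(infected | positive test) via Bayes' theorem."""
    true_positives = prevalence * sensitivity                   # P(positive and infected)
    false_positives = (1.0 - prevalence) * false_positive_rate  # P(positive and not infected)
    return true_positives / (true_positives + false_positives)


if __name__ == "__main__":
    # Classic Harvard case: prevalence 1/1000, false-positive rate 5%.
    print(f"{positive_predictive_value(1 / 1000, 0.05):.1%}")  # 2.0%, not 95%
    # Quiz 2.03: same prevalence, false-positive rate 1%.
    print(f"{positive_predictive_value(1 / 1000, 0.01):.1%}")  # 9.1%
```

Lowering the assumed sensitivity reduces the result further, because fewer of the truly infected persons test positive while the false positives remain unchanged.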

Another problem related to cognitive bias concerns the sequence of decisions. These decisions are often made in isolation, one after the other (sequentially), which can cause major problems. Instead of anticipating dependencies and making decisions only at the latest possible point in time,³ individual decisions are made at an early stage and cannot be revised without loss. This loss can be material, in the form of additional costs, or emotional, in the form of having to admit a wrong decision. The latter in particular is often harder to cope with.

Finally, another cognitive problem, groupthink, needs to be addressed: In an effort to reach consensus, groups may make decisions that ignore individual critical voices. In addition, groups tend to accept higher risks than any of their individual members would. This can be explained by collective responsibility, which does not hold the individual accountable if the decision turns out to be wrong. Further explanations can be found in the egocentric bias (see above) or in prospect theory (Kahneman and Tversky 1979), which describes risk-seeking behavior in the domain of losses but risk-averse behavior in the domain of gains.
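The asymmetry described by prospect theory can be made concrete with its value function, which is concave for gains and convex and steeper for losses. The sketch below assumes the parameter estimates α = β = 0.88 and λ = 2.25 that Tversky and Kahneman published later (1992), not values from the 1979 paper. It also ignores probability weighting for simplicity. The helper names are hypothetical.

```python
ALPHA = 0.88   # curvature for gains (assumed parameter estimate)
BETA = 0.88    # curvature for losses (assumed parameter estimate)
LAMBDA = 2.25  # loss aversion: losses loom about 2.25 times larger than equal gains


def value(x):
    """Prospect-theory value of an outcome x relative to the reference point (0)."""
    if x >= 0:
        return x ** ALPHA
    return -LAMBDA * (-x) ** BETA


def prefers_gamble(sure_outcome, risky_outcome, p=0.5):
    """True if a p-chance of risky_outcome (else 0) is valued above sure_outcome."""
    return p * value(risky_outcome) > value(sure_outcome)


if __name__ == "__main__":
    print(prefers_gamble(50, 100))    # False: a sure gain of 50 beats a 50% chance of 100
    print(prefers_gamble(-50, -100))  # True: a 50% chance of losing 100 beats a sure loss of 50
```

Both choices have the same expected monetary value, so risk aversion for gains and risk seeking for losses emerge purely from the shape of the value curve.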

The unfavorable combination of groupthink, excessive optimism, and overconfidence bias led, for example, to the collapse of major US financial institutions such as Fannie Mae, Freddie Mac, and AIG in the 2008 financial crisis (Shefrin 2016).

5 Moral Distance to Irrational Behavior

A significant effect in behavioral economics, of particular relevance in the ethical context, is the so-called fudge factor (Ariely 2012): Small, everyday inaccuracies are often committed without a guilty conscience or accompanied by justifications such as “others do it too” or “nobody notices.” The decision to cheat is not rational, yet very common, with fatal consequences (see below). Behavioral economist and psychologist Dan Ariely demonstrates this clearly in his experiments (Ariely 2012).

Example of identifying the “fudge factor”: Ariely influenced groups of subjects who were to take a simple written arithmetic test by giving the participants designer sunglasses in advance. One group was told that the sunglasses were authentic, the second group that they were fake, and the third (control) group was told nothing at all. Each group had the opportunity to cheat during the test. In the control group, 42% cheated. In the “authentic” group, the cheating rate dropped to 30%. One interpretation is that the participants’ self-image was strengthened by the advantageous deal. In the group with the allegedly fake glasses, however, the cheating rate was 74%! This suggests that deliberate dishonesty, no matter how small, increases the probability of further dishonest behavior.

The probability of cheating also increases with psychological distance from the act. This is an important aspect especially in the context of digitization (and artificial intelligence). “The more cashless our society becomes, the more our moral compass slips” (Dan Ariely).

With the further spread of AI, psychological distance will inevitably increase massively. Figure 2.2 shows the basic relationship between honesty and care.

6 Morals and Economics

Morality and economics are in conflict with each other. What a society advocates (morality) is congruent only in exceptional cases with the interests of economically active decision-makers, who ultimately perform a fiduciary task for the company’s equity investors.

In this respect, the approach of the economist Adam Smith (1723–1790) offers only one facet, which does not comprehensively reflect reality. In essence, Smith’s approach says that through the “invisible hand of the market” (i.e., through self-regulation by supply and demand), each individual’s maximization of his or her own property ultimately also increases the prosperity of the general public. However, reality is much more complex: For example, entrepreneurial economic success (profit in particular) does not automatically lead to higher wages and salaries for employees or to the creation of more jobs. Far from Smith’s ideal, market power is instead exploited, up to and including monopolistic structures. One example is the tax avoidance of the large GAFA⁴ Internet groups. For years, these groups have systematically (and legally) shifted their profits through licenses in such a way that they did not pay any taxes in the important sales market of Europe (where in fact no development took place). The European national economies have not shared in these profits. On the contrary, the companies’ market power means (even if not originally intended) that they exert considerable influence on the respective national politics (Beutelsbacher et al. 2016), as the examples of Amazon and Luxembourg or Apple and Ireland have shown: in 2017, the governments concerned opposed the European Commission’s political initiative to close the tax loopholes through a digital tax. The trend over the last decade shows that these four mega-corporations together have already reached a market capitalization equivalent to one third of the GDP of the entire EU 27 (excluding the UK, which left the EU on January 31, 2020).⁵ Apple’s market capitalization exceeded the magic $2 trillion mark on August 19, 2020, almost reaching the GDP of Italy, the third-largest EU economy.

Such monopolistic structures effectively override the laws of a free and fair market that is governed by socially legitimized political power. Even in stakeholder economies, which represent a more socially acceptable variant of the liberal market economy, there are considerable distortions due to market asymmetries, for example in the labor market.

Interestingly, some change is now emerging from the entrepreneurial side: an interest, certainly a profit-driven one, in taking into account the trend toward social and ecological sustainability. Inclusive capitalism is explained below as an example.

6.1 Inclusive Capitalism: Example of an Initiative to Consider the Sustainability Aspect—Not Only for Ethical Reasons

Today’s standard financial reporting, certified by auditors, no longer does justice to the increasing complexity of sustainable corporate management and reporting to stakeholders. Instruments from the last century are still being used, and they do not capture the essential components that determine the value of a company. This is due in particular to the backward-looking focus on financial indicators (ex post) and the resulting neglect of long-term, leading indicators that characterize the intrinsic value of a company and its impact on the corporate environment. Some studies indicate that in 2018 only 20% of a company’s value was recorded on the balance sheet, compared with over 80% in the mid-1970s (Brand Finance 2018).

Indicators of varying suitability and modern management methods are available in abundance. Nevertheless, the comprehensive implementation of such methods across the broad mass of organizations must mostly be considered a failure. The reasons lie in the one-sidedness of the content, the complexity, and the actual motivation (mostly focused on their own profile or business advantage) of those responsible for implementation. Well-known examples are the “shareholder value” approach, e.g., with “Economic Value Added” (EVA), or the “Balanced Scorecard.”

The compliance monitoring structure is no longer up to date. Neither process-bound internal reviews nor supposedly independent external reviews by auditors (who are, however, paid by the organization under review) can withstand the intense competitive pressure. Due to

1. a lack of standardization in comprehensive reporting (which in turn results from mostly manual or at most semi-automated processes), and
2. latent and/or concrete dependencies (e.g., the threat of, in some cases, substantial economic and financial losses),

there is neither reliable transparency nor reliability of the audit certificates themselves. A good example is the Wirecard scandal of 2020. It involved the now insolvent tech company that barely 2 years earlier had been included in the renowned DAX 30 (Germany’s 30 “most valuable” companies) and whose large-scale balance sheet falsifications were detected far too late. The similarity to the US Enron scandal about 20 years earlier is astonishing and sobering: despite tighter regulations and progress in digitization, it is frightening to have to state that obviously nothing has been learned. In both cases, auditors played a special role, since stakeholders (including shareholders in the narrower sense) expect reporting to be reliable. This expectation was not met in the abovementioned cases, which led to considerable damage to the auditors’ reputations and, at least in the Enron case, to the dissolution of the auditing firm.⁶

Various initiatives aimed at changing or expanding the reporting system have established themselves only to a limited extent. The reasons lie in two areas:

1. Complexity: Reporting is too complex and therefore cannot be operationalized by many. An example is the GRI (Global Reporting Initiative), an extremely comprehensive set of rules and regulations on sustainable management that, at best, large companies with substantial resources can implement to some extent.⁷
2. Bias toward auditing firms: The drivers of the initiative are evidently biased toward accounting auditors (presumably focused primarily on generating new business) and are not consensus-oriented. As a result, the companies that generate primary value added (e.g., manufacturing companies) were not sufficiently involved, nor were the competitors of these drivers visible in the initiative, which could have ensured broader acceptance. An example is the initiative “Coalition for Inclusive Capitalism” (EY 2018): of its 31 members, only nine(!) were companies with primary value creation worldwide, while all others came from asset management (asset owners⁸). In addition, the project was dominated by one auditing and consulting firm.⁹

Despite this sobering summary, it should be pointed out that simply carrying on with a one-sided, purely short-term economic focus seems as unpromising as some current initiatives characterized by excessive complexity (Vieweg 2020b). Finding a practicable, purposeful, and pragmatic middle way that includes those actually affected is a concrete challenge. Here it quickly becomes apparent that artificial intelligence can help by processing information for evidence-based decisions and by avoiding cognitive (human) bias. On the other hand, the skills, beliefs, and values of individuals are crucial for finding the “right” decision in a changed context (see Fig. 2.3). In this respect, a complementary approach seems promising. This can be seen in the case of the extended reporting outlined above. Upstream and downstream key performance indicators that are uniformly defined, recorded in a standardized way, and automatically processed in compliance with good business practice (“Machine”) can provide stakeholders with a pragmatic, sustainable, and decision-useful supply of information. This supply also takes into account what is meaningfully feasible in each individual situation (“Human”).

6.2 Stewardship as an Approach to Sustainable and Ethically-Morally “Good” Corporate Governance

The tension between the motivation of corporate decision-makers and their monitoring is well described by theories ranging from principal–agent theory to stewardship theory (Davis et al. 1997), which illustrate the agents’ framework for action.

Each of these theories starts from a different conception of human nature and an assumed motivation. From these, it derives a corresponding focus of action for the principal (the owner and his or her representative, e.g., the supervisory board) and for the agent acting on the principal’s behalf (the manager, e.g., the board of directors). Stewardship theory complements principal–agent theory in a context-dependent manner and thus offers further explanations for the respective behavior of the actors.

Principal–agent theory assumes a fundamental conflict of goals between the two actors, resulting from an image of humans as selfishly opportunistic. The agent’s motivation is assumed to be extrinsic; i.e., the manager is not primarily interested in the well-being of the company. Accordingly, the principal distrusts the agent, and the principal’s primary framework for action lies in monitoring the agent.

In contrast, stewardship theory sees a fundamental congruence of goals between the two actors. This congruence is based on the idea of a manager who is primarily concerned with the well-being of society and the company, i.e., who is intrinsically motivated and puts personal interests second. Accordingly, the principal trusts the agent, and the principal’s primary scope of action is to advise the agent.

For the company, there are considerable differences in efficiency, provided that the underlying assumptions correspond to reality in each case. With the principal–agent approach, costs (damage) can be minimized (see Fig. 2.4: Agent/Agent), whereas with the stewardship approach, corporate performance is maximized (see Fig. 2.4: Steward/Steward).

Extended stewardship theory (own extended presentation, based on Davis et al. (1997))

If the ethical and moral dimension is now added to the stewardship approach, it becomes clear that the social contribution is also maximized beyond the corporate framework.

To remain within the picture of the extended stewardship theory, the challenge of sustainable and ethically-morally “good” corporate governance is therefore to minimize, on the basis of evidence, the risk of constellations that do not lie on the main diagonal of Fig. 2.4 (“red fields”).
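The constellations of the stewardship matrix can be expressed as a simple two-by-two lookup. The sketch below encodes only what the text states: the agent/agent cell minimizes costs (damage), the steward/steward cell maximizes performance, and both off-diagonal cells are the critical “red fields.” The enum and function names are illustrative and not part of Davis et al. (1997).

```python
from enum import Enum
from itertools import product


class Role(Enum):
    AGENT = "agent"      # extrinsically motivated, selfish opportunism assumed
    STEWARD = "steward"  # intrinsically motivated, goals congruent with the company


def constellation(principal_assumes: Role, manager_acts_as: Role) -> str:
    """Classify a principal/manager pairing in the 2x2 stewardship matrix."""
    if principal_assumes == manager_acts_as == Role.AGENT:
        return "agent/agent: monitoring minimizes costs (damage)"
    if principal_assumes == manager_acts_as == Role.STEWARD:
        return "steward/steward: advising maximizes corporate performance"
    return "red field: the principal's trust or distrust is misplaced"


if __name__ == "__main__":
    for principal, manager in product(Role, Role):
        print(f"principal assumes {principal.value:<7} | manager acts as {manager.value:<7} | "
              f"{constellation(principal, manager)}")
```

The lookup makes the governance task explicit. Two of the four cells are acceptable outcomes, and the other two arise precisely when the principal’s assumption about the manager is wrong, which is why the text calls for minimizing that risk on the basis of evidence.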

7 Finish Line Quiz

| 2.01 | The model of the “homo oeconomicus” … | |
|---|---|---|
| 1 | … is a proven and comprehensive approach to reflect reality. | |
| 2 | … is a concept introduced in times when the psychological dimension of business decisions was not reflected at all. | |
| 3 | … fully reflects the behavioral aspects of human decision-making. | |
| 4 | … is not used anymore. | |

| 2.02 | Comparing humans and AI with regard to the rational decision-making process in business, … | |
|---|---|---|
| 1 | … humans combine different roles within their personality at the same time, which influences the decision. | |
| 2 | … humans combine different roles within their personality, though they focus their decision on the business side. | |
| 3 | … AI mirrors various aspects of human perspectives in decision-making. | |
| 4 | … AI does not make decisions; humans do. | |

| 2.03 | Given a prevalence of 1/1000 and a virus test with a false-positive rate of 1.0%, what is the probability that a person who tests positive is actually infected (assuming that nothing is known about the person’s symptoms)? | |
|---|---|---|
| 1 | 99.0% | |
| 2 | 95.0% | |
| 3 | 9.1% | |
| 4 | 1.0% | |

| 2.04 | What is to be expected if people act in an environment of fakes? | |
|---|---|---|
| 1 | They stick to their principles and ethos. | |
| 2 | Large fraud cases with significant damage are to be expected, as all people act fraudulently. | |
| 3 | Given opportunities for “shortcuts,” people tend to cheat “a little.” | |
| 4 | People always cheat with the same intensity, regardless of the environment. | |

| 2.05 | How are personal commitment and psychological distance related to each other? | |
|---|---|---|
| 1 | Importance of ethos is highest if functional supervision is given. | |
| 2 | Psychological distance is higher in functional responsibility than in personal accountability. | |
| 3 | Psychological distance is lower in functional responsibility than in functional supervision. | |
| 4 | Importance of ethos is low if personally informed. | |

| 2.06 | Adam Smith’s theory of the “invisible hand” is … | |
|---|---|---|
| 1 | … flawed, because rising executive compensation is not directly mirrored in stagnating staff pay. | |
| 2 | … flawed, because rising executive compensation is directly mirrored in stagnating staff pay. | |
| 3 | … flawed, because rising executive compensation is not directly mirrored in rising staff pay. | |
| 4 | … accurate, because rising executive compensation is directly mirrored in rising staff pay. | |

| 2.07 | Extending the valuation of a business beyond traditional (financial) reporting such as the balance sheet (aka statement of financial position) is … | |
|---|---|---|
| 1 | … adequate, as only a minority of the company’s value is recorded on the balance sheet. | |
| 2 | … likely to produce additional complexity, motivated by auditing firms, financial advisors, rating agencies, and financial information brokers seeking to extend their business opportunities. A comprehensive technology assessment is not envisaged. | |
| 3 | … likely to reduce additional complexity, although a comprehensive technology assessment is envisaged. | |
| 4 | … inadequate, as a minority of the company’s value is not recorded on the balance sheet. | |

| 2.08 | Humans and machines are complementary in that … | |
|---|---|---|
| 1 | … humans are better at preventing context mismatches. | |
| 2 | … machines have a higher degree of misperception. | |
| 3 | … humans are better at avoiding misperception. | |
| 4 | … machines are better at preventing context mismatches. | |

| 2.09 | Stewardship theory sees the following critical constellations between the actors: | |
|---|---|---|
| 1 | When the principal correctly expects the manager to apply stewardship. | |
| 2 | When the principal falsely expects the manager to act as agent. | |
| 3 | When the principal acts economically, as the manager does. | |
| 4 | When the principal acts short term economically and the manager pursues high ethical standards. | |

Notes

1. See Sect. 1.4.2.

2. The correct answer in the classic Harvard case is 2%.

3. This practice is used successfully by agile methods such as the Scaled Agile Framework (SAFe®, scaledagileframework.com) (SAFe 2020), which assumes variability and preserves options (Vieweg 2020a).

4. GAFA refers to Google, Apple, Facebook, and Amazon; the variant GAFAM also includes Microsoft. In August 2020, these five US tech giants had a combined market capitalization of more than $7.4 trillion, which is c. 45% of the entire 2019 GDP of the EU-27.

5. Own analysis based on Eurostat (ec.europa.eu/eurostat), “European Union: Gross domestic product (GDP) in the member states in current prices in 2019” (EU 2019), and Janson (2020).

6. Arthur Andersen was at that time one of the world’s “Big Five” accounting firms and was dissolved in the wake of the Enron scandal; its former consulting arm now operates as “Accenture.” EY, which was involved in the Wirecard case, currently has to fear corresponding damage to its reputation; see FT (2020).

7. The main manual alone comprises more than 400 pages!

8. This term is used misleadingly in the “Embarkment Project for Inclusive Capitalism,” since insurance companies and pension funds also manage assets.

9. Of the 19 members, 16 come from the consultancy and auditing firm EY (2018).
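Note 2 states the 2% result of the classic Harvard base-rate case without the calculation behind it. The following minimal Python sketch reproduces that calculation with Bayes' theorem. The parameters are an assumption taken from the commonly cited version of the case (a disease prevalence of 1 in 1,000, a false-positive rate of 5%, and a test that detects every true case); they are not given in this chapter, and the function name is hypothetical.

```python
def positive_predictive_value(prevalence: float, sensitivity: float,
                              false_positive_rate: float) -> float:
    """Return P(disease | positive test) via Bayes' theorem."""
    # Share of the population that is ill AND tests positive.
    true_positives = prevalence * sensitivity
    # Share of the population that is healthy but still tests positive.
    false_positives = (1.0 - prevalence) * false_positive_rate
    # Fraction of all positive results that are true positives.
    return true_positives / (true_positives + false_positives)


# Assumed parameters of the classic case (not stated in this chapter):
# prevalence 1/1000, false-positive rate 5%, perfect sensitivity.
ppv = positive_predictive_value(prevalence=1 / 1000, sensitivity=1.0,
                                false_positive_rate=0.05)
print(f"{ppv:.1%}")  # prints 2.0%
```

Expressed as natural frequencies, the presentation recommended by Hoffrage et al. (2000): of 1,000 people, 1 is ill and tests positive, while about 50 of the 999 healthy people also test positive, so only about 1 in 51 positive results actually indicates the disease. Ignoring the low prevalence (the base rate) is what leads intuition toward the false-positive complement of 95%.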

References

an der Heiden, M., & Hamouda, O. (2020). Schätzung der aktuellen Entwicklung der SARS-CoV-2-Epidemie in Deutschland – Nowcasting. Epidemiologisches Bulletin, 17, 10–16. https://doi.org/10.25646/6692.4.

Ariely, D. (2012). The honest truth about dishonesty: How we lie to everyone – especially ourselves. New York: HarperCollins.

Bak, P. (2016). Maschinelle Empathie. In Zu Gast in Deiner Wirklichkeit. Berlin, Heidelberg: Springer Spektrum.

Beutelsbacher, S., Sommerfeldt, N., & Zschäpitz, H. (2016). Die gefährliche Dominanz der großen Vier. Retrieved May 11, 2020, from https://www.welt.de/finanzen/article150809163/Die-gefaehrliche-Dominanz-der-grossen-Vier.html

Brand Finance. (2018). Global Intangible Finance Tracker (GIFT™) 2018: An annual review of the world’s intangible value. Retrieved from http://brandfinance.com/images/upload/gift.pdf

Brealey, R., Myers, S. C., & Allen, F. (2016). Principles of corporate finance (12th ed.). New York: McGraw-Hill.

Davis, J. H., Schoorman, F. D., & Donaldson, L. (1997). Toward a stewardship theory of management. The Academy of Management Review, 22(1), 20–47.

EU. (2019). European Union: Gross domestic product (GDP) in the member states in current prices in 2019. Retrieved May 13, 2020, from ec.europa.eu/eurostat

EY. (2018). EPIC – Embarkment Project for Inclusive Capitalism. Retrieved May 13, 2020, from https://www.epic-value.com/

Flyvbjerg, B. (2020). The law of regression to the tail: How to mitigate pandemics, climate change, and other deep disasters. Retrieved November 1, 2020, from https://www.sciencedirect.com/science/article/pii/S1462901120308637?via%3Dihub

FT. (2020). EY fights fires on three audit cases that threaten its global reputation, FT June 8, 2020. Retrieved June 20, 2020, from https://www.ft.com/content/576e4c7f-93e5-4e8a-b5ba-5e1161533c5a

Gigerenzer, G., Krämer, W., Schüller, K., & Bauer, T. K. (2020). Corona-Pandemie: Die Reproduktionszahl und ihre Tücken. Retrieved May 11, 2020, from https://www.rwi-essen.de/unstatistik/102/

Hoffrage, U., et al. (2000). Communicating statistical information. Science, 290(5500), 2261–2262. https://doi.org/10.1126/science.290.5500.2261.

Hüther, G. (2018). Würde – Was uns stark macht. New York: Random House.

Jakobs, H.-J. (2020). Schlachthöfe, der neue Hotspot. Retrieved May 11, 2020, from https://www.handelsblatt.com/meinung/morningbriefing/morning-briefing-schlachthoefe-der-neue-hotspot/25817868.html

Janson, M. (2020). Jahrzehnt des Wachstums für US-Techriesen [Digital image]. Retrieved May 11, 2020, from https://de.statista.com/infografik/20417/marktkapitalisierung-von-gafam/

Kahneman, D., & Tversky, A. (1979). Prospect theory: An analysis of decision under risk. Econometrica, 47(2), 263–291. https://doi.org/10.2307/1914185.

SAFe. (2020). Scaled Agile Framework. Retrieved May 11, 2020, from www.scaledagileframework.com

Shefrin, H. (2016). Behavioral risk management: Managing the psychology that drives decisions and influences operational risk. New York: Palgrave.

Vieweg, S. (2020a). The art of unleashing full SAFe potential – results from an independent empirical research amongst SAFe experts. Retrieved October 02, 2020, from https://youtu.be/Ck7Nh54Il38

Vieweg, S. (2020b). Response for the consultation paper on the development of the CFA Institute ESG Disclosure Standards for Investment Products. Retrieved October 02, 2020, from https://www.cfainstitute.org/-/media/documents/code/esg-standards/esg-consultation-paper-comment-stefan-vieweg.ashx?la=en&hash=AB522D11420C85A288361897A6170F49B4ED9BDE


Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Vieweg, S.H. (2021). Human Rationality and Morals. In: Vieweg, S.H. (eds) AI for the Good. Management for Professionals. Springer, Cham. https://doi.org/10.1007/978-3-030-66913-3_2


DOI: https://doi.org/10.1007/978-3-030-66913-3_2


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-66912-6

Online ISBN: 978-3-030-66913-3

eBook Packages: Business and Management (R0)