Abstract

An iterative Gaussian filter method is proposed to suppress the phase error in the wrapped phase recovered from low-quality fringe images. The main idea is to regenerate the fringe images from the wrapped phase and apply Gaussian filtering iteratively. The proposed method filters noise without interference from surface reflectivity, improves the measurement accuracy, and recovers the wrapped phase information from low-quality fringe images. The method is verified experimentally: for a binocular system, the root mean square (RMS) deviation of the measurement results reaches 0.0094 mm.

1 Introduction

Optical three-dimensional (3D) measurement methods, such as the structured light method [1] and phase measuring deflectometry [2], play an increasingly important role in modern manufacturing. In these methods, the phase shifting technique [3] is used to determine the phase information, and the accuracy of the phase directly determines the measurement accuracy. The main factors that affect phase accuracy are the quality of the phase shift images (noise, non-linear intensity and surface reflectivity), the number of phase shift steps, and the intensity modulation parameter. Increasing the number of phase shift steps greatly reduces the measurement speed, and the adjustment range of the intensity modulation parameter is generally limited. Suppressing the phase error caused by low-quality fringe images is therefore an effective way to improve the accuracy of phase recovery.

Filtering methods are used to suppress the noise in captured fringe images. For instance, Gaussian filtering [4] is used to suppress the phase errors caused by Gaussian noise in the captured fringe images. In contrast to [4], a fuzzy quotient space-oriented partial differential equations filtering method is proposed in [5] to suppress Gaussian noise. Median filtering [6] is used to preprocess the captured images, with invalid data filtered out by masking. The wavelet denoising method and the Savitzky-Golay method are proposed in [7, 8] and [4], respectively. In [9], the captured fringe images are converted to the frequency domain for filtering. In this way the influence of noise is suppressed and the accuracy of phase recovery is improved. To correct the non-linear intensity, the gamma value of the light source is calibrated [10, 11]. In [12], the measurement error caused by gamma is suppressed by a gamma calibration method expressed with Fourier series and the binomial series theorem. A robust gamma calibration method based on a generic distorted fringe model is proposed in [13]. In [14], a gamma model is established to suppress the phase error by deriving the relative expression.

Multi-exposure and polarization techniques are applied to measure objects with varying reflectivity. For instance, a multi-exposure technique [15] is proposed to measure objects with high reflectivity, in which a reference image with middle exposure is selected and used for slight adjustment of the primary fused image. In [16, 17], fringe images with a high signal-to-noise ratio are fused from raw fringe images taken at different exposures by selecting the pixels with the highest modulated fringe brightness. In [18], high dynamic range fringe images are acquired by recursively controlling the intensity of the projection pattern at the pixel level, based on feedback from the reflected images captured by the camera. The absolute phase is then recovered from the high dynamic range fringe images obtained by the multi-exposure technique. A spatially distributed polarization state [19] is proposed to measure objects with high-contrast reflectivity: the degree of linear polarization (DOLP) is estimated, the target is selected by DOLP, and finally the selected target is reconstructed. Polarization coding can also be applied to target-enhanced depth sensing in ambient illumination [20]. However, the polarization technique is generally not suitable for measuring complex objects.

In general, the factors that affect the quality of the captured phase shift fringe images (noise, non-linear intensity and surface reflectance changes) act together rather than in isolation. When noise is suppressed by a filtering method, the captured images are distorted by the interference of surface reflectivity. The multi-exposure techniques [15,16,17,18] are limited in measurement speed because they require a large number of projected images. To improve the accuracy of the phase recovered from low-quality fringe images (affected by noise, non-linear intensity and surface reflectance changes), an iterative Gaussian filter method is proposed. The main idea is to regenerate the fringe images from the wrapped phase and apply Gaussian filtering iteratively. The proposed method filters noise without interference from reflectivity, improves the measurement accuracy, and recovers the wrapped phase information from low-quality fringe images.

2 Principle of Iterative Phase Correction Method

For optical 3D measurement methods, the standard phase shift fringe technique is widely used because of its good information fidelity, simple calculation and high accuracy of information restoration. A standard \( N \)-step phase shift algorithm [21] with a phase shift of \( \pi /2 \) is expressed as

\[ I_{n} (x,y) = A(x,y) + B(x,y)\cos \left[ {\phi (x,y) + \frac{2\pi (n - 1)}{N}} \right],\quad n = 1,2, \cdots ,N \tag{1} \]

where \( A(x,y) \) is the average intensity, \( B(x,y) \) is the intensity modulation, \( N \) is the number of phase steps, and \( \phi (x,y) \) is the wrapped phase to be solved for. \( \phi (x,y) \) can be calculated from Eq. (1) as

\[ \phi (x,y) = - \arctan \left\{ {\frac{{\sum\limits_{n = 1}^{N} {I_{n} (x,y)\sin \left( {\frac{2\pi (n - 1)}{N}} \right)} }}{{\sum\limits_{n = 1}^{N} {I_{n} (x,y)\cos \left( {\frac{2\pi (n - 1)}{N}} \right)} }}} \right\} \tag{2} \]
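As an illustration of the phase shift algorithm, the following minimal sketch generates \( N \) phase-shifted fringe images and recovers the wrapped phase from them. It assumes the fringe model \( I_{n} = A + B\cos [\phi + 2\pi (n - 1)/N] \) with constant \( A \) and \( B \); the function names and default values are hypothetical and not taken from the paper. With this sign convention the sine-weighted sum equals \( -(N/2)B\sin \phi \), which is why the numerator is negated before the four-quadrant arctangent.

```python
import numpy as np

def make_fringes(phi, n_steps, a=0.5, b=0.4):
    """Return N images I_n = a + b*cos(phi + 2*pi*(n-1)/N), stacked on axis 0."""
    shifts = 2.0 * np.pi * np.arange(n_steps) / n_steps
    shifts = shifts.reshape(-1, *([1] * phi.ndim))  # broadcast over image axes
    return a + b * np.cos(phi[None, ...] + shifts)

def wrapped_phase(images):
    """Least-squares wrapped phase in (-pi, pi] from N equally shifted images."""
    n_steps = images.shape[0]
    shifts = 2.0 * np.pi * np.arange(n_steps) / n_steps
    shifts = shifts.reshape(-1, *([1] * (images.ndim - 1)))
    s = np.sum(images * np.sin(shifts), axis=0)  # = -(N/2) * B * sin(phi)
    c = np.sum(images * np.cos(shifts), axis=0)  # =  (N/2) * B * cos(phi)
    return np.arctan2(-s, c)

# Usage: a noise-free phase map is recovered exactly (up to rounding).
phi_true = np.linspace(-3.0, 3.0, 50).reshape(5, 10)
phi_est = wrapped_phase(make_fringes(phi_true, n_steps=4))
```

Using `arctan2` instead of a plain arctangent of the ratio resolves the quadrant, so the result covers the full \( ( - \pi ,\pi ] \) range.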

The phase error in the wrapped phase \( \phi \) is propagated from the intensity \( I_{n} \). According to the principle of error propagation, the relationship between the intensity standard deviation \( \sigma_{{I_{n} }} \) and the phase standard deviation \( \sigma_{\phi } \) is described as

The partial derivative of \( \phi \) with respect to \( I_{n} \), \( \frac{\partial \phi }{{\partial I_{n} }} \), is derived from Eq. (2).

The relationship between the wrapped phase and the intensity is expressed as follows.

In addition, the error sources affect all \( N \) images equally; therefore:

In summary, the relationship between \( \sigma_{{I_{n} }} \) and \( \sigma_{\phi } \) is calculated and expressed as
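A hedged sketch of this propagation, assuming the fringe model \( I_{n} = A + B\cos [\phi + 2\pi (n - 1)/N] \), independent errors in the \( N \) images, and a common standard deviation \( \sigma_{I} \) for all of them. This is the standard textbook derivation and may differ in notation from the authors' own equations.

```latex
\begin{aligned}
\sigma_{\phi}^{2} &= \sum_{n=1}^{N} \left( \frac{\partial \phi}{\partial I_{n}} \right)^{2} \sigma_{I_{n}}^{2}, \\
\frac{\partial \phi}{\partial I_{n}} &= -\frac{2}{NB} \sin\!\left( \phi + \frac{2\pi (n-1)}{N} \right), \\
\sum_{n=1}^{N} \sin^{2}\!\left( \phi + \frac{2\pi (n-1)}{N} \right) &= \frac{N}{2} \qquad (N \ge 3), \\
\sigma_{I_{1}} = \sigma_{I_{2}} = \cdots = \sigma_{I_{N}} &= \sigma_{I}
\;\Longrightarrow\;
\sigma_{\phi} = \sqrt{\frac{2}{N}} \, \frac{\sigma_{I}}{B}.
\end{aligned}
```

Under these assumptions the phase error grows with the image error \( \sigma_{I} \) and shrinks with both the number of steps \( N \) and the intensity modulation \( B \), which matches the three levers discussed in the text.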

As shown above, the phase error in the wrapped phase can be reduced in three ways: reducing the errors in the phase shift images, increasing the number of phase shift steps, and increasing the intensity modulation \( B \). Increasing the number of phase shift steps reduces the measurement speed, and the intensity modulation is limited by the measurement system (such as the camera and projector) and by the properties of the measured object (such as reflectivity). Suppressing the phase error caused by image errors is therefore a feasible way to improve the phase accuracy. Image noise (\( n_{oise\_n} (x,y) \)), non-linear intensity of the light source (\( g_{amma} \)) and surface reflectivity changes (\( r(x,y) \)) are the main factors that affect the quality of captured fringe images.

The noise in fringe images is generally modeled as Gaussian, so it can be suppressed by Gaussian filtering, and the gamma value is calibrated to suppress the phase errors caused by non-linear intensity. However, because the reflectivity of the object surface is non-uniform, the pixels in the imaging area are not affected uniformly by noise and non-linear intensity (Fig. 1). Gaussian filtering performs a convolution over the filter window, so it mixes pixels with different reflectivity, and the filtering result is degraded when the reflectivity is uneven. Therefore, the conventional Gaussian filter method is limited in improving the wrapped phase accuracy for objects with uneven reflectivity (Fig. 1).
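The effect of uneven reflectivity on direct Gaussian filtering can be illustrated with a one-dimensional, noise-free sketch. The image model used here (captured image = reflectivity × ideal fringe), the fringe period, the kernel width and all function names are assumptions made for illustration only. With uniform reflectivity the blurred fringes keep their phase; with a reflectivity step the blur mixes bright and dark pixels and shifts the recovered phase near the step.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel truncated at about 3 sigma."""
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def phase_error_after_blur(reflectivity, period=28.0, n_steps=4, sigma=2.0):
    """Phase error (rad) caused by blurring reflectivity-scaled fringes."""
    x = np.arange(reflectivity.size)
    phi = 2.0 * np.pi * x / period            # true (unwrapped) phase ramp
    k = gaussian_kernel(sigma)
    s = np.zeros(x.size)
    c = np.zeros(x.size)
    for d in 2.0 * np.pi * np.arange(n_steps) / n_steps:
        img = reflectivity * (0.5 + 0.4 * np.cos(phi + d))  # assumed model
        img = np.convolve(img, k, mode="same")              # direct filtering
        s += img * np.sin(d)
        c += img * np.cos(d)
    est = np.arctan2(-s, c)
    return np.angle(np.exp(1j * (est - phi)))  # wrapped difference

uniform = phase_error_after_blur(np.ones(200))
stepped = phase_error_after_blur(np.where(np.arange(200) < 100, 1.0, 0.2))
# Ignore the first and last 10 pixels, where zero padding distorts the blur.
print(np.abs(uniform[10:-10]).max(), np.abs(stepped[10:-10]).max())
```

In this sketch the error for the stepped reflectivity is confined to the pixels within one kernel radius of the step, which is where the filter window straddles both reflectivity levels.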

Hence, an iterative Gaussian filter method is proposed to improve the accuracy of the wrapped phase recovered from the phase shift images (Fig. 2).

Step 1: compute the initial wrapped phase \( \phi^{c} \) from the captured images: \( \phi^{c} = - \arctan \left\{ {\frac{{\sum\limits_{n = 1}^{N} {I_{n}^{c} \sin \left( {\frac{2\pi (n - 1)}{N}} \right)} }}{{\sum\limits_{n = 1}^{N} {I_{n}^{c} \cos \left( {\frac{2\pi (n - 1)}{N}} \right)} }}} \right\},n = 1,2, \cdots N \), where \( I_{n}^{c} \) is the image captured by the camera when the projected fringe pattern is \( I_{n} \);

Step 2: the wrapped phase \( \phi^{c} \) is projected into the phase shift fringe image space to generate \( I_{n}^{g} \): \( I_{n}^{g} (x,y) = A(x,y) + B(x,y)\cos [\phi^{c} (x,y) + \frac{(n - 1)}{N}2\pi ] \);

Step 3: \( {}^{F}I_{n}^{g} \) is obtained by filtering the images \( I_{n}^{g} \) with a Gaussian filter, and the wrapped phase \( {}^{F}\phi^{c} \) is recalculated as in Step 1;

Step 4: repeat Step 2 and Step 3 until the phase difference between \( {}^{F}\phi^{c} \) and \( \phi^{c} \) is less than the set threshold \( T \).

It is worth noting that, for objects with uniform reflectivity, Gaussian filtering improves the quality of the fringes without changing their sinusoidal form. This is the theoretical premise of Step 3: the accuracy of phase recovery can be improved by projecting the phase with errors into the fringe image space and performing Gaussian filtering there.
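Steps 1 to 4 can be sketched as follows. This is a minimal NumPy-only illustration under several assumptions that the paper does not specify: the regenerated fringes use constant \( A \) and \( B \) (so the surface reflectivity no longer enters the filtered images), the Gaussian kernel is truncated at about three standard deviations with reflect padding, and the stop condition is the RMS of the wrapped phase change. All function names, defaults and the iteration cap are hypothetical.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable 2D Gaussian blur with reflect padding (NumPy only)."""
    radius = max(1, int(3 * sigma + 0.5))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    k /= k.sum()
    out = img.astype(float)
    for axis in (0, 1):
        pad = [(0, 0), (0, 0)]
        pad[axis] = (radius, radius)
        padded = np.pad(out, pad, mode="reflect")
        out = np.apply_along_axis(
            lambda v: np.convolve(v, k, mode="valid"), axis, padded)
    return out

def wrapped_phase(images):
    """Step 1: wrapped phase from N images with shifts +2*pi*(n-1)/N."""
    n = images.shape[0]
    d = (2.0 * np.pi * np.arange(n) / n).reshape(-1, 1, 1)
    s = np.sum(images * np.sin(d), axis=0)
    c = np.sum(images * np.cos(d), axis=0)
    return np.arctan2(-s, c)

def regenerate(phi, n_steps, a=0.5, b=0.5):
    """Step 2: ideal fringes from the current phase (constant A, B assumed)."""
    d = (2.0 * np.pi * np.arange(n_steps) / n_steps).reshape(-1, 1, 1)
    return a + b * np.cos(phi[None] + d)

def iterative_gaussian_filter(captured, sigma=1.5, threshold=1e-3, max_iter=20):
    """Steps 1-4: iterate regenerate -> blur -> recompute until the change is small."""
    n_steps = captured.shape[0]
    phi = wrapped_phase(captured)                                # Step 1
    for _ in range(max_iter):
        regen = regenerate(phi, n_steps)                         # Step 2
        filtered = np.stack([gaussian_blur(im, sigma) for im in regen])
        phi_new = wrapped_phase(filtered)                        # Step 3
        change = np.angle(np.exp(1j * (phi_new - phi)))          # wrapped diff
        phi = phi_new
        if np.sqrt(np.mean(change ** 2)) < threshold:            # Step 4
            break
    return phi
```

Because the filter acts on regenerated fringes of unit contrast rather than on the captured images, pixels of different reflectivity contribute equally to the smoothed phase. The reflect padding biases the phase near the image border, so results there should be treated with care.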

3 Application-Binocular Structured Light

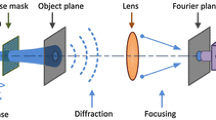

Phase shifting is a key technique in optical measurement methods such as phase measuring deflectometry and structured light, and the accuracy of phase recovery directly determines the accuracy of 3D measurement. The performance of the proposed iterative Gaussian filter method is verified with a binocular structured light system [22], as shown in Fig. 3.

3.1 Measurement Principle of Binocular Structured Light

The binocular structured light system consists of two cameras (camera 1 and camera 2) and a projector. The light projected by the projector is reflected at the object point \( w \) and imaged at pixel \( p_{1} \) of camera 1 and pixel \( p_{2} \) of camera 2. \( {}^{{c_{1} }}{\mathbf{R}}_{{c_{2} }} {}^{{c_{1} }}{\mathbf{T}}_{{c_{2} }} \) is the pose relationship between the two cameras. \( {\mathbf{K}}_{1} \) and \( {\mathbf{K}}_{2} \) are the intrinsic parameters of camera 1 and camera 2, respectively. The coordinates of the object point \( w \) are \( {}^{{c_{1} }}X_{w} \) and \( {}^{{c_{2} }}X_{w} \) in the coordinate systems of camera 1 and camera 2, respectively. The coordinates of \( w \) are determined from the reflected ray \( r_{1} \) defined by \( p_{1} \) and the reflected ray \( r_{2} \) defined by \( p_{2} \). The correspondence between \( p_{1} \) and \( p_{2} \) is determined from the absolute phases calculated from the captured fringe images.

The coefficient parameters \( k_{1} \) and \( k_{2} \) are determined through Eq. (9). The camera intrinsic parameters \( {\mathbf{K}}_{1} \), \( {\mathbf{K}}_{2} \), and the pose relationship \( {}^{{c_{1} }}{\mathbf{R}}_{{c_{2} }} {}^{{c_{1} }}{\mathbf{T}}_{{c_{2} }} \) are calibrated by the iterative calibration method [23].
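One common way to compute an object point from two matched pixels is sketched below. It assumes a distortion-free pinhole model in which \( {}^{{c_{1} }}X_{w} = k_{1} {\mathbf{K}}_{1}^{ - 1} \tilde{p}_{1} \), \( {}^{{c_{2} }}X_{w} = k_{2} {\mathbf{K}}_{2}^{ - 1} \tilde{p}_{2} \) and \( {}^{{c_{1} }}X_{w} = {\mathbf{R}}\,{}^{{c_{2} }}X_{w} + {\mathbf{T}} \), with \( \tilde{p} \) the homogeneous pixel coordinates. Whether this matches the paper's Eq. (9) exactly is an assumption; the function name and the midpoint rule are illustrative choices.

```python
import numpy as np

def triangulate(p1, p2, K1, K2, R, T):
    """Estimate the object point in camera-1 coordinates from pixels p1, p2.

    R, T are assumed to map camera-2 coordinates into camera-1 coordinates.
    Returns the midpoint of the two closest ray points and the scales (k1, k2).
    """
    d1 = np.linalg.solve(K1, np.array([p1[0], p1[1], 1.0]))      # ray 1, cam 1
    d2 = R @ np.linalg.solve(K2, np.array([p2[0], p2[1], 1.0]))  # ray 2, cam 1
    # Solve k1*d1 - k2*d2 = T in the least-squares sense (rays rarely meet).
    A = np.column_stack([d1, -d2])
    k, *_ = np.linalg.lstsq(A, T, rcond=None)
    x1 = k[0] * d1          # point on ray 1
    x2 = k[1] * d2 + T      # point on ray 2, expressed in camera 1
    return 0.5 * (x1 + x2), k
```

With noisy correspondences the two rays are skew, so the least-squares scales give the closest points on each ray and the midpoint serves as the reconstructed point.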

3.2 Measurement Experiments

The binocular structured light system is built from two cameras (resolution: 1280 × 1024 pixels) with 12-mm lenses and a laser projector (resolution: 1280 × 1024 pixels). The multi-frequency heterodyne method is used to determine the fringe order of the wrapped phase, with the periods chosen as (28, 26, 24). Before measurement, the system parameters are calibrated. During calibration, a calibration board (chessboard) is captured by the two cameras at different positions. The iterative calibration method is applied to determine the intrinsic parameters of the two cameras and their pose relationship, as shown in Table 1. The pose relationship (camera 2 relative to camera 1) is \( {}^{{c_{1} }}{\mathbf{R}}_{{c_{2} }} {}^{{c_{1} }}{\mathbf{T}}_{{c_{2} }} \). To verify the performance of the proposed iterative phase correction technique, the non-linear parameter (gamma) of the projector is not calibrated.
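As a worked example of the period choice, the sketch below computes the equivalent (beat) periods of the three fringe patterns with the usual heterodyne relation \( \lambda_{eq} = \lambda_{a} \lambda_{b} /|\lambda_{a} - \lambda_{b} | \). It assumes that the periods (28, 26, 24) are given in projector pixels and that adjacent pairs are combined first; neither detail is stated in the text.

```python
def equivalent_period(p_a, p_b):
    """Beat period of two fringe patterns with periods p_a and p_b."""
    return p_a * p_b / abs(p_a - p_b)

p12 = equivalent_period(28, 26)     # 364.0
p23 = equivalent_period(26, 24)     # 312.0
p123 = equivalent_period(p12, p23)  # 2184.0
print(p12, p23, p123)
```

Under the pixel-unit assumption, the final equivalent period of 2184 exceeds the 1280-pixel projector width, so a single beat fringe would span the whole field and the fringe order could be determined without ambiguity.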

A Φ38.092-mm standard sphere with a surface accuracy of 0.5 μm is measured to verify the proposed iterative Gaussian filter method. As shown in Fig. 4, the absolute phase obtained by direct Gaussian filtering is less accurate than the absolute phase recovered by the proposed iterative Gaussian filter method.

The absolute phase decoded from the captured images with Gaussian filtering and with iterative phase correction. (a): the phase shift fringe image captured by camera 2; (b): the image obtained by applying the Gaussian filter to (a); (c): \( {}^{F}I_{n}^{g} (x,y) \) determined from the absolute phase; (d): the absolute phase calculated from (a); (e): the absolute phase calculated by the iterative Gaussian filter method from (c); (f): the absolute phases along the blue line in (d) and the red line in (e), respectively; (g): enlarged view of the red box in (f). (Color figure online)

Figure 5 shows the measurement results for the standard sphere. The error of the point cloud reconstructed with direct Gaussian filtering is shown in Fig. 5(c): the reconstruction error is 0.13 mm and the RMS deviation is 0.0247 mm. The error of the point cloud reconstructed with the proposed iterative Gaussian filter method is shown in Fig. 5(d): in the less accurate regions the reconstruction error is around 0.04 mm, and the RMS deviation is 0.0094 mm.
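The text does not state how the reconstruction error and RMS deviation are computed. One plausible procedure, sketched here as an assumption, fits a sphere to the reconstructed point cloud by linear least squares and takes the RMS of the radial residuals. The function names are hypothetical, and the residuals could equally be taken against the nominal radius of 19.046 mm instead of the fitted one.

```python
import numpy as np

def fit_sphere(points):
    """Algebraic least-squares sphere fit; returns (center, radius).

    Uses |q|^2 = 2 c.q + (r^2 - |c|^2) on centroid-shifted points q,
    which keeps the linear system well conditioned.
    """
    centroid = points.mean(axis=0)
    q = points - centroid
    A = np.column_stack([2.0 * q, np.ones(len(q))])
    b = np.sum(q ** 2, axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    center_local = sol[:3]
    radius = float(np.sqrt(sol[3] + center_local @ center_local))
    return center_local + centroid, radius

def radial_rms(points, center, radius):
    """RMS of the signed radial deviations from the sphere surface."""
    dev = np.linalg.norm(points - center, axis=1) - radius
    return float(np.sqrt(np.mean(dev ** 2)))
```

A call such as `center, radius = fit_sphere(cloud)` followed by `radial_rms(cloud, center, radius)` would give one number comparable in kind to the RMS deviations quoted above, where `cloud` is an array of shape (M, 3) in millimetres.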

The measurement results for the standard sphere. (a): the point cloud determined from the absolute phase calculated from the captured images with direct Gaussian filtering; (b): the point cloud determined from the absolute phase calculated from the captured images with iterative phase correction; (c): the reconstruction error of (a); (d): the reconstruction error of (b).

The conventional direct filtering method fails when reconstructing the 3D shape of an object whose reflectivity changes drastically (for instance, where the reflectivity is too low or too high), as shown in Figs. 6(b) and 7(b). The proposed iterative Gaussian filter method is not sensitive to surface reflectivity, so effective measurement results can still be obtained on surfaces whose reflectance changes drastically, as shown in Figs. 6(d) and 7(c). The error of the point cloud reconstructed with direct Gaussian filtering is shown in Fig. 6(c): the reconstruction error is 0.48 mm and the root mean square (RMS) deviation is 0.042 mm. The error of the point cloud reconstructed with the proposed iterative Gaussian filter method is shown in Fig. 6(d): in the less accurate regions the reconstruction error is around 0.13 mm, and the RMS deviation is 0.024 mm. When the surface reflectivity of the object decreases, the intensity of the captured phase shift fringe images also decreases. When the reflectivity is very low, it is difficult to recover a valid phase from the captured fringe images by conventional methods.

The measurement results for a table tennis ball (low reflectivity in localized areas). (a): the captured phase shift fringes; (b): the surface determined from (a) with direct Gaussian filtering; (c): the reconstruction error of (b); (d): the surface determined from (a) with iterative phase correction; (e): the reconstruction error of (d).

The experimental results (Fig. 7) show that the wrapped phase information can be recovered from low-quality phase shift fringe images by the iterative Gaussian filter method, which in turn improves the measurement accuracy. Compared with conventional methods, the proposed method filters noise without interference from reflectivity, improves the measurement accuracy, and recovers wrapped phase information from low-quality fringe images where conventional methods have difficulty. Compared with the multi-exposure technique, objects with drastically changing surface reflectivity can be reconstructed without projecting additional phase shift fringe images.

4 Conclusions

An iterative Gaussian filter method is proposed to recover the wrapped phase information. The approach regenerates the fringe images from the wrapped phase and applies Gaussian filtering iteratively, which effectively suppresses the phase errors caused by low-quality fringe images. The proposed method can therefore be applied to the structured light method to improve the measurement accuracy. In particular, it can be used for phase recovery on objects with large changes in reflectivity, where the phase is very difficult to recover effectively with conventional methods. The effectiveness of the proposed method is verified by experiments.

References

1. Zhang, S.: High-speed 3D shape measurement with structured light methods: a review. Opt. Lasers Eng. 106, 119–131 (2018)

2. Chang, C., Zhang, Z., Gao, N., et al.: Improved infrared phase measuring deflectometry method for the measurement of discontinuous specular objects. Opt. Lasers Eng. 134, 106194 (2020)

3. Zuo, C., Feng, S., Huang, L., et al.: Phase shifting algorithms for fringe projection profilometry: a review. Opt. Lasers Eng. 109, 23–59 (2018)

4. Butel, G.P.: Analysis and new developments towards reliable and portable measurements in deflectometry. Dissertations & Theses - Gradworks (2013)

5. Yu, C., Ji, F., Xue, J., et al.: Fringe phase-shifting field based fuzzy quotient space-oriented partial differential equations filtering method for Gaussian noise-induced phase error. Sensors 19(23), 5202 (2019)

6. Skydan, O.A., Lalor, M.J., Burton, D.R.: 3D shape measurement of automotive glass by using a fringe reflection technique. Meas. Sci. Technol. 18(1), 106 (2006)

7. Wu, Y., Yue, H., Liu, Y.: High-precision measurement of low reflectivity specular object based on phase measuring deflectometry. Opto-Electron. Eng. 44(08), 22–30 (2017)

8. Wu, Y., Yue, H., Yi, J., et al.: Phase error analysis and reduction in phase measuring deflectometry. Opt. Eng. 54(6), 064103 (2015)

9. Yuhang, H., Yiping, C., Lijun, Z., et al.: Improvement on measuring accuracy of digital phase measuring profilometry by frequency filtering. Chin. J. Lasers 37(1), 220–224 (2010)

10. Zhang, S.: Absolute phase retrieval methods for digital fringe projection profilometry: a review. Opt. Lasers Eng. 107, 28–37 (2018)

11. Zhang, S.: Comparative study on passive and active projector nonlinear gamma calibration. Appl. Opt. 54(13), 3834–3841 (2015)

12. Yang, W., Yao, F., Jianying, F., et al.: Gamma calibration and phase error compensation for phase shifting profilometry. Int. J. Multimedia Ubiquit. Eng. 9(9), 311–318 (2014)

13. Zhang, X., Zhu, L., Li, Y., et al.: Generic nonsinusoidal fringe model and gamma calibration in phase measuring profilometry. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 29(6), 1047–1058 (2012)

14. Cui, H., Jiang, T., Cheng, X., et al.: A general gamma nonlinearity compensation method for structured light measurement with off-the-shelf projector based on unique multi-step phase-shift technology. Optica Acta Int. J. Opt. 66, 1579–1589 (2019)

15. Song, Z., Jiang, H., Lin, H., et al.: A high dynamic range structured light means for the 3D measurement of specular surface. Opt. Lasers Eng. 95, 8–16 (2017)

16. Jiang, H., Zhao, H., Li, X.: High dynamic range fringe acquisition: a novel 3-D scanning technique for high-reflective surfaces. Opt. Lasers Eng. 50(10), 1484–1493 (2012)

17. Zhao, H., Liang, X., Diao, X., et al.: Rapid in-situ 3D measurement of shiny object based on fast and high dynamic range digital fringe projector. Opt. Lasers Eng. 54, 170–174 (2014)

18. Babaie, G., Abolbashari, M., Farahi, F.: Dynamics range enhancement in digital fringe projection technique. Precis. Eng. 39, 243–251 (2015)

19. Xiao, H., Jian, B., Kaiwei, W., et al.: Target enhanced 3D reconstruction based on polarization-coded structured light. Opt. Express 25(2), 1173–1184 (2017)

20. Xiao, H., Yujie, L., Jian, B., et al.: Polarimetric target depth sensing in ambient illumination based on polarization-coded structured light. Appl. Opt. 56(27), 7741 (2017)

21. Liu, Y., Zhang, Q., Su, X.: 3D shape from phase errors by using binary fringe with multi-step phase-shift technique. Opt. Lasers Eng. 74, 22–27 (2015)

22. Song, L., Li, X., Yang, Y.G., et al.: Structured-light based 3D reconstruction system for cultural relic packaging. Sensors 18(9), 2981 (2018)

23. Datta, A., Kim, J.S., Kanade, T.: Accurate camera calibration using iterative refinement of control points. In: 2009 IEEE 12th International Conference on Computer Vision Workshops, ICCV Workshops. IEEE (2010)

Acknowledgements

This study is supported by the National Natural Science Foundation of China (NSFC) (Grant Nos. 51975344 and 51535004) and the China Postdoctoral Science Foundation (Grant No. 2019M662591).

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Chen, L., Yun, J., Xu, Z., Huan, Z. (2020). Iterative Phase Correction Method and Its Application. In: Chan, C.S., et al. Intelligent Robotics and Applications. ICIRA 2020. Lecture Notes in Computer Science, vol 12595. Springer, Cham. https://doi.org/10.1007/978-3-030-66645-3_3

DOI: https://doi.org/10.1007/978-3-030-66645-3_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-66644-6

Online ISBN: 978-3-030-66645-3

eBook Packages: Computer Science (R0)