Abstract

Having a good Human-Computer Interaction (HCI) design is challenging. Previous works have contributed significantly to fostering HCI, including design principles and studies reported from the instructor’s view. The questions of how and to what extent students perceive the design principles remain open. To answer these questions, this paper conducts a study of HCI adoption in the classroom. The studio-based learning method is adapted to teach 83 graduate and undergraduate students over 16 weeks with four activities. A standalone presentation tool for instant online peer feedback during the presentation session is developed to help students justify and critique others’ work. Our tool provides a sandbox, which supports multiple application types, including Web applications, Object Detection, Web-based Virtual Reality (VR), and Augmented Reality (AR). After one assignment and two projects were presented, our results show that students acquired a better understanding of the Golden Rules principles over time, as demonstrated by the development of their visual interface designs. The wordcloud reveals that the primary focus was on the user interface and sheds light on students’ interest in user experience. The inter-rater score indicates agreement among students, suggesting that they have the same level of understanding of the principles. The results show a high level of compliance with HCI guidelines, within which we observe variations in visual cognitive styles. Regardless of diversity in visual preference, the students show high consistency and a similar perspective on adopting HCI design principles. The results also yield suggestions for the future development of the HCI curriculum.

Keywords

- Human-Computer Interaction

- Instant online peer feedback

- Interface design

- Learners’ perspective

- User study design

- Inter-rater measurement

1 Introduction

HCI has a long, rich history, and its origin can be dated back to the 1980s with the advent of personal computing. From then on, computers were no longer considered expensive, room-sized tools dedicated to experts in a given domain. Consequently, the need for easy and efficient interaction for general and untrained users became increasingly vital for technology adoption. In recent years, along with the arrival of new devices (e.g., smartphones, tablets), HCI has expanded its scope from interaction with a computer to interaction with any target device, and it has been incorporated into multiple disciplines, such as computer science, cognitive science, and human-factors engineering.

Having a good HCI design is a challenging task since it not only has to cope with “more than just a computer now... but considerable awareness that touch, speech, and gesture-based interfaces” [4] but also requires substantial cognitive effort to conceive and build a product that is useful, usable, and ultimately used by the public [13], such as user interfaces that assist monitoring and system operational tasks [7, 15]. Prior work has contributed significantly to fostering HCI, from design principles, processes, and guidelines to teaching. For example, the ACM SIGCHI Executive Committee [12] developed a set of curriculum recommendations for HCI in education, and Ben Shneiderman [22] suggested eight golden rules of interface design. Adopting the existing guidelines, instructors have devoted considerable effort to helping students understand the concepts and create good interaction designs in the classroom [4, 10]. Along with instruction, curricula, teaching/learning methods, project outputs, and tools/techniques are reported and shared with the HCI community. However, these findings are usually observed from the instructors’ perspective; the questions of how, and to what extent, students perceive the design principles remain unexplored.

Having the answers to these questions would serve as an essential indicator for both educators and learners, as it allows them to reshape their thinking on how HCI is taught and learned. For instructors, looking at HCI from students’ perspectives enables them to reorganize teaching materials and methods so that learning performance can be best achieved. For learners, the opportunity to use their own point of view to justify, or be justified by, instructors and peers would allow them to develop the critical thinking skills needed for their future careers.

To the best of our knowledge, no work in the literature explores these questions in the HCI domain, making this research a unique contribution. In this study, we seek to answer the aforementioned questions by decomposing them into three sub-questions: 1) Given a set of design principles/guidelines, to what extent do students follow them? 2) Which part of the HCI design do the learners focus on? and 3) Do they have the same perspectives on adopting design principles, and are these views consistent? By addressing these research questions qualitatively and quantitatively, the contributions of our paper can be laid out as follows:

- It reports the instructional methods and instruments used for teaching and learning HCI in the classroom.

- It provides an analysis of the qualitative method for student peer-review project assessment.

- It extracts insights into the adoption of HCI design principles in the classroom.

The rest of this paper is organized as follows: Section 2 summarizes existing research that is close to our paper. Section 3 presents the study design and the methods for collecting students’ information. Section 4 analyzes the data and provides insights in detail. Section 5 concludes our paper with directions for future work.

2 Related Work

Numerous studies have examined the teaching of HCI guidelines, from the general design of the course and the major topics that should be covered to the incorporation of user-centered design. One of the fundamental works in designing the HCI curriculum was presented in 1992 by Hewett et al. [12], “ACM SIGCHI Curricula for Human-Computer Interaction”. The report provided “a blueprint for early HCI courses” [4], which concentrated on the concept of “HCI-oriented,” not “HCI-centered,” programs. Therefore, it is beneficial to frame the problem of HCI broadly enough to help learners and practitioners avoid the classic pitfall of design separated from the context of the problem [12]. Regarding the in-class setting, PeerPresents [21] introduced a peer feedback tool using online Google docs on student presentations. With this approach, students receive qualitative feedback by the end of class. To encourage immediacy in the discussion, our system provides feedback on-the-fly: the presenters receive feedback and questions as they are submitted to the system, so they can start the discussion right after their presentation session. Instant Online Feedback [8] demonstrates the use of instant online feedback on face-to-face presentations. In addition to a similar feedback form containing quantitative and qualitative aspects, we include a demo of the final interface within the form so that the audience can test it directly. Online feedback demonstrates its advantages in terms of engagement, anonymity, and diversity. Online Feedback System [11] facilitates students’ motivation and engagement in the feedback process. Compared to the face-to-face setting, anonymity encourages more students to participate and contributes to balanced participation in the feedback process [8]. Along with a higher quantity, diversity in the feedback elicits novel perspectives and reveals unique insights [16].
In a classroom setting, time is often limited within a class session. With such a time restriction, the time dedicated to giving feedback should be kept rather short [8]. Peer feedback should be delivered in a timely manner [14]: for online classes, feedback is only effective if provided within 24 hours. Another study shows that, because of its immediacy, instant feedback improves estimation accuracy more than feedback received at the end of a study [17].

3 Materials and Methods

3.1 Teaching Method and Activities

The main goal of our study is to investigate the adoption of HCI design principles in the classroom setting from the learners’ perspective. It is a daunting task for instructors to judge the adoption level due to variations in visual cognitive styles. For example, teachers may like a bright, high-contrast design, whereas students prefer a colorful dashboard. Thus, the teaching method and assessment should be carefully designed to motivate and engage students and to adequately reflect their performance level. Prior work has proposed several teaching methods [3], such as inquiry-based learning, situated-based learning, project-based learning, and studio-based learning. We chose the studio-based learning approach because its characteristics encompass our critical factors. These characteristics can be laid out as follows:

- Students should be engaged in project-based assignments (C1).

- Student learning outcomes should be iteratively assessed in formal and informal fashion through design critiques (C2).

- Students are required to engage in critiquing the work of their peers (C3).

- Design critiques should revolve around the artifacts typically created by the domains (C4).

Based on the above criteria, we divided the course work into four main activities spanning 16 weeks: 1) Introduction to HCI, 2) Homework assignment, 3) Project engagement, in which students were actively involved in two projects throughout the course (characteristic C1), and 4) Evaluation and design critiques, in which both instructors and students took part in evaluating each assignment and project (characteristics C2, C4). This process not only helps to avoid bias in the results but also allows learners to reflect on their learning when judging their peers (characteristic C3). The assignment’s purpose is to give students some practice in the design of everyday things. In the first project, we incorporated the problem-based learning method on a problem extended from the assignment. The second project took a different approach by adapting the computational thinking-based method, where students explore a real-life problem and then solve it on their own.

3.2 Participants

The present study was conducted with university students enrolled in the Human-Computer Interaction course in computer science. There were 83 students in total: 62 undergraduate, 13 master’s, and eight doctoral students. Of these, 64 were male and 19 were female.

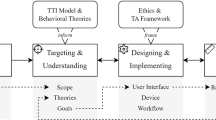

3.3 Assessment Tool

Aalberg and Lorås [1] indicated that ‘the lack of technology support for peer assessment is one likely cause for the lack of systematic use in education.’ To address this issue, many assessment tools have been introduced [8, 9, 21]. However, none of them is comprehensive enough to let instructors carry out tasks that the tool did not originally anticipate. In particular, the evaluation in our study requires features such as instant feedback, timing control, list preview, and even applications with embedded 3D objects. We therefore developed a standalone tool to facilitate peer evaluation and data collection, supporting multiple application types, including webpage, web-based VR, and AR. The tool is expected to serve these goals:

- G1 From the presenter’s perspective: Each presenter (group or individual) demonstrates their interface design within their session. The presenter can see the feedback and evaluation visualization within their session.

- G2 From the audience’s perspective: The audience can give comments and evaluations for the current design presented [9]. Authentication for the in-class audience is necessary for input validity.

- G3 The system presents online, instant evaluation from the audience (anonymously) to the presenter without interrupting the presentation.

User Interface. Figure 1 describes our web presentation system from three perspectives. Panel A shows the presenter list (student names and group members’ images have been customized for demonstration purposes). This view allows the instructors to manage the students’ turns to present; each group has two thumbnail images, one for the sketch and one for the final design, indicating the development process. Panel B is the presenter’s view, with live comments on the right-hand side updated on-the-fly. The average scores and evaluations are also updated live in the presenter’s view and visualized in an interactive, dynamic chart. Panel C is the audience’s view for providing scores and feedback on the project being presented.

Each presentation is given a bounded time window for demonstration and discussion. The system automatically switches to the next presentation when the current session is over, renewing both the Panel B and Panel C interfaces. During the presentation, the clock timers in the two panels are synchronized, and the assessments and comments submitted from Panel C are updated live on Panel B. The peer assessment consists of seven 10-point Likert scale questions (ranging from “strongly disagree (1)” to “strongly agree (10)”) and one open-ended question. The criteria for scoring are as follows.

- Q1, Does the interface follow the Golden Rules and principles of design [22]?

- Q2, Usability: The ease of use of the interface [24].

- Q3, Visual appeal: Is the design visually engaging for the users [23]?

- Q4, Interactivity: To what extent does the interface provide user interactions [23]?

- Q5, Soundness: The quality and proper use of the audio from the application [23].

- Q6, Efforts: Does the group provide enough effort for the work?

- Q7, Teamwork: Is the work equally distributed to team members?
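The assessment form and the live averages shown in the presenter’s view can be sketched as follows. This is a minimal illustration rather than the tool’s actual implementation: the names `CRITERIA`, `Assessment`, and `PresentationSession` are hypothetical, and the sketch assumes that each submission carries one integer score from 1 to 10 for each of the seven criteria plus an optional free-text comment.

```python
from dataclasses import dataclass, field
from statistics import mean

# Hypothetical identifiers for the seven Likert criteria Q1..Q7.
CRITERIA = (
    "golden_rules", "usability", "visual_appeal",
    "interactivity", "soundness", "efforts", "teamwork",
)


@dataclass
class Assessment:
    """One anonymous submission from an audience member (Panel C)."""
    rater_alias: str
    scores: dict          # criterion name -> integer score in 1..10
    comment: str = ""     # answer to the open-ended question

    def __post_init__(self):
        # Reject missing or out-of-range scores before they reach the presenter.
        for name in CRITERIA:
            value = self.scores.get(name)
            if not isinstance(value, int) or not 1 <= value <= 10:
                raise ValueError(f"{name} must be an integer from 1 to 10")


@dataclass
class PresentationSession:
    """Collects submissions for one presenter (Panel B)."""
    presenter: str
    assessments: list = field(default_factory=list)

    def submit(self, assessment):
        self.assessments.append(assessment)

    def live_averages(self):
        """Average score per criterion over all submissions received so far."""
        if not self.assessments:
            return {}
        return {
            name: mean(a.scores[name] for a in self.assessments)
            for name in CRITERIA
        }
```

Recomputing the averages on every submission keeps the sketch simple; a running sum per criterion would avoid the repeated pass if the audience were much larger than a single class.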

3.4 Assignment and Project Outputs

There were a total of 126 visual interaction designs as assignment and project outcomes, including 82 sketches from the assignment, 21 problem-based designs, and 22 computational thinking based solutions. Figure 2 presents some selected work corresponding to the assignment, project 1, project 2, respectively.

Previous work showed that there was some ambiguity in the results when students gave scores to their peers. Part of the issue is that they wanted to be ‘nice’ or simply aimed to get the job done. To alleviate these issues, unusual score patterns were excluded, and the evaluators (students) concerned were notified anonymously through their alias names. Examples of good grading and inadequate grading are presented in Fig. 3. Good grading shows diversity in the scores given to different criteria, whereas inadequate grading does not.
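One simple way to flag an unusual score pattern is sketched below. The exact exclusion rule used in the study is not stated, so the rule here is an assumption: a response is flagged when its scores show no spread across the criteria (for example, ten out of ten everywhere). The function names and the `min_spread` threshold are hypothetical.

```python
from statistics import pstdev


def is_straight_lined(scores, min_spread=0.0):
    """Return True when one rater's scores barely vary across criteria.

    `scores` is the list of Likert scores one rater gave to one presenter.
    The default threshold flags only responses with identical scores.
    """
    return pstdev(scores) <= min_spread


def filter_ratings(ratings, min_spread=0.0):
    """Split ratings into kept responses and flagged rater aliases.

    `ratings` maps a rater alias to that rater's list of scores.
    """
    kept, flagged = {}, []
    for alias, scores in ratings.items():
        if is_straight_lined(scores, min_spread):
            flagged.append(alias)   # candidate for exclusion and notification
        else:
            kept[alias] = scores
    return kept, flagged
```

Raising `min_spread` makes the filter stricter, at the risk of excluding raters who genuinely found a project uniformly strong.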

Fig. 2. Selected work on interface design for the individual assignment (A), project 1 (B), and project 2 (C). For projects 1 and 2, sketches are on the left, and final designs are on the right. Our study covers a variety of applications, including Object Detection (as a Computer Vision application), Web-based 3D application, VR, and AR. The interdisciplinary nature is presented in the final result.

4 Results

4.1 R1: Given a Set of Design Principles/Guidelines, to What Extent Do Students Follow Them?

To tackle the first research question, we look for an indication among the students that reflects the overall level of guideline compliance. To measure the extent to which the learners follow the guidelines, we use the mean and standard deviation, where each record is a quantitative assessment from one rater to one presenter across the provided criteria.

Table 1 presents the mean and standard deviation of the peer assessment scores for project 1 and project 2. In both projects, the Efforts criterion has the highest mean value, indicating that the students highly appreciate the efforts their peers put in. In project 1, Visual Design has the lowest mean (8.20) and the highest standard deviation (1.48), showing variation in visual cognitive styles and diverse individual opinions on what should be considered “visually engaging”. In project 2, the mean of the Golden Rules criterion increased and its standard deviation became the lowest (1.12), meaning that students’ perceptions of the Golden Rules differed less, which suggests better compliance with the guidelines. As a lesson learned from project 1, Sounds was introduced in project 2 as a channel for feedback interactions. Sounds has the lowest mean (7.33) and the highest standard deviation (2.30), which sets it apart from the other standard deviations (around 1.1 to 1.2) and indicates that the students had difficulty incorporating this feature into their projects. Overall, the other criteria have their mean values increased from project 1 to project 2, showing a better presentation and understanding of the guidelines.
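The per-criterion statistics reported above can be computed along the following lines. This is a sketch under assumptions: the record layout and the function name `summarize` are hypothetical, the scores in the usage example are made up, and the sample standard deviation is used because the text does not say whether the sample or population form was applied.

```python
from statistics import mean, stdev


def summarize(records):
    """Return {criterion: (mean, standard deviation)} rounded to 2 decimals.

    `records` is a list of dicts, one per rater-to-presenter assessment,
    each mapping a criterion name to its Likert score.
    """
    summary = {}
    for criterion in records[0]:
        values = [record[criterion] for record in records]
        # stdev() is the sample standard deviation (n - 1 denominator).
        summary[criterion] = (round(mean(values), 2), round(stdev(values), 2))
    return summary
```

For instance, two made-up records `{"usability": 8, "efforts": 9}` and `{"usability": 10, "efforts": 9}` give a usability mean of 9 with a standard deviation of 1.41, and an efforts mean of 9 with a standard deviation of 0.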

4.2 R2: Which Part of the HCI Design Do the Learners Focus On?

We answer this question using a qualitative method of analysis, taking the students’ comments on each project as input. We expected that the majority of the captured keywords would center on UI design, since previous literature indicates that most HCI classes focus on UI design [10, 12].

We constructed a wordcloud of the most frequent words related to course content, with stop words removed. Figure 4 presents the most frequent words used in project 1, interface design for a smart mirror. A bigger font size in the wordcloud indicates more frequent occurrences, hence more common use of the word. A wordcloud gives an engaging visualization, which can be extended with a time dimension to characterize subject development [6] and can provide insights within an interactive, comprehensive dashboard [19]. Hereafter, the number in parentheses following a word indicates the word’s frequency. In terms of dominant keywords, besides design-topic words such as mirror (72), design (40), and interface (33), the students were interested in the services that the interface provides, namely widget (34) and feature (23), followed by color (24), text (20), and button (19), which matches the expected focus on fundamental visual components. The words cluttered (16) and consistent (16) highlight the most common pitfall and the standard to which a design should pay close attention. Functionality in the design is also taken into account: touch (4), draggable (8), facial recognition (6), voice command (3). Thus, when looking at an application, students focused primarily on UI design, which confirms existing research [10, 12].
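The word frequencies behind such a wordcloud can be obtained with a short counting routine. The sketch below is illustrative only: the tokenization rule and the small `STOP_WORDS` set are assumptions, since the text does not describe the preprocessing or the stop-word list actually used, and multi-word terms such as “facial recognition” would need additional phrase handling.

```python
import re
from collections import Counter

# A tiny illustrative stop-word list; a real analysis would use a fuller one.
STOP_WORDS = {"the", "is", "a", "an", "and", "to", "of", "it", "in", "are"}


def word_frequencies(comments, stop_words=STOP_WORDS):
    """Count how often each non-stop word occurs across all comments."""
    counts = Counter()
    for comment in comments:
        # Lowercase the comment and keep alphabetic tokens only.
        tokens = re.findall(r"[a-z]+", comment.lower())
        counts.update(token for token in tokens if token not in stop_words)
    return counts
```

Calling `word_frequencies(comments).most_common(20)` then yields the words that would be drawn largest in the wordcloud.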

Furthermore, user experience (UX) concerns are demonstrated through the students’ perspective. By exploring more uncommon terms, user’s experience is indicated by: understandable (4), usability (2), helpful (2). Users’ emotions and attitudes towards the product are expressed: easy (8), love (5) and enjoy (2) (positive), in contrast to hard (15), distracting (5), difficult (3), confusing (2) (negative). Indeed, the comments reflect the views of students: “The mirror is well done. The entire thing looks very consistent and I enjoy the speaking commands that lets you know what you are doing.” (compliment), “Accessing the bottom menu to access dark mode could be a little confusing for some users as no icons are listed on the screen.” (suggestion). The findings on UX can complement existing work in ways that emphasize the need to integrate UX subjects in the HCI curriculum. HCI principles can be considered a crucial instrument for UX development; these results validated that HCI is the forerunner to UX design [13].

Overall, we can classify the keywords into three groups: UI, UX, and Implementation. Besides UI and UX discussed above, the Implementation aspect is seen through data (10), implementation (8), api (6), function (5), and coding (2). Contrary to our expectation, very few terms refer to the principles themselves, such as golden rule (2) with only two occurrences, as opposed to the large number of visual elements mentioned in practical development. Some students even mentioned wheelchair (3), as they considered the design for a variety of users. This pattern demonstrates that the focus shifts from theoretical design to direct visual aesthetics, as the students perceived the HCI principles and applied them in practice. To sum up, the keywords retrieved from the wordcloud cover primarily UI and UX interests from the students’ perspective in the process of adopting HCI into interface design, demonstrating the diversity and broad coverage that peer assessment outcomes can provide. The method can be scaled to other disciplines that draw on crowd wisdom in the development process.

4.3 R3: Do They Have the Same Perspectives on Adopting the Design Principles and Are These Views Consistent?

To answer the third question, we look for agreement among students when they evaluated their peers. We hypothesize that the HCI principles are adopted when the scores provided by learners agree, and this agreement may imply that students have the same level of understanding of the principles.

To measure the level of agreement among learners, we use the intraclass correlation coefficient (ICC) [18], where each student is considered a rater and each project is the subject being measured. ICC is widely used in the literature because it is easy to understand, can be used to assess both relative and absolute agreement, and can accommodate a broader array of research scenarios than other measures such as Cohen’s Kappa or Fleiss’ Kappa [20]. Cicchetti [5] provides a guideline for inter-rater agreement measures, which can be briefly described as poor (less than 0.40), fair (between 0.40 and 0.59), good (between 0.60 and 0.74), and excellent (between 0.75 and 1.00).
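To make the measure concrete, the sketch below computes two ICC forms from a complete subjects-by-raters score matrix and maps a coefficient to Cicchetti’s bands. It assumes the two-way, average-measures forms described by McGraw and Wong [18] (consistency and absolute agreement); the text does not state which ICC form was used in this study, and the function names are hypothetical.

```python
def icc_two_way(ratings):
    """Return (consistency, agreement) average-measures ICCs.

    `ratings` is a list of rows, one per subject (project), each holding one
    score per rater; every rater must have scored every subject.
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Mean squares of the two-way ANOVA decomposition.
    ms_rows = k * sum((m - grand) ** 2 for m in row_means) / (n - 1)
    ms_cols = n * sum((m - grand) ** 2 for m in col_means) / (k - 1)
    ms_err = sum(
        (ratings[i][j] - row_means[i] - col_means[j] + grand) ** 2
        for i in range(n) for j in range(k)
    ) / ((n - 1) * (k - 1))

    # Undefined (division by zero) when all subjects have the same mean score.
    consistency = (ms_rows - ms_err) / ms_rows
    agreement = (ms_rows - ms_err) / (ms_rows + (ms_cols - ms_err) / n)
    return consistency, agreement


def cicchetti_label(icc):
    """Map an ICC value to the bands summarized from Cicchetti [5]."""
    if icc < 0.40:
        return "poor"
    if icc < 0.60:
        return "fair"
    if icc < 0.75:
        return "good"
    return "excellent"
```

In a toy matrix such as `[[1, 2], [2, 3], [3, 4]]`, the second rater always scores one point higher than the first: the raters rank the subjects identically, so consistency is 1.0, while the constant offset lowers absolute agreement to 0.8. This is the distinction between relative and absolute agreement mentioned above.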

Table 2 provides the results on the level of agreement and consistency when students evaluate their peers. The number of raters differs (83 students in total, 77 in project 1, and 75 in project 2) because ambiguous responses were excluded, as noted in Subsect. 3.4 and Fig. 3. It can be seen from Table 2 that students tend to give the same score for the principle guideline (or golden rules) in project 1 (agreement score = 0.963), as they do in project 2 (agreement score = 0.922). These scores are considerably high (excellent) when mapped to the inter-rater agreement scale suggested by Cicchetti [5]. In addition, we find high consistency among students when evaluating the other aspects of the projects, such as efforts, interactivity, usability, and visual design, since the consistency scores are all above 0.75.

5 Conclusion and Future Work

In this paper, we have presented the learners’ perspective on the perception and adoption of HCI principles in a classroom setting. A standalone web presentation platform was used to gather instant online peer feedback from students throughout the course. The qualitative analysis of peer feedback demonstrated that the students primarily emphasize design features, followed by visual components and interactivity. This approach can be extended to present subject evolution and student development over time, providing a bigger context. The outcome also showed that the students’ interests span both UI and UX, suggesting further incorporation of UX into the HCI curriculum, a topic that is currently underrepresented in the existing literature. In the quantitative analysis, we found that the students followed the guidelines to a large extent. The results indicated a high level of agreement among the students, as determined by the inter-rater agreement measures. We also found high consistency within the class regarding the other aspects evaluated, such as efforts, interactivity, usability, and visual appeal.

This research, however, is subject to several limitations. The course duration in this study is short, at only 16 weeks; a longer period could help reduce random errors. Another limitation is the sample size of 83 students; we believe that a larger sample could increase the generalizability of the results (nevertheless, our sample size meets the minimum requirement suggested by [2]). In future work, we will expand the study over the following semesters, and we expect that researchers can conduct related studies to confirm our findings.

References

1. Aalberg, T., Lorås, M.: Active learning and student peer assessment in a web development course. In: Norsk IKT-konferanse for forskning og utdanning (2018)

2. Albano, A.: Introduction to educational and psychological measurement using R (2017)

3. Carter, A.S., Hundhausen, C.D.: A review of studio-based learning in computer science. J. Comput. Sci. Coll. 27(1), 105–111 (2011)

4. Churchill, E.F., Bowser, A., Preece, J.: Teaching and learning human-computer interaction: past, present, and future. Interactions 20(2), 44–53 (2013)

5. Cicchetti, D.V.: Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychol. Assess. 6(4), 284 (1994)

6. Dang, T., Nguyen, H.N., Pham, V.: WordStream: interactive visualization for topic evolution. In: Johansson, J., Sadlo, F., Marai, G.E. (eds.) EuroVis 2019 - Short Papers. The Eurographics Association (2019). https://doi.org/10.2312/evs.20191178

7. Dang, T., Pham, V., Nguyen, H.N., Nguyen, N.V.: AgasedViz: visualizing groundwater availability of Ogallala Aquifer, USA. Environ. Earth Sci. 79(5), 1–12 (2020)

8. Figl, K., Bauer, C., Kriglstein, S.: Students’ view on instant online feedback for presentations. In: Proceedings of the AMCIS 2009, p. 775 (2009)

9. Gehringer, E.F.: Electronic peer review and peer grading in computer-science courses. ACM SIGCSE Bull. 33(1), 139–143 (2001)

10. Greenberg, S.: Teaching human computer interaction to programmers. Interactions 3(4), 62–76 (1996)

11. Hatziapostolou, T., Paraskakis, I.: Enhancing the impact of formative feedback on student learning through an online feedback system. Electron. J. E-learn. 8(2), 111–122 (2010)

12. Hewett, T.T., et al.: ACM SIGCHI Curricula for Human-Computer Interaction. ACM, New York (1992)

13. Interaction Design Foundation: What is Human-Computer Interaction (HCI)? (2019). https://www.interaction-design.org/literature/topics/human-computer-interaction. Accessed 1 July 2020

14. Kulkarni, C.E., Bernstein, M.S., Klemmer, S.R.: PeerStudio: rapid peer feedback emphasizes revision and improves performance. In: Proceedings of the Second (2015) ACM Conference on Learning@ Scale, pp. 75–84 (2015)

15. Le, D.D., Pham, V., Nguyen, H.N., Dang, T.: Visualization and explainable machine learning for efficient manufacturing and system operations. Smart Sustain. Manuf. Syst. 3(2), 127–147 (2019)

16. Ma, X., Yu, L., Forlizzi, J.L., Dow, S.P.: Exiting the design studio: leveraging online participants for early-stage design feedback. In: Proceedings of the 18th ACM Conference on Computer Supported Cooperative Work & Social Computing, pp. 676–685 (2015)

17. Magrabi, F., Coiera, E.W., Westbrook, J.I., Gosling, A.S., Vickland, V.: General practitioners’ use of online evidence during consultations. Int. J. Med. Inf. 74(1), 1–12 (2005)

18. McGraw, K.O., Wong, S.P.: Forming inferences about some intraclass correlation coefficients. Psychol. Methods 1(1), 30 (1996)

19. Nguyen, H.N., Dang, T.: EQSA: Earthquake situational analytics from social media. In: Proceedings of the IEEE Conference on Visual Analytics Science and Technology (VAST 2019), pp. 142–143 (2019). https://doi.org/10.1109/VAST47406.2019.8986947

20. Nichols, T.R., Wisner, P.M., Cripe, G., Gulabchand, L.: Putting the kappa statistic to use. Qual. Assur. J. 13(3–4), 57–61 (2010)

21. Shannon, A., Hammer, J., Thurston, H., Diehl, N., Dow, S.: PeerPresents: A web-based system for in-class peer feedback during student presentations. In: Proceedings of the 2016 ACM Conference on Designing Interactive Systems, DIS 2016, pp. 447–458. Association for Computing Machinery, New York (2016). https://doi.org/10.1145/2901790.2901816

22. Shneiderman, B., Plaisant, C., Cohen, M., Jacobs, S., Elmqvist, N., Diakopoulos, N.: Designing the User Interface: Strategies for Effective Human-Computer Interaction. Pearson, London (2016)

23. Sims, R.: Interactivity: a forgotten art? Comput. Hum. Behav. 13(2), 157–180 (1997)

24. Nguyen, V.T., Hite, R., Dang, T.: Web-based virtual reality development in classroom: from learner’s perspectives. In: Proceedings of the IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR 2018), pp. 11–18 (2018). https://doi.org/10.1109/AIVR.2018.00010

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Nguyen, H.N., Nguyen, V.T., Dang, T. (2020). Interface Design for HCI Classroom: From Learners’ Perspective. In: Bebis, G., et al. Advances in Visual Computing. ISVC 2020. Lecture Notes in Computer Science(), vol 12510. Springer, Cham. https://doi.org/10.1007/978-3-030-64559-5_43

DOI: https://doi.org/10.1007/978-3-030-64559-5_43

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-64558-8

Online ISBN: 978-3-030-64559-5

eBook Packages: Computer Science (R0)