Abstract

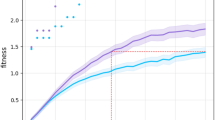

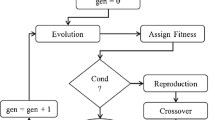

Evolutionary Algorithms have been applied in robotics over the last quarter of a century to simultaneously evolve robot body morphology and controller. However, the literature shows that in this area, one is still unable to generate robots that perform better than conventional manual designs, even for simple tasks. The main hindrance to satisfactory evolution is noted to be the poor controllers generated as a result of the simultaneous variation of the morphology and controller. Because the controller is a product of pure evolution, it is not given a chance to improve before fitness is calculated, which is the equivalent of reproducing immediately after birth in biological evolution. Therefore, to improve co-evolution and to bring artificial robot evolution a step closer to biological evolution, this paper introduces the Reinforced Co-evolution Algorithm (ReCoAl), a hybrid of an Evolutionary Algorithm and a Reinforcement Learning (RL) algorithm. It combines the evolutionary and learning processes found in nature to co-evolve robot morphology and controller. ReCoAl works by allowing a direct policy-gradient-based RL algorithm to improve the controller of an evolved robot, so that it better utilises the available morphological resources before fitness evaluation. ReCoAl is tested by evolving mobile robots to perform navigation and obstacle avoidance. The findings indicate that the controller learning process has both positive and negative effects on the progress of evolution, similar to observations in evolutionary biology. It is also shown how, depending on the effectiveness of the learning algorithm, the evolver generates robots with similar fitness in different generations.
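The learn-then-evaluate loop described in the abstract can be illustrated with a minimal toy sketch. It is not the authors' implementation. The scalar "morphology" `m`, the single controller parameter `w`, the `reward` function, all constants, and the names `learn` and `evolve` are hypothetical stand-ins chosen for illustration. The learner is a plain REINFORCE update on the mean of a Gaussian policy, used here as one simple example of a direct policy gradient method. The sketch also writes the learned controller back into the genome, which is an assumption, since the abstract does not say whether learned behaviour is inherited.

```python
import random

SIGMA = 0.3            # exploration noise of the Gaussian policy (assumed)
M_RANGE = (0.5, 2.0)   # allowed toy morphology values (assumed)
W_RANGE = (-3.0, 3.0)  # allowed controller values (assumed)


def clip(x, lo, hi):
    return max(lo, min(hi, x))


def reward(m, a):
    """Hypothetical task: drive m * a towards 1 with a small action cost."""
    return -(m * a - 1.0) ** 2 - 0.1 * a ** 2


def learn(m, w, rng, steps=200, lr=0.005):
    """Lifetime learning: REINFORCE on the policy mean w, action a ~ N(w, SIGMA)."""
    baseline = reward(m, w)
    for _ in range(steps):
        a = rng.gauss(w, SIGMA)
        r = reward(m, a)
        # Gradient of log N(a; w, SIGMA) with respect to w is (a - w) / SIGMA**2.
        w = clip(w + lr * (r - baseline) * (a - w) / SIGMA ** 2, *W_RANGE)
        baseline += 0.1 * (r - baseline)  # running-average baseline
    return w


def evolve(generations=20, pop_size=10, learn_steps=200, seed=0):
    """Co-evolve (m, w) pairs; pop_size is assumed to be even."""
    rng = random.Random(seed)
    pop = [(rng.uniform(*M_RANGE), rng.uniform(-1.0, 1.0)) for _ in range(pop_size)]
    best = None
    for _ in range(generations):
        scored = []
        for m, w in pop:
            w = learn(m, w, rng, steps=learn_steps)  # controller learns first
            scored.append((reward(m, w), m, w))      # fitness is evaluated afterwards
        scored.sort(reverse=True)
        if best is None or scored[0][0] > best[0]:
            best = scored[0]
        # Truncation selection: keep the top half, add one mutated child per parent.
        pop = []
        for _, m, w in scored[: pop_size // 2]:
            pop.append((m, w))
            child_m = clip(m + rng.gauss(0.0, 0.1), *M_RANGE)
            child_w = clip(w + rng.gauss(0.0, 0.1), *W_RANGE)
            pop.append((child_m, child_w))
    return best  # (fitness, morphology, controller)


if __name__ == "__main__":
    print("with learning:   ", evolve(learn_steps=200))
    print("without learning:", evolve(learn_steps=0))
```

Setting `learn_steps=0` turns the learner off and reduces the loop to pure evolution, which gives a rough way to compare the two regimes in this toy setting. Nothing about the paper's results should be inferred from the numbers it prints.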

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Prabhu, S.G.R., Kyberd, P.J., Melis, W.J.C., Wetherall, J.C. (2021). Does Lifelong Learning Affect Mobile Robot Evolution?. In: Matoušek, R., Kůdela, J. (eds) Recent Advances in Soft Computing and Cybernetics. Studies in Fuzziness and Soft Computing, vol 403. Springer, Cham. https://doi.org/10.1007/978-3-030-61659-5_11

DOI: https://doi.org/10.1007/978-3-030-61659-5_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-61658-8

Online ISBN: 978-3-030-61659-5

eBook Packages: Intelligent Technologies and Robotics (R0)