Abstract

On-road object tracking is a critical module for both Advanced Driver Assistance Systems (ADAS) and autonomous vehicles. This function is commonly achieved with the on-board sensors of a single vehicle, such as a camera or LiDAR. Considering the low cost and wide availability of optical cameras, we propose a simple image segmentation-based on-road object tracking model. Unlike detection-based tracking with bounding boxes, our model improves tracking performance in three ways: 1) Positional Normalization (PONO) features are used together with common convolutional layers to enhance target outlines; 2) the inter-frame correlation of each target used for tracking relies on masks, which reduces the influence of the background around the targets; 3) a bidirectional LSTM module that captures temporal correlation performs forward and reverse matching of targets in consecutive frames. We evaluate the model on the KITTI MOTS (Multi-Object Tracking and Segmentation) task, which was collected in outdoor environments for autonomous vehicles. Results show that our model is three times faster than Track R-CNN with a slight drop in sMOTSA, making it more suitable for deployment on low-power vehicular edge computing equipment.

Supported by Hangzhou Innovation Institution, Beihang University.


1 Introduction

Multi-object tracking (MOT) in outdoor environments is a basic and challenging computer vision task [1,2,3]. It has a wide range of application scenarios and practical requirements, such as security monitoring, industrial production, precision guidance, aerial warning, and transportation [4]. In the era of big data, face recognition systems, satellites, surveillance cameras, vehicle-mounted systems, and similar platforms provide rich data sources for research [5] and have greatly promoted the development of related technologies.

Because the definition of tracking is unclear for partially visible, occluded, or cropped targets, and because well-defined, organized datasets and evaluation metrics were lacking, earlier tracking systems lacked comprehensive evaluation. Only with the emergence of large-scale labeled datasets [6,7,8] in recent years have multi-target tracking algorithms and models been able to develop rapidly [9]. However, most MOT methods are designed for data collected from fixed scenes or by static sensors.

With the rapid development of sensor technology, big data processing, and artificial intelligence, autonomous driving technology, which can improve transportation efficiency, traffic safety, and travel convenience, has received widespread attention in academia and industry in recent years [10]. At the same time, complex road environments, limited vehicle computing platforms, and highly random obstacles pose challenges to autonomous vehicle perception systems. MOT is one of the most basic and critical perception modules for autonomous vehicles [11, 12].

Autonomous vehicles are often equipped with rich sensors to obtain information about the surrounding environment. Optical cameras are the most commonly used sensors, and vision-based MOT has been widely studied [13]. Recent artificial intelligence solutions build MOT models with deep learning and big data [14]. However, convolutional neural network models rely on high-performance graphics cards, which makes deploying them in a vehicle a challenge. It is therefore necessary to study tracking algorithms that balance speed and accuracy.

In this paper, we propose a vision-based, one-stage MOT model for autonomous vehicles. Our tracking architecture is modified from Track R-CNN [3]. We first combine Positional Normalization (PONO) [15] features with a lightweight CNN backbone to refine object segmentation. PONO combines the first and second moments of features, which capture structural information. A bidirectional Long Short-Term Memory (Bi-LSTM) module is then used to further enhance the association information of targets across frames. Finally, the segmentation masks of the targets reduce the influence of the background, such as occluding objects and similar-looking background. The final model achieves competitive tracking performance while processing video stream data more efficiently. The contributions of this paper are summarized as follows (see Note 1):

- We present a lightweight segmentation-based multi-object tracking model for autonomous vehicle platforms.
- The model combines PONO features and a Bi-LSTM module to capture spatio-temporal features of targets from consecutive frames for better tracking.
- We evaluate the proposed model on the KITTI Multi-Object Tracking and Segmentation (MOTS) dataset.

The rest of this paper is organized as follows. Related work on deep learning-based tracking models is summarized in Sect. 2. We present the segmentation-based tracking model architecture in Sect. 3 and evaluation results on the KITTI MOTS dataset in Sect. 4. Section 5 concludes the paper and discusses possible improvements.

2 Related Works

Most current object tracking algorithms follow the tracking-by-detection paradigm: they detect targets frame by frame and use a matching algorithm to assign the same track ID to the same object. The whole process consists of three main steps: detection, feature extraction/motion prediction, and association. The detection module first finds all possible targets in each frame. Next, the second module extracts a feature set for each target based on the detection results. Finally, the association module matches targets with similar features or performs motion estimation based on inter-frame information.

Deep learning-based trackers mainly borrow directly from object detection models [16,17,18], because convolutional neural networks can automatically extract robust features for detection instead of relying on hand-crafted or simple statistical features. The detection results from consecutive frames are then passed to different trackers, such as correlation filters [19,20,21], Kalman filters [22,23,24], clustering algorithms [25, 26], the Hungarian algorithm [27], or optical flow [28], for ID matching.
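To make the association step concrete, the sketch below links detections in a new frame to existing tracks by bounding-box IoU. It uses greedy highest-IoU-first matching, which is a simpler stand-in for the optimal assignment computed by the Hungarian algorithm [27]; the function names and the 0.3 IoU threshold are illustrative assumptions, not taken from any of the cited trackers.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x0, y0, x1, y1)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_associate(tracks, detections, min_iou=0.3):
    """Assign a track ID to every detection in the current frame.

    tracks: dict mapping track ID -> box from the previous frame.
    detections: list of boxes detected in the current frame.
    Returns one track ID per detection; unmatched detections start new tracks.
    """
    # All (IoU, track ID, detection index) candidates, best overlap first
    candidates = sorted(
        ((iou(box, det), tid, di)
         for tid, box in tracks.items()
         for di, det in enumerate(detections)),
        reverse=True,
    )
    assigned = [None] * len(detections)
    used_tracks = set()
    for score, tid, di in candidates:
        if score < min_iou:
            break  # every remaining candidate overlaps even less
        if tid in used_tracks or assigned[di] is not None:
            continue
        assigned[di] = tid
        used_tracks.add(tid)
    # Unmatched detections receive fresh track IDs
    next_id = max(tracks, default=0) + 1
    for di, tid in enumerate(assigned):
        if tid is None:
            assigned[di] = next_id
            next_id += 1
    return assigned


previous = {1: (0, 0, 10, 10), 2: (20, 20, 30, 30)}
current = [(21, 21, 31, 31), (1, 1, 11, 11), (50, 50, 60, 60)]
print(greedy_associate(previous, current))  # [2, 1, 3]
```

Greedy matching can make a locally best choice that blocks a better global assignment, which is why optimal solvers such as the Hungarian algorithm are preferred when targets are crowded.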

As mentioned before, deep learning models are mainly used to solve the detection step, and both one-stage and two-stage detectors are used. The 2D bounding box (BBox) [22, 23, 29] is a common and efficient way to describe the results. In static scenes and top-view applications, such as security monitoring [30] and face recognition, a 2D BBox can capture the main outline of a target. However, for occluded and close-range targets, such as when following a bus or waiting at traffic lights in crowded traffic, the BBox may contain other objects in the background. This has little effect on classification and detection, but it degrades the characterization of the target and can cause matching failures [3]. Segmentation-based tracking uses a mask for each target and can significantly reduce interference from the background. Moreover, separating detection from feature extraction also reduces the overall efficiency of the model.
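A small numerical illustration of why a box-based target descriptor is contaminated by background while a mask-based one is not: average-pooling a feature map over a box mixes in background activations, whereas pooling over the mask does not. The toy two-channel feature map (channel 0 responds to the object, channel 1 to the background) and the helper names are hypothetical.

```python
import numpy as np


def box_pool(features, box):
    """Mean feature vector inside box (x0, y0, x1, y1); features has shape (C, H, W)."""
    x0, y0, x1, y1 = box
    return features[:, y0:y1, x0:x1].mean(axis=(1, 2))


def mask_pool(features, mask):
    """Mean feature vector over the pixels where the boolean (H, W) mask is True."""
    return features[:, mask].mean(axis=1)


# Toy 6x6 scene: a triangular object inside a 4x4 box, background elsewhere
mask = np.zeros((6, 6), dtype=bool)
mask[1:5, 1:5] = np.tri(4, dtype=bool)   # 10 object pixels
features = np.zeros((2, 6, 6))
features[0][mask] = 1.0                  # channel 0 fires on the object
features[1][~mask] = 1.0                 # channel 1 fires on the background

print(box_pool(features, (1, 1, 5, 5)))  # [0.625 0.375]
print(mask_pool(features, mask))         # [1. 0.]
```

Here 6 of the 16 box pixels are background, so 37.5% of the box-pooled descriptor comes from the background channel, while the mask-pooled descriptor describes only the object.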

Current solutions avoid segmentation networks mainly for the following reasons. Segmentation models are usually very large, especially for multi-channel outputs, which reduces processing efficiency. Datasets with segmentation annotations are also very limited. In addition, traditional features, such as HOG, SIFT, and DAISY, cannot be directly computed on irregular masks.

To overcome these problems, several studies perform end-to-end tracking and use convolutional features for both detection and target encoding [3, 8, 31] to improve model efficiency. Moreover, when combined with a segmentation model, the convolutional encoding of the target mask is also more accurate.

The Siamese network is another CNN-based tracking solution; it consists of two parallel branches with different purposes: a search branch responsible for high-level semantic representation over a global area, and a detection branch for low-level, fine-grained representation over a local area. The latter branch is often trained offline for predefined targets and shares its weights with its twin branch. Fully convolutional Siamese trackers can achieve real-time performance, but because they do not fully exploit semantic and object-level information, they are often less accurate than other state-of-the-art methods. Subsequent research has modified the Siamese network in different ways, such as constructing cascaded region proposals from convolutional features at different layers of the Siamese network [32], combining it with a Graph Convolutional Network (GCN) [33], increasing network depth [34], and refining matches at different stages [35].
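The core operation of fully convolutional Siamese trackers is a cross-correlation of the template (exemplar) features over the search-region features; the peak of the response map gives the target location. The sketch below shows that operation on random feature maps with plain NumPy loops. The array shapes are hypothetical, and real Siamese trackers compute both feature maps with a shared CNN and run the correlation as a convolution rather than with Python loops.

```python
import numpy as np


def cross_correlate(search, template):
    """Valid cross-correlation of a (C, Ht, Wt) template over a (C, Hs, Ws) search map."""
    c, hs, ws = search.shape
    ct, ht, wt = template.shape
    if c != ct:
        raise ValueError("search and template must have the same number of channels")
    response = np.empty((hs - ht + 1, ws - wt + 1))
    for i in range(response.shape[0]):
        for j in range(response.shape[1]):
            # Inner product between the template and the window at (i, j)
            response[i, j] = np.sum(search[:, i:i + ht, j:j + wt] * template)
    return response


def peak_location(response):
    """(row, col) of the maximum response."""
    row, col = np.unravel_index(np.argmax(response), response.shape)
    return int(row), int(col)


rng = np.random.default_rng(0)
search = rng.normal(size=(8, 16, 16))
template = search[:, 5:9, 7:11].copy()  # plant the target at row 5, col 7
response = cross_correlate(search, template)
print(response.shape)           # (13, 13)
print(peak_location(response))  # (5, 7)
```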

Temporal information is also important in tracking, and the simplest way to exploit it is to add an LSTM module [36]. Track R-CNN [3] also used an LSTM in its model but found no substantial improvement. Other works [30, 37] introduce a Bi-LSTM to explore target matching over both forward and reverse image sequences. Wang et al. [38] designed an unsupervised deep tracking framework that computes a consistency loss for network training from forward and backward image sequences.

Building on previous work, our model uses PONO features and segmentation to improve the input representation and detection results, and a Bi-LSTM to capture temporal features.

3 Proposed Model

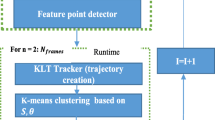

The architecture of the proposed model is presented in Fig. 1; the main workflow is borrowed from Track R-CNN [3]. Like Track R-CNN, we embed detection, segmentation, target feature extraction, and inter-frame matching into a single CNN workflow. We first use a lightweight ResNet50 [39] backbone combined with the outline-enhancing PONO module to capture the spatial association of targets in consecutive frames. Subsequences of different lengths are also evaluated. A Bi-LSTM module that extracts temporal information is attached after the backbone. This module learns inter-frame relationships in the forward and backward directions, and each gate state exchanges information between the two parallel branches. The whole backbone combines spatio-temporal features for region proposal and generates a mask, a classification score, and an association vector for each target. All of this information is used to link targets across frames into tracks over time.

3.1 ResNet50 with PONO

To reduce the total number of floating-point operations (FLOPs) of the model, we use ResNet50 as the spatial-feature backbone. However, a shallower network reduces the model's fitting ability, especially for segmentation tasks. We therefore combine Positional Normalization features with ResNet50. Unlike previous feature normalization methods (e.g., Batch, Group, Layer, or Instance Normalization), PONO not only benefits network convergence but also provides clear structural information about the input images, as shown in Fig. 2 (top). PONO achieves this by normalizing over the channels at each pixel location; the extracted statistics are position dependent and reveal the structural information at that layer, as given in Eq. 1.
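Following the definition of PONO in [15], the per-position statistics of Eq. 1 can be written as below, with the mean and standard deviation taken across the C channels at each position (w, h) of sample b:

\[
\mu_{w,h,b} = \frac{1}{C}\sum_{c=1}^{C} X_{w,h,c,b}, \qquad
\sigma_{w,h,b} = \sqrt{\frac{1}{C}\sum_{c=1}^{C}\left(X_{w,h,c,b}-\mu_{w,h,b}\right)^{2} + \epsilon},
\]

and the normalized activation is \(\hat{X}_{w,h,c,b} = (X_{w,h,c,b}-\mu_{w,h,b})/\sigma_{w,h,b}\).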

In Eq. 1, \(\mu _{w,h,b}\) and \(\sigma _{w,h,b}\) are the mean and standard deviation (the first and second moments) of the activations across channels, and \(\epsilon \,=\,10^{-5}\) is a small constant. Here w, h, c, and b index the width, height, channel, and batch dimensions of the input feature map of the current layer, and X is the activation. Usually, after normalization is applied in a layer, the two extracted statistics are discarded. PONO instead treats them as single-channel feature maps with the same spatial size as the input and combines them with the convolutional features as the final output. The added computation is very small, yet the outline information is pronounced and can be used to assist segmentation. ResNet50 can be regarded as a texture encoder, while PONO can be regarded as an outline encoder. Figure 2 shows an example of how a common convolutional layer and a three-layer residual module are combined with a PONO layer.
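A minimal NumPy sketch of the PONO statistics and of the combination step described above. It assumes a (B, C, H, W) array layout rather than the (w, h, c, b) index order used in the text, and it assumes the combination is a channel-wise concatenation of the two moment maps with the normalized features; the original PONO paper [15] instead re-injects the moments later in the network, so the exact fusion used in this model may differ. The function names are hypothetical.

```python
import numpy as np


def pono(x, eps=1e-5):
    """Positional normalization: normalize across channels at every (h, w) location.

    x has shape (B, C, H, W). Returns the normalized activations together with the
    per-position mean and standard deviation, each of shape (B, 1, H, W).
    """
    mean = x.mean(axis=1, keepdims=True)
    std = np.sqrt(x.var(axis=1, keepdims=True) + eps)  # population variance (1/C)
    return (x - mean) / std, mean, std


def pono_with_moments(x, eps=1e-5):
    """Hypothetical fusion: keep the moments as two extra single-channel feature maps."""
    normalized, mean, std = pono(x, eps)
    return np.concatenate([normalized, mean, std], axis=1)


rng = np.random.default_rng(0)
activations = rng.normal(loc=2.0, scale=3.0, size=(2, 64, 8, 8))
fused = pono_with_moments(activations)
print(fused.shape)  # (2, 66, 8, 8)
```

Computing the two statistics is a single reduction over the channels per pixel, which is small next to the cost of a convolution; this matches the remark that PONO adds little computation.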

3.2 Bi-LSTM

Sequence models built with RNN or LSTM modules can capture temporal information; however, previous tracking methods only consider the forward order. If we treat the motion of vehicles and pedestrians as an event, each frame is highly related to its context states in both the forward and backward directions. Several human pose estimation and tracking methods [37] use a Bi-LSTM to encode the temporal state of a target. Instead of stacked LSTMs, we use a single Bi-LSTM unit to encode both the forward and backward states of on-road objects as temporal features. We attach the Bi-LSTM module after the convolutional backbone, and, to ensure effective LSTM training, we use the natural order of the frame sequence for training instead of random sampling.
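The sketch below illustrates the bidirectional idea with a plain (non-convolutional) LSTM in NumPy: one LSTM reads the per-frame features in natural order, a second reads them in reverse, and the backward states are flipped back so that each frame's output concatenates past and future context. It does not reproduce the convolutional Bi-LSTM or the gate-level information exchange between branches described for this model; the dimensions, random initialization, and class and function names are assumptions for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class LSTM:
    """Single-layer LSTM with input, forget, cell and output gates."""

    def __init__(self, input_dim, hidden_dim, rng):
        scale = 1.0 / np.sqrt(hidden_dim)
        # Weights of the four gates stacked into one matrix
        self.W = rng.uniform(-scale, scale, size=(4 * hidden_dim, input_dim + hidden_dim))
        self.b = np.zeros(4 * hidden_dim)
        self.hidden_dim = hidden_dim

    def run(self, xs):
        """Process xs of shape (T, input_dim); return hidden states of shape (T, hidden_dim)."""
        h = np.zeros(self.hidden_dim)
        c = np.zeros(self.hidden_dim)
        outputs = []
        for x in xs:
            z = self.W @ np.concatenate([x, h]) + self.b
            i, f, g, o = np.split(z, 4)
            c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
            h = sigmoid(o) * np.tanh(c)
            outputs.append(h)
        return np.stack(outputs)


def bilstm(xs, forward, backward):
    """Concatenate forward states with backward states re-aligned to frame order."""
    h_fwd = forward.run(xs)
    h_bwd = backward.run(xs[::-1])[::-1]
    return np.concatenate([h_fwd, h_bwd], axis=1)


rng = np.random.default_rng(0)
frames = rng.normal(size=(5, 16))  # 5 frames, one 16-d feature vector each
fwd, bwd = LSTM(16, 8, rng), LSTM(16, 8, rng)
out = bilstm(frames, fwd, bwd)
print(out.shape)  # (5, 16)
```

Changing the last frame leaves the forward state of the first frame untouched but alters its backward state, which is exactly the future context a unidirectional LSTM cannot provide.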

3.3 Multi-task Region Proposal

Since the previous two backbone modules generate spatio-temporal features, the final region proposal stage of our model directly borrows the multi-task network from Track R-CNN [3, 30, 37]. It contains three branches: mask-based segmentation, classification, and association vector generation. The association vector has a fixed length of 128, is generated by a fully connected layer from the mask, and is used for tracking with the Hungarian algorithm.
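The association step can be sketched as follows: compute pairwise Euclidean distances between the association vectors of targets in frames T and T+1, find the one-to-one assignment with the minimum total distance (the problem the Hungarian algorithm solves), and reject pairs whose distance exceeds a threshold. For brevity, this sketch finds the optimum by exhaustive search, which is only practical for a handful of targets; a polynomial-time solver such as SciPy's `scipy.optimize.linear_sum_assignment` would be used in practice. The 3-dimensional vectors (instead of 128), the 1.0 distance threshold, and the function name are illustrative assumptions.

```python
import itertools
import math


def match_embeddings(prev_vecs, curr_vecs, max_dist=1.0):
    """Return (prev_index, curr_index) pairs of the minimum-total-distance matching.

    Exhaustive search over one-to-one assignments of the smaller set into the
    larger one; pairs farther apart than max_dist are dropped afterwards.
    """
    cost = [[math.dist(p, c) for c in curr_vecs] for p in prev_vecs]
    n_prev, n_curr = len(prev_vecs), len(curr_vecs)
    best_pairs, best_total = [], math.inf
    if n_prev <= n_curr:
        # Each previous target picks a distinct current target
        for cols in itertools.permutations(range(n_curr), n_prev):
            pairs = list(enumerate(cols))
            total = sum(cost[r][c] for r, c in pairs)
            if total < best_total:
                best_pairs, best_total = pairs, total
    else:
        # Each current target picks a distinct previous target
        for rows in itertools.permutations(range(n_prev), n_curr):
            pairs = [(r, c) for c, r in enumerate(rows)]
            total = sum(cost[r][c] for r, c in pairs)
            if total < best_total:
                best_pairs, best_total = pairs, total
    return sorted((r, c) for r, c in best_pairs if cost[r][c] <= max_dist)


prev_frame = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (5.0, 5.0, 5.0)]
curr_frame = [(1.1, 1.0, 1.0), (0.0, 0.1, 0.0), (9.0, 9.0, 9.0)]
print(match_embeddings(prev_frame, curr_frame))  # [(0, 1), (1, 0)]
```

The third target is still assigned by the optimal matching, but its distance (about 6.9) exceeds the threshold, so it is treated as a lost track rather than forced onto an unrelated object.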

The whole model is trained on the KITTI MOTS Challenge dataset. This dataset extends the KITTI tracking dataset and contains 21 labeled sequences, of which sequences {0, 1, 3, 4, 5, 9, 11, 12, 15, 17, 19, 20} are used for training and sequences {2, 6, 7, 8, 10, 13, 14, 16, 18} for validation. For both training and validation, we feed several consecutive frames at a time as model input, using a sliding window.
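The data split and the sliding-window input described above can be sketched as follows. The sequence IDs are those listed in the text; the window length and stride are hypothetical parameters (the model was evaluated with subsequences of different lengths), and the generator keeps frames in their natural order, as required for LSTM training.

```python
TRAIN_SEQUENCES = [0, 1, 3, 4, 5, 9, 11, 12, 15, 17, 19, 20]
VAL_SEQUENCES = [2, 6, 7, 8, 10, 13, 14, 16, 18]


def sliding_windows(num_frames, window, stride=1):
    """Yield lists of consecutive frame indices, in natural order, covering a sequence."""
    if window < 1 or stride < 1:
        raise ValueError("window and stride must be positive")
    for start in range(0, num_frames - window + 1, stride):
        yield list(range(start, start + window))


# The two splits are disjoint and together cover all 21 labeled sequences
assert not set(TRAIN_SEQUENCES) & set(VAL_SEQUENCES)
assert sorted(TRAIN_SEQUENCES + VAL_SEQUENCES) == list(range(21))

print(list(sliding_windows(6, window=3, stride=2)))  # [[0, 1, 2], [2, 3, 4]]
```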

4 Experiments

We first quantify the background noise that bounding boxes introduce into target detection in an open-road environment. Figure 3 shows two examples of bounding box-based and mask-based detection; the bounding boxes clearly include background noise and overlapping vehicles.

Taking the KITTI MOTS dataset as an example, we evaluate the pixel noise introduced by bounding boxes by comparing them with the mask labels in Table 1. We compute the mask and bounding box pixels for cars and pedestrians separately according to Eq. 2, where the cumulative pixel count (CP) is obtained by summing the pixels of all masks/bounding boxes of a class (car/ped) over the tracking IDs (t) in each sequence. The mask and bounding box columns denote the cumulative pixels (in megapixels) of each target category in the 21 sequences. Seq 17 contains no cars, and Seqs 3, 5, 6, 8, 18, and 20 contain no pedestrians.

Table 1 shows that the mask/BBox ratio ranges from 0.12 to 0.82 for cars and from 0.23 to 0.61 for pedestrians. The average m/b over the 21 sequences is 0.28 for cars and 0.31 for pedestrians. The smaller the ratio, the more background noise the BBox introduces. Pedestrians are affected more than cars, which may lead to poor pedestrian tracking results when BBoxes are used. The overall ratio ranges from 0.13 to 0.65, and only 6 of the 21 sequences have a ratio larger than 0.5.
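The mask-to-box (m/b) ratio in Table 1 can be computed as sketched below: accumulate the mask pixels and the bounding-box pixels of one class over all tracked objects and frames of a sequence, then divide. This sketch assumes each box is the tight bounding box of its mask and uses a hypothetical in-memory annotation format of (class name, track ID, boolean mask) tuples; the actual KITTI MOTS annotations store masks run-length encoded, so they would have to be decoded first.

```python
import numpy as np


def tight_box_area(mask):
    """Pixel area of the tightest axis-aligned box around a non-empty boolean mask."""
    rows, cols = np.nonzero(mask)
    return int((rows.max() - rows.min() + 1) * (cols.max() - cols.min() + 1))


def mask_box_ratio(annotations, cls):
    """Cumulative mask pixels / cumulative box pixels for one class, or None if absent.

    annotations: iterable of (class_name, track_id, mask) for every object in every frame.
    """
    mask_px = box_px = 0
    for name, _track_id, mask in annotations:
        if name != cls or not mask.any():
            continue
        mask_px += int(mask.sum())
        box_px += tight_box_area(mask)
    return mask_px / box_px if box_px else None


triangle = np.tri(4, dtype=bool)         # 10 of its 16 box pixels are object
rectangle = np.ones((2, 3), dtype=bool)  # fills its box exactly
objects = [("car", 1, triangle), ("car", 2, rectangle)]
print(mask_box_ratio(objects, "car"))         # 16 / 22, about 0.727
print(mask_box_ratio(objects, "pedestrian"))  # None
```

Returning `None` for an absent class mirrors the empty cells in Table 1 for sequences without cars or pedestrians.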

We then train and evaluate the proposed model in the same way as Track R-CNN [3]; the only difference is our hardware. All experiments are run on a GPU server with an NVIDIA RTX 2080 Ti GPU (11.3 Gbps), 32 GB of memory, and an Intel Core i9-9900K CPU. During training, the maximum batch size we can set is 7 due to the GPU memory limit; the rest of the configuration is basically consistent with the original experiment [3].

MOTSA (multi-object tracking and segmentation accuracy), MOTSP (mask-based multi-object tracking and segmentation precision), and sMOTSA (soft multi-object tracking and segmentation accuracy) [3] are used to evaluate the tracking model and are calculated as in Eq. 3. TP is the set of hypothesized masks that are mapped to a ground truth mask, and \(\widetilde{TP}\) is its soft count, i.e., the sum of the IoUs between the true positive masks and their matched ground truth masks. FP is the set of hypothesized masks that are not mapped to any ground truth mask. FN is the set of ground truth masks that are not covered by any hypothesized mask. M is the set of ground truth masks. For a ground truth mask m, pre(m) denotes its latest tracked predecessor, or \(\emptyset \) if no tracked predecessor exists. The set IDS is defined as the set of ground truth masks whose predecessor was tracked with a different ID. MOTSA is the mask-based counterpart of MOTA and accounts for true positives, false positives, and ID switches. MOTSP is the mask-based multi-object tracking and segmentation precision. sMOTSA measures segmentation quality as well as detection and tracking quality.
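The three metrics can be computed from the matching counts as sketched below, following the definitions of Voigtlaender et al. [3]: \(\mathrm{MOTSA} = (|TP| - |FP| - |IDS|)/|M|\), \(\mathrm{MOTSP} = \widetilde{TP}/|TP|\), and \(\mathrm{sMOTSA} = (\widetilde{TP} - |FP| - |IDS|)/|M|\), where |M| is the number of ground truth masks. The sketch assumes the mask matching and ID-switch counting have already been done; the function name, its inputs, and the convention of returning 0 for MOTSP when there are no true positives are hypothetical.

```python
def mots_metrics(tp_ious, num_fp, num_ids, num_gt):
    """Return (MOTSA, MOTSP, sMOTSA).

    tp_ious: IoU of each true-positive hypothesis mask with its matched ground truth mask.
    num_fp:  number of false-positive hypothesis masks.
    num_ids: number of ID switches.
    num_gt:  number of ground truth masks |M| (true positives + false negatives).
    """
    num_tp = len(tp_ious)
    if num_gt <= 0 or num_tp > num_gt:
        raise ValueError("need |M| > 0 and |TP| <= |M|")
    soft_tp = sum(tp_ious)  # soft true-positive count
    motsa = (num_tp - num_fp - num_ids) / num_gt
    motsp = soft_tp / num_tp if num_tp else 0.0  # convention when |TP| = 0
    smotsa = (soft_tp - num_fp - num_ids) / num_gt
    return motsa, motsp, smotsa


# 5 ground truth masks: 4 matched, 1 missed, plus 1 false positive and 1 ID switch
print(mots_metrics([0.8, 0.9, 0.7, 0.6], num_fp=1, num_ids=1, num_gt=5))
# approximately (0.4, 0.75, 0.2)
```

Because sMOTSA replaces the hard count |TP| with the IoU-weighted \(\widetilde{TP}\), it is never larger than MOTSA and drops whenever mask quality drops, even if every target is tracked correctly.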


A batch size of 5 is used for all training and testing, as we found that a larger batch size slightly improves model performance without affecting FPS during testing. When the batch size exceeds 5, performance no longer improves, as shown in Table 2.

Table 3 compares different model settings and their performance. Two backbones (ResNet50 and ResNet101) are tested, and \(+\) means that the backbone is integrated with PONO features in each module. The backbone captures spatial features and is followed by a temporal module. Three temporal modules are compared in our work: 3DCNN, LSTM, and Bi-LSTM. At the end of the model, two tracking vector generation mechanisms are considered, i.e., generation from the RPN and from the mask.

At the current stage, we only evaluate sMOTSA and FPS (frames per second). A \(\surd \) denotes the modules selected for each setting, \(+\) denotes that PONO features are used, and \(\surd \surd \) means two stacked modules. As we focus on the tracking task, each time we randomly select a sequence during training and testing and use the default frame order as model input.

Configuration 1 is Track R-CNN [3], which combines ResNet101, a 3DCNN, and an RPN-based tracking vector. It achieves the highest sMOTSA for both cars and pedestrians, but reaches only 3.12 FPS on an NVIDIA Titan XP (12 GB), far from the basic 10 Hz requirement of autonomous driving systems. Replacing the backbone with ResNet50 increases the speed to 5.79 FPS (nearly twice the original value), but the sMOTSA drops to 68.1 and 37.3 for cars and pedestrians, respectively. When we then add PONO features to the ResNet50 backbone, the speed surprisingly reaches 10.29 FPS and the car sMOTSA increases by about 2%, but the pedestrian sMOTSA drops to 30.2. This suggests that PONO can substantially increase backbone speed and benefits large targets such as cars.
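The speed comparison can be checked against the 10 Hz requirement by converting FPS to per-frame latency, which gives a budget of 100 ms per frame. The FPS values below are those reported above; the Track R-CNN figure was measured on a different GPU, so the comparison is indicative only.

```python
FRAME_BUDGET_MS = 1000.0 / 10  # 10 Hz -> 100 ms per frame


def latency_ms(fps):
    """Per-frame processing time in milliseconds."""
    return 1000.0 / fps


reported_fps = {
    "Track R-CNN (ResNet101, 3DCNN)": 3.12,
    "ResNet50 backbone": 5.79,
    "ResNet50 + PONO": 10.29,
}
for name, fps in reported_fps.items():
    ms = latency_ms(fps)
    verdict = "meets" if ms <= FRAME_BUDGET_MS else "misses"
    print(f"{name}: {ms:.1f} ms/frame, {verdict} the 10 Hz budget")
```

Only the PONO variant stays under 100 ms per frame (about 97 ms), leaving little margin for the remaining perception and planning modules.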

Configurations 4, 5, and 6 show the performance of different temporal modules: in addition to the 3DCNN and LSTM, we add a Bi-LSTM to capture patterns in both the forward and backward directions. Compared with Configuration 2, the LSTM module extracts temporal features more efficiently than the 3DCNN, but its sMOTSA is much lower. Stacking two LSTMs performs no better than a single LSTM module and reduces the speed from 8.82 FPS to 6.61 FPS. The Bi-LSTM shows similar performance on cars but a much higher sMOTSA on pedestrians (42.4), and Configuration 6 achieves the highest speed among all configurations, 13.37 FPS. This suggests that the convolution-based Bi-LSTM can use reverse-order information to confirm the temporal characteristics of targets, greatly improving the efficiency of feature extraction and subsequent tracking.

We further adjust the weight of the temporal module. As this is not a main module, we only consider two values for the Bi-LSTM, 0.05 and 0.8. Comparing Configurations 6 and 7, when ResNet50 with PONO is used, the car sMOTSA further improves to 72.3 while the pedestrian sMOTSA drops to 34. When we then increase the weight of the temporal module to 0.8 in Configuration 8, the sMOTSA of both cars and pedestrians increases significantly, with no effect on FPS. In Configuration 9, the tracking vector is generated from the mask instead of the RPN; the sMOTSA rises to 74.9 for cars and 43.6 for pedestrians, and the speed drops by only 0.21 FPS. This is very close to Track R-CNN with the deeper ResNet101 backbone, while the FPS is more than three times higher, which meets the needs of autonomous driving systems. Table 4 lists the detailed tracking metrics of the above configurations for cars and pedestrians.

Figure 4 illustrates an example of ID matching based on mask-based tracking vectors: the upper image shows the segmentation results at time T, and the lower image shows the segmentation results at time \(T+1\). After segmentation, we use the tracking vectors in each frame to match track IDs with the Hungarian algorithm. Figure 4(a) shows the segmentation results in frames T and \(T+1\), while Figs. 4(b), 4(c), 4(d), 4(e), and 4(f) show the matching process for each target. Because vans and buses are ignored classes in the KITTI tracking dataset, the model does not track them during either training or testing. We also show the computed distances between the current target vectors (frame \(T+1\)) and the previous target vectors (frame T); each target's number and BBox share the same color. Our model successfully tracks four cars and one pedestrian, i.e., Car34, Ped83, Car44, Car52, and Car53, between the two frames.

This process can also be presented as a matching matrix of distances, as shown in Fig. 5. When each element on the main diagonal is the smallest in its row, i.e., the same target (track ID) has the shortest distance between adjacent frames, the model achieves the best matching result based on the tracking vector distances. The vector distances between all correctly matched targets are less than 1 (around 0.5).
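The diagonal condition can be checked directly on the distance matrix. If rows are the targets in frame T and columns are the same track IDs in frame T+1, and every diagonal entry is strictly the smallest in its row, then any other assignment pays strictly more in at least one row and no less in the others, so keeping each track ID is the unique optimal matching and the Hungarian algorithm returns it. The matrices below are made-up values for illustration, not those in Fig. 5, and the function name is hypothetical.

```python
import math


def identity_is_optimal(dist):
    """True if every diagonal entry of the n x n matrix is strictly the smallest in its row."""
    n = len(dist)
    return all(
        dist[i][i] < min((dist[i][j] for j in range(n) if j != i), default=math.inf)
        for i in range(n)
    )


consistent = [
    [0.4, 2.1, 3.0],
    [1.9, 0.6, 2.5],
    [2.8, 2.2, 0.5],
]
swapped = [
    [0.4, 2.1, 3.0],
    [1.9, 2.5, 0.6],
    [2.8, 0.5, 2.2],
]
print(identity_is_optimal(consistent))  # True
print(identity_is_optimal(swapped))     # False
```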

Figure 6 shows four examples of 2D tracking results from our final model. The gray trajectory lines are the ground truth computed from BBox centers, and the trajectory line with the same color as the mask is the prediction of our model. The predicted trajectories of the final model are very close to the ground truth in favorable conditions.
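The visual comparison in Fig. 6 could be quantified with a simple sketch that turns per-frame boxes into center trajectories and averages the center distance over the frames where both the ground truth and the prediction exist. Using the tight box of each predicted mask as the predicted box, and the mean center error itself, are assumptions for illustration; the text evaluates the trajectories only visually.

```python
import math


def box_center(box):
    """Center (x, y) of a box given as (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = box
    return ((x0 + x1) / 2.0, (y0 + y1) / 2.0)


def mean_center_error(gt_boxes, pred_boxes):
    """Mean Euclidean distance between ground truth and predicted box centers.

    gt_boxes, pred_boxes: dicts mapping frame index -> box for one track.
    Only frames present in both trajectories are compared.
    """
    common = sorted(set(gt_boxes) & set(pred_boxes))
    if not common:
        return math.nan
    return sum(
        math.dist(box_center(gt_boxes[f]), box_center(pred_boxes[f])) for f in common
    ) / len(common)


gt = {0: (0, 0, 10, 10), 1: (2, 0, 12, 10), 2: (4, 0, 14, 10)}
pred = {0: (0, 0, 10, 10), 1: (2, 0, 12, 14)}
print(mean_center_error(gt, pred))  # 1.0
```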

5 Conclusion

Cars and pedestrians are the two main moving targets in open street environments. Accurate tracking of dynamic targets is an important module of the perception system of autonomous vehicles and provides strong support for subsequent driving decision making and motion planning. To achieve accurate and efficient tracking of dynamic targets in open street areas, this paper presented a novel segmentation-based object tracking model. We use a lightweight ResNet50 backbone with a PONO module to generate spatial features, combined with a Bi-LSTM that generates temporal features from consecutive frames in forward and reverse orders. Generating the final tracking vector from the segmentation mask further improves tracking performance. Results on the public KITTI MOTS dataset show that the tracking performance of our model is slightly lower than that of the ResNet101-based Track R-CNN in MOTSA, MOTSP, and sMOTSA, but our model is three times faster and can be deployed on autonomous vehicles.

The current model still has some drawbacks. Tracking relies on the segmentation results, so when segmentation fails or is wrong, the entire system fails. Moreover, the current pedestrian tracking results are not yet good enough for safe use. We plan to address these problems with re-identification strategies in the future.

Notes

1. We provide the code online at https://github.com/XYunaaa/Fast-Segmentation-based-Object-Tracking-Model.

2.

References

Porzi, L., Hofinger, M., Ruiz, I., Serrat, J., Bulò, S.R., Kontschieder, P.: Learning multi-object tracking and segmentation from automatic annotations. arXiv preprint arXiv:1912.02096 (2019)

Osep, A., Voigtlaender, P., Weber, M., Luiten, J., Leibe, B.: 4D generic video object proposals. arXiv preprint arXiv:1901.09260 (2019)

Voigtlaender, P., et al.: MOTS: multi-object tracking and segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7942–7951 (2019)

Li, X., Hu, W., Shen, C., Zhang, Z., Dick, A., Van Den Hengel, A.: A survey of appearance models in visual object tracking. ACM Trans. Intell. Syst. Technol. (TIST) 4(4), 1–48 (2013)

Ward, J.S., Barker, A.: Undefined by data: a survey of big data definitions. arXiv preprint arXiv:1309.5821 (2013)

Leal-Taixé, L., Milan, A., Reid, I., Roth, S., Schindler, K.: MOTChallenge 2015: towards a benchmark for multi-target tracking. arXiv preprint arXiv:1504.01942 (2015)

Milan, A., Leal-Taixé, L., Reid, I., Roth, S., Schindler, K.: MOT16: a benchmark for multi-object tracking. arXiv preprint arXiv:1603.00831 (2016)

Chen, Y., Jing, L., Vahdani, E., Zhang, L., He, M., Tian, Y.: Multi-camera vehicle tracking and re-identification on AI city challenge 2019. In: Proceedings of CVPR Workshops (2019)

Milan, A., Schindler, K., Roth, S.: Challenges of ground truth evaluation of multi-target tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 735–742 (2013)

Shi, W., Alawieh, M.B., Li, X., Yu, H.: Algorithm and hardware implementation for visual perception system in autonomous vehicle: a survey. Integr. 59, 148–156 (2017)

Leal-Taixé, L., Milan, A., Schindler, K., Cremers, D., Reid, I., Roth, S.: Tracking the trackers: an analysis of the state of the art in multiple object tracking. arXiv preprint arXiv:1704.02781 (2017)

Ouyang, Z., Niu, J., Liu, Y., Guizani, M.: Deep CNN-based real-time traffic light detector for self-driving vehicles. IEEE Trans. Mob. Comput. 19(2), 300–313 (2019)

Luo, W., et al.: Multiple object tracking: a literature review. arXiv preprint arXiv:1409.7618 (2014)

Ciaparrone, G., Sánchez, F.L., Tabik, S., Troiano, L., Tagliaferri, R., Herrera, F.: Deep learning in video multi-object tracking: a survey. Neurocomputing 381, 61–88 (2020)

Li, B., Wu, F., Weinberger, K.Q., Belongie, S.: Positional normalization. In: Advances in Neural Information Processing Systems, pp. 1620–1632 (2019)

Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. In: Advances in Neural Information Processing Systems, pp. 91–99 (2015)

Redmon, J., Farhadi, A.: YOLOv3: an incremental improvement. arXiv preprint arXiv:1804.02767 (2018)

Szegedy, C., Ioffe, S., Vanhoucke, V., Alemi, A.A.: Inception-v4, Inception-ResNet and the impact of residual connections on learning. In: 31st AAAI Conference on Artificial Intelligence (2017)

Wang, L., Xu, L., Kim, M.Y., Rigazio, L., Yang, M.H.: Online multiple object tracking via flow and convolutional features. In: 2017 IEEE International Conference on Image Processing (ICIP), pp. 3630–3634. IEEE (2017)

Wang, Q., Zhang, L., Bertinetto, L., Hu, W., Torr, P.H.: Fast online object tracking and segmentation: a unifying approach. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1328–1338 (2019)

Zhao, D., Hao, F., Xiao, L., Tao, W., Dai, B.: Multi-object tracking with correlation filter for autonomous vehicle. Sensors 18(7), 2004 (2018)

Bewley, A., Ge, Z., Ott, L., Ramos, F., Upcroft, B.: Simple online and realtime tracking. In: 2016 IEEE International Conference on Image Processing (ICIP), pp. 3464–3468. IEEE (2016)

Yu, F., Li, W., Li, Q., Liu, Y., Shi, X., Yan, J.: POI: multiple object tracking with high performance detection and appearance feature. In: Hua, G., Jégou, H. (eds.) ECCV 2016. LNCS, vol. 9914, pp. 36–42. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-48881-3_3

Chen, J., Sheng, H., Zhang, Y., Xiong, Z.: Enhancing detection model for multiple hypothesis tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 18–27 (2017)

Tan, X., et al.: Multi-camera vehicle tracking and re-identification based on visual and spatial-temporal features. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 275–284 (2019)

Zhang, S., et al.: Tracking persons-of-interest via adaptive discriminative features. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9909, pp. 415–433. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46454-1_26

Kieritz, H., Hubner, W., Arens, M.: Joint detection and online multi-object tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 1459–1467 (2018)

Weinzaepfel, P., Revaud, J., Harchaoui, Z., Schmid, C.: DeepFlow: large displacement optical flow with deep matching. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1385–1392 (2013)

Danelljan, M., Bhat, G., Khan, F.S., Felsberg, M.: ATOM: accurate tracking by overlap maximization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4660–4669 (2019)

Maksai, A., Fua, P.: Eliminating exposure bias and metric mismatch in multiple object tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4639–4648 (2019)

Li, X., Ma, C., Wu, B., He, Z., Yang, M.H.: Target-aware deep tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1369–1378 (2019)

Fan, H., Ling, H.: Siamese cascaded region proposal networks for real-time visual tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7952–7961 (2019)

Gao, J., Zhang, T., Xu, C.: Graph convolutional tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4649–4659 (2019)

Li, B., Wu, W., Wang, Q., Zhang, F., Xing, J., Yan, J.: SiamRPN++: evolution of Siamese visual tracking with very deep networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4282–4291 (2019)

Wang, G., Luo, C., Xiong, Z., Zeng, W.: SPM-tracker: series-parallel matching for real-time visual object tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3643–3652 (2019)

Milan, A., Rezatofighi, S.H., Dick, A., Reid, I., Schindler, K.: Online multi-target tracking using recurrent neural networks. In: 31st AAAI Conference on Artificial Intelligence (2017)

Kim, C., Li, F., Rehg, J.M.: Multi-object tracking with neural gating using bilinear LSTM. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 200–215 (2018)

Wang, N., Song, Y., Ma, C., Zhou, W., Liu, W., Li, H.: Unsupervised deep tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1308–1317 (2019)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Acknowledgment

This work has been supported by National Natural Science Foundation of China (61772060, 61976012), Qianjiang Postdoctoral Foundation (2020-Y4-A-001), and CERNET Innovation Project (NGII20170315).


Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Dong, X., Niu, J., Cui, J., Fu, Z., Ouyang, Z. (2020). Fast Segmentation-Based Object Tracking Model for Autonomous Vehicles. In: Qiu, M. (eds) Algorithms and Architectures for Parallel Processing. ICA3PP 2020. Lecture Notes in Computer Science(), vol 12453. Springer, Cham. https://doi.org/10.1007/978-3-030-60239-0_18


DOI: https://doi.org/10.1007/978-3-030-60239-0_18


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-60238-3

Online ISBN: 978-3-030-60239-0

eBook Packages: Mathematics and Statistics (R0)