Abstract

The estimation of rockfall hazards is usually based only on hazards related to rockfall propagation. The rockfall failure hazard is not currently well defined, and only a few studies have truly addressed this topic. Here, we review the basics of slope stability assessment and summarize the standard methods used to assess susceptibility to rock mass failure, which are mainly based on techniques from the mining and tunneling industries. Most of them are qualitative, and many susceptibility scales have been described. Owing to increased computing power and high-resolution, true 3D topography, topographic analysis and standard kinematic tests have been adapted and improved to obtain rockfall susceptibility. Hazard assessments based on the power law are among the few ways to obtain a truly quantitative assessment of the rockfall failure hazard; however, they present some drawbacks that must be solved. The most promising avenues of research for rockfall failure hazards are linked to rock mass strength degradation, which is currently observed using high-resolution 3D monitoring of cyclic deformations with hysteresis. These movements are caused by groundwater circulation, thermal cycles, earthquakes, rainfall, etc. In conclusion, rockfall hazard assessment will be improved by a better understanding of these processes, in addition to the effect of chemical weathering.

Introduction

Here, we use the term “rockfall failure hazard” to describe the failure hazard in relation to the volume of rocks that will lead to rockfall, which includes any type of mechanism such as slides, wedges, and toppling (Hungr et al. 2014). To date, rockfall failure hazards have not been studied in great detail. Most rockfall hazard studies are mainly based on rockfall trajectory modeling, arbitrarily fixing the failure frequency. Nevertheless, there are methods that are designed to provide an assessment of the slope rock mass strength, such as slope mass rating (SMR) (Romana 1988). Some recent studies, using new technologies such as light detection and ranging (LiDAR), structure from motion (SfM) photogrammetry, thermal imaging, passive seismic monitoring and InSAR, allow us to investigate the hazard (frequency-volume relationship) (Hantz 2011; Williams et al. 2018), the loss of strength of rock instabilities (Levy et al. 2010) and potential fatigue processes (Rouyet et al. 2017). Furthermore, the impact of rainfall, freeze-thaw cycles (D’Amato et al. 2016) or thermal effects (Collins and Stock 2016) on rock fatigue or rockfall triggering is now increasingly being studied. It is also clear that even for limited volumes, there can be some precursory movements leading to failure (Royán et al. 2013; Kromer et al. 2017).

Here, we present the basic factors controlling rock slope stability and examine the potential external factors that can lead to failure, especially the loss of strength. Our review runs from the slope angle thresholds above which slopes are prone to rockfall to the observed strength degradation. The time dependence of this degradation is the ultimate objective of this research.

The Challenges of Rockfall Hazards

The main challenge is to improve the risk assessment of rockfall, which implies a better understanding of how rockfall hazards must be assessed.

The Rockfall Risk and Hazard

A rock instability (RI) is a potentially unstable rock compartment which is generally made up of several individual blocks of different volumes. The rockfall risk linked to several RIs on an object at risk W occupying the domain Χ can be written in a conceptual way (modified after Leroueil and Locat 1998; Jaboyedoff et al. 2001; Volkwein et al. 2011; Farvacque et al. 2019):

where the unit of risk is a cost per year or the number of casualties per year considering several RIs and the object at risk:

- W(Χ) the object-at-risk’s value or number of people located in the domain Χ, which is located in the potential impacted area;

- V(E(X), W(X)) is the vulnerability of the object (W(X)) at risk or the lethality of the person to the specific block intensity, i.e. energy E, at location X;

- Exp(Χ) is the exposure to a hazard, which means the presence of the object or a person in the domain Χ with value ranging from 0 to 1;

- H(RI,Χ,E(X)) dE is the incremental frequency for a range of intensity (energy) dE of the blocks coming from instability RI in domain Χ.

The rockfall hazard in domain X can be decomposed into two terms (Fig. 1) (Leroueil and Locat 1998; Jaboyedoff et al. 2001; Volkwein et al. 2011):

where λ(RI) is the frequency of the failure (= temporal frequency) of the blocks for a given range of volumes dV coming off from the RI. Pp(RI, Χ, E(V, X)|V) is the probability of propagation of one RI for a given range of volumes dV providing the probability for a given energy density E by all the blocks reaching Χ. Such formalism can be more refined, but these formulas provide a good framework to tackle risks and hazards. The present paper is related only to the assessment of λ(RI, V).
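The way these terms combine can be illustrated with a discretized sketch. It assumes, for illustration only, that the hazard per energy class is the sum over volume classes of the failure frequency times the propagation probability, and that the annual risk is the product of value, exposure and the vulnerability-weighted hazard. The function names, the class discretization and all numerical values are hypothetical and are not taken from the cited works.

```python
def hazard_by_energy(failure_freq, propagation_prob):
    """Hazard per energy class at location X (events per year).

    failure_freq[v]        : failure frequency lambda for volume class v (1/year)
    propagation_prob[v][e] : probability that a failure of volume class v
                             reaches X with an energy in class e
    Assumed discretization: H[e] = sum_v lambda[v] * Pp[v][e].
    """
    n_energy = len(propagation_prob[0])
    return [
        sum(lam * pp[e] for lam, pp in zip(failure_freq, propagation_prob))
        for e in range(n_energy)
    ]


def annual_risk(hazard, vulnerability, exposure, value):
    """Annual risk (cost per year) for one object at risk.

    Assumed form: value * exposure * sum_e H[e] * vulnerability[e].
    """
    return value * exposure * sum(h * v for h, v in zip(hazard, vulnerability))


# Hypothetical example: two volume classes, two energy classes.
lam = [0.1, 0.01]                      # failures per year
pp = [[0.5, 0.0], [0.3, 0.6]]          # propagation probabilities
hazard = hazard_by_energy(lam, pp)
risk = annual_risk(hazard, vulnerability=[0.2, 1.0], exposure=0.5, value=100000.0)
print(hazard, risk)
```

The sketch keeps the failure term and the propagation term in separate inputs, which mirrors the decomposition of the hazard into λ and Pp.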

Hazard Versus Susceptibility

The hazard, as indicated above, is deduced from a quantitative approach that provides the temporal frequency of an event of a given intensity. The intensity-frequency relationship is an example of such quantification. Formally, however, the intensity is defined at a given location; for rockfalls, it is the kinetic energy. For the rockfall source, the hazard is a temporal frequency that depends on volume.

The susceptibility is based on an empirical scale of the likelihood of a danger at a given location based on the rating of the predisposing factors and summarizing them in a relative hazard scale (Ferrari et al. 2016; Fell et al. 2008). It is often used for qualifying the rockfall failure hazard of the sources based on various choices of internal parameters (IPs) and external factors (EFs). For instance, the SMR provides a preliminary assessment of slope stability (Romana, 1988).

Site-Specific or Regional Assessment

The hazard assessment process is very different if it is dedicated to a full slope or a specific site (localized hazard). Regionally, it is based on average knowledge and statistics, while for specific instability, the main mechanisms of instability (Fig. 2) can be deduced, and more detailed investigations can be performed based on instability geometry, mechanical parameters tests, calculations, movements monitoring, etc. Here, we consider both types.

(adapted from: Hantz et al. 2003a)

Main rockfall mechanisms

Elements that Control the Rockfall Failure Hazard

The evolution with time of a slope system’s stability can be described in terms of internal parameters (IPs) and external factors (EFs) (Fig. 3). The IPs can be considered as functions that evolve under the effect of the EFs. To ideally characterize a potential instability, we need to identify (Volkwein et al. 2011): (1) the pre-failure processes and (2) the areas sensitive to rapid strength degradation leading to slope failure (Jaboyedoff et al. 2005; Leroueil and Locat, 1998). IPs can be summarized as proposed by Volkwein et al. (2011):

External factors and intrinsic parameters for rockfall stability and triggers (after Volkwein et al. 2011)

(a) Morphology: slope types (e.g. slope angle, height of slope, profile), exposure, type of relief (depending on the controlling erosive processes), etc.

(b) Geology: rock types and weathering, variability of the geological structure, bedding, type of deposit, folded zone, etc.

(c) Fracturing: joint sets, trace lengths, spacing, fracturing intensity, etc.

(d) Mechanical properties of rocks and soil: cohesion, friction angle, etc.

(e) Activity: movements or rockfall, etc.

(f) Hydrogeology: permeability, joint permeability, etc.

It must be emphasized that the joint sets or discontinuities are assumed to be the main features controlling the stability (Hoek and Bray, 1981), which is supported by the fact that more intensely fractured rock produces more rockfall events (Coe and Harp, 2007). As stated in Volkwein et al. (2011), the EF actions on IPs are mainly:

- gravitational effects;

- water circulation: hydrology or hydrogeology, climate, precipitation in the form of rainfall or snow, infiltration rates, groundwater;

- weathering;

- erosion;

- seismicity;

- active tectonics;

- freezing and thawing, permafrost, which are increasingly invoked to explain rockfall activities (Frayssines and Hantz, 2006; Matsuoka and Sakai, 1999; Matsuoka, 2008; Gruner, 2008);

- heat and cooling cycles by sun exposure (Collins and Stock, 2016);

- rainfall impact (D’Amato et al. 2016);

- nearby instabilities;

- human activities (anthropogenic factors);

- etc.

These non-exhaustive lists of IPs and EFs include the key parameters used by existing methods to assess the hazard of, or susceptibility to, rockfall failure.

Geotechnical Basics

To illustrate the parameters and factors controlling rock instabilities, the simple model of a sliding block permits us to understand the issues linked to the stability of rock slopes (Fig. 4). The factor of safety of a sliding block submitted to pore water pressure and seismic acceleration is given by (Wyllie, 2018):

Illustration of an idealized rockslide including back crack and pore water pressure (after Hoek and Bray, 1981)

where c is the cohesion, ϕ is the friction angle, kh is the ratio of the horizontal seismic acceleration to the gravitational acceleration g, α is the dip angle of the planar failure surface, β is the angle of the slope face, W is the weight of the sliding mass, U is the water force caused by the water pressure acting on the failure surface of area A, and V is the horizontal force applied by the water at the back of the instability within the back crack.
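To make the role of each parameter concrete, the following sketch computes a factor of safety for the sliding block. It assumes the usual pseudo-static limit-equilibrium form found in rock slope engineering textbooks, with the seismic force and the back-crack water force both acting horizontally out of the slope; it is not guaranteed to match the exact expression of the cited reference. The function name and the example values are hypothetical.

```python
import math


def sliding_block_fs(c, phi_deg, area, weight, alpha_deg, u=0.0, v=0.0, kh=0.0):
    """Factor of safety of a planar sliding block (assumed pseudo-static form).

    c         : cohesion on the failure surface (kPa)
    phi_deg   : friction angle (degrees)
    area      : area A of the failure surface (m2 per metre of width)
    weight    : weight W of the sliding mass (kN per metre of width)
    alpha_deg : dip angle of the failure surface (degrees)
    u         : uplift water force U on the failure surface (kN)
    v         : horizontal water force V in the back crack (kN)
    kh        : horizontal seismic coefficient (fraction of g)
    """
    a = math.radians(alpha_deg)
    # Force normal to the failure surface, reduced by seismic and water forces.
    normal = weight * (math.cos(a) - kh * math.sin(a)) - u - v * math.sin(a)
    # No frictional resistance if the block is lifted off the surface.
    resisting = c * area + max(normal, 0.0) * math.tan(math.radians(phi_deg))
    driving = weight * (math.sin(a) + kh * math.cos(a)) + v * math.cos(a)
    return resisting / driving


# Hypothetical block: compare dry, wet and wet + seismic conditions.
dry = sliding_block_fs(c=25.0, phi_deg=35.0, area=20.0, weight=5000.0, alpha_deg=40.0)
wet = sliding_block_fs(25.0, 35.0, 20.0, 5000.0, 40.0, u=800.0, v=200.0)
wet_seismic = sliding_block_fs(25.0, 35.0, 20.0, 5000.0, 40.0, u=800.0, v=200.0, kh=0.1)
print(dry, wet, wet_seismic)
```

For a dry, cohesionless block the expression reduces to tan ϕ / tan α, which is a convenient sanity check.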

This model clearly shows that if ϕ or c decreases, the stability is diminished. Increases in water pressure and seismic acceleration destabilize the slope. However, all EFs can influence the stability, and modifying the geometry of the rock mass may lead to important changes in the factor of safety. Hoek and Bray (1981) proposed a graph that illustrates the relationship between slope height and slope angle based on Eq. 3, assuming a dry slope and a back crack of depth z located so as to minimize the stability:

In addition, the dip angle of the failure surface follows a plane that maximises the cohesion assuming a fixed ϕ (Hoek and Bray, 1981):

Inserting the unit weight of the rock \( \gamma \) leads to:

Starting from the geometry in Fig. 5b, the slope height can be expressed by minimizing the stability with respect to the slope angle α to obtain the Culmann model (Taylor 1948):

These relationships permit cases to be back-analyzed in a simple way. They demonstrate the strong dependence of stability on slope height for steep slopes. Figure 5a also shows that unstable slopes can have a very high calculated factor of safety, which indicates that c and ϕ either evolve under EFs or are highly variable, and reflects the fact that no groundwater is considered in this simple model. There are other types of back-analyses that provide the apparent cohesion of discontinuities, knowing the dip angle of the failure plane, the friction angle and the height of the slope (Locat et al. 2000).

Furthermore, it is clear from Eq. 3 that groundwater can have a strong external effect, reducing the factor of safety by more than 30%. The earthquake effect can be assessed for sliding by a simple model based on the critical acceleration (Wilson and Keefer, 1985), and for specific cases, methods used to assess the failure hazard are often based on the Newmark (1965) method.
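The critical-acceleration idea mentioned above can be sketched as follows. The sketch assumes the commonly used Newmark-type expression a_c = (FS − 1) g sin α, where FS is the static factor of safety and α the dip of the sliding surface; whether the cited studies use exactly this form is an assumption. The function name and the example values are hypothetical.

```python
import math


def critical_acceleration(fs_static, alpha_deg, g=9.81):
    """Critical (yield) acceleration in m/s2 for a sliding block.

    Assumed Newmark-type form: a_c = (FS - 1) * g * sin(alpha).
    A block with FS <= 1 has no seismic reserve (a_c <= 0).
    """
    return (fs_static - 1.0) * g * math.sin(math.radians(alpha_deg))


# Hypothetical block with a static factor of safety of 1.5 on a 30 degree plane.
a_c = critical_acceleration(1.5, 30.0)
print(a_c, a_c / 9.81)  # in m/s2 and as a fraction of g
```

Ground motion exceeding this value would start to displace the block in a Newmark-type analysis; the lower the static factor of safety, the smaller the shaking needed.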

Field Surveys

The basic tool used to assess rockfall source hazard is the field survey. Experienced professionals can readily identify rock compartments by looking at a rock wall. Field observations are often the basis for many of the methods used to qualify hazard or susceptibility.

In many cases, detailed mapping is required to assess the stability of the source areas, such as for the “gorge du Tarn” in France, where a sub-horizontal carbonate platform is incised by a deep gorge (Pauly and Payany, 2002). The rock mass is fractured, and high rock columns are created by regional joint sets, which are not simple to characterize as unstable (Fig. 6). The destabilization seems to be often created by “thermally induced wedging” (Bakun-Mazor et al. 2013). The stability of such columns and the probability of failure are very difficult to assess without an expert approach, which demonstrates that field work and conceptual models are of primary importance in such cases.

The identification of special geological and mechanical settings requires a detailed field survey to identify the locations prone to rockfall initiation, such as the influence of folding on rockfall susceptibility. Coe and Harp (2007) demonstrated, using both field observations and the rock mass quality (Q) index (Barton et al. 1974; Harp and Noble, 1993), that the formation of folds decreases the friction angle along discontinuities because flexural slip smooths their roughness. In addition, the hinges have been shown to be more susceptible to rockfall than limbs because they are more fractured.

Field surveys are also important for characterizing the rockfall volume delineated by discontinuities (Agliardi and Crosta, 2003).

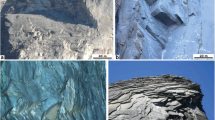

3D Techniques

Major advances in recent years for rockfall source characterization include high-resolution DEMs (digital elevation models) and 3D point clouds, which are produced by LiDAR (Light Detection and Ranging) (Tonon and Kottenstette, 2006; Abellán et al. 2014) and SfM (structure from motion) (Tonon and Kottenstette, 2006; Kromer et al. 2019). The LiDAR or laser scanner can be static or installed on vehicles, boats, drones or planes, and there are also handheld versions (Jaboyedoff and Derron, 2020). SfM is based on photogrammetric techniques using several images with large overlaps. It can be based on any type of camera or on pre-existing pictures (Guerin et al. 2020a). These techniques allow structural characterization (Slob et al. 2002; Sturzenegger and Stead, 2009; Jaboyedoff et al. 2007; Gigli and Casagli, 2011; Abellán et al. 2014) and monitoring (Royan et al. 2014; Kromer et al. 2017; 2019). They can now be considered a basic tool for any study of rock outcrops (virtual outcrop), especially because SfM methods are very cheap. In addition, the current accessibility of very high-resolution devices (~ 50 μm) that can capture microtopography may have a strong impact on rock joint roughness characterization (Mah et al. 2013).

Geometrical Methods

As mentioned, the first aspect of rock slope stability is linked to the presence of a steep slope, which is the primary factor influencing rockfall initiation. Another important aspect of rock slope hazards is to quantify volumes and to characterize their geometry, which is important for frequency estimation and/or instability mechanism understanding.

Using Slope

Strahler (1954) showed that the slope angle of uniform lithology follows a Gaussian distribution based on the digital elevation model (DEM). Following this idea, Rouiller et al. (1997) proposed decomposing the histogram of slope angles into several Gaussian distributions (Fig. 7) and attributing a geomorphological meaning to each of them (Loye et al. 2009). Identifying the population of steep slopes, i.e. cliffs, allows us to select the limit angle above which this population dominates, which is chosen as the limit to detect the zones prone to rockfall initiation. This appears to also work for DEMs with low resolution (25 m), as demonstrated in the Saasthal in the Swiss Alps, where most scars of past and present rock instabilities are located within slopes with angles higher than the defined threshold of 50° (Fig. 8). It is also extremely efficient for high-resolution DEMs (1 m) to create geomorphic maps (Loye et al. 2009). It has been used by several authors (Crosta et al. 2015; Corona et al. 2013; Lopez-Saez et al. 2016; Losasso et al. 2017; Farvacque et al. 2019). The extracted distribution was used to create a susceptibility scale for rock slope failure (Michoud et al. 2012). Fernandez-Hernandez et al. (2012) used this method to define a limiting slope of 50°, below which their susceptibility index Is was null. They also added other criteria (see below).
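The selection of the limit angle can be illustrated with a short sketch. It assumes that the slope angle histogram has already been decomposed into weighted Gaussian populations and simply scans for the lowest angle above which the cliff population has the highest weighted density. The mixture parameters below are hypothetical, and the scanning procedure is an illustration, not the fitting method of the cited authors.

```python
import math


def gaussian_pdf(x, mean, std):
    """Probability density of a normal distribution."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


def cliff_threshold(components, cliff_index, step=0.1):
    """Lowest slope angle (degrees) above which the cliff population dominates.

    components  : list of (weight, mean_deg, std_deg), one per slope population
    cliff_index : index of the cliff population in `components`
    Scans downward from 90 degrees and stops as soon as another population
    has a higher weighted density. Returns None if the cliff population
    does not dominate even at 90 degrees.
    """
    n_steps = int(round(90.0 / step))
    threshold = None
    for k in range(n_steps, -1, -1):
        angle = k * step
        densities = [w * gaussian_pdf(angle, m, s) for (w, m, s) in components]
        if densities[cliff_index] < max(densities):
            break
        threshold = angle
    return threshold


# Hypothetical decomposition: gentle slopes, steep slopes and cliffs.
populations = [(0.50, 20.0, 8.0), (0.35, 35.0, 6.0), (0.15, 60.0, 10.0)]
limit_angle = cliff_threshold(populations, cliff_index=2)
print(limit_angle)
```

DEM cells steeper than the returned angle would then be flagged as potential rockfall sources.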

Empirical Approaches

There are very simple ways to create susceptibility maps based on geographic information systems (GIS), which permit the development of heuristic techniques (Van Westen 2006). This process consists of giving weights to spatialized IPs or EFs and aggregating them by summing or multiplying them to obtain a final weight. This process can be used for any type of IP or EF. Such susceptibility often depends on the available data and the objective of the study. GIS and related software permit the use of any type of map. For instance, in Switzerland, the 1:25,000 vectorized topographic maps include polygons of the cliff areas (Jaboyedoff and Labiouse, 2003; Loye et al. 2009).

Baillifard et al. (2003) developed a susceptibility index (Sij) that simply sums the number of criteria (k) met in raster maps for each pixel ij to detect rock instabilities above roads, with rijk = 0 if criterion k is not met and rijk = 1 otherwise:

\( S_{ij} = \sum_{k} r_{ijk} \)

Five different maps were used: (1) cliff extracted from digitized topographic maps, (2) steep slope > 45° extracted from a raster at 25 m, (3) a scree slope with a buffer of 100 m, (4) a buffer of 150 m around important faults, and (5) the slope located above a road. The results showed that the past instabilities along roads in Valais (Switzerland) possessed a susceptibility ≥ 3. Saroglou (2019) applied the same strategy at the nationwide level of Greece but with a score for each criterion going from 3 to 1 for the highest susceptibility. A lower number represents a higher susceptibility. This rockfall susceptibility index (RSI) for pixel ij is given by:

where Lij is the class of the lithology, Rij is the annual rainfall, Eij is the earthquake impact and Fij is the presence of a fault. Only five rockfalls among 43 were ranked within pixels with a high RSI. The same approach was applied on the volcanic island of El Hierro (Canary Archipelago), assuming a susceptibility index calculated by summing five parameters within slopes steeper than 50°: slope angle, topographic profile curvature, lithology, vegetation cover and dike density. This procedure provided mainly very high susceptibility indexes for known rockfall source areas (Fernandez-Hernández et al. 2012).

Such examples demonstrate that by using simple rules, it is possible to obtain a first screening of the instabilities. The main requirement is a DEM of sufficiently high resolution.
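The criterion-counting approach described in this section can be sketched in a few lines. The rasters below are tiny hypothetical grids standing in for the five binary criterion maps (cliff, steep slope, scree buffer, fault buffer, slope above a road); the function name is also hypothetical.

```python
def susceptibility_sum(criteria_rasters):
    """Per-pixel count of fulfilled criteria.

    criteria_rasters : list of 2D grids (lists of rows) with values 0 or 1,
                       all with the same shape; one grid per criterion k.
    Returns a 2D grid where each pixel ij holds the sum of r_ijk over k.
    """
    rows = len(criteria_rasters[0])
    cols = len(criteria_rasters[0][0])
    return [
        [sum(raster[i][j] for raster in criteria_rasters) for j in range(cols)]
        for i in range(rows)
    ]


# Hypothetical 2 x 3 rasters, one per criterion.
cliff = [[1, 0, 0], [1, 1, 0]]
steep = [[1, 1, 0], [1, 1, 0]]
scree = [[0, 1, 0], [1, 0, 0]]
fault = [[0, 0, 0], [1, 0, 1]]
above_road = [[1, 0, 0], [1, 1, 0]]

s = susceptibility_sum([cliff, steep, scree, fault, above_road])
# Pixels with a susceptibility of at least 3, the level reported for past
# instabilities along roads in Valais.
flagged = [[value >= 3 for value in row] for row in s]
print(s, flagged)
```

Weighted variants, such as scoring each criterion from 1 to 3, only require replacing the binary grids by graded ones.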

Susceptibility to Earthquakes

Keefer (1993) proposed a very simple method to assess the failure of rock slopes induced by earthquakes. It is based on a dichotomous decision tree, allowing us to assess the hazard of failure (Fig. 9). Some additional parameters can be considered, such as the presence of vegetation that can reduce the effect of earthquakes for M > 6.5 and groundwater that increases the susceptibility.

Decision tree for susceptibility of rock slope failure (From Keefer, 1993)

Kinematic Tests

Traditionally, rock mechanics uses kinematic tests based on stereonets (Hoek and Bray, 1981), looking at the average orientation of slopes and testing whether a set of discontinuities can produce planar slides, wedges or topples, adding some criteria linked to the basal friction angle.

Pure Geometrical Kinematic Tests

Kinematic tests are usually used to verify whether a mechanism is possible. They are usually based on a single slope orientation representing the geometry within stereonets (Hoek and Bray, 1981). Since DEMs became available, kinematic tests have been adapted in computer codes (Wagner et al. 1988; Gokceoglu et al. 2000; Jaboyedoff et al. 2004a).

Often, kinematic tests include friction angles and empirical limitations in addition to geometrical criteria, but this can be an issue because these tests do not integrate the effects of water and joint strength degradation. As a consequence, as a first approximation, the mechanical properties are not used.

As a basic hypothesis, the most important parameter is the density of dangerous structures that intersect the topography. For that purpose, it is necessary to know the mean spacing L for the discontinuities and their mean trace length T, their orientations and the slope face orientation for each DEM cell or pixel (Fig. 10). Then, the average number of structures per cell can be calculated.

For potential planar failures, the direction of sliding must point out of the slope, i.e. the slope angle of discontinuity αi must be lower than the apparent slope angle of cell βc in the sliding direction ωi, αi < βc, and a geometrical restriction of the direction of sliding can be added (Hoek and Bray, 1981):

where \( \omega_{c} \) is the dip direction or aspect of the cell. The number of potential planar failures Np produced by a joint set is given by (Jaboyedoff et al. 2004a; Matasci et al. 2015):

where Ac is the surface area of the topographic face of a cell, given by the horizontal surface of the cell divided by cos β (the cosine of the slope angle), L is the mean spacing and T is the mean trace length of the discontinuities. δ is the angle between the normal vectors of the local slope and the discontinuity set. If Np is lower than 1, the instability size is larger than the cell size, i.e. the frequency of potential rockfall sources is lower but their volume is larger. For Nt, the number of topples, the normal must point downward in the slope \( (90 - \alpha_{i} \le\upbeta_{c} ) \) with an additional condition that can be applied:

The directions of wedge failure have the same restrictions as the planar failure replacing the dip direction and the discontinuity slope angle by the wedge direction of sliding (Fig. 11). If γ is the angle between the two joint sets with mean spacings Li and Lj, and δ is the angle between the wedge axis and the normal to the topography, then the number of wedges is given by (Matasci et al. 2015):

Using these three numbers (Np, Nt and Nw), it is possible to obtain a susceptibility index Sc to rockfall failure in each cell of the DEM (Matasci et al. 2015a):

Matasci et al. (2015a) were able to compare the actual rockfall activity with the estimated susceptibility (Figs. 12 and 13). This was done within small catchments in Ticino (Switzerland), where the rockfall was trapped and the total volume was measured for a period. The results showed the total number of potential structures within six different catchments:

Rockfall susceptibility map of the western side Monte Generoso (Ticino; Switzerland) draped on a 3D DEM, indicating the number of potential failures Sc and the volumes of the blocks trapped in the protective barriers for each catchment (from Matasci et al. 2015a; DEM, 2 m cell size, Swisstopo)

Relationships of the volume of blocks trapped in the protective barriers for each catchment and the total number of potential failures (from Matasci et al. 2015a)

This total was well correlated with the observed rockfall activity within each catchment. This technique was more efficient than the estimations based on the catchment surface areas or the GSI (Hoek, 1994).
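The purely geometrical planar test of this section can be sketched for a single DEM cell. The apparent slope in the sliding direction follows from standard geometry (tan β_app = tan β · cos(ω_i − ω_c)). The optional lateral limit is left as a user parameter because its value is not given here. The expected number of structures per cell assumes a trace density of sin δ / (L · T) on the topographic face, which is an assumption consistent with the definitions above rather than a confirmed transcription of the cited equation. All function names and example values are hypothetical.

```python
import math


def apparent_slope_deg(slope_deg, aspect_deg, direction_deg):
    """Apparent slope angle of a planar cell along a given azimuth.

    Negative values mean that the direction points into the slope.
    """
    diff = math.radians(direction_deg - aspect_deg)
    return math.degrees(math.atan(math.tan(math.radians(slope_deg)) * math.cos(diff)))


def planar_sliding_possible(dip_deg, dip_dir_deg, slope_deg, aspect_deg,
                            lateral_limit_deg=None):
    """Geometrical planar test: the discontinuity must daylight in the cell."""
    # Smallest angular difference between sliding direction and cell aspect.
    diff = abs((dip_dir_deg - aspect_deg + 180.0) % 360.0 - 180.0)
    if lateral_limit_deg is not None and diff > lateral_limit_deg:
        return False
    return 0.0 < dip_deg < apparent_slope_deg(slope_deg, aspect_deg, dip_dir_deg)


def expected_planar_count(cell_size, slope_deg, delta_deg, spacing, trace_length):
    """Average number of structures of one set intersecting a cell.

    Assumed form: Ac * sin(delta) / (L * T), with Ac = cell_size^2 / cos(slope).
    Not meaningful for vertical cells, where cos(slope) tends to zero.
    """
    face_area = cell_size ** 2 / math.cos(math.radians(slope_deg))
    return face_area * math.sin(math.radians(delta_deg)) / (spacing * trace_length)


# Hypothetical cell: 2 m DEM, 60 degree slope facing south (aspect 180).
print(planar_sliding_possible(40.0, 180.0, 60.0, 180.0))        # daylighting joint
print(planar_sliding_possible(40.0, 210.0, 60.0, 180.0, 20.0))  # outside lateral limit
print(expected_planar_count(2.0, 60.0, 90.0, spacing=2.0, trace_length=4.0))
```

Summing such counts over the cells of a catchment gives the kind of total that can be compared with the observed rockfall activity.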

Kinematic Tests Integrating Stress Tensor

Günther (2003) developed the kinematic test software SLOPEMAP, considering the outcropping of geological structures. It builds a digital structural model (DSM) based on field data and uses an interpolator that can include bedding, folds and joint sets (Günther et al. 2004). Assuming a vertical maximum stress in the slope within the cell of the raster, the stress tensor is deduced, and the stability is computed to detect the potential unstable zones. A module integrating shallow groundwater flow using similar techniques as shallow landslide techniques is also implemented (Günther et al. 2004).

Kinematic Tests Integrating Geomechanics and Probabilistic Approaches

As proposed by Hoek and Bray (1981) and Wyllie (2018), kinematic tests can include geomechanical properties. For slides, the basal friction angle ϕ must be lower than the dip angle of the sliding direction of the plane or wedge (Fig. 11). The friction angle can also be introduced for toppling.

Several authors developed a probabilistic approach by assuming probability distribution functions for the parameters used to calculate the probability of failure, which corresponds to the probability Pff of obtaining a factor of safety below 1 for planar, wedge or toppling failure (Scavia et al. 1990; Carter and Lajtai, 1992).

Using simulations of variable wedges, Park and West (2001) proposed decomposing the probability of failure Pf into two terms:

\( P_{f} = P_{fk} \times P_{ff} = \frac{N_{k}}{N_{T}} \times \frac{N_{f}}{N_{k}} \)

where Nk is the number of kinematic tests that are positive, NT is the total number of tests, Pfk = Nk/NT is the probability that the movement is kinematically possible and Nf is the number of cases with a factor of safety below 1 among Nk, so that Nf/Nk corresponds to the probability Pff.
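The two-term decomposition can be illustrated with a small Monte Carlo sketch. For brevity it replaces the wedge simulations of the cited study with a dry, cohesionless planar block (factor of safety tan ϕ / tan α) and draws the dip and friction angle from normal distributions. The distributions, their parameters and the function name are hypothetical.

```python
import math
import random


def simulate_planar_failure(n_tests, slope_deg=65.0, dip_mean=45.0, dip_std=10.0,
                            phi_mean=35.0, phi_std=5.0, seed=0):
    """Monte Carlo estimate of P_fk, P_ff and P_f for planar sliding.

    Kinematic test : the plane daylights, i.e. 0 < dip < slope angle.
    Stability test : dry, cohesionless block, FS = tan(phi) / tan(dip).
    Returns (P_fk, P_ff, P_fk * P_ff, N_f / N_T).
    """
    rng = random.Random(seed)
    n_k = 0  # kinematically possible cases
    n_f = 0  # cases with FS < 1 among the kinematically possible ones
    for _ in range(n_tests):
        dip = rng.gauss(dip_mean, dip_std)
        phi = max(rng.gauss(phi_mean, phi_std), 0.0)
        if 0.0 < dip < slope_deg:
            n_k += 1
            fs = math.tan(math.radians(phi)) / math.tan(math.radians(dip))
            if fs < 1.0:
                n_f += 1
    p_fk = n_k / n_tests
    p_ff = n_f / n_k if n_k else 0.0
    return p_fk, p_ff, p_fk * p_ff, n_f / n_tests


p_fk, p_ff, p_f, direct = simulate_planar_failure(20000)
print(p_fk, p_ff, p_f, direct)
```

The product of the two terms equals the direct ratio N_f / N_T, which is the point of the decomposition: the kinematic and mechanical contributions can be examined separately.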

Gokceoglu et al. (2000) introduced not only the friction angle in the kinematic test but also the probabilistic approach for the discontinuity orientations. They identified 3 joint sets in the Altindag (Turkey) and estimated their spatial variability. By simulations for each pixel of the DEM, they produced a probability of failure, Pfk, by simulating millions of kinematic tests based on the probabilistic approach of stability (Fig. 14).

(Modified after Gokceoglu et al. 2000)

Map of the probability of wedge failure in Altindag (Turkey) with ϕ = 35° and 3 sets of discontinuities including their variabilities.

Grenon and Hadjigeorgiou (2008) proposed a full Monte Carlo simulation of wedge stability based on an idealized slope. A discrete fracture network (DFN) model was created using the Veneziano model (Dershowitz and Einstein, 1988), and Pfk was estimated by evaluating the intersection of the fracture network with the slope (Fig. 15). The average trace lengths, spacings and orientations, and the variances, of 5 sets of discontinuities were measured in the field. They assumed that the spacings followed negative exponential distributions and that the orientations followed a Fisher distribution. The results showed that the different pairs of discontinuity sets did not have the same probability of failure, and that a large number of simulations is necessary to explore all possible intersections with the topography.

(modified from Grenon et al. 2014)

Example of fractures in the DFN intercepting a plane-oriented (scale in meters). The simulations show that most wedges are small, i.e. ninety-nine percent of the 76,079 wedges are smaller than 155 m3 in volume

Kinematic Test in Real 3D

Kinematic tests, including mechanics, are now applied to point clouds, which means that real 3D topography is considered, including overhangs. Gigli et al. (2014) developed a tool to use point clouds and created a kinematic index (KI), which provides a probability of failure similar to Gokceoglu et al. (2000) but in 3D.

Matasci et al. (2018) modified the susceptibility developed for rasters, assuming a density of discontinuities per unit area of the point cloud A = 1 m2 and \( \delta \) the angle between the normal vectors of the discontinuity and the topography. The susceptibility for the planar joint i becomes:

where αi is the slope angle of the discontinuity, which comes from the inverse of the factor of safety for a planar surface FS = tanϕ/tan α. The number of wedges is given by (see eq. 13):

However, the probability P that the two sets can intersect to form a wedge corresponds to the product of the ratios of the trace length T and the apparent spacing:

The wedge stability also depends on the factor K (Hoek and Bray, 1981), which considers the effect of the angle between joint sets:

where θ is the slope of the joints in the plane perpendicular to the direction of sliding taken from the horizontal line. For wedge failure, the wedge susceptibility is inversely proportional to the safety factor, excluding the dependence on the friction angle:

where αij is the dip angle of the intersection of the joints. As the kinematic test for toppling is very similar to the planar failure, if we consider the pole of the joint sets instead of the sliding direction, we obtain:

When the slope is an overhang, then (23) is replaced by:

The lateral direction limitations of sliding are mostly identical to the standard one (see above). The final susceptibility per unit area at a given point of the 3D surface is given by:

The susceptibility scale must be calibrated. It provides relevant results, demonstrating the potential of this method. The rockface of Les Drus (Mont-Blanc massif) shows good agreement with the observations as well as in the Yosemite Valley (Figs. 16 and 17). The number of instabilities increases with susceptibility.

(modified from Matasci et al. 2018)

Rockfall susceptibility a compared to the activity b between November 2011 and September 2014 in the Drus rock face

(modified from Matasci et al. 2018)

Plot of the total rockfall susceptibility (Stot) for past known events from the face of the Drus (Mont-Blanc, France) and in the Glacier Point cliff (Yosemite Valley)

Power Law and Inventories

Inventories of rockfalls are fundamental to obtaining the frequencies of events in relation to their magnitude, i.e. volumes in the case of rockfall sources. In addition, they are the basic tool used to identify the dependence of rockfall events on EFs and IPs. Inventories can record scars, fallen blocks or potential instabilities.

Volume Power Laws

Wieczorek et al. (1995) showed that the rockfall source volumes in Yosemite Valley followed a power law, which was confirmed by a recent study (Guerin et al. 2020a), and Hungr et al. (1999) showed it along a road. The inventories of event volumes are based on field analyses and, currently, on the analysis of the differences between point clouds acquired either by laser scanner or SfM (Fig. 18). Dussauge et al. (2003) gave the frequency of failure of volumes larger than V as:

\( \lambda (V) = \frac{N_{0}}{\Delta t}\left( \frac{V}{V_{0}} \right)^{ - b} \)

(modified after Guerin et al. 2014)

a Example of rockfall source detection in the Mont Saint-Eynard cliff, comparing August 2009 and November 2012 terrestrial laser scanner data. b Power law deduced from 344 scars > 0.05 m3

where N0 is the number of failures larger than V0 that occurred during period Δt and b is the exponent deduced from the inventory. In addition, the return period τ is given by:

\( \tau (V) = \frac{1}{\lambda (V)} \)

This method allows us to assess rockfall hazards (Hungr et al. 1999; Dussauge-Peisser et al. 2002; Hantz, 2011). In addition, assuming a random (Poisson) process with λ = 1/τ, the probability that n instabilities of volume larger than V occur during a period of time T is given by (Hantz et al. 2003a):

\( P(n,T) = \frac{(\lambda T)^{n}}{n!}e^{ - \lambda T} \)

Recall that the probability that at least one event occurs during T is given by (Hantz et al. 2003a):

\( P(n > 0,T) = 1 - e^{ - \lambda T} \)

In addition, the probability and the frequency are equivalent, i.e. P(n > 0, T) ≈ λT, only if λT ≪ 1. This type of approach provides the quantification of the cliff retreat rate by integrating (modified after Hantz et al. 2003b):

It provides the volume per unit of time knowing the minimum (V0) and the maximum volume (Vm), and the rate of retreat is simply given by dividing Vtot by the surface area of the cliff. Such a method is also of interest because, if V0 and Vm are known and the cliff retreat velocity is known from other methods (Domènech et al. 2018), it can provide an estimation of the rockfall failure hazard. It must be emphasized that other types of distribution laws are also used, such as the generalized Pareto distribution (De Biagi et al. 2017).
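The quantities of this section can be chained in a short sketch. It assumes a power law of the form λ(V) = (N0/Δt)(V/V0)^(−b), a Poisson process for the occurrence of failures, and an eroded volume rate obtained by integrating V times the event density −dλ/dV between V0 and Vm (an untruncated power law, which may differ from the exact expression of the cited work). The count of 344 scars larger than 0.05 m3 and the roughly 3.25-year interval come from the Mont Saint-Eynard figure caption; the exponent b, the maximum volume and the cliff area are hypothetical.

```python
import math


def failure_frequency(v, n0, v0, dt_years, b):
    """Annual frequency of failures with a volume larger than v."""
    return (n0 / dt_years) * (v / v0) ** (-b)


def prob_n_events(n, lam, t_years):
    """Poisson probability of exactly n events during t_years."""
    mu = lam * t_years
    return mu ** n * math.exp(-mu) / math.factorial(n)


def prob_at_least_one(lam, t_years):
    """Probability of at least one event during t_years: 1 - exp(-lam * T)."""
    return -math.expm1(-lam * t_years)


def volume_rate(n0, v0, dt_years, b, v_max):
    """Eroded volume per year from failures between v0 and v_max.

    Assumed derivation: integral of V * a * b * V**(-b - 1) dV,
    with a = (n0 / dt_years) * v0**b.
    """
    a = (n0 / dt_years) * v0 ** b
    if abs(b - 1.0) < 1e-12:
        return a * b * math.log(v_max / v0)
    return a * b * (v_max ** (1.0 - b) - v0 ** (1.0 - b)) / (1.0 - b)


def retreat_rate(n0, v0, dt_years, b, v_max, cliff_area):
    """Mean cliff retreat rate (m/year): volume rate divided by cliff area."""
    return volume_rate(n0, v0, dt_years, b, v_max) / cliff_area


n0, v0, dt = 344, 0.05, 3.25     # from the inventory described in the caption
b = 0.7                          # hypothetical exponent
lam_10 = failure_frequency(10.0, n0, v0, dt, b)
print("frequency of failures > 10 m3 per year:", lam_10)
print("return period (years):", 1.0 / lam_10)
print("P(at least one in 1 year):", prob_at_least_one(lam_10, 1.0))
print("retreat rate (m/year):", retreat_rate(n0, v0, dt, b, 1000.0, 50000.0))
```

Because b is below 1 in this example, the volume rate is dominated by the largest volumes, so the result is sensitive to the choice of Vm.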

The Drawback of Power Laws

The new techniques allow cliffs to be monitored periodically at high resolution, at intervals ranging from one hour to several months. Observed rockfall scars are often the result of several events (Van Veen et al. 2017; Williams et al. 2018, 2019). The b value increases as the interval between acquisitions decreases (Fig. 19), but the retreat rate obtained is the same in both cases. In addition, if the small volumes are not fully recorded, the power law shows a rollover at small volumes. Likewise, if the volume cannot exceed a certain size, the distribution becomes steeper for large volumes.
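The effect of the survey interval on the b value can be illustrated with a synthetic experiment. The sketch below draws volumes from a power law, stamps them with random times, and then merges all events that fall in the same survey interval into a single scar. Merging every event of an interval regardless of its position on the cliff is a deliberately crude assumption (real coalescence requires spatial overlap), and all numerical values are hypothetical. The exponent is estimated with the standard maximum-likelihood formula for a Pareto tail.

```python
import math
import random

def fit_b(volumes, v_min):
    """Maximum-likelihood exponent of the cumulative power law N(>V) ~ V^-b."""
    logs = [math.log(v / v_min) for v in volumes if v >= v_min]
    return len(logs) / sum(logs)

def merge_by_interval(events, interval):
    """Sum the volumes of all (time, volume) events in the same survey interval."""
    merged = {}
    for t, v in events:
        key = int(t // interval)
        merged[key] = merged.get(key, 0.0) + v
    return list(merged.values())

random.seed(1)
V0 = 0.05          # smallest detectable volume in m^3 (assumed)
TRUE_B = 0.7       # exponent used to generate the synthetic events (assumed)
DURATION = 300.0   # monitoring duration in days (assumed)

events = [
    (random.uniform(0.0, DURATION), V0 * random.paretovariate(TRUE_B))
    for _ in range(3000)
]

hourly = merge_by_interval(events, 1.0 / 24.0)   # surveys every hour
monthly = merge_by_interval(events, 30.0)        # surveys every 30 days

b_hourly = fit_b(hourly, V0)
b_monthly = fit_b(monthly, V0)
print(f"b (hourly surveys):  {b_hourly:.2f} from {len(hourly)} scars")
print(f"b (monthly surveys): {b_monthly:.2f} from {len(monthly)} scars")
print(f"total volume: {sum(hourly):.1f} vs {sum(monthly):.1f} m^3")
```

In this toy setting the fitted exponent is lower for the long interval because small events are absorbed into larger merged scars, while the total eroded volume, and hence the retreat rate, is unchanged.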

a Rockfall scars identified nearly every hour for 10 months. The colors represent the time since 31 December. b Volume–frequency distributions calculated from the above inventory, indicating the differences that arise when the time-step interval varies from 1 h to 30 days (modified after Williams et al. 2018)

Other Types of Inventories

Regional inventories can provide some basic information, such as the type of rock, the structures that control the mechanisms (Copons and Vilaplana, 2008), and the weather parameters (Corò et al. 2015; D’Amato et al. 2016).

As stated above, inventories of rockfall events can be built from a series of point clouds. However, in some areas, only one acquisition of the 3D topography exists. In some cases, when the structures are easy to recognize, such as joint sets that clearly delineate block shapes, it may be possible to extrapolate the magnitude-frequency relationship of the sources from the basal sliding surfaces and the heights of the scars (Santana et al. 2012). This permitted a Monte Carlo simulation from which a power law for the volumes of the source areas was deduced. This study was extended to the analysis of potential instabilities following the same scheme, this time considering the discontinuities that delineate blocks that may fail, with block sizes deduced from both measurements and simulations using the distributions of discontinuity spacing (Mavrouli et al. 2015).

The frequency of rockfalls can also be assessed from deposited rockfall boulders. The advantage is that there are usually many deposited blocks, which allows a power law to be fitted to the block volume distribution (Ruiz-Carulla et al. 2015; Hantz et al. 2016). The disadvantage is that the length of the deposition period is usually not known, except for some recent blocks. De Biagi et al. (2017) used the temporal information given by these recent blocks to transform the block volume distribution into a block volume-temporal frequency relation. Hantz et al. (2016) combined the retreat rate of the cliff with the block volume distribution to obtain the block volume-temporal frequency relation. The analysis of impacts on trees using a dendrogeomorphic approach can provide a return period for rockfall (Stoffel et al. 2011), which can be used as a proxy for rockfall failure. Bull and Brandon (1998) showed that lichenometry (lichen size increases with time) may provide a useful tool for dating events. In New Zealand, these authors demonstrated that several clusters of rockfalls were triggered by earthquakes.

Hazard Rating Based on Geomechanics Factors

In rock mechanics, different approaches have been developed to assess rock mass quality (Barton et al. 1974; Hoek and Brown, 1997; Bieniawski, 1973, 1993). Many methods developed for rockfall source hazards are based on such multiparameter rating systems derived from tunneling and mining engineering, such as the rock mass rating (RMR) (Bieniawski, 1973, 1993), the rock mass quality Q (Barton et al. 1974) or the geological strength index (GSI) (Hoek, 1994). The main parameters used by these different approaches are:

- Rock quality designation (RQD) (Deere, 1963), which reflects the density of discontinuities through the percentage of drill core recovered in pieces longer than 10 cm;
- The spacing of the discontinuity sets;
- The joint roughness;
- The weathering state of the discontinuity surface;
- The strength of the intact rock;
- The groundwater.
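As an illustration of the first parameter in the list above, the RQD is conventionally obtained from a core log as the percentage of the core run made up of pieces at least 10 cm long. The sketch below is a minimal version of that calculation; the function name and the example core log are hypothetical.

```python
def rqd(piece_lengths_cm, run_length_cm, threshold_cm=10.0):
    """Rock quality designation in percent for one core run.

    piece_lengths_cm: lengths of the recovered core pieces
    run_length_cm: total length of the core run
    threshold_cm: minimum length of a piece counted as sound (10 cm by convention)
    """
    if run_length_cm <= 0:
        raise ValueError("run length must be positive")
    sound = sum(length for length in piece_lengths_cm if length >= threshold_cm)
    return 100.0 * sound / run_length_cm

# Hypothetical 1 m core run: the 8 cm and 5 cm pieces are not counted
pieces = [25.0, 8.0, 12.0, 5.0, 30.0, 20.0]
print(f"RQD = {rqd(pieces, 100.0):.0f} %")
```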

Each parameter has a scale that is used for the rating. The RMR and GSI are equivalent (Hoek, 1994), but the GSI is simpler to apply. Brideau et al. (2007) applied such an approach to slope stability analysis. A similar method was developed by Selby (1980, 1982) for geomorphological applications. The RMR was adapted into the SMR (Romana, 1988, 1993), which is better suited to rock slopes because it introduces parameters related to the joint-slope orientation relationship, although this can now be evaluated directly using kinematic tests with a DEM (see above). Effects such as blasting are also included. Some attempts have been made to use point clouds to calculate part of the SMR (Riquelme et al. 2016). These approaches aim to assess the instantaneous stability of future excavations but not the time evolution of the slopes. They can therefore be used for rockfall susceptibility assessment, but they are not sufficient for a true rockfall hazard assessment that includes temporal frequency.

Using similar considerations, Pierson et al. (1990) developed the rockfall hazard rating system (RHRS), adding the slope height, erosion, block size, and climatic conditions. However, its full version includes a risk aspect. It has been modified to integrate the SMR (Budetta, 2004). Several versions of the RHRS exist (Ferrari et al. 2016). As an example of the part of the RHRS dedicated to the rockfall failure hazard score (PS), Santi et al. (2009) proposed a formula for cut slopes in sedimentary rocks (Budetta and Nappi, 2013):

where SH is the slope height, RF is the rockfall frequency, LF is the launching features (blocks that may fall), AS is the slope aspect, IN is the degree of interbedding, and AP is the aperture of discontinuities. The two most important variables are RF and LF, which indicate the presence of a rock mass prone to failure.

Hudson (1992) proposed the rock engineering system (RES), which considers several parameters and builds a matrix of interactions between them to qualify how each parameter acts on the others (cause) and is affected by them (effect). The sums of these ratings provide the weight of each parameter. To qualify a slope, and based on the early work of Cancelli and Crosta (1993), Mazzoccola and Hudson (1996) used 20 parameters, each rated from 0 to 3, such as lithology, potential instability or intact rock mass strength. This method has been applied, for instance, along coastal areas (Budetta et al. 2008).
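The weighting step of the RES can be sketched as follows, assuming the usual convention in which the entry in row i and column j of the interaction matrix codes the influence of parameter i on parameter j, the row sum is the cause, the column sum is the effect, and the weight of a parameter is its share of the total of causes and effects. The three parameters and the interaction codes are hypothetical values chosen only to show the arithmetic.

```python
def res_weights(names, matrix):
    """Parameter weights (in percent) from a RES interaction matrix.

    matrix[i][j] is the coded influence of parameter i on parameter j;
    diagonal entries are ignored.
    """
    n = len(names)
    causes = [sum(matrix[i][j] for j in range(n) if j != i) for i in range(n)]
    effects = [sum(matrix[j][i] for j in range(n) if j != i) for i in range(n)]
    total = sum(causes) + sum(effects)
    return {
        name: 100.0 * (cause + effect) / total
        for name, cause, effect in zip(names, causes, effects)
    }

# Hypothetical interaction codes between three of the parameters named above
names = ["lithology", "potential instability", "intact rock strength"]
matrix = [
    [0, 2, 1],
    [1, 0, 3],
    [0, 1, 0],
]
weights = res_weights(names, matrix)
for name, weight in weights.items():
    print(f"{name}: {weight:.2f} %")
```

A parameter with a high cause and a low effect is dominant in the system, whereas the reverse indicates a parameter that is mostly driven by the others; the weight alone does not distinguish the two.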

Harp and Noble (1993) observed rockfall linked to earthquakes. They evaluated susceptibility based on the Q-value from Barton et al. (1974) and proposed a hazard rating using a modified Q-value based on six discontinuity characteristics evaluated in the field:

where Jv is the total number of discontinuities, Jn is a number quantifying the number of joint sets (20–0.5), Jr is a number that characterizes the roughness of the discontinuities (0.5–4), Ja is the joint alteration number (4–0.75), Jw is the water reduction factor (0–1.0) and AF characterizes the joint apertures (1–15). Coe and Harp (2007) used this method to show the increase in susceptibility in the fold hinges of folded limestones. In an area affected by earthquakes, Parise (2002) compared the Q-value with the method of Keefer (1993), which provided similar results. Harp and Noble (1993) proposed in their study that the number of rockfalls with Q < Q′ within a given surface area is given by:

where No is the total number of rockfall events for a given earthquake sequence in California and a corresponds to the inverse of the average susceptibility Q. Such an approach is conceptually interesting because it links an observation to a statistical frequency distribution.
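To make the structure of this rating concrete, the sketch below assumes that the modified Q-value keeps the product form of Barton's Q, with the RQD term estimated from Jv by the Palmstrøm-type relation RQD ≈ 115 − 3.3 Jv and the stress reduction factor replaced by AF, and that the number of rockfalls with Q below a limit follows an exponential cumulative distribution with rate a. Both expressions are assumptions consistent with the symbol definitions above and are not quoted from Harp and Noble (1993); the function names and input values are hypothetical.

```python
import math

def modified_q(jv, jn, jr, ja, jw, af):
    """Assumed form of the modified Q-value built from the six field ratings."""
    # Assumption: RQD estimated from the joint count, bounded to 0-100
    rqd_equivalent = min(100.0, max(0.0, 115.0 - 3.3 * jv))
    return (rqd_equivalent / jn) * (jr / ja) * (jw / af)

def rockfalls_below(q_limit, n_total, mean_q):
    """Assumed exponential law for the number of rockfalls with Q < q_limit."""
    a = 1.0 / mean_q   # inverse of the average Q, as defined in the text
    return n_total * (1.0 - math.exp(-a * q_limit))

# Hypothetical field ratings for one outcrop
q = modified_q(jv=10, jn=9.0, jr=1.5, ja=1.0, jw=1.0, af=1.0)
print(f"modified Q = {q:.2f}")

# Hypothetical inventory: 1000 events, average Q of 5
print(f"events with Q < 2: {rockfalls_below(2.0, 1000, 5.0):.0f}")
```

With these assumed forms, a lower Q means a more fractured, more open and more altered rock mass, so most of the recorded rockfalls concentrate at low Q values.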

Back Analysis

Back analysis can often be performed to constrain the ranges of values of parameters such as the friction angle, apparent cohesion or rock bridges. The most difficult step is to reconstruct the former topography and then choose the right mechanism and surfaces of failure, as for the scar of a former wedge of approximately 13,000 m³ in Switzerland (Fig. 20). A simple back calculation indicates that if no cohesion and no rock bridges are considered, the friction angle must be as high as 40.5° at limit equilibrium. Some rock bridges can therefore be suspected (Fig. 20).

Back analysis of a wedge in Saastal (Switzerland), showing a manual reconstruction of the topography and the two joints involved. a Identification of the surfaces in red (the paleo-topography) and in blue and green (the two planes). b Points used to calculate the plane orientations. c Stereonet of the wedge (lower hemisphere)

A simple back analysis of the wedge failure that occurred on 29–30 October 2011 in Les Drus (Mont-Blanc Massif, France) requires at least 3% of rock bridges, using the concept of equivalent cohesion and friction angle (Jennings, 1972). This result was confirmed by the calculation of fresh rock failure in the scar (Matasci et al. 2015b) (Fig. 21).
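The rock bridge back calculation can be illustrated with a simplified case. The sketch below uses a dry planar slide rather than a wedge (a deliberate simplification, since a wedge requires resolving forces on two planes) and the equivalent strength concept in which cohesion and the tangent of the friction angle are averaged between intact rock and joint according to the rock bridge fraction k. All input values and function names are hypothetical.

```python
import math

def equivalent_strength(k, c_rock, phi_rock_deg, c_joint, phi_joint_deg):
    """Equivalent cohesion and tan(phi) for a rock bridge fraction k (0 to 1)."""
    c_eq = k * c_rock + (1.0 - k) * c_joint
    tan_phi_eq = (k * math.tan(math.radians(phi_rock_deg))
                  + (1.0 - k) * math.tan(math.radians(phi_joint_deg)))
    return c_eq, tan_phi_eq

def planar_safety_factor(k, weight, area, dip_deg,
                         c_rock, phi_rock_deg, c_joint, phi_joint_deg):
    """Safety factor of a dry block sliding on a plane (weight kN, area m^2, c kPa)."""
    c_eq, tan_phi_eq = equivalent_strength(
        k, c_rock, phi_rock_deg, c_joint, phi_joint_deg)
    beta = math.radians(dip_deg)
    resisting = c_eq * area + weight * math.cos(beta) * tan_phi_eq
    return resisting / (weight * math.sin(beta))

def min_rock_bridge_fraction(**params):
    """Smallest k giving a safety factor of 1; None if even intact rock fails."""
    fs_joint = planar_safety_factor(0.0, **params)
    fs_rock = planar_safety_factor(1.0, **params)
    if fs_joint >= 1.0:
        return 0.0
    if fs_rock < 1.0:
        return None
    # The safety factor is linear in k, so the root is found directly
    return (1.0 - fs_joint) / (fs_rock - fs_joint)

# Hypothetical block: 50 MN weight, 100 m^2 sliding surface dipping 40 degrees
case = dict(weight=50000.0, area=100.0, dip_deg=40.0,
            c_rock=1000.0, phi_rock_deg=45.0, c_joint=0.0, phi_joint_deg=30.0)
k_min = min_rock_bridge_fraction(**case)
print(f"minimum rock bridge fraction: {100.0 * k_min:.1f} %")
```

Because intact rock cohesion is orders of magnitude larger than joint cohesion, a few percent of rock bridges is typically enough to bring such a block to equilibrium, which is the order of magnitude reported for Les Drus.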

a Wedge failure of 29–30 October 2011, with a volume of 43,000 m³. b Safety factor and percentage of rock bridges (modified after Matasci et al. 2015b)

Using Rockfall Modeling to Assess Failure Susceptibility

To assess rockfall activity, one method consists of establishing a link between rockfall sources and scree slopes. Menendez Duarte and Marquinez (2002) used the watershed area (a GIS function) and the surface areas, in pixels, of the active scree slopes linked to the sources to deduce a susceptibility value. Based on rockfall trajectories computed with the software HY-STONE, Frattini et al. (2008) linked the screes to their potential sources, which allows active cliffs to be identified when they are linked to active scree slopes. This method is of interest because, with powerful computers and efficient numerical models, it permits a fast assessment of rockfall failure susceptibility. It can also be used to test whether blocks released from the sources reach any object, which permits the identification of zones that must be monitored or mitigated (Fig. 22).

Rockfall simulation based on a point cloud. The same grayscale is applied to three parameters: (1) the source area, where dark gray indicates the probability of reaching the road; (2) the number of impacts per square meter reaching the road (grayscale on the road); and (3) the block velocities indicated by the trajectories (modified after Noël et al. 2016)

Fragmentation at Source

The probability of propagation can be affected by rock fragmentation, which changes the rockfall hazard. Therefore, one aspect of source characterization is to estimate the potential fragmentation by breakage. Ruiz-Carulla et al. (2017) proposed a rockfall fractal fragmentation model (RFFM) that starts from the in situ block size distribution (IBSD) and provides the rockfall block size distribution (RBSD) (Fig. 23). It is based on three parameters: the probability that the initial volume breaks into smaller blocks, the survival rate of the blocks at each stage of breakage, and a factor that describes the fractal relationship between block sizes. By calibrating the three parameters, the authors were able to reproduce the observations made in the Pyrenees (Fig. 23).

Remarks

The previous sections were dedicated to assessing the failure hazard or the susceptibility to failure of rockfall sources using geomorphic, geotechnical or observational parameters. However, none of these methods is linked to the time evolution of stability. In recent years, improvements in observational techniques have permitted the monitoring of destabilization processes. Such monitoring is not yet operational, but it is starting to provide new results and is opening new avenues of research.

Processes Mainly Driven by Groundwater and Precipitation

Weathering

Weathering is known to be one of the main factors decreasing strength. The degree of weathering is used to qualify the mechanical properties; nevertheless, because rocks are diverse, it remains a qualitative and approximate descriptor, although it must reflect the degree of degradation from fresh rock by chemical or physical weathering (Gonzalez de Vallejo and Ferrer 2011). It usually follows a scale from I to VI, i.e. from fresh rock to soil. It is also an input of the GSI classification (Hoek, 1994) but remains a fuzzy concept (Fig. 24).

Weathering profile and associated geotechnical weathering grades (modified from Wyllie, 2018)

Implicit weathering effects or fatigue are often used to model strength degradation by changing the cohesion and/or friction angle (Eberhardt et al. 2004). Recent work shows that altered granite weakens when subjected to cycles of wetting at ambient temperature and drying at 105 °C, and that the weakening increases with the number of cycles (Chen et al. 2019).

Chemical weathering is recognized as an important weakening process for sedimentary rocks through the dissolution of minerals, but precipitation can also strengthen the rock by inducing cementation (Chigira and Oyama, 2000). Matsukura and Hirose (2000) showed experimentally that the weathering of rocks depends on the lithology and on the environment controlling the chemistry of water. The situation can be diverse. In samples of cataclastic material from fault zones, taken in increasingly weathered material approaching the La Clapière landslide (France), Lebourg et al. (2011) observed that the friction angle increased slightly and the cohesion decreased through the leaching of fine-grained material. In contrast, Jaboyedoff et al. (2004b) proposed that friction and cohesion may decrease in granite within the sliding surface through crushing and chemical weathering (feldspar dissolution and clay precipitation), inducing soil-like behavior. In some special environments, such as along coastal cliffs, seawater can induce salt crystallization, which decreases strength (Duperret et al. 2005).

Rainfall Effect

Rainfall has always been observed to affect rockfalls, but few papers have reported correlations. Nevertheless, most studies indicate a strong dependence of rockfall activity on rainfall intensity (Fig. 25). D’Amato et al. (2016) demonstrated for a limestone cliff near Grenoble (France) (Fig. 18) that the frequency of rockfall occurrence was 26 times higher than in “normal conditions” if the precipitation intensity was higher than 5 mm/h. This value is nearly four times greater than the effect of freezing and thawing in this environment. Based on an inventory of 245 rockfall events over 13 years in Hong Kong, Chau et al. (2003) showed that the threshold to trigger rockfall ranges from 150 to 200 mm of precipitation (Fig. 26) based on two days of antecedent rainfall statistics (with one day of antecedent rainfall, the number of events is not significant). However, significant rockfall can develop when 100 mm of rainfall occurs in one day. These authors conclude that the best indicator for rockfall triggering is the two-day antecedent precipitation because of its good correlation with event frequencies.
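The threshold logic reported for Hong Kong can be sketched as a simple screening of a daily rainfall series. The code below is only an illustration: it assumes that the two-day antecedent rainfall includes the current day and the previous one, uses the lower bound of the 150 to 200 mm range as the two-day threshold and 100 mm as the one-day threshold, and runs on an invented rainfall series. The function names are hypothetical.

```python
def antecedent_rainfall(daily_mm, days=2):
    """Rainfall summed over the last `days` days, ending on each day of the series."""
    totals = []
    for i in range(len(daily_mm)):
        start = max(0, i - days + 1)
        totals.append(sum(daily_mm[start:i + 1]))
    return totals

def flag_trigger_days(daily_mm, two_day_threshold=150.0, one_day_threshold=100.0):
    """True for each day on which either rainfall threshold is reached."""
    two_day = antecedent_rainfall(daily_mm, days=2)
    return [
        day >= one_day_threshold or total >= two_day_threshold
        for day, total in zip(daily_mm, two_day)
    ]

# Invented daily rainfall series in mm
rain = [10.0, 80.0, 90.0, 0.0, 120.0, 5.0]
print(antecedent_rainfall(rain))
print(flag_trigger_days(rain))
```

In this series the third day is flagged by the two-day total alone, and the fifth day by the one-day total alone, which shows why the two criteria are kept separate.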

Statistics of the average number of rockfalls per day with respect to the two antecedent days before the rockfall event (after Chau et al. 2003)

Two case studies of rockfall disasters affecting roads in northern Taiwan showed that rockfall triggering occurred when a rainfall intensity of 94.5 mm/h and a total of nearly 200 mm of precipitation were reached (Wei et al. 2014). In Hokkaido (Japan), rock toppling was triggered eleven days after the end of 10 days of precipitation totaling 290 mm. This indicates a delayed rise in the groundwater level caused by precipitation (Yamagishi, 2000). Helmstetter and Garambois (2010) showed that in rock slope instabilities such as La Clapière (France), rockfalls can be triggered by rainfall of more than 1 mm, with an effect that also occurs after a delay of a few days. This demonstrates the interplay of direct effects with infiltration and groundwater.

Rock Breathing

Groundwater is known to have a great effect on stability. One potential source of fatigue of a rock mass is the cyclic variation of the groundwater table (Jaboyedoff et al. 2009). Recent observations based on GB-InSAR have revealed rock breathing within the rock face located close to the active rock instability of Mannen in Norway (Figs. 27 and 28) (Rouyet et al. 2017). The results showed inflation (movement outward from the rock cliff) during the snowmelt period, i.e. June to mid-July, and deflation (movement toward the rock cliff) later during the summer. The cause is most likely water infiltration into the fracture network during the melting phase, which inflates the rock mass by hydrostatic pressure. In addition, in the lower part, the joints are potentially obstructed by ice, which can increase the pore pressures. The infiltration is obvious at the top of the cliff, where small lakes and siphons drain down into the rock mass. Once the melt ends, the permeability is high enough to allow rather fast drainage, and the rock mass retracts. This process may follow a hysteresis, meaning that the rock mass expands irreversibly and is progressively dislocated.

Processes Mainly Driven by Thermal Effects

Sunshine Effect and Associated Thermal Effects

It is well known that the sun can heat rock faces to rather high temperatures, but the real effect has been recognized and modeled only recently. In May 2000, Rochers de Valabres (France) suffered a 2000 m³ rockslide, with no apparent triggering factors or source of destabilization, by “thermally induced wedging” (Bakun-Mazor et al. 2013) (Fig. 6). Later investigations showed that the area underwent daily temperature fluctuations that induced daily cyclic movements of nearly 1 mm. Modeling based on an elastoplastic rheology showed a possible increase in the downward displacement over a few days that led to failure (Gunzburger et al. 2005).

Seasonal downward movement fluctuations have been shown above the Randa rockslide (Switzerland), which are correlated with ambient temperature (Jaboyedoff et al. 2004c; Gischig et al. 2011a). Observations and models show hysteresis of the displacements if the model includes slip weakening (Gischig et al. 2011a; 2011b). This model showed in that case study that stress variation significantly affected the slope up to 100 m depth.

A study in Yosemite Valley (USA) has shown that an exfoliation sheet bent by up to 1 cm at its center, over a length of 19 m, in summer as the daily temperature increased, with a delay of approximately 2 h (Collins and Stock, 2016). A seasonal variation was also demonstrated, together with a long-term increase in the bending deformation (Fig. 29). The temperature of the Yosemite exfoliation sheet (Fig. 30) differed by up to 24 °C between 8 AM and 11:40 AM (Guerin et al. 2020b).

a Monthly maximum aperture of the rock sheet in b showing the seasonal cycles and drift indicating the extension of the sheet (after Collins and Stock, 2016)

Currently, thermal imaging is emerging as a tool to monitor rock outcrops and to understand rock fatigue. For instance, Pappalardo et al. (2016) developed a cooling rate index (CRI) that corresponds to the ability to exchange heat with air. The CRI is correlated with the degree of fracturing, i.e. anticorrelated with the RQD. Furthermore, it appears that thermal imaging can be a tool to identify weaknesses (Pappalardo et al. 2016) and rock bridges (Guerin et al. 2019). A rock flake can show a hotter temperature at its surface in front of rock bridges (Fig. 31).

Thermal image of Boot Flake on 8 October indicating a hotter zone in the middle lower part that corresponds to the rock bridges (modified after Guerin et al. 2019)

Freezing and Thawing

The effect of freezing has long been proposed as a destabilizing factor for rock slopes by ice-blocked drainage (Wyllie, 2018). The freezing and thawing cycles generate expansion during phase changes, cycles of thermal contraction and expansion of ice, and a final expansion before melting (D’Amato et al. 2016). D’Amato et al. (2016) showed that rockfall frequency increased by seven times compared to normal conditions in the limestone cliffs above Grenoble (Fig. 18) and observed that most of these rockfall initiations occurred during the melting phase of the ice because of its expansion, in addition to the effect of water pressure caused by melted ice water (Fig. 32).

Ice in the Les Drus rock face visible after a rockfall (modified after Matasci et al. 2015b)

In high alpine environments, such as the Mont-Blanc Massif (France), it is well established that the active layer of the permafrost (the zone that can reach temperatures > 0 °C) is deeper during hot periods, leading to an increase in rockfall activity triggered by ice melting, as in 2003 and 2015 (Ravanel et al. 2017). Outside these exceptional periods, global warming continues to degrade permafrost and trigger rockfalls (Ravanel et al. 2010).

Surface crack monitoring at nearly 3000 m a.s.l. in the Alps demonstrated that freezing and thawing cycles, within the active layer or within the surface freeze-thaw zone where there is no permafrost, lead to crack opening by frost wedging, i.e. the freezing of snowmelt water, rainwater, etc. in cracks. The thickness of the active layer or of the freeze-thaw layer plays an important role in the volume of rockfall initiation (Ravanel et al. 2017; Matsuoka and Sakai, 1999). The erosion rate measured on a SW-facing rock wall at nearly 3000 m a.s.l. in a non-permafrost zone reached 6.5 mm/year (Kenner et al. 2011).

Ice is present before failure: it acts as a cement that maintains stability while the rock bridges decrease in size.

Rock Stability Degradation

Unstable rock masses are attached to the stable ground either by rock bridges or by friction. As they approach failure, these links are broken or weakened. Seismometers installed on the unstable rock mass allow its response to seismic noise to be monitored, which provides information about its stiffness. Lévy et al. (2010) showed that the resonance frequency of the first mode of a limestone rock column of 21,000 m³ decreased with time. The frequency variations were correlated with air temperature and were mostly reversible. The freezing and thawing cycles led to a decrease in the resonance frequency that corresponded to irreversible damage. Note that during the freezing period, the frequency can increase because of the stronger link between the rock mass and its substratum. Rainfall can decrease the frequency because the weight of the column increases or because the joints change their mechanical properties with water infiltration (no water pressure is expected because the fractures prevent any pressure build-up).
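The link between resonance frequency and stiffness can be made explicit with a single-degree-of-freedom idealization. This is an assumption made here for illustration, not the model used by Lévy et al. (2010): the column of mass M is attached to the stable massif by a contact of stiffness K, and the symbols f, K and M are introduced only for this sketch.

```latex
f = \frac{1}{2\pi}\sqrt{\frac{K}{M}}
\quad\Longrightarrow\quad
\frac{K_2}{K_1} = \left(\frac{f_2}{f_1}\right)^{2}
\qquad (M \text{ constant})
```

Under this idealization, a 10% drop in resonance frequency at constant mass corresponds to a stiffness reduction of about 19%, while an increase in mass at constant stiffness (for example by water uptake) also lowers the frequency.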

Short-Term Rockfall Forecast

The previous sections were dedicated to the long-term rockfall failure hazard; here we look at short-term hazard assessment, in other words, the forecast of the failure time. Traditional monitoring techniques measure the displacements of a few specific points. Currently, it is possible to monitor failure in 3D and to make a forecast over a few days. In 2006, monitoring using the LiDAR technique allowed us to forecast the collapse of a 170,000 m³ rock mass that was moving at 1.25 m/day in the Eiger area (Switzerland) (Oppikofer et al. 2008). GB-InSAR can also be used to monitor rockfall initiation (Intrieri et al. 2016), but the resolution is lower. Blocks in rock faces in Catalonia have failed after clearly showing an exponential acceleration (Royán et al. 2014). Similar behavior has been observed in the final failure stage of a block within the Séchilienne rockslide, where the area was scanned every 30 min by a terrestrial laser scanner (Fig. 33). The toppling movement was easily identified by computing the difference between point clouds (Kromer et al. 2017).

Example of the toppling failure of an 80 m³ block, showing the pre-failure movements. The analysis was based on point clouds acquired every 4 h (from Kromer et al. 2017). a Block location in the Séchilienne rockslide (France). b 3D projection showing the deformation along the local normal (color scale). c Time series of the displacement of points A, B and C located on the moving block; point D is stable. d Velocities of points A, B and C averaged over 24 h

Such a failure phase for small volumes, as opposed to large rock instabilities, is still under investigation because it does not seem to follow the inverse velocity model (Fukuzono 1990; Voight, 1989). Rose and Hungr (2007) pointed out that limited volumes, unlike deep-seated rockslides, do not follow the inverse velocity model.
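For reference, the inverse velocity model discussed above forecasts the failure time by extrapolating the inverse of the displacement velocity to zero. The sketch below implements only the linear variant, fitted by ordinary least squares; the function names and the synthetic velocity series are hypothetical, and, as noted above, small blocks may not follow this model.

```python
def linear_fit(x, y):
    """Ordinary least-squares slope and intercept of y against x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def forecast_failure_time(times, velocities):
    """Time at which the fitted inverse velocity reaches zero.

    Returns None when the inverse velocity is not decreasing,
    i.e. when the block is not accelerating.
    """
    inverse = [1.0 / v for v in velocities]
    slope, intercept = linear_fit(times, inverse)
    if slope >= 0.0:
        return None
    return -intercept / slope

# Synthetic series: velocity grows as 1 / (0.5 * (10 - t)), failure at t = 10 days
times = [0.0, 2.0, 4.0, 6.0, 8.0]
velocities = [1.0 / (0.5 * (10.0 - t)) for t in times]
print(f"forecast failure time: {forecast_failure_time(times, velocities):.1f} days")
```

In practice the velocities would first be smoothed, for example over 24 h as in Fig. 33, because the inverse of a noisy velocity is very sensitive to small values.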

Prior to failure, the seismicity shows an increase in the rate of seismic events and signal energy (Amitrano et al. 2005). The time of detachment shows a clear increase in seismic signal at relatively low frequency (Le Roy et al. 2019).

These studies are also fundamental because they can be analyzed to understand which patterns are leading to failure and which patterns are not.

New Techniques

Various attempts are in progress to assess rockfall failure susceptibility and hazards with new techniques. Some attempts have been made using fuzzy logic based on altitude difference, number of discontinuities, number of wedges, number of potential slides and known source areas (Aksoy and Ercanoglu 2006). Different machine learning methods have been applied to identify rockfall source areas, with rather good results (Fanos et al. 2018). They were based on topographic indexes and other GIS data types. Losasso and Sdao (2018) used a neural network to assess rockfall source susceptibility based on lithology, DEM, slope angles, land use, etc., with the former scars used to train the network.

The drawbacks of these methods are that they tend to favor the most influential factors, such as slope angle, and that they are black boxes. However, it will be very interesting to use a large database with a large amount of information to try to extract the main trends of rockfall causes and to know the rockfall frequency in different contexts.

Discussion and Conclusion

In most cases, the rockfall hazard assessment is based on rockfall propagation Pp, and only a few methods are dedicated to the failure frequency λ. When λ is quantified, it is usually by empirical methods, semi-empirical methods or oversimplified geomechanical models leading to a susceptibility scale. In the first part, this paper presented the main concepts and tools used to assess the hazard of rock failure, but we did not integrate the time dependence of the stability, except for the water table changes and earthquake accelerations.

There are no real time-dependent models of stability that link it to the degradation of strength. Furthermore, there is a need to consider more complex mechanisms of failure, mainly linked to the progressive failure of rock bridges (Lévy et al. 2010; Guerin et al. 2019). However, this cannot be done without improving our knowledge of the external factors that degrade the strength of rock masses or discontinuities (Chen et al. 2019). The approach will be very different for a site-specific study than for a regional one.

It is possible to extract more information from real 3D surface imaging than is currently done. It should allow us to characterize the degree of fracturing more precisely, which is beyond the scope of this paper. It can go down to the microtopography, with a portable laser scanner used to characterize the roughness of joints in the field and probably to detect its evolution under weathering effects.

As computing capacity increases, models based on simulations of discrete fracture networks (DFNs) (Grenon and Hadjigeorgiou, 2008), including high-resolution 3D topography coupled with time-dependent stability evolution, will certainly be the next step to assess failure hazards or susceptibility based on the principle of Park and West (2001). The effects of groundwater and earthquakes must be better assessed. However, in any case, this will remain linked to traditional field work, with systems such as the GSI (Hoek, 1994), others presented here, or those that are being newly developed (Riquelme et al. 2016).

The inventory and its statistics must be developed by improving the probabilistic models (De Biagi et al. 2017). There are many drawbacks because power laws present some limitations, such as volume limits, that must be addressed. Another issue is to link the fracturing of the sources with the real volume involved in one event and the number of blocks that will be generated (Ruiz-Carulla et al. 2016).

The biggest challenges are linked to the quantification of the influence of external factors that lead to strength degradation. We now know that fatigue can be caused by cyclic slope movements, including hysteresis, linked to groundwater table changes, thermal effects such as insolation, freezing and thawing, and earthquakes. In addition, until now, rainfall effects have been seen only as a triggering factor and not as a degrading factor, which must also be assessed in the future. This can be summarized as the degradation of joint and rock mass strengths and the progressive failure of rock bridges (Ruiz-Carulla et al. 2016).

One way to better understand this process is to perform more back analyses of events, carefully reconstructing past topography and rock bridges and including the effect of external factors such as insolation, water circulation, freezing and thawing, and weathering evolution. This additional information will permit us to conceptualize Bayesian probabilistic models and to have enough inputs to use machine learning methods when enough data are available.

References

Abellán A, Oppikofer T, Jaboyedoff M, Rosser NJ, Lim M, Lato M (2014) State of science: terrestrial laser scanner on rock slopes instabilities. Earth Surf Process Land 39(1):80–97. https://doi.org/10.1002/esp.3493

Agliardi F, Crosta GB (2003) High resolution three-dimensional numerical modelling of rockfalls. Int J Rock Mech Min Sci 40(4):455–471

Aksoy H, Ercanoglu M (2006) Determination of the rockfall source in an urban settlement area by using a rule-based fuzzy evaluation. Nat Hazards Earth Syst Sci 6(6):941–954

Amitrano D, Grasso JR, Senfaute G (2005) Seismic precursory patterns before a cliff collapse and critical point phenomena. Geophys Res Lett 32(8)

Baillifard F, Jaboyedoff M, Sartori M (2003) Rockfall hazard mapping along a mountainous road in Switzerland using a GIS-based parameter rating approach. Nat Hazards Earth Syst Sci 3:435–442. https://doi.org/10.5194/nhess-3-435-2003

Bakun-Mazor D, Hatzor YH, Glaser SD, Santamarina JC (2013) Thermally vs. seismically induced block displacements in Masada rock slopes. Int J Rock Mech Min Sci 61:196–211

Barton N, Lien R, Lunde J (1974) Engineering classification of rock masses for the design of tunnel support. Rock Mech 6(4):189–236

Bieniawski ZT (1973) Engineering classification of jointed rock masses. Trans S Afr Inst Civ Eng 15:335–344

Bieniawski ZT (1993) Classification of rock masses for engineering: the RMR system and future trends. Compr Rock Eng 3:553–573

Brideau M-A, Stead D, Roots C, Orwin J (2007) Geomorphology and engineering geology of a landslide in ultramafic rocks, Dawson City, Yukon. Eng Geol 89:171–194

Budetta P (2004) Assessment of rockfall risk along roads. Nat Hazards Earth Syst Sci 4:71–81

Budetta P, Nappi M (2013) Comparison between qualitative rockfall risk rating systems for a road affected by high traffic intensity. Nat Hazards Earth Syst Sci 13(6):1643–1653

Budetta P, Santo A, Vivenzio F (2008) Landslide hazard mapping along the coastline of the Cilento region (Italy) by means of a GIS-based parameter rating approach. Geomorphology 94(3):340–352

Bull WB, Brandon MT (1998) Lichen dating of earthquake-generated regional rockfall events, Southern Alps, New Zealand. Geol Soc Am Bull 110:60–84

Cancelli A, Crosta G (1993) Hazard and risk assessment in rockfall prone areas. In: Skipp BO (ed) Risk and reliability in ground engineering. Thomas Telford, London, pp 177–190

Carter BJ, Lajtai EZ (1992) Rock slope stability and distributed joint systems. Can Geotech J 29(1):53–60

Chau KT, Wong RHC, Liu J, Lee CF (2003) Rockfall hazard analysis for Hong Kong based on rockfall inventory. Rock Mech Rock Eng 36(5):383–408

Chen X, He P, Qin Z (2019) Strength weakening and energy mechanism of rocks subjected to wet-dry cycles. Geotech Geol Eng 37(5):3915–3923

Chigira M, Oyama T (2000) Mechanism and effect of chemical weathering of sedimentary rocks. Eng Geol 55(1):3–14

Coe JA, Harp EL (2007) Influence of tectonic folding on rockfall susceptibility, American Fork Canyon, Utah, USA. Nat Hazards Earth Syst Sci 7(1):1–14

Collins BD, Stock GM (2016) Rockfall triggering by cyclic thermal stressing of exfoliation fractures. Nat Geosci 9(5):395–400

Copons R, Vilaplana JM (2008) Rockfall susceptibility zoning at a large scale: from geomorphological inventory to preliminary land use planning. Eng Geol 102:142–151

Corò D, Galgaro A, Fontana A, Carton A (2015) A regional rockfall database: the Eastern Alps test site. Environ Earth Sci 74(2):1731–1742

Corona C, Trappmann D, Stoffel M (2013) Parameterization of rockfall source areas and magnitudes with ecological recorders: when disturbances in trees serve the calibration and validation of simulation runs. Geomorphology 202:33–42

Crosta GB, Agliardi F, Frattini P, Lari S (2015) Key issues in rock fall modeling, hazard and risk assessment for rockfall protection. Engineering Geology for Society and Territory—vol 2. Springer International Publishing, Cham, pp 43–58

D’Amato J, Hantz D, Guerin A, Jaboyedoff M, Baillet L, Mariscal A (2016) Influence of meteorological factors on rockfall occurrence in a middle mountain limestone cliff. Nat Hazards Earth Syst Sci 16(3):719–735

De Biagi V, Napoli ML, Barbero M, Peila D (2017) Estimation of the return period of rockfall blocks according to their size. Nat Hazards Earth Syst Sci 17(1):103–113

Deere DU (1963) Technical description of rock cores for engineering purposes. Felsmechanik und Ingenieurgeologie (Rock Mechanics and Engineering Geology) 1(1):16–22

Dershowitz WS, Einstein HH (1988) Characterizing rock joint geometry with joint system models. Rock Mech Rock Eng 21:21–51

Domènech G, Corominas J, Mavrouli O, Merchel S, Abellán A, Pavetich S, Rugel G (2018) Calculation of the rockwall recession rate of a limestone cliff, affected by rockfalls, using cosmogenic chlorine-36. Case study of the Montsec Range (Eastern Pyrenees, Spain). Geomorphology 306:325–335

Duperret A, Taibi S, Mortimore RN, Daigneault M (2005) Effect of groundwater and sea weathering cycles on the strength of chalk rock from unstable coastal cliffs of NW France. Eng Geol 78(3):321–343

Dussauge C, Grasso JR, Helmstetter A (2003) Statistical analysis of rockfall volume distributions: implications for rockfall dynamics. J Geophys Res: Solid Earth 108(B6)

Dussauge-Peisser C, Helmstetter A, Grasso J-R, Hantz D, Desvarreux P, Jeannin M, Giraud A (2002) Probabilistic approach to rock fall hazard assessment: potential of historical data analysis. Nat Hazards Earth Syst Sci 2:15–26. https://doi.org/10.5194/nhess-2-15-2002

Eberhardt E, Stead D, Coggan JS (2004) Numerical analysis of initiation and progressive failure in natural rock slopes—the 1991 Randa rockslide. Int J Rock Mech Min Sci 41(1):69–87

Fanos AM, Pradhan B, Mansor S, Yusoff ZM, Abdullah AFb (2018) A hybrid model using machine learning methods and GIS for potential rockfall source identification from airborne laser scanning data. Landslides 15(9):1833–1850

Farvacque M, Lopez-Saez J, Corona C, Toe D, Bourrier F, Eckert N (2019) How is rockfall risk impacted by land-use and land-cover changes? Insights from the French Alps. Global Planet Change 174:138–152

Fell R, Corominas J, Bonnard C, Cascini L, Leroi E, Savage WZ (2008) Guidelines for landslide susceptibility, hazard and risk zoning for land use planning. Eng Geol 102(3):85–98

Fernandez-Hernández M, Paredes C, Castedo R, Llorente M, de la Vega-Panizo R (2012) Rockfall detachment susceptibility map in El Hierro Island, Canary Islands, Spain. Nat Hazards 64(2):1247–1271

Ferrari F, Giacomini A, Thoeni K (2016) Qualitative rockfall hazard assessment: a comprehensive review of current practices. Rock Mech Rock Eng 49(7):2865–2922

Frattini P, Crosta G, Carrara A, Agliardi F (2008) Assessment of rockfall susceptibility by integrating statistical and physically-based approaches. Geomorphology 94(3):419–437

Frayssines M, Hantz D (2006) Failure mechanisms and triggering factors in calcareous cliffs of the Subalpine Ranges (French Alps). Eng Geol 86(4):256–270

Fukuzono T (1990) Recent studies on time prediction of slope failure. Landslide News 4:9–12

Gigli G, Casagli N (2011) Semi-automatic extraction of rock mass structural data from high resolution LIDAR point clouds. Int J Rock Mech Min Sci 48(2):187–198

Gigli G, Morelli S, Fornera S, Casagli N (2014) Terrestrial laser scanner and geomechanical surveys for the rapid evaluation of rock fall susceptibility scenarios. Landslides 11(1):1–14

Gischig VS, Moore JR, Evans KF, Amann F, Loew S (2011a) Thermomechanical forcing of deep rock slope deformation: 1. Conceptual study of a simplified slope. J Geophys Res: Earth Surf 116(F4)

Gischig VS, Moore JR, Evans KF, Amann F, Loew S (2011b) Thermomechanical forcing of deep rock slope deformation: 2. The Randa rock slope instability. J Geophys Res: Earth Surf 116(F4)

Gokceoglu C, Sonmez H, Ercanoglu M (2000) Discontinuity controlled probabilistic slope failure risk maps of the Altindag (settlement) region in Turkey. Eng Geol 55:277–296

González de Vallejo L, Ferrer M (2011) Geological engineering. CRC Press/Balkema, p 678

Grenon M, Hadjigeorgiou J (2008) A design methodology for rock slopes susceptible to wedge failure using fracture system modelling. Eng Geol 96(1–2):78–93

Grenon M, Matasci B, Jaboyedoff M, Stock GM (2014) Discrete fracture network modelling using Coltop3D for rockfall potential assessment at Glacier Point, Yosemite Valley. In: Proceedings of DFNE 2014, the 1st international conference on discrete fracture network engineering, Vancouver

Gruner U (2008) Climatic and meteorological influences on rockfall and rockslides (“Bergsturz”). In: Protection of populated territories from floods, debris flow, mass movements and avalanches, 26–30 May 2008, 147–158

Günther A (2003) SLOPEMAP: programs for automated mapping of geometrical and kinematical properties of hard rock hill slopes. Comput Geosci 29(7):865–875

Günther A, Carstensen A, Pohl W (2004) Automated sliding susceptibility mapping of rock slopes. Nat Hazards Earth Syst Sci 4:95–102. https://doi.org/10.5194/nhess-4-95-2004

Guérin A, Hantz D, Rossetti J-P, Jaboyedoff M (2014) Brief communication “Estimating rockfall frequency in a mountain limestone cliff using terrestrial laser scanner”. Nat Hazards Earth Syst Sci Discuss 2:123–135. https://doi.org/10.5194/nhessd-2-123-2014

Guérin A, Jaboyedoff M, Collins BD, Derron M-H, Stock GM, Matasci B, Boesiger M, Lefeuvre C, Podladchikov YY (2019) Detection of rock bridges by infrared thermal imaging and modeling. Sci Rep 9(1):13138

Guerin A, Stock GM, Radue MJ, Jaboyedoff M, Collins BD, Matasci B, Avdievitch N, Derron M-H (2020a) Quantifying 40 years of rockfall activity in Yosemite Valley with historical Structure-from-Motion photogrammetry and terrestrial laser scanning. Geomorphology 356:107069

Guerin A, Jaboyedoff M, Collins BD, Stock GM, Derron M-H, Abellán A, Matasci B (2020b) Remote thermal detection of exfoliation sheet deformation. Landslides. https://doi.org/10.1007/s10346-020-01524-1

Gunzburger Y, Merrien-Soukatchoff V, Guglielmi Y (2005) Influence of daily surface temperature fluctuations on rock slope stability: case study of the Rochers de Valabres slope (France). Int J Rock Mech Min Sci 42(3):331–349

Hantz D (2011) Quantitative assessment of diffuse rock fall hazard along a cliff foot. Nat Hazards Earth Syst Sci 11(5):1303–1309

Hantz D, Vengeon JM, Dussauge-Peisser C (2003a) An historical, geomechanical and probabilistic approach to rock-fall hazard assessment. Nat Hazards Earth Syst Sci 3:693–701. https://doi.org/10.5194/nhess-3-693-2003

Hantz D, Dussauge-Peisser C, Jeannin M, Vengeon JM (2003b) Rock fall hazard assessment: from qualitative to quantitative failure probability. In: Int conf on Fast Slope Movements, Naples, 263–267

Hantz D, Ventroux Q, Rossetti J-P, Berger F (2016) A new approach of diffuse rockfall hazard. In: Landslides and engineered slopes. Experience, theory and practice. CRC Press, Napoli, Italy, pp 1531–1535

Harp EL, Noble MA (1993) An engineering rock classification to evaluate seismic rock-fall susceptibility and its application to the Wasatch Front. Bull Assoc Eng Geol 30(3):293–319

Helmstetter A, Garambois S (2010) Seismic monitoring of Séchilienne rockslide (French Alps): analysis of seismic signals and their correlation with rainfalls. J Geophys Res 115(F3)

Hoek E (1994) Strength of rock and rock masses. ISRM News J 2(2):4–16

Hoek E, Bray JW (1981) Rock slope engineering. The Institution of Mining and Metallurgy, London, p 358

Hoek E, Brown ET (1997) Practical estimates of rock mass strength. Int J Rock Mech Min Sci Geomech Abstr 34(8):1165–1186

Hudson JA (1992) Rock engineering systems: theory and practice. Ellis Horwood, London, p 185

Hungr O, Evans SG, Hazzard J (1999) Magnitude and frequency of rock falls along the main transportation corridors of southwestern British Columbia. Can Geotech J 36:224–238

Hungr O, Leroueil S, Picarelli L (2014) The Varnes classification of landslide types, an update. Landslides 11(2):167–194

Intrieri E, Gigli G, Lombardi L, Raspini F, Salvatici T, Bertolini G (2016) Integration of ground-based interferometry and terrestrial laser scanning for rockslide and rockfall monitoring. Rend Online Soc Geol Ital 41:243–246

Jaboyedoff M, Labiouse V (2003) Preliminary assessment of rockfall hazard based on GIS data. In: 10th international congress on rock mechanics ISRM 2003: technology roadmap for rock mechanics. Johannesburg, South Africa, pp 575–578

Jaboyedoff M, Derron MH (2020) Landslide analysis using laser scanner. In: Tarolli P (ed) Remote Sensing of Geomorphology. Elsevier

Jaboyedoff M, Baillifard F, Hantz D, Heidenreich B, Mazzoccola D (2001) Terminologie. In: Carere K, Ratto S, Zanolini FE (eds) Prévention des mouvements de versants et des instabilités de falaises, pp 48–57

Jaboyedoff M, Derron MH, Locat J, Michoud C (2021) Traité Mouvements Gravitaires. PPUR

Jaboyedoff M, Metzger R, Oppikofer T, Couture R, Derron MH, Locat J, Turmel D (2007) New insight techniques to analyze rock-slope relief using DEM and 3D-imaging cloud points: COLTOP-3D software. In: Eberhardt E, Stead D, Morrison T (eds) Rock mechanics: meeting society’s challenges and demands, vol 1. Taylor & Francis, pp 61–68

Jaboyedoff M, Baillifard F, Philippossian F, Rouiller J-D (2004a) Assessing fracture occurrence using “weighted fracturing density”: a step towards estimating rock instability hazard. Nat Hazards Earth Syst Sci 4:83–93. https://doi.org/10.5194/nhess-4-83-2004

Jaboyedoff M, Baillifard F, Bardou E, Girod F (2004b) Weathering, cycles of saturation-unsaturation, and strain effects as principal processes for rock mass destabilization. Q J Eng Geol Hydrogeol 37:95–103

Jaboyedoff M, Ornstein P, Rouiller JD (2004c) Design of a geodetic database and associated tools for monitoring rock-slope movements: the example of the top of Randa rockfall scar. Nat Hazards Earth Syst Sci 4(2):187–196

Jaboyedoff M, Baillifard F, Derron MH, Couture R, Locat J, Locat P (2005) Switzerland modular and evolving rock slope hazard assessment methods. In: Senneset K, Flaate KA, Larsen J (eds) Landslide and avalanches. ICFL

Jaboyedoff M, Couture R, Locat P (2009) Structural analysis of Turtle Mountain (Alberta) using digital elevation model: Toward a progressive failure. Geomorphology 103(1):5–16

Jennings JE (1972) An approach to the stability of rock slopes based on the theory of limiting equilibrium with a material exhibiting anisotropic shear strength. In: Cording EJ (ed) Stability of rock slopes. Proceedings of the 13th US symposium on rock mechanics, Urbana, Illinois. New York

Keefer DK (1993) The susceptibility of rock slopes to earthquake-induced failure. Bull Assoc Eng Geol 30(3):353–361

Kenner R, Phillips M, Danioth C, Denier C, Thee P, Zgraggen A (2011) Investigation of rock and ice loss in a recently deglaciated mountain rock wall using terrestrial laser scanning: Gemsstock, Swiss Alps. Cold Reg Sci Technol 67:157–164

Kromer R, Walton G, Gray B, Lato M, Group R (2019) Development and optimization of an automated fixed-location time lapse photogrammetric rock slope monitoring system. Remote Sens 11(16):18

Kromer RA, Abellán A, Hutchinson DJ, Lato M, Chanut M-A, Dubois L, Jaboyedoff M (2017) Automated terrestrial laser scanning with near-real-time change detection – monitoring of the Séchilienne landslide. Earth Surf Dyn 5(2):293–310

Le Roy G, Helmstetter A, Amitrano D, Guyoton F, Le Roux-Mallouf R (2019) Seismic analysis of the detachment and impact phases of a rockfall and application for estimating rockfall volume and free-fall height. J Geophys Res: Earth Surf 124(11):2602–2622

Lebourg T, Mickael H, Hervé J, Samyr EBB, Thomas B, Swann Z, Emmanuel T, Maurin V (2011) Temporal evolution of weathered cataclastic material in gravitational faults of the La Clapiere deep-seated landslide by mechanical approach. Landslides 8(2):241–252

Leroueil S, Locat J (1998) Slope movements—geotechnical characterization, risk assessment and mitigation. In: Maric B, Lisac L, Szavits-Nossan A (eds) Geotechnical Hazards. Balkema, Rotterdam, pp 95–106

Lévy C, Baillet L, Jongmans D, Mourot P, Hantz D (2010) Dynamic response of the Chamousset rock column (Western Alps, France). J Geophys Res 115(F4)