Abstract

In this paper, measures of mutual independence of several vector random processes are defined. Based on these measures, permutation tests of mutual independence of these random processes are also given. The properties of the described methods are illustrated by simulation studies for univariate and multivariate processes.

1 Introduction

Many processes currently used in different fields of science and research lead to random observations that can be analyzed as curves. We can also find a large amount of data for which it would be more appropriate to use some interpolation techniques and consider them as functional data. This approach turns out to be essential when data have been observed at different time intervals.

Earlier, Górecki et al. (2017, 2020) showed how to use commonly known measures of correlation for two sets of variables: \(\rho V\) coefficient (Escoufier 1973), distance correlation coefficient (\({{\,\mathrm{dCorr}\,}}\)) (Székely et al. 2007), and HSIC coefficient (Gretton et al. 2005) for multivariate functional data.

In this paper, using \(\rho V\) and \({{\,\mathrm{dCorr}\,}}\) coefficients, we define measures of mutual independence of vector random processes whose realizations are multidimensional functional data. Based on these measures, permutation tests of mutual independence of vector random processes \(\pmb {X}_1,\ldots ,\pmb {X}_K\), \(K\ge 2\), \(\pmb {X}_i\in {L}_2^{p_i}(I)\), where \(L_2(I)\) is a Hilbert space of square-integrable functions on the interval I, \(i=1,\ldots ,K\) are also considered.

The rest of this paper is organized as follows. We first review the concept of transformation of discrete data to multivariate functional data (Sect. 2). Section 3 contains the functional version of the \(\rho V\) and \({{\,\mathrm{dCorr}\,}}\) coefficients. Section 4 is devoted to measures of mutual independence of vector random processes and permutation tests of mutual independence associated with these measures. Section 5 contains the results of our simulation experiments.

2 Functional Data

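Let us assume that \(\pmb {X}=(X_1,X_2,\ldots ,X_p)^{\top }\in L_2^p(I)\) is a p-dimensional random process, where \(L_2(I)\) is the Hilbert space of square-integrable functions on the interval I. Moreover, assume that the kth component of the vector \(\pmb {X}\) can be represented by a finite number of orthonormal basis functions \(\{\varphi _b\}\) of the space \(L_2(I)\):

\[X_k(t)=\sum _{b=0}^{B_k}\alpha _{kb}\varphi _b(t),\quad t\in I,\ k=1,\ldots ,p.\]

Let \(\pmb {\alpha }=(\alpha _{10},\ldots ,\alpha _{1B_1},\ldots ,\alpha _{p0},\ldots ,\alpha _{pB_p})^{\top }\) and

\[\pmb {\Phi }(t)=\begin{bmatrix}\pmb {\varphi }_{1}^{\top }(t) & \pmb {0} & \cdots & \pmb {0}\\ \pmb {0} & \pmb {\varphi }_{2}^{\top }(t) & \cdots & \pmb {0}\\ \vdots & \vdots & \ddots & \vdots \\ \pmb {0} & \pmb {0} & \cdots & \pmb {\varphi }_{p}^{\top }(t)\end{bmatrix},\]

where \(\pmb {\varphi }_{k}(t)=(\varphi _{0}(t),\ldots ,\varphi _{B_k}(t))^{\top }\), \(k=1,\ldots ,p\).

Using the above matrix notation, process \(\pmb {X}\) can be represented as

\[\pmb {X}(t)=\pmb {\Phi }(t)\pmb {\alpha }. \tag{1}\]

This means that the realizations of the process \(\pmb {X}\) lie in a finite-dimensional subspace of \(L_2^p(I)\).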

We can estimate the vector \(\pmb {\alpha }\) on the basis of n independent realizations \(\pmb {x}_1,\pmb {x}_2,\ldots ,\pmb {x}_n\) of the random process \(\pmb {X}\) (functional data).

Typically, data are recorded at discrete moments in time. Let \(x_{kj}\) denote an observed value of the feature \(X_k\), \(k=1,2,\ldots ,p\), at the jth time point \(t_j\), where \(j=1,2,\ldots ,J\). Then our data consist of the pJ pairs \((t_{j},x_{kj})\). These discrete data can be smoothed by continuous functions \(x_k\) defined on I, where I is a compact set such that \(t_j \in I\) for \(j=1,\ldots ,J\).

Details of the process of transformation of discrete data to functional data can be found in Ramsay and Silverman (2005), Horváth and Kokoszka (2012), or in Górecki et al. (2014).
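The smoothing step described above can be sketched as an ordinary least-squares fit of the basis coefficients for a single curve. This is only an illustration under stated assumptions: the helper names `fourier_basis` and `estimate_coefficients` are hypothetical, the interval is taken to be [0, 1], and the Fourier basis is ordered as constant, sine, cosine.

```python
import numpy as np

def fourier_basis(t, n_basis, period=1.0):
    """Evaluate an orthonormal Fourier basis on [0, period] at the points t.

    Returns a (len(t), n_basis) matrix whose columns are the constant
    function followed by sine/cosine pairs of increasing frequency.
    """
    t = np.asarray(t, dtype=float)
    cols = [np.full_like(t, 1.0 / np.sqrt(period))]
    m = 1
    while len(cols) < n_basis:
        w = 2.0 * np.pi * m * t / period
        cols.append(np.sqrt(2.0 / period) * np.sin(w))
        if len(cols) < n_basis:
            cols.append(np.sqrt(2.0 / period) * np.cos(w))
        m += 1
    return np.column_stack(cols)

def estimate_coefficients(t, x, n_basis):
    """Least-squares estimate of the basis coefficients of one observed curve."""
    design = fourier_basis(t, n_basis)
    coef, *_ = np.linalg.lstsq(design, x, rcond=None)
    return coef

# Usage: recover the coefficients of a noisy curve observed at 100 time points.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 100, endpoint=False)
true_coef = np.array([0.5, 1.0, -2.0])
x = fourier_basis(t, 3) @ true_coef + rng.normal(scale=0.05, size=t.size)
alpha_hat = estimate_coefficients(t, x, n_basis=3)
```

For a p-dimensional process, the same fit would be repeated for each component and the estimated coefficients stacked into one vector per realization.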

3 \(K=2\) Case

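For two random vectors \(\pmb {X}\in {R}^p\) and \(\pmb {Y}\in {R}^q\), Escoufier (1973) introduced the correlation coefficient \(\rho V\) as a nonnegative number given by

\[\rho V_{\pmb {X},\pmb {Y}}=\frac{\Vert \pmb {\Sigma }_{\pmb {X}\pmb {Y}}\Vert _F}{\sqrt{\Vert \pmb {\Sigma }_{\pmb {X}\pmb {X}}\Vert _F\,\Vert \pmb {\Sigma }_{\pmb {Y}\pmb {Y}}\Vert _F}},\]

where \(\Vert \cdot \Vert _F\) denotes the Frobenius norm and

\[\pmb {\Sigma }_{\pmb {X}\pmb {Y}}={{\,\mathrm{E}\,}}\left[ (\pmb {X}-{{\,\mathrm{E}\,}}\pmb {X})(\pmb {Y}-{{\,\mathrm{E}\,}}\pmb {Y})^{\top }\right] \]

is the covariance matrix of vectors \(\pmb {X}\) and \(\pmb {Y}\), with \(\pmb {\Sigma }_{\pmb {X}\pmb {X}}\) and \(\pmb {\Sigma }_{\pmb {Y}\pmb {Y}}\) defined analogously.

The correlation coefficient \(\rho V\) has the following properties: \( \rho V_{\pmb {X},\pmb {Y}}=0\) if and only if random vectors \(\pmb {X}\) and \(\pmb {Y}\) are uncorrelated. Moreover, if the joint distribution of \(\pmb {X}\) and \(\pmb {Y}\) is a \((p+q)\)-dimensional normal distribution, then \( \rho V_{\pmb {X},\pmb {Y}}=0\) if and only if random vectors \(\pmb {X}\) and \(\pmb {Y}\) are independent.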

We may extend this coefficient to two random processes \(\pmb {X}\in L_2^p(I)\) and \(\pmb {Y}\in L_2^q(I)\) assuming that

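Moreover, if processes \(\pmb {X}\) and \(\pmb {Y}\) have the form

\[\pmb {X}(t)=\pmb {\Phi }_1(t)\pmb {\alpha },\quad \pmb {Y}(t)=\pmb {\Phi }_2(t)\pmb {\beta }, \tag{2}\]

then (Górecki et al. 2017)

\[\rho V_{\pmb {X},\pmb {Y}}=\rho V_{\pmb {\alpha },\pmb {\beta }}.\]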

In this case, the problem of testing the correlation of processes \(\pmb {X}\) and \(\pmb {Y}\) is equivalent to the problem of testing whether the coefficient \(\rho V_{\pmb {\alpha },\pmb {\beta }}\) is equal to zero.
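A sample version of the coefficient can be computed directly from the estimated basis coefficients. The sketch below assumes the Frobenius-norm form of Escoufier's coefficient, that is, the norm of the cross-covariance matrix divided by the square root of the product of the norms of the two covariance matrices; the function name `rho_v` and the simulated inputs are illustrative only.

```python
import numpy as np

def rho_v(a, b):
    """Sample rhoV coefficient between a (n x p) and b (n x q).

    Rows are observations. Assumes the Frobenius-norm form
    ||S_ab||_F / sqrt(||S_aa||_F * ||S_bb||_F).
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n = a.shape[0]
    ac = a - a.mean(axis=0)
    bc = b - b.mean(axis=0)
    s_ab = ac.T @ bc / (n - 1)
    s_aa = ac.T @ ac / (n - 1)
    s_bb = bc.T @ bc / (n - 1)
    num = np.linalg.norm(s_ab, "fro")
    den = np.sqrt(np.linalg.norm(s_aa, "fro") * np.linalg.norm(s_bb, "fro"))
    return num / den

# Usage: linear dependence gives a large value, independence a small one.
rng = np.random.default_rng(1)
a = rng.standard_normal((2000, 3))
b_lin = a[:, :2] + 0.1 * rng.standard_normal((2000, 2))
b_ind = rng.standard_normal((2000, 2))
rv_lin = rho_v(a, b_lin)
rv_ind = rho_v(a, b_ind)
```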

Note that the coefficient \(\rho V_{\pmb {X},\pmb {Y}}\) is appropriate only for linear dependence: it cannot detect nonlinear dependencies. In such situations, we ought to use other measures of dependence.

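One such measure is the distance correlation proposed by Székely et al. (2007). Let us denote by \(\phi _{X,Y}\) and \(\phi _{X}\), \(\phi _{Y}\) the joint and the marginal characteristic functions of random vectors \(\pmb {X}\in R^p\) and \(\pmb {Y}\in R^q\), respectively. The distance correlation of random vectors \(\pmb {X}\in R^p\) and \(\pmb {Y}\in R^q\) is a nonnegative number given by

\[{{\,\mathrm{dCorr}\,}}(\pmb {X},\pmb {Y})=\frac{{{\,\mathrm{dCov}\,}}(\pmb {X},\pmb {Y})}{\sqrt{{{\,\mathrm{dCov}\,}}(\pmb {X},\pmb {X})\,{{\,\mathrm{dCov}\,}}(\pmb {Y},\pmb {Y})}},\]

where

\[{{\,\mathrm{dCov}\,}}^2(\pmb {X},\pmb {Y})=\int _{R^{p+q}}\left| \phi _{X,Y}(\pmb {l},\pmb {m})-\phi _{X}(\pmb {l})\phi _{Y}(\pmb {m})\right| ^2 w(\pmb {l},\pmb {m})\,d\pmb {l}\,d\pmb {m}\]

and

\[w(\pmb {l},\pmb {m})=\left( c_p c_q \Vert \pmb {l}\Vert ^{1+p}\Vert \pmb {m}\Vert ^{1+q}\right) ^{-1},\quad c_d=\frac{\pi ^{(1+d)/2}}{\Gamma ((1+d)/2)}.\]

The weight function w is chosen to produce a scale-free and rotation-invariant measure that does not go to zero for dependent random vectors.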

Defining the joint characteristic function of processes \(\pmb {X}\in L_2^p(I)\) and \(\pmb {Y}\in L_2^q(I)\) as

where

and assuming that processes \(\pmb {X}\) and \(\pmb {Y}\) have the form (2) we have

Górecki et al. (2017).

Thus, we can reduce the problem of testing the independence of random processes \(\pmb {X}\) and \(\pmb {Y}\) to the problem of testing the significance of their distance correlation \({{\,\mathrm{dCorr}\,}}(\pmb {X},\pmb {Y})\).
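In practice, the distance correlation is estimated from pairwise Euclidean distances between observations. The sketch below uses the double-centering estimator of Székely et al. (2007) applied to two samples of coefficient vectors; the function names and the simulated data are illustrative assumptions, not part of the original study.

```python
import numpy as np

def _centered_distances(x):
    """Double-centered matrix of pairwise Euclidean distances."""
    x = np.asarray(x, dtype=float)
    if x.ndim == 1:
        x = x[:, None]
    d = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=2)
    row = d.mean(axis=1, keepdims=True)
    col = d.mean(axis=0, keepdims=True)
    return d - row - col + d.mean()

def dcorr(x, y):
    """Sample distance correlation between x (n x p) and y (n x q)."""
    a = _centered_distances(x)
    b = _centered_distances(y)
    dcov2_xy = (a * b).mean()
    dvar2_x = (a * a).mean()
    dvar2_y = (b * b).mean()
    denom = np.sqrt(dvar2_x * dvar2_y)
    if denom == 0.0:
        return 0.0
    # Guard against tiny negative values caused by floating-point error.
    return float(np.sqrt(max(dcov2_xy, 0.0) / denom))

# Usage: a purely nonlinear relation is picked up, unlike with rhoV.
rng = np.random.default_rng(2)
x = rng.standard_normal(300)
dc_quadratic = dcorr(x, x**2)
dc_independent = dcorr(x, rng.standard_normal(300))
```

The estimator is biased upward in finite samples, so its value under independence is small but not zero; this is one reason a permutation test is used to assess significance.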

4 \(K>2\) Case

Let us now discuss the problem of testing mutual independence for more than two vector processes.

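Let \(\pmb {X}_1\in L_2^{p_1}(I),\pmb {X}_2\in L_2^{p_2}(I), \ldots ,\pmb {X}_K\in L_2^{p_K}(I)\) be random processes with the following representation:

\[\pmb {X}_i(t)=\pmb {\Phi }_i(t)\pmb {\alpha }_i,\quad i=1,\ldots ,K. \tag{3}\]

Additionally, let the covariance matrix for vectors \(\pmb {\alpha }_1,\pmb {\alpha }_2,\ldots ,\pmb {\alpha }_K\) have the form:

\[\pmb {\Sigma }=\begin{bmatrix}\pmb {\Sigma }_{11} & \pmb {\Sigma }_{12} & \cdots & \pmb {\Sigma }_{1K}\\ \pmb {\Sigma }_{21} & \pmb {\Sigma }_{22} & \cdots & \pmb {\Sigma }_{2K}\\ \vdots & \vdots & \ddots & \vdots \\ \pmb {\Sigma }_{K1} & \pmb {\Sigma }_{K2} & \cdots & \pmb {\Sigma }_{KK}\end{bmatrix},\quad \pmb {\Sigma }_{ij}={{\,\mathrm{Cov}\,}}(\pmb {\alpha }_i,\pmb {\alpha }_j).\]

Assuming joint \(p_1+p_2+\cdots + p_K\) dimensional normal distribution of vectors \(\pmb {\alpha }_1,\pmb {\alpha }_2,\ldots ,\pmb {\alpha }_K\), the problem of testing the null hypothesis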

is equivalent to the problem of testing the null hypothesis

Let us define the coefficient of mutual correlation \(\rho MV\) as a nonnegative number given by

Assuming that the processes meet the assumptions of model (3) and that the joint distribution of vectors \(\pmb {\alpha }_1,\pmb {\alpha }_2,\ldots ,\pmb {\alpha }_K\) is normal, the problem of testing the mutual independence is equivalent to the problem of testing the significance of coefficient \(\rho MV\).

Another way to test the mutual independence is to reduce this problem to a problem using two processes.

Let \({{\,\mathrm{Corr}\,}}(\pmb {X}_i,\pmb {X}_j)\) be some measure of dependence for vector processes \(\pmb {X}_i\) and \(\pmb {X}_j\) with the property that \({{\,\mathrm{Corr}\,}}(\pmb {X}_i,\pmb {X}_j)=0\) if and only if vector processes \(\pmb {X}_i\) and \(\pmb {X}_j\) are independent, \(i,j=1,2,\ldots ,K\), \(i\ne j\).

Note that in the place of \({{\,\mathrm{Corr}\,}}\) we may put, e.g., \({{\,\mathrm{dCorr}\,}}\).

Let

Following the idea from Jin and Matteson (2018), we may define the coefficients of multiple independence as

and

Thus, the following theorem is true:

Theorem 1

\(\pmb {X}_1, \pmb {X}_2,\ldots ,\pmb {X}_K\) are independent if and only if \(\mathscr {R}(\pmb {X})=0\) or \(\mathscr {S}(\pmb {X})=0\).

Hence, the problem of testing the null hypothesis

is equivalent to the problem of testing the null hypothesis

To verify these hypotheses, we propose to use a permutation test.
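A permutation test of this kind can be sketched as follows. The code is an illustration under explicit assumptions rather than the authors' implementation: the pairwise measure is the sample distance correlation, the asymmetric statistic `r_stat` sums the dependence between each process and all processes that follow it, and the symmetric statistic `s_stat` sums the dependence between each process and all the others, in the spirit of Jin and Matteson (2018). The permutation scheme (independently reshuffling the observations of every process but the first) and all function names are assumptions as well.

```python
import numpy as np

def dcorr(x, y):
    """Sample distance correlation between two (n x d) arrays."""
    def centered(z):
        d = np.linalg.norm(z[:, None, :] - z[None, :, :], axis=2)
        return d - d.mean(axis=0, keepdims=True) - d.mean(axis=1, keepdims=True) + d.mean()
    a, b = centered(x), centered(y)
    denom = np.sqrt((a * a).mean() * (b * b).mean())
    return 0.0 if denom == 0.0 else float(np.sqrt(max((a * b).mean(), 0.0) / denom))

def r_stat(blocks):
    """Asymmetric statistic: each block against all later blocks (assumed form)."""
    return sum(dcorr(blocks[i], np.hstack(blocks[i + 1:]))
               for i in range(len(blocks) - 1))

def s_stat(blocks):
    """Symmetric statistic: each block against all other blocks (assumed form)."""
    return sum(dcorr(blocks[i], np.hstack(blocks[:i] + blocks[i + 1:]))
               for i in range(len(blocks)))

def permutation_test(blocks, stat=r_stat, n_perm=199, seed=0):
    """Return the observed statistic and its permutation p-value."""
    blocks = [np.asarray(b, dtype=float).reshape(len(b), -1) for b in blocks]
    rng = np.random.default_rng(seed)
    n = blocks[0].shape[0]
    observed = stat(blocks)
    exceed = 0
    for _ in range(n_perm):
        # Break any dependence by shuffling rows of all blocks but the first.
        permuted = [blocks[0]] + [b[rng.permutation(n)] for b in blocks[1:]]
        if stat(permuted) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)

# Usage: the third block depends strongly on the first one.
rng = np.random.default_rng(3)
x1 = rng.standard_normal((100, 1))
x2 = rng.standard_normal((100, 1))
x3 = x1 + 0.1 * rng.standard_normal((100, 1))
obs_r, p_r = permutation_test([x1, x2, x3], stat=r_stat, n_perm=99)
obs_s, p_s = permutation_test([x1, x2, x3], stat=s_stat, n_perm=99)
```

Here each block would hold the estimated basis coefficients of one vector process, one row per realization.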

5 Example

5.1 Univariate Case

Let

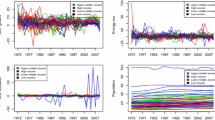

where \(\varepsilon _{1t},\) \(\varepsilon _{2t}\) and \(\varepsilon _{3t}\) are independent random variables with N(0, 0.25) distribution. We generated 1000 random realizations of length 100 for each process (Fig. 1). To smooth the data we used a Fourier basis with 15 elements. Clearly, processes \(X_t\) and \(Y_t\) are linearly dependent, whereas the pairs \(X_t\), \(Z_t\) and \(Y_t\), \(Z_t\) are nonlinearly dependent.

From Table 1 (third column), we see that all measures of correlation for functional data detect dependence (at significance level \(5\%\)) when at least one pair of linearly dependent processes exists. However, when the dependence is nonlinear, only the measures based on \({{\,\mathrm{dCorr}\,}}\) detect it.

5.2 Multivariate Case

Following Krzyśko and Smaga (2019), we consider the functional sample \(\pmb x_1(t),\ldots , \pmb x_n(t)\) of size \(n = 1000\) containing realizations of the random process \(\pmb X(t) = (X(t), Y(t), Z(t))\), \(t\in [0, 1]\), of dimension \(p = 3\). These observations are generated in the following discretized way:

\[\pmb x_i(t_j)=\pmb \Phi (t_j)\pmb \alpha _i+\pmb \varepsilon _{ij},\]

where \(i = 1,\ldots , n\), \(t_j\), \(j = 1,\ldots , 100\), are equally spaced design time points in [0, 1], the matrix \(\pmb \Phi (t)\) is as in (1) and contains the Fourier basis functions only, \(B_k = 5\), \(k=1,\ldots ,p\), \(\pmb \alpha _i\) are 5p-dimensional random vectors, and \(\pmb \varepsilon _{ij} = (\varepsilon _{ij1},\ldots ,\varepsilon _{ijp})^{\top }\) are measurement errors such that \(\varepsilon _{ijk}\sim N(0, 0.025r_{ik})\), where \(r_{ik}\) is the range of the kth row of the matrix

\[(\pmb \Phi (t_1)\pmb \alpha _i,\ldots ,\pmb \Phi (t_{100})\pmb \alpha _i),\]

\(k = 1,\ldots ,p\). The random vectors \(\pmb \alpha _{i}\) are generated similarly to Todorov and Pires (2007) and Jin and Matteson (2018) in the following two setups:

- S1: Normal distribution and equal covariance matrices: \(\pmb \alpha _i\sim N(\pmb 0_{5p}, \pmb I_{5p}).\)

- S2: Part of \(\pmb \alpha _i\) for (X(t), Y(t)) is from \(N(\pmb 0_{5(p-1)}, \pmb I_{5(p-1)})\) and the first element of \(\pmb \alpha _i\) for Z(t) is \({{\,\mathrm{sgn}\,}}(\alpha _1\alpha _{5 + 1})W,\) where \(W\sim {{\,\mathrm{Exp}\,}}(1/\sqrt{2})\) and the remaining \({p-1}\) elements are \(N(\pmb 0_{5(p-1) - 1}, \pmb I_{5(p-1) - 1})\). Clearly, (X(t), Y(t), Z(t)) is a pairwise independent but mutually dependent triplet.
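The construction behind setup S2 can be illustrated with a short sketch of the coefficient generation. It rests on assumptions that the text does not settle: five coefficients per component, the value \(1/\sqrt{2}\) read as the scale (mean) of the exponential distribution, which gives the modified coefficient unit variance, and standard normal draws for the remaining coefficients of Z(t). The function name `generate_s2` is hypothetical.

```python
import numpy as np

def generate_s2(n, n_coef=5, seed=0):
    """Generate coefficient blocks for X, Y, Z under an S2-like setup.

    Assumption: Exp(1/sqrt(2)) is parameterized by its scale (mean).
    """
    rng = np.random.default_rng(seed)
    alpha_x = rng.standard_normal((n, n_coef))
    alpha_y = rng.standard_normal((n, n_coef))
    alpha_z = rng.standard_normal((n, n_coef))
    w = rng.exponential(scale=1.0 / np.sqrt(2.0), size=n)
    # The sign of Z's first coefficient is tied to the signs of the
    # first coefficients of X and Y; its magnitude is independent of them.
    alpha_z[:, 0] = np.sign(alpha_x[:, 0] * alpha_y[:, 0]) * w
    return alpha_x, alpha_y, alpha_z

# Usage: pairwise correlations are near zero, yet the three first
# coefficients always have a positive product.
ax, ay, az = generate_s2(5000)
corr_xz = np.corrcoef(ax[:, 0], az[:, 0])[0, 1]
corr_yz = np.corrcoef(ay[:, 0], az[:, 0])[0, 1]
sign_product = np.sign(ax[:, 0] * ay[:, 0] * az[:, 0])
```

Knowing any two of the three first coefficients fixes the sign of the third, which is what makes the triplet mutually dependent while each pair remains independent.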

Setup S1 is a simple example with no dependence. All tests deal with this problem correctly (Table 1, fourth column). Setup S2 is much harder. For the whole triplet of processes, all methods indicate dependence (Table 1, fifth column). For each pair of processes, all methods correctly detect independence.

6 Conclusions

We have considered measuring and testing mutual dependence for multivariate functional data based on the basis functions representation of the data. We propose several measures of mutual dependence for multivariate functional data based on the equivalence to mutual independence through characteristic functions (Székely et al. 2007) and on the \(\rho V\) coefficient (Escoufier 1973). The performance of the proposed methods was studied in simulations. In these simulations, all proposed methods detected linear dependence, while only the methods based on \({{\,\mathrm{dCorr}\,}}\) detected nonlinear dependence. We therefore recommend measures and tests based on the \({{\,\mathrm{dCorr}\,}}\) coefficient. Such methods correctly detect linear and nonlinear dependence structure for both univariate and multivariate processes.

References

Escoufier, Y.: Le traitement des variables vectorielles. Biometrics 29(4), 751–760 (1973)

Górecki, T., Krzyśko, M., Waszak, Ł., Wołyński, W.: Methods of reducing dimension for functional data. Stat. Transit. New Ser. 15(2), 231–242 (2014)

Górecki, T., Krzyśko, M., Wołyński, W.: Correlation analysis for multivariate functional data. In: Palumbo, F., Montanari, A., Vichi, M. (eds.) Data Science, Studies in Classification, Data Analysis, and Knowledge Organization, pp. 243–258. Springer International Publishing (2017)

Górecki, T., Krzyśko, M., Wołyński, W.: Independence test and canonical correlation analysis based on the alignment between kernel matrices for multivariate functional data. Artif. Intell. Rev. 53, 475–499 (2020)

Gretton, A., Bousquet, O., Smola, A., Schölkopf, B.: Measuring statistical dependence with Hilbert-Schmidt norms. In: Jain, S., Simon, H.U., Tomita, E. (eds.) Algorithmic Learning Theory. ALT 2005. Lecture Notes in Computer Science, vol. 3734, pp. 63–77. Springer, Berlin, Heidelberg (2005)

Horváth, L., Kokoszka, P.: Inference for Functional Data with Applications. Springer (2012)

Jin, Z., Matteson, D.S.: Generalizing distance covariance to measure and test multivariate mutual dependence via complete and incomplete V-statistics. J. Multivariate Anal. 168, 304–322 (2018)

Krzyśko, M., Smaga, Ł.: A multivariate coefficient of variation for functional data. Stat. Interface 12, 647–658 (2019)

Ramsay, J.O., Silverman, B.W.: Functional Data Analysis, 2nd edn. Springer (2005)

Székely, G.J., Rizzo, M.L., Bakirov, N.K.: Measuring and testing dependence by correlation of distances. Ann. Stat. 35, 2769–2794 (2007)

Todorov, V., Pires, A.M.: Comparative performance of several robust linear discriminant analysis methods. Revstat. Stat. J. 5, 63–83 (2007)

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Górecki, T., Krzyśko, M., Wołyński, W. (2021). Measuring and Testing Mutual Dependence for Functional Data. In: Chadjipadelis, T., Lausen, B., Markos, A., Lee, T.R., Montanari, A., Nugent, R. (eds) Data Analysis and Rationality in a Complex World. IFCS 2019. Studies in Classification, Data Analysis, and Knowledge Organization. Springer, Cham. https://doi.org/10.1007/978-3-030-60104-1_8

DOI: https://doi.org/10.1007/978-3-030-60104-1_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-60103-4

Online ISBN: 978-3-030-60104-1

eBook Packages: Mathematics and Statistics (R0)