Abstract

Deep learning based Multiple Object Tracking (MOT) currently relies on off-the-shelf detectors for tracking-by-detection. This results in deep models that are detector biased and evaluations that are detector influenced. To resolve this issue, we introduce Deep Motion Modeling Network (DMM-Net) that can estimate multiple objects’ motion parameters to perform joint detection and association in an end-to-end manner. DMM-Net models object features over multiple frames and simultaneously infers object classes, visibility and their motion parameters. These outputs are readily used to update the tracklets for efficient MOT. DMM-Net achieves PR-MOTA score of 12.80 @ 120+ fps for the popular UA-DETRAC challenge - which is better performance and orders of magnitude faster. We also contribute a synthetic large-scale public dataset Omni-MOT for vehicle tracking that provides precise ground-truth annotations to eliminate the detector influence in MOT evaluation. This 14M+ frames dataset is extendable with our public script (Code at Dataset, Dataset Recorder, Omni-MOT Source). We demonstrate the suitability of Omni-MOT for deep learning with DMM-Net, and also make the source code of our network public.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Multiple Object Tracking (MOT) [10, 25, 42, 44] is a longstanding problem in Computer Vision [27]. Contemporary deep learning based MOT has widely adopted the tracking-by-detection paradigm [43], that capitalizes on the natural division of detection and data association tasks for the problem. In standard MOT evaluation protocol, the object detections are assumed to be known and public detections are provided on evaluation sequences, and MOT algorithms are expected to output object tracks by solving the data association problem.

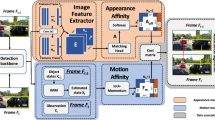

Schematics of the proposed end-to-end trainable DMM-Net: \(N_F\) frames from time stamp \({t_1:t_2}\) are input to the network. The frame sequence is first processed with a Feature Extractor comprising 3D ResNet-like [20] convolutional groups. Outputs of the last groups 2 to 7 are processed by Motion Subnet, Classifier Subnet, and Visibility Subnet. Each sub-network uses 3D convolutions to learn features that are concatenated to predict anchor tubes’ motion parameters (\(\varvec{\MakeUppercase {O}}_M \in \mathbb {R}^{N_T\times N_P \times 4}\)), object classes (\(\varvec{\MakeUppercase {O}}_C \in \mathbb {R}^{N_T\times N_C}\)), and visibility (\(\varvec{\MakeUppercase {O}}_V \in \mathbb {R}^{N_F \times N_T \times 2}\)), where \(N_T\), \(N_P\) and \(N_C\) denote the number of anchor tubes, motion parameters and object classes (including background). We explain anchor tubes and motion parameters in Sect. 4. DMM-Net is trained with its specialized loss. For deployment, the anchor tubes predicted by the DMM-Net are filtered to compute tracklets defined over multiple frames. These tracklets are later combined to form a complete track.

Although attractive, using off-the-shelf detectors for MOT also has undesired ramifications. For instance, a deep model employed for the subsequent data association task (or a constituent sub-task) gets biased to the detector. The detector performance can also become a bottle-neck for the overall tracker. Additionally, composite techniques resulting from independent detectors fail to harness the true representation power of deep learning by sacrificing end-to-end training etc. It also seems unnatural that a MOT tracker must be evaluated on different detectors to interpret its tracking performance. All these problems are potentially solvable if trackers can implicitly detect the target objects, and detector bias can be removed from the ground-truth labeling of the tracks. This work makes strides towards these solutions.

We make two major contributions towards setting the tracking-by-detection paradigm free from off-the-shelf detectors in deep learning settings. Our first contribution comes as the first-of-its-kind deep network that performs object detections and data association by estimating multiple object motion parameters in an end-to-end manner. Our network, called Deep Motion Modeling Network (DMM-Net), predicts object motion parameters, their classes and their visibility with the help of three sub-networks, see Fig. 1. These sub-networks exploit feature maps of frames in a video sequence that are learned with a Feature Extractor comprising seven 3D ResNet-like [20] convolutional groups. Instead of individual frames, DMM-Net simultaneously processes multiple (i.e. 16) frames. To handle multiple tracks in those frames, we introduce the notion of anchor tubes that extends the concept of anchor boxes in object detection [26] along the temporal dimension for MOT. Similar to [26], these anchor tubes are pre-defined to reduce the computation and improve the network speed. The predicted motion parameters can describe the shape offset and scale of each pre-defined anchor tube in the spatio-temporal space. We propose individual losses over the comprehensive representations of the sub-networks to predict object motion parameters, object classes and visibility. The DMM-Net output is readily usable to update the tracks. As our second major contribution, we propose a realistic large-scale dataset with accurate and extensive ground-truth annotations. The proposed dataset, termed Omni-MOT for its comprehensive coverage of the MOT scenarios, is generated with CARLA simulator [13] for vehicle tracking. The dataset comprises 14M+ frames, 250K tracks, 110 million bounding boxes, three weather conditions, three crowd levels and three camera views in five simulated towns. By eliminating the use of off-the-shelf detectors in ground-truth labeling, it removes any detector bias in evaluating the techniques.

The Omni-MOT dataset and DMM-Net source code are both publicly available for the broader research community. For the former, we also provide software tools to freely extend the dataset. We demonstrate the suitability of the Omni-MOT for deep models by training and evaluating DMM-Net on its videos. We also augment DMM-Net with Omni-MOT and evaluate our technique on the popular UA-DETRAC challenge [45]. The remote server computed results show that DMM-Net is able to achieve a very competitive 12.80 score for the comprehensive PR-MOTA metric with the overall speed of 123 fps. The orders of magnitude increase in the speed is directly attributed to the intrinsic detections in our tracking-by-detection technique.

2 Related Work

Multiple Object Tracking (MOT) is a fundamental problem in Computer Vision that has attracted significant interest of researchers in recent years. For a general review of the related literature, we refer to Luo et al. [27] and Emami et al. [14]. Here, we focus on the key contributions that relate to this work more closely. With the recent advances in object detectors, tracking-by-detection is fast becoming the common contemporary paradigm for MOT [38, 39, 43, 46]. In this scheme, objects are first detected frame-wise and later associated with each other across multiple frames. Relying on off-the-shelf detectors, techniques following this paradigm mainly focus on object association, which can make them inherently detector biased. These methods can be broadly categorized as local [34, 41] and global [10, 37, 48] approaches. The former use two frames for data association, while the latter associate objects over a larger number of frames.

More recent global techniques cast data association into a network flow problem [4, 7, 33, 40]. For instance, Berclaz et al. [4] solved a constrained flow optimization problem for MOT and used the k-shortest paths algorithm for associating the tracks. Chari et al. [8] added a pairwise cost to the min-cost network flow framework and proposed a convex relaxation of the problem. However, such methods rely on object detectors rather strongly, which makes them less attractive in the presence of occlusions and misdetections. To mitigate the problems resulting from occlusions, Milan et al. [29] employed a continuous energy minimization framework for MOT that incorporates explicit occlusion reasoning and appearance modeling. Wen et al. [47] also proposed a data association technique based on undirected hierarchical relation hyper-graph.

Sun et al. [42] proposed a deep affinity network to model features of pre-detected objects and compute object affinities by the same network. Bea et al. [3] modified the Siameses Network to learn deep representations for MOT with object association. There are few instances of deep learning techniques that aim at removing the reliance of tracking on independent detectors. For instance, Feichtenhofer et al. [15] proposed an R-FCN based network [9] that performs object detection and jointly builds an object model to be used for data association. However, their method is limited to frame-wise detections. Consequently, it only allows frame-by-frame association, requiring manual adjustment of temporal stride. Our technique is inherently different, as it directly computes tracklets over multiple frames by motion modeling, enabling realtime solutions while considering all the frames.

Besides the development of novel techniques, the role of datasets is central to the advancement of deep learning based MOT. Currently, a few large datasets for this task are available, e.g. PETS2009 [17], KITTI [18], DukeMTMC [36], PoseTrack [23], and MOT Challenge datasets [25, 28]. These datasets are recorded in the real world with pedestrians and vehicles as the objects of interest. We refer to the original works for more details on these datasets. Below, we briefly discuss UA-DETRAC [45] for its high relevance to our contribution.

UA-DETRAC [45] is a large dataset for traffic scenes MOT. It provides object bounding boxes, their IDs and information on the overlapped ratio of the objects. However, the provided detections are individually generated by detectors and hence are prone to errors. This results in an undesired detector-bias in tracker evaluation. Besides, different pre-processing procedures of the detectors employed by the dataset also cause problems in fair evaluation. Although UA-DETRAC has served a great purpose in advancing the state-of-the-art in MOT for vehicles, the aforementioned issues call for a more transparent dataset that does not rely on off-the-shelf detectors for evaluating trackers. This work provides such a dataset with realistic settings and complete control over the environment conditions and camera viewpoints.

3 Omni-MOT Dataset

We term the proposed dataset as Omni-MOT (OMOT) dataset for its comprehensive coverage of the conditions and scenarios possible in MOT. The dataset is publicly available for download. Moreover, we also make the recording script for the dataset public that will enable the community to further extend the data The provided script has the ability to generate multi-camera videos. The dataset is recorded using virtual cameras in the CARLA simulator [13].

To the best of our knowledge, Omni-MOT is the first realistic large dataset for tracking vehicles that completely relinquishes off-the-shelf detectors in ground truth generation, and provides comprehensive annotations. Moreover, with the provided scripts, the dataset can easily be extended for future research. To put the scale of Omni-MOT into perspective, the provided number of frames is almost 1, 200 times larger than MOT17. The number of provided tracks and boxes respectively are 210 and 30 times larger than UA-DETRAC. Not to mention, all the boxes and tracks are simulator generated that avoids any labeling error. Considering that OMOT can also be used for data augmentation, we make the ground truth for the test videos public as well. Please see the supplementary material of the paper for complete details of the dataset.

4 Deep Motion Modeling Network

To absolve deep learning based tracking-by-detection from independently pre-trained off-the-shelf detectors, we propose Deep Motion Modeling Network (DMM-Net) for online MOT (see Fig. 1). Our network enables MOT by jointly performing object detection, tracking, and classification across multiple video frames without requiring pre-detections and subsequent data association. For a given input video, it outputs objects’ motion parameters, classes, and their visibility across the input frames. We provide a detailed discussion of our network below. However, we first introduce the used notations and conventions.

-

\(N_F, N_C, N_P, N_T\) denote the number of input frames, object classes (0 for ‘background’), time-related motion parameters, and anchor tubes.

-

W, H are the frame width, and frame height.

-

\(\varvec{\MakeUppercase {I}}_t\) denotes the video frame at time t. Subsequently, a 4-D tensor \(\varvec{\MakeUppercase {I}}_{t_1:t_2:N_F}\in \mathbb {R}^{3\times N_F \times W \times H}\) denotes \(N_F\) video frames from time \(t_1\) to \(t_2-1\). For simplicity, we often ignore the subscript “\(:N_F\)”.

-

\(\varvec{\MakeUppercase {B}}_{t_1:t_2:N_F}, \varvec{\MakeUppercase {C}}_{t_1:t_2:N_F}, \varvec{\MakeUppercase {V}}_{t_1:t_2:N_F}\) respectively denote the ground truth boxes, classes and visibilities in the selected \(N_F\) video frames from time \(t_1\) to \(t_2-1\). The text also ignores “\(:N_F\)” for these notations.

-

\(\varvec{\MakeUppercase {O}}_{M, t_1:t_2:N_F}, \varvec{\MakeUppercase {O}}_{C, t_1:t_2:N_F}, \varvec{\MakeUppercase {O}}_{V, t_1:t_2:N_F}\) denote the estimated motion parameters, classes, and visiblities. With time stamps and frames clear from the context, we simplify these notations as \(O_M, O_C, O_V\). In Fig. 2, we illustrate object visibility, classes and motion parameters.

Conventions: The shape of a network output tensor is considered to be Batch \(\times \) Channels \(\times \) Duration \(\times \) Width \(\times \) Height, where Duration accounts for the number of frames. For brevity, we often ignore the Batch dimension.

4.1 Data Preparation

Appropriate data preparation is important for training (and later testing) our network. In this process, we also avail the opportunity of augmenting the data to induce robustness in our model against camera photometric distortions, background scene variations, and other practical factors. The inputs expected by our network are summarized in Table 1. For training a robust network, we perform the following data pre-processing:

(Best viewed in color) Illustration of motion visibility, class, and motion parameters: The visibility of the vehicle goes from 1 (fully visible) to 0 (invisible) and back to 0.5 (partially visible). Classes are predefined as 1 for vehicle and 0 for everything else. Motion parameters {\(p_{11}, \cdots , p_{43}\)} are used to locate the center x (cx), center y (cy), width (w) and height (h) of the tube/tracklet at anytime. We employ a quadratic motion model leveraging \(4\times 3\) matrices. (Color figure online)

-

1.

Stochastic frame sequence is introduced by randomly choosing \(N_F\) frames from \(2N_F\) frames.

-

2.

Static scene emulation is done by duplicating selected frames \(N_F\) times.

-

3.

Photometric camera distortions are introduced in the frames by scaling each pixel by a random value in the range [0.7, 1.5]. The resulting frames are converted to HSV format, and their saturation channel is again scaled by a random value in [0.7, 1.5]. A frame is then converted back to RGB format and re-scaled by a random value in the same range. This process of photometric distortion is inspired by [42].

-

4.

Frame expansion is performed by enlarging video frames by a random factor in the range [1, 1.2]. To that end, we pad the original frames with extra pixels whose value is set to the mean pixel value of the training data.

In our pre-processing, the above-mentioned steps 2–4 are applied to the frames with probability 0.01, 0.5, 0.5, respectively. The resulting frame are then resized to the fixed dimension \(H\times W \times 3\), and horizontally flipped with a probability of 0.5. We simultaneously process the selected \(N_F\) frames by applying the above mentioned transformations. These RGB video frames are then arranged as 4D-tensors \(\varvec{\MakeUppercase {I}}_{t_1:t_2} \in \mathbb {R}^{3 \times N_F \times W \times H}\). We fill the ground truth data matrices \(\varvec{\MakeUppercase {B}}_{t_1:t_2}\), \(\varvec{\MakeUppercase {C}}_{t_1:t_2}\), \(\varvec{\MakeUppercase {V}}_{t_1:t_2}\) with the detected boxes, and visibilities in the video frames from \(t_1\) to \(t_2-1\). We set the labeled boxes, classes, and visibilities of fully occluded boxes to 0. We also remove the tracks whose ratio of fully occluded boxes are greater than \(\delta _v\). We let \(N_F=16\) in our experiments.

(a) One anchor tube and three tracks are shown to illustrate the search for the best track of the anchor tube. (b) is the simplified demonstration of (a). Based on the largest overlap between the first tube box and the first visible track box, Track 2 is selected as the best match. (Color figure online)

4.2 Anchor Tubes

Inspired by the effective Single Shot Detector (SSD) [26] for object detection, we extend the core concept of this technique for MOT. Analogous to the anchor boxes of SSD, we introduce anchor tubes for DMM-Net. Here, an anchor tube is a set of anchor boxes (and associated object class and visibility), that share the same location in multiple frames along the temporal dimension (see supplementary material for further visualization). The information of anchor tubes is encoded with the tensors \(\varvec{\MakeUppercase {B}}_{t_1:t_2}\), \(\varvec{\MakeUppercase {C}}_{t_1:t_2}\), and \(\varvec{\MakeUppercase {V}}_{t_1:t_2}\). The DMM-Net is designed to predict the tube shape offset parameters along the temporal dimension, the confidence for each object class, and the visibility of each box in the tube.

Computing anchor tubes for network training can also be interpreted as further data preparation for DMM-Net. We first employ a search strategy to find the best-matched track for each anchor tube, and subsequently encode the tubes by their best-matched tracks. For the former, we specify the overlap between an anchor tube and a track as the overlap ratio between the first visible box of the track and the corresponding box in the anchor tube. A simplified illustration of this concept is presented in Fig. 3 with one anchor tube and three tracks. The anchor tube 0 iterates over all the tracks to find the largest overlap ratio. The first box of Track 1 and the first two boxes of Track 2 are occluded. Therefore, the overlap ratio of the anchor box filled red is used for selecting the best-matched track, i.e. Track 2 for the largest overlap.

Used ResNeXt block: (conv, x\(^3\), F) denote ‘F’ convolutional kernels of size \(x \times x \times x\). BN is Batch Normalization [22] and shortcut connection is summation.

To encode the anchor tubes, we employ their best-matched tracks (\(\varvec{\MakeUppercase {B}}_{t_1:t_2}\)) along with the classes (\(\varvec{\MakeUppercase {C}}_{t_1:t_2}\)) and visibility (\(\varvec{\MakeUppercase {V}}_{t_1:t_2}\)). We denote a box of the \(i^{\text {th}}\) anchor tube at the \(t^{\text {th}}\) frame as \(a_{i, t} = (a_{i, t}^{cx}, a_{i, t}^{cy}, a_{i, t}^{w}, a_{i, t}^{h})\), where the superscripts cx and cy indicate the (x, y) location of the center, and w, h denote the width and height of the box. We use the same notation in the following text as well. Each anchor box is encoded by the box of its best-matched track as follows:

where \((b_{i,t}^{cx}, b_{i,t}^{cy}, b_{i,t}^{w}, b_{i,t}^{h})\) describe the box of the best-matched track at the \(t^{\text {th}}\) frame, and \((g_{i,t}^{cx}, g_{i,t}^{cy}, g_{i,t}^{w}, g_{i,t}^{h})\) represent the resulting encoded box for the best-matched track. Following this encoding, we replace each original box, class, and visibility by its newly encoded counterpart. In the above-mentioned encoding, an anchor tube that has its best-matched track overlap ratio less than \(\delta _o\), is identified as the ‘background’ class.

4.3 Motion Model

For motion modeling, we leverage a quadratic model in time. One of the outputs of DMM-Net is a tensor of motion parameters \(\varvec{\MakeUppercase {O}}_M \in \mathbb {R}^{N_T \times 4 \times N_P}\), where \(N_P=3\) in our experiments and \(N_T\) indicates the number of anchor tubes. We estimate an encoded anchor tube for a track as:

where \(\hat{g}_{i, t}\) indicates an estimated box descriptor of the \(i^{\text {th}}\) encoded anchor tube at the \(t^{\text {th}}\) frame, the superscripts cx, cy, w and h indicate the center x, center y, height, width of the box respectively, and \(\{p_{11}, \cdots , p_{43}\}\) are the motion parameters . Each encoded ground-truth anchor tube can be decoded into a ground truth track by Eq. (1). We further elaborate on the relationship between the motion parameters and the ground truth tracks in the supplementary material. Note that the used motion function is replaceable by any differential function in our technique. We choose quadratic motion modeling considering vehicle tracking as our primary objective.

4.4 Architecture

As shown in Fig. 1, our network comprises a Feature Extractor and three sub-network. The network simultaneously processes \(N_F\) video frames for which features are first extracted. The feature maps of six intermediate layers of the Feature Extractor are fed to the sub-networks. These networks predict tensors containing motion parameters (\(\varvec{\MakeUppercase {O}}_M \in \mathbb {R}^{N_T\times N_P \times 4}\)), object classes (\(\varvec{\MakeUppercase {O}}_C \in \mathbb {R}^{N_T \times N_C}\)), and visibility information (\(\varvec{\MakeUppercase {O}}_V \in \mathbb {R}^{N_F \times N_T \times 2}\)).

Feature Extractor: We construct our Feature Extractor based on the ResNeXt blocks of the 3D ResNet [20]Footnote 1. The architectural details of the blocks are provided in Fig. 4. The blocks accept ‘F’ channel input that allows us to simultaneously model spatio-temporal features in \(N_F\) frames. We enhance the 3D ResNet architecture by removing the fully-connected and softmax layers of the network and appending two extra convolutional groups denoted as Conv6_x and Conv7_x. We perform this enhancement because the additional convolutional layers are still able to encode further higher level spatio-temporal features of the frames. Details on the convolutional groups used by the Feature Extractor are provided in Table 2. Here, Conv1_x (abbreviated as C1_x) contains 64 convolutional kernels of size \(7 \times 7 \times 7\), stride \( 1 \times 2 \times 2\). A \(3\times 3 \times 3\) max-pooling layer with stride 2 is inserted before Conv2_x for down sampling. Each convolutional layer is followed by batch normalization [21] and ReLU [31]. Spatio-temporal down-sampling is performed by Conv3_1, Conv4_1 and Conv5_1 with a stride of 2.

Sub-networks: The DMM-Net has three sub-networks to compute motion parameters, object classes, and their visibility. These networks use the outputs of Conv2_x to Conv7_x groups of the Feature Extractor. Each sub-network processes the input with six convolutional layers. The architectural details of all three sub-networks are summarized in Table 3. The kernel shape, padding size and stride are the same for each network, whereas the employed numbers of kernels are different. We fix the temporal kernel size for each network to \(\{8, 4, 2, 1, 1, 1\}\). Denoting the numbers of anchor tubes defined for the six input feature maps of a sub-network by \(\varvec{\mathcal {\MakeUppercase {K}}}\), we let \(\varvec{\mathcal {\MakeUppercase {K}}} = \{10, 8, 8, 5, 4, 4\}\) in our experiments. For each sub-network, we concatenate the output feature maps of each convolutional layer. We reshape the concatenated feature according to the dimensions mentioned in the last row of the table. These outputs are subsequently used to compute the network loss.

Network Loss: Based on the architecture, the overall loss of DMM-Net is defined as a weighted sum of three sub-losses, including the motion loss (\(\mathcal {\MakeUppercase {L}}_M\)), the classification loss (\(\mathcal {\MakeUppercase {L}}_C\)) and the visibility loss (\(\mathcal {\MakeUppercase {L}}_V\)), given as:

where N is the number of positive anchor tubes, and \(\alpha \), \(\beta \) are both hyper-parameters. The positive anchor tubes exclude the background tubes.

The motion loss \(\mathcal {\MakeUppercase {L}}_{M}\) is defined as the sum of Smooth-L1 losses between the ground truth encoded tracks \(g_{i,t}\) and their predicted encoded tracks \(\hat{g}_{i,t}\), formally:

where Pos is the set of positive encoded anchor tube indices. We compute \(\hat{g}_{i,t}^m\) using Eq. (2) discussed in Sect. 4.3.

For the classification loss \(\mathcal {\MakeUppercase {L}}_{C}\), we employ the hard negative mining strategy [26] to balance the positive and negative anchor tubes, where the negative tubes correspond to the background. We denote \(x_{i,j,t}^p \in \{1, 0\}\) to be an indicator for matching the \(i^{\text {th}}\) anchor tube and the \(j^{\text {th}}\) ground-truth track of class ‘p’ at the \(t^{\text {th}}\) frame. Each anchor tube at least has one best-matched ground-truth track, implying \(\sum _i x_{i,j,t}^p \ge 1\) for any t. The classification loss of the DMM-Net is defined as:

where \(\hat{c}_{i,t}^p = \frac{\mathop {exp}c_{i,t}^p}{\sum _p \mathop {exp} c_{i,t}^p}\) such that \(c_{i, t}^p\) is the predicted confidence of the object being for class \(p: p \in \{1, \cdots , N_C\}\), Neg is the set of negative encoded anchor tube indices. We also consider the visibility estimation task fro classification viewpoint and classify each positive box into invisible ‘0’ or visible ‘1’ box. Based on that, the loss is computed as:

where \(\hat{v}_{i,t}^q = \frac{\mathop {exp}v_{i,t}^q}{\sum _q\mathop {exp}v_{i,t}^q}\), and \(y_{i,j,t}^q\) is an indicator for matching the \(i^{\text {th}}\) anchor tube and the \(j^{\text {th}}\) ground-truth track of visibility ‘q’ at the \(t^{\text {th}}\) frame, such that \(q \in \{0, 1\}\).

Deployment of DMM-Net: For the \(2N_F\) frames \(\varvec{\MakeUppercase {I}}_{t_i:t_{i+1}:2N_F}\) from \(t_i\) to \(t_{i+1}\), the trained DMM-Net selects \(N_F\) frames as its input and outputs predicted tubes encoded by object motion parameter matrix \((\varvec{\MakeUppercase {O}}_M)\), classification matrix \((\varvec{\MakeUppercase {O}}_C)\) and visibility matrix \((\varvec{\MakeUppercase {O}}_V)\). These matrices are filtered and the track set \(\varvec{\mathcal {\MakeUppercase {T}}}_{t_i}\) is updated by associating the filtered tracklets by their IOU with the previous track set \(\varvec{\mathcal {\MakeUppercase {T}}}_{t_{i-1}}\).

4.5 Deployment

The trained DMM-Net is readily deployable for tracking (Fig. 5). The overall tracker processes \(2N_F\) frames \(\varvec{\MakeUppercase {I}}_{t_i:t_{i+1}:2N_F}\), where the DMM-Net first selects \(N_F\) consecutive frames and outputs the predicted tubes encoded with object motion parameters, classes and visibility using Eq. (2). The tubes are decoded with Eq. (1). A filtration is then done to remove the undesired tubes and we get tracklets (details to follow). We compute updated trajectory set \(\varvec{\mathcal {\MakeUppercase {T}}}_{t_i}\) by associating the tracklets with the previous trajectory set \(\varvec{\mathcal {\MakeUppercase {T}}}_{t_{i-1}}\) using their IOUs.

To filter, we first remove tubes with confidence lower than a threshold \(\delta _c\). We subsequently perform a Tube None Maximum Suppression (TNMS). To that end, we cluster the detected tubes of the same category into multiple groups by their IOUs with a threshold \(\delta _{nms}\). Then, we only keep one tube for each group that has the maximum confidence of being positive. The kept tubes, namely “tracklets”, are employed to update trajectory set.

We initialize our track set \(\varvec{\mathcal {\MakeUppercase {T}}}_{t_i}\) with as many trajectories as the number of tracklets. The track set is updated from \(t_i^{\text {th}}\) to \(t_{i+1}^{\text {th}}\) stamp using the Hungarian algorithm [30] applied to an IOU matrix \(\varvec{\varPsi } \in \mathbb {R}^{n'_{t_{i-1}}\times n_{t_i}}\), where \(n'_{t_{i-1}}\) is the number of element in the previous track set, and \(n_{t_i}\) is the number of tracklets. Notice that we perform association of tracklets and not the individual objects. The object association remains implicit and is done inside the DMM-Net which lets us define the tubes. The association of tracklets, defined across multiple frames, leads to significant computational gain. This is one of the core reasons of the orders of magnitude gain in the tracking speed of our technique over existing methods, as will be clear in Sect. 5.

Example of calculating Tube IOU. There are two tracklets (yellow, cyan). Their intersection is green. The tracklet IOU is the maximum IOU of the visible box pair. Although IOU at \(t=2\) is the largest, the lined yellow object is invisible, hence, we select the second largest IOU at \(t=1\) as the tracklet IOU.

To form the matrix \(\varvec{\varPsi }\), we must compute IOU between the existing tracklets and the new tracklets. Figure 6 shows the procedure adopted for calculating the IOU between two tracklets with a simplified example. Overall, our tracker is an on-line technique in the sense that it does not use future frames to predict object trajectories. Concurrently, DMM-Net can perform tracking in real-time that makes it a highly desirable technique for practical applications.

5 Experiments

We evaluate our technique using the proposed Omni-MOT dataset and the popular UA-DETRAC challenge [45]. The former is to demonstrate both the effectiveness of our network for MOT and trainability of deep models for MOT with Omni-MOT. We mainly focus on ‘vehicle’ tracking in this work. The proposed dataset is also for the vehicle tracking problem. Whereas DMM-Net is trained here for vehicle tracking, it is possible to train it for e.g.. pedestrian tracking.

Implementation Details: We implement the DMM-Net using Pytorch [32] and train it using NVIDIA GeForce GTX Titan GPU. The hyper-parameter values are selected with cross-validation to maximize the MOTA metric on the validation set of UA-DETRAC. The chosen values of the hyperparameters are as follows. Batch size \(B = 8\), number of training epochs per model \(= 20\), number of input frames \(= 16\), input frame size \(= 168 \times 168\), We use Adam Optimizer [24] for training. Other hyper-parameter values are \(\delta _{c}=0.4, \delta _{nms}=0.3,\) and \(\delta _o=0.5\).

Omni-MOT Dataset: To demonstrated the efficacy of DMM-Net and trainability of deep models on our dataset, we select five scenes from the Omni-MOT and perform tracking. The scenes are chosen with Easy camera viewpoint with clear weather conditions. The selected scenes are from Town 02 (with 50 vehicles) that are indexed 0, 1, 5, 7 and 8 in the dataset. In this experiment, we train and test DMM-Net using the train and test partitions of the selected scene, as provided by Omni-MOT. Our training required 59.2 h for 22 epochs.

We use both CLEAR MOT [5], and MT/ML [35] metrics and summarize the results in Table 4. For the definitions of metrics we refer to the original works. We refer to [25] for the details on the comprehensive metrics MOTA and MOTAP. The table provides metric values for both training and test partitions. The variation between these values is a clear indication that despite the Easy camera view, the dataset provides reasonable challenges for the MOT task. We provide results with additional view points in the supplementary material to further put the reported values into better perspective.

An illustration of tracking for Camera\(\_0\) is also provided in Fig. 7 (top). Our technique is able to easily track vehicles that are stationary, (e.g. 1), moving along a straight path (e.g. 2), and moving along a curved path (e.g. 3), which justifies the selection of our motion model. Additionally, our tracker has the power to deal with even full occlusions between the frames. We show such a case for a hard camera view point in the middle row of Fig. 7. In this case, vehicle-1 is totally occluded at the \(86^{th}\) frame which can be detected and tracked by our tracker. At the \(99^{th}\) frame, vehicle-1 is partially occluded, but the tracklets association performs correctly. Note that, our tracker does not need to be evaluated with (detector-based) UA-DETRAC metrics [45] as the Omni-MOT dataset provides ground truth detections without using off-the-shelf detectors.

UA-DETRAC: The UA-DETRAC challenge [45] is arguably the most widely used large-scale benchmark for MOT of vehicles. It comprises 100 videos @ 25 fps, recorded in 24 locations with frame size \(540\times 960\). For this challenge, results are computed by a remote server using CLEAR MOT and MT/ML metrics with Precision-Recall curve of the detection. We refer to [45] for further details on the metrics. In Table 5, we show our results on the UA-DETRAC challenge. The pre-fix PR for the metrics indicates the use of Precision-Recall curve. It can be seen that DMM-Net achieves excellent MOTA score, which is widely considered as the most comprehensive metric for MOT. We also provide results for DMM-Net+ which augments the UA-DETRAC training set with a subset of Omni-MOT. This subset contained 8 random videos from Omni-MOT. We can see an overall performance gain with this augmentation, exemplifying the utility of Omni-MOT in data augmentation.

Notice that our technique does not require an external ‘detector’ and achieves highly promising PR-MOTA score for UA-DETRAC @ 120+ fps - orders of magnitude faster than the existing methods. In the third row of Fig. 7, we show an example of tracking result. The box color indicates the predicted object identity. Our tracker leverages information from previous frames to detect partially occluded objects, e.g.. vehicle-3 in frame 518. The figure also shows the generalization power of our in-built detection mechanism. For instance, vehicle-1 is a very rare vehicle that is consistently assigned the correct identity. In the supplementary material, we provide further example tracking videos that clearly demonstrate successful tracking by DMM-Net for UA-DETRAC.

6 Conclusion

In the context of tracking-by-detection, we proposed a deep network DMM-Net that removes the need for an explicit external detector and performs tracking at 120+ fps to achieve 12.80% PR-MOTA value on the UA-DETRAC challenge. Our network meticulously models object motions, classes and their visibility that are subsequently used for efficiently associating the object tracks. The proposed network provides an end-to-end solution for detection and tracklet generation across multiple video frames. We also propose a realistic CARLA simulator based large-scale dataset with over 14M frames for vehicle tracking. The dataset provides precise and comprehensive ground truth with full control over data parameters, which allows for the much needed transparency in evaluation. We also provide scripts to generate more data under our framework and we make the code of DMM-Net public.

Notes

- 1.

Notation are adopted from the original work.

References

Andriyenko, A., Schindler, K.: Multi-target tracking by continuous energy minimization. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 1265–1272 (2011). https://doi.org/10.1109/CVPR.2011.5995311

Andriyenko, A., Schindler, K., Roth, S.: Discrete-continuous optimization for multi-target tracking. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 1926–1933 (2012). https://doi.org/10.1109/CVPR.2012.6247893

Bae, S.H., Yoon, K.J.: Confidence-based data association and discriminative deep appearance learning for robust online multi-object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 40(3), 595–610 (2018). https://doi.org/10.1109/TPAMI.2017.2691769

Berclaz, J., Fleuret, F., Türetken, E., Fua, P.: Multiple object tracking using k-shortest paths optimization. IEEE TPAMI 33(9), 1806–1819 (2011). https://doi.org/10.1109/TPAMI.2011.21

Bernardin, K., Stiefelhagen, R.: Evaluating multiple object tracking performance: the CLEAR MOT metrics. Eurasip J. Image Video Process. 2008 (2008). https://doi.org/10.1155/2008/246309

Bochinski, E., Eiselein, V., Sikora, T.: High-Speed tracking-by-detection without using image information. In: 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance, AVSS 2017 (2017). https://doi.org/10.1109/AVSS.2017.8078516

Butt, A.A., Collins, R.T.: Multi-target tracking by Lagrangian relaxation to min-cost network flow. In: Proceedings of CVPR, pp. 1846–1853 (2013)

Chari, V., Lacoste-Julien, S., Laptev, I., Sivic, J.: On pairwise costs for network flow multi-object tracking. In: Proceedings of CVPR, 07–12 June, pp. 5537–5545 (2015). https://doi.org/10.1109/CVPR.2015.7299193

Dai, J., Li, Y., He, K., Sun, J.: R-FCN: object detection via region-based fully convolutional networks. In: NIPS 2016 Proceedings of the 30th International Conference on Neural Information Processing Systems, pp. 379–387 (2016). https://academic.microsoft.com/paper/2407521645

Dehghan, A., Modiri Assari, S., Shah, M.: GMMCP tracker: globally optimal generalized maximum multi clique problem for multiple object tracking. In: Proceedings of CVPR, pp. 4091–4099 (2015)

Dicle, C., Camps, O.I., Sznaier, M.: The way they move: Tracking multiple targets with similar appearance. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2304–2311 (2013). https://doi.org/10.1109/ICCV.2013.286

Dollar, P., Appel, R., Belongie, S., Perona, P.: Fast feature pyramids for object detection. IEEE Trans. Pattern Anal. Mach. Intell. (2014). https://doi.org/10.1109/TPAMI.2014.2300479

Dosovitskiy, A., Ros, G., Codevilla, F., Lopez, A., Koltun, V.: CARLA: an Open Urban Driving Simulator. In: Proceedings of the 1st Annual Conference on Robot Learning, pp. 1–16 (2017). http://arxiv.org/abs/1711.03938

Emami, P., Pardalos, P.M., Elefteriadou, L., Ranka, S.: Machine learning methods for solving assignment problems in multi-target tracking. arXiv:1802.068971(1), 1–35 (2018)

Feichtenhofer, C., Pinz, A., Zisserman, A.: Detect to track and track to detect. In: Proceedings of the IEEE International Conference on Computer Vision 2017-October, pp. 3057–3065 (2017). https://doi.org/10.1109/ICCV.2017.330

Felzenszwalb, P.F., Girshick, R.B., McAllester, D., Ramanan, D.: Object detection with discriminatively trained part-based models. IEEE TPAMI 32(9), 1627–1645 (2010)

Ferryman, J., Shahrokni, A.: PETS2009: Dataset and challenge. In: Proceedings of the 12th IEEE International Workshop on Performance Evaluation of Tracking and Surveillance, PETS-Winter 2009 (2009). https://doi.org/10.1109/PETS-WINTER.2009.5399556

Geiger, A., Lenz, P., Urtasun, R.: Are we ready for autonomous driving? the KITTI vision benchmark suite. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 3354–3361 (2012). https://doi.org/10.1109/CVPR.2012.6248074

Girshick, R., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (2014). https://doi.org/10.1109/CVPR.2014.81

Hara, K., Kataoka, H., Satoh, Y.: Can Spatiotemporal 3D CNNs Retrace the History of 2D CNNs and ImageNet? In: CVPR, pp. 6546–6555 (2018). https://doi.org/10.1109/CVPR.2018.00685, http://arxiv.org/abs/1711.09577

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (2016). https://doi.org/10.1109/CVPR.2016.90

Ioffe, S., Szegedy, C.: Batch normalization: accelerating deep network training by reducing internal covariate shift. arXiv (2015)

Iqbal, U., Milan, A., Gall, J.: PoseTrack: joint multi-person pose estimation and tracking. In: Proceedings - 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017 (2017). https://doi.org/10.1109/CVPR.2017.495

Kingma, D.P., Ba, J.L.: Adam: a Method for Stochastic Optimization. In: ICLR 2015 : International Conference on Learning Representations 2015 (2015). https://academic.microsoft.com/paper/2964121744

Leal-Taixé, L., Milan, A., Reid, I., Roth, S., Schindler, K.: MOTChallenge 2015: towards a benchmark for multi-target tracking. arXiv:1504.01942 [cs] pp. 1–15 (2015). http://arxiv.org/abs/1504.01942

Liu, W., et al.: SSD: single shot multibox detector. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9905, pp. 21–37. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46448-0_2

Luo, W., et al.: Multiple object tracking: a literature review. arXiv:1409.7618v4, pp. 1–18 (2017). https://doi.org/10.1145/0000000.0000000

Milan, A., Leal-Taixe, L., Reid, I., Roth, S., Schindler, K.: MOT16: a benchmark for multi-object tracking. CoRR abs/1603.0 (2016). http://arxiv.org/abs/1603.00831

Milan, A., Roth, S., Schindler, K.: Continuous energy minimization for multitarget tracking. IEEE Trans. Pattern Anal. Mach. Intell. 36(1), 58–72 (2014). https://doi.org/10.1109/TPAMI.2013.103

Munkres, J.: Algorithms for the assignment and transportation problems. J. Soc. Ind. Appl. Math. 5(1), 32–38 (1957). https://doi.org/10.1137/0105003

Nair, V., Hinton, G.: Rectified linear units improve restricted Boltzmann machines. In: Proceedings of the 27th International Conference on Machine Learning (2010)

Paszke, A., et al.: Automatic differentiation in PyTorch. Adv. Neural Inf. Process. Syst. 30(Nips), 1–4 (2017)

Pirsiavash, H., Ramanan, D., Fowlkes, C.C.: Globally-optimal greedy algorithms for tracking a variable number of objects. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 1201–1208 (2011). https://doi.org/10.1109/CVPR.2011.5995604

Reid, D., et al.: An algorithm for tracking multiple targets. IEEE Trans. Autom. Control 24(6), 843–854 (1979)

Ristani, E., Solera, F., Zou, R., Cucchiara, R., Tomasi, C.: Performance measures and a data set for multi-target, multi-camera tracking. In: Hua, G., Jégou, H. (eds.) ECCV 2016. LNCS, vol. 9914, pp. 17–35. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-48881-3_2

Ristani, E., Tomasi, C.: Tracking multiple people online and in real time. In: Cremers, D., Reid, I., Saito, H., Yang, M.-H. (eds.) ACCV 2014. LNCS, vol. 9007, pp. 444–459. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-16814-2_29

Roshan Zamir, A., Dehghan, A., Shah, M.: GMCP-tracker: global multi-object tracking using generalized minimum clique graphs. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7573, pp. 343–356. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33709-3_25

Shafique, K., Shah, M.: A noniterative greedy algorithm for multiframe point correspondence. IEEE Trans. Pattern Anal. Mach. Intell. 27(1), 51–65 (2005)

Sheng, H., Zhang, Y., Chen, J., Xiong, Z., Zhang, J.: Heterogeneous association graph fusion for target association in multiple object tracking. IEEE Trans. Circ. Syst. Video Technol. 29, 3269–3280 (2018)

Shitrit, H.B., Berclaz, J., Fleuret, F., Fua, P.: Multi-commodity network flow for tracking multiple people. IEEE TPAMI 36(8), 1614–1627 (2014)

Shu, G., Dehghan, A., Oreifej, O., Hand, E., Shah, M.: Part-based multiple-person tracking with partial occlusion handling. In: Proceedings of CVPR, pp. 1815–1821. IEEE (2012)

Sun, S., Akhtar, N., Song, H., Mian, A., Shah, M.: Deep affinity network for multiple object tracking 13(9), 1–15 (2018). http://arxiv.org/abs/1810.11780

Tian, Y., Dehghan, A., Shah, M.: On detection, data association and segmentation for multi-target tracking. IEEE Trans. Pattern Anal. Mach. Intell. 41, 2146–2160 (2018)

Voigtlaender, P., et al.: Mots: multi-object tracking and segmentation. In: Proceedings of CVPR, pp. 7942–7951 (2019)

Wen, L., et al.: UA-DETRAC: a new benchmark and protocol for multi-object detection and tracking (2015). http://arxiv.org/abs/1511.04136

Wen, L., Du, D., Li, S., Bian, X., Lyu, S.: Learning non-uniform hypergraph for multi-object tracking. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, pp. 8981–8988 (2019)

Wen, L., Li, W., Yan, J., Lei, Z., Yi, D., Li, S.Z.: Multiple target tracking based on undirected hierarchical relation hypergraph. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 1, pp. 1282–1289 (2014). https://doi.org/10.1109/CVPR.2014.167

Wu, B., Nevatia, R.: Detection and tracking of multiple, partially occluded humans by Bayesian combination of edgelet based part detectors. Int. J. Comput. Vis. 75(2), 247–266 (2007)

Zhu, J., Yang, H., Liu, N., Kim, M.: Online Multi-Object Tracking with Dual Matching Attention Networks, pp. 1–17 (2019)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

1 Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Sun, S., Akhtar, N., Song, X., Song, H., Mian, A., Shah, M. (2020). Simultaneous Detection and Tracking with Motion Modelling for Multiple Object Tracking. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, JM. (eds) Computer Vision – ECCV 2020. ECCV 2020. Lecture Notes in Computer Science(), vol 12369. Springer, Cham. https://doi.org/10.1007/978-3-030-58586-0_37

Download citation

DOI: https://doi.org/10.1007/978-3-030-58586-0_37

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-58585-3

Online ISBN: 978-3-030-58586-0

eBook Packages: Computer ScienceComputer Science (R0)