Abstract

Underwater optical images often suffer from color casts, blurred edges and low contrast because of light absorption and scattering in water. To address these problems, we propose an effective technique for improving underwater image quality. First, we introduce a color balance strategy based on an affine transform to correct the color distortion. We then convert the image from the RGB color space to the CIE-Lab color space for contrast improvement. In the nonsubsampled contourlet transform (NSCT) domain of the ‘L’ component, global contrast adjustment is applied to the lowpass subband and multi-scale edge sharpening to the bandpass directional subbands. Finally, a color-corrected and contrast-enhanced output image is generated by the inverse NSCT and conversion back to the RGB color space. The proposed method is a single-image approach that requires no prior knowledge of the underwater imaging conditions. Experimental results show that our method outperforms state-of-the-art methods in both qualitative and quantitative evaluations. It generally produces good perceptual quality, with significantly enhanced global contrast, color and structural detail.

1 Introduction

In recent years, underwater imaging has been increasingly used in practical applications such as water conservancy projects, marine biology research, underwater archaeology and underwater robot vision [1]. Acquiring clear images of underwater environments is one of the primary issues in these scientific missions [2]. However, underwater images often suffer from poor visibility due to the absorption and scattering of light as it propagates through water. The attenuation of light depends on both its wavelength and the distance it travels. Attenuation reduces the light energy, and its wavelength dependence causes color distortions that increase with an object’s distance. In addition, scattering by suspended particles in the water degrades contrast and produces a hazy appearance in which edge details are lost. An artificial light source is often added to the imaging device to extend the visibility range in the scene, which in turn leads to uneven illumination. Restoring images degraded by the underwater environment is therefore challenging. Improving their visual quality is of great importance for further image processing such as edge detection, image segmentation [3] and target recognition [4].

Generally, algorithms for restoring and enhancing underwater images fall into two categories: physics-based methods and image-based methods [5]. Physics-based methods enhance underwater images by modeling the physics of light propagation in water. The Dark Channel Prior (DCP) dehazing method [6], designed for outdoor hazy scenes, is widely used in underwater image restoration [7,8,9,10,11,12] because light scattering has a similar effect in both settings. The wavelength compensation and image dehazing (WCID) algorithm [7] segments the foreground and background regions based on DCP. It then uses this information to remove haze and color distortion by compensating for the light attenuated along the propagation path. Galdran et al. [11] introduce the Red Channel Prior (RCP), which can be interpreted as a variant of DCP, to recover the colors associated with short wavelengths in underwater images. Drews et al. [12] present the Underwater Dark Channel Prior (UDCP), which assumes that the blue and green color channels are the main source of visual information underwater.
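The three priors above differ mainly in which color channels enter the minimum. The following is a minimal NumPy sketch of how these priors are commonly computed for an RGB image scaled to [0, 1]. The patch size, the helper names and the synthetic test image are illustrative assumptions. Atmospheric-light and transmission estimation, which the full methods [6, 11, 12] also need, are omitted. The sketch requires NumPy 1.20 or later for `sliding_window_view`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _local_min(channel_min: np.ndarray, patch: int) -> np.ndarray:
    """Minimum over a patch x patch neighbourhood; edge padding keeps the input shape (patch must be odd)."""
    r = patch // 2
    padded = np.pad(channel_min, r, mode="edge")
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))


def dark_channel(img: np.ndarray, patch: int = 15) -> np.ndarray:
    """DCP: minimum over R, G and B, then over a local patch."""
    return _local_min(img.min(axis=2), patch)


def underwater_dark_channel(img: np.ndarray, patch: int = 15) -> np.ndarray:
    """UDCP: same as DCP, but only the G and B channels are used."""
    return _local_min(img[..., 1:].min(axis=2), patch)


def red_channel(img: np.ndarray, patch: int = 15) -> np.ndarray:
    """RCP: the red channel is inverted (1 - R) before taking the minimum with G and B."""
    stacked = np.stack([1.0 - img[..., 0], img[..., 1], img[..., 2]], axis=2)
    return _local_min(stacked.min(axis=2), patch)


# Synthetic "underwater" patch: red fully absorbed, green and blue still present.
img = np.zeros((8, 8, 3))
img[..., 1], img[..., 2] = 0.6, 0.8
print(dark_channel(img, 3).max())             # 0.0: DCP is dominated by the missing red channel
print(underwater_dark_channel(img, 3).max())  # 0.6: UDCP ignores red
print(red_channel(img, 3).max())              # 0.6: inverted red no longer forces the minimum to 0
```

The toy example shows why plain DCP misbehaves underwater. The near-zero red channel drives the dark channel to zero everywhere, whereas UDCP and RCP still respond to the green and blue content.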

Since physics-based methods are sensitive to modeling assumptions and parameters, they may fail in highly variable underwater environments. Image-based methods do not rely on a physical model of image formation and are usually simpler and more efficient than physics-based methods. Li et al. [13] propose an underwater image enhancement method that combines dehazing based on a minimum information loss principle with contrast enhancement based on a histogram distribution prior. Fu et al. [14] introduce a two-step enhancement strategy to address color shift and contrast degradation. The approach of Ancuti et al. [15] fuses two images derived directly from a color-corrected version of the original degraded image. For shallow-water images, Huang et al. [16] present a relative global histogram stretching (RGHS) method based on adaptive parameter acquisition. These algorithms improve global contrast, edge sharpness and color naturalness to produce a high-quality image.

In this paper, we propose a novel enhancement method for single underwater images. First, we introduce an effective color balance algorithm to correct the color distortion caused by the wavelength-selective absorption of light along the scene depth. After the undesired color casts are removed, the image is converted from the RGB color space to the CIE-Lab color space. In CIE-Lab, the ‘L’ component, which corresponds to image lightness, is used to enhance contrast and details, while the ‘a’ and ‘b’ components are preserved. We then apply global contrast adjustment to the lowpass subband and multi-scale edge sharpening to the bandpass directional subbands in the nonsubsampled contourlet transform (NSCT) domain of the ‘L’ component. The final enhanced image is obtained by the inverse NSCT and conversion back to RGB. In this way, image contrast and edges are both enhanced while colors are balanced. Qualitative and quantitative comparisons show the superiority of our method over existing methods. Moreover, our method can be applied effectively in underwater machine vision systems.

The rest of this paper is organized as follows. Sect. 2 describes the proposed underwater image enhancement method. Sect. 3 presents the experimental results and performance evaluation. Sect. 4 draws conclusions.

2 The Proposed Approach

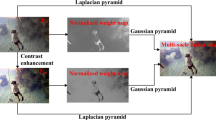

In our approach, color balance compensates for the color cast caused by the depth-dependent selective absorption of colors. Image enhancement then sharpens the edges and details of the scene to mitigate the loss of contrast caused by backscattering. Figure 1 shows the overall processing pipeline of the proposed method. First, we apply an affine transform based on cumulative histogram statistics to each channel in the RGB color space for color correction. In the CIE-Lab color space, the ‘L’ component corresponds to image brightness, while the ‘a’ and ‘b’ components correspond to image chrominance. Because luminance and chromaticity are independent in CIE-Lab, we convert the underwater image from RGB to CIE-Lab for contrast enhancement. We then apply the multiscale NSCT to obtain the lowpass subband coefficients and the bandpass directional subband coefficients of the ‘L’ component image. The NSCT involves no subsampling, so each pixel of a transform subband corresponds to the pixel of the original image at the same spatial location. Geometric information can therefore be gathered pixel by pixel from the NSCT coefficients. Applying a global contrast enhancement strategy to the lowpass subband eliminates shadows and uneven illumination and stretches the global dynamic range. In addition, adaptive multi-scale nonlinear mapping is applied to the bandpass directional subbands to sharpen edges and remove noise. Finally, a color-corrected and contrast-enhanced output image is generated by the inverse NSCT and conversion back to the RGB color space.
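The pixel-to-pixel correspondence described above comes from the NSCT skipping all downsampling. The NSCT itself combines a nonsubsampled pyramid with a nonsubsampled directional filter bank [18]. The sketch below reproduces only the flavour of the first stage, using an à trous ("with holes") B3-spline pyramid as a stand-in. The kernel, the number of levels and all function names are assumptions for illustration. They are not the NSCT filters used in the paper, and there is no directional split.

```python
import numpy as np

B3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0  # B3-spline lowpass kernel (assumed stand-in, not NSCT filters)


def _smooth(img: np.ndarray, level: int) -> np.ndarray:
    """Separable B3 smoothing with 2**level - 1 zeros ('holes') between taps; no downsampling."""
    step = 2 ** level
    kernel = np.zeros(4 * step + 1)
    kernel[::step] = B3
    r = len(kernel) // 2  # reflect padding: keep the image larger than this radius
    out = img
    for axis in (0, 1):
        pad = [(0, 0), (0, 0)]
        pad[axis] = (r, r)
        padded = np.pad(out, pad, mode="reflect")
        out = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), axis, padded)
    return out


def nonsubsampled_pyramid(img: np.ndarray, levels: int = 3) -> list:
    """Return [bandpass_1, ..., bandpass_levels, lowpass]; every subband has the input's shape."""
    bands, current = [], img.astype(float)
    for j in range(levels):
        smoother = _smooth(current, j)
        bands.append(current - smoother)  # detail removed between two successive smoothing levels
        current = smoother
    return bands + [current]


def reconstruct(subbands: list) -> np.ndarray:
    """Inverse of this additive scheme: the subbands telescope back to the image when summed."""
    return np.sum(subbands, axis=0)


rng = np.random.default_rng(0)
lightness = rng.random((32, 32)) * 100  # stand-in for an 'L' channel
subbands = nonsubsampled_pyramid(lightness, levels=3)
print([s.shape for s in subbands])              # four (32, 32) arrays on the input's pixel grid
print(np.allclose(reconstruct(subbands), lightness))  # True: perfect reconstruction
```

Every subband lives on the same pixel grid as the input. Coefficient (i, j) in any band therefore describes the neighbourhood of image pixel (i, j), which is the property the paragraph relies on.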

2.1 Underwater Color Balance

Underwater light attenuation changes colors to different degrees, depending on the wavelength of each light beam. As depth increases, red light, which has a longer wavelength, is attenuated faster than green and blue light. This gives underwater images their characteristic bluish-greenish tone. To correct the color cast, we apply an affine transform based on the cumulative histogram distribution to each channel in the RGB color space.

The assumption [17] underlying this algorithm is that the highest values of R, G and B observed in the image correspond to white, and the lowest values to black. The values of each channel can then be stretched to occupy the full range [0, 255] by applying an affine transform to that channel. To account for possible outliers in the histogram, we first clip a small percentage of the pixels at both ends of the histogram, saturating them to the boundary values of the remaining pixel range. The affine transform is applied after this clipping. When performing color balance on the N pixels of each channel, we saturate a percentage \( c_{1} \) of the pixels on the left side of the histogram to \( V_{\hbox{min} } \), and a percentage \( c_{2} \) of the pixels on the right side to \( V_{\hbox{max} } \). The saturation extrema \( V_{\hbox{min} } \) and \( V_{\hbox{max} } \) can be found from the cumulative histogram of the pixel values, whose bucket labeled i contains the number of pixels with values lower than or equal to i. \( V_{\hbox{min} } \) is the lowest histogram label with a value larger than \( N \times c_{1} \). \( V_{\hbox{max} } \) is the label immediately following the highest histogram label with a value lower than or equal to \( N \times \left( {1 - c_{2} } \right) \). Finally, the pixel interval \( [V_{\hbox{min} } ,V_{\hbox{max} } ] \) is mapped to [0, 255] by the affine transformation

\[ f\left( x \right) = \begin{cases} 0, & x < V_{\hbox{min} } \\ 255 \times \dfrac{x - V_{\hbox{min} } }{V_{\hbox{max} } - V_{\hbox{min} } }, & V_{\hbox{min} } \le x \le V_{\hbox{max} } \\ 255, & x > V_{\hbox{max} } \end{cases} \]

where \( x \) denotes the input pixel value and \( f\left( x \right) \) the output pixel value. The percentages \( c_{1} \) and \( c_{2} \) are chosen according to:

where \( ratio\left( \lambda \right) \) is an adjustment factor for channel \( \lambda \in \{ R,G,B\} \), defined as:

where \( I_{\lambda } \) denotes the \( \lambda \) color channel of the image I.
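The histogram-based stretch described above can be sketched in NumPy as follows. The sketch finds \( V_{\hbox{min} } \) and \( V_{\hbox{max} } \) with the cumulative-histogram rule stated in the text, saturates the outliers, and applies the affine map to [0, 255]. The paper chooses \( c_{1} \) and \( c_{2} \) per channel from \( ratio\left( \lambda \right) \). That formula is not reproduced here, so the fixed 0.5% defaults and all function names are placeholder assumptions. As stated, the \( V_{\hbox{max} } \) rule only saturates anything when \( c_{2} > 0 \).

```python
import numpy as np


def saturation_extrema(channel: np.ndarray, c1: float, c2: float) -> tuple:
    """V_min and V_max of an 8-bit channel, using the cumulative-histogram rule from the text."""
    n = channel.size
    cum = np.cumsum(np.bincount(channel.ravel(), minlength=256))  # cum[i] = #pixels with value <= i
    v_min = int(np.argmax(cum > n * c1))             # lowest label whose count exceeds N*c1
    below = np.nonzero(cum <= n * (1.0 - c2))[0]     # labels whose count is <= N*(1-c2)
    v_max = int(below[-1]) + 1 if below.size else v_min  # label right after the highest such label
    return v_min, min(v_max, 255)


def balance_channel(channel: np.ndarray, c1: float, c2: float) -> np.ndarray:
    """Saturate outliers to [V_min, V_max], then map that interval affinely onto [0, 255]."""
    v_min, v_max = saturation_extrema(channel, c1, c2)
    if v_max <= v_min:  # flat channel: nothing to stretch
        return channel.copy()
    x = np.clip(channel.astype(float), v_min, v_max)
    return np.round((x - v_min) * 255.0 / (v_max - v_min)).astype(np.uint8)


def color_balance(rgb: np.ndarray, c1=(0.005,) * 3, c2=(0.005,) * 3) -> np.ndarray:
    """Per-channel balance of an 8-bit RGB image.

    c1/c2 hold one percentage per channel. The defaults are placeholders (assumption),
    not the ratio(lambda)-based values used in the paper.
    """
    return np.stack([balance_channel(rgb[..., k], c1[k], c2[k]) for k in range(3)], axis=2)


rng = np.random.default_rng(1)
underwater = np.stack([rng.integers(10, 60, (64, 64)),     # weak red
                       rng.integers(80, 200, (64, 64)),    # green
                       rng.integers(100, 220, (64, 64))],  # blue
                      axis=2).astype(np.uint8)
balanced = color_balance(underwater)
print(balanced.reshape(-1, 3).min(axis=0), balanced.reshape(-1, 3).max(axis=0))  # each channel now spans 0..255
```

Because each channel is stretched independently, the weak red channel is expanded much more than green and blue. This is what removes the bluish-greenish cast.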

Although color balance is crucial for recovering colors, this correction step alone is not sufficient, because scattering has degraded the edges and details of the scene. In the next subsection, we therefore propose an effective enhancement approach to deal with the hazy appearance of the color-corrected image.

2.2 Adaptive Underwater Image Enhancement in CIE-Lab Color Space

In the CIE-Lab color space, the ‘L’ component corresponds to image luminance, while the ‘a’ and ‘b’ components correspond to image chrominance. After the undesired color casts are removed, the underwater image is converted from RGB to CIE-Lab for contrast enhancement. The NSCT [18] can effectively capture the geometric and directional information of images at different scales and in different directions. We therefore adopt a multiscale NSCT-based method to enhance the ‘L’ component image, while the ‘a’ and ‘b’ components remain unchanged. Applying the NSCT to the ‘L’ component image yields two kinds of subband. The lowpass subband contains the overall contrast information and is almost noise-free. A series of bandpass directional subbands contain edges as well as noise. We then process the lowpass subband and the bandpass directional subbands separately.
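The following sketches the color-space round trip that isolates the ‘L’ component, under stated assumptions. It uses the standard sRGB (D65) transfer curve, RGB-to-XYZ matrix and white point, since the paper does not specify its exact conversion constants. The function `enhance_lightness` and its `process_L` argument are hypothetical stand-ins for the NSCT-based stage. The ‘a’ and ‘b’ planes pass through untouched, and only out-of-gamut results are clipped on the way back.

```python
import numpy as np

# Standard sRGB (D65) <-> CIE-Lab constants (assumed; the paper does not list its own).
_M = np.array([[0.4124564, 0.3575761, 0.1804375],
               [0.2126729, 0.7151522, 0.0721750],
               [0.0193339, 0.1191920, 0.9503041]])
_M_INV = np.linalg.inv(_M)
_WHITE = np.array([0.95047, 1.0, 1.08883])
_DELTA = 6.0 / 29.0


def rgb_to_lab(rgb: np.ndarray) -> np.ndarray:
    """sRGB in [0, 1] with shape (H, W, 3) -> CIE-Lab (L in [0, 100])."""
    lin = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    t = (lin @ _M.T) / _WHITE  # XYZ normalised by the white point
    f = np.where(t > _DELTA ** 3, np.cbrt(t), t / (3 * _DELTA ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)


def lab_to_rgb(lab: np.ndarray) -> np.ndarray:
    """Inverse of rgb_to_lab; out-of-gamut linear values are clipped to [0, 1]."""
    fy = (lab[..., 0] + 16.0) / 116.0
    f = np.stack([fy + lab[..., 1] / 500.0, fy, fy - lab[..., 2] / 200.0], axis=-1)
    t = np.where(f > _DELTA, f ** 3, 3 * _DELTA ** 2 * (f - 4.0 / 29.0))
    lin = np.clip((t * _WHITE) @ _M_INV.T, 0.0, 1.0)
    return np.where(lin <= 0.0031308, 12.92 * lin, 1.055 * lin ** (1 / 2.4) - 0.055)


def enhance_lightness(rgb: np.ndarray, process_L) -> np.ndarray:
    """Apply a hypothetical L-channel operator; the a and b channels are left unchanged."""
    lab = rgb_to_lab(rgb)
    lab[..., 0] = process_L(lab[..., 0])
    return lab_to_rgb(lab)


rng = np.random.default_rng(0)
dim = rng.random((16, 16, 3)) * 0.5  # dark, low-contrast test image
stretched = enhance_lightness(dim, lambda L: (L - L.min()) * 100.0 / (L.max() - L.min()))
```

The lambda above is a simple lightness stretch used only to exercise the plumbing. In the proposed method, that slot is filled by the NSCT decomposition, the subband processing and the inverse NSCT.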

Global Contrast Adjustment for Lowpass Subband.

The NSCT lowpass subband represents the overall contrast of the image. Because underwater images are often non-uniformly illuminated, uneven brightness tends to appear in the NSCT lowpass subband. We therefore use the multiscale retinex (MSR) approach [19, 20] to remove the inhomogeneous illumination. We then propose an adaptive global contrast adjustment function to manipulate the lowpass subband coefficients. At this stage, the global dynamic range of the image is adjusted to improve contrast and to eliminate shadows and uneven illumination. The adaptive global contrast adjustment function is defined as follows:

where

\( C_{(1,0)} \) is the NSCT lowpass subband coefficient at the first scale, i.e., the coarsest scale. \( \hat{C}_{(1,0)} \) is the processed NSCT lowpass subband coefficient at the first scale.

\( C_{\hbox{max} (1,0)} \) denotes the maximum absolute coefficient amplitude in the lowpass subband.

\( \bar{C}_{(1,0)} \) denotes the mean absolute coefficient amplitude in the lowpass subband.
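The following sketch shows the illumination-removal step and the two lowpass statistics defined above. It uses an equal-weight MSR, the mean over scales of \( \log I - \log (G_{\sigma} * I) \), with Gaussian surrounds. The scales (2, 8, 32), the edge padding and the positive shift applied to non-positive input are illustrative assumptions, not values from the paper. The adaptive global contrast adjustment function itself is not reproduced.

```python
import numpy as np


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian filter, truncated at 3 sigma, with edge padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x ** 2 / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    out = img
    for axis in (0, 1):
        pad = [(0, 0), (0, 0)]
        pad[axis] = (radius, radius)
        padded = np.pad(out, pad, mode="edge")
        out = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), axis, padded)
    return out


def multiscale_retinex(band: np.ndarray, sigmas=(2.0, 8.0, 32.0), eps=1e-6) -> np.ndarray:
    """Equal-weight MSR: mean over scales of log(I) - log(G_sigma * I).

    A band that is not strictly positive is shifted before the log (assumption).
    """
    positive = band if band.min() > 0 else band - band.min() + eps
    log_i = np.log(positive)
    return np.mean([log_i - np.log(gaussian_blur(positive, s)) for s in sigmas], axis=0)


def lowpass_statistics(coeffs: np.ndarray) -> tuple:
    """C_max(1,0) and C_bar(1,0): maximum and mean absolute coefficient amplitude."""
    mags = np.abs(coeffs)
    return float(mags.max()), float(mags.mean())


rng = np.random.default_rng(0)
reflectance = 40 + 20 * rng.random((64, 64))
illumination = np.linspace(0.3, 1.0, 64)[None, :] * np.ones((64, 1))  # dark left, bright right
band = reflectance * illumination  # stand-in for an unevenly lit lowpass subband
flattened = multiscale_retinex(band)
c_max, c_mean = lowpass_statistics(flattened)
```

Because each retinex term is a log ratio, a multiplicative illumination gain cancels. This is why MSR suits a lowpass subband whose brightness varies smoothly across the scene.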

Multi-scale Edge Sharpening for Bandpass Direction Subbands.

The NSCT bandpass directional subbands contain image edges at different scales and directions, together with noise. In these subbands, edges tend to correspond to large NSCT coefficients and noise to small ones, so noise can be effectively suppressed by thresholding. We use the hard-thresholding rule to estimate the unknown noise-free NSCT coefficients: coefficients whose magnitude falls below the threshold are set to zero. In this paper, the threshold for each bandpass directional subband is chosen as

\[ T_{s,d} = k\,\sigma \,\tilde{\sigma }_{s,d} \]

where k = 4 for the finest scale and k = 3 for the remaining ones. The noise standard deviation \( \sigma \) and the individual variances \( \tilde{\sigma }_{s,d}^{2} \) are calculated using Monte Carlo simulations [21, 22].
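The following sketches the thresholding step. It assumes the threshold form \( T_{s,d} = k\,\sigma \,\tilde{\sigma }_{s,d} \) commonly used in contourlet/NSCT denoising [18, 22]. \( \tilde{\sigma }_{s,d} \) is estimated by Monte Carlo as the standard deviation of a subband's response to unit-variance white noise. Because a full NSCT does not fit here, `detail_band` is a hypothetical one-subband linear transform: the image minus its 3×3 circular mean. It stands in for a real NSCT subband. The noise level \( \sigma \) is simply assumed known in the usage lines.

```python
import numpy as np


def hard_threshold(coeffs: np.ndarray, threshold: float) -> np.ndarray:
    """Keep coefficients whose magnitude reaches the threshold; zero the rest."""
    return np.where(np.abs(coeffs) >= threshold, coeffs, 0.0)


def subband_thresholds(sigma: float, sigma_tilde: dict, finest_scale: int) -> dict:
    """T_{s,d} = k * sigma * sigma_tilde[(s, d)], with k = 4 at the finest scale and k = 3 elsewhere."""
    return {(s, d): (4.0 if s == finest_scale else 3.0) * sigma * st
            for (s, d), st in sigma_tilde.items()}


def detail_band(img: np.ndarray) -> np.ndarray:
    """Hypothetical single-subband linear transform: image minus its 3x3 circular mean."""
    padded = np.pad(img, 1, mode="wrap")
    h, w = img.shape
    mean = sum(padded[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0
    return img - mean


def monte_carlo_sigma_tilde(transform, shape=(64, 64), trials=20, seed=0) -> float:
    """Std of the transform's output for unit-variance white noise (sigma_tilde of that subband)."""
    rng = np.random.default_rng(seed)
    samples = [transform(rng.standard_normal(shape)) for _ in range(trials)]
    return float(np.std(samples))


sigma_tilde = {(2, 0): monte_carlo_sigma_tilde(detail_band)}  # one subband; scale 2 treated as finest
T = subband_thresholds(sigma=5.0, sigma_tilde=sigma_tilde, finest_scale=2)  # sigma assumed known
rng = np.random.default_rng(1)
clean = np.kron(rng.random((8, 8)) * 255, np.ones((8, 8)))  # blocky 64x64 test image
noisy_coeffs = detail_band(clean + 5.0 * rng.standard_normal(clean.shape))
denoised_coeffs = hard_threshold(noisy_coeffs, T[(2, 0)])
```

For this toy transform, the noise variance in the subband is exactly 8/9 of the input variance, so the Monte Carlo estimate should land near \( \sqrt{8/9} \approx 0.943 \). Doing the estimate by simulation mirrors the per-subband procedure, where closed forms are impractical for the NSCT filters.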

Subsequently, we present a multi-scale nonlinear mapping function that modifies the NSCT bandpass directional subband coefficients at each scale and direction independently and automatically, so as to achieve multiscale edge sharpening. The proposed multi-scale nonlinear mapping function is given by:

where

\( C_{(s,d)} \) is the NSCT bandpass directional subband coefficient in the subband indexed by scale s and direction d, and \( \hat{C}_{(s,d)} \) is the processed coefficient.

\( C_{\hbox{max} (s,d)} \) denotes the maximum absolute coefficient amplitude in the subband indexed by scale s and direction d.

\( \bar{C}_{(s,d)} \) denotes the mean absolute coefficient amplitude in the subband indexed by scale s and direction d.

The final output enhanced image can be obtained by inverse NSCT and conversion back to RGB color space.

3 Experimental Results and Analysis

In this section, the effectiveness of the proposed algorithm is validated experimentally. We compare our method qualitatively and quantitatively with existing underwater restoration/enhancement methods (i.e., RCP [11], UDCP [12], HDP [13] and TSA [14]). All methods are implemented in MATLAB R2018a on a PC with a 3.6 GHz Intel Core i7 CPU, 16 GB of RAM and a 64-bit OS.

3.1 Qualitative Evaluation

We tested many images in our experiments. Some typical test images and the results of the different methods are shown in Fig. 2. The test images exhibit different color distortions, such as bluish and greenish casts, and various distances from the objects to the camera.

As shown in Fig. 2, RCP [11] is highly robust in recovering the visibility of the considered scenes, but the contrast and detail sharpness of its restored images are insufficient. UDCP [12] introduces obvious color deviations and tends to produce artifacts in some cases. HDP [13] significantly increases the brightness and contrast of underwater images, but it tends to over-enhance them and obscures details because of uneven illumination. TSA [14] handles the color cast well and increases contrast, but its results are less sharp at edges than those of our method. In summary, the proposed method is the only one among those compared that simultaneously enhances contrast, sharpens edges and eliminates non-uniform illumination while balancing colors. It also performs relatively well on a variety of underwater images.

3.2 Quantitative Evaluation

To evaluate the performance of the different methods quantitatively, we employ three metrics: image information entropy [23], average gradient [24] and UCIQE [25]. The image information entropy measures the amount of information in an image; the higher the entropy, the more information the image contains. The average gradient [26] measures image sharpness; the larger the average gradient, the clearer the image. The UCIQE metric is designed specifically to quantify the non-uniform color cast, blurring and low contrast that characterize underwater images. A higher UCIQE score indicates a better balance among chroma, saturation and contrast. The average scores are shown in Table 1, with the best values in bold.
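The two simpler metrics can be sketched directly on an 8-bit grey image. Entropy is computed over the 256-bin grey-level histogram. The average gradient is taken as the mean of \( \sqrt{(\Delta_x^2 + \Delta_y^2)/2} \) over forward differences. This is a commonly used form, assumed here because the exact variant in [24, 26] is not given. How color images are reduced to grey is also left open. UCIQE [25] is not reproduced.

```python
import numpy as np


def image_entropy(gray: np.ndarray) -> float:
    """Shannon entropy (bits) of the 256-bin grey-level histogram of an 8-bit image."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    p = hist[hist > 0] / gray.size  # empty bins contribute nothing
    return float(-(p * np.log2(p)).sum())


def average_gradient(gray: np.ndarray) -> float:
    """Mean of sqrt((dx^2 + dy^2) / 2) over the (M-1) x (N-1) grid of forward differences."""
    g = gray.astype(float)
    dx = g[:-1, 1:] - g[:-1, :-1]  # horizontal forward difference
    dy = g[1:, :-1] - g[:-1, :-1]  # vertical forward difference
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


ramp = np.tile(np.arange(256, dtype=np.uint8), (4, 1))
print(image_entropy(ramp))     # 8.0: all 256 grey levels occur equally often
print(average_gradient(ramp))  # about 0.7071: unit horizontal steps, no vertical change
```

Both scores grow with spread-out histograms and strong local transitions respectively. Over-amplified noise can also raise them, which is one reason they are read alongside a perceptual metric such as UCIQE.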

As shown in Table 1, our method achieves the highest average value on all three metrics, consistent with the lowest distortion and highest overall quality of its enhanced results. Both the qualitative and quantitative assessments demonstrate that our method is more effective at enhancing visibility, improving details, correcting colors and suppressing noise in underwater images.

4 Conclusions

In this paper, we propose a new underwater image enhancement method based on color balance and edge sharpening. The method consists of a color balance algorithm and a contrast enhancement algorithm. To address the color distortion caused by wavelength-dependent attenuation, we first apply linear stretching based on cumulative histogram statistics in the RGB color space to compensate for the loss of colors. After conversion to the CIE-Lab color space, the ‘L’ component is processed in the multiscale NSCT domain for contrast and edge enhancement. In the NSCT lowpass subband of the ‘L’ component, the MSR algorithm is applied to remove inhomogeneous illumination, and a new nonlinear transform is presented to stretch the global dynamic range. Meanwhile, a multiscale nonlinear mapping is designed to enhance edges and suppress noise in each NSCT bandpass directional subband. The proposed algorithm thus achieves underwater image denoising, color correction, edge sharpening and contrast enhancement automatically and simultaneously. Experimental results show that our method produces promising results compared with other methods.

References

1. Kocak, D.M., Dalgleish, F.R., Caimi, F.M., et al.: A focus on recent developments and trends in underwater imaging. Marine Technol. Soc. J. 42(1), 52–67 (2008)

2. Sahu, P., Gupta, N., Sharma, N.: A survey on underwater image enhancement techniques. Int. J. Comput. Appl. 87(13), 19–23 (2014)

3. Liu, Z., Xiang, B., Song, Y., et al.: An improved unsupervised image segmentation method based on multi-objective particle swarm optimization clustering algorithm. Comput. Mater. Continua 58(2), 451–461 (2019)

4. Wang, N., He, M., Sun, J., et al.: ia-PNCC: noise processing method for underwater target recognition convolutional neural network. Comput. Mater. Continua 58(1), 169–181 (2019)

5. Schettini, R., Corchs, S.: Underwater image processing: state of the art of restoration and image enhancement methods. EURASIP J. Adv. Signal Process. 2010, 1–14 (2010)

6. He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2341–2353 (2011)

7. Chiang, J.Y., Chen, Y.C.: Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. Image Process. 21(4), 1756–1769 (2012)

8. Wen, H., Tian, Y., Huang, T., et al.: Single underwater image enhancement with a new optical model. In: IEEE International Symposium on Circuits and Systems, pp. 753–756 (2013)

9. Drews, P., Nascimento, E., Moraes, F., Botelho, S., Campos, M.: Transmission estimation in underwater single images. In: Proceedings of the IEEE International Conference on Computer Vision Workshops, pp. 825–830 (2013)

10. Sathya, R., Bharathi, M., Dhivyasri, G.: Underwater image enhancement by dark channel prior. In: International Conference on Electronics and Communication Systems, pp. 1119–1123 (2015)

11. Galdran, A., Pardo, D., Picon, A., et al.: Automatic Red-Channel underwater image restoration. J. Vis. Commun. Image Represent. 26(1), 132–145 (2015)

12. Drews, P.L.J., Nascimento, E.R., Botelho, S.S.C., et al.: Underwater depth estimation and image restoration based on single images. IEEE Comput. Graphics Appl. 36(2), 24–35 (2016)

13. Li, C.Y., Guo, J.C., Cong, R.M., et al.: Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 25(12), 5664–5677 (2016)

14. Fu, X., Fan, Z., Ling, M., et al.: Two-step approach for single underwater image enhancement. In: 2017 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), pp. 789–794 (2017)

15. Ancuti, C.O., Ancuti, C., Vleeschouwer, C.D., Bekaert, P.: Color balance and fusion for underwater image enhancement. IEEE Trans. Image Process. 27(1), 379–393 (2018)

16. Huang, D., Wang, Y., Song, W., Sequeira, J., Mavromatis, S.: Shallow-water image enhancement using relative global histogram stretching based on adaptive parameter acquisition. In: Schoeffmann, K., Chalidabhongse, T.H., Ngo, C.W., Aramvith, S., O’Connor, N.E., Ho, Y.-S., Gabbouj, M., Elgammal, A. (eds.) MMM 2018. LNCS, vol. 10704, pp. 453–465. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-73603-7_37

17. Limare, N., Lisani, J.L., Morel, J.M., et al.: Simplest color balance. Image Process. Line 1, 297–315 (2011)

18. Cunha, A.L., Zhou, J.P., Do, M.N.: The nonsubsampled contourlet transform: theory, design, and applications. IEEE Trans. Image Process. 15(10), 3089–3101 (2006)

19. Jobson, D.J., Rahman, Z., Woodell, G.A.: A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 6(7), 965–976 (1997)

20. Jobson, D.J., Rahman, Z., Woodell, G.A.: Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 6(3), 451–462 (1997)

21. Donoho, D.L., Johnstone, I.M.: Ideal spatial adaptation by wavelet shrinkage. Biometrika 81(3), 425–455 (1994)

22. Po, D.D.Y., Do, M.N.: Directional multiscale modeling of images using the contourlet transform. IEEE Trans. Image Process. 15(6), 1610–1620 (2006)

23. Xie, H.L., Peng, G.H., Wang, F., et al.: Underwater image restoration based on background light estimation and dark channel prior. Acta Optica Sinica 38(01), 18–27 (2018)

24. Jiang, Z.X., Pu, Y.: Underwater image color compensation based on electromagnetic theory. Laser Optoelectron. Progress 55(08), 237–242 (2018)

25. Yang, M., Sowmya, A.: An underwater color image quality evaluation metric. IEEE Trans. Image Process. 24(12), 6062–6071 (2015)

26. Jin, M., Wang, T., Ji, Z., et al.: Perceptual gradient similarity deviation for full reference image quality assessment. Comput. Mater. Continua 56(3), 501–515 (2018)

Acknowledgments

This work was supported by the National Natural Science Foundation of China (No. 41706103), the Natural Science Foundation of Jiangsu Province (No. BK20170306) and the Fundamental Research Funds for the Central Universities (No. 2017B17714).

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhou, Y., Tang, Y., Huo, G., Yu, D. (2020). Underwater Image Enhancement Based on Color Balance and Edge Sharpening. In: Sun, X., Wang, J., Bertino, E. (eds) Artificial Intelligence and Security. ICAIS 2020. Lecture Notes in Computer Science, vol. 12240. Springer, Cham. https://doi.org/10.1007/978-3-030-57881-7_64

DOI: https://doi.org/10.1007/978-3-030-57881-7_64

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-57880-0

Online ISBN: 978-3-030-57881-7

eBook Packages: Computer Science, Computer Science (R0)